Paper Menu >>

Journal Menu >>

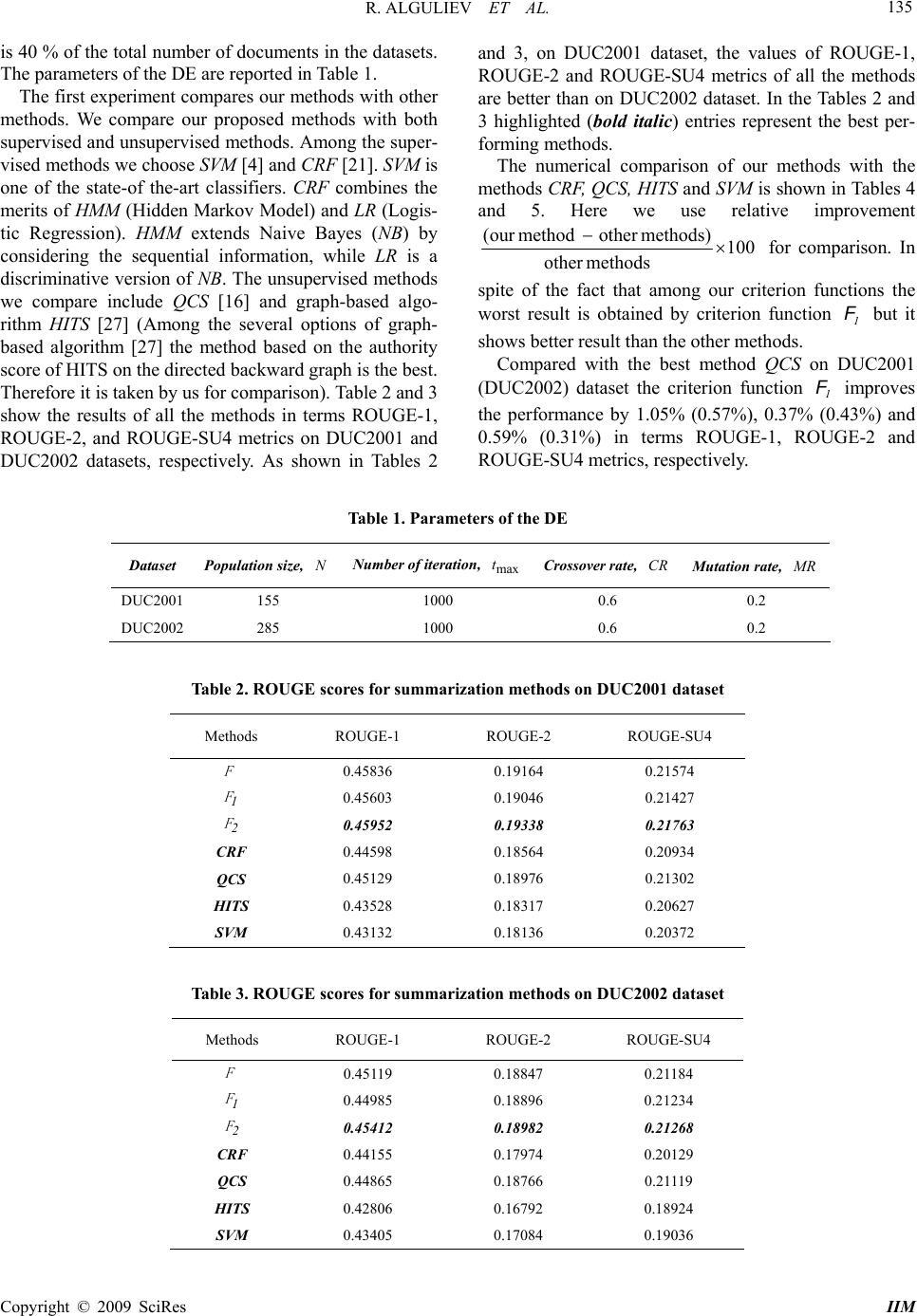

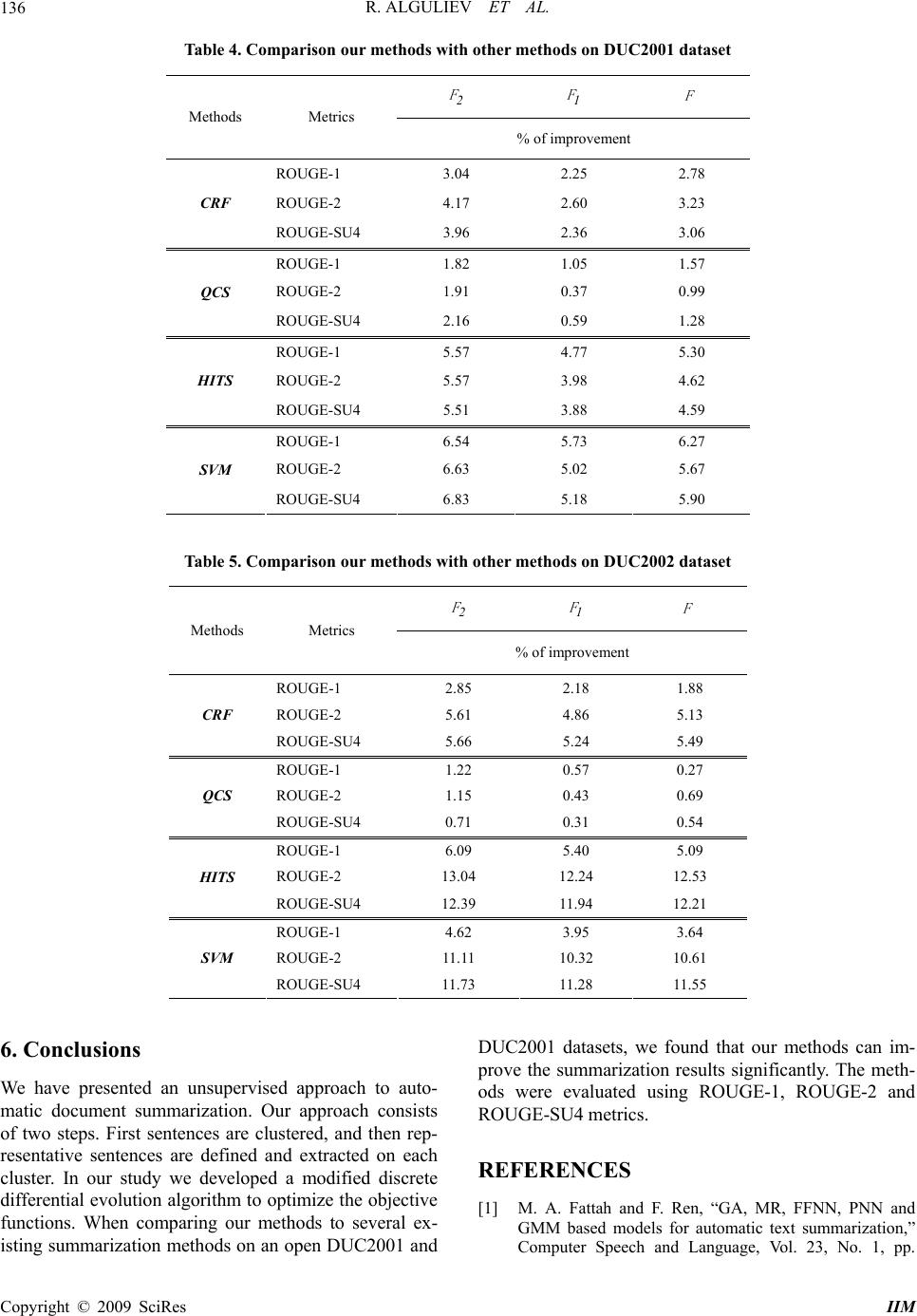

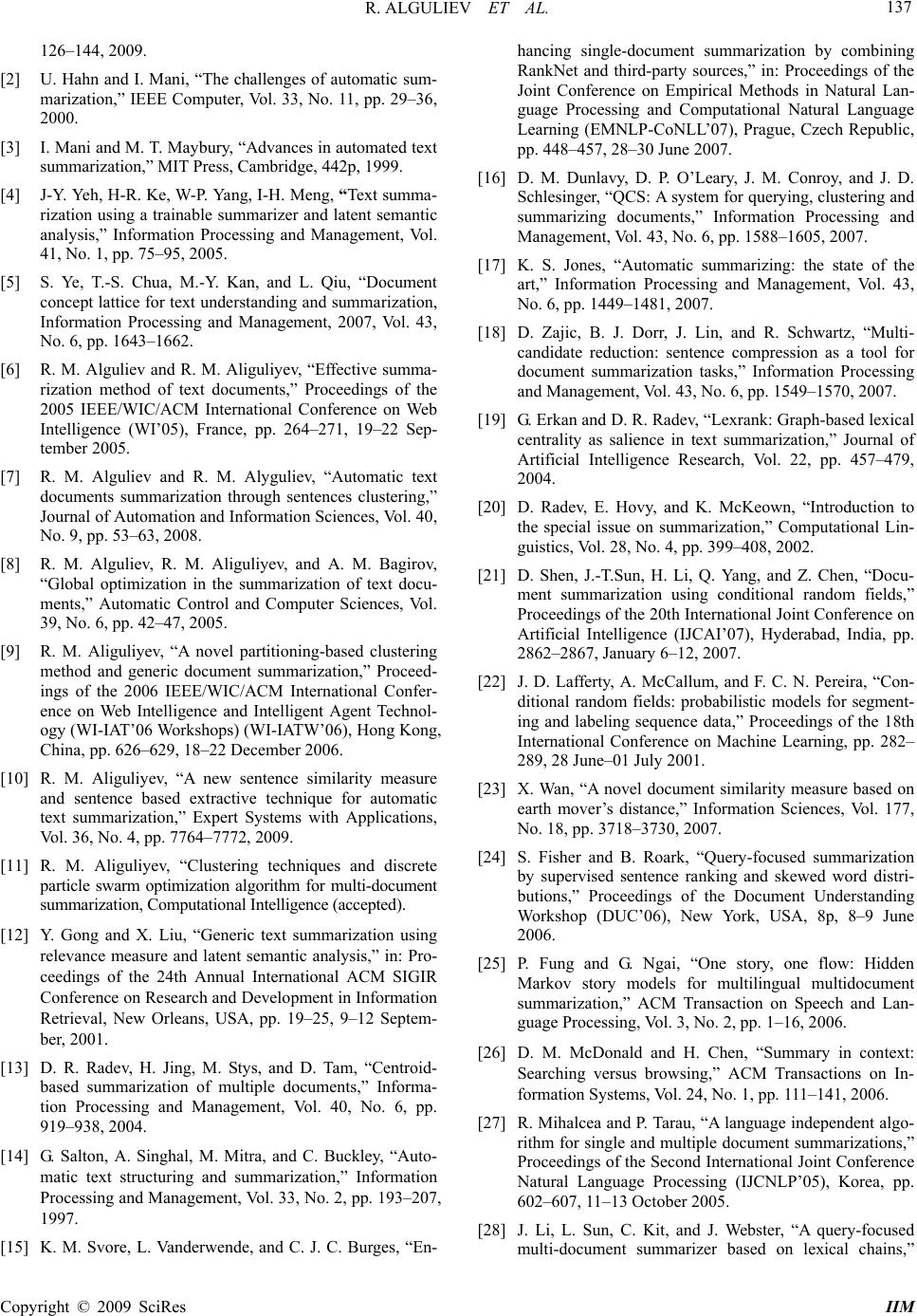

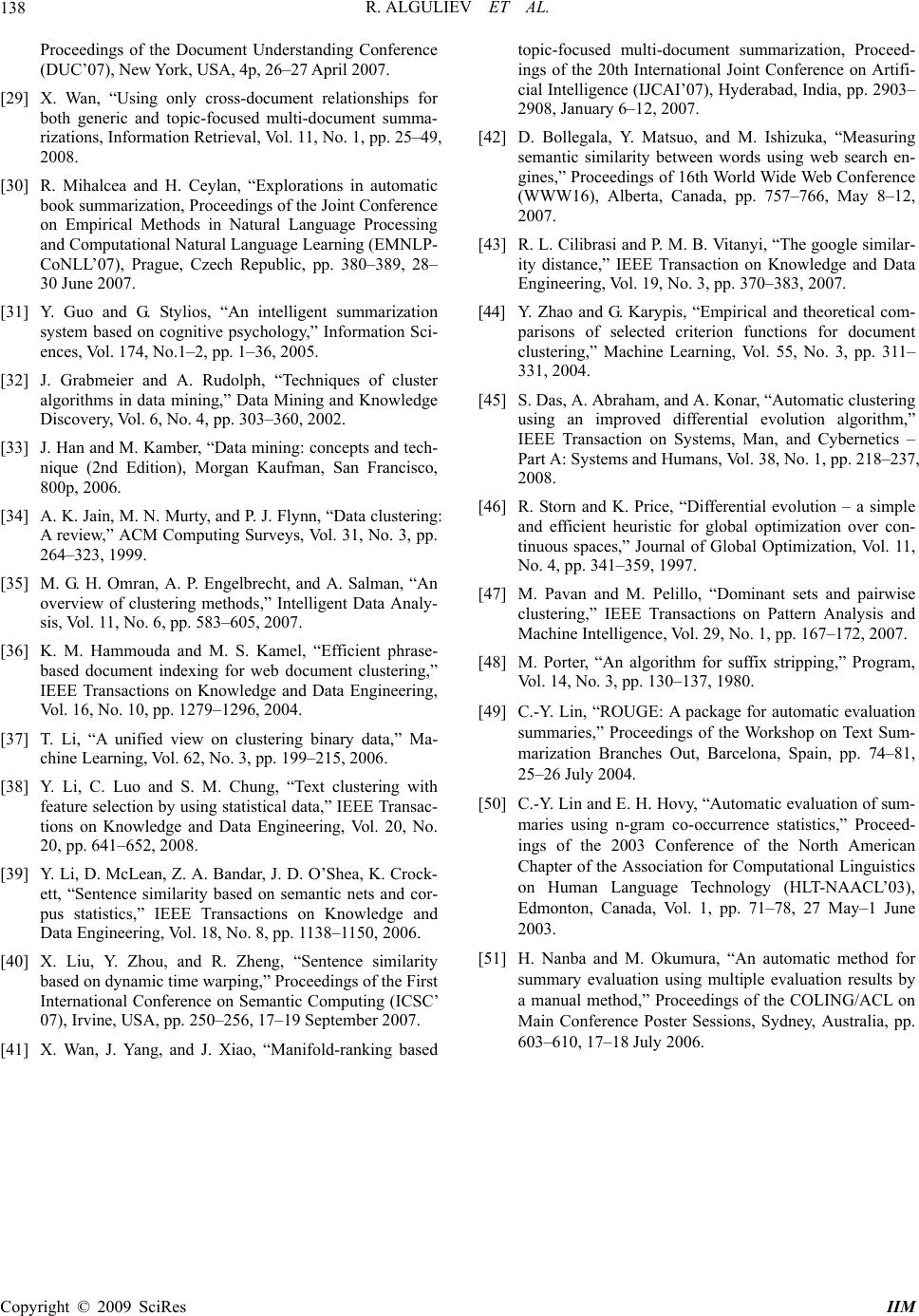

Intelligent Information Management, 2009, 1, 128-138 doi:10.4236/iim.2009.12019 Published Online November 2009 (http://www.scirp.org/journal/iim) Copyright © 2009 SciRes IIM Evolutionary Algorithm for Extractive Text Summarization Rasim ALGULIEV, Ramiz ALIGULIYEV Institute of Information Technology, Azerbaijan National Academy of Sciences, Baku, Azerbaijan Email: rasim@science.az, a.ramiz@science.az Abstract: Text summarization is the process of automatically creating a compressed version of a given document preserving its information content. There are two types of summarization: extractive and abstrac- tive. Extractive summarization methods simplify the problem of summarization into the problem of selecting a representative subset of the sentences in the original documents. Abstractive summarization may compose novel sentences, unseen in the original sources. In our study we focus on sentence based extractive document summarization. The extractive summarization systems are typically based on techniques for sentence extrac- tion and aim to cover the set of sentences that are most important for the overall understanding of a given document. In this paper, we propose unsupervised document summarization method that creates the summary by clustering and extracting sentences from the original document. For this purpose new criterion functions for sentence clustering have been proposed. Similarity measures play an increasingly important role in document clustering. Here we’ve also developed a discrete differential evolution algorithm to optimize the criterion functions. The experimental results show that our suggested approach can improve the performance compared to sate-of-the-art summarization approaches. Keywords: sentence clustering, document summarization, discrete differential evolution algorithm 1. Introduction Text summarization is the process of automatically cre- ating a compressed version of a given document pre- serving its information content. Automatic document summarization is an important research area in natural language processing (NLP). The technology of automatic document summarization is developing and may provide a solution to the information overload problem [1–3]. The process of text summarization can be decomposed into three phases: analysis, transformation, and synthesis. The analysis phase analyzes the input text and selects a few salient features. The transformation phase transforms the results of the analysis into a summary representation. Finally, the synthesis phase takes the summary represen- tation, and produces an appropriate summary corre- sponding to users’ needs. In the overall process, com- pression rate, which is defined as the ratio between the length of the summary and that of the original, is an im- portant factor that influences the quality of the summary. As the compression rate decreases, the summary will be more concise; however, more information is lost. While the compression rate increases, the summary will be lar- ger; relatively, more insignificant information is con- tained. In fact, when the compression rate is 5–30%, the quality of the summary is acceptable [1–4]. Text summarization can be categorized into two ap- proaches: extractive and abstractive. Extractive summa- rization methods simplify the problem of summarization into the problem of selecting a representative subset of the sentences in the original documents. Abstractive summarization may compose novel sentences, unseen in the original sources [1]. However, abstractive approaches require deep NLP such as semantic representation, in- ference and natural language generation, which have yet to reach a mature stage nowadays [5]. Extractive summarization systems are commonly used in automatic summarization to produce extractive sum- maries. Systems for extractive summarization are typi- cally based on technique for sentence extraction, and attempt to identify the set of sentences that are most im- portant for the overall understanding of a given docu- ment. Most commonly, such systems use some kind of similarity or centrality metric to identify the set of sen- tences to include in the summary [1,4,6–15]. For exam- ple, in [14] a paragraph extraction from a document based on intra-document links between paragraphs is proposed. It yields a TRM (Text Relationship Map) from intra-links, which indicate that the linked texts are se- mantically related. It proposes four strategies from the TRM: bushy path, depth-first path, segmented bushy path, augmented segmented bushy path. An improved version of this approach is proposed in [1,4,6]. In this paper we demonstrate an extractive text sum-  R. ALGULIEV ET AL. 129 marization method which is based on sentence clustering. For this purpose new criterion functions for sentence clustering have been offered. In our study we developed a discrete differential evolution algorithm to optimize the criterion functions. The experimental results on an open benchmark datasets from DUC2001 and DUC2002 show that our suggested approach can improve the perform- ance compared to state-of-the-art summarization ap- proaches. The rest of this paper is organized as follows: Section 2 introduces related works. The proposed sentence clus- tering based approach for generic single-document sum- marization is presented in Section 3. The discrete differ- ential evolution algorithm for optimization procedure is given in Section 4. The experiments and results are given in Section 5. Finally, we conclude our paper in Section 6. 2. Related Work Automatic document summarization has been actively investigated in recent years, and most researchers have concentrated on the extractive summarization method, but not the abstractive summarization method – see for example, the 6th issue of the Information Processing and Management: an International Journal of 2007. The cur- rent paper contains four references to the articles pub- lished in this edition [5,16–18]. A comprehensive survey of document summarization can be found in [17]. The centroid-based method [19,13] is one of the most popular extractive summarization methods. MEAD (http://www.summarization.com/mead/) is an implemen- tation of the centroid-based method for either single- or multi-document summarizing. It is based on sentence extraction. For each sentence in a cluster of related documents, MEAD computes three features and uses a linear combination of the three to determine what sen- tences are most salient. The three features used are cen- troid score, position, and overlap with first sentence (which may happen to be the title of a document). For single documents or (given) clusters it computes centroid topic characterizations using tf-idf-type data. It ranks candidate summary sentences by combining sentence scores against centroid, text position value, and tf-idf title/lead overlap. Sentence selection is constrained by a summary length threshold, and redundant new sentences avoided by checking cosine similarity against prior ones [20]. In [21] each document is considered as a sequence of sentences and the objective of extractive summariza- tion is to label the sentences in the sequence with 1 and 0, where a label of 1 indicates that a sentence is a summary sentence while 0 denotes a non-summary sentence. To accomplish this task, a conditional random field is ap- plied [22]. A novel extractive approach based on mani- fold-ranking of sentences to query-based multi-document summarization proposed in [23]. This approach first uses the manifold-ranking process to compute the manifold- ranking score for each sentence that denotes the biased information richness of the sentence, and then uses greedy algorithm to penalize the sentences with highest overall scores, which are considered both informative and novel, and highly biased to the given query. The summarization techniques can be classified into two groups: supervised techniques, that rely on machine learning algorithms trained on pre-existing document- summary pairs, and unsupervised techniques, based on properties and heuristics derived from the text. Super- vised extractive summarization techniques [1,4–6,21, 24–26] treat the summarization task as a two-class clas- sification problem at the sentence level, where the sum- mary sentences are positive samples while the non- summary sentences are negative samples. After repre- senting each sentence by a vector of features, the classi- fication function can be trained in two different manners [21]. The first is in a discriminative way with well- known algorithms such as SVM (Support Vector Ma- chine) [4]. In [1], the use of genetic algorithm (GA), mathematical regression (MR), feed forward neural net- work (FFNN), probabilistic neural network (PNN) and Gaussian mixture model (GMM) for automatic text summarization task have been investigated. This ap- proach is a trainable summarizer, which takes into ac- count several features, including sentence position, posi- tive keyword, negative keyword, sentence centrality, sentence resemblance to the title, sentence inclusion of name entity, sentence inclusion of numerical data, sen- tence relative length, bushy path of the sentence and ag- gregated similarity for each sentence to generate summa- ries. The article [25] presents a multi-document, multi- lingual, theme-based summarization system based on modeling text cohesion (story flow). Many unsupervised methods have been developed for document summariza- tion by exploiting different features and relationships of the sentences, such as clustering of sentences [7–11], the hidden topics in the documents [12], graphs based on the similarity of sentences[13,19,27]. Recently, graph-based methods have been offered to rank sentences. Lexrank [19] and [27] are two such sys- tems using the algorithms PageRank and HITS to com- pute sentence importance. Lexrank is used to compute sentence importance based on the concept of eigenvector centrality in a graph representation of sentences for multi-document summarization task. The graph-based extractive summarization algorithms succeed in identi- fying the most important sentences in a text based on information exclusively drawn from the text itself. Unlike other systems, which attempt to find out what makes a good summary by training on collections of summaries built for other articles, the graph-based meth- ods are fully unsupervised, and rely on the given texts to derive an extractive summary[23]. Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. 130 On the other hand, summarization task can also be categorized as either generic or query-based. A query- based summary presents the information that is most relevant to the given queries [16,23,24,28,29] while a generic summary gives an overall sense of the docu- ment’s content [7–14,19,27,30]. The QCS system (Query, Cluster, and Summarize)[16] performs the following tasks in response to a query: retrieves relevant docu- ments; separates the retrieved documents into clusters by topic, and creates a summary for each cluster. QCS is a tool for document retrieval that presents results in a for- mat so that a user can quickly identify a set of documents of interest. In [29] are developed a generic, a query- based, and a hybrid summarizer, each with differing amounts of document context. The generic summarizer used a blend of discourse information and information obtained through traditional surface-level analysis. The query-based summarizer used only query-term informa- tion, and the hybrid summarizer used some discourse information along with query-term information. Automatic document summarization is a highly inter- disciplinary research area related with computer science, multimedia, statistics, as well as cognitive psychology. In [31] is introduced an intelligent system, the event index- ing and summarization (EIS) system, for automatic document summarization, which is based on a cognitive psychology model (the event-indexing model) and the roles and importance of sentences and their syntax in document understanding. The EIS system involves syn- tactic analysis of sentences, clustering and indexing sen- tences with five indices from the event-indexing model, and extracting the most prominent content by lexical analysis at phrase and clause levels. 3. Sentence Clustering Clustering is the process of discovering natural group- ings or clusters and identifying interesting distributions and patterns within multidimensional data based on some similarity measure. The topic of clustering has been ex- tensively studied in many scientific disciplines such as text mining, pattern recognition, IR etc. Document clus- tering is a central problem in text mining which can be defined as grouping documents into clusters according to their topics or main contents. Document clustering has many purposes including expanding a search space, gen- erating a summary, automatic topic extraction, browsing document collections, organizing information in digital libraries and detecting topics. In the literature a wide variety of clustering algorithms have been proposed for different applications and sizes of data sets. The surveys on the topics [32–35] offer a comprehensive summary of the different applications and algorithms. Generally clustering problems are determined by four basic components [36,37]: 1) the (physical) representa- tion of the given data set; 2) the distance/dissimilarity measures between data points; 3) the criterion/objective function which the clustering solutions should aim to optimize; and, 4) the optimization procedure. For a given data clustering problem, the four components are tightly coupled. Various methods/criteria have been proposed over the years from various perspectives and with vari- ous focuses. 3.1. Sentence Similarity Measure Based on Terms Co-Occurrence Let a document is decomposed into a set of sen- tences D ,, n DS S12 ,...S, where n is the number of sentences. Let ..., m t i S 12 , ,tTt represents all the dis- tinct words (terms) occurring in a document , where is the number of words. In most existing document clustering algorithms, documents are represented using the vector space model (VSM) [33]. Each document is represented using these words as a vector in -dimen- sional space. A major characteristic of this representation is the high dimensionality of the feature space, which imposes a big challenge to the performance of clustering algorithms. They could not work efficiently in high-di- mensional feature spaces due to the inherent sparseness of the data [17]. The vector dimension m is very large compared to the number of words in a sentence, thus the resulting vectors would have many null components [38]. In our method, a sentence is represented as a set of distinct terms appearing in it, , where is the number of distinct terms in the sentence . D m i im t m i m 12 , ,...,tSt i S Similarity measures play an increasingly important role in NLP and IR. Similarity measures have been used in text-related research and application such as text min- ing, information retrieving, text summarization, and text clustering. These applications show that the computation of sentence similarity has become a generic component for the research community involved in knowledge rep- resentation and discovery. There are more papers on similarity between documents than between sentences or short texts, but not few [38,39]. The paper [39] presents a method for measuring the similarity between sentences or very short texts, based on semantic and word order information. First, semantic similarity is derived from a lexical knowledge base and a corpus. Second, the pro- posed method considers the impact of word order on the sentence meaning. The overall sentence similarity is de- fined as a combination of semantic similarity and word order similarity. Liu et al. [40] present a novel method to measure similarity between sentences by analyzing parts of speech and using Dynamic Time Warping technique. In [41] proposed a novel measure based on the earth Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. 131 mover’s distance (EMD) to evaluate document similarity by allowing many-to-many matching between subtopics. First, each document is decomposed into a set of subtop- ics, and then the EMD is employed to evaluate the simi- larity between two sets of subtopics for two documents by solving the transportation problem. The proposed measure is an improvement of the previous optimal matching (OM)-based measure, which allows only one-to-one matching between subtopics. The study of semantic similarity between words has long been an in- tegral part of IR and NLP [42]. The method, proposed in [42], integrates both page counts and snippets to measure semantic similarity between a given pair of words. In this paper modified four popular co-occurrence measures; Jaccard, Overlap (Simpson), Dice and PMI (Point-wise Mutual Information), to compute semantic similarity using page counts. In this section we present a method to measure simi- larity between sentences using the Normalized Google Distance (NGD) [43]. First we calculate a similarity measure between the terms before defining the similarity measure between the sentences. Using the NGD [43] the similarity measure between terms and we define as: k tl t NGD (,) exp(NGD(,)) kl kl s imt tt t (1) where maxlog(),log()log() NGD(, )logminlog(), log() kl kl kl kl f ff tt nff , (2) k f is the number of sentences containing the term , k t kl f denotes the number of sentences containing both terms and , is the number of sentences in the document. k tl tn From the properties of NGD follows that [43]: 1) The range of the is between and ; NGD (,) kl disst t0 1 If kl tt or if kl tt but 0, then ) 1 kl. That is, the semantics of and l t, in the Google sense is the same. klkl fff k t NGD (,t tsim If 0 k f, 0 l f and 0 kl f, we take N GD(, )1 kl tt, then (,)1 kl t t. NGD 0sim If 0kll k f ffn and klkl f fnf , then . NGD 0(,)1 kl mt tsi 2) for any . For every pair and , we have NGD (,) 1 kk simt t l t k t ) k t NGD NGD (, (,) kl lk s imttsimt t: It is symmetric. Using the formula (1) we define a similarity meas- ure between sentences and i S j S as follows: NGD NGD (,) (, )kil j kl tStS ij ij s imt t simS Smm (3) From the properties of follows that: 1) the range of the is in and ; 2) for every ; 3) for every pair and ),( NGD lkttsim ),( ji SS i S ,() NGD jSSsim NGD sim ,( ji SS 0 ) i 1 0),( NGD ii SSsim j SNGD sim i S : it is ex- changeable. 3.2. Objective Functions Typically clustering algorithms can be categorized as agglomerative or partitional based on the underlying methodology of the algorithm, or as hierarchical or flat (non-hierarchical) based on the structure of the final so- lution [32–35]. A key characteristic of many partitional clustering algorithms is that they use a global criterion function whose optimization drives the entire clustering process. In recent years, it has been recognized that the partitional clustering technique is well suited for cluster- ing a large document database due to their relatively low computational requirements [44]. Automatic clustering is a process of dividing a set of objects into unknown groups, where the best number of groups (or clusters) is determined by the clustering algorithm. That is, objects within each group should be highly similar to each other than to objects in any other group. The automatic clustering problem can be defined as follows [32–35,45]: k The set of sentences n SSSD,...,, 21 are clustered into non-overlapping groups Chere k C alled a cluster, k is the unknown number of clusters. The partition should possess three properties: k CC ,..., 1 , w is c 1) Two different clusters should have no sentences in common, i.e. qpCC for qp kqp ,...,2,1, ; 2) Each sentence should definitely be attached to a cluster, i.e. 1 k p p CD ; 3) Each cluster should have at least one sentence as- signed, i.e. p C kp ,...,2,1 . Partitional clustering can be viewed as an optimization procedure that tries to create high-quality clusters ac- cording to a particular criterion function. Criterion func- tions used in partitional clustering reflect the underlying definition of the “goodness” of clusters. Many criterion functions have been proposed in the literature [32–35,44] to produce more balanced partitions. We introduce a criterion function that is defined as fol- lows: max→))(1( 2 F 1 FF sigm (4) Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. 132 where is a sigmoid function th)(zsigm numbers at maps from the real into ]1,0[, )exp(1 1 )(zsigm. The criterion funct4) balust z ion (lances both intra-cer similarity and inter-cluster dissimilarity. This function is obtained by combining two criteria: max),( 1∈, NGD k simCF pCSS lip1 pli SS (5) and min),( 11 1 11 NGD2 k p k pqCSCS li qp piql SSsim CC F (6) The criterion Function (5) maximizes the average su spectively crlution Theat can be used to optimize le ve 1 F thm ofe pairwise similarity between the sentences assigned to each cluster. The 2 F criterion Function (6) computes the clustering by fing a solution that sepa- rates each cluster from other clusters. It minimizes the similarity between the sentences i S and l S assigned to different clusters p C and q C, r. 4. Modified Disete Differential Evo din e Algorithm (MDDE) re are many techniques th the criterion Functions (4)-(6) described in the previous Section 3. The paper [17] presents an up-to-date survey on evolutionary algorithms for clustering. It tries to re- flect the profile of this area by focusing more on those subjects that have been given more importance in the literature. Particularly, the paper has focused mainly on hard partitional algorithms, though overlapping (soft/ fuzzy) approaches have also been covered. An original contribution of the present paper is that it discusses key issues on the design of evolutionary algorithms for data partitioning problems, such as usually adopted represen- tations, evolutionary operators, and fitness functions, just to mention a few. In particular, mutation and crossover operators commonly described in the literature are con- ceptually analyzed, giving especial emphasis to those genetic operators specifically designed for clustering problems (i.e., cluster-oriented and context-sensitive operators). In our study these criterion functions were optimized using a differential evolution (DE) [45,46]. The execution of the differential evolution is similar to other evolutionary algorithms like genetic algorithms or evolution strategies. The evolutionary algorithms differ mainly in the representation of parameters (usually bi- nary strings are used for genetic algorithms while pa- rameters are real-valued for evolution strategies and dif- ferential evolution) and in the evolutionary operators. Like to other evolutionary algorithm, DE also starts with a population of N n-dimensional search variab ctors. The classical DE [45,46] is a population-based global optimization thuses a real-coded representation. In our study we use a genetic encoding that deals with discrete variables (clusters), such that each component of the chromosome takes a value between 1 and k and represents the cluster to which the sentence is assigned. Potential set of solutions to the optimization problem are represented by a population of chromosomes. The initial population of chromosomes is generated by producing the series of integer random numbers. These numbers are uniformly generated between 1 and k inclusively. Potential solutions (chromosomes) to the target problem are encoded as fixed length discrete strings, i.e., ,1 ,2, ( )[( ),( ),...,()] rrr rn at X txtxtxt where ,()1, 2,..., rs x tk, Nr ,...,2,1 , and ns,...,2,1 , N is the size of the en the nu population. For exam 4 ple, givmber ofrs cluste k, the ncumber s 8 and the popula- tion size 3 of sentenen N, a population can be as: ]3,4,1,2,4,3,1,2[ 1)( tX ,1,3,2, , ]1,1,3,2,2,4,)( 2tX , and ]4,4,2,1[)( 3 3,4[ 3 tX . In this example, chromosome idate solution where sentences 6 S are assigned to cluster 1 C; sentences 1 S 5 S are located in cluster 2 C; sentences 3 S and 8 S are assigned to cluster 3 C, and 4 S and S are located in cluster 4 C. For each individual vector )(tthat belongs to the current populationDE )( 1tX represents a 2 S and and cand 7 Xr omly nd X , randples three other dividuals )( 1tX r, )( 2tX r, a)( 3t r from the same generation (for mutually different 321rrrr sam in ). It then calcula ) and )( 3tX r, scales it by a scalar tes the difference of X( 2t r , and creattrial ofring )]1),...,1(),1([)1( ,2,1, es a ( fsp tytytytYnrrrr by the result to )(X. , for the adding 1t rThus s th component of each vector )1(ty otherwise),( CRrndif)),()(()( , ,2,2,1 ,tx txtxtx sr ssrsrsr sr (7 The scaling factor ( ) ) and the crossover rate are control parame DE, are set by the user va (C . B R) oth ters of dlues remain constant uring the search process. i C s a real-valued factor (usually in range ]1,0[ ), that con- trols the amplification of differential variations and R is a real-valued crossover factor in rang]1, control- ling the probability to choose mutated value for e 0[ x in- stead of its current value. s rnd is the unify distrib- uted random numbers within the range ]1,0[ csen orml ho Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. Copyright © 2009 SciRes IIM 133 of th rent in the next generation; where function to be maxim he population the process of tion - once for each ns ,...,2,1 If the new offspring yields a better value e objec- tive function, i ot . t replaces its pa r is the objective After initialization of t that it uses the mutation operation adopted from genetic algorithm. The algorithm proposed is based on the muta- tion adopted from genetic algorithms. In our modifica- tion, at the iteration 1 t [) for each vector cre- ates a vector )(tX r (),..., ,)]11(),1(1( 2,1, tm nr tmttm rr mr, which is defined as: herwise, the parent is retained in the population, i.e., ))(())1((if),( ))(())1((if),1( )1( tXftYftX tXftYftY tX rrr r (8) rr otherwise,0 ))1((randif,1 )1( , , tysigm tm srs sr (12) )(f ized. calculaof the fitness is performed. To judge the qual ity The vector )1( tmr represents the changes that will be needed to move the particle from to )(tXr )1( tX r )(tX r . If the component of vector is one, it means that this component will be copied from the to )1(tmr )1( tX r. If the component of )1( tmr is null, it means that this component will be mutated. The inversion operator has been used as a mutation operator. The inversion operator takes the components from the corresponding to the null components of the vector )(tX r )1( tmr and puts them to form a new particle. The pseudo-code of an inversion operator is described in Figure 1 ( S is the cardinality of the set ) [11]: S of a partition provided by a chromosome, it is neces- sary to have a fitness functions. The fitness functions are defined as ))(())(( 1tXtXfitness aa 1 F (9) ))(( 1 ))(( 2tXfitness a ))((tXfitnessa zation of the fitness F r minimi tX a2 F Maximiunctions (9)-(11) leads to maximization (ozation) of the tio , (10) ))((tX a F. (11) objective Func- ns (4)-(6), respectively. The main difference between the traditional discrete DE (DDE) algorithms and the algorithm proposed here is Let’s consider a following example (Figure 2): end_if stopthen0Sifelse ,\SS )(1)( )(1)( Smin Smax then0if end_for end_if SS else )(1)(then11)(if :1for S operatorinversion procedure , , ,, ,,, ss txtx txtx s s S s txtxtm ns sr sr srsr srsrsr Figure 1. Pseudo-code of an inversion operator 324 12341 () r Xt : 011 001 01 424 21331 (1) r mt : (1) r Xt : Figure 2. Inversion operator  R. ALGULIEV ET AL. 134 The components of a vector , using the pseudo-code described in Figure 1, are calculated as fol- lows: Step 1. then , i.e. , )1( tX r 1)1(if,tm sr 2)() 2, txr )()1( ,,txtx srsr )()1( 3,3, 1( 2, txr4 txt r 1)()1(8, xr 8, , 3)( 5, txr, and )1( 5, txr txt r xr Step 2. ,10 7,6,4 )1(:S tms ,sr Step 3. 77, , 6,4,1maxSmax sSmin s 17,6,4,1min Step 4. ,sr)1)()1( ,txtx sr and ( , txsr and )1( 7, )( ,tx sr , i.e. )()1( 7,1, txtx rr 4 txr 3 )( 1, txr Step 5. 6,47,1\7,6,4,1,\SS ss Step 6. 66,4maxSmax s, 46, Smin s 4min Step 7. and )()1(,,txtxsrsr )1( , txsr )1( 6, ), i.e. and ( ,tx sr 1)()1( 6,4, txtx rr txr 2)( 4, txr Step 8. 6,4\,6,4,\SS ss, i.e. 0 an S The stopping criterion of DE could be a given number of consecutive iterations within which no improveme on solutions, U time limit, or maximum number of (fitness calculat, is at tained. Unless otherwise specified, in this paper we use the last one as the termination criteria, i.e. the alg tewhen a maximum number of fitness c tion is a 5. Experiments and Results For evaluation the performance of our methods we used two document datasets DUC2001 and DUC2002 and corresponding 100-word summaries generated for each of documents. The DUC2001 and DUC2002 are an open benchmark datasets which contain 309 and 567 docu- ments-sumary pairs from Document Understanding (http://duc.nist.gov). The datasets DUC2001 ization evaluation. It effective for meas- d hence stop. nt a specified CP iterationsion), t- max orithm rminates alcula- chieved. Extractive summarization works by choosing a subset of the sentences in the original document. This process can be viewed as identifying the most salient sentences in a cluster that give the necessary and sufficient amount of information related to main content of the cluster (topic). In a cluster of related sentences, many of the sentences are expected to be somewhat similar to each other since they are all about the same topic. The ap- proach, proposed in papers [13,19], is to assess the cen- trality of each sentence in a cluster and extract the most important ones to include in the summary. In centroid- based summarization, the sentences that contain more words from the centroid of the cluster are considered as central. Centrality of a sentence is often defined in terms of the centrality of the words that it contains. In this sec- tion we use other criterion to assess sentence salience, developed in [11] which is based on technique proposed in [47]. and DUC2002 are clustered into 30 and 59 topics, re- spectively. At a preprocessing step, the stopwords in each sen- tence were removed using the stoplist provided in ftp://ftp.cs.cornell.edu/pub/smart/english.stop and the remaining words were stemmed using the Porter’s scheme [48]. We use the ROUGE (Recall Oriented Understudy for Gisting Evaluation) toolkit [49,50], which was adopted m Conference by DUC for automatically summar has been shown that ROUGE is very uring document summarization. It measures summary quality by counting overlapping units such as the N-gram, word sequences and word pairs between the candidate summary and the reference summary. The ROUGE-N measure compares N-grams of two summaries, and counts the number of matches. The measure is defined by Formula (31) [49–51]: Ngram Ngram OUGE-N (N-gram) ref ref SS umm S SSumm S Count R (N-gram) match Count (14) where N stands for the length of the N-gram, N( )gram match is the maximum number of N-grams co-occurring in candidate summary and a set of reference-summaries. )gramN( Count is the number of N-grams in the reference summaries. We show three of the ROUGE metrics in the experimental results: ROUGE-1, ROUGE-2 and ROUGE-SU4. The ROUGE- 1 and ROUGE-2 scores are based on the overlap of uni- grams and bigrams, respectively, between the candidate summary and the reference summary. The ROUGE-SU4 score is also based on the overlap of bigrams between summaries, but allows Count for gaps to occur between words (skip-bigram), with a maximum gap length of words, and includes unigram co-occurrence statistics as well. The optimization procedure used here is stoch nature. Hence, for each criterion function ( ) it has been run several times for diffeof astic in and 1 F, ren 2 F t values F parameters ]8.0;3.0[ CR and ]5.0;1.0[MR. At ex- nts the size of population and the number of itera- tion we kept unchanged changing only parameters CR and MR with step 0.1. For both datasets we take the same number of iterations w population size perime hich is 1000. The Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. 135 is 40 % of the total number of documents in the datasets. The parameters of the DE are reported in Table 1. The first experiment compares our methods with other methods. We compare our proposed methods with both supervised and unsupervised methods. Among the super- vised methods we choose SVM [4] and CRF [21]. SVM is one of the state-of the-art classifiers. CRF combines the merits of HMM (Hidden Markov Model) and LR (Logis- tic Regression). HMM extends Naive Bayes (NB) by considering the sequential information, while LR is a discriminative version of NB. The unsupervised methods we compare include QCS [16] and graphalgo- hm HITS [27] (Among the several options of graph- based algoriethodauthority score of HITS on the directed backward graph is the best. Therefore it is taken by us for comparison). Table 2 and 3 show the results of all the methods in terms ROUGE-1, ROUGE-2, and ROUGE-SU4 metrics on DUC2001 and DUC2002 datasets, respectively. As shown in Tables 2 and 3, on DUC2001 dataset, the values of ROUGE-1, ROUGE-2 and ROUGE-SU4 metrics of all the methods are better than on DUC2002 dataset. In the Tables 2 and 3 highlighted (bold italic) entries represent the best per- forming methods. The numerical comparison of our methods with the methods CRF, QCS, HITS and SVM is shown in Tables 4 and 5. Here we use relative improvement 100 methodsother for comparison. In spite of the fact that among our criterion functions the worst result is obtained by criterion function 1 F but it shows better result than the other methods. -based rit thm [27] the m based on the methods)other methodour( Table 1. Param Dataset Population size, N Number of itera Compared with the best method QCS on DUC2001 (DUC2002) dataset the criterion function 1 F improves the performance by 1.05% (0.57%), 0.37% (0.43%) and -1, ROUGE-2 and ers of the DE 0.59% (0.31%) in terms ROUGE ROUGE-SU4 metrics, respectively. et tion, max tCrossover rate, CRMutation rate, M R DUC2001 155 1000 0.6 0.2 DUC2002 285 1000 0.6 0.2 Table 2. ROUGE scores for summarization methods on DUC2001 dataset Methods ROUGE-1 ROUGE-2 ROUGE-SU4 F 0.0.19164 0.215745836 4 1 F 0.45603 0.19046 0.21427 0.45952 0.19338 0.21763 CRF 0.44598 0.18564 0.20934 2 F QCS 0.45129 0.18976 0.21302 HITS 0.43528 0.18317 0.20627 SVM 0.43132 0.18136 0.20372 Table ROUGE scoummarization methods on DUC2002 dataset Methods ROUGE-1 R 3.res for s OUGE-2 ROUGE-SU4 F 0.45119 0.18847 0.21184 1 F 0.44985 0.18896 0.21234 2 F 0.45412 0.18982 0.21268 CRF 0.44155 0.17974 0.20129 SVM 0.43405 0.17084 0.19036 QCS 0.44865 0.18766 0.21119 HITS 0.42806 0.16792 0.18924 Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. 136 Tads with otherble 4. Comparison our metho methods on DUC2001 dataset 2 F 1 F F Methods Metr provement ics % of im ROUGE-1 3.04 2.25 2.78 ROUGE-2 4.17 2.60 3.23 C ROUGE-SU4 3.96 2.36 3.06 ROUGE-1 1.82 1.05 1.57 RF ROUGE-2 1.91 0.37 0.99 QCS ROUGE-SU4 2.16 0.59 1.28 ROU5.57 4.77 5.30 GE-1 ROUGE-2 5.57 3.98 4.62 HITS 4 ROUGE-SU5.51 3.88 4.59 ROUGE-1 6.54 5.73 6.27 ROUGE-2 6.63 5.02 5.67 SVM 4 ROUGE-SU6.83 5.18 5.90 Table 5. Comds ther methods on DUC2002 dataset parison our methowith o 2 F 1 F F Methods M % of imment etrics prove ROUGE-1 2.85 2.18 1.88 ROUGE-2 5.61 4.86 5.13 CRF ROUGE-SU4 5.66 5.24 5.49 ROUGE-1 1.22 0.57 0.27 ROUGE-2 1.15 0.43 0.69 QCS ROUGE-SU4 0.71 0.31 0.54 ROU6.09 5.40 5.09 GE-1 ROUGE-2 13.04 12.53 HITS ROUGE-SU4 12.39 11.94 12.21 12.24 ROUGE-1 4.62 3.95 3.64 ROUGE-2 11.11 10.32 10.61 SVM ROUGE-SU4 11.73 11.28 11.55 6. Conclusions We have presented an unsuperviseh to auto- matic document summarization. Och consists of two steps. First sentences are cluthen rep- resentative sentences are defined a cluster. In our study we ded discrete differential evolution algo to oe objective functions. When comparing our mveral ex- isting summarization methods on an open DUC2001 and 2001ets, we fat our methods can im- prove the summarization results significantly. The meth- were sinOUGE-1, ROUGE-2 and REES M. A. Fattah and F. Ren, “GA, MR, FFNN, PNN and GMM based models for automatic text summarization,” Computer Speech and Language, Vol. 23, No. 1, pp. d approac ur approa ROUGE-SU4 metrics. stered, and nd extracted on each REFE develope rithm a modifi ptimize th ethods to se DUC datasound th ods evaluated ug R NC [1] Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. 137 126–144, 2009. Mani, “The challenges of automatic sum- R. M. Aliguliyev, “Effective su rization method of text documents,” Proceedings of the CM International Conference on Web . 264–271, 19–22 Sep- tion and Information Sciences, Vol. 40, l optimization in the summarization of text docu- and Development in Information ltiple documents,” Informa- [15] K. M. Svore, L. Vanderwende, and C. J. C. Burges, “En- hancing single-document summarization by combining e, Czech Republic, J. Dorr, J. Lin, and R. Schwartz, “Multi- . Radev, “Lexrank: Graph-based lexical in context: al Joint Conference multi-document summarizer based on lexical chains,” [2] U. Hahn and I. marization,” IEEE Computer, Vol. 33, No. 11, pp. 29–36, 2000. [3] I. Mani and M. T. Maybury, “Advances in automated text summarization,” MIT Press, Cambridge, 442p, 1999. [4] J-Y. Yeh, H-R. Ke, W-P. Yang, I-H. Meng, “Text summa- rization using a trainable summarizer and latent semantic analysis,” Information Processing and Management, Vol. 41, No. 1, pp. 75–95, 2005. [5] S. Ye, T.-S. Chua, M.-Y. Kan, and L. Qiu, “Document concept lattice for text understanding and summarization, Information Processing and Management, 2007, Vol. 43, No. 6, pp. 1643–1662. [6] R. M. Alguliev and mma- 2005 IEEE/WIC/A Intelligence (WI’05), France, pp tember 2005. [7] R. M. Alguliev and R. M. Alyguliev, “Automatic text documents summarization through sentences clustering,” Journal of Automa cent No. 9, pp. 53–63, 2008. [8] R. M. Alguliev, R. M. Aliguliyev, and A. M. Bagirov, “Globa ments,” Automatic Control and Computer Sciences, Vol. 39, No. 6, pp. 42–47, 2005. [9] R. M. Aliguliyev, “A novel partitioning-based clustering method and generic document summarization,” Proceed- ings of the 2006 IEEE/WIC/ACM International Confer- ence on Web Intelligence and Intelligent Agent Technol- ogy (WI-IAT’06 Workshops) (WI-IATW’06), Hong Kong, China, pp. 626–629, 18–22 December 2006. [10] R. M. Aliguliyev, “A new sentence similarity measure and sentence based extractive technique for automatic text summarization,” Expert Systems with Applications, Vol. 36, No. 4, pp. 7764–7772, 2009. [11] R. M. Aliguliyev, “Clustering techniques and discrete particle swarm optimization algorithm for multi-document summarization, Computational Intelligence (accepted). 289, [12] Y. Gong and X. Liu, “Generic text summarization using relevance measure and latent semantic analysis,” in: Pro- ceedings of the 24th Annual International ACM SIGIR Conference on Research Retrieval, New Orleans, USA, pp. 19–25, 9–12 Septem- ber, 2001. [13] D. R. Radev, H. Jing, M. Stys, and D. Tam, “Centroid- based summarization of mu tion Processing and Management, Vol. 40, No. 6, pp. 919–938, 2004. [14] G. Salton, A. Singhal, M. Mitra, and C. Buckley, “Auto- matic text structuring and summarization,” Information Processing and Management, Vol. 33, No. 2, pp. 193–207, 1997. RankNet and third-party sources,” in: Proceedings of the Joint Conference on Empirical Methods in Natural Lan- guage Processing and Computational Natural Language Learning (EMNLP-CoNLL’07), Pragu pp. 448–457, 28–30 June 2007. [16] D. M. Dunlavy, D. P. O’Leary, J. M. Conroy, and J. D. Schlesinger, “QCS: A system for querying, clustering and summarizing documents,” Information Processing and Management, Vol. 43, No. 6, pp. 1588–1605, 2007. [17] K. S. Jones, “Automatic summarizing: the state of the art,” Information Processing and Management, Vol. 43, No. 6, pp. 1449–1481, 2007. [18] D. Zajic, B. candidate reduction: sentence compression as a tool for document summarization tasks,” Information Processing and Management, Vol. 43, No. 6, pp. 1549–1570, 2007. [19] G. Erkan and D. R rality as salience in text summarization,” Journal of Artificial Intelligence Research, Vol. 22, pp. 457–479, 2004. [20] D. Radev, E. Hovy, and K. McKeown, “Introduction to the special issue on summarization,” Computational Lin- guistics, Vol. 28, No. 4, pp. 399–408, 2002. [21] D. Shen, J.-T.Sun, H. Li, Q. Yang, and Z. Chen, “Docu- ment summarization using conditional random fields,” Proceedings of the 20th International Joint Conference on Artificial Intelligence (IJCAI’07), Hyderabad, India, pp. 2862–2867, January 6–12, 2007. [22] J. D. Lafferty, A. McCallum, and F. C. N. Pereira, “Con- ditional random fields: probabilistic models for segment- ing and labeling sequence data,” Proceedings of the 18th International Conference on Machine Learning, pp. 282– 28 June–01 July 2001. [23] X. Wan, “A novel document similarity measure based on earth mover’s distance,” Information Sciences, Vol. 177, No. 18, pp. 3718–3730, 2007. [24] S. Fisher and B. Roark, “Query-focused summarization by supervised sentence ranking and skewed word distri- butions,” Proceedings of the Document Understanding Workshop (DUC’06), New York, USA, 8p, 8–9 June 2006. [25] P. Fung and G. Ngai, “One story, one flow: Hidden Markov story models for multilingual multidocument summarization,” ACM Transaction on Speech and Lan- guage Processing, Vol. 3, No. 2, pp. 1–16, 2006. [26] D. M. McDonald and H. Chen, “Summary Searching versus browsing,” ACM Transactions on In- formation Systems, Vol. 24, No. 1, pp. 111–141, 2006. [27] R. Mihalcea and P. Tarau, “A language independent algo- rithm for single and multiple document summarizations,” Proceedings of the Second Internation Natural Language Processing (IJCNLP’05), Korea, pp. 602–607, 11–13 October 2005. [28] J. Li, L. Sun, C. Kit, and J. Webster, “A query-focused Copyright © 2009 SciRes IIM  R. ALGULIEV ET AL. Copyright © 2009 SciRes IIM 138 p, 26–27 April 2007. Vol. 11, No. 1, pp. 25–49, putational Natural Language Learning (EMNLP- on Sci- e nn, “Data clustering: naly- ransactions on Knowledge and Data Engineering, SA, pp. 250–256, 17–19 September 2007. rtifi- en words using web search en- - t ms, Man, and Cybernetics – spaces,” Journal of Global Optimization, Vol. 11, ysis and ROUGE: A package for automatic evaluation ydney, Australia, pp. Proceedings of the Document Understanding Conference (DUC’07), New York, USA, 4 [29] X. Wan, “Using only cross-document relationships for both generic and topic-focused multi-document summa- rizations, Information Retrieval, cial 2008. [30] R. Mihalcea and H. Ceylan, “Explorations in automatic book summarization, Proceedings of the Joint Conference on Empirical Methods in Natural Language Processing and Com CoNLL’07), Prague, Czech Republic, pp. 380–389, 28– 30 June 2007. [31] Y. Guo and G. Stylios, “An intelligent summarization system based on cognitive psychology,” Informati ences, Vol. 174, No.1–2, pp. 1–36, 2005. [32] J. Grabmeier and A. Rudolph, “Techniques of cluster algorithms in data mining,” Data Mining and Knowledg clus Discovery, Vol. 6, No. 4, pp. 303–360, 2002. [33] J. Han and M. Kamber, “Data mining: concepts and tech- nique (2nd Edition), Morgan Kaufman, San Francisco, 800p, 2006. [34] A. K. Jain, M. N. Murty, and P. J. Fly Part A review,” ACM Computing Surveys, Vol. 31, No. 3, pp. 264–323, 1999. [35] M. G. H. Omran, A. P. Engelbrecht, and A. Salman, “An overview of clustering methods,” Intelligent Data A sis, Vol. 11, No. 6, pp. 583–605, 2007. [36] K. M. Hammouda and M. S. Kamel, “Efficient phrase- based document indexing for web document clustering,” IEEE T Mach Vol. 16, No. 10, pp. 1279–1296, 2004. [37] T. Li, “A unified view on clustering binary data,” Ma- chine Learning, Vol. 62, No. 3, pp. 199–215, 2006. [38] Y. Li, C. Luo and S. M. Chung, “Text clustering with feature selection by using statistical data,” IEEE Transac- tions on Knowledge and Data Engineering, Vol. 20, No. 20, pp. 641–652, 2008. [39] Y. Li, D. McLean, Z. A. Bandar, J. D. O’Shea, K. Crock- ett, “Sentence similarity based on semantic nets and cor- pus statistics,” IEEE Transactions on Knowledge and Data Engineering, Vol. 18, No. 8, pp. 1138–1150, 2006. [40] X. Liu, Y. Zhou, and R. Zheng, “Sentence similarity based on dynamic time warping,” Proceedings of the First International Conference on Semantic Computing (ICSC’ 07), Irvine, U Edmo [41] X. Wan, J. Yang, and J. Xiao, “Manifold-ranking based topic-focused multi-document summarization, Proceed- ings of the 20th International Joint Conference on A Intelligence (IJCAI’07), Hyderabad, India, pp. 2903– 2908, January 6–12, 2007. [42] D. Bollegala, Y. Matsuo, and M. Ishizuka, “Measuring semantic similarity betwe gines,” Proceedings of 16th World Wide Web Conference (WWW16), Alberta, Canada, pp. 757–766, May 8–12, 2007. [43] R. L. Cilibrasi and P . M. B. Vitanyi, “The google similar ity distance,” IEEE Transaction on Knowledge and Data Engineering, Vol. 19, No. 3, pp. 370–383, 2007. [44] Y. Zhao and G. Karypis, “Empirical and theoretical com- parisons of selected criterion functions for documen tering,” Machine Learning, Vol. 55, No. 3, pp. 311– 331, 2004. [45] S. Das, A. Abraham, and A. Konar, “Automatic clustering using an improved differential evolution algorithm,” IEEE Transaction on Syste A: Systems and Humans, Vol. 38, No. 1, pp. 218–237, 2008. [46] R. Storn and K. Price, “Differential evolution – a simple and efficient heuristic for global optimization over con- tinuous No. 4, pp. 341–359, 1997. [47] M. Pavan and M. Pelillo, “Dominant sets and pairwise clustering,” IEEE Transactions on Pattern Anal ine Intelligence, Vol. 29, No. 1, pp. 167–172, 2007. [48] M. Porter, “An algorithm for suffix stripping,” Program, Vol. 14, No. 3, pp. 130–137, 1980. [49] C.-Y. Lin, “ summaries,” Proceedings of the Workshop on Text Sum- marization Branches Out, Barcelona, Spain, pp. 74–81, 25–26 July 2004. [50] C.-Y. Lin and E. H. Hovy, “Automatic evaluation of sum- maries using n-gram co-occurrence statistics,” Proceed- ings of the 2003 Conference of the North American Chapter of the Association for Computational Linguistics on Human Language Technology (HLT-NAACL’03), nton, Canada, Vol. 1, pp. 71–78, 27 May–1 June 2003. [51] H. Nanba and M. Okumura, “An automatic method for summary evaluation using multiple evaluation results by a manual method,” Proceedings of the COLING/ACL on Main Conference Poster Sessions, S 603–610, 17–18 July 2006. |