Applied Mathematics

Vol.06 No.09(2015), Article ID:58953,18 pages

10.4236/am.2015.69142

From Nonparametric Density Estimation to Parametric Estimation of Multidimensional Diffusion Processes

Julien Apala N’drin1, Ouagnina Hili2

1Laboratory of Applied Mathematics and Computer Science, University Felix Houphouët Boigny, Abidjan, Côte d’Ivoire

2Laboratory of Mathematics and New Technologies of Information, National Polytechnique Institute Houphouët-Boigny of Yamoussoukro, Yamoussoukro, Côte d’Ivoire

Email: lecorrige@yahoo.fr, o_hili@yahoo.fr

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 17 June 2015; accepted 18 August 2015; published 21 August 2015

ABSTRACT

The paper deals with the estimation of the parameters of multidimensional diffusion processes that are discretely observed. We construct an estimator of the parameters based on the minimum Hellinger distance method. This method minimizes the Hellinger distance between the density of the invariant distribution of the diffusion process and a nonparametric estimator of this density. We give conditions which ensure the existence of an invariant measure that admits a density with respect to the Lebesgue measure, and the strong mixing property with exponential rate for the Markov process. Under these conditions, we define a kernel estimator of the density and study its properties (almost sure convergence and asymptotic normality). Then, using the estimator of the density, we construct the minimum Hellinger distance estimator of the parameters of the diffusion process and establish the almost sure convergence and the asymptotic normality of this estimator. To illustrate the properties of the estimator of the parameters, we apply the method to two examples of multidimensional diffusion processes.

Keywords:

Hellinger Distance Estimation, Multidimensional Diffusion Processes, Strong Mixing Process, Consistence, Asymptotic Normality

1. Introduction

Diffusion processes are widely used for modeling in various fields, especially in finance. Many papers are devoted to estimating the parameters of the drift and diffusion coefficients of diffusion processes from discrete observations. Since a diffusion process is Markovian, maximum likelihood estimation is the natural choice for obtaining a consistent and asymptotically normal estimator when the transition probability density is known [1]. However, in the discrete case, the transition probability density of most diffusion processes is difficult to calculate explicitly, which prevents the use of this method. To solve this problem, several methods have been developed, such as approximation of the likelihood function [2] [3], approximation of the transition density [4], approximation schemes for the diffusion [5], and methods based on martingale estimating functions [6].

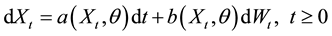

In this paper, we study the multidimensional diffusion model

$$dX_t = a(X_t, \theta)\,dt + b(X_t, \theta)\,dW_t$$

under the condition that $X = (X_t)_{t \ge 0}$ is positive recurrent and exponentially strong mixing. We assume that the diffusion process is observed at regularly spaced times $t_k = k\Delta$, where $\Delta$ is a positive constant. Using the density of the invariant distribution of the diffusion, we construct an estimator of $\theta$ based on the minimum Hellinger distance method.

Let $f_\theta$ denote the density of the invariant distribution of the diffusion. The estimator of $\theta$ is that value (or values) $\hat{\theta}_n$ in the parameter space $\Theta$ which minimizes the Hellinger distance between $f_{\hat{\theta}_n}$ and $\hat{f}_n$, where $\hat{f}_n$ is a nonparametric density estimator of $f_\theta$.

The appeal of this method of parametric estimation is that minimum Hellinger distance estimation yields efficient and robust estimators [7]. Minimum Hellinger distance estimators have been used in parameter estimation for independent observations [7], for nonlinear time series models [8] and, more recently, for univariate diffusion processes [9].
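For reference, a standard definition of the Hellinger distance between two probability densities on $\mathbb{R}^d$ is sketched below. The symbols $H$, $f$ and $g$ are notation assumed here for illustration, and some authors include an additional factor $1/2$ in front of the integral.

```latex
H(f, g) = \left( \int_{\mathbb{R}^d} \left( f^{1/2}(x) - g^{1/2}(x) \right)^2 dx \right)^{1/2},
\qquad
H^2(f, g) = 2 - 2 \int_{\mathbb{R}^d} f^{1/2}(x)\, g^{1/2}(x)\, dx .
```

The second identity holds because both densities integrate to one, so minimizing the Hellinger distance is equivalent to maximizing the affinity $\int f^{1/2} g^{1/2}\,dx$.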

The paper is organized as follows. In Section 2, we present the statistical model and some conditions which imply that the process is positive recurrent and exponentially strong mixing. Consistency and asymptotic normality of the kernel estimator of the density of the invariant distribution are studied in the same section. Section 3 defines the minimum Hellinger distance estimator of θ and studies its properties (consistency and asymptotic normality). Section 4 is devoted to some examples and simulations. Proofs of some results are presented in the Appendix.

2. Nonparametric Density Estimation

We consider the d-dimensional diffusion process that solves the multivariate stochastic differential equation

$$dX_t = a(X_t, \theta)\,dt + b(X_t, \theta)\,dW_t, \qquad (1)$$

where $(W_t)_{t \ge 0}$ is a standard l-dimensional Wiener process, $a$ is the $\mathbb{R}^d$-valued drift and $b$ is the $d \times l$ diffusion matrix.

We assume that the functions a and b are known up to the parameter $\theta$.

We denote by

For a matrix

The process

We make the following assumptions on the model:

(A1): there exists a constant C such that

(A2): there exist constants

(A3): the matrix function

Assumptions (A1)-(A3) ensure the existence of a unique strong solution for the Equation (1) and an invariant measure for the process

In the sequel, we assume that the initial value

We consider the kernel estimator

where

(A4)

(1) There exists

(2)

(A5)

We finish with assumptions concerning the density of the invariant distribution:

(A6)

(A7)

Properties (consistency and asymptotic normality) of the kernel density estimator are examined in the following theorems. The proofs of both theorems can be found in the Appendix.

Theorem 1. Under assumptions (A1)-(A4), if the function

Theorem 2. Under assumptions (A1)-(A6), if

distribution of
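To make the kernel estimator of this section concrete, the following is a minimal sketch of a multivariate kernel density estimate of the usual form $(n h^d)^{-1} \sum_i K((x - X_i)/h)$. The Gaussian kernel, the single scalar bandwidth `bandwidth`, and the function names are assumptions chosen for illustration; they are not necessarily the kernel and bandwidth sequence used in the paper.

```python
import math


def gaussian_kernel(u):
    """Standard d-dimensional Gaussian kernel evaluated at the point u."""
    d = len(u)
    return math.exp(-0.5 * sum(c * c for c in u)) / (2.0 * math.pi) ** (d / 2.0)


def kernel_density(x, sample, bandwidth):
    """Kernel estimate (1 / (n h^d)) * sum_i K((x - X_i) / h) at the point x.

    `sample` is a list of d-dimensional observations (tuples or lists),
    and `bandwidth` is the assumed common smoothing parameter h > 0.
    """
    n, d = len(sample), len(x)
    total = 0.0
    for obs in sample:
        u = [(xj - oj) / bandwidth for xj, oj in zip(x, obs)]
        total += gaussian_kernel(u)
    return total / (n * bandwidth ** d)
```

With a single two-dimensional observation at the origin and bandwidth 1, the estimate at the origin equals the peak of the kernel, $1/(2\pi)$.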

3. Estimation of the Parameter

The minimum Hellinger distance estimator of

where

Let

Define the functional

where

If

Theorem 3. (almost sure consistency)

Assume that assumptions (A1)-(A4) and (A7) hold. If for all

Proof. By Theorem 1,

Using the inequality

Since

By Theorem 1 of [7], the functional defined above is continuous in the Hellinger topology. Therefore

This completes the proof of the theorem.
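The minimization that defines the estimator can be sketched numerically as follows, for a scalar parameter. This is an illustration under stated assumptions rather than the procedure used in the paper: the integral in the squared Hellinger distance is replaced by a Riemann sum over a user-supplied grid, and a golden-section search over an interval `[lo, hi]` stands in for a general-purpose optimizer. The names `hellinger_sq`, `golden_section_min` and `mhd_estimate` are hypothetical.

```python
import math


def hellinger_sq(f_vals, g_vals, cell_volume):
    """Riemann-sum approximation of the integral of (sqrt f - sqrt g)^2."""
    return cell_volume * sum(
        (math.sqrt(f) - math.sqrt(g)) ** 2 for f, g in zip(f_vals, g_vals)
    )


def golden_section_min(objective, lo, hi, tol=1e-6):
    """Minimize a unimodal scalar function on the interval [lo, hi]."""
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - ratio * (b - a), a + ratio * (b - a)
    while b - a > tol:
        if objective(c) < objective(d):
            # The minimum lies in [a, d]; reuse c as the new right probe.
            b, d = d, c
            c = b - ratio * (b - a)
        else:
            # The minimum lies in [c, b]; reuse d as the new left probe.
            a, c = c, d
            d = a + ratio * (b - a)
    return 0.5 * (a + b)


def mhd_estimate(param_density, nonparam_vals, grid, cell_volume, lo, hi):
    """Parameter in [lo, hi] minimizing the approximate Hellinger distance.

    `param_density(x, theta)` is the parametric invariant density and
    `nonparam_vals` holds the nonparametric density estimate on `grid`.
    """
    def objective(theta):
        f_vals = [param_density(x, theta) for x in grid]
        return hellinger_sq(f_vals, nonparam_vals, cell_volume)

    return golden_section_min(objective, lo, hi)
```

In practice `nonparam_vals` would hold the kernel density estimate evaluated on the grid. The golden-section search assumes the objective is unimodal on `[lo, hi]`; a vector-valued parameter would require a multivariate optimizer instead.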

Denote

when these quantities exist. Furthermore, let

To prove asymptotic normality of the estimator of the parameter, we begin with two lemmas.

Lemma 1. Let

(1) assumptions (A1)-(A5) are satisfied,

(2)

(3)

(4)

then for any positive sequence

The proof can be found in the Appendix.

Remark 1. The two dimensional stochastic process (see Section 4) with invariant density

where

Lemma 2. Let

(1)

(2)

(3)

(4)

(5)

then

The proof can be found in the Appendix.

Remark 2. Let

numbers diverging to infinity. Let

where

Theorem 4. (asymptotic normality)

Under assumption (A7) and conditions of Lemma 1 and Lemma 2, if

(1) for all

(2) the components of

(3)

Proof. From Theorem 2 [7] , we have:

where

We have

Denote

We have

where

By Lemma 2,

since

Therefore the limiting distribution of

where

This completes the proof of the theorem.

4. Examples and Simulations

4.1. Example 1

We consider the two-dimensional Ornstein-Uhlenbeck process that solves the stochastic differential equation

where

Let

Furthermore [15] ,

The solution of the Equation (3) is

Therefore [16] , the density of the invariant distribution is

The minimum Hellinger distance estimator of

where

with

where

Let

which gives the following system

Thus,

We now present simulations for different parameter values using the R language. For each process, we generate sample paths with the package “sde” [17] and compute a value of the estimator with the R function “nlm” [18]. The kernel function

bandwidth

Simulations are based on 1000 observations of the Ornstein-Uhlenbeck process with 200 replications.
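As an illustration of how such sample paths can be generated, the sketch below simulates a discretely observed Ornstein-Uhlenbeck process in Python instead of R. It assumes a simplified model with independent components, $dX^j_t = -\theta_j X^j_t\,dt + \sigma\,dW^j_t$, which is not necessarily the parametrization of the example above, and it uses the exact Gaussian transition of that model. The function name `simulate_ou` and all parameter names are hypothetical.

```python
import math
import random


def simulate_ou(theta, sigma, delta, n_obs, x0, seed=0):
    """Sample an OU path with independent components at times k * delta.

    Assumed model: dX^j = -theta[j] * X^j dt + sigma dW^j. Each step uses
    the exact transition X_{t+delta} = e^{-theta delta} X_t + Gaussian noise.
    """
    rng = random.Random(seed)
    path = [tuple(x0)]
    for _ in range(n_obs - 1):
        previous = path[-1]
        current = []
        for th, x in zip(theta, previous):
            decay = math.exp(-th * delta)
            # Standard deviation of the exact transition noise.
            noise_sd = sigma * math.sqrt((1.0 - decay ** 2) / (2.0 * th))
            current.append(decay * x + noise_sd * rng.gauss(0.0, 1.0))
        path.append(tuple(current))
    return path
```

Under this assumed model, each component has invariant distribution $N(0, \sigma^2 / (2\theta_j))$. A Monte Carlo study of the kind described here would call `simulate_ou` with `n_obs=1000` once per replication, changing `seed` each time.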

Simulation results are given in Table 1.

Table 1. Means and standard errors of the minimum Hellinger distance estimator.

In Table 1,

4.2. Example 2

We consider the homogeneous Gaussian diffusion process [19] that solves the stochastic differential equation

where

Let

As in [19] , we suppose that

Then we have

Let

Let

Table 2. Means and standard errors of the estimators.

For simulation, we must write the stochastic differential Equation (4) in matrix form as follows:

As in [19] , the true values of the parameter

Now, we can simulate a sample path of the homogeneous Gaussian diffusion using the “yuima” package of the R language [20]. We use the function “nlm” to compute a value of the estimator.

We generate 500 sample paths of the process, each of size 500. The kernel function and the bandwidth are those of the previous example.

We compare the estimator obtained by the minimum Hellinger distance (MHD) method of this paper with the estimator obtained in [19] by the estimating function method. Table 2 summarizes the simulated means and standard errors of the two estimators.

Table 2 shows that both estimators behave well: for both methods, the means of the estimators are close to the true values of the parameter. However, the standard errors of the MHD estimator are lower than those of the estimating function estimator.
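The summary statistics reported in such tables can be computed from the replicated estimates as sketched below. The sketch assumes that "standard error" means the sample standard deviation of the estimates across replications, which is one common convention and may differ from the one used for the tables; the function name is hypothetical.

```python
import statistics


def summarize_replications(estimates):
    """Return (mean, standard deviation) of estimates across replications."""
    mean = statistics.mean(estimates)
    # Sample standard deviation with the n - 1 denominator.
    spread = statistics.stdev(estimates)
    return mean, spread
```

For a multidimensional parameter, the function would be applied to each component of the estimates separately.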

Cite this paper

Julien Apala N’drin, Ouagnina Hili (2015) From Nonparametric Density Estimation to Parametric Estimation of Multidimensional Diffusion Processes. Applied Mathematics, 06, 1592-1610. doi: 10.4236/am.2015.69142

References

- 1. Dacunha-Castelle, D. and Florens-Zmirou, D. (1986) Estimation of the Coefficients of a Diffusion from Discrete Observations. Stochastics, 19, 263-284. http://dx.doi.org/10.1080/17442508608833428

- 2. Pedersen, A.R. (1995) A New Approach to Maximum Likelihood Estimation for Stochastic Differential Equations Based on Discrete Observations. Scandinavian Journal of Statistics, 22, 55-71.

- 3. Yoshida, N. (1992) Estimation for Diffusion Processes from Discrete Observation. Journal of Multivariate Analysis, 41, 220-242. http://dx.doi.org/10.1016/0047-259X(92)90068-Q

- 4. Aït-Sahalia, Y. (2002) Maximum Likelihood Estimation of Discretely Sampled Diffusions: A Closed-Form Approximation Approach. Econometrica, 70, 223-262. http://dx.doi.org/10.1111/1468-0262.00274

- 5. Florens-Zmirou, D. (1989) Approximate Discrete-Time Schemes for Statistics of Diffusion Processes. Statistics, 20, 547-557. http://dx.doi.org/10.1080/02331888908802205

- 6. Bibby, B.M. and Sørensen, M. (1995) Martingale Estimation Functions for Discretely Observed Diffusion Processes. Bernoulli, 1, 17-39. http://dx.doi.org/10.2307/3318679

- 7. Beran, R. (1977) Minimum Hellinger Distance Estimates for Parametric Models. Annals of Statistics, 5, 445-463. http://dx.doi.org/10.1214/aos/1176343842

- 8. Hili, O. (1995) On the Estimation of Nonlinear Time Series Models. Stochastics: An International Journal of Probability and Stochastic Processes, 52, 207-226. http://dx.doi.org/10.1080/17442509508833972

- 9. N’drin, J.A. and Hili, O. (2013) Parameter Estimation of One-Dimensional Diffusion Process by Minimum Hellinger Distance Method. Random Operators and Stochastic Equations, 21, 403-424. http://dx.doi.org/10.1515/rose-2013-0019

- 10. Bianchi, A. (2007) Nonparametric Trend Coefficient Estimation for Multidimensional Diffusions. Comptes Rendus de l’Académie des Sciences, 345, 101-105.

- 11. Pardoux, E. and Veretennikov, Y.A. (2001) On the Poisson Equation and Diffusion Approximation. I. The Annals of Probability, 29, 1061-1085. http://dx.doi.org/10.1214/aop/1015345596

- 12. Veretennikov, Y.A. (1997) On Polynomial Mixing Bounds for Stochastic Differential Equations. Stochastic Processes and their Applications, 70, 115-127. http://dx.doi.org/10.1016/S0304-4149(97)00056-2

- 13. Devroye, L. and Györfi, L. (1985) Nonparametric Density Estimation: The L1 View. Wiley, New York.

- 14. Glick, N. (1974) Consistency Conditions for Probability Estimators and Integrals of Density Estimators. Utilitas Mathematica, 6, 61-74.

- 15. Jacobsen, M. (2001) Examples of Multivariate Diffusions: The Time-Reversibility, a Cox-Ingersoll-Ross Type Process. Department of Statistics and Operations Research, University of Copenhagen, Copenhagen.

- 16. Caumel, Y. (2011) Probabilités et processus stochastiques. Springer-Verlag, Paris. http://dx.doi.org/10.1007/978-2-8178-0163-6

- 17. Iacus, S.M. (2008) Simulation and Inference for Stochastic Differential Equations. Springer Series in Statistics, Springer, New York. http://dx.doi.org/10.1007/978-0-387-75839-8

- 18. Lafaye de Micheaux, P., Drouilhet, R. and Liquet, B. (2011) Le logiciel R: Maîtriser le langage-Effectuer des analyses statistiques. Springer-Verlag, Paris. http://dx.doi.org/10.1007/978-2-8178-0115-5

- 19. Sørensen, H. (2001) Discretely Observed Diffusions: Approximation of the Continuous-Time Score Function. Scandinavian Journal of Statistics, 28, 113-121. http://dx.doi.org/10.1111/1467-9469.00227

- 20. Iacus, S.M. (2011) Option Pricing and Estimation of Financial Models with R. John Wiley and Sons, Ltd., Chichester. http://dx.doi.org/10.1002/9781119990079

- 21. Roussas, G.G. (1969) Nonparametric Estimation in Markov Processes. Annals of the Institute of Statistical Mathematics, 21, 73-87. http://dx.doi.org/10.1007/BF02532233

- 22. Bosq, D. (1998) Nonparametric Statistics for Stochastic Processes: Estimation and Prediction. Second Edition, Springer-Verlag, New York. http://dx.doi.org/10.1007/978-1-4612-1718-3

- 23. Modha, D.S. and Masry, E. (1996) Minimum Complexity Regression Estimation with Weakly Dependent Observations. IEEE Transactions on Information Theory, 42, 2133-2145. http://dx.doi.org/10.1109/18.556602

- 24. Foata, D. and Fuchs, A. (1998) Calcul des probabilités: Cours, exercices et problèmes corrigés. 2e édition, Dunod, Paris.

Appendix

A1. Proof of Theorem 1

Proof.

We have:

Step 1:

by Theorem 2.1 [21] .

Hence

Step 2:

where

Then by theorem 2.1 [9] , we have for all

We have

where

Then

Therefore

by the Borel-Cantelli lemma.

(6) and (7) imply that

This completes the proof of the theorem.

A2. Proof of Theorem 2

Proof.

(1)

By making the change of variable

(2)

where

We have

Let

Define

and

We have

Step 1: We prove that

By Minkowski’s inequality, we have

(1) Using Billingsley’s inequality [22] ,

(2)

Hence,

Therefore, choosing

we get

which implies that

Step 2: asymptotic normality of

From Lemma 4.2 [23] , we have

Setting

the characteristic function of

We have

(1)

(2) Note that

Therefore

Since the random variables

the limiting distribution of

Conditions (8), (9) and (10) are satisfied, for example, with

This completes the proof of the theorem.

A3. Proof of Lemma 1

Proof. The proof of the lemma is done in two steps.

Step 1: we prove that

With assumptions (A4) and (A5), we have

Furthermore,

Therefore

Step 2: asymptotic normality of

(1)

The proof is similar to that of Theorem 2; we use Davydov’s inequality [22] instead of Billingsley’s.

Note that:

and

(2)

Recall that

Let

strongly mixing with mean zero and variance

From (1),

Therefore,

This completes the proof of the lemma.

A4. Proof of Lemma 2

Proof.

We have,

Now,

(1)

Using Davydov’s inequality for mixing processes, we get

Choose

Hence,

(2)

Therefore,

The last relation implies that

Furthermore,

We have,

Therefore, if

then

(11) and (12) imply that