Applied Mathematics

Vol. 4, No. 6 (2013), Article ID: 32694, 7 pages, DOI: 10.4236/am.2013.46126

An Application of the ABS Algorithm for Modeling Multiple Regression on Massive Data, Predicting the Most Influencing Factors

1Advanced Bioinformatics Centre, Birla Institute of Scientific Research, Jaipur, India

2Department of Operation Research, University of Bergamo, Bergamo, Italy

3Department of Endocrinology, SMS Medical College and Hospital, Jaipur, India

Email: *slalwani.math@gmail.com

Copyright © 2013 Soniya Lalwani et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received April 17, 2013; revised May 17, 2013; accepted May 27, 2013

Keywords: ABS Algorithm; Linear Least Square; Regression; Diabetes; Huang Algorithm

ABSTRACT

Linear Least Squares (LLS) is an approach to regression modeling, used to predict and quantify the strength of the relationship between dependent and independent variables. There are a number of methods for solving the LLS problem, but as the data size increases and the system becomes ill-conditioned, the classical methods become expensive in time and space, and their accuracy decreases. The proposed work predicts and quantifies the strength of the relationship of sugar fasting and Post-Prandial (PP) sugar with 73 factors that affect diabetes. Owing to the large number of independent variables, the diabetes prediction problem presented here exhibits similar difficulties. ABS methods have been shown to perform better than other classical approaches on LLS problems; an ABS algorithm has therefore been applied to solve the LLS problem, and separate regression equations were obtained for sugar fasting and PP severity.

1. Introduction

ABS methods were developed by Abaffy, Broyden and Spedicato in 1984. They form a large class of algorithms for solving continuous and discrete linear algebraic equations and nonlinear continuous algebraic equations, with applications to optimization problems. ABS methods have found use in many application areas because they are well suited to ill-conditioned and rank-deficient problems [1-3]. The present work is a further application of the ABS algorithm, motivated by its proven efficiency in solving Linear Least Squares (LLS) problems. The remainder of this section gives background on diabetes, followed by explanations of the LLS method, the ABS method and regression analysis, respectively.

1.1. Diabetes

Diabetes is a chronic disease in which the level of sugar in the blood is high, owing to insufficient production of the hormone insulin by the pancreas or to resistance to the action of insulin, which controls blood sugar in the body.

The disease occurs when the sugar derived from food is not stored in fat, liver or muscle cells for energy production; instead it remains in the blood and leaves the body unused, because the pancreas does not make enough insulin, the cells do not respond to insulin properly, or both.

There are two main types of diabetes. Type 1 diabetes occurs in children, teens and young adults; in this type the body makes no or very little insulin, so daily insulin injections are needed. Type 2 diabetes is characterized by high blood glucose in the context of insulin resistance and relative insulin deficiency, mainly in adulthood; however, because of high obesity rates, teens and young adults are now also diagnosed with this disease [4].

According to the World Health Statistics 2012 report, the global average prevalence of diabetes is 1 in every 10 people [5]. According to [6], Asian countries account for more than 60% of the world’s diabetic population, and India, Nepal and China rank as the top three countries in increasing rural diabetes prevalence. These figures show the growing risk of diabetes for Asian and other countries. The present study uses clinical data from type 2 diabetic patients, with 73 different parameters as independent variables.

1.2. Linear Least Squares

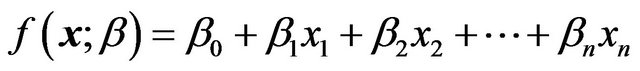

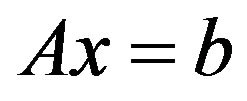

LLS methods are used to compute estimations of parameters and to fit data where parameters are estimated by minimizing the sum of square deviations between data and model. LLS model can be used directly, or with an appropriate data. LLS regression can be used to fit it in the form with any function:

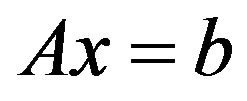

(1)

(1)

in which1) Each explanatory variable in the function is multiplied by an unknown parameter2) At most one unknown parameter exists with no corresponding explanatory variable3) All of the individual terms are summed to produce the final function value.

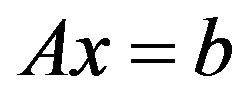

Several methods exist for solving LLS problems. In the normal equations method, Cholesky factorization is used to solve the system of normal equations $A^T A x = A^T b$. In the QR factorization method, the LLS problem, i.e. $\min_x \|Ax - b\|_2$, is converted into a triangular least squares problem by means of an orthogonal matrix. Householder QR factorization computes the QR factorization of a matrix using Householder transformations that annihilate the subdiagonal entries of each successive column. Another method is the Modified Gram-Schmidt algorithm [7,8].

The LLS method is used in many settings, such as linear and multiple regression, ANOVA and goodness of fit, the coefficient of determination, as a modelling workhorse and process modelling tool, polynomial fitting, and numerical smoothing and differentiation. Here, the LLS method is applied to multiple regression.

1.3. ABS Algorithm

ABS algorithm is based on the initials of Abaffy, Broyden and Spedicato, introduced in 1984 for solving determined and underdetermined linear system, which was later used in LLS, nonlinear equations, optimization problems, integer (Diophantine) equations and LP problems. The ABS algorithm works faster on vector parallel machines and is more implementable in stable way, more accurate than the traditional algorithm and moreover it unifies most algorithms for solving the linear system [9, 10]. Basic ABS algorithm for solving the following linear system is defined by:

(2)

(2)

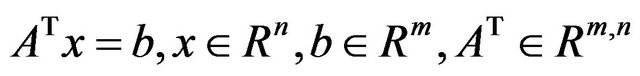

or equivalently

(3)

(3)

is based on the following procedure:

a) Assign an arbitrary  an arbitrary positive definite matrix

an arbitrary positive definite matrix ; set

; set ;

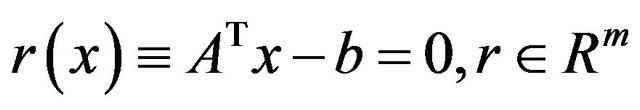

;

b) Compute the residual ; if

; if  stop,

stop,  is a solution; otherwise proceed;

is a solution; otherwise proceed;

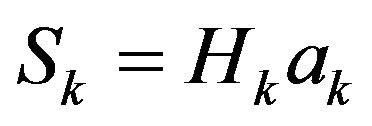

c) Compute the search vector by the following formula  where

where  is an arbitrary parameter vector, save for the well definiteness condition

is an arbitrary parameter vector, save for the well definiteness condition

(4)

(4)

where ![]() is the

is the  column of an arbitrarily assigned nonsingular matrix

column of an arbitrarily assigned nonsingular matrix .

.

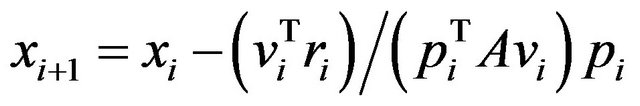

d) Update the estimate of the solution by the following formula

(5)

(5)

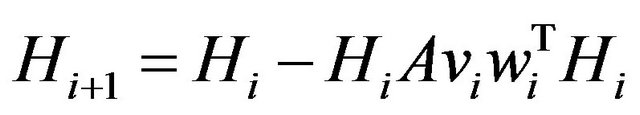

e) Update the matrix by the following formula

by the following formula

(6)

(6)

where  is an arbitrary parameter save for the well-definiteness condition

is an arbitrary parameter save for the well-definiteness condition .

.

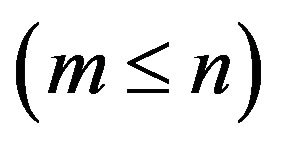

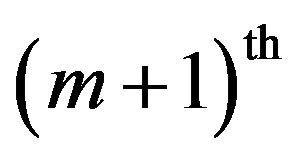

The basic form of the ABS algorithm computes a solution $x^+$ of the system $Ax = b$ of $m$ linear equations in $n$ unknowns by generating a sequence of approximations $x_i$ to $x^+$ with the property that the approximation $x_{i+1}$ obtained at the $i$-th iteration is a solution of the first $i$ equations. Linear systems were earlier solved by many such sequential methods; the oldest is the escalator method, whose iterates $x_i$ are similar to those generated by the LU algorithm of the ABS class when the latter is started with the zero vector as the initial estimate of $x^+$.
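The ABS procedure above can be sketched in C, the language the authors report using for their program. The sketch is an illustration under stated assumptions, not the authors' code: it fixes $V = I$ (so $v_i = e_i$ and $A^Tv_i = a_i$), $x_1 = 0$, $H_1 = I$ and the implicit LU parameter choice $z_i = w_i = e_i$, which gives the LU algorithm of the ABS class mentioned above. Because it does no pivoting, it needs every leading principal submatrix of $A$ to be nonsingular; the name `abs_implicit_lu`, the fixed size `N` and the tolerance `1e-14` are hypothetical choices.

```c
#include <assert.h>
#include <math.h>
#include <string.h>

#define N 3 /* illustrative fixed system size */

/* Basic ABS algorithm with V = I, x_1 = 0, H_1 = I and the implicit LU
   choice z_i = w_i = e_i. Returns 0 on success, -1 if a well-definiteness
   condition fails (a zero leading principal minor; no pivoting here). */
int abs_implicit_lu(const double A[N][N], const double b[N], double x[N])
{
    double H[N][N], p[N], Ha[N];
    assert(A != NULL && b != NULL && x != NULL);

    for (int r = 0; r < N; r++) {
        x[r] = 0.0;                                            /* x_1 = 0 */
        for (int c = 0; c < N; c++) H[r][c] = (r == c) ? 1.0 : 0.0; /* H_1 = I */
    }
    for (int i = 0; i < N; i++) {
        /* tau_i = a_i^T x_i - b_i, the i-th residual component v_i^T r_i */
        double tau = -b[i];
        for (int c = 0; c < N; c++) tau += A[i][c] * x[c];

        /* Ha = H_i a_i, where a_i = A^T v_i for v_i = e_i */
        for (int r = 0; r < N; r++) {
            Ha[r] = 0.0;
            for (int c = 0; c < N; c++) Ha[r] += H[r][c] * A[i][c];
        }
        /* With z_i = w_i = e_i, conditions (4) and w_i^T H_i a_i != 0
           both reduce to e_i^T H_i a_i != 0 */
        double denom = Ha[i];
        if (fabs(denom) < 1e-14) return -1;

        /* p_i = H_i^T e_i is the i-th row of H_i (also equal to w_i^T H_i);
           note that a_i^T p_i = denom */
        memcpy(p, H[i], sizeof p);

        /* Eq. (5): x_{i+1} = x_i - (tau_i / a_i^T p_i) p_i */
        for (int c = 0; c < N; c++) x[c] -= tau / denom * p[c];

        /* Eq. (6): H_{i+1} = H_i - (H_i a_i)(e_i^T H_i) / (e_i^T H_i a_i) */
        for (int r = 0; r < N; r++)
            for (int c = 0; c < N; c++) H[r][c] -= Ha[r] * p[c] / denom;
    }
    return 0;
}
```

After step $i$ the update (6) gives $H_{i+1}a_i = 0$, so later search vectors do not disturb the equations already solved; this is the property of the iterates described above.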

1.4. Regression and Multiple Regression

Regression analysis is used for modeling and analysis of the numerical data consisting of the values of one dependent variable and one or more independent variables. The dependent variable in the regression equation is modeled as a function of the independent variables, corresponding parameters “constants” and an “error term”. The error term is treated as a “random variable”. It represents unexplained variation in the dependent variable. The parameters are estimated to give a “best fit” of the data. Most commonly the best fit is evaluated by using the least squares method. Regression can be used for prediction (including forecasting of time-series data), inference and hypothesis testing, and modeling of causal relationships [11].

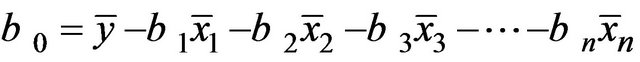

Multiple Regression equation has the form as earlier shown in Equation (1) for n number of independent variables. The coefficient  represents the constant coefficient, the predicted value of y when

represents the constant coefficient, the predicted value of y when  which is calculated by:

which is calculated by:

(7)

(7)

![]() and

and ![]() are the arithmetic means of x and y respectively.

are the arithmetic means of x and y respectively.

are the constants, called as the regression coefficients [12]. These coefficients are important in the sense that they act as the weight of each independent variable in the equation and in the prediction of dependent variable i.e. the strength of relation.

are the constants, called as the regression coefficients [12]. These coefficients are important in the sense that they act as the weight of each independent variable in the equation and in the prediction of dependent variable i.e. the strength of relation.
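Equation (7) is easy to check numerically. The following C sketch computes $\beta_0$ from the sample means and given slope coefficients $\beta_1, \dots, \beta_n$; the function name `regression_intercept` and the row-major layout (one row per observation, e.g. per patient) are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Intercept beta_0 of a multiple regression model, Eq. (7):
   beta_0 = ybar - beta_1 xbar_1 - ... - beta_n xbar_n.
   X holds m observations of n independent variables in row-major
   order (row k = observation k); beta holds beta_1..beta_n. */
double regression_intercept(const double *X, const double *y, size_t m, size_t n, const double *beta)
{
    assert(m > 0);
    double ysum = 0.0;
    for (size_t k = 0; k < m; k++) ysum += y[k];
    double b0 = ysum / (double)m;            /* start from ybar */
    for (size_t j = 0; j < n; j++) {
        double xsum = 0.0;
        for (size_t k = 0; k < m; k++) xsum += X[k * n + j];
        b0 -= beta[j] * (xsum / (double)m);  /* subtract beta_j * xbar_j */
    }
    return b0;
}
```

For example, with the single predictor x = 1, 2, 3, 4, responses y = 6, 5, 7, 10 and slope 1.4, the means are 2.5 and 7, so the sketch returns 7 - 1.4 × 2.5 = 3.5.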

2. Materials and Methods

In the proposed method, the LLS problems have been solved with the ABS class, using the modified Huang algorithm to solve the linear systems.

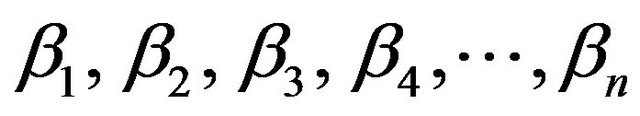

2.1. Huang Algorithm

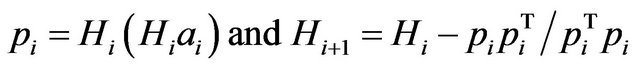

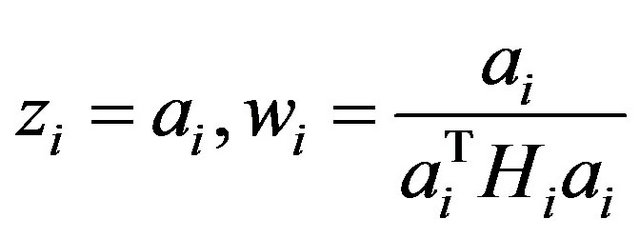

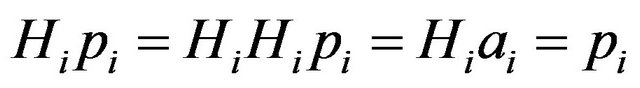

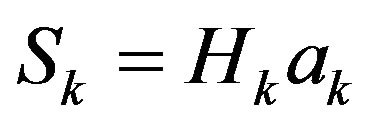

This method belongs to both the basic ABS class and the optimally scaled subclass. It can be obtained by the parameter choices:  in above algorithm. A mathematically equivalent, in the sense of generating the same iterates in exact arithmetic, but numerically more stable form is the modified Huang method, which is based upon formulas:

in above algorithm. A mathematically equivalent, in the sense of generating the same iterates in exact arithmetic, but numerically more stable form is the modified Huang method, which is based upon formulas:

(8)

(8)

The method generates orthogonal search vectors and the algorithm can solve a system of linear inequalities where in a finite number of steps it either proves that no feasible point exists or it finds the solution of the least Euclidean norm. The algorithm works on symmetric algorithm which corresponds on various parameters in ABS class like H1 symmetric positive definite and

(9)

(9)

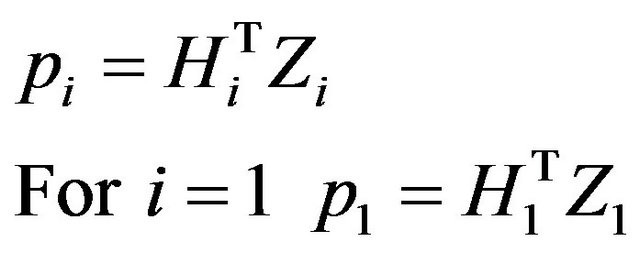

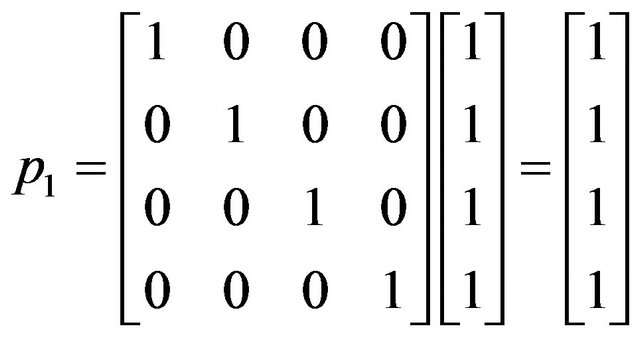

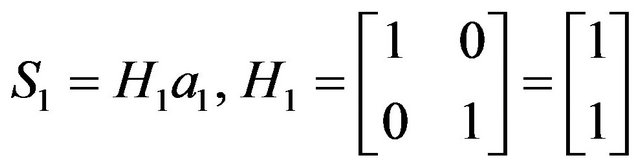

and in Huang algorithm corresponds H1 = I in symmetric algorithm. There are various versions of Huang algorithm according to various parameter choices and alternative formulations to compute p vectors and update H-matrices and the one used here takes:

(10)

(10)

which gives

. (11)

. (11)

Therefore, by putting , the recurrence relation

, the recurrence relation  to compute p vectors is obtained. Here, in the ith stage, the m-i vectors must be stored. In particular, this version is adequate for pivoting [13]. The time and space complexities of all the methods implied with ABS algorithm is discussed in [14] whereas modified Huang algorithm that has been used here, is found performing well at time and memory requirements.

to compute p vectors is obtained. Here, in the ith stage, the m-i vectors must be stored. In particular, this version is adequate for pivoting [13]. The time and space complexities of all the methods implied with ABS algorithm is discussed in [14] whereas modified Huang algorithm that has been used here, is found performing well at time and memory requirements.
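Equation (8) translates directly into code. The following C sketch is one possible implementation of the modified Huang method under stated assumptions (dense storage, $x_1 = 0$, $V = I$); the function name `abs_modified_huang`, the size limit `NMAX` and the tolerances used to detect dependent rows are illustrative choices, not values taken from the paper. Starting from $x_1 = 0$, the iterates stay in the row space of $A$, which is why a compatible system yields its least Euclidean norm solution.

```c
#include <assert.h>
#include <math.h>

#define NMAX 16 /* illustrative limit on the number of unknowns */

static double dot(const double *u, const double *v, int n)
{
    double s = 0.0;
    for (int k = 0; k < n; k++) s += u[k] * v[k];
    return s;
}

/* y = H v for the leading n x n block of H */
static void matvec(double H[NMAX][NMAX], const double *v, double *y, int n)
{
    for (int r = 0; r < n; r++) y[r] = dot(H[r], v, n);
}

/* Modified Huang method of the ABS class for A x = b, A m x n row-major.
   H_1 = I, x_1 = 0, z_i = w_i = a_i, with the reprojection of Eq. (8):
   p_i = H_i (H_i a_i),  H_{i+1} = H_i - p_i p_i^T / (p_i^T p_i).
   Returns 0 on success (x holds the least Euclidean norm solution of a
   compatible system) or -1 if the system is detected to be incompatible. */
int abs_modified_huang(const double *A, const double *b, int m, int n, double *x)
{
    double H[NMAX][NMAX], t[NMAX], p[NMAX];
    assert(m > 0 && n > 0 && n <= NMAX);
    for (int r = 0; r < n; r++) {
        x[r] = 0.0;
        for (int c = 0; c < n; c++) H[r][c] = (r == c) ? 1.0 : 0.0;
    }
    for (int i = 0; i < m; i++) {
        const double *a = &A[i * n];
        matvec(H, a, t, n);  /* t = H_i a_i */
        matvec(H, t, p, n);  /* p_i = H_i t (reprojection step) */
        double pp = dot(p, p, n);
        double tau = dot(a, x, n) - b[i]; /* tau_i = a_i^T x_i - b_i */
        if (pp <= 1e-24 * dot(a, a, n)) {
            /* a_i is (numerically) a combination of earlier rows */
            if (fabs(tau) > 1e-10 * (1.0 + fabs(b[i]))) return -1;
            continue;        /* redundant equation: x and H unchanged */
        }
        double alpha = tau / dot(a, p, n); /* Eq. (5) with v_i = e_i */
        for (int k = 0; k < n; k++) x[k] -= alpha * p[k];
        for (int r = 0; r < n; r++)        /* Eq. (8) update of H */
            for (int c = 0; c < n; c++) H[r][c] -= p[r] * p[c] / pp;
    }
    return 0;
}
```

For the single equation with $a_1 = (1, 1)^T$ and $b_1 = 2$ the sketch returns $(1, 1)^T$, the minimum-norm solution; for the rows $(1, 1)$ and $(2, 2)$ with right-hand sides 1 and 3 it reports incompatibility.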

2.2. Why the ABS Class for Solving Linear Least Squares Problems?

ABS algorithms have shown their superiority in comparisons of the modified Huang method with the Gram-Schmidt algorithm, the iterated stabilized Gram-Schmidt algorithm and the QR algorithm [14]. In [15], a comparison with codes in the NAG, LINPACK and LAPACK libraries shows that ABS methods are of comparable accuracy and are faster on vector/parallel machines. The testing was done on 243 well-conditioned, 45 ill-conditioned and 117 rank-deficient problems. The superiority was evident for rank-deficient and ill-conditioned problems, and was remarkable with respect to the relative error and accuracy [16]. A number of ABS-based approaches, applying these algorithms in various ways to the solution of LLS problems, are discussed in [17].

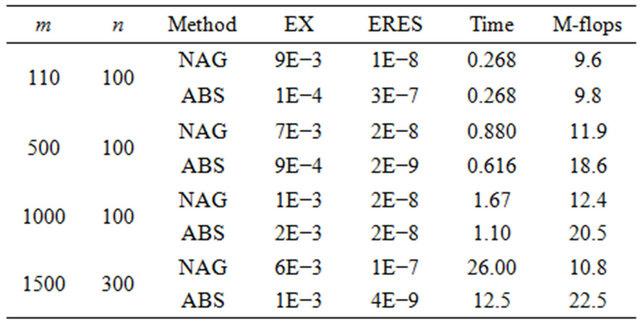

The testing was done on 4 families (A, B, C and D) of well-conditioned, ill-conditioned, full-rank and rank-deficient problems, plus a few specific benchmark problems. The gain in accuracy was particularly notable relative to the NAG QR code [18], which is based on QR factorization via Householder transformations. Because of this notably better computational performance, the ABS algorithm with the modified Huang method for LLS problems [19] was found suitable for solving the proposed problem. Table 1 compares the ABS algorithm with the NAG code; EX denotes the relative error in the solution, ERES the relative error in the residual, M-flops the number of megaflops, and m and n the numbers of rows and columns, respectively [15].

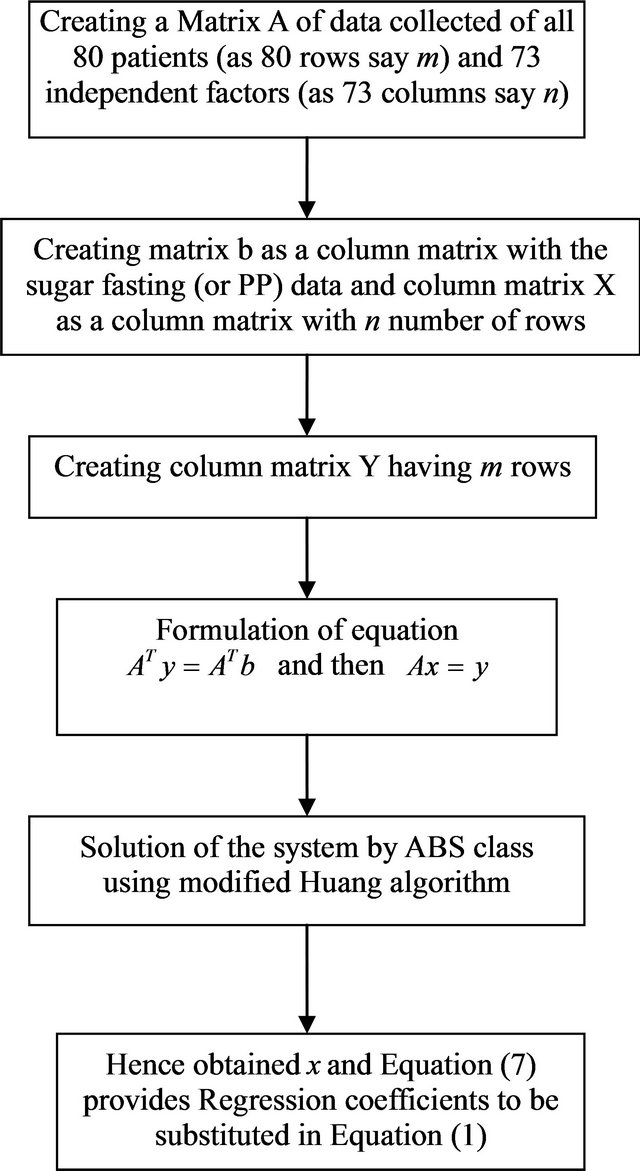

These results are one example of the efficiency of the ABS algorithm in terms of time and accuracy. Figure 1 shows a flowchart of the steps followed to model the regression equation using the ABS class of algorithms.

Table 1. Comparison of ABS vs NAG code for classical techniques for solving LLS.

Figure 1. Flowchart of the implementation of the ABS algorithm for detection of the sugar fasting level and PP.

2.3. Data Surveyed

A survey was carried out on 80 patients of an endocrinologist (one of the authors), recording 73 factors affecting diabetes. All 73 factors are taken as independent variables, and sugar fasting and Post-Prandial (PP) sugar as dependent variables. The factors affecting diabetes are: Age; Height; Weight; BMI; Waist Circumference; Waist Hip ratio; Biceps; Triceps; Suprailiac; Subscapular; Total lipids; Phospholipids; Triglycerides; Total Cholesterol; HDL; LDL; VLDL; HOMA-B; HOMA-R; Insulin; HbA1c; HbA1c (%); E:I Ratio; S:L Ratio; Pulse Rate; Fat in grams: L arm BMC; L arm Fat; L arm Lean; L arm Lean + BMC; L arm Total Mass; L arm % Fat; R arm BMC; R arm Fat; R arm Lean; R arm Lean + BMC; R arm Total Mass; R arm % Fat; Trunk BMC; Trunk Fat; Trunk Lean; Trunk Lean + BMC; Trunk Total Mass; Trunk % Fat; L Leg BMC; L Leg Fat; L Leg Lean; L Leg Lean + BMC; L Leg Total Mass; L Leg % Fat; R Leg BMC; R Leg Fat; R Leg Lean; R Leg Lean + BMC; R Leg Total Mass; R Leg % Fat; Subtotal BMC; Subtotal Fat; Subtotal Lean; Subtotal Lean + BMC; Subtotal Total Mass; Subtotal % Fat; Head BMC; Head Fat; Head Lean; Head Lean + BMC; Head Total Mass; Head % Fat; Total BMC; Total Fat; Total Lean; Total Lean + BMC; Total Mass; Total % Fat.

3. Numerical Solution

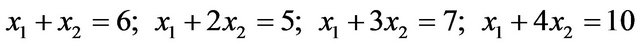

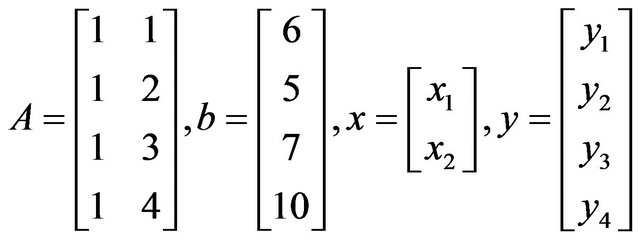

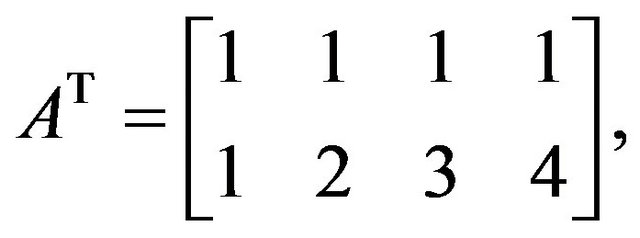

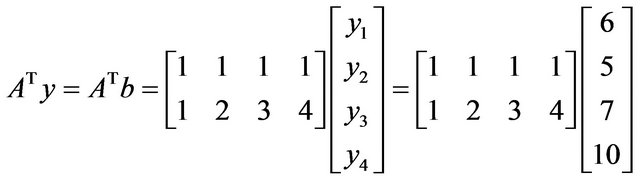

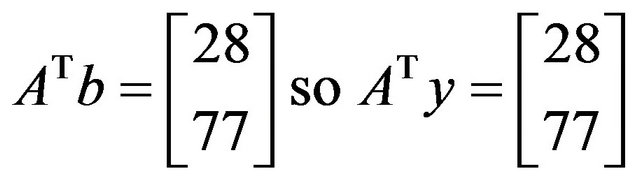

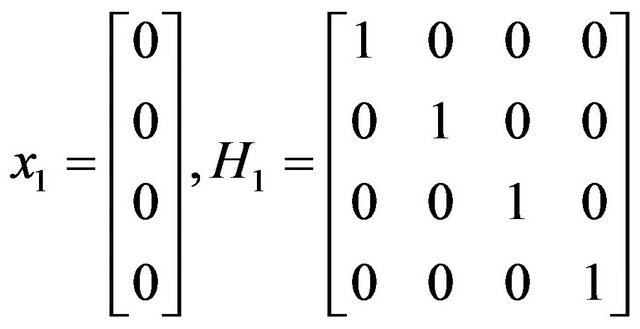

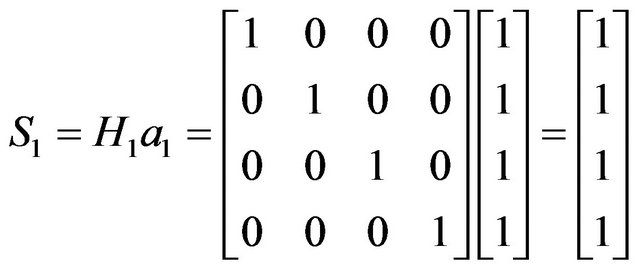

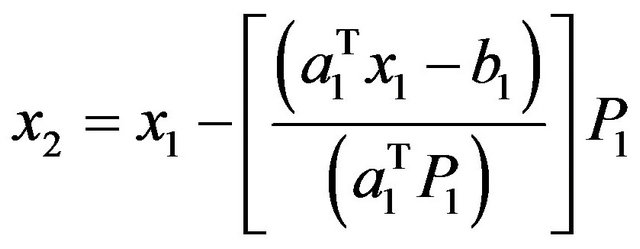

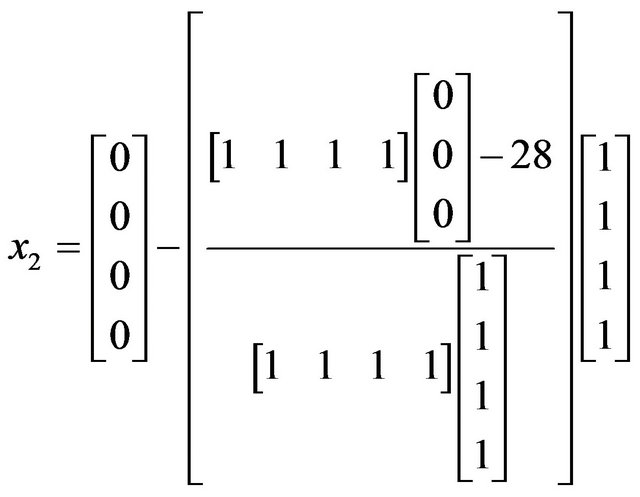

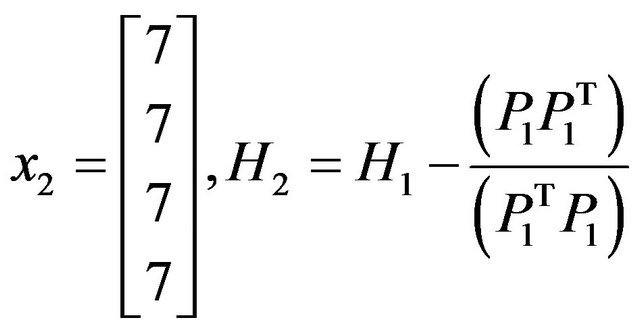

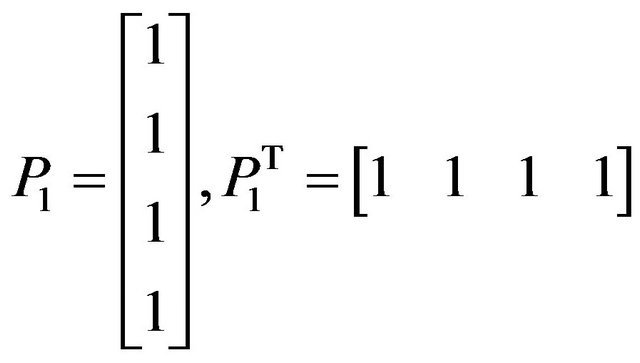

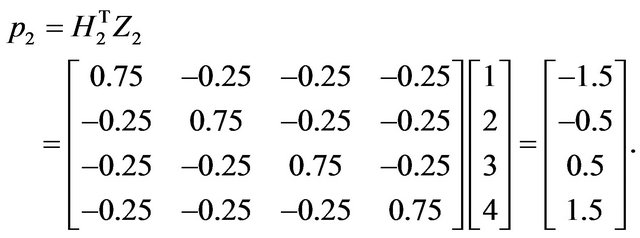

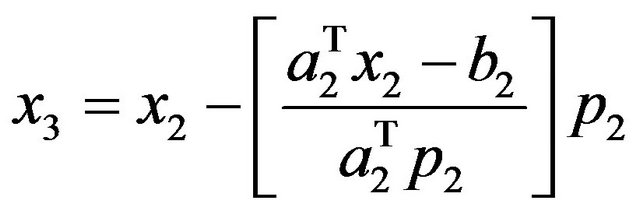

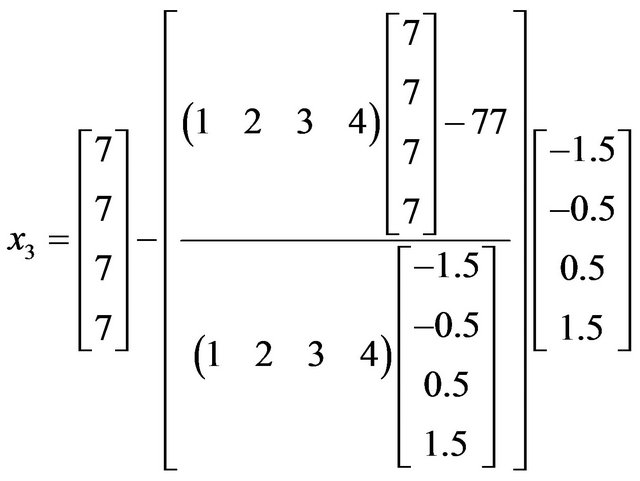

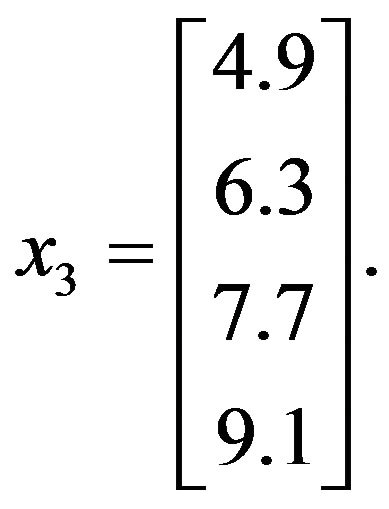

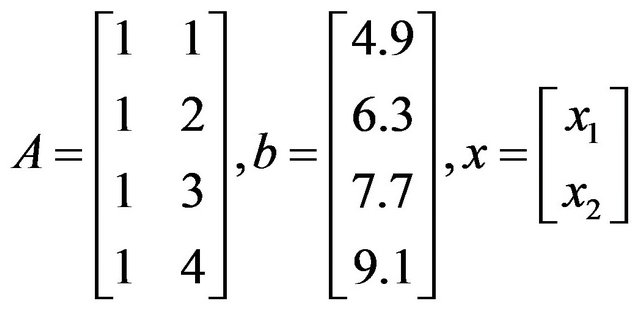

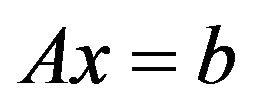

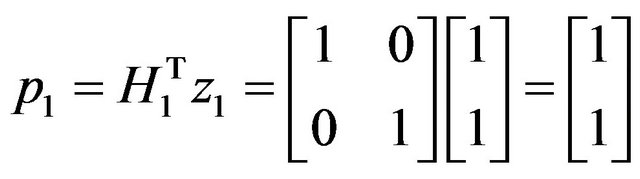

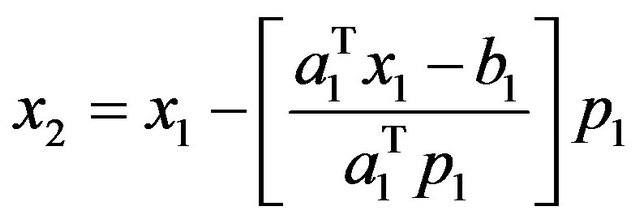

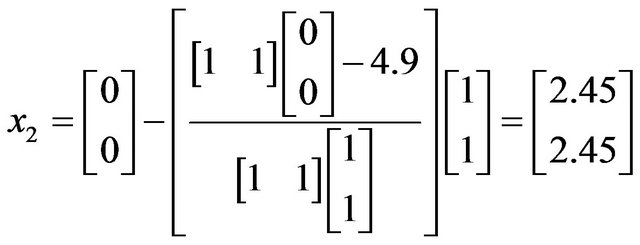

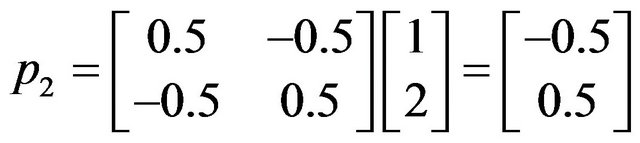

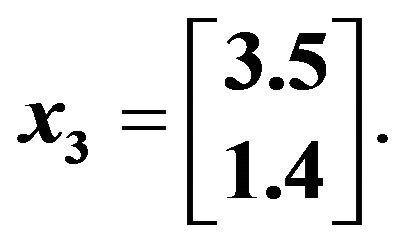

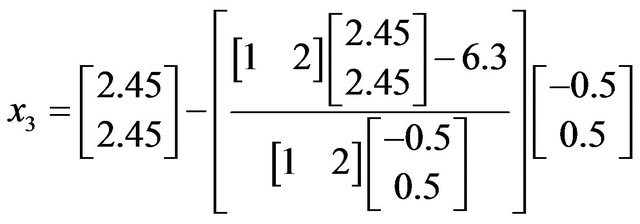

An illustrative example of the solution of LLS through the ABS class of linear systems, which has been verified with the other methods on wikipedia [20] is described as follows:

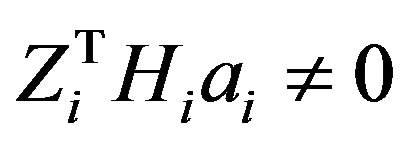

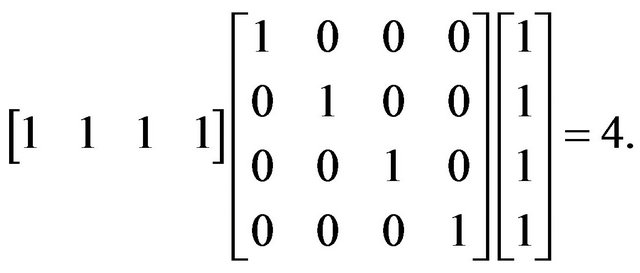

Comparison with  gives

gives

Let

,

,

Condition

Hence satisfied, Now,

Now,

Now,

The above equation is obtained for ABS linear problem and applying it to finding solution of Linear Least Square Problem,

Let for the equation

Now,

Hence, the solution has been verified with the other classical methods.
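To make the procedure concrete, the following hand computation applies the modified Huang method of Equation (8) ($H_1 = I$, $x_1 = 0$, $V = I$) to a small least squares problem. It assumes that the data are the four points (1, 6), (2, 5), (3, 7), (4, 10) of the Wikipedia motivational example [20], fitted by $y = \beta_0 + \beta_1 x$, and that the least squares problem is handed to the ABS solver through its normal equations $N x = c$ with $N = A^TA$, $c = A^Ty$ and $x = (\beta_0, \beta_1)^T$; both are assumptions for illustration and need not match the authors' exact steps. Here $n_i^T$ denotes the $i$-th row of $N$, playing the role of $a_i^T$ in (5) and (8).

$$
\begin{aligned}
&A = \begin{pmatrix} 1 & 1\\ 1 & 2\\ 1 & 3\\ 1 & 4 \end{pmatrix},\quad
y = \begin{pmatrix} 6\\ 5\\ 7\\ 10 \end{pmatrix},\quad
N = A^TA = \begin{pmatrix} 4 & 10\\ 10 & 30 \end{pmatrix},\quad
c = A^Ty = \begin{pmatrix} 28\\ 77 \end{pmatrix}\\[6pt]
&\text{Step 1: } p_1 = H_1(H_1 n_1) = (4,\ 10)^T,\quad \tau_1 = n_1^T x_1 - c_1 = -28,\quad n_1^T p_1 = 116\\
&\qquad x_2 = x_1 - \frac{\tau_1}{n_1^T p_1}\, p_1 = \frac{7}{29}(4,\ 10)^T = \left(\tfrac{28}{29},\ \tfrac{70}{29}\right)^T,\qquad H_2 = I - \frac{p_1 p_1^T}{116}\\[6pt]
&\text{Step 2: } H_2 n_2 = (10,\ 30)^T - \frac{340}{116}(4,\ 10)^T = \left(-\tfrac{50}{29},\ \tfrac{20}{29}\right)^T,\quad p_2 = H_2(H_2 n_2) = H_2 n_2\\
&\qquad \tau_2 = n_2^T x_2 - c_2 = \frac{2380}{29} - 77 = \frac{147}{29},\quad n_2^T p_2 = \frac{100}{29}\\
&\qquad x_3 = x_2 - \frac{147}{100}\, p_2 = \left(\tfrac{28 + 73.5}{29},\ \tfrac{70 - 29.4}{29}\right)^T = (3.5,\ 1.4)^T
\end{aligned}
$$

The result $\beta_0 = 3.5$, $\beta_1 = 1.4$ satisfies both normal equations ($4 \cdot 3.5 + 10 \cdot 1.4 = 28$ and $10 \cdot 3.5 + 30 \cdot 1.4 = 77$), i.e. it gives the least squares line $y = 3.5 + 1.4x$ obtained by the classical methods. In Step 2 the reprojection leaves $H_2 n_2$ unchanged because $H_2$ is an orthogonal projector in exact arithmetic; in floating point it is this extra projection that improves stability.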

4. Results and Conclusion

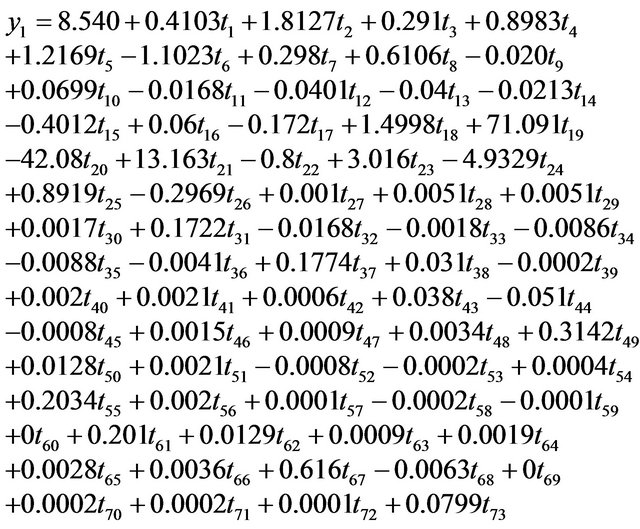

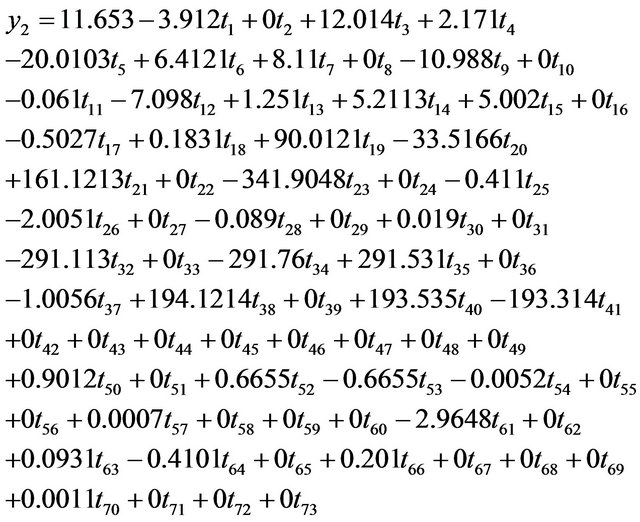

The proposed work has two aims: first, to find the regression equations relating all the factors affecting diabetes to the sugar fasting level and the PP level; second, to determine the strength of the relationship of the sugar fasting and PP levels with these factors, which is given by the weights of the factors in Equations (A) and (B) below. Generally available software for regression analysis limits the number of independent variables and the data size; for example, Microsoft Excel is limited to 16 variables. This limitation does not apply to the presented program, which was written in the C language. The regression equations obtained are:

(A)

(B)

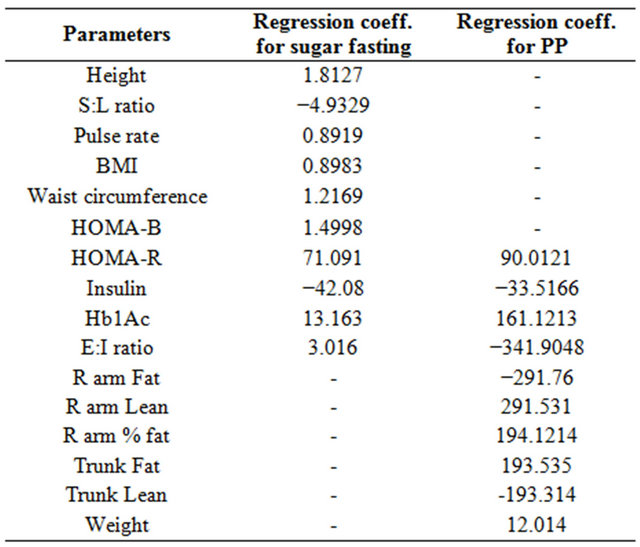

Here y1 and y2 denote the sugar fasting and PP levels respectively, and $x_1, \dots, x_{73}$ are the independent variables, in the same order as listed in Section 2.3. However, the regression coefficients alone do not establish the significance of the relationship between the parameters. The scope of the proposed study is limited to finding the regression equations via the ABS algorithm; it does not cover p values or the significance of the impact of the independent variables on the dependent ones. The weights in the regression equations are used for the further discussion in the proposed study and are summarized in Table 2.

For sugar fasting level:

1) It can be observed that the regression coefficients of HOMA-R, HbA1c, E:I ratio, HOMA-B, height, waist circumference, pulse rate, BMI, insulin (negative value) and S:L ratio (negative value) are the top 10 positive/negative parameters (largest regression coefficients in absolute value), indicating their observable impact on sugar fasting (y1).

Table 2. Top 10 most influencing factors affecting sugar fasting and PP.

2) The regression coefficients of subtotal lean, subtotal lean + BMC, subtotal total mass, total fat and total mass have the smallest values; hence these parameters have the least effect on either an increase or a decrease in the sugar fasting level (y1).

For PP level:

1) It can be observed that the regression coefficients of biceps, weight, HOMA-R, HbA1c, trunk lean, trunk BMC, R arm lean + BMC, E:I ratio (negative value), trunk lean + BMC (negative value) and insulin (negative value) are the top 10 positive/negative parameters (largest regression coefficients in absolute value), indicating their observable impact on PP (y2).

2) A large number of parameters have small regression coefficients, indicating little or no impact on PP (y2).
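The "top 10 positive/negative parameters" above are the coefficients with the largest absolute values. A minimal C sketch of that ranking follows; the names `top_k_by_magnitude` and `ranked_coef` are hypothetical, and the caller is assumed to pass only $\beta_1, \dots, \beta_{73}$ (not the intercept). Note that unstandardized coefficients depend on the measurement units of each variable, so this ranking reflects the raw weights exactly as described in the text.

```c
#include <assert.h>
#include <math.h>
#include <stdlib.h>

/* A coefficient together with the position of its variable. */
typedef struct {
    int index;
    double coef;
} ranked_coef;

/* qsort comparator: larger |coef| first */
static int by_magnitude_desc(const void *l, const void *r)
{
    double a = fabs(((const ranked_coef *)l)->coef);
    double b = fabs(((const ranked_coef *)r)->coef);
    return (a < b) - (a > b);
}

/* Writes into top[0..k-1] the 0-based indices of the k regression
   coefficients beta[0..n-1] with the largest absolute values, largest
   first. Ties are returned in unspecified order (qsort is not stable). */
void top_k_by_magnitude(const double *beta, int n, int k, int *top)
{
    assert(n > 0 && k >= 0 && k <= n);
    ranked_coef *r = malloc((size_t)n * sizeof *r);
    assert(r != NULL);
    for (int j = 0; j < n; j++) {
        r[j].index = j;
        r[j].coef = beta[j];
    }
    qsort(r, (size_t)n, sizeof *r, by_magnitude_desc);
    for (int j = 0; j < k; j++) top[j] = r[j].index;
    free(r);
}
```

With the hypothetical coefficients {0.2, -3.0, 1.5, 0.01, 2.5} and k = 3, the sketch returns the indices 1, 4 and 2, so the large negative coefficient ranks first.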

Future work could apply LLS via the ABS algorithm to further applications such as ANOVA and chi-square tests, computing the coefficient of determination to assess the strength of correlation, polynomial fitting, numerical smoothing and differentiation, and use as a modelling workhorse and in process modelling. In addition, further ABS algorithm variants could be used for LLS, linear programming and nonlinear programming problems, given their wide applicability to real-life problems. The binary diabetes parameters could be handled by logistic regression using the integer programming applications of ABS algorithms.

5. Acknowledgements

We wish to thank the Executive Director, Birla Institute of Scientific Research for the support given during this work. We gratefully acknowledge financial support by BTIS-sub DIC (supported by DBT, Govt. of India) and Advanced Bioinformatics Centre (supported by Govt. of Rajasthan) at Birla Institute of Scientific Research for infrastructure facilities for carrying out this work.

REFERENCES

- E. Spedicato, E. Bodon, Z. Xia and N. Mahdavi-Amiri, “ABS Method for Continuous and Integer Linear Equations and Optimization,” Central European Journal of Operations Research, Vol. 18, No. 1, 2010, pp. 73-95. doi:10.1007/s10100-009-0128-9

- S. Lalwani, R. Kumar, E. Spedicato and N. Gupta, “An Application of the ABS LX Algorithm to Multiple Sequence Alignment,” Iranian Journal of Operations Research, Vol. 3, 2012, pp. 31-45.

- S. Lalwani, R. Kumar, V. Rastogi and E. Spedicato, “An Application of the ABS LX Algorithm to Schedule Medical Residents,” Journal of Computer and Information Technology, Vol. 1, 2011, pp. 95-118.

- S. S. Rich, “Genetics of Diabetes and Its Complications,” Journal of the American Society of Nephrology, Vol. 17, No. 2, 2006, pp. 353-360. doi:10.1681/ASN.2005070770

- World Health Statistics Report, 2012. http://www.diabetes24-7.com/?p=1272

- A. Ramachandran, C. Snehalatha, A. S. Shetty and A. Nanditha, “Trends in Prevalence of Diabetes in Asian Countries,” World Journal of Diabetes, Vol. 3, No. 6, 2012, pp. 110-117. doi:10.4239/wjd.v3.i6.110

- C. L. Lawson and R. J. Hanson, “Solving Least Squares Problems,” Society for Industrial and Applied Mathematics, 1995. doi:10.1137/1.9781611971217

- Å. Björck, “Numerical Methods for Least Squares Problems,” Society for Industrial and Applied Mathematics, 1996. doi:10.1137/1.9781611971484

- J. Abaffy, C. G. Broyden and E. Spedicato, “A Class of Direct Methods for Linear Equations,” Numerische Mathematik, Vol. 45, 1984, pp. 361-376.

- J. Abaffy and E. Spedicato, “Numerical Experiments with the Symmetric Algorithm in the ABS Class for Linear Systems,” Optimization, Vol. 18, No. 2, 1987, pp. 197-212. doi:10.1080/02331938708843232

- J. O. Rawlings, S. G. Pantula and D. A. Dickey, “Applied Regression Analysis: A Research Tool,” 2nd Edition, Springer, Berlin, 1998. doi:10.1007/b98890

- S. Chatterjee and A. S. Hadi, “Regression Analysis by Example,” 4th Edition, Wiley Interscience, 2006. doi:10.1002/0470055464

- H. Y. Huang, “A Direct Method for the General Solution of a System of Linear Equations,” Journal of Optimization Theory and Applications, Vol. 16, No. 5, 1975, pp. 429-445.

- E. Spedicato and E. Bodon, “ABSPACK 1: A Package of ABS Algorithms for Solving Linear Determined, Underdetermined and Overdetermined Systems.” www.unibg.it/dati/persone/636/404.pdf

- E. Spedicato, “ABS Algorithm for Linear Systems and Linear Least Squares: Theoretical Results and Computational Performance,” Scientia Iranica, Vol. 1, 1995, pp. 289-303.

- J. Abaffy and E. Spedicato, “ABS Projection Algorithms: Mathematical Techniques for Linear and Nonlinear Equations,” Ellis Horwood Ltd., Chichester, 1989.

- E. Spedicato and E. Bodon, “Solution of Linear Least Squares via the ABS Algorithms,” Mathematical Programming, Vol. 58, No. 1-3, 1993, pp. 111-136. doi:10.1007/BF01581261

- Numerical Algorithms Group (NAG) Codes. http://www.nag.co.uk/

- E. Spedicato, “On the Solution of Linear Least Squares through the ABS Class for Linear Systems,” AIRO Conference, 1985, pp. 89-98.

- Linear Least Squares (Mathematics), Motivational Example on Wikipedia. http://en.wikipedia.org/wiki/Linear_least_squares_%28mathematics%29#Motivational_example

NOTES

*Corresponding author.