Least Squares Matrix Algorithm for State-Space Modelling of Dynamic Systems


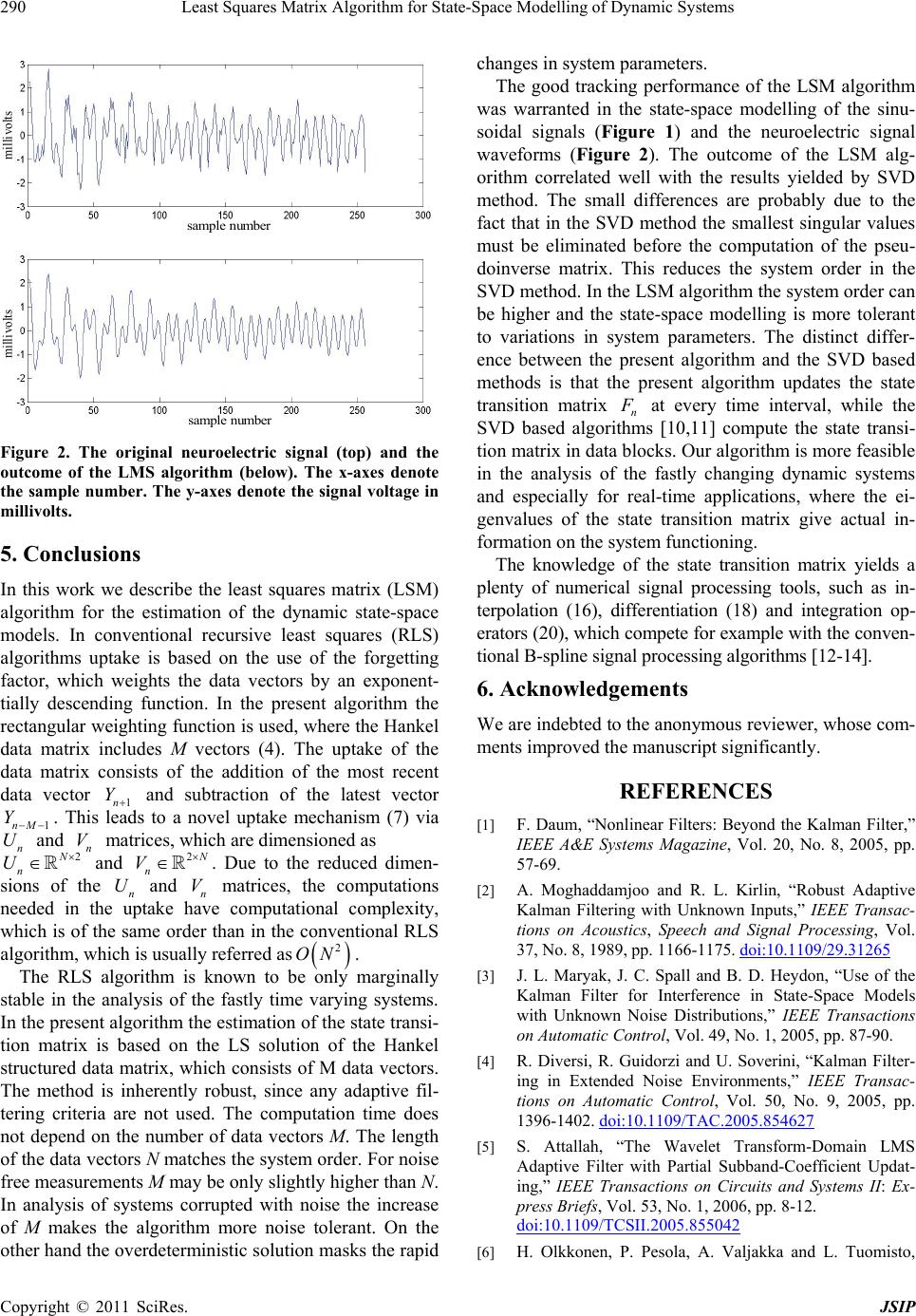

Figure 2. The original neuroelectric signal (top) and the outcome of the LSM algorithm (bottom). The x-axes denote the sample number. The y-axes denote the signal voltage in millivolts.

5. Conclusions

In this work we describe the least squares matrix (LSM) algorithm for the estimation of dynamic state-space models. In conventional recursive least squares (RLS) algorithms the update is based on a forgetting factor, which weights the data vectors by an exponentially decaying function. The present algorithm instead uses a rectangular weighting function, where the Hankel data matrix includes M vectors (4). Updating the data matrix consists of adding the most recent data vector $Y_{n+1}$ and subtracting the oldest vector $Y_{n-M+1}$. This leads to a novel update mechanism (7) via the $U_n$ and $V_n$ matrices, which are dimensioned as $N \times 2$ and $2 \times N$. Owing to the reduced dimensions of the $U_n$ and $V_n$ matrices, the update has a computational complexity of the same order as the conventional RLS algorithm, which is usually quoted as $O(N^2)$.
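The sliding-window update described above can be illustrated with a minimal sketch. It assumes the update is applied to the inverse of the normal matrix $R = H^T H$ through the Woodbury identity, which is one standard way to realise a rank-two update at $O(N^2)$ cost and may differ in detail from equation (7). The names `rank2_update`, `data_vector`, `P` and the random test signal are hypothetical choices made for this example.

```python
import numpy as np

def rank2_update(P, y_new, y_old):
    """Update P = inv(R) when R gains y_new y_new^T and loses y_old y_old^T.

    Assumption: R' = R + U V with U (N x 2) and V (2 x N), handled with the
    Woodbury identity, so only a 2 x 2 system is solved at each step.
    """
    U = np.column_stack((y_new, y_old))   # N x 2
    V = np.vstack((y_new, -y_old))        # 2 x N
    PU = P @ U                            # N x 2
    VP = V @ P                            # 2 x N
    core = np.eye(2) + V @ PU             # 2 x 2
    return P - PU @ np.linalg.solve(core, VP)

# Illustrative data only: a seeded random signal, not the paper's signals.
rng = np.random.default_rng(0)
signal = rng.standard_normal(60)
N, M = 3, 20                              # vector length and window size

def data_vector(n):
    """Length-N data vector ending at sample n."""
    return signal[n - N + 1:n + 1]

# Initial window: the first M data vectors, stacked as rows of H.
n0 = M + N - 2
H = np.array([data_vector(k) for k in range(n0 - M + 1, n0 + 1)])
P = np.linalg.inv(H.T @ H)

# Slide the rectangular window one sample at a time.
for n in range(n0, len(signal) - 1):
    P = rank2_update(P, data_vector(n + 1), data_vector(n - M + 1))

# Compare with a direct inverse computed from the final window.
n_end = len(signal) - 1
H_end = np.array([data_vector(k) for k in range(n_end - M + 1, n_end + 1)])
P_direct = np.linalg.inv(H_end.T @ H_end)
max_error = np.max(np.abs(P - P_direct))
```

In this sketch the per-step cost is independent of M, because each step touches only the entering and the leaving data vector.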

The RLS algorithm is known to be only marginally stable in the analysis of rapidly time-varying systems. In the present algorithm the estimation of the state transition matrix is based on the LS solution of the Hankel-structured data matrix, which consists of M data vectors. The method is inherently robust, since no adaptive filtering criteria are used. The computation time does not depend on the number of data vectors M. The length of the data vectors N matches the system order. For noise-free measurements M need be only slightly higher than N. For systems corrupted with noise, increasing M makes the algorithm more noise tolerant. On the other hand, the overdetermined solution masks rapid changes in the system parameters.

The good tracking performance of the LSM algorithm was verified in the state-space modelling of sinusoidal signals (Figure 1) and neuroelectric signal waveforms (Figure 2). The outcome of the LSM algorithm correlated well with the results yielded by the SVD method. The small differences are probably due to the fact that in the SVD method the smallest singular values must be eliminated before the computation of the pseudoinverse matrix, which reduces the system order. In the LSM algorithm the system order can be higher, and the state-space modelling is more tolerant to variations in system parameters. The distinct difference between the present algorithm and the SVD-based methods is that the present algorithm updates the state transition matrix at every time interval, while the SVD-based algorithms [10,11] compute the state transition matrix in data blocks. Our algorithm is therefore better suited to the analysis of rapidly changing dynamic systems and especially to real-time applications, where the eigenvalues of the state transition matrix give up-to-date information on the functioning of the system.
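To illustrate how the eigenvalues of the state transition matrix carry information about the system, the following sketch fits a transition matrix to a noise-free sinusoid with an ordinary batch least squares solve and reads the angular frequency from the eigenvalue angles. This is a generic illustration, not the recursive LSM update itself. The symbols `F`, `H0`, `H1` and the test frequency `omega` are assumptions introduced for this example.

```python
import numpy as np

# Illustrative noise-free test signal (assumed values, not from the paper).
omega = 0.4                    # angular frequency in radians per sample
N, M = 2, 10                   # system order and number of data vectors
k = np.arange(M + N)
signal = np.cos(omega * k + 0.3)

# Rows of H0 are the data vectors y_k; rows of H1 are the vectors y_{k+1}.
H0 = np.array([signal[i:i + N] for i in range(M)])
H1 = np.array([signal[i + 1:i + 1 + N] for i in range(M)])

# Least squares solution of H1 = H0 F^T, so that y_{k+1} = F y_k.
F_T, *_ = np.linalg.lstsq(H0, H1, rcond=None)
F = F_T.T

# For a pure sinusoid the eigenvalues lie on the unit circle at exp(+-j*omega).
eigvals = np.linalg.eigvals(F)
omega_est = np.max(np.abs(np.angle(eigvals)))
```

With noisy data a larger M would normally be used, which reflects the trade-off between noise tolerance and tracking speed noted in the conclusions.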

Knowledge of the state transition matrix provides a range of numerical signal processing tools, such as interpolation (16), differentiation (18) and integration (20) operators, which compete, for example, with the conventional B-spline signal processing algorithms [12-14].
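As a hedged illustration of why the state transition matrix gives access to such operators, assume that the matrix, here called $F$ (a symbol chosen for this sketch), advances the state vector by one sampling interval $T$ and has an eigendecomposition $F = E \Lambda E^{-1}$. The relations below are standard matrix-function identities for a linear time-invariant model; they may differ in form from equations (16), (18) and (20).

```latex
\begin{aligned}
X_{n+1} &= F X_n, \qquad F = E \Lambda E^{-1} = e^{AT}, \\
X_{n+\tau} &= F^{\tau} X_n = E \Lambda^{\tau} E^{-1} X_n, \quad 0 \le \tau \le 1
  \quad \text{(interpolation)}, \\
\left. \frac{dX}{dt} \right|_{t = nT} &= A X_n, \qquad
  A = \frac{1}{T} E (\log \Lambda) E^{-1} \quad \text{(differentiation)}, \\
\int_{nT}^{(n+1)T} X(t)\, dt &= A^{-1} (F - I) X_n
  \quad \text{(integration, assuming } A \text{ is invertible)}.
\end{aligned}
```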

6. Acknowledgements

We are indebted to the anonymous reviewer, whose comments improved the manuscript significantly.

REFERENCES

[1] F. Daum, “Nonlinear Filters: Beyond the Kalman Filter,” IEEE A&E Systems Magazine, Vol. 20, No. 8, 2005, pp. 57-69.

[2] A. Moghaddamjoo and R. L. Kirlin, “Robust Adaptive Kalman Filtering with Unknown Inputs,” IEEE Transactions on Acoustics, Speech and Signal Processing, Vol. 37, No. 8, 1989, pp. 1166-1175. doi:10.1109/29.31265

[3] J. L. Maryak, J. C. Spall and B. D. Heydon, “Use of the Kalman Filter for Inference in State-Space Models with Unknown Noise Distributions,” IEEE Transactions on Automatic Control, Vol. 49, No. 1, 2004, pp. 87-90.

[4] R. Diversi, R. Guidorzi and U. Soverini, “Kalman Filtering in Extended Noise Environments,” IEEE Transactions on Automatic Control, Vol. 50, No. 9, 2005, pp. 1396-1402. doi:10.1109/TAC.2005.854627

[5] S. Attallah, “The Wavelet Transform-Domain LMS Adaptive Filter with Partial Subband-Coefficient Updating,” IEEE Transactions on Circuits and Systems II: Express Briefs, Vol. 53, No. 1, 2006, pp. 8-12. doi:10.1109/TCSII.2005.855042

[6] H. Olkkonen, P. Pesola, A. Valjakka and L. Tuomisto,

Copyright © 2011 SciRes. JSIP