Journal of Signal and Information Processing

Vol.4 No.2(2013), Article ID:30995,6 pages DOI:10.4236/jsip.2013.42019

Robust Palmprint Recognition Base on Touch-Less Color Palmprint Images Acquired*

School of Information Science and Engineering, Shenyang University of Technology, Shenyang, China.

Email: sanghaif@163.com, 491582403@qq.com, hj4393@gmail.com

Copyright © 2013 Haifeng Sang et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received March 15th, 2013; revised April 14th, 2013; accepted April 22nd, 2013

Keywords: Robust Palmprint Recognition; Touch-Less; Skin-Color Thresholding; Local Binary Patterns (LBP); Chi Square Statistic

ABSTRACT

To make the palmprint recognition environment more flexible and to improve the accuracy of touchless palmprint recognition, this paper proposes a robust touchless palmprint recognition system based on color palmprint images. The system uses skin-color thresholding and a hand valley detection algorithm to extract the palmprint. The local binary pattern (LBP) operator is then applied to the palmprint to extract its features. Finally, the chi square statistic is used for classification. The experiments yield an equal error rate of 3.7668% and a correct recognition rate of 97.0142%. The results show that the approach is robust and efficient for color palmprint images acquired under lighting changes and cluttered backgrounds in a touchless palmprint recognition system.

1. Introduction

Palmprint recognition is a biometric technology that exploits the information on the palm to distinguish individuals. Palmprint capture methods are commonly divided into contact and contactless approaches [1]. In contact palmprint recognition systems, users must touch the sensor for their hand images to be acquired. This is unhygienic, the palmprint image may be distorted by the pressure of the hand on the sensor surface, and artifacts left on the sensor during acquisition can be exploited for illegitimate use. There is therefore a pressing need for contactless palmprint recognition systems, which are more user-friendly and hygienic. Recently, research in hand biometrics has turned toward the contactless approach. An important consideration in the contactless setting is how the hand can be detected and segmented from the background. To segment the hand from the background, Doublet et al. (2007) [2] applied a more complicated machine learning tool, neural networks, to model human skin color, but that paper did not propose a palmprint recognition approach for varying illumination. Poinsot et al. (2009) [3] also proposed a method to segment skin regions from non-skin regions, but that paper used a single green background and a simple thresholding method in RGB space to segment the hand from the background. The design and development of a contactless palmprint recognition system is challenging because it brings an unstable imaging environment. In particular, varying illumination seriously affects the ability of the system to recognize individuals. Thus, the system must be capable of recognizing palmprint images across lighting changes.

To address these challenges, this paper uses the following methods.

1) Skin-color thresholding: Segment the hand image from the background by using the skin-color thresholding method.

2) ROI location: A novel valley detection algorithm is used to find valley locations; these valleys serve as the base points to locate the ROI of the palm.

3) Feature extraction: Local binary patterns (LBP), which are robust to illumination variation, are applied to extract the texture features of the palmprint.

4) Feature matching: Feature matching is performed using the chi square statistic.

2. Palmprint Recognition Processing Steps

2.1. ROI Location

ROI location consists of three stages. First, a skin-color model is established from images collected under different illumination and cluttered-background conditions. Next, the hand is segmented from the background. Finally, the valleys between the fingers are found with a valley detection algorithm to extract the palmprint region.

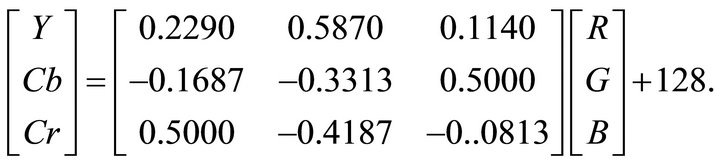

1) Skin-color model Skin color is important and relatively stable information for the hand, and it differs from most background colors. To segment the hand from the background based on color, we need a reliable skin-color model. We transform the skin-color model from RGB to the YCbCr space, which separates chrominance from luminance and thereby reduces the influence of lighting changes. The conversion formula between digital RGB data and YCbCr is:

(1)
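
As an illustration of such a conversion, the following minimal sketch uses the ITU-R BT.601 coefficients for 8-bit data. This is an assumption: the coefficients actually used in this paper may differ, and the function name `rgb_to_ycbcr` is hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to YCbCr.

    Assumption: ITU-R BT.601 coefficients with Y in [16, 235] and
    Cb, Cr in [16, 240]; the paper's own coefficients are not known here.
    """
    # Normalize the 8-bit channels to [0, 1] before applying the coefficients.
    rn, gn, bn = r / 255.0, g / 255.0, b / 255.0
    y = 16.0 + 65.481 * rn + 128.553 * gn + 24.966 * bn
    cb = 128.0 - 37.797 * rn - 74.203 * gn + 112.0 * bn
    cr = 128.0 + 112.0 * rn - 93.786 * gn - 18.214 * bn
    return y, cb, cr
```

Only the Cb and Cr components are needed for a chrominance-based skin model; for any gray pixel both stay at 128 regardless of brightness, which is the separation of color from luminance described above.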

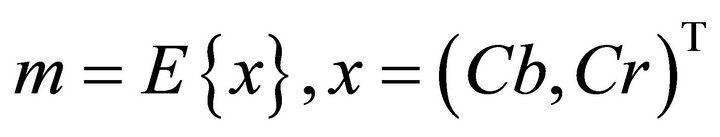

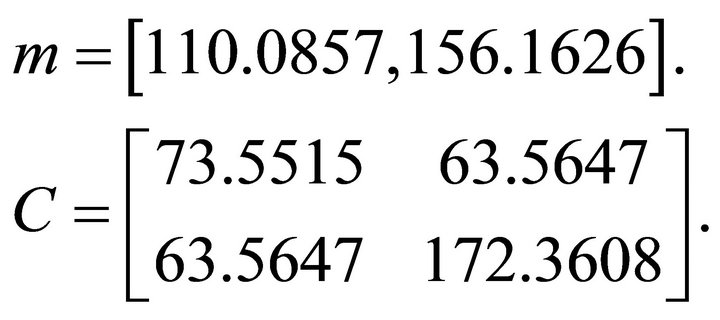

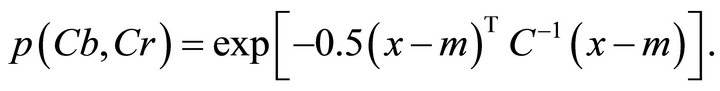

Research has shown that, although the skin colors of different people appear to vary over a wide range, they differ much less in color than in brightness [4]. Therefore, the skin-color distribution can be represented by a Gaussian distribution, expressed mathematically as follows:

$$m = E\{x\}, \quad x = (C_b, C_r)^T \tag{2}$$

$$C = E\{(x - m)(x - m)^T\} \tag{3}$$

where m and C are the mean and covariance.

To determine the values of m and C, we select 15 samples from 100 hand images collected under varying illumination and cluttered backgrounds, keep only the skin regions through manual segmentation, convert them to YCbCr space, and then compute the skin-color distribution in YCbCr space.

The values of m and C calculated from the samples are as follows:

(4)

With the values of m and C, we can obtain the likelihood of skin for any pixel of an image. The likelihood of skin for this pixel can be computed as follows:

$$P(C_b, C_r) = \exp\left[-\frac{1}{2}(x - m)^T C^{-1}(x - m)\right] \tag{5}$$

where $x = (C_b, C_r)^T$, and m and C are the mean and covariance of the skin-color distribution.
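
The model fitting and likelihood computation can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the covariance is the population estimate (divide by N), and the 2 × 2 inverse is written out in closed form.

```python
import math

def fit_skin_model(samples):
    """Estimate the mean m and 2x2 covariance C from (Cb, Cr) skin samples."""
    n = len(samples)
    m_cb = sum(s[0] for s in samples) / n
    m_cr = sum(s[1] for s in samples) / n
    # Population covariance (divide by n); an assumption of this sketch.
    c_bb = sum((s[0] - m_cb) ** 2 for s in samples) / n
    c_rr = sum((s[1] - m_cr) ** 2 for s in samples) / n
    c_br = sum((s[0] - m_cb) * (s[1] - m_cr) for s in samples) / n
    return (m_cb, m_cr), ((c_bb, c_br), (c_br, c_rr))

def skin_likelihood(cb, cr, m, C):
    """Return exp(-0.5 * (x - m)^T C^{-1} (x - m)) for x = (Cb, Cr)."""
    d_b, d_r = cb - m[0], cr - m[1]
    (a, b), (_, d) = C
    det = a * d - b * b  # must be non-zero for the inverse to exist
    # Quadratic form with the closed-form inverse of a symmetric 2x2 matrix.
    q = (d * d_b * d_b - 2.0 * b * d_b * d_r + a * d_r * d_r) / det
    return math.exp(-0.5 * q)
```

The likelihood equals 1 at the mean and decays toward 0 as the chrominance of a pixel moves away from the skin cluster, so evaluating it at every pixel yields a skin-likelihood image.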

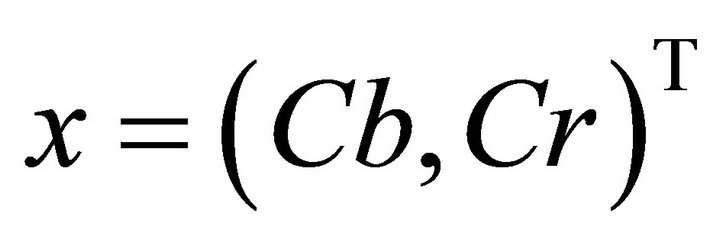

For example, Figure 1(a) shows an original color image, Figure 1(b) shows the image converted from RGB to YCbCr color space, and Figure 1(c) shows the skin-likelihood image.

2) Skin segmentation To segment the skin regions from the rest of the image, the skin-likelihood image is converted to a binary image through a thresholding process. After the binary image is obtained, morphological opening and closing operations are used to remove noise. The segmented binary image is shown in Figure 1(d).
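
A minimal sketch of this step is given below. The threshold value and the 3 × 3 square structuring element are assumptions, since neither is specified here, and the helper names are hypothetical. Images are plain nested lists so that the example stays self-contained.

```python
def threshold(likelihood, t):
    """Binarize a skin-likelihood image: 1 = skin, 0 = background."""
    return [[1 if v >= t else 0 for v in row] for row in likelihood]

def _morph(img, op):
    """Apply min (erosion) or max (dilation) over a 3x3 window.

    Windows are clipped at the image border, so pixels outside the
    image are simply ignored (an assumption of this sketch).
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[yy][xx]
                      for yy in range(max(0, y - 1), min(h, y + 2))
                      for xx in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = op(window)
    return out

def erode(img):
    return _morph(img, min)

def dilate(img):
    return _morph(img, max)

def opening(img):
    """Erosion followed by dilation: removes small isolated skin specks."""
    return dilate(erode(img))

def closing(img):
    """Dilation followed by erosion: fills small holes inside the hand."""
    return erode(dilate(img))
```

Applying `closing(opening(threshold(likelihood, t)))` removes isolated false-positive pixels first and then fills small gaps in the hand region.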

A novel hand valley detection algorithm is proposed to extract the ROI of the palm. A pixel must meet all four of the following conditions to be considered a valley. If any condition is not satisfied, the pixel is not a valley, and the algorithm moves on to test the next pixel. The four conditions are as follows:

a) Condition 1: Four points are placed around the current pixel. The distance of the points from the current pixel is α pixels. If one of the points falls in the non-hand region, Condition 1 is satisfied.

b) Condition 2: Eight points are placed around the current pixel. The distance of the points from the current pixel is α + γ pixels. If 1 ≤ points ≤ 4 fall in the non-hand region, Condition 2 is satisfied.

c) Condition 3: Sixteen points are placed around the current pixel. The distance of the points from the current pixel is α + γ + μ pixels. If 1 ≤ points ≤ 6 fall in the non-hand region, Condition 3 is satisfied.

d) Condition 4: A line is drawn from the current pixel toward the non-hand region. If it does not cross any hand region, the pixel is considered a valley location.

Figure 1. RGB image, YCbCr image, skin-likelihood image, and binary image.

In this paper, the values of α, γ and μ are all set to 10. This detection algorithm is based on the assumption that nobody can spread his or her fingers apart beyond 120°.
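
Conditions 1 to 3 can be sketched as follows. Several details are assumptions: the points are taken as equally spaced on a circle, points outside the image count as non-hand, and "one of the points" in Condition 1 is read as "at least one". Condition 4 (the line test) is omitted, and all function names are hypothetical.

```python
import math

def count_non_hand(mask, cx, cy, radius, n_points):
    """Count sample points on a circle that fall in the non-hand region.

    mask[y][x] is 1 for hand pixels and 0 for non-hand pixels.
    """
    h, w = len(mask), len(mask[0])
    count = 0
    for k in range(n_points):
        theta = 2.0 * math.pi * k / n_points
        x = int(round(cx + radius * math.cos(theta)))
        y = int(round(cy - radius * math.sin(theta)))  # image y axis points down
        inside = 0 <= x < w and 0 <= y < h
        if not inside or mask[y][x] == 0:
            count += 1
    return count

def passes_ring_conditions(mask, cx, cy, alpha=10, gamma=10, mu=10):
    """Check Conditions 1-3 only; Condition 4 is not implemented here."""
    c1 = count_non_hand(mask, cx, cy, alpha, 4)
    if c1 < 1:  # Condition 1 (read as "at least one", an assumption)
        return False
    c2 = count_non_hand(mask, cx, cy, alpha + gamma, 8)
    if not 1 <= c2 <= 4:  # Condition 2
        return False
    c3 = count_non_hand(mask, cx, cy, alpha + gamma + mu, 16)
    return 1 <= c3 <= 6  # Condition 3
```

A pixel just below a narrow gap between two fingers sees only a few non-hand points on each ring and passes, whereas a pixel deep inside the palm sees none and is rejected by Condition 1.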

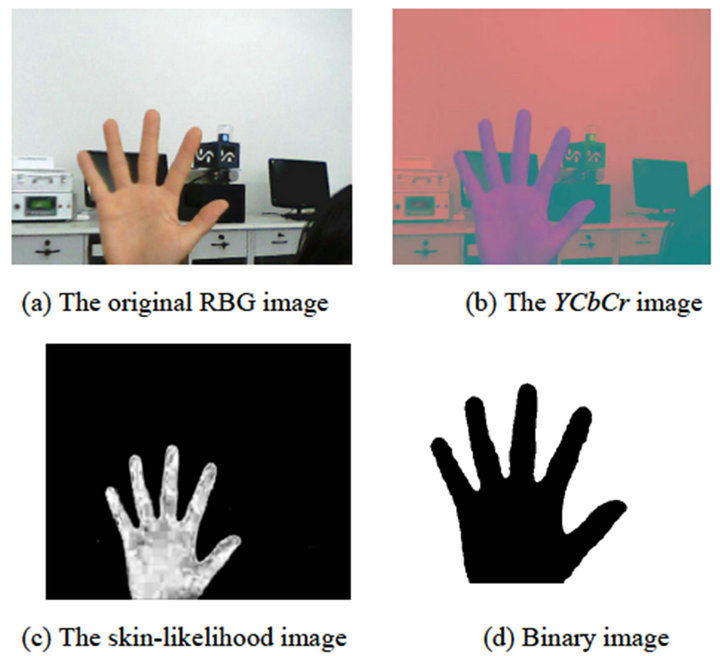

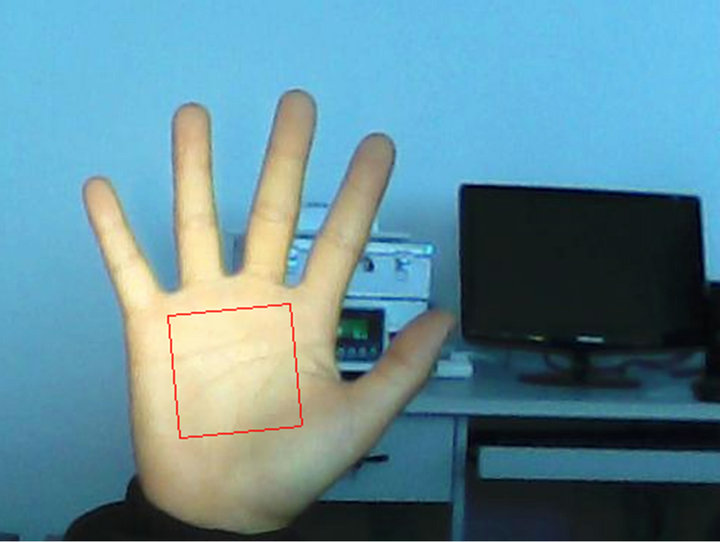

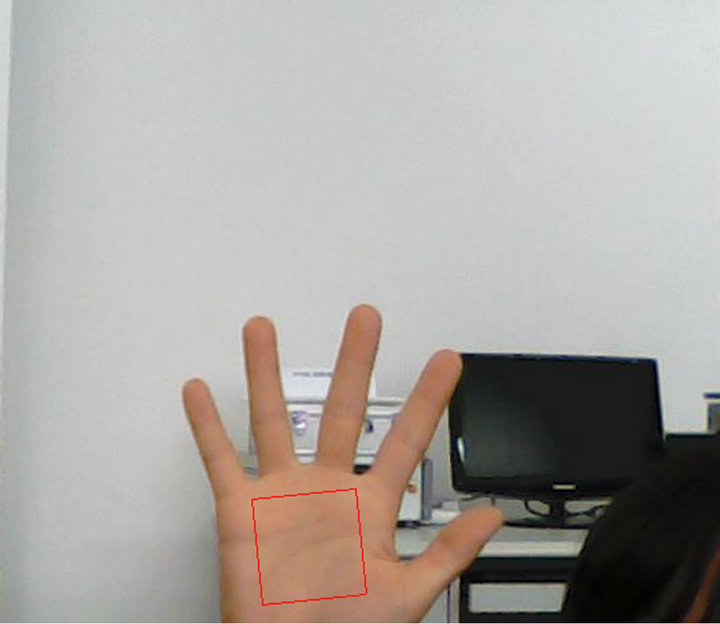

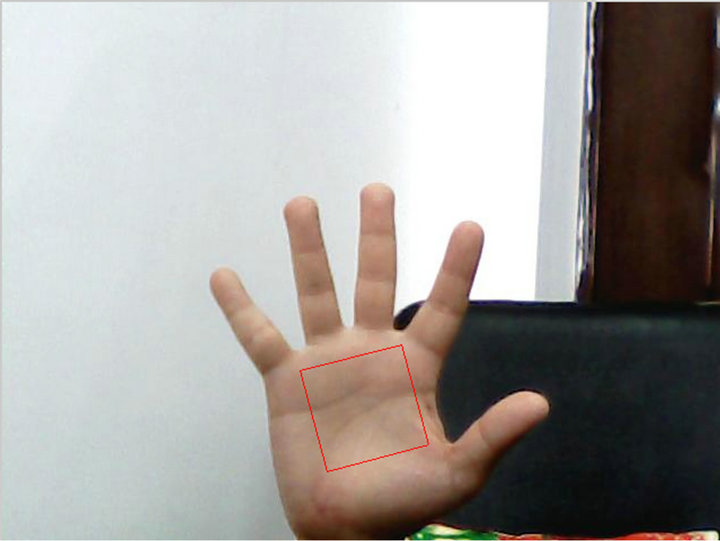

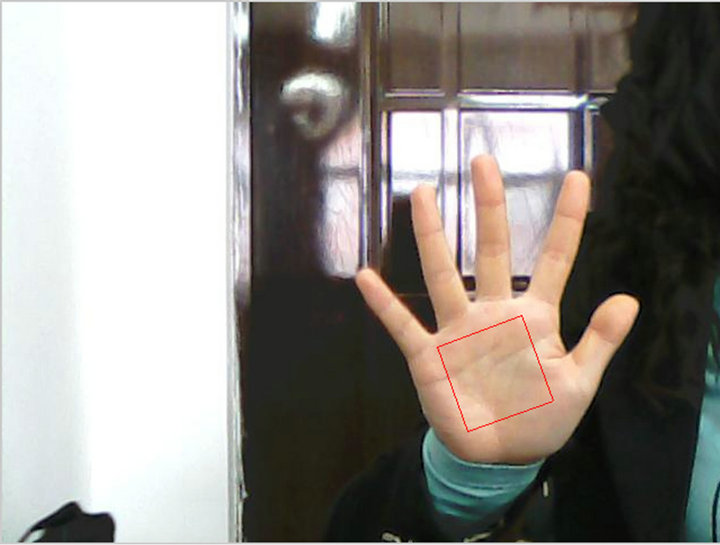

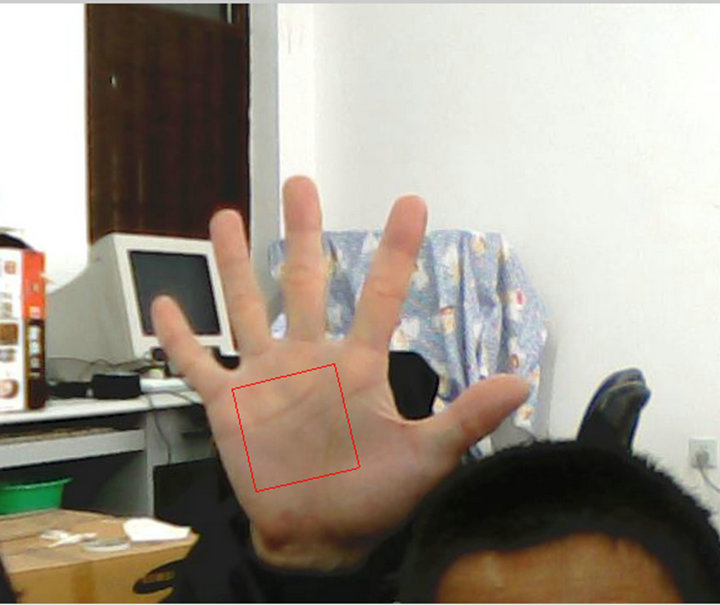

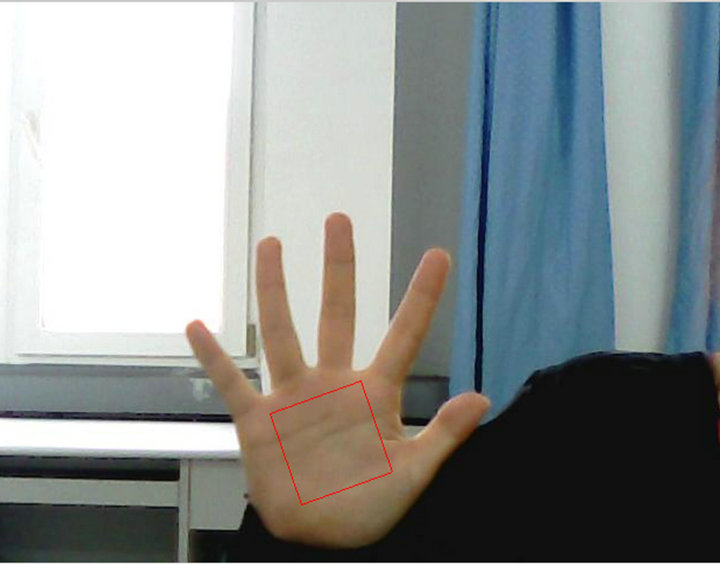

3) ROI extraction Because this paper uses a contactless method, the sizes and orientations of the ROIs differ between images. After obtaining the finger valleys P1, P2, P3, and P4, we use the relative length L (the distance between P1 and P3) to crop a square ROI. The line through P1 and P3 and the perpendicular through its midpoint define a new coordinate system for the coordinate transformation. The image is rotated according to the angle between the new coordinate system and the original one. The ROI is then resized to a standard size of 128 pixels × 128 pixels, as shown in Figure 2.
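
The alignment parameters can be computed from the two valley points as in the sketch below. The function name and the `side_ratio` parameter are hypothetical: the ratio between the ROI side and L is not stated here, so a value of 1.0 is assumed purely for illustration.

```python
import math

def roi_alignment(p1, p3, side_ratio=1.0, out_size=128):
    """Derive rotation and scaling parameters from valley points P1 and P3.

    p1, p3: (x, y) pixel coordinates of the two valleys.
    side_ratio: assumed ratio of the ROI side to the length L (hypothetical).
    """
    dx, dy = p3[0] - p1[0], p3[1] - p1[1]
    length = math.hypot(dx, dy)                       # relative length L
    midpoint = ((p1[0] + p3[0]) / 2.0, (p1[1] + p3[1]) / 2.0)
    angle_deg = math.degrees(math.atan2(dy, dx))      # rotation needed to level P1-P3
    scale = out_size / (side_ratio * length)          # factor to reach out_size pixels
    return length, midpoint, angle_deg, scale
```

Rotating the image by `-angle_deg` about `midpoint` makes the P1-P3 line horizontal, after which a square of side `side_ratio * L` can be cropped and scaled by `scale` to 128 × 128.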

2.2. Feature Extraction and Matching

1) Local binary patterns The LBP operator [5] is a simple yet powerful texture descriptor. Owing to its discriminative power and computational simplicity, the LBP texture operator has become a popular approach in various applications. Certain fundamental patterns in the bit string have been found to account for most of the information in the texture [5]. These patterns, called uniform patterns, have strong rotation invariance and gray-scale invariance. A binary pattern is uniform if it contains at most two bitwise transitions from 0 to 1 or vice versa when the bit pattern is traversed circularly. For example, the patterns 00000000, 11110000, and 00001100 are uniform. In the LBP approach to texture classification, the occurrences of the LBP codes in an image are collected into a histogram. The LBP histogram can be defined as:

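$$H(k) = \sum_{i}\sum_{j} I\{\mathrm{LBP}(i, j) = k\}, \quad k = 0, 1, \ldots, n - 1 \tag{6}$$

where n is the number of different labels produced by the LBP operator, i and j refer to the pixel location, and $I\{A\}$ equals 1 if A is true and 0 otherwise.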
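
The basic operator, the uniformity test, and the histogram can be sketched as below. The neighbor ordering and the use of `>=` when thresholding against the center pixel are assumptions of this sketch, and the function names are hypothetical.

```python
# Offsets (dy, dx) of the 8 neighbors at radius 1, clockwise from top-left.
# The starting point and direction are assumptions of this sketch.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
             (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(img, y, x):
    """Return the 8-bit LBP code of the interior pixel (y, x)."""
    center = img[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(NEIGHBORS):
        if img[y + dy][x + dx] >= center:
            code |= 1 << bit
    return code

def transitions(code, bits=8):
    """Count 0/1 transitions when the bit pattern is traversed circularly."""
    rotated = (code >> 1) | ((code & 1) << (bits - 1))
    return bin(code ^ rotated).count("1")

def is_uniform(code, bits=8):
    return transitions(code, bits) <= 2

def lbp_histogram(img, n_labels=256):
    """Histogram of LBP codes over all interior pixels of a gray image."""
    hist = [0] * n_labels
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            hist[lbp_code(img, y, x)] += 1
    return hist
```

With 8 neighbors there are 58 uniform patterns among the 256 possible codes; a common convention, assumed in this note rather than stated in the text, is to give each uniform pattern its own label and to pool all non-uniform patterns into one extra label.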

LBP operator is a flexible method; it allows a low dimensional feature to represent the information of the whole image. Once the LBP operator is applied to the palmprint image, the ROI of palm image is divided into local regions and LBP texture descriptors are extracted

Figure 2. Segment ROI of palmprint.

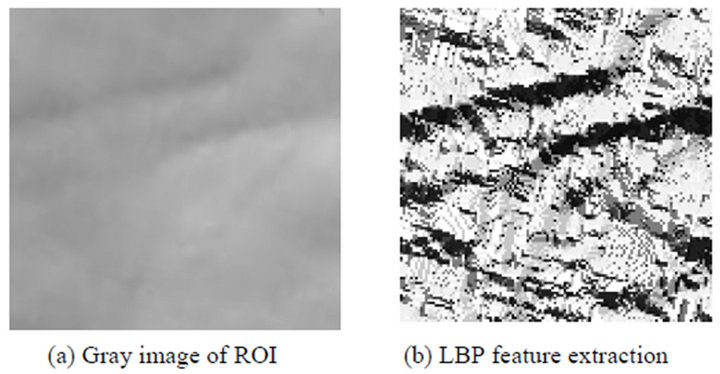

from each region independently. Then, LBP histogram of each region is combined together to form a single feature vector representing the whole image. As tradeoff between speed and performance, we divide the palmprint image into nine equally sized sub-windows, the size of each sub-windows is set to 42 pixels × 42 pixels. Uniform LBP in the (8, 1) neighborhood are applied on each of the sub-windows to extract the local texture feature, and concatenate the local features to get a global descriptor of the palm. Gray images of ROI and LBP feature extraction are shown in Figure 3.
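
The block-wise concatenation can be sketched as follows, taking an image of precomputed LBP labels as input. Two points are assumptions: each histogram has 59 bins (58 uniform labels plus one for all non-uniform patterns), and, since 3 × 42 = 126, the last two rows and columns of the 128 × 128 ROI are left unused. The function name is hypothetical.

```python
def block_feature(labels, grid=3, block=42, n_labels=59):
    """Concatenate per-block label histograms into one feature vector.

    labels: 2-D list of integer LBP labels in [0, n_labels).
    grid x grid blocks of block x block pixels are taken from the
    top-left corner (an assumption of this sketch).
    """
    feature = []
    for by in range(grid):
        for bx in range(grid):
            hist = [0] * n_labels
            for y in range(by * block, (by + 1) * block):
                for x in range(bx * block, (bx + 1) * block):
                    hist[labels[y][x]] += 1
            feature.extend(hist)
    return feature
```

Under these assumptions the global descriptor has 9 × 59 = 531 entries, and each block histogram sums to 42 × 42 = 1764 pixels.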

2) Feature matching After feature extraction, feature matching can use a histogram distance measure such as histogram intersection, the log-likelihood statistic, or the chi square statistic. In this paper, we choose the chi square statistic.

Chi square (χ2) statistic:

$$\chi^2(P, G) = \sum_{i} \frac{(P_i - G_i)^2}{P_i + G_i} \tag{7}$$

where P is a histogram from the training set, G is a histogram from the testing set, and i indexes the histogram bins. Each image in the training and testing sets is converted to an LBP histogram, and the distance from each training histogram to the testing histogram is calculated. The testing image is assigned to the class of the training image with the minimum distance.
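
A minimal sketch of the chi square distance and the minimum-distance decision is given below. Skipping bins where both histograms are zero is an assumption made to avoid division by zero, and the function names are hypothetical.

```python
def chi_square(p, g):
    """Chi square distance between two histograms of equal length."""
    total = 0.0
    for p_i, g_i in zip(p, g):
        s = p_i + g_i
        if s > 0:  # skip empty bins (assumption: they contribute nothing)
            total += (p_i - g_i) ** 2 / s
    return total

def nearest_class(test_hist, training):
    """Return the label of the training histogram closest to test_hist.

    training: list of (label, histogram) pairs.
    """
    best = min(training, key=lambda item: chi_square(item[1], test_hist))
    return best[0]
```

For example, `chi_square([1, 0], [0, 1])` is 2.0 and the distance of any histogram to itself is 0, so identical palmprint descriptors always match first.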

3. Results and Discussion

3.1. Sources of Experiment Data

The experimental data come from a database we collected ourselves. A touchless palmprint recognition system was built using a low-resolution CMOS web camera with a resolution of 640 × 480 to acquire hand images. The user is asked to present his or her hand about 30 cm to 50 cm in front of the web camera.

The database is composed of 200 hand images captured under varying illumination and cluttered-background conditions. The images come from 20 users, each of whom provided 10 images. The only capture restriction is that users must present their right hands in front of the camera with their fingers separated. Some ROIs of palmprint images located under different illumination and background conditions are shown in Figure 4.

Figure 3. Gray image of ROI and LBP feature extraction.

According to the ROI location results, the proposed algorithm performs well in a dynamic environment. The images in Figure 4 contain background objects such as a door, computer, box, and desk. Whether the lights are turned off and the curtains drawn or the hand is under strong light, the ROI of the palmprint can be located. The algorithm is thus able to locate the ROI of the palmprint under cluttered backgrounds and varying illumination.

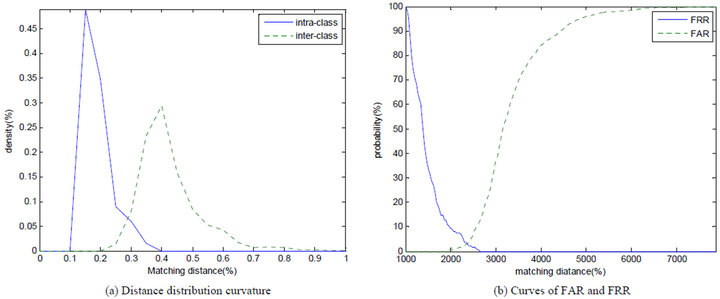

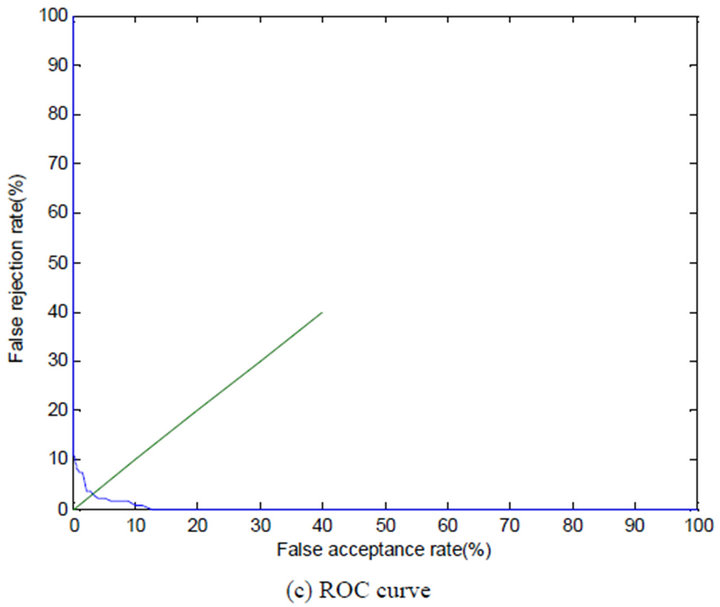

3.2. Discussion

The database contains 200 hand images, giving a total of 19,900 matchings, of which 900 are intra-class matchings and 19,000 are inter-class matchings. The experimental results are shown in Figure 5. The equal error rate (EER) is 3.7668%, the false acceptance rate (FAR) is 2.4622%, the false rejection rate (FRR) is 3.9598%, and the accuracy is 97.0142%. Since the user's hand does not touch any platform, the distance of the hand from the input sensor may vary. The palm image appears large and clear when the palm is placed near the webcam, and many detailed line features and ridges can be captured at close distance. As the hand moves away from the webcam, the focus fades and much print information is lost. When the illumination is too strong or too dark, or when reflective objects are in the background, the accuracy of the contactless palmprint recognition system is affected.
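
The matching counts follow from pairing every image with every other image: C(200, 2) = 19,900 pairs in total, of which 20 × C(10, 2) = 900 pair two images of the same user. The sketch below reproduces this arithmetic and shows how FAR and FRR could be computed from distance scores at a given threshold; the function names and the "accept if distance ≤ threshold" rule are assumptions.

```python
from math import comb

def matching_counts(n_users, images_per_user):
    """Return (total, intra-class, inter-class) pairwise matchings."""
    total = comb(n_users * images_per_user, 2)
    intra = n_users * comb(images_per_user, 2)
    return total, intra, total - intra

def far_frr(genuine, impostor, threshold):
    """FAR and FRR for distance scores, accepting when distance <= threshold.

    genuine: distances of intra-class matchings.
    impostor: distances of inter-class matchings.
    """
    frr = sum(d > threshold for d in genuine) / len(genuine)
    far = sum(d <= threshold for d in impostor) / len(impostor)
    return far, frr
```

Sweeping the threshold and locating the point where FAR and FRR coincide gives the equal error rate.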

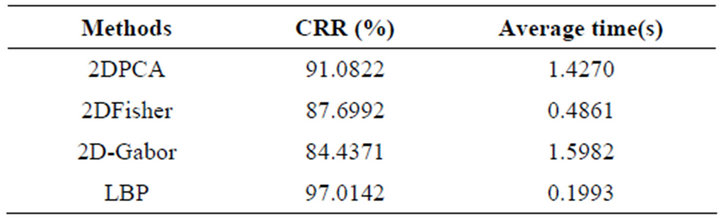

The database contains 200 hand images from 20 users. Of the 10 right-hand images provided by each user, 5 were used as the gallery set and the other 5 as the probe set. To corroborate the proposed system, we also applied some representative palmprint recognition techniques: 2DPCA, 2DFisher, and 2D-Gabor. Euclidean distance was used for 2DPCA and 2DFisher, Hamming distance for 2D-Gabor, and χ2 distance for LBP. The comparative results are given in Table 1.
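
The gallery/probe protocol and the correct recognition rate can be sketched as follows. Which five images of each user go into the gallery is not stated, so taking the first five is an assumption; the function names are hypothetical, and `distance` can be any histogram or vector distance.

```python
def split_gallery_probe(features_by_user, n_gallery=5):
    """Split each user's features into gallery and probe sets.

    Assumption: the first n_gallery features form the gallery.
    """
    gallery, probe = [], []
    for user, feats in features_by_user.items():
        gallery += [(user, f) for f in feats[:n_gallery]]
        probe += [(user, f) for f in feats[n_gallery:]]
    return gallery, probe

def correct_recognition_rate(gallery, probe, distance):
    """Fraction of probes whose nearest gallery entry has the same user."""
    correct = 0
    for true_user, feat in probe:
        nearest = min(gallery, key=lambda item: distance(item[1], feat))
        if nearest[0] == true_user:
            correct += 1
    return correct / len(probe)
```

With 20 users and 10 images each, this split gives 100 gallery and 100 probe features, and the CRR is the share of probe images whose nearest gallery image belongs to the same user.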

It can be observed that LBP performed better than the other methods, giving a correct recognition rate (CRR) of 97.0142%. LBP has another major advantage over the other methods: its computational simplicity. The LBP operator requires a time complexity of only O(2n), where n is the number of neighbors. In the experiment, the average time taken to extract the palmprint features using LBP was only 0.1993 s.

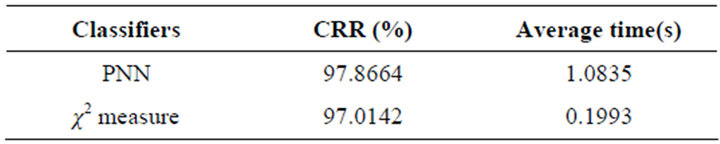

Apart from assessing the performance of LBP, a study was also conducted to determine a suitable feature matching tool for the proposed method. We compared the results of the χ2 measure and PNN applied to the LBP features; the comparison is given in Table 2.

It can be observed that PNN performed slightly better than the χ2 measure, but its average time was long.

4. Conclusions

This paper presents a robust palmprint recognition algorithm based on touchless color palmprint images. We use a novel algorithm to automatically detect and locate the ROI of the palm. The proposed algorithm works well in dynamic environments with cluttered backgrounds and varying illumination.

Experiments show that the proposed system is able to produce the desired result and cope with recognition challenges such as hand movement, lighting changes, and varying illumination conditions. Although the accuracy of a contactless palmprint recognition system is not as high as that of a contact palmprint system, a contactless system is more hygienic and user-friendly, makes the environment of palmprint recognition more flexible, and expands the applications of palmprint recognition.

Figure 4. Some ROI of the palm in varying illumination and complex background.

Figure 5. The experimental results.

Table 1. Accuracy of the methods and average time taken for feature extraction.

Table 2. CRR and speed taken for verification using χ2 and PNN.

REFERENCES

- D. Zhang, W.-K. Kong and J. You, “Online Palmprint Identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 25, No. 9, 2003, pp. 1041-1050. doi:10.1109/TPAMI.2003.1227981

- J. Doublet, M. Revenu and O. Lepetit, “Robust Grayscale Distribution Estimation for Contactless Palmprint Recognition,” Proceedings of the 1st IEEE International Conference in Biometrics: Theory, Applications, and Systems, Washington DC, 27-29 September 2007, pp. 1-6.

- A. Poinsot, F. Yang and M. Paindavoine, “Small Sample Biometric Recognition Based on Palmprint and Face Fusion,” 4th International Multi-Conference on Computing in the Global Information Technology, Riviera, 23-29 August 2009, pp. 118-122.

- J. Yang, W. Lu and A. Waibel, “Skin-Color Modeling and Adaptation,” Technical Report CMU-CS-97-146, Carnegie Mellon University, Pittsburgh, 1997.

- T. Ojala, M. Pietikäinen and T. Mäenpää, “Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 24, No. 7, 2002, pp. 971-987. doi:10.1109/TPAMI.2002.1017623

NOTES

*This work is supported by the Specialized Research Fund for the Doctoral Program of Higher Education of China (No. 20122102120004) and the Foundation of Liaoning Educational Committee (No. L2012034).