International Journal of Clinical Medicine

Vol. 3 No. 7A (2012), Article ID: 26343, 7 pages, DOI:10.4236/ijcm.2012.37A133

Physician-Rated Utility of Procedure Videos for Teaching Procedures in the Emergency Department, Overall and during Emergency Department Crowding


¹The Institute for Clinical Evaluative Sciences, Toronto, Canada; ²Division of Emergency Medicine, Department of Medicine, University of Toronto, Toronto, Canada; ³Department of Emergency Services, Sunnybrook Health Sciences Centre, Toronto, Canada.

Email: *clare.atzema@ices.on.ca

Received October 16th, 2012; revised November 26th, 2012; accepted December 24th, 2012

Keywords: Teaching; Education; Procedures; Emergency Department; Video

ABSTRACT

Background: Real-time use of procedure videos as educational tools has not been studied. We sought to determine whether viewing a video of a medical procedure before performing it in the emergency department improves the quality of teaching of procedures, and whether videos are particularly beneficial during periods of emergency department crowding. Methods: In this single-centre, prospective, before-and-after study, standardized data collection forms were completed by both trainees and supervising emergency physicians (EPs) at the end of each emergency department shift in the before (August 2008-March 2009) and after (August 2009-March 2010) phases. Online procedure videos were introduced on emergency department computers in the after phase. The primary outcome measure was EP rating of the quality of teaching provided (5-point Likert scale). The interaction between crowding and videos was also assessed, to determine whether videos provided a specific additional benefit during periods of emergency department crowding. Results: There were 1159 procedures performed by 192 trainees. The median number of procedures performed per shift was 1.0 (IQR 0 - 2.0). Mean EP rating of teaching provided was significantly higher in the group that viewed videos, at 4.2 versus 3.7 (p < 0.001). In the adjusted analysis, EP ratings increased by 0.5 with a video (p < 0.001), while the odds of a score of 5.0 were 2.2 times greater if a video was viewed (p = 0.03). The interaction of crowding and procedure videos was not significant (videos increased the average score by 0.24 more in times of crowding than in times of non-crowding, p = 0.19). Conclusions: Use of procedure videos was associated with EP perception of improved quality of teaching provided around procedures. While EPs rated the quality of their teaching as improved overall, the effect of videos on teaching quality did not differ significantly between crowded and non-crowded settings.

1. Introduction

The effective performance of medical procedures is a skill that all physicians must acquire. Procedural skills training is a “core competency” mandated by the Accreditation Council for Graduate Medical Education for all accredited residency programs [1]. Most trainees are required to work in the emergency department during medical school and residency; given the breadth of patient illness and injury, the emergency department is a key setting for the acquisition of procedural skills.

Procedure videos have previously been shown to improve the acquisition of procedural skills [2-5], but most of these studies took place in the controlled setting of procedural skills labs. No studies have assessed the utility of procedure videos used in real time in the emergency department. In addition, emergency department crowding has been documented in North America, the United Kingdom, and much of the Western world [6-9], and concern has been raised over how crowding affects the quality of teaching received by future physicians [10]. Innovative approaches to teaching procedures have been proposed to mitigate some of the postulated negative effects of crowding [11]; however, to our knowledge, no study has assessed whether procedure videos are an adaptive response to teaching in a crowded clinical environment.

In this study we examined the effect of the use of procedure videos on the quality of teaching of procedures provided to trainees, both overall and specifically during periods of emergency department crowding. We hypothesized that the supervising emergency physician (EP) would find that the videos improved the overall quality of the teaching provided to the trainee, and that this effect would be particularly pronounced during periods of emergency department crowding.

2. Methods

2.1. Study Design

This was a prospective before and after study. The before phase occurred from August 14, 2008 to March 15, 2009, when no procedure videos were available in the study emergency department, while the after phase occurred in the same emergency department from August 14, 2009 to March 15, 2010. Research Ethics Board approval was obtained from Sunnybrook Health Sciences Centre.

2.2. Setting and Study Participants

The study was performed at the academic emergency department of Sunnybrook Health Sciences Centre. The hospital is a level 1 trauma centre, with consultation services available for all major sub-specialties except obstetrics, including neurosurgery and vascular surgery. The annual emergency department census is 45,000, with an admission rate of 24.2%. The site is the home of the University of Toronto Emergency Medicine residency program for the city of Toronto, and the emergency department is a mandatory rotation for first-year residents from all University of Toronto residency programs and for final (fourth) year medical students from the associated medical school, as well as a core site for all emergency medicine residents. Residents in their fourth year and higher were excluded from the study: they would be expected to perform many of the most common procedures (e.g. suturing, casting) well without instruction, so these procedures would not require a procedure video.

2.3. Data Collection and Processing

For several years before study commencement, all trainees had been required to obtain an evaluation card from the supervising EP for each shift; colour-coded cards were available in all areas of the emergency department for medical students, junior residents, and senior residents. During the study period, two standardized, piloted data collection forms were stapled to every evaluation card, one labeled for the supervising EP and one for the trainee (medical student, junior resident, or third-year resident). Sealed boxes were placed in each emergency department area for collection of the completed study forms. Before study initiation (and again when the study re-commenced in the after phase), emails were sent to all EPs advertising the study and assigning each EP a code-name for signing the data collection form. Shifts where no eligible trainee was present were excluded. Emails were sent to incoming trainees assigning them a code-name and explaining the study, and one co-investigator (R.A.S.) met with each group at the start of the rotation to explain the study. Resident rotations were two months long, while medical students were present for one month.

Before the after phase began, procedure videos were installed on all emergency department computers and advertised to both incoming trainees and emergency physicians at the site. These included the videos available from the New England Journal of Medicine plus all 74 videos available through a subscription to Procedures Consult™. Study investigators (C.L.A. or R.A.S.) met with each EP individually during a shift, in the emergency department, to review how to access procedure videos on each computer and to encourage use of the videos.

2.4. Outcome Measures

The primary outcome measure was EP rating of the quality of the teaching provided around each procedure. We chose this outcome measure because we expected it to be less susceptible to a “ceiling effect”, or “leniency bias” [12,13], whereby trainees as well as evaluators tend to assign very high ratings to others (everyone is “above average”) rather than ratings distributed on a normal curve, potentially eliminating room for improvement in the after phase. It was postulated a priori that the secondary outcome measures, trainee rating of teaching and EP rating of trainee procedural skill, would be more susceptible to this effect than rating of one’s own teaching quality.

2.5. Variable Definitions

Procedures that were part of the physical exam (e.g. speculum exam) or were routinely performed by nurses at the study site (e.g. intravenous lines) were excluded. Crowding was measured in two ways. The first was a dichotomous measure: EP perception of crowding during the shift worked. EP perception of crowding has been shown to correlate well with many standard crowding measures [14]. EPs answered either “yes” or “no”, and if “yes”, indicated whether the EP was very busy managing the emergency department (emergency department crowded) or whether there were no beds in which to see patients, leaving the EP with more time available (emergency department not crowded, in terms of teaching availability). This distinction was intended to provide a more discerning assessment of crowding as it relates to teaching than standard measures of crowding. However, we also employed a standard, continuous crowding measure: the median emergency department length of stay (LOS) of all patients who were present in the emergency department during the eight-hour shift that the trainee worked, obtained from the Emergency Department Information System, software that tracks all emergency department patients. Emergency department LOS is a commonly used measure in crowding research [15,16], and has been shown to be a good proxy for emergency department crowding [17]. To internally validate the physician-perception measure of crowding against the standard measure (ED LOS), we also calculated the point-biserial correlation between the two measures.
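The two shift-level crowding measures and their point-biserial correlation can be sketched as follows. This is a minimal illustration, not the study's SAS code: the `(arrival, departure)` visit format, the function names, and the rule that a patient counts as "present" if their stay overlaps the shift window are assumptions, and the six example shifts are made up. The point-biserial coefficient is simply Pearson's r with the dichotomous EP perception coded 0/1.

```python
import math
from statistics import mean, median, pstdev

def median_los_for_shift(visits, shift_start, shift_end):
    """Median ED length of stay (hours) of patients present during a shift.

    `visits` is a hypothetical list of (arrival, departure) times in hours.
    Assumption: a patient counts as "present" if their stay overlaps the
    half-open shift window [shift_start, shift_end).
    """
    stays = [dep - arr for arr, dep in visits
             if arr < shift_end and dep > shift_start]
    return median(stays) if stays else math.nan

def point_biserial(binary, continuous):
    """Point-biserial correlation between a 0/1 variable and a continuous one.

    r_pb = (M1 - M0) / s_n * sqrt(p * q), with s_n the population standard
    deviation of the continuous variable; this equals Pearson's r computed
    on the 0/1 codes.
    """
    if len(binary) != len(continuous):
        raise ValueError("inputs must have the same length")
    ones = [y for x, y in zip(binary, continuous) if x == 1]
    zeros = [y for x, y in zip(binary, continuous) if x == 0]
    if not ones or not zeros:
        raise ValueError("both groups must be non-empty")
    p = len(ones) / len(continuous)
    q = 1.0 - p
    s_n = pstdev(continuous)
    return (mean(ones) - mean(zeros)) / s_n * math.sqrt(p * q)

# Illustrative (made-up) shift-level data: 1 = EP perceived crowding.
crowded = [1, 0, 1, 0, 1, 0]
median_los = [6.0, 4.0, 7.0, 5.0, 6.5, 4.5]
print(round(point_biserial(crowded, median_los), 2))  # 0.93
```

A significance test for r_pb is the usual test for a Pearson correlation, so any statistics package's correlation test can supply the p-value.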

2.6. Data Analysis and Sample Size Calculation

A five-point Likert scale, with 0.5 markings available between numerals, was used for the outcome measures. Differences between groups were assessed using Student’s t-tests [18]. Regression models were used to estimate the effect of viewing a video on teaching scores after adjusting for characteristics of the trainee and of the shift. These models were estimated using generalized estimating equation (GEE) methods to account for clustering of measurements within EPs. Specifically, EP rating of teaching quality, trainee rating of teaching received, and EP rating of trainee skill were assessed using linear regression analyses estimated with GEEs. As a secondary analysis, the Likert scale was dichotomized a priori at “5” vs. less than 5, and a logistic regression model was estimated using GEE methods. Covariates included the type of emergency department shift and area of the department (day in acute/major area vs. day intermediate vs. day minor vs. evening major vs. evening intermediate vs. minor intermediate vs. night shift), the trainee level (medical student vs. junior resident vs. third-year resident), and a crowding measure.
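The clustered linear model above can be sketched in Python with NumPy. This is not the authors' SAS analysis: the article does not state which working correlation structure was used, so this sketch assumes an independence working correlation. Under that assumption the GEE point estimates coincide with ordinary least squares, and clustering of ratings within EPs enters only through the robust "sandwich" variance. The function names, the simulated data, and its effect sizes are hypothetical.

```python
import numpy as np

def gee_independence_linear(X, y, clusters):
    """Linear GEE (identity link) with an independence working correlation.

    Returns (beta, robust_se). Point estimates equal OLS; standard errors use
    the cluster-robust sandwich estimator, with clusters = supervising EPs.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    clusters = np.asarray(clusters)
    bread = np.linalg.inv(X.T @ X)
    beta = bread @ X.T @ y
    resid = y - X @ beta
    meat = np.zeros((X.shape[1], X.shape[1]))
    for c in np.unique(clusters):
        idx = clusters == c
        score = X[idx].T @ resid[idx]  # cluster's estimating-equation contribution
        meat += np.outer(score, score)
    cov = bread @ meat @ bread
    return beta, np.sqrt(np.diag(cov))

def simulate_ratings(n_eps=38, per_ep=20, effect=0.5, seed=0):
    """Hypothetical ratings nested within EPs, sharing an EP-level effect."""
    rng = np.random.default_rng(seed)
    clusters = np.repeat(np.arange(n_eps), per_ep)
    video = rng.binomial(1, 0.3, size=clusters.size)
    ep_effect = rng.normal(0.0, 0.3, size=n_eps)[clusters]
    y = 3.7 + effect * video + ep_effect + rng.normal(0.0, 0.8, size=clusters.size)
    X = np.column_stack([np.ones(clusters.size), video])  # intercept, video
    return X, y, clusters

X, y, eps = simulate_ratings()
beta, se = gee_independence_linear(X, y, eps)
print("video effect:", beta[1].round(2), "robust SE:", se[1].round(3))
```

The dichotomized secondary analysis would use the same estimating-equation idea with a logit link, which requires iterative fitting rather than the closed form used here.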

To assess whether the effect of videos on teaching quality differed between periods of emergency department crowding and non-crowding, an interaction between the crowding measure and the indicator variable denoting whether a video was viewed was added to the regression model. The coefficient for the interaction variable estimates how much the change in Likert scale rating associated with using a video during periods of crowding differs from the change associated with using a video during periods of non-crowding.
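The interaction logic can be written out as a worked equation. The symbols are introduced here for illustration and the article does not print its model: $Y$ is the EP rating, $V$ the video indicator, $C$ the dichotomous crowding indicator, and $\mathbf{Z}$ the remaining covariates.

$$
\mathbb{E}[Y \mid V, C, \mathbf{Z}] = \beta_0 + \beta_V V + \beta_C C + \beta_{VC}\, V C + \boldsymbol{\gamma}^{\top}\mathbf{Z}
$$

$$
\Delta_{\text{non-crowded}} = \beta_V, \qquad \Delta_{\text{crowded}} = \beta_V + \beta_{VC}, \qquad \Delta_{\text{crowded}} - \Delta_{\text{non-crowded}} = \beta_{VC}
$$

With the estimates reported in the Results ($\hat\beta_V \approx 0.41$ and $\hat\beta_V + \hat\beta_{VC} \approx 0.65$), the interaction coefficient is $\hat\beta_{VC} \approx 0.65 - 0.41 = 0.24$. When crowding is instead the continuous median LOS, $\beta_{VC}$ is read as the change in the video effect per one-hour increase in median LOS.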

Using Student’s t-test to compare the mean EP rating between study periods, 64 ratings per time period were required to have 80% power to detect a mean change of 0.5 units (our a priori effect size). This assumes α = 0.05, β = 0.2, mean ratings of 3.5 and 4.0 in the two groups, and a standard deviation of 1.0 within each group. All analyses were performed with SAS software (Version 9.2, SAS Institute Inc., Cary, NC).
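The sample size of 64 per period can be reproduced approximately. The article does not say which formula or software produced it, so this sketch assumes the standard normal-approximation formula for a two-sided, two-sample comparison of means, n = 2σ²(z₁₋α/₂ + z₁₋β)²/δ², plus Guenther's small-sample correction z₁₋α/₂²/4 to approximate the t-test. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Per-group sample size for a two-sided, two-sample comparison of means.

    Returns (normal-approximation n, n with Guenther's t-test correction).
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = std_normal.inv_cdf(power)           # about 0.84 for 80% power
    n_normal = 2 * (sd * (z_alpha + z_beta) / delta) ** 2
    n_t = n_normal + z_alpha ** 2 / 4            # small-sample correction
    return math.ceil(n_normal), math.ceil(n_t)

print(n_per_group(delta=0.5, sd=1.0))  # (63, 64)
```

The normal approximation alone gives about 62.8 (rounded up to 63); the correction for estimating the standard deviation adds roughly one rating per group, matching the 64 stated above.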

3. Results

During the study period, 1159 procedures were performed by 192 trainees, supervised by 38 EPs during 921 shifts. Of the 70 procedure types performed, the most common were sutures (25.8%), splinting/casting (13.9%), arterial blood gases (6.6%), nerve blocks (3.9%), and intubations (3.4%). During the before phase, 355 procedures were performed during 274 shifts (by 81 trainees), and in the after phase 804 procedures were performed during 647 shifts (by 113 trainees, including two who participated in both the before and after phases); 107 of the after-phase procedures were performed after a procedure video was viewed in the emergency department. Of the 192 trainees, 96 (50%) were medical students: 38 (47%) in the before phase and 58 (51%) in the after phase. The median number of procedures performed per shift was 1.0 (IQR 0 - 2.0) overall and in each phase.
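The phase-level counts above can be cross-checked against the study-wide totals with simple arithmetic. The sketch below uses only numbers stated in this paragraph; the dictionary layout and function name are illustrative.

```python
# Counts exactly as reported in the Results paragraph; nothing new is added.
BEFORE = {"procedures": 355, "shifts": 274, "trainees": 81, "students": 38}
AFTER = {"procedures": 804, "shifts": 647, "trainees": 113, "students": 58}
TRAINEES_IN_BOTH_PHASES = 2
VIDEO_PROCEDURES = 107  # after-phase procedures preceded by a video

def reconcile(before, after, in_both, video_procedures):
    """Recompute the study-wide totals and percentages from the phase counts."""
    unique_trainees = before["trainees"] + after["trainees"] - in_both
    return {
        "procedures": before["procedures"] + after["procedures"],
        "shifts": before["shifts"] + after["shifts"],
        "trainees": unique_trainees,
        "video_uptake_pct": round(100 * video_procedures / after["procedures"], 1),
        "students_pct_before": round(100 * before["students"] / before["trainees"]),
        "students_pct_after": round(100 * after["students"] / after["trainees"]),
        # Summing the two phases reproduces the reported 96 medical students.
        "students_pct_overall": round(
            100 * (before["students"] + after["students"]) / unique_trainees),
    }

print(reconcile(BEFORE, AFTER, TRAINEES_IN_BOTH_PHASES, VIDEO_PROCEDURES))
```

The recomputed values (1159 procedures, 921 shifts, 192 unique trainees, 13.3% video uptake, and 47%, 51%, and 50% medical students) agree with the figures reported in the text.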

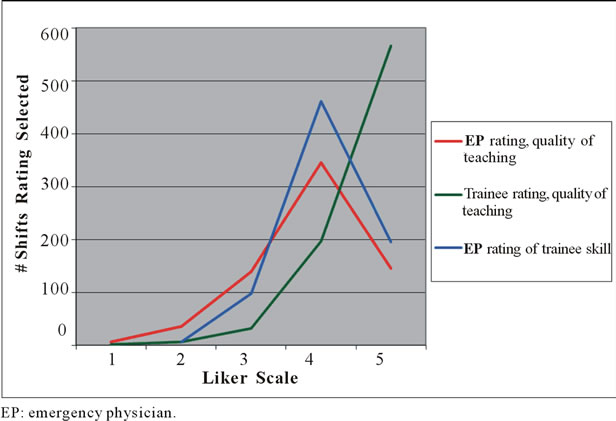

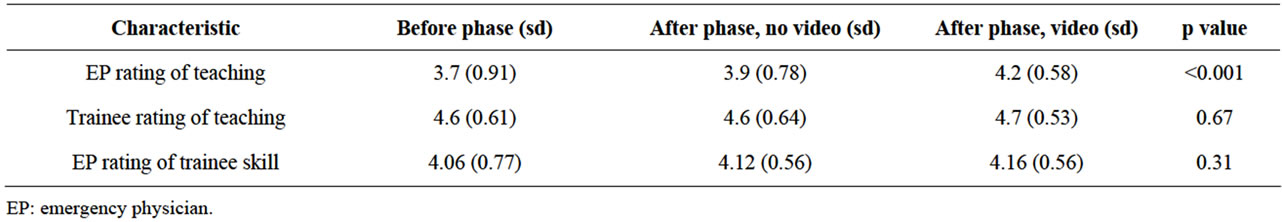

EP ratings of the quality of the teaching they provided, trainee ratings of teaching received, and EP rating of trainee procedural skill for the entire study period are shown in Figure 1: as anticipated, trainee ratings were highly left-skewed. Mean EP rating of the quality of the teaching provided was higher in the group that viewed videos, while trainee rating of teaching received, and EP rating of trainee skill, were not significantly different (Table 1).

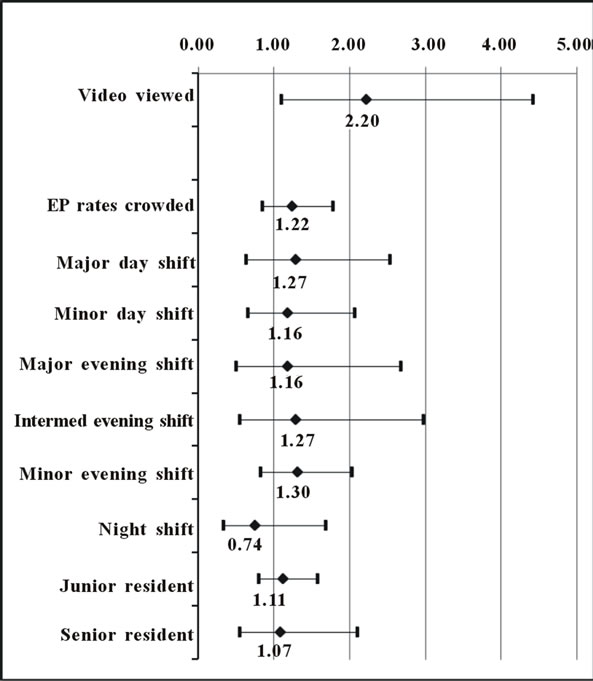

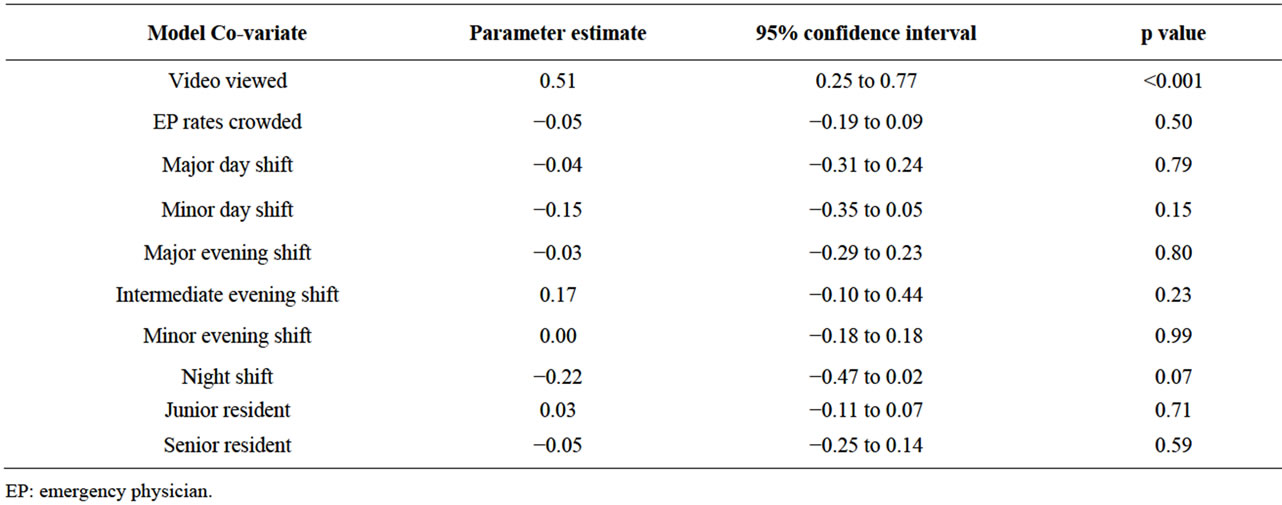

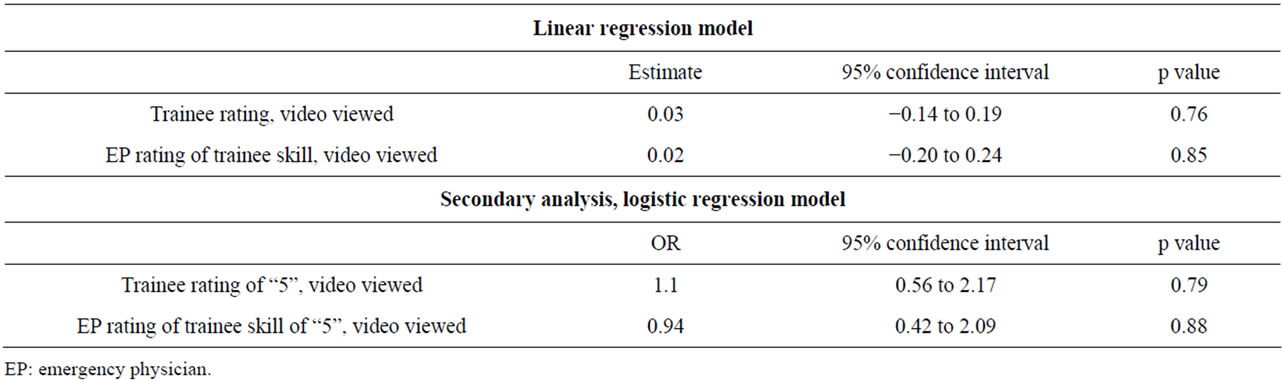

Figure 1. Overall Likert scale ratings (1 to 5) for each emergency department shift in which a procedure was performed, over the entire study period, by study outcome.

In the regression model, the EP rating of the quality of the teaching provided was 0.5 units higher (p < 0.001) when a procedure video was viewed (Table 2). In the secondary analysis using logistic regression, results were similar: the odds of receiving a score of “5” were 2.2 times higher if a video was viewed (OR 2.2; 95% confidence interval, 1.1 to 4.4; p = 0.03) (Figure 2). Neither trainee rating of teaching received nor EP rating of trainee procedural skill was significantly associated with whether a procedure video was viewed (Table 3). Results were similar in the secondary analyses of trainee rating of teaching received and EP rating of trainee skill (Table 3), and when the median emergency department LOS was used as the crowding measure in the models. Internal validation of the two crowding measures demonstrated a significant correlation between them (p < 0.001).
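The reported odds ratio, interval, and p-value can be checked for internal consistency. This sketch assumes the 95% interval is a Wald interval on the log-odds scale (the article does not say), so the standard error of log(OR) is the interval width on that scale divided by 2 × 1.96. Because the published OR and limits are rounded, the recovered p-value is approximate. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def wald_from_ci(estimate, lower, upper, level=0.95):
    """Recover SE of log(estimate), the z statistic, and a two-sided p-value
    from a ratio estimate and its confidence limits, assuming a Wald
    interval that is symmetric on the log scale."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(estimate) / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, z, p

se, z, p = wald_from_ci(2.2, 1.1, 4.4)
print(f"SE(log OR) = {se:.3f}, z = {z:.2f}, p = {p:.3f}")
```

The interval 1.1 to 4.4 is exactly symmetric around 2.2 on the log scale (a factor of 2 each way), and the recovered p of about 0.026 agrees with the reported p = 0.03.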

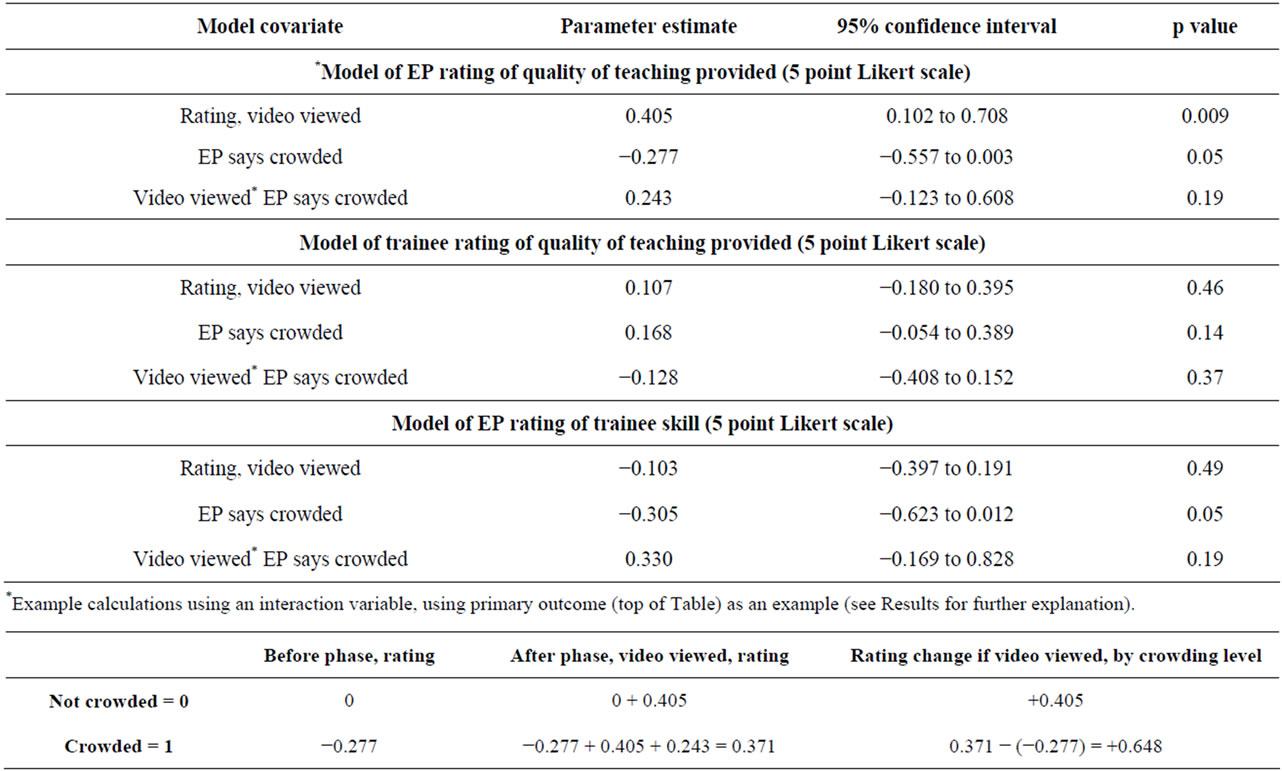

For EP rating of the quality of the teaching provided, there was no significant interaction between viewing a video and emergency department crowding (p = 0.19) (Table 4). Specifically, during periods of non-crowding, viewing a video was associated with a 0.41 increase in EP rating of quality of teaching; during periods of crowding, it was associated with a 0.65 increase in EP rating of quality of teaching.

However, the incremental benefit of the video during periods of crowding (0.24) was not significant. There was essentially no change in the rating when median emergency department LOS was used to measure emergency department crowding (–0.02 per hour increase in median emergency department LOS). Similar to the EP rating of quality of teaching outcome, the interaction of crowding and video use was associated with a small increase in EP rating of the trainee’s procedural skills (+0.33) that was also not significant (p = 0.19). There was no significant change in trainee rating of procedural teaching.

Figure 2. Adjusted odds of a high score (“5”) on a 5-point Likert scale, for quality of procedural teaching provided, as assessed by the supervising emergency physician.

Table 1. Mean ratings in the before phase, the after phase where a video was not viewed, and the after phase where a video was viewed.

Table 2. Linear regression results of emergency physician rating of quality of the teaching they provided around each procedure, with each parameter estimate representing the adjusted change in Likert scale rating.

Table 3. Regression results for effect of viewing a video on secondary outcome measures, trainee rating of teaching received and EP rating of trainee procedural skill.

Table 4. Regression results including interaction parameters, for calculated difference in adjusted effect of viewing a video on outcome measures during periods of crowding versus no crowding.

4. Discussion

All future physicians must learn how to perform procedures, and the emergency department is a key setting for acquiring these skills. In this study we found a small increase in physician rating of their teaching when their skill set was augmented with procedure videos. Previous studies of procedure videos have been restricted to the lab setting, where videos have been shown to be beneficial [2-5]; our results are consistent with these studies and extend the findings to real-time use in the emergency department. Procedure videos may be added to the teaching armamentarium of the academic emergency physician, and we surmise that they will offer their greatest benefit for procedures that the supervising physician performs infrequently. Similarly, the demonstrated benefit of procedure videos was likely small because it does not apply to all procedures, but rather to certain procedures for each EP (a video may be of limited additional benefit in teaching a common procedure, but helpful for a procedure with which the physician is less familiar or comfortable).

We note that uptake of the more than 75 available videos was low: a video was viewed for 107 (13.3%) of the 804 procedures performed in the after phase. This was despite availability on all computers, posted physical lists of available procedure videos beside each computer, direct demonstration of how to access the videos, and relatively simple access (three “clicks” in total to choose and open a video). While information technology is increasingly ubiquitous in today’s emergency departments, workplace ergonomics may need to be further optimized before EPs integrate procedure videos into routine teaching in the emergency department. Future studies could assess barriers to utilization.

Emergency department directors report that crowding is greatest at teaching hospitals [19]. Thus, any new teaching initiative must be evaluated both overall and in this setting, which constitutes the “new normal” in emergency department-based medical education. It has been proposed that in a crowded emergency department the supervising physician has little time for the extensive one-on-one teaching that is required before an inexperienced trainee performs a procedure on a patient [10]. A similar concern has been raised about crowded outpatient clinics and family doctors’ offices [20]. In this study we did not find a significant benefit of videos in the crowded emergency department setting, relative to their benefit in the non-crowded setting. While a larger study might find a statistically significant difference, most clinicians would likely agree that an incremental benefit of less than half a point on a 5-point Likert scale is not worthwhile; thus, we conclude that there does not seem to be a specific benefit to viewing a procedure video in a crowded emergency department, relative to a non-crowded one.

Unfortunately, our results on trainee rating of teaching are not informative, owing to the “leniency bias” that has been reported in the literature [12,13]. Ratings of teaching were extremely high during pilot testing, as they were in the before phase, so the resulting “ceiling effect” prevents proper evaluation of this outcome in this study. However, we felt that advising trainees to score conservatively could potentially alter the validity of the study, so we did not do this, and instead made this outcome a secondary outcome measure.

Finally, we note that EP rating of the teaching provided increased in the after phase even when a video was not used (though not as much as when a video was viewed). This may be partly because EPs may not use a video for procedures where they are highly confident of their teaching ability, so the ratings assigned to those procedures are likely higher than ratings for less familiar procedures, which may have been supplemented by a video in the after phase. This would result in higher average teaching assessments for procedures without a video in the after phase.

This study has several limitations. During the before phase there were fewer shifts with an eligible trainee, owing to a chance lower number of trainees rotating through the emergency department. Scheduling varies at random (based on yearly rotation schedules, the number of trainees going to different sites, etc.), so we do not believe this introduced significant bias to our study. This study was performed at a single academic site that is the home base for the emergency medicine residency training program, which limits generalizability to teaching sites with ample trainees. Standard t-tests and linear regression were used even though our responses were measured on a Likert scale; however, the use of these parametric methods with ordinal response data is warranted [18]. We note that our results were robust, with the same findings regardless of the regression modeling technique used, the categorization of shift assignment (7-level versus day, evening, and night), and the categorization of trainee type (3-level versus student or resident).

In this study we did not assess safety outcomes, which might improve with the standardization of teaching that occurs with procedure videos. These outcomes have been used in critical care research [21], but might be less realistic in the emergency department setting due to a much smaller number of high-acuity procedures performed (e.g. central lines). Use of an independent procedure evaluator was not feasible in this study given limited funding sources.

5. Conclusion

Procedure videos were associated with a small but significant increase in supervising physician rating of teaching provided for procedures performed in the emergency department. However, during emergency department crowding they did not offer a significantly greater improvement in EP rating of quality of teaching than in a non-crowded emergency department.

6. Acknowledgements

We wish to thank the Education Development Fund from the University of Toronto, and the Canadian Association of Emergency Physicians, for financial support of this project. Peter Austin was supported by a Career Investigator Award from the Heart and Stroke Foundation. This study was also supported by the Institute for Clinical Evaluative Sciences (ICES), which is funded by an annual grant from the Ontario Ministry of Health and Long-Term Care (MOHLTC). The opinions, results and conclusions reported in this paper are those of the authors and are independent from the funding sources. No endorsement by ICES or the Ontario MOHLTC is intended or should be inferred.

REFERENCES

[1] Accreditation Council for Graduate Medical Education (ACGME), 2011. http://www.acgme.org/acWebsite/navPages/nav_PDcoord.asp; http://www.acgme.org/acgmeweb/Portals/0/PDFs/commonguide/CompleteGuide_v2%20.pdf

[2] E. L. Einspruch, B. Lynch, T. P. Aufderheide, G. Nichol and L. Becker, “Retention of CPR Skills Learned in a Traditional AHA Heartsaver Course versus 30-Min Video Self-Training: A Controlled Randomized Study,” Resuscitation, Vol. 74, No. 3, 2007, pp. 476-486. doi:10.1016/j.resuscitation.2007.01.030

[3] J. C. Lee, R. Boyd and P. Stuart, “Randomized Controlled Trial of an Instructional DVD for Clinical Skills Teaching,” Emergency Medicine Australasia, Vol. 19, No. 3, 2007, pp. 241-245. doi:10.1111/j.1742-6723.2007.00976.x

[4] P. Sookpotarom, T. Siriarchawatana, Y. Jariya and P. Vejchapipat, “Demonstration of Nasogastric Intubation Using Video Compact Disc as an Adjunct to the Teaching Processes,” Journal of the Medical Association of Thailand, Vol. 90, No. 3, 2007, pp. 468-472.

[5] I. Treadwell, T. W. de Witt and S. Grobler, “The Impact of a New Educational Strategy on Acquiring Neonatology Skills,” Medical Education, Vol. 36, No. 5, 2002, pp. 441-448. doi:10.1046/j.1365-2923.2002.01201.x

[6] Institute of Medicine, “Hospital-Based Emergency Care: At the Breaking Point,” National Academy Press, Washington DC, 2006.

[7] N. C. Proudlove, K. Gordon and R. Boaden, “Can Good Bed Management Solve the Overcrowding in Accident and Emergency Departments?” Emergency Medicine Journal, Vol. 20, No. 2, 2003, pp. 149-155. doi:10.1136/emj.20.2.149

[8] M. J. Schull, J. P. Szalai, B. Schwartz and D. A. Redelmeier, “Emergency Department Overcrowding Following Systematic Hospital Restructuring: Trends at Twenty Hospitals over Ten Years,” Academic Emergency Medicine, Vol. 8, No. 11, 2001, pp. 1037-1043. doi:10.1111/j.1553-2712.2001.tb01112.x

[9] F. Y. Shih, M. H. Ma, S. C. Chen, H. P. Wang, C. C. Fang, R. S. Shyu, G. T. Huang and S. M. Wang, “ED Overcrowding in Taiwan: Facts and Strategies,” American Journal of Emergency Medicine, Vol. 17, No. 2, 1999, pp. 198-202. doi:10.1016/S0735-6757(99)90061-X

[10] C. Atzema, G. Bandiera and M. J. Schull, “Emergency Department Crowding: The Effect on Resident Education,” Annals of Emergency Medicine, Vol. 45, No. 3, 2005, pp. 276-281. doi:10.1016/j.annemergmed.2004.12.011

[11] G. Bandiera, S. Lee and R. Tiberius, “Creating Effective Learning in Today’s Emergency Departments: How Accomplished Teachers Get It Done,” Annals of Emergency Medicine, Vol. 45, No. 1, 2005, pp. 253-261. doi:10.1016/j.annemergmed.2004.08.007

[12] G. Bandiera and D. Lendrum, “Daily Encounter Cards Facilitate Competency-Based Feedback While Leniency Bias Persists,” Canadian Journal of Emergency Medicine, Vol. 10, No. 1, 2008, pp. 44-50.

[13] N. L. Dudek, M. B. Marks and G. Regehr, “Failure to Fail: The Perspectives of Clinical Supervisors,” Academic Medicine, Vol. 80, Suppl. 10, 2005, pp. S84-S87. doi:10.1097/00001888-200510001-00023

[14] U. Hwang, M. L. McCarthy, D. Aronsky, B. Asplin, P. W. Crane, C. K. Craven, S. K. Epstein, C. Fee, D. A. Handel, J. M. Pines, N. K. Rathlev, R. W. Schafermeyer, F. L. Zwemer Jr and S. L. Bernstein, “Measures of Crowding in the Emergency Department: A Systematic Review,” Academic Emergency Medicine, Vol. 18, No. 5, 2011, pp. 527-538. doi:10.1111/j.1553-2712.2011.01054.x

[15] J. M. Pines, A. R. Localio, J. E. Hollander, W. G. Baxt, H. Lee, C. Phillips and J. P. Metlay, “The Impact of Emergency Department Crowding Measures on Time to Antibiotics for Patients with Community-Acquired Pneumonia,” Annals of Emergency Medicine, Vol. 50, No. 5, 2007, pp. 510-516. doi:10.1016/j.annemergmed.2007.07.021

[16] M. J. Schull, A. Kiss and J. P. Szalai, “The Effect of Low-Complexity Patients on Emergency Department Waiting Times,” Annals of Emergency Medicine, Vol. 49, No. 3, 2007, pp. 257-264. doi:10.1016/j.annemergmed.2006.06.027

[17] M. L. McCarthy, S. L. Zeger, R. Ding, S. R. Levin, J. S. Desmond, J. Lee and D. Aronsky, “Crowding Delays Treatment and Lengthens Emergency Department Length of Stay, Even among High-Acuity Patients,” Annals of Emergency Medicine, Vol. 54, No. 4, 2009, pp. 492-503. doi:10.1016/j.annemergmed.2009.03.006

[18] G. Norman, “Likert Scales, Levels of Measurement and the ‘Laws’ of Statistics,” Advances in Health Sciences Education: Theory and Practice, Vol. 15, No. 5, 2010, pp. 625-632. doi:10.1007/s10459-010-9222-y

[19] B. Rowe, K. Bond, B. Ospina, S. Blitz, M. Afilalo, S. Campbell and M. Schull, “Frequency, Determinants, and Impact of Overcrowding on Emergency Departments in Canada: A National Survey of Emergency Department Directors,” Technology Report No. 67.3, Canadian Agency for Drugs and Technologies in Health, Ottawa, 2006.

[20] K. M. Skeff, J. L. Bowen and D. M. Irby, “Protecting Time for Teaching in the Ambulatory Care Setting,” Academic Medicine, Vol. 72, No. 8, 1997, pp. 694-697. doi:10.1097/00001888-199708000-00014

[21] S. M. Berenholtz, P. J. Pronovost, P. A. Lipsett, D. Hobson, K. Earsing, J. E. Farley, S. Milanovich, E. Garrett-Mayer, B. D. Winters, H. R. Rubin, T. Dorman and T. M. Perl, “Eliminating Catheter-Related Bloodstream Infections in the Intensive Care Unit,” Critical Care Medicine, Vol. 32, No. 10, 2004, pp. 2014-2020.

NOTES

*Corresponding author.