Open Journal of Statistics

Vol.05 No.06(2015), Article ID:60515,8 pages

10.4236/ojs.2015.56057

Spectral Gradient Algorithm Based on the Generalized Fiser-Burmeister Function for Sparse Solutions of LCPS

Chang Gao, Zhensheng Yu, Feiran Wang

College of Science, University of Shanghai for Science and Technology, Shanghai, China

Email: gaochang45622@163.com

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 27 August 2015; accepted 20 October 2015; published 23 October 2015

ABSTRACT

This paper considers the computation of sparse solutions of the linear complementarity problem LCP(q, M). Mathematically, the underlying model is NP-hard in general. Thus an lp (0 < p < 1) regularized minimization model is proposed for relaxation. We establish an equivalent unconstrained minimization reformulation based on an NCP-function. Based on the generalized Fischer-Burmeister function, a sequential smoothing spectral gradient method is proposed to solve the equivalent problem. Numerical results are given to show the efficiency of the proposed method.

Keywords:

Linear Complementarity Problem, Sparse Solution, Spectral Gradient, Generalized Fischer-Burmeister

1. Introduction

Given a matrix

and an n-dimensional vector q, the linear complementarity problem, denoted by LCP(q, M), is to find a vector

and an n-dimensional vector q, the linear complementarity problem, denoted by LCP(q, M), is to find a vector

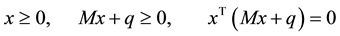

such that

such that

.

.

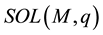

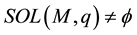

The set of solutions to this problem is denoted by . Throughout the paper, we always suppose

. Throughout the paper, we always suppose . The LCP has many wide applications in physics, engineering, mechanics, and economics design [1] [2] . Numerical methods for solving LCPs, such as the Newton method, the interior point method and the non-smooth equation methods, have been extensively investigated in the literature. However, it seems that there are few methods to solve the sparse solutions for LCPs. In fact, it is very necessary to research the sparse solution of the LCPs such as portfolio selection [3] [4] and bimatrix games [5] in real applications.

. The LCP has many wide applications in physics, engineering, mechanics, and economics design [1] [2] . Numerical methods for solving LCPs, such as the Newton method, the interior point method and the non-smooth equation methods, have been extensively investigated in the literature. However, it seems that there are few methods to solve the sparse solutions for LCPs. In fact, it is very necessary to research the sparse solution of the LCPs such as portfolio selection [3] [4] and bimatrix games [5] in real applications.
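The three conditions defining LCP(q, M) can be checked numerically. The following Python sketch is only an illustration: the function names, the tolerance, and the 2x2 instance are assumptions made here and do not come from the paper.

```python
def lcp_residuals(M, q, x):
    """Return (min of x, min of w, x^T w) with w = M x + q."""
    n = len(q)
    w = [sum(M[i][j] * x[j] for j in range(n)) + q[i] for i in range(n)]
    complementarity = sum(xi * wi for xi, wi in zip(x, w))
    return min(x), min(w), complementarity


def is_lcp_solution(M, q, x, tol=1e-8):
    """Check x >= 0, Mx + q >= 0 and x^T (Mx + q) = 0 up to a tolerance."""
    min_x, min_w, comp = lcp_residuals(M, q, x)
    return min_x >= -tol and min_w >= -tol and abs(comp) <= tol


# Hypothetical 2x2 instance, chosen only for illustration.
M = [[1.0, 1.0], [1.0, 1.0]]
q = [-1.0, -1.0]
print(is_lcp_solution(M, q, [1.0, 0.0]))  # x = (1, 0) gives w = (0, 0)
print(is_lcp_solution(M, q, [0.0, 0.0]))  # x = 0 gives w = (-1, -1), infeasible
```

For this instance every nonnegative x with x1 + x2 = 1 passes the check, so the solution set contains both dense and sparse vectors.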

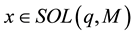

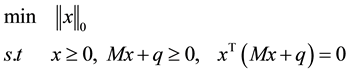

In this paper, we consider the sparse solutions of the LCP. We call $\bar{x}$ a sparse solution of LCP(q, M) if $\bar{x}$ is a solution of the following optimization problem

$$\min \|x\|_0 \quad \text{s.t.} \quad x \ge 0, \quad Mx + q \ge 0, \quad x^T (Mx + q) = 0. \qquad (1)$$

To be more precise, we seek a vector $x \in R^n$ by solving the l0 norm minimization problem, where $\|x\|_0$ stands for the number of nonzero components of x. A solution of (1) is called the sparsest solution of the LCP.
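As a small illustration of the l0 measure in (1), the following Python sketch counts nonzero components and picks the sparsest vector from a list of known solutions. The instance is hypothetical: for M = [[1, 1], [1, 1]] and q = (-1, -1) the solution set is {x ≥ 0 : x1 + x2 = 1}, so every candidate listed is a solution. The name `l0_norm` and the tolerance are illustrative choices, not part of the paper.

```python
def l0_norm(x, tol=1e-10):
    """Number of components of x whose magnitude exceeds tol."""
    return sum(1 for xi in x if abs(xi) > tol)


# Solutions of the hypothetical LCP with M = [[1, 1], [1, 1]], q = (-1, -1).
candidates = [[0.5, 0.5], [1.0, 0.0], [0.25, 0.75], [0.0, 1.0]]

# min() returns the first candidate with the smallest l0 norm.
sparsest = min(candidates, key=l0_norm)
print(sparsest, l0_norm(sparsest))
```

Enumerating candidates is only feasible for toy instances; the combinatorial nature of the l0 norm is what motivates the relaxation studied in the paper.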

Recently, Meijuan Shang, Chao Zhang and Naihua Xiu designed a sequential smoothing gradient method to find sparse solutions of the LCP [6]. Inspired by their model, we use the spectral gradient method based on the generalized Fischer-Burmeister function to solve our new model (3). The spectral method was proposed by Barzilai and Borwein [7] and further analyzed by Raydan [8] [9]. The advantage of this method is that it requires little computational work and greatly speeds up the convergence of gradient methods. Therefore, this technique has been applied successfully in unconstrained and constrained optimization [10]-[13].
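To make the spectral (Barzilai-Borwein) step concrete, the sketch below applies it to a strictly convex quadratic, the setting in which the step size is usually introduced. It uses the step α = sᵀs / sᵀy with s the difference of iterates and y the difference of gradients. The test problem, the initial step `alpha0` and the function name are assumptions made for illustration only.

```python
def bb_gradient_quadratic(A, b, x0, alpha0=0.1, tol=1e-10, max_iter=500):
    """Minimise 0.5 x^T A x - b^T x using Barzilai-Borwein step sizes."""
    n = len(b)

    def grad(x):
        return [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(n)]

    x, g, alpha = list(x0), grad(x0), alpha0
    for _ in range(max_iter):
        if sum(gi * gi for gi in g) ** 0.5 <= tol:
            break
        x_new = [xi - alpha * gi for xi, gi in zip(x, g)]
        g_new = grad(x_new)
        s = [a - c for a, c in zip(x_new, x)]  # change in iterate
        y = [a - c for a, c in zip(g_new, g)]  # change in gradient
        sty = sum(si * yi for si, yi in zip(s, y))
        if sty > 0.0:  # keep the previous step if curvature is not positive
            alpha = sum(si * si for si in s) / sty
        x, g = x_new, g_new
    return x


# Illustrative problem: A = diag(1, 10), b = (1, 1), minimiser (1, 0.1).
x_star = bb_gradient_quadratic([[1.0, 0.0], [0.0, 10.0]], [1.0, 1.0], [0.0, 0.0])
print(x_star)
```

No line search is used here, which is why each iteration costs only one gradient evaluation; for general non-quadratic objectives a safeguard such as a line search is normally added.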

In fact, the above minimization problem (1) is a sparse optimization problem with equilibrium constraints. Because of the equilibrium constraints and the non-smooth objective function, it is difficult to obtain solutions. To overcome this difficulty, we use NCP-functions to construct a penalty for violating the equilibrium constraints.

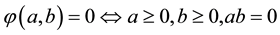

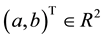

A function φ: R2 → R1 is called a NCP-function, if for any pair

.

.

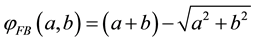

A popular NCP-functions is the Fischer-Burmeister (FB), which is defined as

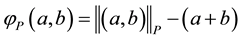
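The defining property of an NCP-function can be checked on a few sample pairs. The sketch below uses the sign convention √(a² + b²) − (a + b) for the FB function, which is an assumption here (some authors use the negative); the function name is illustrative.

```python
import math


def phi_fb(a, b):
    """Fischer-Burmeister function with the convention sqrt(a^2 + b^2) - (a + b)."""
    return math.hypot(a, b) - (a + b)


# Complementary pairs (a >= 0, b >= 0, a * b = 0) give zero.
print(phi_fb(0.0, 3.0), phi_fb(2.0, 0.0), phi_fb(0.0, 0.0))

# Pairs violating complementarity or nonnegativity give a nonzero value.
print(phi_fb(1.0, 1.0), phi_fb(-1.0, 0.0))
```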

The Fischer-Burmeister function has many interesting properties. However, it has limitations in dealing with monotone complementarity problems since it is too flat in the positive orthant, the region of main interest for a complementarity problem. To address this disadvantage, we consider the following generalized Fischer-Burmeister function [10].

(2)

(2)

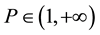

where p is any fixed real number from

and

and

denotes the p-norm, i.e.

denotes the p-norm, i.e.

In other words, in the function
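A minimal sketch of the generalized FB function follows, assuming the form ‖(a, b)‖_p − (a + b) with p > 1 as in [10]. The function name and the sample values of p are illustrative. The example also shows how the value at a point in the positive orthant changes with p, which is the flatness issue mentioned above.

```python
def phi_p(a, b, p):
    """Generalized Fischer-Burmeister function: p-norm of (a, b) minus (a + b)."""
    if p <= 1.0:
        raise ValueError("p must be greater than 1")
    return (abs(a) ** p + abs(b) ** p) ** (1.0 / p) - (a + b)


# p = 2 recovers the classical FB function.
print(phi_p(1.0, 1.0, 2.0))

# Complementary pairs still give (numerically) zero for other values of p.
print(phi_p(0.0, 3.0, 3.0), phi_p(2.0, 0.0, 1.5))

# Value at (1, 1) for several p: 2**(1/p) - 2.
for p in (1.1, 2.0, 10.0):
    print(p, phi_p(1.0, 1.0, p))
```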

Define

where

where

regularized minimization problem.

Let us denote the first term of (3) by the function

For any given

The paper is organized as follows. In Section 2, we present absolute lower bounds for nonzero entries in local solutions of (3). In Section 3, we approximate the minimal zero norm solutions of the LCP. In Section 4, we give a sequential smoothing spectral gradient method to solve the model. In Section 5, numerical results are given to demonstrate the effectiveness of the sequential smoothing spectral gradient method.

2. The lp Regularized Approximation

In this section, we consider the minimizers of (3). We study the relation between the original model (1) and the lp regularized model (3), which indicates that the regularized model is a good approximation. We also use a threshold lower bound L [6] for the nonzero entries of local minimizers of the lp minimization problem (3).

2.1. Relation between (3) and (1)

The following result is given in [6] and is essentially based on results of Chen et al. [14].

Lemma 2.1. [6] for any fixed

of (3), and

2.2. Lower Bounds for Nonzero Entries in Solutions

In this section, we extend the above result to the lp norm regularization model (3) for approximating minimal l0 norm solutions of the LCP. We provide a threshold lower bound L > 0 for any local minimizer, and show that any nonzero entries of local minimizers must exceed L. Since

is bounded below and

Lemma 2.2. [6] let

Then we have: for any

Moreover, the number of nonzero entries in

Let us denote the first term of (3) by the function

First we present some properties of

Lemma 2.3. [12] let

1)

2)

3) If

when

4) Given a point

sgn(.) represents the sign function;

Theorem 2.1. The function

where

given by

where

3. Smoothing Method for lp Regularization

Most optimization algorithms are efficient only for convex and smooth problems. However, some algorithms for non-smooth and non-convex optimization problems have been developed recently. Note that the term $\|x\|_p^p$ (0 < p < 1) in (3) is neither convex nor Lipschitz continuous in

Smoothing Counterpart for (3)

For

It is clear to see that, for any

and

We can construct a smoothing approximation of (3) as

by noting that

for any

Let

Theorem 3.1. Let

Then, any accumulation point of

Proof. Let

We can deduce from (11) that

On the other hand, we have

which indicates

Lemma 3.2. [6] for any

4. SS-SG Algorithm

We propose a sequential smoothing spectral gradient (SS-SG) method to solve (3). The SS-SG method uses the spectral gradient method as the main step for decreasing the objective value. The smoothing method is easy to implement and efficient; see [15].

We first introduce the spectral projected gradient method in [8] as follows.

Algorithm 1. Smoothing Spectral Gradient Method

Step 0: Choose an initial point

Step 1: Let

Step 2: Compute the step size

Set

Step 3: If

Algorithm 2. Sequential Smoothing Spectral Gradient Method

Step 1: Find

Step 2: Compute

Use the lower bound

Step 3: Decrease the parameter
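The overall scheme (an inner spectral gradient loop on a smoothed objective, an outer loop that decreases the smoothing parameter, and a final thresholding of small entries) might be sketched as follows. This is not the authors' implementation, and several ingredients are assumptions made here because the corresponding formulas are not available in the text: the objective is taken as the sum of squared generalized FB residuals plus λ Σ s_μ(x_i)^p, the smoothing function s_μ is a common quadratic smoothing of |t|, a monotone Armijo backtracking replaces whatever line search the paper uses, and all names and parameter values (`lam`, `mu0`, `sigma`, `threshold`, `p_fb`, `p_reg`) are hypothetical.

```python
import math


def smooth_abs(t, mu):
    """Assumed smoothing of |t|: |t| if |t| > mu, else t^2/(2 mu) + mu/2."""
    return abs(t) if abs(t) > mu else t * t / (2.0 * mu) + mu / 2.0


def objective_and_grad(x, M, q, lam, mu, p_fb=2.0, p_reg=0.5):
    """Smoothed objective (assumed form) and its gradient."""
    n = len(x)
    w = [sum(M[i][j] * x[j] for j in range(n)) + q[i] for i in range(n)]
    f, da, db = 0.0, [0.0] * n, [0.0] * n
    for i in range(n):
        a, b = x[i], w[i]
        r = (abs(a) ** p_fb + abs(b) ** p_fb) ** (1.0 / p_fb)
        phi = r - (a + b)
        f += phi * phi
        if r > 0.0:  # phi^2 has zero gradient at (a, b) = (0, 0)
            scale = r ** (p_fb - 1.0)
            da[i] = 2.0 * phi * (math.copysign(abs(a) ** (p_fb - 1.0), a) / scale - 1.0)
            db[i] = 2.0 * phi * (math.copysign(abs(b) ** (p_fb - 1.0), b) / scale - 1.0)
    # Chain rule through w = M x + q.
    g = [da[j] + sum(M[i][j] * db[i] for i in range(n)) for j in range(n)]
    for j in range(n):
        s = smooth_abs(x[j], mu)
        ds = math.copysign(1.0, x[j]) if abs(x[j]) > mu else x[j] / mu
        f += lam * s ** p_reg
        g[j] += lam * p_reg * s ** (p_reg - 1.0) * ds
    return f, g


def spectral_gradient(x, M, q, lam, mu, tol=1e-6, max_iter=500):
    """Spectral (Barzilai-Borwein) trial steps with Armijo backtracking."""
    f, g = objective_and_grad(x, M, q, lam, mu)
    alpha = 1.0
    for _ in range(max_iter):
        gnorm2 = sum(gi * gi for gi in g)
        if math.sqrt(gnorm2) <= tol:
            break
        step = alpha
        while True:
            x_new = [xi - step * gi for xi, gi in zip(x, g)]
            f_new, g_new = objective_and_grad(x_new, M, q, lam, mu)
            if f_new <= f - 1e-4 * step * gnorm2 or step <= 1e-12:
                break
            step *= 0.5
        if f_new > f:  # no decrease found: stop at the current point
            break
        s = [a - c for a, c in zip(x_new, x)]
        y = [a - c for a, c in zip(g_new, g)]
        sty = sum(si * yi for si, yi in zip(s, y))
        sts = sum(si * si for si in s)
        # Safeguarded spectral step for the next iteration.
        alpha = min(max(sts / sty, 1e-8), 1e8) if sty > 0.0 else 1.0
        x, f, g = x_new, f_new, g_new
    return x, f


def ss_sg(M, q, x0, lam=0.1, mu0=1.0, sigma=0.1, mu_min=1e-4, threshold=1e-3):
    """Outer loop: solve the smoothed problem, shrink mu, then threshold."""
    x, mu = list(x0), mu0
    while mu >= mu_min:
        x, _ = spectral_gradient(x, M, q, lam, mu)
        mu *= sigma
    return [xi if abs(xi) > threshold else 0.0 for xi in x]


# Hypothetical instance, for illustration only.
print(ss_sg([[1.0, 1.0], [1.0, 1.0]], [-1.0, -1.0], [0.5, 0.5]))
```

Because the regularized problem is non-convex, the point returned depends on the starting point and on the parameter choices; the sketch only guarantees that each inner loop does not increase the smoothed objective.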

5. Numerical Experiments

In this section, we report numerical experiments to demonstrate the effectiveness of our SS-SG algorithm. To illustrate its effectiveness, we compare it with another algorithm for solving LCPs. In [6], the authors designed a sequential smoothing gradient (SSG) method to solve the lp regularized model and obtain a sparse solution of LCP(q, M). The numerical experiments show that our algorithm is more effective than the SSG algorithm.

The program code was written and run in the MATLAB R2013a environment. The parameters are chosen as

5.1. Test for LCPs with Positive Semidefinite Matrices

Example 1. We consider the LCP(q, M) with

The solution set is

When

Example 2. We consider the LCP(q, M) with

The solution set is

When

These examples show that, given the proper initial point, our algorithm can effectively find an approximate sparse solution.

5.2. Test for LCPs with Z-Matrix [6]

Let us consider LCP(q, M) where

Here

Among all the solutions, the vector

In Table 1, “

To test the effectiveness of the SS-SG algorithm, we compare it with the SSG algorithm for solving LCPs. In [6], the authors used the Fischer-Burmeister function to establish an lp (0 < p < 1) regularized minimization model and designed an SSG method to solve LCPs. The results are displayed in Table 2, where “_” denotes that the method is invalid. Although the sparsity

Table 1. SS-SG’s computation results on LCPs with Z-matrices.

Table 2. SSG’s computation results on LCPs with Z-matrices.

6. Conclusion

In this paper, we have studied an lp (0 < p < 1) model based on the generalized FB function defined in (2) to find the sparsest solution of LCPs. An lp norm regularized unconstrained minimization model is proposed for relaxation, and a sequential smoothing spectral gradient method is used to solve the model. Numerical results demonstrate that the method can efficiently solve this regularized model and obtain a high-quality sparse solution of the LCP.

Acknowledgements

This work is supported by the Innovation Program of Shanghai Municipal Education Commission (No. 14YZ094).

Cite this paper

Chang Gao, Zhensheng Yu, Feiran Wang (2015) Spectral Gradient Algorithm Based on the Generalized Fiser-Burmeister Function for Sparse Solutions of LCPS. Open Journal of Statistics, 05, 543-551. doi: 10.4236/ojs.2015.56057

References

- 1. Facchinei, F. and Pang, J.S. (2003) Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer Series in Operations Research, Vol. I & II, Springer, New York.

- 2. Ferris, M.C., Mangasarian, O.L. and Pang, J.S. (2001) Complementarity: Applications, Algorithms and Extensions. Kluwer Academic Publishers, Dordrecht.

- 3. Gao, J. and Li, D. (2013) Optimal Cardinality Constrained Portfolio Selection. Operations Research, 61, 745-761.
http://dx.doi.org/10.1287/opre.2013.1170

- 4. Xie, J., He, S. and Zhang, S. (2008) Randomized Portfolio Selection with Constraints. Pacific Journal of Optimization, 4, 87-112.

- 5. Cottle, R.W., Pang, J.S. and Stone, R.E. (1992) The Linear Complementarity Problem. Academic Press, Boston.

- 6. Shang, M.J., Zhang, C. and Xiu, N.H. (2014) Minimal Zero Norm Solutions of Linear Complementarity Problems. Journal of Optimization Theory and Applications, 163, 795-814.
http://dx.doi.org/10.1007/s10957-014-0549-z

- 7. Barzilai, J. and Borwein, J.M. (1988) Two-Point Step Size Gradient Methods. IMA Journal of Numerical Analysis, 8, 141-148.
http://dx.doi.org/10.1093/imanum/8.1.141

- 8. Raydan, M. (1997) The Barzilai and Borwein Gradient Method for the Large Scale Unconstrained Minimization Problem. SIAM Journal on Optimization, 7, 26-33.
http://dx.doi.org/10.1137/S1052623494266365

- 9. Raydan, M. (1993) On the Barzilai and Borwein Choice of Steplength for the Gradient Method. IMA Journal of Numerical Analysis, 13, 321-326.
http://dx.doi.org/10.1093/imanum/13.3.321

- 10. Chen, J.-S. and Pan, S.-H. (2010) A Neural Network Based on the Generalized Fischer-Burmeister Function for Nonlinear Complementarity Problems. Information Sciences, 180, 697-711.
http://dx.doi.org/10.1016/j.ins.2009.11.014

- 11. Chen, J.-S. and Pan, S.-H. (2008) A Family of NCP Functions and a Descent Method for the Nonlinear Complementarity Problem. Computational Optimization and Applications, 40, 389-404.
http://dx.doi.org/10.1007/s10589-007-9086-0

- 12. Chen, J.-S. and Pan, S.-H. (2008) A Regularization Semismooth Newton Method Based on the Generalized Fischer-Burmeister Function for P0-NCPs. Journal of Computational and Applied Mathematics, 220, 464-479.
http://dx.doi.org/10.1016/j.cam.2007.08.020

- 13. Chen, J.-S., Gao, H.-T. and Pan, S.-H. (2009) An R-Linearly Convergent Derivative-Free Algorithm for the NCPs Based on the Generalized Fischer-Burmeister Merit Function. Journal of Computational and Applied Mathematics, 232, 455-471.

- 14. Chen, X.J., Xu, F. and Ye, Y. (2010) Lower Bound Theory of Nonzero Entries in Solutions of l2-lp Minimization. SIAM Journal on Scientific Computing, 32, 2832-2852.
http://dx.doi.org/10.1137/090761471

- 15. Chen, X. and Xiang, S. (2011) Implicit Solution Function of P0 and Z Matrix Linear Complementarity Constraints. Mathematical Programming, 128, 1-18.
http://dx.doi.org/10.1007/s10107-009-0291-8