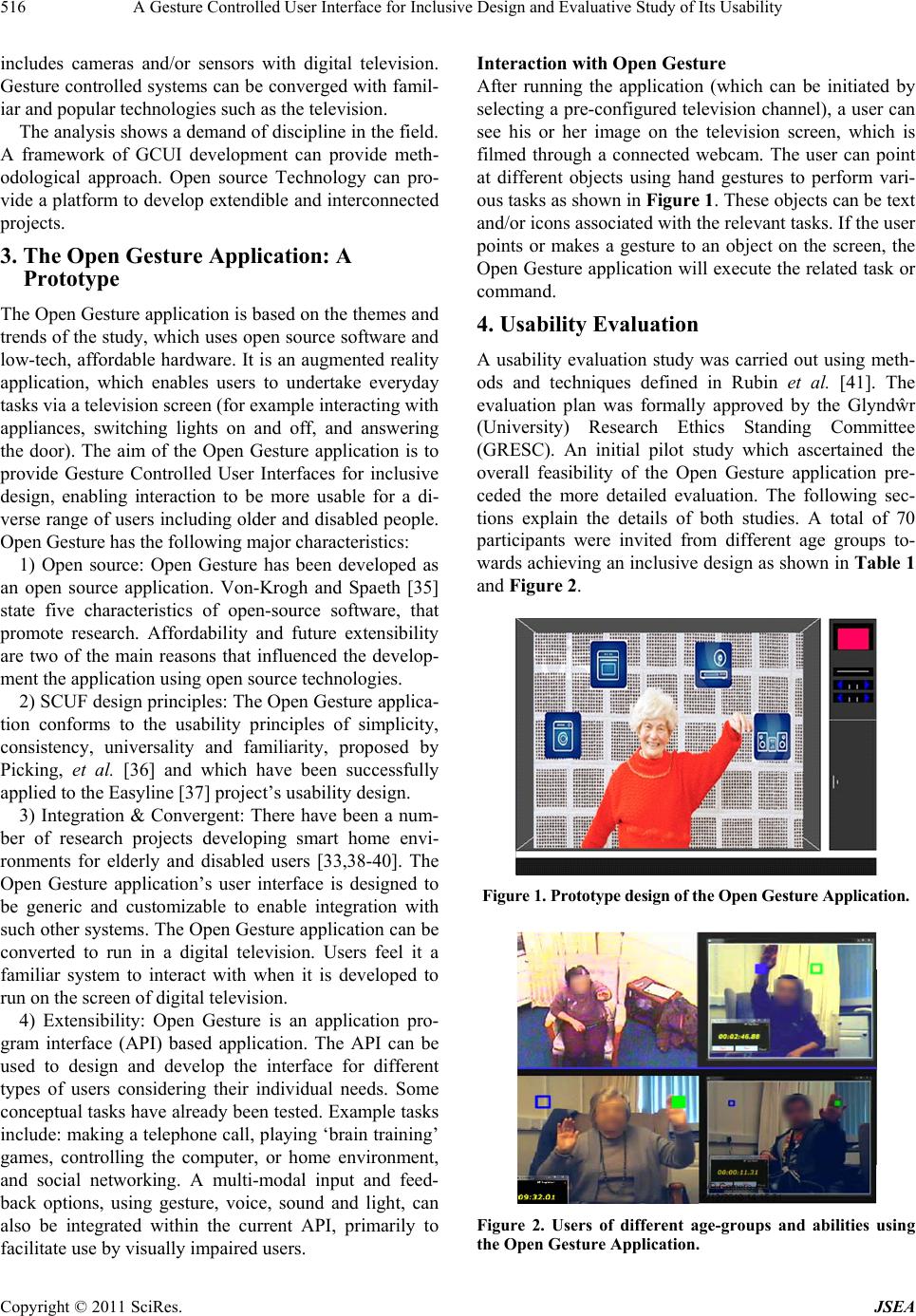

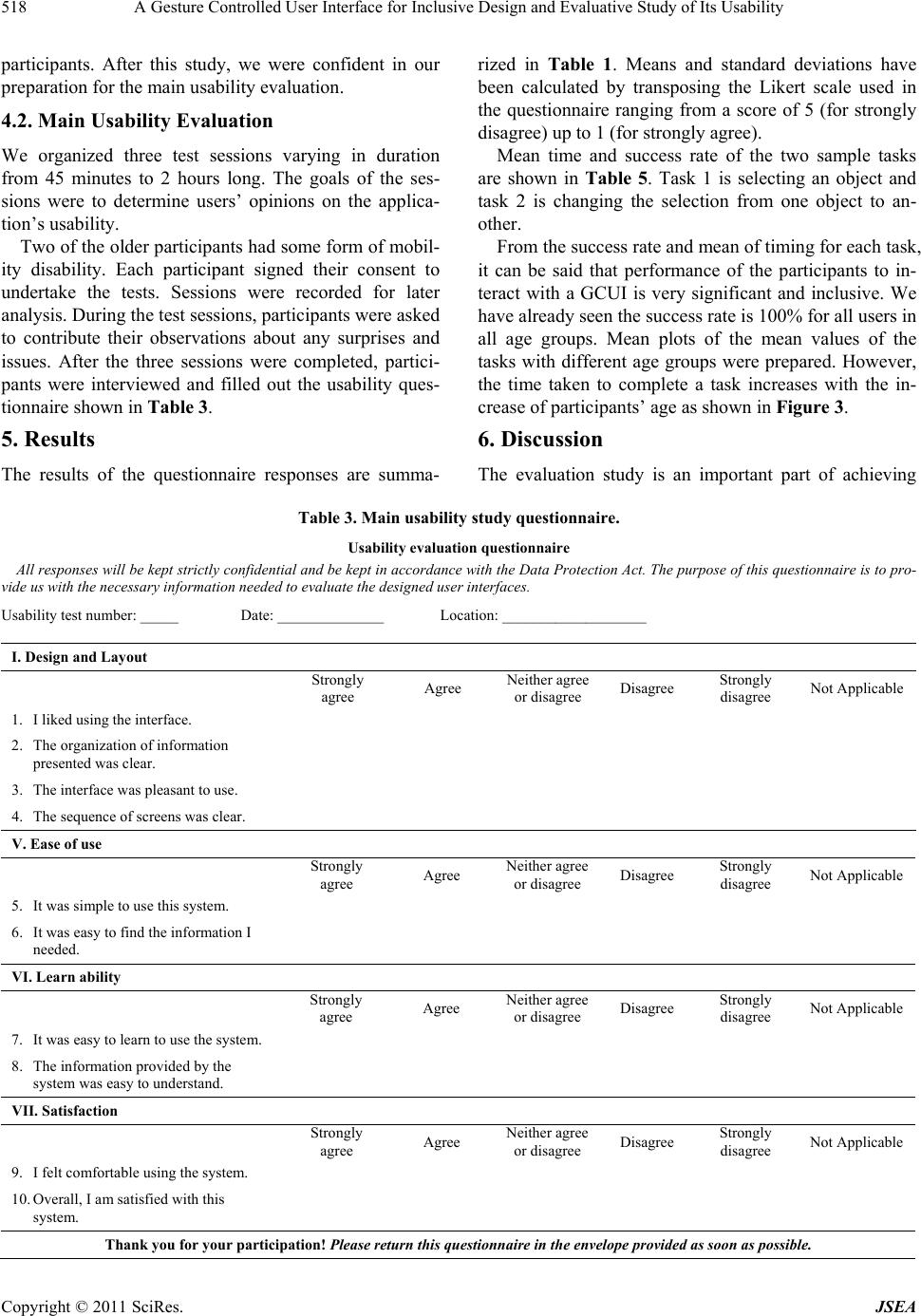

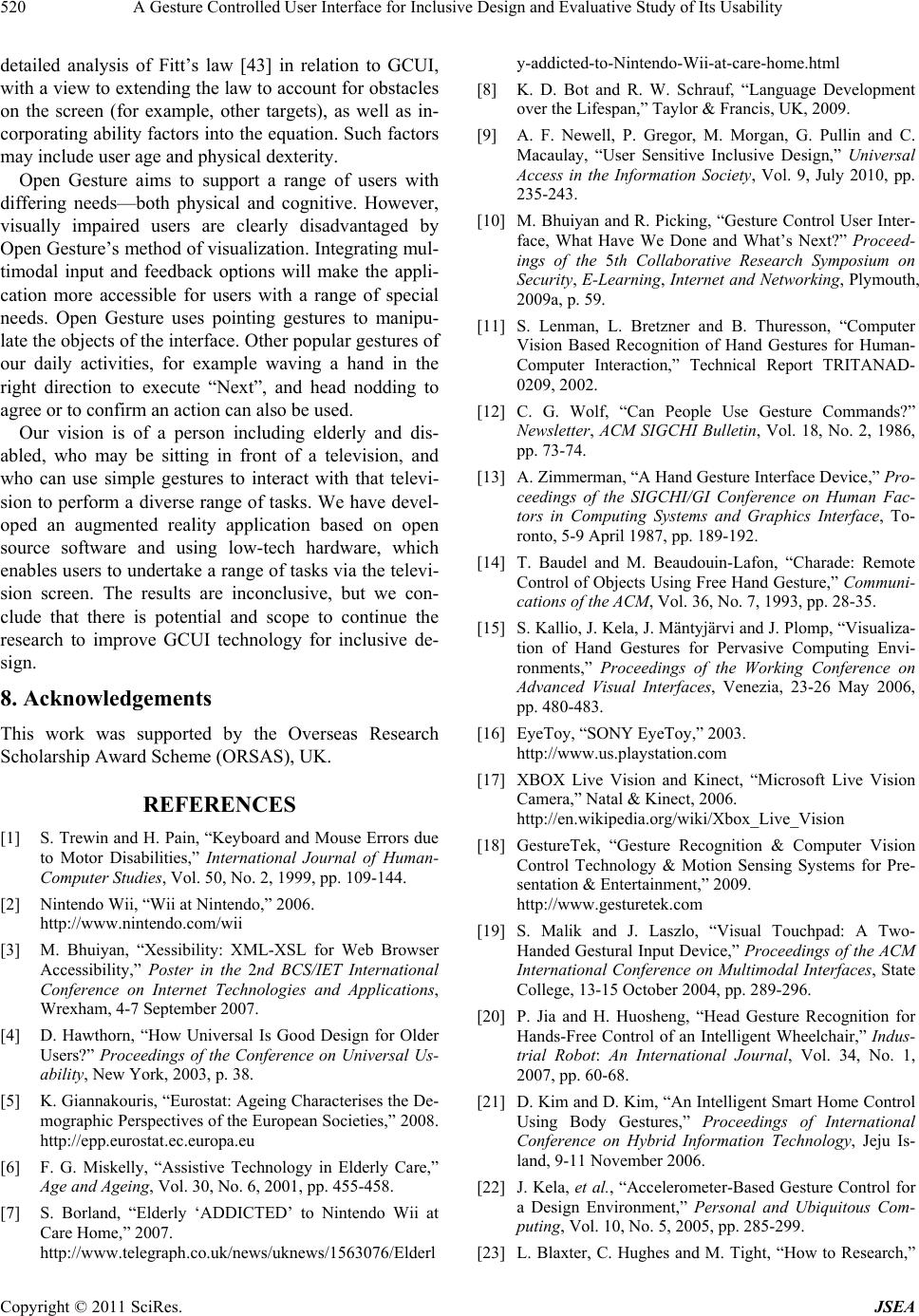

Journal of Software Engineering and Applications, 2011, 4, 513-521
doi:10.4236/jsea.2011.49059
Published Online September 2011 (http://www.SciRP.org/journal/jsea)
Copyright © 2011 SciRes. JSEA

A Gesture Controlled User Interface for Inclusive Design and Evaluative Study of Its Usability

Moniruzzaman Bhuiyan1, Rich Picking2

1Institute of Information Technology, University of Dhaka, Dhaka, Bangladesh; 2Centre for Applied Internet Research, Glyndwr University, Wrexham, United Kingdom.
Email: mb@du.ac.bd; r.picking@glyndwr.ac.uk

Received August 15th, 2011; revised September 6th, 2011; accepted September 13th, 2011.

ABSTRACT

To meet the challenges of ubiquitous computing, ambient technologies and an increasingly older population, researchers have been trying to break away from traditional modes of interaction. A history of studies over the past 30 years reported in this paper suggests that Gesture Controlled User Interfaces (GCUI) now provide realistic and affordable opportunities, which may be appropriate for older and disabled people. We have developed a GCUI prototype application, called Open Gesture, to help users carry out everyday activities such as making phone calls, controlling their television and performing mathematical calculations. Open Gesture uses simple hand gestures to perform a diverse range of tasks via a television interface. This paper describes Open Gesture and reports its usability evaluation. We conclude that this inclusive technology offers some potential to improve the independence and quality of life of older and disabled users along with general users, although there remain significant challenges to be overcome.

Keywords: Gesture Control, User Interface, Inclusive Design, Augmented Reality, Television

1. Introduction

The evolution of diverse technologies has in turn led to diverse styles of interaction. For example, most people use a keyboard and pointing device (e.g.
a mouse) when they interact with a computer, even though this is not the optimal solution for all types of users [1]. Most people also use a remote keypad to control their televisions; they use a push-button interface evolved from an ‘old-fashioned’ telephone to interact with their mobile phones, and a proprietary handset evolved from a joystick to play computer games. More novel interaction is increasing in popularity, especially touch-screen technology (e.g. Apple I-touch/phone/pad) and motion-sensing technology, as in the case of the Nintendo Wii [2]. Both these styles of interaction have proved popular with users who are uncomfortable with more common devices, and this includes older users with certain disabilities. People facing a language barrier (e.g. non-English speaking users) also struggle when many interfaces are in English [3].

It is well documented that the growing older population is becoming one of the major challenges for developed countries [4]. The population will continue to grow older in the future, whilst lower birth rates will result in fewer younger people to care for the elderly [5]. It is widely accepted that we need to address this issue through a range of initiatives, including researching the potential for technology to extend independent living. Such initiatives may also support younger people, including those with disabilities.

Most computer-based assistive technology products have been developed for relatively small groups of users, and have consequently been rather expensive and specialized for specific user groups. In the future, the number of people who may benefit from assistive technologies will inevitably increase. Assistive and ambient technologies are already supporting independent living [6]. Higher demand will reduce prices as those of us who become elderly feel more comfortable with computer technologies.
These phenomena are already evident; for example, it has been reported that users aged 80 and above in residential care homes have reacted very positively to the Nintendo Wii [7], a gesture controlled gaming console. A gesture is a non-verbal, natural communication made with a part of the body. It has been demonstrated that young children can readily learn to communicate with gesture before they learn to talk [8]. However, as our faculties fail, we need to design and develop consistent and familiar interfaces and applications, to reduce confusion and exclusion as much as possible [9].

In the early stages of the present work, we conducted a survey [10] of research and development of exemplar GCUI technology which covers the past thirty years or so. The trends suggest that we are now in a position to support gesture technology using affordable technologies which are familiar and intuitive for many users, and which can be converged with everyday household objects, such as the television. The findings of the study motivated the development of Open Gesture, an augmented reality application, which superimposes a mirror image of the user onto the television screen. Open Gesture uses low cost hardware (typically a web cam) and open source software technology, and can be incorporated into programmable digital television equipment. The aim of this application is to provide Gesture Controlled User Interfaces for inclusive design, enabling interaction to be more usable for older and disabled people.

2. GCUI Research Survey and Analysis

The aforementioned historical survey of GCUI technologies undertaken by the authors [10] is by no means an exhaustive review; rather, it maps out a chronology of exemplar research projects and commercial products in the domain.
It summarizes the projects and classifies them according to the user populations, gesture types, technologies, interfaces, research/evaluation methods, and issues addressed. The user populations are categorized as any (when disabled and elderly are mentioned), disabled, elderly, and general (when disabled or elderly are not mentioned). Gesture types are hand, head, finger, body or any. The technologies field briefly summarizes the tools or technology used in the system. The interfaces field describes where gesture commands take place, and may also describe the feedback given to the user following a gesture input. The task(s) and methodologies required to find the result have also been included. The research issues addressed and study outcomes are also listed in the case of research projects.

Twenty-six exemplar research projects and eleven commercial projects have been selected after studying over 180 published research papers and projects. The research shows clear signs that gesture controlled technologies are now becoming feasible in terms of their affordability, usability, and convergence with established and familiar technologies. Though there are different aspects and many points to mention from the research studied, the following themes were extrapolated to summarize the evolution of GCUI development: 1) gesture type; 2) users; 3) application area; 4) technology; and 5) research methodology. These themes will now be briefly discussed, with a view to justifying the design choices made for the Open Gesture application.

2.1. Gesture Type

Most research projects have focused on hand gestures. There are also examples of body gesture, head gesture and finger pointing gesture. In earlier research, technology was expensive and obstructive; for example, gloves with microcontrollers connected to the device through a wire were used.
Overwhelmingly, hand gestures have been the most dominant gesture type, and given that such interaction is now readily available, affordable and wireless (e.g. using Bluetooth technologies), we chose this gesture type for the Open Gesture project. There are disadvantages of hand gestures; for example, direct control via hand posture is immediate, but limited in the number of choices [11]. However, this limitation was not a problem for us, as we had already chosen to limit the choices for users at any given time to simplify the interaction as much as possible.

2.2. Users

Most of the research reported in our survey considered users of any age. Early projects tended to consider computer users interacting with typical applications (e.g. file handling, image interaction or computer-based presentations). Disabled (e.g. wheelchair) users have also been widely considered for accelerometer-based gesture controlled systems. Most current investigations are focused on older and disabled people, as researchers begin to see the value that GCUIs may provide. Consequently, it appears very timely to focus on such user groups now.

2.3. Application Area

The research shows that gesture-based applications can be used for many different things: entertainment; controlling home appliances; tele-care; tele-health; elderly or disabled care, to name a few. The increasing scope of the application areas suggests the importance of undertaking more work in GCUI research. Earlier applications were developed to replace traditional input devices (e.g. the keyboard and mouse) in order to improve accessibility. For example, some used gesture for text editing [12,13] and for presentations [14]. Gesture visualization has also been developed for training applications [15]. Recently, digital cameras have provided new dimensions for gesture-based user interface development. Now people can interact with a range of media using gesture to control a wide range of applications.
The prevalence of gesture-based commercial products has increased since 2003, as the technology has improved and become commercially viable.

2.4. Technology

The ways of recognizing gestures have progressed significantly over time. Image processing technology has played an important role here. In the past, gestures have been captured by using infrared beams, data gloves, still cameras, and many wired, inter-connected technologies (for example gloves, pendants, infrared signals and so forth). Recent computer vision techniques have made it possible to capture intuitive gestures for ubiquitous devices from the natural environment with visualization, without the need for intrusive tools [16]. The games industry is the main target of these products [2,16,17], although other sectors such as healthcare, training, handheld applications and industrial 3D simulations are also becoming feasible [18]. It appears that GCUI technology is now becoming much more acceptable in terms of usability and affordability.

2.5. Research Methodology

Typically, a combination of qualitative and quantitative methods has been used in GCUI research. Post-study semi-structured interviews have been popular [12,19-21]. These studies asked users for aggregate reports about their own decision-making processes. Kallio et al. [15] and Kela et al. [22] also used such semi-structured interviews, as well as the analysis of users’ instant reactions to the interface prototypes. These approaches are very suitable for investigating users’ reactions to new designs.

Such types of qualitative methods enable researchers to focus on relevant aspects of the issue and to begin formulating relevant hypotheses.
However, the qualitative approach is limited by the users’ ability to analyze their own decision-making processes [23], in particular with regard to a complex phenomenon such as gesture.

A number of the surveyed research studies also used quantitative methods. In the majority of studies an experiential survey [20,24-26] approach was employed, where participants were asked to perform specific gestures and several predefined tasks. Subsequently, their impressions were recorded by filling out a questionnaire. Kela et al. [22] applied a basic survey approach, followed by experimental methods.

In this research, both qualitative and quantitative methods have been used. Previous research demonstrates that early work on gesture controlled interfaces is largely based on qualitative methods, since this approach is essential when beginning to investigate a new issue or when exploring long-term effects. For this research, quantitative approaches have been followed in the pilot study as well as in the detailed usability evaluation. In this way the research can draw on the strengths of each methodology while offsetting its weaknesses, making the potential to gather more information greater than when using a single method.

As this research is in the domains of Human-Computer Interaction (HCI) and inclusivity, many issues need to be addressed; not only issues of the design itself but also questions surrounding the design process, such as ethics and communication with the users. Experts in the field of HCI for older adults agree that ethical issues should be considered in the design process [27,28].

We have been aware of the importance of focusing on users’ needs, wants, and capabilities. User-centred design (UCD) is a set of techniques and processes that enable developers to focus on the users within the design process [29]. This is a popular approach to this challenge, also recommended by the British Standard (ISO 1999).
This research demands strong involvement of the users, especially during the analysis and evaluation stage. Due to “the cultural and experimental gap” between the designer and older and disabled users, this involvement is particularly important [30].

2.6. Analysis of the Survey

The number of research and commercial projects shows clearly that gesture controlled technologies are now of interest to both the public and researchers. Researchers have addressed important issues relating to traditional systems, interactions and usability. The natural intuition of gesture control has been addressed by many researchers. Gestural interfaces have a number of potential advantages as well as some potential disadvantages [31]. Gesture styles, application domain, input and output are now subjects of study and research. The commercial sector has now started its journey in response to the weight of research. In future, we may see more research and commercial products.

The significance of the trends, necessities and themes of the research studied can be summarized as follows.

Inclusive Design: Inclusive gesture controlled systems can support a wide range of users including the elderly and disabled.

Extensibility: The research and projects can be connected to develop a standard platform. The platform can be extended as time goes by. All commercial products are proprietary, which in many cases makes them difficult to extend and contribute to.

Low Cost and Affordable Technology: The review suggests that gesture controlled technology is now readily affordable and offers a realistic opportunity. We see that a very low cost DIY tool has been developed [32].

Converged and Ubiquitous: Technologies are converging. Digital television is now common in every household. Net television [33] and Gesture television [34] include cameras and/or sensors with digital television. Gesture controlled systems can be converged with familiar and popular technologies such as the television.

The analysis shows a demand for discipline in the field. A framework for GCUI development can provide a methodological approach. Open source technology can provide a platform to develop extendible and interconnected projects.

3. The Open Gesture Application: A Prototype

The Open Gesture application is based on the themes and trends of the study, and uses open source software and low-tech, affordable hardware. It is an augmented reality application, which enables users to undertake everyday tasks via a television screen (for example interacting with appliances, switching lights on and off, and answering the door). The aim of the Open Gesture application is to provide Gesture Controlled User Interfaces for inclusive design, enabling interaction to be more usable for a diverse range of users including older and disabled people.

Open Gesture has the following major characteristics:

1) Open source: Open Gesture has been developed as an open source application. Von Krogh and Spaeth [35] state five characteristics of open source software that promote research. Affordability and future extensibility are two of the main reasons that influenced the development of the application using open source technologies.

2) SCUF design principles: The Open Gesture application conforms to the usability principles of simplicity, consistency, universality and familiarity, proposed by Picking et al. [36], which have been successfully applied to the Easyline [37] project’s usability design.

3) Integration & Convergence: There have been a number of research projects developing smart home environments for elderly and disabled users [33,38-40]. The
The Open Gesture application’s user interface is designed to be generic and customizable to enable integration with such other systems. The Open Gesture application can be converted to run in a digital television. Users feel it a familiar system to interact with when it is developed to run on the screen of digital television. 4) Extensibility: Open Gesture is an application pro- gram interface (API) based application. The API can be used to design and develop the interface for different types of users considering their individual needs. Some conceptual tasks have already been tested. Example tasks include: making a telephone call, playing ‘brain training’ games, controlling the computer, or home environment, and social networking. A multi-modal input and feed- back options, using gesture, voice, sound and light, can also be integrated within the current API, primarily to facilitate use by visually impaired users. Interaction with Open Gesture After running the application (which can be initiated by selecting a pre-configured television channel), a user can see his or her image on the television screen, which is filmed through a connected webcam. The user can point at different objects using hand gestures to perform vari- ous tasks as shown in Figure 1. These objects can be text and/or icons associated with the relevant tasks. If the user points or makes a gesture to an object on the screen, the Open Gesture application will execute the related task or command. 4. Usability Eval u a tion A usability evaluation study was carried out using meth- ods and techniques defined in Rubin et al. [41]. The evaluation plan was formally approved by the Glyndŵr (University) Research Ethics Standing Committee (GRESC). An initial pilot study which ascertained the overall feasibility of the Open Gesture application pre- ceded the more detailed evaluation. The following sec- tions explain the details of both studies. 
A total of 70 participants were invited from different age groups towards achieving an inclusive design as shown in Table 1 and Figure 2.

Figure 1. Prototype design of the Open Gesture Application.

Figure 2. Users of different age-groups and abilities using the Open Gesture Application.

Table 1. Age-groups and numbers of evaluation studies.

| Category | Group | Numbers |
|---|---|---|
| Gender | Male | 39 |
| Gender | Female | 31 |
| Gender | Total | 70 |
| Age | 18 - 30 | 16 |
| Age | 31 - 40 | 16 |
| Age | 41 - 50 | 8 |
| Age | 51 - 60 | 8 |
| Age | 61 - 70 | 7 |
| Age | 71 - 80 | 8 |
| Age | 80+ | 1 |
| Age | Special | 6 |

4.1. Pilot Usability Evaluation

A pilot usability study was conducted with selected participants using questions derived from a well-known generic usability questionnaire [42], as depicted in Table 2. The focus was on the following:

a) Personal usability feedback of the participant after using the GCUI.

b) The participant’s general feedback regarding the usability of the GCUI for older and disabled people.

c) Positive and negative aspects of the GCUI.

Although the number of participants in the pilot study was small, care was taken to include representative people from different age groups. There were nine participants in all, three of whom were over 50 years old.

The majority of the participants agreed with question 1 (rated 5 or higher out of 7) that it was simple to use this system. Similarly, they also responded positively about the learning of the system (question 2) and completing the conceptual tasks (question 3). The concept of using the GCUI was strongly supported by the participants. Every participant was asked for their views on using GCUI by older and disabled people to perform daily tasks. The majority of the participants believed using the system in households would increase the independence of elderly users, especially for those with limited mobility. However, the need to support individual users with varying abilities and requirements was highlighted.

The pilot study offered positive progress towards the effective interface design and detailed usability evaluation. It provided a valuable opportunity of using the GCUI, its associated hardware, Glyndwr University’s usability laboratory, and the questionnaire tool with the participants. After this study, we were confident in our preparation for the main usability evaluation.

Table 2. Pilot usability evaluation questionnaire. Questions 1 to 8 are answered on a scale of 1 to 7, or NA.

| No. | Statement | 1 = | 7 = |
|---|---|---|---|
| 1 | It was simple to use this system | strongly disagree | strongly agree |
| 2 | It was easy to learn to use this system | strongly disagree | strongly agree |
| 3 | I can effectively complete the conceptual tasks using this system | strongly disagree | strongly agree |
| 4 | It is easy to find the information I needed to perform a task. | strongly disagree | strongly agree |
| 5 | I like using the concept of using Gesture Controlled user interface. | strongly disagree | strongly agree |
| 6 | Using the system in households would enable users to perform daily activities more quickly. | unlikely | likely |
| 7 | Using the system in households would increase independence of elderly users. | unlikely | likely |
| 8 | Overall, I am satisfied with this System. | unlikely | likely |

Please write your overall impression about the research project:

9. List the most negative aspect(s):

10. List the most positive aspect(s):

11. What is your age group? 16 - 22 / 23 - 30 / 31 - 40 / 41 - 50 / 50+

4.2. Main Usability Evaluation

We organized three test sessions varying in duration from 45 minutes to 2 hours. The goals of the sessions were to determine users’ opinions on the application’s usability.

Two of the older participants had some form of mobility disability. Each participant signed their consent to undertake the tests. Sessions were recorded for later analysis.
During the test sessions, participants were asked to contribute their observations about any surprises and issues. After the three sessions were completed, participants were interviewed and filled out the usability questionnaire shown in Table 3.

Table 3. Main usability study questionnaire.

Usability evaluation questionnaire

All responses will be kept strictly confidential and be kept in accordance with the Data Protection Act. The purpose of this questionnaire is to provide us with the necessary information needed to evaluate the designed user interfaces.

Usability test number: _____ Date: ______________ Location: ___________________

Response options for every statement: Strongly agree / Agree / Neither agree or disagree / Disagree / Strongly disagree / Not Applicable

I. Design and Layout

1. I liked using the interface.
2. The organization of information presented was clear.
3. The interface was pleasant to use.
4. The sequence of screens was clear.

V. Ease of use

5. It was simple to use this system.
6. It was easy to find the information I needed.

VI. Learnability

7. It was easy to learn to use the system.
8. The information provided by the system was easy to understand.

VII. Satisfaction

9. I felt comfortable using the system.
10. Overall, I am satisfied with this system.

Thank you for your participation! Please return this questionnaire in the envelope provided as soon as possible.

5. Results

The results of the questionnaire responses are summarized in Table 4. Means and standard deviations have been calculated by transposing the Likert scale used in the questionnaire into scores ranging from 5 (for strongly disagree) to 1 (for strongly agree).

Table 4. Questionnaire results.

| Question | Mean | Standard deviation |
|---|---|---|
| 1 | 2.8 | 1.1 |
| 2 | 2.96 | 0.88 |
| 3 | 2.69 | 0.88 |
| 4 | 3.13 | 0.9 |
| 5 | 2.91 | 0.99 |
| 6 | 3.21 | 0.74 |
| 7 | 3.13 | 1.01 |
| 8 | 3.38 | 0.88 |
| 9 | 2.69 | 0.88 |
| 10 | 2.74 | 0.86 |

Mean time and success rate of the two sample tasks are shown in Table 5. Task 1 is selecting an object and task 2 is changing the selection from one object to another.

Table 5. Success rate and mean time of the tasks.

| Tasks | Success (%) | Mean time (in sec) |
|---|---|---|
| Task 1 | 100% | 0.9808 |
| Task 2 | 100% | 1.0959 |

From the success rate and mean time for each task, it can be said that participants across the sample were able to interact with the GCUI effectively, which supports its inclusiveness. The success rate is 100% for all users in all age groups. Mean plots of the task times for the different age groups were prepared; they show that the time taken to complete a task increases with participants’ age (Figure 3).

6. Discussion

The evaluation study is an important part of achieving inclusive design and usability goals of any user interface. On the 1-5 scale used for the question responses, the midpoint (3) is the neutral value (neither agree nor disagree). Table 4 shows that responses sit close to this neutral value throughout. These figures are very similar for all age groups, indicating consistency across the full age range. Whilst these results are disappointing to an extent, in that the GCUI has not been positively endorsed by the participants, neither has it been rejected.

Concerning the usability analysis, it could be argued that an average value of two to three could be accepted for an application such as Open Gesture. More information on learning and understanding needs to be provided in the interface.

Time and age factors in a GCUI were studied (in Table 5 and chart 1) and the data was analysed.
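The scoring behind Table 4 (Likert responses transposed to scores from 5 for strongly disagree to 1 for strongly agree, then summarized as a mean and standard deviation) can be sketched as follows. This is only an illustration under stated assumptions: the paper gives the two end points of the scale, so the intermediate scores (2, 3, 4), the exclusion of "Not Applicable" answers, and the use of the sample standard deviation are assumptions, and the responses in the usage example are made up rather than taken from the study.

```python
from statistics import mean, stdev
from typing import List, Tuple

# Score mapping: the end points (1 and 5) follow the paper; the
# intermediate values are an assumption of this sketch.
LIKERT_SCORES = {
    "strongly agree": 1,
    "agree": 2,
    "neither agree or disagree": 3,
    "disagree": 4,
    "strongly disagree": 5,
}


def summarize(responses: List[str]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of the scored responses.

    "Not Applicable" answers are skipped (an assumption). At least two
    scored responses are needed for the standard deviation.
    """
    scores = [
        LIKERT_SCORES[r.strip().lower()]
        for r in responses
        if r.strip().lower() != "not applicable"
    ]
    return round(mean(scores), 2), round(stdev(scores), 2)


if __name__ == "__main__":
    # Illustrative responses only; not data from the study.
    example = [
        "Agree",
        "Neither agree or disagree",
        "Disagree",
        "Agree",
        "Not Applicable",
    ]
    print(summarize(example))
```

With this mapping a lower mean indicates stronger agreement, so a question mean near 3 corresponds to the "neither agree or disagree" option.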
It was revealed that users from any age group, or of limited ability, could perform the gesture interaction in a GCUI, and that the timing of tasks varies with users’ age and ability. An analysis of variance (ANOVA) was run on all of the data (not shown here) to compare participants’ feedback across the different age groups, and it revealed a non-significant effect of age on the participants’ feedback.

Figure 3. Mean plot of fastest time of the tasks.

7. Future Work and Conclusions

Clearly, more research is required into determining the best configurations for the Open Gesture application. Individual preferences should also be supported, especially where issues of inclusion are apparent. We intend to continue this research, and will evaluate more alternatives with our user groups. We also plan to undertake a detailed analysis of Fitts’s law [43] in relation to GCUI, with a view to extending the law to account for obstacles on the screen (for example, other targets), as well as incorporating ability factors into the equation. Such factors may include user age and physical dexterity.

Open Gesture aims to support a range of users with differing needs, both physical and cognitive. However, visually impaired users are clearly disadvantaged by Open Gesture’s method of visualization. Integrating multimodal input and feedback options will make the application more accessible for users with a range of special needs. Open Gesture uses pointing gestures to manipulate the objects of the interface. Other popular gestures of our daily activities, for example waving a hand in the right direction to execute “Next”, and head nodding to agree or to confirm an action, can also be used.
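For readers unfamiliar with Fitts’s law, the sketch below shows the standard form in which it is usually stated: an index of difficulty ID = log2(2D / W) for a target of width W at distance D, and a predicted movement time MT = a + b × ID. The coefficients `a` and `b` are empirical and must be fitted to measured data; the values in the usage example are purely hypothetical. The extensions the authors propose (obstacles on the screen, age and dexterity factors) are not defined in the paper and are therefore not modelled here.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts's index of difficulty in bits: log2(2 * distance / width)."""
    if distance <= 0 or width <= 0:
        raise ValueError("distance and width must be positive")
    return math.log2(2 * distance / width)


def movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Predicted movement time MT = a + b * ID.

    a (seconds) and b (seconds per bit) are empirical coefficients that
    have to be fitted to observed data; none are reported in the paper.
    """
    return a + b * index_of_difficulty(distance, width)


if __name__ == "__main__":
    # Hypothetical numbers: a target 50 pixels wide, 200 pixels away,
    # with made-up coefficients a = 0.2 s and b = 0.3 s/bit.
    print(index_of_difficulty(200, 50))
    print(movement_time(200, 50, a=0.2, b=0.3))
```

One way the proposed ability factors could later be explored is by fitting separate `a` and `b` values per age group and comparing them, but that is a suggestion for analysis rather than something the study reports.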
Our vision is of a person, including an elderly or disabled person, who may be sitting in front of a television and who can use simple gestures to interact with that television to perform a diverse range of tasks. We have developed an augmented reality application based on open source software and using low-tech hardware, which enables users to undertake a range of tasks via the television screen. The results are inconclusive, but we conclude that there is potential and scope to continue the research to improve GCUI technology for inclusive design.

8. Acknowledgements

This work was supported by the Overseas Research Scholarship Award Scheme (ORSAS), UK.

REFERENCES

[1] S. Trewin and H. Pain, “Keyboard and Mouse Errors due to Motor Disabilities,” International Journal of Human-Computer Studies, Vol. 50, No. 2, 1999, pp. 109-144.

[2] Nintendo Wii, “Wii at Nintendo,” 2006. http://www.nintendo.com/wii

[3] M. Bhuiyan, “Xessibility: XML-XSL for Web Browser Accessibility,” Poster in the 2nd BCS/IET International Conference on Internet Technologies and Applications, Wrexham, 4-7 September 2007.

[4] D. Hawthorn, “How Universal Is Good Design for Older Users?” Proceedings of the Conference on Universal Usability, New York, 2003, p. 38.

[5] K. Giannakouris, “Eurostat: Ageing Characterises the Demographic Perspectives of the European Societies,” 2008. http://epp.eurostat.ec.europa.eu

[6] F. G. Miskelly, “Assistive Technology in Elderly Care,” Age and Ageing, Vol. 30, No. 6, 2001, pp. 455-458.

[7] S. Borland, “Elderly ‘ADDICTED’ to Nintendo Wii at Care Home,” 2007. http://www.telegraph.co.uk/news/uknews/1563076/Elderly-addicted-to-Nintendo-Wii-at-care-home.html

[8] K. D. Bot and R. W. Schrauf, “Language Development over the Lifespan,” Taylor & Francis, UK, 2009.

[9] A. F. Newell, P. Gregor, M. Morgan, G. Pullin and C. Macaulay, “User Sensitive Inclusive Design,” Universal Access in the Information Society, Vol. 9, July 2010, pp. 235-243.

[10] M. Bhuiyan and R. Picking, “Gesture Control User Interface, What Have We Done and What’s Next?” Proceedings of the 5th Collaborative Research Symposium on Security, E-Learning, Internet and Networking, Plymouth, 2009a, p. 59.

[11] S. Lenman, L. Bretzner and B. Thuresson, “Computer Vision Based Recognition of Hand Gestures for Human-Computer Interaction,” Technical Report TRITANAD-0209, 2002.

[12] C. G. Wolf, “Can People Use Gesture Commands?” Newsletter, ACM SIGCHI Bulletin, Vol. 18, No. 2, 1986, pp. 73-74.

[13] A. Zimmerman, “A Hand Gesture Interface Device,” Proceedings of the SIGCHI/GI Conference on Human Factors in Computing Systems and Graphics Interface, Toronto, 5-9 April 1987, pp. 189-192.

[14] T. Baudel and M. Beaudouin-Lafon, “Charade: Remote Control of Objects Using Free Hand Gesture,” Communications of the ACM, Vol. 36, No. 7, 1993, pp. 28-35.

[15] S. Kallio, J. Kela, J. Mäntyjärvi and J. Plomp, “Visualization of Hand Gestures for Pervasive Computing Environments,” Proceedings of the Working Conference on Advanced Visual Interfaces, Venezia, 23-26 May 2006, pp. 480-483.

[16] EyeToy, “SONY EyeToy,” 2003. http://www.us.playstation.com

[17] XBOX Live Vision and Kinect, “Microsoft Live Vision Camera,” Natal & Kinect, 2006. http://en.wikipedia.org/wiki/Xbox_Live_Vision

[18] GestureTek, “Gesture Recognition & Computer Vision Control Technology & Motion Sensing Systems for Presentation & Entertainment,” 2009. http://www.gesturetek.com

[19] S. Malik and J. Laszlo, “Visual Touchpad: A Two-Handed Gestural Input Device,” Proceedings of the ACM International Conference on Multimodal Interfaces, State College, 13-15 October 2004, pp. 289-296.

[20] P. Jia and H. Huosheng, “Head Gesture Recognition for Hands-Free Control of an Intelligent Wheelchair,” Industrial Robot: An International Journal, Vol. 34, No. 1, 2007, pp. 60-68.

[21] D. Kim and D. Kim, “An Intelligent Smart Home Control Using Body Gestures,” Proceedings of International Conference on Hybrid Information Technology, Jeju Island, 9-11 November 2006.

[22] J. Kela, et al., “Accelerometer-Based Gesture Control for a Design Environment,” Personal and Ubiquitous Computing, Vol. 10, No. 5, 2005, pp. 285-299.

[23] L. Blaxter, C. Hughes and M. Tight, “How to Research,” Open University, USA, 1996.

[24] A. Wilson and N. Oliver, “GWindows: Towards Robust Perception-Based UI,” Proceedings of CVPR 2003 (Workshop on Computer Vision for HCI), 2003.

[25] H. Lee, et al., “Select-and-Point: A Novel Interface for Multi-Device Connection and Control Based on Simple Hand Gestures,” CHI 2008, Florence, 5-10 April 2008.

[26] K. Tsukadaa and M. Yasumura, “Ubi-Finger: Gesture Input Device for Mobile Use,” Journal of Transactions of Information Processing Society of Japan, Vol. 43, No. 12, 2002, pp. 3675-3684.

[27] J. Goodman, S. Brewster and P. Gray, “Not Just a Matter of Design: Key Issues surrounding the Inclusive Design Process,” Helen Hamlyn Research Centre, London, 2005.

[28] R. Picking, et al., “A Case Study Using a Methodological Approach to Developing User Interfaces for Elderly and Disabled People,” The Computer Journal, Vol. 53, No. 6, 2010, pp. 842-859.

[29] A. Helal, M. Mokhtari and B. Abdulrazak, “The Engineering Handbook of Smart Technology for Aging, Disability and Independence,” 1st Edition, Wiley-Interscience, USA, 2008.

[30] R. Eisma, et al., “Early User Involvement in the Development of Information Technology-Related Products for Older People,” Universal Access in the Information Society, Vol. 3, No. 2, 2004, pp. 131-140.

[31] R. Jim, “Dialogue Management for Gestural Interfaces,” ACM SIGGRAPH Computer Graphics, Vol. 21, No. 2, 1987, pp. 137-142.

[32] Atlas Glove, “Atlas Glove,” 2006. http://atlasgloves.org

[33] Philips Net TV, 2010. http://www.philips.co.uk/c/about-philips-nettv-partnerships/22183/cat/

[34] E. Sellek, “Toshiba’s Gesture-Based User Interface,” 2010. http://www.slashgear.com/toshiba-airswing-is-a-gesture-based-ui-for-the-masses-video-2787379/

[35] G. Von Krogh and S. Spaeth, “The Open Source Software Phenomenon: Characteristics That Promote Research,” Journal of Strategic Information System, Vol. 16, No. 3, 2007, pp. 236-253.

[36] R. Picking, V. Grout, J. Crisp and H. Grout, “Simplicity, Consistency, Universality and Familiarity: Applying ‘SCUF’ Principles to Technology for Assisted Living,” Proceedings of the 1st International Workshop on Designing Ambient Interactions for Older Users, Part of the 3rd European Conference on Ambient Intelligence, Vienna, 2009.

[37] Easyline, “Easyline+: Low Cost Advanced White Goods for a Longer Independent Life of Elderly People,” 2009. http://easylineplus.com/

[38] CAALYX, “Complete Ambient Assisted Living,” 2009. http://caalyx.eu/

[39] INHOME, “INHOME Project: An Intelligent Interactive Services Environment for Assisted Living,” 2009. http://www.ist-inhome.eu

[40] OLDES, “OLDES: Older People’s E-Service at Home,” 2009. http://www.oldes.eu/

[41] J. Z. Rubin, D. Chisnell and J. M. Spool, “Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests,” 2nd Edition, Wiley Publishing, Indianapolis, 2008.

[42] G. Perlman, “User Interface Usability Evaluation with Web-Based Questionnaire,” 2009. http://hcibib.org/perlman/questiOn.html

[43] P. M. Fitts, “The Information Capacity of the Human Motor System in Controlling the Amplitude of Movement,” Journal of Experimental Psychology; Reading in Research Methods in Human-Computer Interaction (Lazar, Feng and Hochheiser, 2010), Vol. 47, No. 6, June 1954, pp. 381-391.