Journal of Computer and Communications

Vol. 05 No. 03 (2017), Article ID: 74649, 9 pages

10.4236/jcc.2017.53001

Light Field Flow Estimation Based on Occlusion Detection

Wei Zhang, Lili Lin

College of Information and Electronic Engineering, Zhejiang Gongshang University, Hangzhou, China

Received: December 19, 2016; Accepted: March 10, 2017; Published: March 13, 2017

ABSTRACT

Light field cameras have a wide range of applications, such as digital refocusing, scene depth extraction and 3-D image reconstruction. By recording both the intensity and the direction of light rays, they can solve many problems that conventional cameras cannot. An important feature of light field cameras is a microlens array inserted between the sensor and the main lens, through which a series of sub-aperture images from different perspectives is formed. Based on this feature and the all-in-focus image acquisition technique, we propose a light-field optical flow algorithm that performs both depth estimation and occlusion detection while preserving edges. The algorithm consists of three steps: 1) computing the dense optical flow field among a group of sub-aperture images; 2) obtaining a robust depth estimate by initializing the light-field optical flow with linear regression, and detecting occluded areas with a consistency check; 3) computing an improved light-field depth map through edge-preserving interpolation. Experimental results validate the reliability and high accuracy of the proposed approach.

Keywords:

Light Field Images, Optical Flow, Edge-Preserving, Depth Estimation, Occlusion Detection

1. Introduction

Light field cameras, owing to their ability to capture 4-D light field information, provide capabilities that conventional cameras lack, such as controlling the depth of field, refocusing after capture, and changing the viewpoint [1]. In recent years, light field cameras have found a variety of applications, such as light field depth map estimation [2], digital refocusing [3], 3-D image reconstruction and stereo matching. An important prerequisite for these applications is accurate and efficient depth estimation from light-field images. Many depth estimation algorithms based on light-field images have recently been proposed [4]. However, these algorithms do not take spatial and angular resolution into account, and therefore have the following two limitations in the presence of depth discontinuities or occlusions.

1. The occlusion detection they rely on is, in some cases, sensitive to the surface texture and color of objects.

2. The accuracy of depth estimation usually depends on the distance between two focal stack images.

To overcome these drawbacks, this work proposes an edge-preserving light-field flow estimation algorithm. First, the dense optical flow field among multiple sub-aperture images of a light-field image is computed. Then, all occlusions that may occur during this computation are taken into account. Based on the geometric features of light-field images and the correspondences among them, a robust light-field flow is obtained, which yields both accurate depth estimation and occlusion detection. Notably, our algorithm performs linear regression over multiple sub-aperture images. As a result, it handles two problems of conventional optical flow approaches: the loss of accuracy when the optical flow is computed between only two images, and the failure to detect occluded regions correctly.

2. Related Work

Optical flow estimation has been studied extensively [5]. Most existing approaches are based on variational formulations and the associated energy minimization, which is typically performed with coarse-to-fine schemes. Unfortunately, such approaches suffer from error accumulation: the precision of the minimization depends on the range of local minima, and because coarse-to-fine schemes require calculations across scales, errors accumulate from one scale to the next. This leads to serious distortions in the results, especially in the case of large displacements.

Recently, several approaches that incorporate feature matching have been proposed. Xu et al. merged the estimated flow with matched features at each level of the coarse-to-fine scheme [6]. Brox and Malik added to the energy a term penalizing the difference between the optical flow and HOG matches [7]. Weinzaepfel et al. replaced the HOG matches with DeepMatching, an approach based on the similarity of non-rigid patches [8]. Braux-Zin et al. used segment features in addition to keypoint matching [9]. As mentioned above, these approaches still rely on coarse-to-fine schemes, so some of their defects cannot be eliminated. In particular, fine details may be lost when an image is upscaled. Moreover, it is difficult to distinguish a target object from the background when they have similar textures.

In this paper, we propose a novel optical flow algorithm that adopts an edge-preserving technique. Existing algorithms generally do not take the edges of the target image into account, whereas ours does; without considering edges, depth estimation and occlusion detection are difficult. Moreover, conventional algorithms fail to estimate the optical flow field accurately when occluded areas are present during imaging. They can also hardly preserve the integrity of edges, which blurs motion boundaries and degrades the whole depth map. For example, Figure 1 shows the depth estimation and occlusion detection results of the algorithm proposed by Wang et al. [10]; the edges are clearly not handled well.

3. Light Field Flow Detection Based on Occlusion Detection

In this section, we propose a new optical flow detection approach that aims to obtain a dense and accurate light field flow estimate. First, we extract a series of sub-aperture images from the original light-field image. Second, we take the central view as the reference frame and compute the dense optical flow between it and each of the other sub-aperture images. Third, a robust depth estimate is obtained by initializing the light-field flow with linear regression; occluded areas are detected with a consistency check during this process. Finally, an improved light-field depth map is obtained through edge-preserving interpolation. The detailed procedure is shown in Figure 2.

3.1. Dense Optical Flow in Multi-Frame Sub-Aperture Images

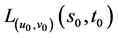

In order to calculate the optical flow, it is necessary to compute the correspondence points between frames. Let  denote a set of 4-D light field data, where

denote a set of 4-D light field data, where  are the spatial coordinates and

are the spatial coordinates and  are the angular coordinates. For clarity, we use

are the angular coordinates. For clarity, we use  and

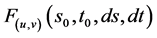

and  to denote a sub-aper- ture image and the central sub-aperture image, respectively. The estimation of optical flow between the central sub-aperture image and the other sub-aperture images is denoted as

to denote a sub-aper- ture image and the central sub-aperture image, respectively. The estimation of optical flow between the central sub-aperture image and the other sub-aperture images is denoted as , where the pixel

, where the pixel  in

in  corresponds to the pixel

corresponds to the pixel  in

in .

.
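To make the notation concrete, the following minimal sketch slices sub-aperture images out of a 4-D light field. It assumes a hypothetical NumPy storage layout `lf[u, v, y, x]` (angular axes first) and that the 7 × 7 views used in this paper are numbered 1 to 49 in row-major order; the helper names are illustrative only.

```python
import numpy as np

def sub_aperture(lf: np.ndarray, u: int, v: int) -> np.ndarray:
    """Sub-aperture image I_{u,v}: fix the angular coordinates, keep (y, x).

    Assumes a hypothetical storage layout lf[u, v, y, x].
    """
    return lf[u, v]

def view_number(u: int, v: int, n_ang: int = 7) -> int:
    """1-based, row-major number of view (u, v) in an n_ang x n_ang array."""
    return u * n_ang + v + 1

def view_position(k: int, n_ang: int = 7) -> tuple:
    """Inverse of view_number: 1-based view number k -> 0-based (u, v)."""
    return divmod(k - 1, n_ang)

n_ang = 7
center = (n_ang // 2, n_ang // 2)             # (3, 3)
lf = np.zeros((n_ang, n_ang, 8, 8))           # toy light field: 7 x 7 views of 8 x 8 pixels
I_c = sub_aperture(lf, *center)               # central sub-aperture image
print(view_number(*center, n_ang), I_c.shape)  # 25 (8, 8)
print([view_position(k) for k in (29, 35, 5, 47)])  # [(4, 0), (4, 6), (0, 4), (6, 4)]
```

Under this numbering assumption the central view is the 25th, the example row of views 29 to 35 corresponds to u = 4, and the example column 5, 12, …, 47 corresponds to v = 4.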

As shown in Figure 3, there are totally 49 images in the sub-aperture image

Figure 1. Visual effects of depth estimation and occlusion detection. (a) Input image; (b) Wang’s depth estimation; (c) Wang’s occlusion detection

Figure 2. Flow chart of optical flow detection in light field.

Figure 3. Diagram of matching points between multi-frame sub-aperture images.

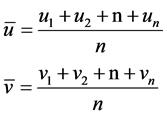

array, and the central sub-aperture image is the 25th one. From the sub-aperture image array, we select one row (e.g. the 29th image - 35th image) and one column (e.g. the 5th, 12th, 19th, 26th, 33th, 40th, 47th) for analysis. The horizontal displacements of the pixels between the 25th central sub-aperture image and the horizontal ones in the selected row are estimated and denoted by u1, u2, …, u7. And the vertical displacements of the pixels between the 25th central sub-aperture image and the vertical ones in the selected column are estimated and denoted as v1, v2, …, v7. Theoretically, it should be a linear relationship between any two horizontal or any two vertical displacements when the pixel movement is stable and not occluded. Under this assumption, we try to calculate these two linear relationships. We firstly calculate the average horizontal and vertical displacements

$$\bar{u} = \frac{1}{n}\sum_{i=1}^{n} u_i, \qquad \bar{v} = \frac{1}{n}\sum_{i=1}^{n} v_i \tag{1}$$

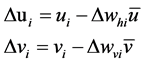

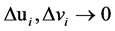

where n is the number of sub-aperture images in the selected row (or column). We then define the deviation between the measured and the calculated displacements as follows:

(2)


When this deviation is minimized, we obtain the most suitable values.
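A minimal sketch of this fitting step for a single pixel is given below. Because Eq. (2) is not reproduced here, it rests on our own assumptions: each displacement is modeled as a linear function of the view's angular offset from the central view, and the deviation is the sum of squared residuals between measured and fitted displacements. The helper `fit_displacements` and the toy data are hypothetical.

```python
import numpy as np

def fit_displacements(disp, offsets):
    """Least-squares line disp ~ k * offset + b for one pixel.

    Hypothetical helper: assumes the deviation of Eq. (2) is the sum of
    squared residuals of a linear fit over the views of one row or column.
    """
    disp = np.asarray(disp, dtype=float)
    offsets = np.asarray(offsets, dtype=float)
    mean_disp = disp.mean()                    # average displacement, cf. Eq. (1)
    k, b = np.polyfit(offsets, disp, deg=1)    # minimizes the sum of squared residuals
    residuals = disp - (k * offsets + b)       # measured minus calculated displacement
    return mean_disp, k, b, residuals

offsets = np.arange(-3, 4)        # angular offsets of the 7 views in a row
u = 0.5 * offsets + 0.1           # ideal, unoccluded pixel: exactly linear
u[6] += 2.0                       # corrupt one view, e.g. by an occlusion
mean_u, k, b, r = fit_displacements(u, offsets)
print(k, b)
print(np.argmax(np.abs(r)))       # 6: the corrupted view has the largest residual
```

A view whose residual stands out from the others is a natural candidate for an occluded or unstable measurement; how large a residual must be before it is rejected is left open here.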

3.2. Initial Depth Estimation

By reconstructing the optical flow estimation

where α is a depth factor of the refocus plane, generally ranging in [0.2, 2], and and denote the displacement of the counterpart of the coordinates

3.3. Depth Map

During the optical flow calculation, we use the consistency between forward and backward flows to detect and remove occlusions between every pair of sub-aperture images. Specifically, a comparison is made between the forward flow

To further improve performance, we calculate the forward and backward flows among multiple sub-aperture images, in the same way as the horizontal and vertical displacements of the optical flow are calculated, and then check their consistency. Inconsistent pixels are regarded as occluded and removed. The occlusion detection results of Wang et al. and of our method are shown in Figure 5; our result is visibly more accurate than that of Wang et al.
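The forward-backward check can be sketched as follows: a pixel is kept only if following its forward flow and then the backward flow at the landing point brings it back close to where it started. This is a generic version of the technique, not the authors' code; the nearest-neighbour sampling and the tolerance `tau` are assumptions made for brevity.

```python
import numpy as np

def occlusion_mask(flow_fw, flow_bw, tau=1.0):
    """Mark pixels whose forward and backward flows disagree.

    flow_fw, flow_bw: arrays of shape (H, W, 2) holding (dx, dy) per pixel.
    tau: hypothetical tolerance in pixels.
    Returns a boolean (H, W) mask, True where the pixel is treated as occluded.
    """
    H, W, _ = flow_fw.shape
    ys, xs = np.mgrid[0:H, 0:W]
    # Landing point of each pixel under the forward flow (nearest-neighbour rounding).
    xt = np.rint(xs + flow_fw[..., 0]).astype(int)
    yt = np.rint(ys + flow_fw[..., 1]).astype(int)
    inside = (xt >= 0) & (xt < W) & (yt >= 0) & (yt < H)
    xt_c = np.clip(xt, 0, W - 1)
    yt_c = np.clip(yt, 0, H - 1)
    # Backward flow sampled at the landing point; consistent flows cancel out.
    round_trip = flow_fw + flow_bw[yt_c, xt_c]
    err = np.linalg.norm(round_trip, axis=-1)
    # Pixels that leave the image or fail the round trip are flagged.
    return ~inside | (err > tau)

H, W = 4, 5
fw = np.zeros((H, W, 2)); fw[..., 0] = 1.0    # everything moves one pixel right
bw = np.zeros((H, W, 2)); bw[..., 0] = -1.0   # and back again
bw[2, 3] = [3.0, 0.0]                          # one inconsistent backward vector
mask = occlusion_mask(fw, bw)
print(mask.astype(int))
```

In the toy example the last column is flagged because its forward flow leaves the image, and pixel (2, 2) is flagged because it lands on the corrupted backward vector at (2, 3).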

Figure 4. Comparison of initial depth estimation between traditional approach and ours. (a) Result between only 2 frames; (b) our result.

Figure 5. Comparison of occlusion detection between our method and Wang’s. (a) Input light field image; (b) occlusion detection results of our method; (c) occlusion detection results of Wang’s.

4. Optimization of Depth Estimation Based on EpicFlow

In the optical flow calculation and occlusion detection described above, edge information is neglected, as shown in Figure 6. As a result, accuracy decreases when corresponding points are searched for to estimate the optical flow, and errors may also arise when matching corresponding points.

Therefore, we further use the EpicFlow algorithm proposed by Revaud et al. [11], which relies on structured edge detection (SED), to perform interpolation optimization for the blank areas left by removing occluded areas. In this way, we compensate for the loss of depth-estimation accuracy caused by inaccurately detected occluded areas and by matching errors of corresponding points.
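The sketch below is not EpicFlow. It only illustrates the edge-aware geodesic distance idea that EpicFlow's interpolation builds on, as a nearest-neighbour simplification: each removed pixel takes the value of its geodesically nearest valid pixel, where stepping onto a strong edge is expensive, so values do not leak across object boundaries. The edge map, the weight `lam` and the helper name `geodesic_fill` are hypothetical.

```python
import heapq
import numpy as np

def geodesic_fill(values, valid, edges, lam=10.0):
    """Fill invalid pixels with the value of their geodesically nearest valid pixel.

    values: (H, W) float array (e.g. depth or one flow component).
    valid:  (H, W) bool array, False where occluded pixels were removed.
    edges:  (H, W) edge strength in [0, 1] (e.g. from an SED-like detector).
    lam:    hypothetical weight making it expensive to step onto strong edges.
    """
    H, W = values.shape
    dist = np.full((H, W), np.inf)
    out = values.astype(float).copy()
    heap = []
    for y, x in zip(*np.nonzero(valid)):   # every valid pixel is a Dijkstra seed
        dist[y, x] = 0.0
        heap.append((0.0, int(y), int(x)))
    heapq.heapify(heap)
    while heap:
        d, y, x = heapq.heappop(heap)
        if d > dist[y, x]:
            continue                        # stale heap entry
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < H and 0 <= nx < W:
                # Step cost grows with the edge strength of the pixel entered.
                nd = d + 1.0 + lam * edges[ny, nx]
                if nd < dist[ny, nx]:
                    dist[ny, nx] = nd
                    out[ny, nx] = out[y, x]  # inherit the nearest seed's value
                    heapq.heappush(heap, (nd, ny, nx))
    return out

values = np.array([[1.0, np.nan, np.nan, np.nan, 5.0]])
edges = np.array([[0.0, 0.0, 0.0, 1.0, 0.0]])
print(geodesic_fill(values, ~np.isnan(values), edges))  # [[1. 1. 1. 5. 5.]]
```

EpicFlow itself interpolates sparse matches with edge-aware weighted or locally affine models and then refines the result variationally; the nearest-neighbour rule here is chosen only to keep the example short.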

The recently proposed Full Flow method [12] also adopts EpicFlow. However, as shown in Figure 7, our method preserves edge details better than Full Flow. This indicates that preserving the edges of the target region and interpolating the occluded regions are necessary for accurate optical flow estimation.

5. Experimental Results

In this section, we carry out a set of experiments to validate the advantages of our approach over state-of-the-art methods. Light field images with a resolution of 398 × 398 are used for testing.

First, 7 × 7 sub-aperture images are extracted from a raw light field image. Then, using the conventional Lucas-Kanade algorithm, we calculate the set of corresponding points between the central sub-aperture image and each of the other sub-aperture images. From these correspondences, the optical flow is estimated in the horizontal and vertical directions, yielding a stable and homogeneous optical flow for the light field image. The effects of noise, varying illumination and occlusions are minimized when computing the corresponding points of the target area. Finally, we optimize the depth estimation of the light field image with EpicFlow.
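For reference, the core of the Lucas-Kanade step can be sketched as a single-window least-squares solve of the linearized brightness-constancy constraint Ix·u + Iy·v + It = 0. This is a generic textbook version, not the authors' implementation: it estimates one translation for a whole patch, whereas a dense field would repeat the solve in a small window around every pixel (usually with image pyramids). The Gaussian test images are hypothetical.

```python
import numpy as np

def lucas_kanade_patch(I0, I1):
    """Single-window Lucas-Kanade: one translation (u, v) for the whole patch.

    Solves, in the least-squares sense, Ix*u + Iy*v + It = 0 over every
    pixel of the patch (linearized brightness constancy).
    """
    I0 = I0.astype(float)
    I1 = I1.astype(float)
    Iy, Ix = np.gradient(I0)      # axis 0 is y (rows), axis 1 is x (columns)
    It = I1 - I0                  # temporal (here: inter-view) difference
    A = np.stack([Ix.ravel(), Iy.ravel()], axis=1)
    b = -It.ravel()
    (u, v), *_ = np.linalg.lstsq(A, b, rcond=None)
    return u, v

def gaussian_blob(h, w, cx, cy, sigma=6.0):
    """Smooth synthetic image: a Gaussian centred at (cx, cy)."""
    y, x = np.mgrid[0:h, 0:w]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))

I0 = gaussian_blob(64, 64, 32.0, 32.0)
I1 = gaussian_blob(64, 64, 32.5, 31.75)   # content moved by (+0.5, -0.25)
u, v = lucas_kanade_patch(I0, I1)
print(u, v)                               # close to 0.5 and -0.25
```

The linearization only holds for small displacements, which is one reason the small inter-view shifts between neighbouring sub-aperture images suit this method.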

Figure 6. Edge detection result obtained with the SED algorithm.

Figure 7. Comparison of edge details obtained by our approach and the Full Flow method. (a) Edge detail obtained by our approach; (b) edge detail obtained by the Full Flow method.

Figure 8. Comparison of several algorithms. (a) Input image; (b) LK; (c) SparseFlow; (d) SimpleFlow; (e) FullFlow; (f) our method.

As shown in Figure 8, our approach produces a more stable optical flow and significantly reduces the effects of occluded areas. In addition, edges are handled properly, resulting in a better depth map estimate. Compared with the other mainstream optical flow algorithms in Figure 8, our approach performs better.

Cite this paper

Zhang, W. and Lin, L.L. (2017) Light Field Flow Estimation Based on Occlusion Detection. Journal of Computer and Communications, 5, 1-9. https://doi.org/10.4236/jcc.2017.53001

References

- 1. Ng, R., Levoy, M., Brédif, M., et al. (2005) Light Field Photography with a Hand-Held Plenoptic Camera. Stanford University: Computer Science Technical Report CSTR, 2(11).

- 2. Jeon, H.G., Park, J., Choe, G., et al. (2015) Accurate Depth Map Estimation from a Lenslet Light Field Camera. Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, Boston, 1547-1555. https://doi.org/10.1109/CVPR.2015.7298762

- 3. Nava, F.P., Marichal-Hernández, J.G. and Rodríguez-Ramos, J.M. (2008) The Discrete Focal Stack Transform. Proceedings of 16th European Signal Processing Conference, IEEE, Lausanne, 1-5.

- 4. Butler, D.J., Wulff, J., Stanley, G.B. and Black, M.J. (2012) A Naturalistic Open Source Movie for Optical Flow Evaluation.

- 5. Horn, B.K.P. and Schunck, B.G. (1981) Determining Optical Flow. Artificial Intelligence. https://doi.org/10.1016/0004-3702(81)90024-2

- 6. Xu, L., Jia, J. and Matsushita, Y. (2012) Motion Detail Preserving Optical Flow Estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence.

- 7. Brox, T. and Malik, J. (2011) Large Displacement Optical Flow: Descriptor Matching in Variational Motion Estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence.

- 8. Weinzaepfel, P., Revaud, J., Harchaoui, Z. and Schmid, C. (2013) DeepFlow: Large Displacement Optical Flow with Deep Matching.

- 9. Braux-Zin, J., Dupont, R. and Bartoli, A. (2013) A General Dense Image Matching Framework Combining Direct and Feature-Based Costs.

- 10. Adelson, E. and Wang, J. (1992) Single Lens Stereo with a Plenoptic Camera. IEEE Transactions on Pattern Analysis and Machine Intelligence. https://doi.org/10.1109/34.121783

- 11. Revaud, J., Weinzaepfel, P., Harchaoui, Z. and Schmid, C. (2015) EpicFlow: Edge-Preserving Interpolation of Correspondences for Optical Flow. In: CVPR 2015.

- 12. Chen, Q. and Koltun, V. (2016) Full Flow: Optical Flow Estimation By Global Optimization over Regular Grids. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/cvpr.2016.509