Journal of Software Engineering and Applications

Vol.7 No.5(2014), Article ID:46053,7 pages DOI:10.4236/jsea.2014.75037

Flying in Complex Environments: Can Insects Bind Multiple Sensory Perceptions and What Could Be the Lessons for Machine Vision?

Adrian G. Dyer1,2, Sridhar Ravi3,4, Jair E. Garcia1,5

1School of Media and Communication, RMIT University, Melbourne, Australia

2Department of Physiology, Monash University, Clayton, Australia

3Department of Organismic and Evolutionary Biology, Harvard University, Cambridge, USA

4School of Aerospace, Mechanical and Manufacturing Engineering, RMIT University, Bundoora, Australia

5School of Applied Sciences, RMIT University, Melbourne, Australia

Email: jair.garcia@rmit.edu.au

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 21 March 2014; revised 20 April 2014; accepted 27 April 2014

ABSTRACT

The possibility of having flying machines in complex natural environments presents many exciting possibilities, but also technical challenges. Insects often rely on visual cues for flight and decision making, and recent work suggests that the perception of wind force through tactile sensory inputs also provides important information for flight control. However, the extent to which these respective cues might potentially be bound together in the brain to enable accurate decisions remains untested. Here we discuss recent evidence that the brain of insects possesses mechanisms that may allow for the binding of complex multisensory information, and we propose an experiment that could dissect whether insects like bees may have such a capacity. We additionally discuss areas of the bee brain that might facilitate decision making in order to provide a road map forward for future work on understanding the mechanisms of flying in complex natural environments.

Keywords: Wind, Decision-Making, UAV, Brain, Perception, Color

1. Flying in Complex Natural Environments

With the recent rapid advances in material and sensor technology, the potential for small, lightweight UAVs to operate in highly complex environments promises to be a great boon for fields like disaster surveillance, commercial product delivery, or the distribution of medication in remote and inaccessible terrain. A real world challenge for such systems, however, is the highly variable nature of life “outside the lab”. Several factors can be very problematic in natural conditions, including but not limited to: 1) the size of objects as perceived by a visual system depends upon viewing distance during an approach flight and so can be an ambiguous cue [1]; 2) the shape of specific elements, and their spatial relationship to other elements in a scene, can vary with “observer” viewpoint [2]; and 3) external factors like wind can make flight difficult in natural conditions, potentially influencing what decisions need to be made [3]. Despite these challenges, many insects like honeybees (Apis mellifera) and bumblebees (Bombus terrestris) often fly in very complex and challenging environments [4], and have potentially evolved mechanisms that might be of high value for bio-inspired solutions for computer vision and unmanned vehicles. In this short report we provide a theoretical background, based on recent studies, for how insect vision may deal with the complexity of natural environments, and we propose future work that can further build our understanding of how flying in complex natural environments might benefit from multisensory integration of information.

2. The Size Problem

The absolute size of an image on a sensor or retina may be an unreliable cue. For example, a flying bee that detects a flower subtending a visual angle of 5˚ cannot necessarily know whether the flower is a small (rewarding) type or a larger (non-rewarding) type, since visual angle depends on both object size and viewing distance [1] [5]. Indeed, if free flying honeybees have to learn a stimulus discrimination task at a fixed visual angle, then it appears that bees only use a fixed retinotopic mechanism and cannot reliably process the stimuli at a novel visual angle [6]. However, recent work shows that individual free flying honeybees can, under the right conditions, learn to use rules to make correct decisions between stimuli of different sizes, even if the actual size of a particular stimulus is ambiguous [6] [7]. For example, if a bee has to choose between two “yellow” squares (and/or diamonds) of different sizes from a learning set of 6 sizes ranging between 1 and 6 cm, and repeats such learning over many trials with random combinations of sizes, it can both learn to choose the appropriate size relationship coupled with a sucrose solution, and then transfer the learnt smaller-larger rule to novel shapes and colors without any additional learning. The bee brain is capable of doing this regardless of whether a certain size (e.g. 3 cm) is a rewarded target or a distracter containing a bitter solution in a given trial, which depends upon the alternative stimulus presented (e.g. 2 or 4 cm). This demonstrates that bees can learn, through experience, to use a rule to solve relative size problems [7].
Rule learning is potentially a useful framework for computer vision in complex environments because rules can be applied to novel situations that were not previously envisaged, and the fact that the honeybee brain, with fewer than one million neurons, can solve rule learning problems suggests there may be efficient computational mechanisms underlying such complex behavioral outcomes [6] [8].
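
The size ambiguity and the relative size rule described above can be illustrated with a minimal Python sketch. It assumes a flat stimulus viewed face-on, so that visual angle follows the standard geometric relation 2·atan(size / (2·distance)); the function names (`visual_angle_deg`, `distance_for_angle_cm`, `choose_by_rule`) and the example flower sizes are hypothetical and are not taken from the cited studies.

```python
import math

def visual_angle_deg(object_size_cm, distance_cm):
    """Visual angle (degrees) subtended by a flat object viewed face-on."""
    return math.degrees(2.0 * math.atan(object_size_cm / (2.0 * distance_cm)))

def distance_for_angle_cm(object_size_cm, angle_deg):
    """Viewing distance at which an object subtends the given visual angle."""
    return object_size_cm / (2.0 * math.tan(math.radians(angle_deg) / 2.0))

# Two hypothetical flowers of different size subtend the same 5 degree angle
# when viewed from different distances, so angle alone cannot identify size.
small = distance_for_angle_cm(2.0, 5.0)
large = distance_for_angle_cm(4.0, 5.0)

def choose_by_rule(size_a_cm, size_b_cm, rule="larger"):
    """Return the index (0 or 1) of the stimulus selected by a relative size rule."""
    if size_a_cm == size_b_cm:
        raise ValueError("a relative size rule needs two different sizes")
    a_is_larger = size_a_cm > size_b_cm
    if rule == "larger":
        return 0 if a_is_larger else 1
    if rule == "smaller":
        return 1 if a_is_larger else 0
    raise ValueError("rule must be 'larger' or 'smaller'")

# Under a 'larger' rule the same 3 cm stimulus is the target against 2 cm
# but the distracter against 4 cm.
print(choose_by_rule(3.0, 2.0), choose_by_rule(3.0, 4.0))
```

The point of the sketch is only that a relational rule gives a consistent answer where an absolute retinal size would not; it makes no claim about how the bee brain computes either quantity.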

3. Relationship Learning

Whilst it was previously believed that insect vision was exclusively mediated by hard wired mechanisms that extracted elementary cues from stimuli with little or no plasticity for learning [9], a series of experiments has shown that the way in which bees acquire information is very dependent on the type of conditioning employed during a training regime [10]-[15]. Indeed, with appetitive-aversive differential conditioning protocols [10] [16] bees learn very difficult tasks, for example, recognizing human faces using configural mechanisms [11]. This has recently opened the door to understanding relationship learning in free flying honeybees. One of the classic problems for understanding relationship learning in primates is how an animal can process the concept of whether a particular object is above or below another referent object [17] [18]. It has recently been possible to show that the honeybee brain can learn to appropriately choose a novel object in relation to a known referent (e.g. above or below a horizontal line), or even discriminate between two similar objects and correctly identify a known referent and the correct relationship to another object [8], analogous to the primate experiments assessing concept learning [19]. Furthermore, the honeybee brain can learn to apply dual relationship rules between stimuli (e.g. above/below and same/different), and apply such rules to novel stimuli [20]. Indeed, bees can learn such simultaneous mastering of dual abstract concepts within 30 trials of experience with the stimuli, and it currently remains untested whether primate brains can actually acquire such complex information so rapidly.
Given the highly reduced size of the insect brain and the evidence of relationship learning that, until recently, was thought to require large mammalian brains [19] [21] [22], understanding the mechanisms of such processing in a miniature brain promises to be an insightful tool for bio-inspired software applications in complex and challenging environments.

4. Challenging Climatic Conditions (Wind)

Flying UAVs is, by current standards, very achievable in simple, well controlled environments. However, the conditions for flying in complex natural environments can be challenging, even for insects [23]. Insects reside in the lowest region of the atmosphere, known as the Atmospheric Boundary Layer (ABL), where the spatial and wind environment can be very complex [24]. During flight insects routinely have to call upon fine motor skills to execute rapid maneuvers to slalom past obstacles like trees, leaves, branches, etc. that lie in their flight path. The wind environment insects generally fly in is also very challenging, as the wind speed and direction can change rapidly within the ABL [25] [26]. While these conditions are very unfavorable for flight, insects are seemingly capable of flying stably and making accurate decisions. Recent work on the flight performance of bumblebees highlighted their flight prowess in very turbulent wind [3]. Though the bumblebees were clearly influenced by unsteady winds and experienced increased fluctuations in flight speed and body orientation, the bees were exceptional at mitigating the influence of wind turbulence through different flight control strategies. Some of these strategies included changing wing kinematics, actively changing body orientation and/or changing the angle of the abdomen with respect to the body [3] [27]. Similar observations have been made on hawk moths flying in unsteady winds [28], and orchid bees have also been shown to deploy their legs when flying in turbulent environments [25]. While these experiments suggest that insects do encode time-resolved wind information during flight and use it as part of a closed-loop flight control system, there exists limited information on the mechanistic underpinning of such a system. Our understanding of the influence of unsteady wind on insect flight performance, and of the various flight control strategies that insects employ, is very limited.

The sensory processing demands for stable flight in unsteady wind can also have a significant influence on foraging efficiency and other aspects of behavior. One study tested individual bumblebees (Bombus terrestris) that had to learn a perceptually difficult color discrimination task, which required appetitive-aversive differential conditioning to learn and has been implicated in attentional processes for bees. The bees were subsequently tested on their capacity to solve the visual discrimination problem when 6 m/s air puffs were delivered, and this condition caused a significant reduction in the accuracy of decisions for the difficult visual task, suggesting the possibility of links between different perceptual dimensions of vision and tactile wind perception [29].

It is very likely that insects rely on information from a variety of sensory organs and motor controls to enable stable flight. Honeybees and a number of other insects have been shown to rely heavily on vision for various tasks, including navigation using visual odometry [30] and landmark learning [31] [32], predator avoidance [33], and detecting or discriminating between various nutritional resources [4]. While the visual system is very stable and robust, it requires significant processing resources and consequently involves high latency in sensory processing (> 60 ms) [34]. The role of vision in flight behaviors occurring over longer time-scales has been well established; however, the role of vision in maneuvers occurring over short time-scales, e.g. a rapid evasive maneuver or maintaining stable flight in turbulence, is still unclear. Insects may potentially rely on a host of other sensors, including tactile sensors, that augment the sensory information provided by the visual system. Though tactile sensors are generally rate based and are susceptible to noise and drift, they have negligible latency and may hence serve as a viable sensory platform for rapid responses [35]. An understanding of the tactile sensory system in honeybees and its functional role in flight might provide insight into the mechanisms that enable them to be such exceptional flyers.
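
For readers approaching this trade-off from the engineering side, one common way to combine a fast but drifting rate sensor with a slow but stable reference is a complementary filter. The Python sketch below is an illustration of that general technique under stated assumptions, not a model of insect neural processing: the function names, the blending weight `alpha`, the time step and the simulated sensor values are all hypothetical.

```python
def complementary_filter(rates, slow_readings, dt, alpha=0.98, initial=0.0):
    """Blend an integrated fast rate signal with a slow absolute reading.

    rates: fast, rate-based samples (tactile-like channel, prone to drift)
    slow_readings: stable absolute samples (vision-like channel)
    alpha: assumed weight on the fast channel at each step
    """
    estimate = initial
    history = []
    for rate, slow in zip(rates, slow_readings):
        predicted = estimate + rate * dt          # fast update from the rate sensor
        estimate = alpha * predicted + (1.0 - alpha) * slow  # slow correction
        history.append(estimate)
    return history

def integrate_only(rates, dt, initial=0.0):
    """Integrate the rate signal alone, to show how uncorrected drift grows."""
    estimate = initial
    history = []
    for rate in rates:
        estimate += rate * dt
        history.append(estimate)
    return history

# Hypothetical scenario: the true state stays at 1.0, the rate sensor reports
# a constant bias of 0.1 per second, and the slow channel reads 1.0 throughout.
steps, dt = 1000, 0.01
rates = [0.1] * steps
slow = [1.0] * steps
fused = complementary_filter(rates, slow, dt, initial=1.0)
drifted = integrate_only(rates, dt, initial=1.0)
print(round(fused[-1], 3), round(drifted[-1], 3))
```

In this toy scenario the integrated rate signal drifts steadily away from the true value, whereas the blended estimate stays close to it; a delayed slow channel (for example, 60 ms of visual latency) could be represented by shifting `slow_readings` before passing it in.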

5. Inside the Black Box

To understand how bees can learn complex visual tasks with a miniature brain, and the possible implications for software architecture, it is useful to understand how information is processed in the brain. Visual signals are initially sensed by two compound eyes [4], each of which contains ommatidia that have cells maximally sensitive to different wavelengths of light (350 nm UV; 440 nm Blue; 540 nm Green). From early on in the visual process there is image segregation, where the Green signals are rapidly processed in the lamina, facilitating fast achromatic motion processing [36]. The lamina neurons also project to the medulla, and signals from the UV and Blue receptors are first processed in the medulla, which contains broad-band, narrow-band and color opponent neurons. Here image segregation may route information along ‘hard wired’ pathways facilitating fast learning of simple problems, or along pathways capable of tuning that may assist learning of complex problems and that project into structures including the mushroom body of the bee brain [4]. Interestingly, the mushroom body receives input from visual and olfactory sensors, and appears to be a multimodal integration processor, which may explain the multisensory rule learning capacities of bees described above [8] [37]-[39].

Recent research suggests that fruit flies integrate tactile and visual stimuli as a means of controlling flight speed in uneven wind conditions [34]. It is therefore reasonable to propose that multimodal processing of visual and tactile signals is present in hymenopterans as well. Even though the ability of bees to maneuver in turbulent wind [3] and an effect of air flow on color discrimination by bumblebees [29] have been demonstrated, there is currently little understanding of multisensory processing for visual and tactile sensors that might help explain these observations. Below we discuss a plausible experiment that may reveal the presence of such a capacity and give insight into what mechanisms bees may have evolved to enable flight and decision making in complex natural environments.

6. Multi-Sensory Processing in Free Flying Insects

Interestingly, to make accurate visual discriminations or decisions bees have to be in a free flying condition [40]. Given the evidence that bees can learn complex visual information well, it becomes interesting to know how bees might use multisensory processing to help with potential uncertainty in complex environments. In a natural context this might include flying between similar flower colors of different species that provide ambiguous visual information, but that may additionally have olfactory cues that help a bee disentangle signals to identify the most rewarding flowers from which to collect nutrition [41]. The processing of visual and olfactory information in the bee brain can be linked by rules, since honeybees that have learnt to fly into a Y-maze and solve a complex delayed matching to sample task with visual information can transfer the acquired information to quickly solve a novel delayed matching to sample problem with olfactory stimuli [42]. This demonstrates that the bee brain can apply learnt rules across these two dimensions of perception. As discussed above, such information is likely to involve processing in the mushroom body of bees, as this region of the brain receives multiple sensory inputs and has been implicated as an integration processor of information [8].

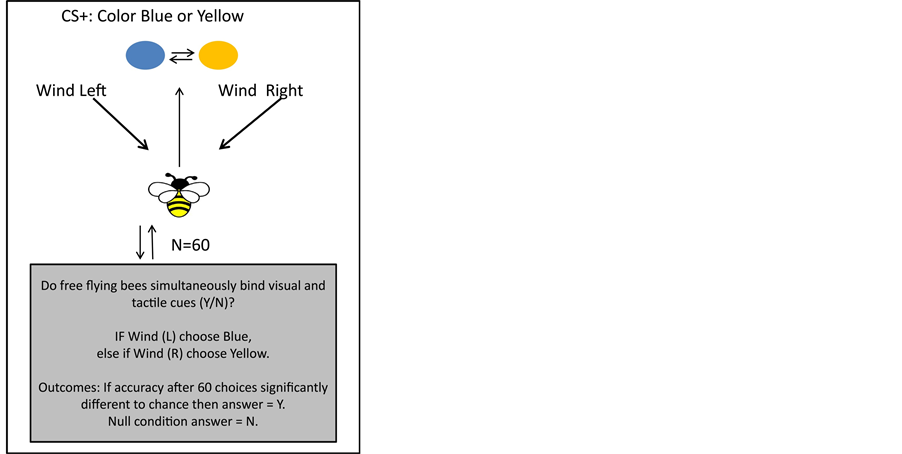

To test how tactile and visual information may be linked, we propose that future work could consider bees flying in a wind tunnel in which the wind direction is modulated to come from either the right- or left-hand side as a bee approaches a dual choice visual task. If, for example, the wind is from the right, the bee would have to learn to choose a “yellow” color, but if the wind is from the left-hand side the bee would have to choose a “blue” color, in a counterbalanced experimental design. Only one color (blue or yellow) is correct in a given trial (50% chance), and to make a correct choice the bee must bind the tactile and color information. Between trials, position cues are randomized. If bees can learn this task to a level significantly different from chance expectation after 60 trials, it would suggest that the bee brain has evolved mechanisms to simultaneously bind visual and tactile information. The null outcome, in which bees do not learn the task, would suggest that the information is handled independently during flight. Either outcome would be informative for software engineering in understanding which sensory channels should be combined for UAV decision making in complex environments (Figure 1).

This is not a trivial task, as it requires the bee brain to potentially link the different perceptions. If bees can learn to solve this task it will mean that there has been evolutionary pressure to evolve connecting mechanisms between these sensory inputs, but if bees cannot learn the task it would mean that simultaneously processing both tactile and visual information may not be important for flying in complex environments. Either way, such results are likely to be of high value for understanding how to integrate different sensory information in flight systems for machine vision that might operate in complex environments, and should be accounted for and included as an integral part of the control strategy of any autonomous flight program implemented in flying UAVs.

Figure 1. Bees trained in a wind tunnel fly towards and choose between Yellow and Blue stimuli, where each correct choice (Conditioned Stimulus: CS+) requires perception of the wind direction (Left/Right) in a given trial.
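
The logic of the proposed experiment can be sketched in Python as a counterbalanced trial schedule plus a simple test against the 50% chance level. This is a minimal sketch under assumptions: the wind-to-color contingency follows the example given above, but the data layout, the function names and the choice of a one-sided exact binomial test (which treats trials as independent) are hypothetical, and a real analysis may well call for a different statistical model.

```python
import random
from math import comb

# Contingency from the example above: wind from the right -> yellow,
# wind from the left -> blue.
RULE = {"right": "yellow", "left": "blue"}

def make_schedule(n_trials=60, seed=0):
    """Return a shuffled schedule with equal numbers of left and right wind trials.

    Assumes n_trials is even; the dictionary layout is a hypothetical choice.
    """
    rng = random.Random(seed)
    winds = ["right", "left"] * (n_trials // 2)
    rng.shuffle(winds)
    return [
        {
            "wind": wind,
            "yellow_side": rng.choice(["left", "right"]),  # position cue randomized
            "correct": RULE[wind],
        }
        for wind in winds
    ]

def p_value_above_chance(n_correct, n_trials, chance=0.5):
    """Probability of at least n_correct correct choices if the bee were guessing."""
    return sum(
        comb(n_trials, k) * chance**k * (1.0 - chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )

schedule = make_schedule(60, seed=1)
# Hypothetical outcome for illustration only: 39 correct choices in 60 trials.
print(p_value_above_chance(39, 60) < 0.05)
```

Because the correct color depends only on the wind direction and the stimulus positions are randomized, neither color nor side alone predicts the reward; only the combination of the two cues does.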

Acknowledgements

JEG was supported by an RMIT University Research and Innovation Post-Doctoral Scholarship.

References

- Giurfa, M., Vorobyev, M., Kevan, P. and Menzel, R. (1996) Detection of Coloured Stimuli by Honeybees: Minimum Visual Angles and Receptor Specific Contrasts. Journal of Comparative Physiology A: Neuroethology, Sensory, Neural, and Behavioral Physiology, 178, 699-709. http://dx.doi.org/10.1007/BF00227381

- Vuong, Q.C. and Tarr, M.J. (2004) Rotation Direction Affects Object Recognition. Vision Research, 44, 1717-1730. http://dx.doi.org/10.1016/j.visres.2004.02.002

- Ravi, S., Crall, J.D., Fisher, A. and Combes, S.A. (2013) Rolling with the Flow: Bumblebees Flying in Unsteady Wakes. Journal of Experimental Biology, 216, 4299-4309. http://dx.doi.org/10.1242/jeb.090845

- Dyer, A.G., Paulk, A.C. and Reser, D.H. (2011) Colour Processing in Complex Environments: Insights from the Visual System of Bees. Proceedings of the Royal Society B: Biological Sciences, 278, 952-959. http://dx.doi.org/10.1098/rspb.2010.2412

- Dyer, A.G., Spaethe, J. and Prack, S. (2008) Comparative Psychophysics of Bumblebee and Honeybee Colour Discrimination and Object Detection. Journal of Comparative Physiology A: Neuroethology, Sensory, Neural, and Behavioral Physiology, 194, 617-627. http://dx.doi.org/10.1007/s00359-008-0335-1

- Dyer, A.G. and Griffiths, D.W. (2012) Seeing Near and Seeing Far; Behavioural Evidence for Dual Mechanisms of Pattern Vision in the Honeybee (Apis mellifera). The Journal of Experimental Biology, 215, 397-404. http://dx.doi.org/10.1242/jeb.060954

- Avarguès-Weber, A., d’Amaro, D., Metzler, M. and Dyer, A.G. (2014) Conceptualization of Relative Size by Honeybees. Frontiers in Behavioral Neuroscience, 8, 80. http://dx.doi.org/10.3389/fnbeh.2014.00080

- Avarguès-Weber, A. and Giurfa, M. (2013) Conceptual Learning by Miniature Brains. Proceedings of the Royal Society B: Biological Sciences, 280, Article ID: 20131907.

- Horridge, A. (2000) Seven Experiments on Pattern Vision of the Honeybee, with a Model. Vision Research, 40, 2589-2603. http://dx.doi.org/10.1016/S0042-6989(00)00096-1

- Avarguès-Weber, A., de Brito Sanchez, M.G., Giurfa, M. and Dyer, A.G. (2010) Aversive Reinforcement Improves Visual Discrimination Learning in Free-Flying Honeybees. PLoS ONE, 5, Article ID: e15370.

- Avarguès-Weber, A., Portelli, G., Benard, J., Dyer, A. and Giurfa, M. (2010) Configural Processing Enables Discrimination and Categorization of Face-Like Stimuli in Honeybees. Journal of Experimental Biology, 213, 593-601.

- Giurfa, M., Hammer, M., Stach, S., Stollhoff, N., Müller-Deisig, N. and Mizyrycki, C. (1999) Pattern Learning by Honeybees: Conditioning Procedure and Recognition Strategy. Animal Behaviour, 57, 315-324. http://dx.doi.org/10.1006/anbe.1998.0957

- Giurfa, M., Schubert, M., Reisenman, C., Gerber, B. and Lachnit, H. (2003) The Effect of Cumulative Experience on the Use of Elemental and Configural Visual Discrimination Strategies in Honeybees. Behavioural Brain Research, 145, 161-169. http://dx.doi.org/10.1016/S0166-4328(03)00104-9

- Stach, S., Benard, J. and Giurfa, M. (2004) Local-Feature Assembling in Visual Pattern Recognition and Generalization in Honeybees. Nature, 429, 758-761.

- Stach, S. and Giurfa, M. (2005) The Influence of Training Length on Generalization of Visual Feature Assemblies in Honeybees. Behavioural Brain Research, 161, 8-17. http://dx.doi.org/10.1016/j.bbr.2005.02.008

- Chittka, L., Dyer, A.G., Bock, F. and Dornhaus, A. (2003) Psychophysics: Bees Trade off Foraging Speed for Accuracy. Nature, 424, 388. http://dx.doi.org/10.1038/424388a

- Quinn, P.C., Polly, J.L., Furer, M.J., Dobson, V. and Narter, D.B. (2002) Young Infants’ Performance in the Object-Variation Version of the Above-Below Categorization Task: A Result of Perceptual Distraction or Conceptual Limitation? Infancy, 3, 323-347. http://dx.doi.org/10.1207/S15327078IN0303_3

- Spinozzi, G., Lubrano, G. and Truppa, V. (2004) Categorization of Above and Below Spatial Relations by Tufted Capuchin Monkeys (Cebus apella). Journal of Comparative Psychology, 118, 403-412. http://dx.doi.org/10.1037/0735-7036.118.4.403

- Chittka, L. and Jensen, K. (2011) Animal Cognition: Concepts from Apes to Bees. Current Biology, 21, R116-R119. http://dx.doi.org/10.1016/j.cub.2010.12.045

- Avarguès-Weber, A., Dyer, A.G., Combe, M. and Giurfa, M. (2012) Simultaneous Mastering of Two Abstract Concepts by the Miniature Brain of Bees. Proceedings of the National Academy of Sciences of the United States of America, 109, 7481-7486. http://dx.doi.org/10.1073/pnas.1202576109

- Chittka, L. and Dyer, A. (2012) Cognition: Your Face Looks Familiar. Nature, 481, 154-155. http://dx.doi.org/10.1038/481154a

- Chittka, L. and Niven, J. (2009) Are Bigger Brains Better? Current Biology, 19, R995-R1008. http://dx.doi.org/10.1016/j.cub.2009.08.023

- Vicens, N. and Bosch, J. (2000) Weather-Dependent Pollinator Activity in an Apple Orchard, with Special Reference to Osmia cornuta and Apis mellifera (Hymenoptera: Megachilidae and Apidae). Environmental Entomology, 29, 413-420. http://dx.doi.org/10.1603/0046-225X-29.3.413

- Stull, R.B. (1988) An Introduction to Boundary Layer Meteorology. Springer, Berlin. http://dx.doi.org/10.1007/978-94-009-3027-8

- Combes, S.A. and Dudley, R. (2009) Turbulence-Driven Instabilities Limit Insect Flight Performance. Proceedings of the National Academy of Sciences of the United States of America, 106, 9105-9108. http://dx.doi.org/10.1073/pnas.0902186106

- Watkins, S., Milbank, J., Loxton, B.J. and Melbourne, W.H. (2006) Atmospheric Winds and Their Implications for Microair Vehicles. AIAA Journal, 44, 2591-2600. http://dx.doi.org/10.2514/1.22670

- Luu, T., Cheung, A., Ball, D. and Srinivasan, M.V. (2011) Honeybee Flight: A Novel ‘Streamlining’ Response. Journal of Experimental Biology, 214, 2215-2225. http://dx.doi.org/10.1242/jeb.050310

- Ortega-Jimenez, V.M., Greeter, J.S.M., Mittal, R. and Hedrick, T.L. (2013) Hawkmoth Flight Stability in Turbulent Vortex Streets. Journal of Experimental Biology, 216, 4567-4579. http://dx.doi.org/10.1242/jeb.089672

- Dyer, A.G. (2007) Windy Condition Affected Colour Discrimination in Bumblebees (Hymenoptera: Apidae: Bombus). Entomologia Generalis, 30, 165-166.

- Srinivasan, M.V., Zhang, S., Altwein, M. and Tautz, J. (2000) Honeybee Navigation: Nature and Calibration of the “Odometer”. Science, 287, 851-853. http://dx.doi.org/10.1126/science.287.5454.851

- Vladusich, T., Hemmi, J.M., Srinivasan, M.V. and Zeil, J. (2005) Interactions of Visual Odometry and Landmark Guidance during Food Search in Honeybees. Journal of Experimental Biology, 208, 4123-4135. http://dx.doi.org/10.1242/jeb.01880

- Chittka, L. and Geiger, K. (1995) Can Honey Bees Count Landmarks? Animal Behaviour, 49, 159-164. http://dx.doi.org/10.1016/0003-3472(95)80163-4

- Heiling, A.M., Herberstein, M.E. and Chittka, L. (2003) Pollinator Attraction: Crab-Spiders Manipulate Flower Signals. Nature, 421, 334. http://dx.doi.org/10.1038/421334a

- Fuller, S.B., Straw, A., Peek, M., Murray, R. and Dickinson, M. (2014) Flying Drosophila Stabilize Their Vision-Based Velocity Controller by Sensing Wind with Their Antennae. Proceedings of the National Academy of Sciences of the United States of America, 111, E1182-E1191. http://dx.doi.org/10.1073/pnas.1323529111

- Dickinson, M.H. (2014) Death Valley, Drosophila, and the Devonian Toolkit. Annual Review of Entomology, 59, 51-72.

- Skorupski, P. and Chittka, L. (2010) Differences in Photoreceptor Processing Speed for Chromatic and Achromatic Vision in the Bumblebee, Bombus terrestris. Journal of Neuroscience, 30, 3896-3903. http://dx.doi.org/10.1523/JNEUROSCI.5700-09.2010

- Ehmer, B. and Gronenberg, W. (2002) Segregation of Visual Input to the Mushroom Bodies in the Honeybee (Apis mellifera). Journal of Comparative Neurology, 451, 362-373. http://dx.doi.org/10.1002/cne.10355

- Grünewald, B. (1999) Physiological Properties and Response Modulations of Mushroom Body Feedback Neurons during Olfactory Learning in the Honeybee, Apis mellifera. Journal of Comparative Physiology A, 185, 565-576. http://dx.doi.org/10.1007/s003590050417

- Leonard, A. and Masek, P. (2014) Multisensory Integration of Colors and Scents: Insights from Bees and Flowers. Journal of Comparative Physiology A, Epub Ahead of Print. http://dx.doi.org/10.1007/s00359-014-0904-4

- Niggebrugge, C., Leboulle, G., Menzel, R., Komischke, B. and de Ibarra, N.H. (2009) Fast Learning but Coarse Discrimination of Colours in Restrained Honeybees. Journal of Experimental Biology, 212, 1344-1350. http://dx.doi.org/10.1242/jeb.021881

- Giurfa, M., Núñez, J. and Backhaus, W. (1994) Odour and Colour Information in the Foraging Choice Behaviour of the Honeybee. Journal of Comparative Physiology A, 175, 773-779. http://dx.doi.org/10.1007/BF00191849

- Giurfa, M., Zhang, S., Jenett, A., Menzel, R. and Srinivasan, M.V. (2001) The Concepts of “Sameness” and “Difference” in an Insect. Nature, 410, 930-933. http://dx.doi.org/10.1038/35073582