Journal of Software Engineering and Applications

Vol. 5 No. 11A (2012) , Article ID: 25185 , 8 pages DOI:10.4236/jsea.2012.531109

Reasoning about Context Information in Cloud Computing Environments

![]()

1Faculty of Information Technology and Business, Cyber University, Japan; 2Graduate School of Human Sciences, Waseda University, Tokyo, Japan.

Email: drnkamide08@kpd.biglobe.ne.jp, syuisui@gmail.com

Received September 9th, 2012; revised October 8th, 2012; accepted October 17th, 2012

Keywords: Context Information; Context-Mixture Rule; Sequent Calculus; Resource-Sensitive Reasoning; Context-Aware Reasoning

ABSTRACT

The notion of context provides flexibility and adaptation to cloud computing services. Location, time identity and activity of users are examples of primary context types. The motivation of this paper is to formalize reasoning about context information in cloud computing environments. To formalize such context-aware reasoning, the logic LCM of context-mixture is introduced based on a Gentzen-type sequent calculus for an extended resource-sensitive logic. LCM has a specific inference rule called the context-mixture rule, which can naturally represent a mechanism for merging formulas with context information. Moreover, LCM has a specific modal operator called the sequence modal operator, which can suitably represent context information. The cut-elimination and embedding theorems for LCM are proved, and a fragment of LCM is shown to be decidable. These theoretical results are intended to provide a logical justification of context-aware cloud computing service models such as a flowable service model.

1. Introduction

1.1. Contexts in Cloud Computing Environments

The motivation of this paper is to formalize reasoning about context information in cloud computing environments. To formalize such context-aware reasoning, the logic LCM of context-mixture is introduced as a Gentzentype sequent calculus based on linear logic [1,2], which is known to be a useful resource-sensitive logic. LCM has a specific inference rule called the context-mixture rule and a specific modal operator called the sequence modal operator [3,4]. The cut-elimination and embedding theorems for LCM are proved as the main results of this paper. A fragment of LCM is also shown to be decidable. These theoretical results are intended to provide a concrete logical justification of context-aware cloud computing service models such as a flowable service model [5,6].

The definitions of cloud computing, including on-demand, pay-by-use, virtualized and dynamically-scalable, imply the characteristics of cloud computing environments [7,8]. Cloud-related issues have been discussed and studied based on the notion of contexts which include location, time, identity and activity of users. The use of context is known to be very important in cloud and ubiquitous computing.

There is a widely accepted definition of context [9]:

Context is any information that can be used to characterize the situation of an entity. An entity is a person, place, or object that is considered relevant to the interaction between the user and application, including the user and application themselves.

Location, time, identity and activity are primary context types for characterizing the situation of a particular entity. Contexts can be classified into three categories [6]: nature context, human context and culture context. In the present paper, nature context is especially considered. Nature context includes when (time context) and where (location or space context) information.

1.2. Flowable Services

Context provides flexibility and adaptation to services. A flowable service, which is a new notion of context-aware cloud computing services, is a logical stream that organizes and provides circumjacent services in such a way that they are perceived by individuals as those naturally embedded in their surrounding environments [5,6,10-14]. A flow of service is a metaphor for a subconsciously controlled navigation that guides the user through fulfillment of a flowable service process that fits the user’s context and situation and runs smoothly with unbroken continuity in an unobtrusive and supportive way. Flowable services can be useful to context-aware cloud computing applications such as Cloud Campus which is the e-learning environment of Cyber University in Japan.

The original intention of the flowable service model is to apply resources in open cloud environments [5,6,10- 14]. The model uses intensifying context information to adjust services flow to be more usable. The model shares resources or services fairly and to utmost extent. To formalize reasoning about the context-aware flowable service model in open cloud computing environments, we need an appropriate logic that can represent the following three items:

1) Context-mixture rule;

2) Resource-sensitive reasoning;

3) Context information.

1.3. Context-Mixture Rule

In this paper, the logic LCM of context-mixture, which can represent the above three items (context-mixture rule, resource-sensitive reasoning and context information), is introduced as a Gentzen-type sequent calculus based on linear logic. LCM has a specific inference rule called the context-mixture rule, which can naturally represent a mechanism for merging formulas with context information.

Merging formulas with context information, which represents an interaction between different context information, is required for suitable representation of context-aware flowable services, since to handle various kinds of context information is an important issue for flowable services. We call here such a merging mechanism context-mixture.

The notion of context-mixture is also important for representing deployment models in cloud computing environments [8]. Deployment models are classified as private cloud, community cloud, public cloud and hybrid cloud. The cloud infrastructure of hybrid cloud is a “mixture” (or composition) of two or more distinct cloud infrastructures (private, community or public) that remain unique entities, but are bound together by standardized or proprietary technology that enables data and application portability (e.g., cloud bursting for load balancing between clouds).

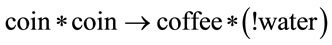

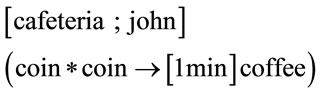

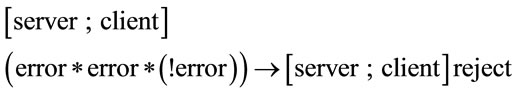

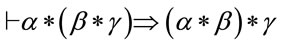

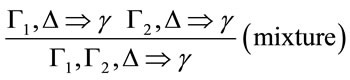

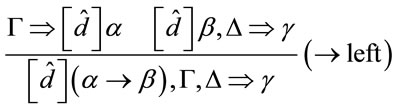

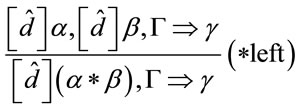

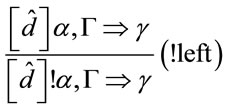

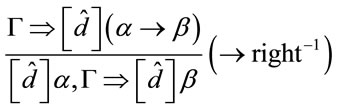

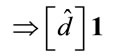

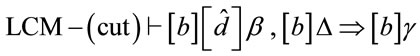

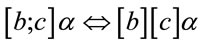

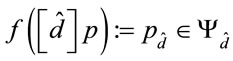

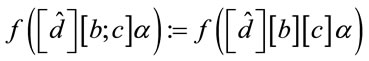

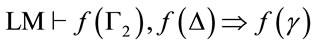

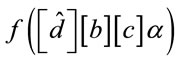

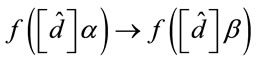

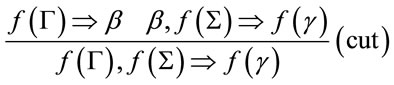

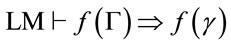

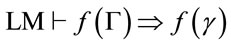

The context-mixture rule of LCM is of the form:

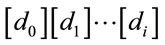

where the multisets  and

and  of formulas with context information are mixed by this rule.

of formulas with context information are mixed by this rule.

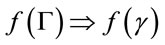

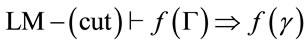

The rule (mixture) was introduce in [15], and was called the mingle rule. The name “mingle” was from the originnal version [16] of the mingle rule:

This original rule and the corresponding Hilbert-style axiom scheme  have been studied in formalizing “relevant” human reasoning [16,17], grammatical reasoning [18] and reasoning about communicationmerge in process algebras [15].

have been studied in formalizing “relevant” human reasoning [16,17], grammatical reasoning [18] and reasoning about communicationmerge in process algebras [15].

As presented in [15], the context-mixture rule has been used for representing communication-merge in process algebras. This is also justified for the present study, since there is the slogan “context-as-process” presented in [19]: We must consider “context as a part of process of interacting with an ever-changing environment that is composed of reconfigurable, migratory, distributed and multiscale resources” because it is “not simply the state of a predefined environment with a fixed set of interaction resources”.

1.4. Resource-Sensitive Reasoning

The logic LCM of context-mixture is obtained from linear logic [1,2] by adding the context-mixture rule (mixture) and a sequence modal operator, which represents a sequence of symbols. By the sequence modal operator in LCM, we can appropriately express “context information” in “resource-sensitive reasoning”.

The notion of “resources”, encompassing concepts such as processor time, memory, cost of components and energy requirements, is fundamental to computational systems. This notion is also very important for handling efficient resource management in cloud computing environments [20]. Examples of resources in cloud computing environments include storage, processing, memory, and network bandwidth [8].

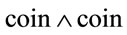

Linear logic can elegantly represent the notion of “resources” [1]. In linear logic, the concept of “resource consumption” can be represented by using the linear implication connective  and the fusion connective, and the concept of “reusable resource” can be represented by using the linear exponential operator

and the fusion connective, and the concept of “reusable resource” can be represented by using the linear exponential operator . A typical example formula is:

. A typical example formula is:

This example means “if we spend two coins, then we can have a cup of coffee and as much of water as we like” when the price of coffee is two coins and water is free. It is to be noted that this example cannot be expressed using classical logic, since the formula  (two coins) in classical logic is logically equivalent to coin (one coin), i.e., classical logic has no resource-awareness.

(two coins) in classical logic is logically equivalent to coin (one coin), i.e., classical logic has no resource-awareness.

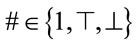

1.5. Context Information

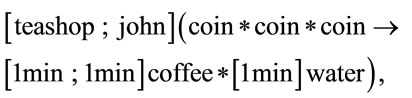

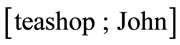

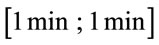

In order to discuss certain real and practical examples, the resource descriptions in linear logic should be more fine-grained and expressive and capable of conveying context information. For example, the following expressions may be necessary for some practical situations:

These examples respectively mean “in a teashop, if John spends three coins, then he can have a cup of coffee after two minutes and a cup of water after one minute,” and “in a cafeteria, if John expends two coins, then he can have a cup of coffee after one minute.” In these examples, the expressions ,

,  ,

,  and

and , which are regarded as “context information”, can naturally be represented by the sequence modal operator in LCM.

, which are regarded as “context information”, can naturally be represented by the sequence modal operator in LCM.

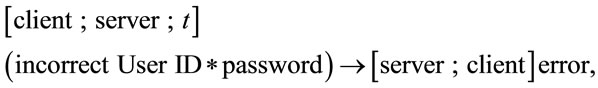

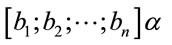

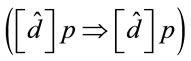

As presented in [4], the following expressions are available in a subsystem of LCM:

which respectively mean:

“if a client sends an incorrect user ID and a correct password to login to a server at the  -th login attempt, then the server returns an error message to the client.”

-th login attempt, then the server returns an error message to the client.”

“if a server returns the error messages more than twice to a client, then the server returns the password reject message to the client.”

Note that the error messages are expressed as a “resource” by using the connectives  and

and , and the “information” on servers, clients, and login-attempts is expressed by the sequence modal operator.

, and the “information” on servers, clients, and login-attempts is expressed by the sequence modal operator.

1.6. Informational Interpretation

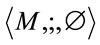

The reason underlying the use of the notion of “sequences” in the sequence modal operator is explained below. The notion of “sequences” is fundamental to practical reasoning in computer science because it can appropriately represent “data sequences”, “program-execution sequences”, “action sequences”, “time sequences” etc. The notion of sequences is thus useful to represent the notions of “information”, “attributes”, “trees”, “or-ders”, “preferences” and “ontologies”. To represent “context information” by sequences is especially suitable because a sequence structure gives a monoid  with informational interpretation [21]:

with informational interpretation [21]:

1) M is a set of pieces of (ordered or prioritized) information (i.e., a set of sequences);

2) ; is a binary operator (on M) that combines two pieces of information (i.e., a concatenation operator on sequences);

3)  is the empty piece of information (i.e., the empty sequence).

is the empty piece of information (i.e., the empty sequence).

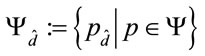

Based upon the informational interpretation, a formula of the form  intuitively means that “α is true based on a sequence

intuitively means that “α is true based on a sequence  of (ordered or prioritized) information pieces.” Further, a formula of the form

of (ordered or prioritized) information pieces.” Further, a formula of the form , which coincides with α, intuitively means that “α is true without any information (i.e., it is an eternal truth in the sense of classical logic).”

, which coincides with α, intuitively means that “α is true without any information (i.e., it is an eternal truth in the sense of classical logic).”

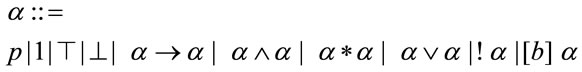

2. The Logic LCM of Context-Mixture

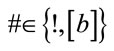

Prior to the precise discussion, the language of the proposed logic is introduced below. Formulas are constructed from propositional variables, 1 (multiplicative truth constant),  (additive truth constant),

(additive truth constant),  (additive falsity constant),

(additive falsity constant),  (implication),

(implication),  (conjunction),

(conjunction),  (fusion),

(fusion),  (disjunction),

(disjunction),  (exponential), and

(exponential), and  (sequence modal operator) where

(sequence modal operator) where  is a sequence. Sequences are constructed from atomic sequences,

is a sequence. Sequences are constructed from atomic sequences,  (empty sequence) and; (composition). Lower-case letters

(empty sequence) and; (composition). Lower-case letters  are used for sequences, lower-case letters

are used for sequences, lower-case letters  are used for propositional variables, Greek lower-case letters

are used for propositional variables, Greek lower-case letters  are used for formulas, and Greek capital letters

are used for formulas, and Greek capital letters  are used for finite (possibly empty) multisets of formulas. For any

are used for finite (possibly empty) multisets of formulas. For any , an expression

, an expression  is used to denote the multiset

is used to denote the multiset  . The symbol

. The symbol  is used to denote the equality of sequences (or multisets) of symbols. An expression

is used to denote the equality of sequences (or multisets) of symbols. An expression  means

means , and expressions

, and expressions  and

and  mean

mean . A sequent is an expression of the form

. A sequent is an expression of the form  where

where  is nonempty. It is assumed that the terminological conventions regarding sequents (e.g., antecedent and succedent) are the usual ones. If a sequent S is provable in a sequent calculus L, then such a fact is denoted as

is nonempty. It is assumed that the terminological conventions regarding sequents (e.g., antecedent and succedent) are the usual ones. If a sequent S is provable in a sequent calculus L, then such a fact is denoted as  or

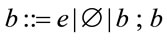

or . The parentheses for

. The parentheses for  is omitted since

is omitted since  is associative, i.e.,

is associative, i.e.,  and

and  for any formulas α, β and γ. A rule R of inference is said to be admissible in a sequent calculus L if the following condition is satisfied: for any instance

for any formulas α, β and γ. A rule R of inference is said to be admissible in a sequent calculus L if the following condition is satisfied: for any instance

of R, if  for all i, then

for all i, then .

.

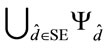

Definition 2.1. Formulas and sequences are defined by the following grammar, assuming p and e represent propositional variables and atomic sequences, respectively:

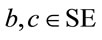

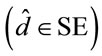

The set of sequences (including the empty sequence) is denoted as SE. An expression  is used to represent

is used to represent  with

with  and

and , i.e.,

, i.e.,  can be the empty sequence. Also, an expression

can be the empty sequence. Also, an expression  is used to represent

is used to represent  with

with  and

and .

.

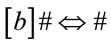

The logic LCM of context-mixture is then introduced below.

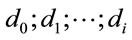

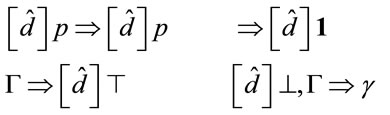

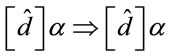

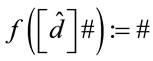

Definition 2.2. The initial sequents of LCM are of the form: for any propositional variable p,

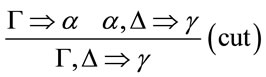

The cut rule of LCM is of the form:

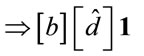

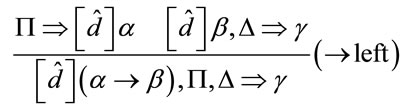

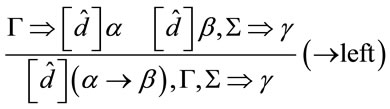

The context-mixture rule of LCM is of the form:

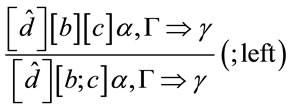

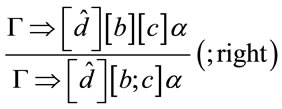

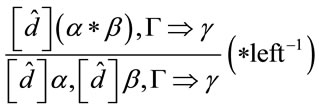

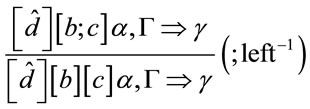

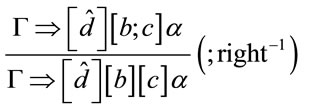

The sequence rules of LCM are of the form:

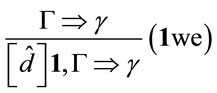

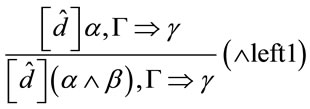

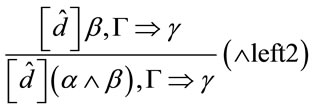

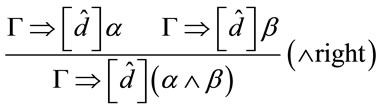

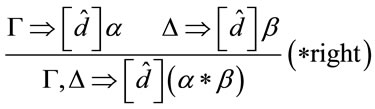

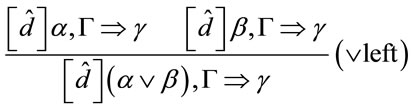

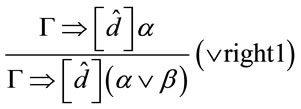

The logical inference rules of LCM are of the form:

It is remarked that Girard’s intuitionistic linear logic ILL is a subsystem of LCM: It is obtained from LCM by deleting (mixture) and the sequence modal operators.

The sequents of the form  for any formula α are provable in cut-free LCM. This fact is shown by induction on α.

for any formula α are provable in cut-free LCM. This fact is shown by induction on α.

The (possibly empty) multiset expression  in (mixture) is needed to show the cut-elimination theorem for an extended linear logic with (mixture) [15].

in (mixture) is needed to show the cut-elimination theorem for an extended linear logic with (mixture) [15].

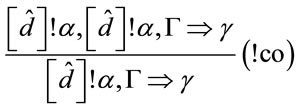

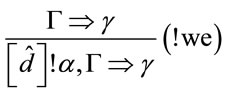

Proposition 2.3. The following rules are admissible in cut-free LCM.

Proof. Straightforward. Here, we show only for the rule ( regu) by induction on the proofs P of

regu) by induction on the proofs P of  in cut-free LCM. We distinguish the cases according to the last inference of P. We show only the following cases.

in cut-free LCM. We distinguish the cases according to the last inference of P. We show only the following cases.

Case ( ): The last inference of P is of the form:

): The last inference of P is of the form: . In this case,

. In this case,  is also an initial sequent.

is also an initial sequent.

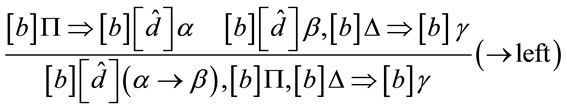

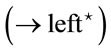

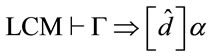

Case ( left): The last inference of P is of the form:

left): The last inference of P is of the form:

By induction hypothesis, we obtain:

and

.

.

We then obtain the required fact:

■

■

An expression  means two sequents

means two sequents  and

and .

.

Proposition 2.4. The following sequents are provable in cut-free LCM: for any formulas  and any

and any 1)

1)  where

where ;

;

2)  where

where ;

;

3) ;

;

4) .

.

3. Some Results on LCM

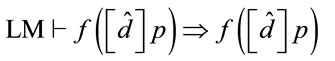

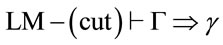

Definition 3.1. LM is obtained from LCM by deleting {(;left), (;right)} and all the expressions as  appearing in the initial sequents and the logical inference rules. The names of the logical inference rules of LM are denoted by labeling “

appearing in the initial sequents and the logical inference rules. The names of the logical inference rules of LM are denoted by labeling “ ” in superscript position, e.g.,

” in superscript position, e.g.,

( ).

).

The logic LM is equivalent to a logic introduced in [15]. Indeed, LM is equivalent to the  -free fragment of MILLm [15] where

-free fragment of MILLm [15] where  is the multiplicative falsity constant. As shown in [15], the cut-elimination theorem holds for LM. This fact will be used to show the cutelimination theorem for LCM. The fact that the

is the multiplicative falsity constant. As shown in [15], the cut-elimination theorem holds for LM. This fact will be used to show the cutelimination theorem for LCM. The fact that the  -free fragment LM

-free fragment LM of LM is decidable will also be used to show the decidability of the

of LM is decidable will also be used to show the decidability of the  -free fragment LCM

-free fragment LCM of LCM.

of LCM.

Definition 3.2. We fix a countable set  of propositional variables, and define the sets

of propositional variables, and define the sets

of propositional variables where

of propositional variables where i.e.,

i.e., . The language (or the set of formulas)

. The language (or the set of formulas)  of LCM is obtained from

of LCM is obtained from ,

,  and

and . The language (or the set of formulas)

. The language (or the set of formulas)  of LM is obtained from

of LM is obtained from ,

,  and

and .

.

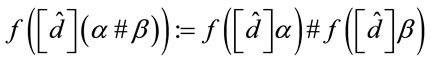

A mapping  from

from  to

to  is defined by:

is defined by:

1) for any ,

, ;

;

2)  where

where ;

;

3)  where

where  ;

;

4) ;

;

5) .

.

Let  be a set of formulas in

be a set of formulas in . Then, an expression

. Then, an expression  means the result of replacing every occurrence of a formula α in

means the result of replacing every occurrence of a formula α in  by an occurrence of

by an occurrence of .

.

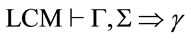

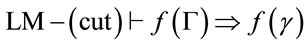

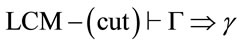

Theorem 3.3. (Embedding) Let  be a multiset of formulas in

be a multiset of formulas in , γ be a formula in

, γ be a formula in , and f be the mapping defined in Definition 3.2. Then:

, and f be the mapping defined in Definition 3.2. Then:

.

.

Proof.  (

( ): By induction on the proofs P of

): By induction on the proofs P of  in LCM. We distinguish the cases according to the last inference of P. We show some cases.

in LCM. We distinguish the cases according to the last inference of P. We show some cases.

Case : The last inference of P is of the form:

: The last inference of P is of the form: . Since

. Since  by the definition of f, we obtain the required fact

by the definition of f, we obtain the required fact

.

.

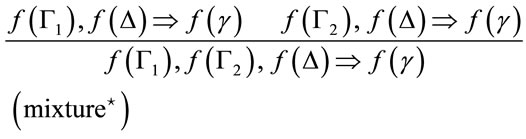

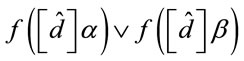

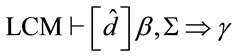

Case (mixture): The last inference of P is of the form:

By induction hypothesis, we have  and

and  . Then, we obtain the required fact:

. Then, we obtain the required fact:

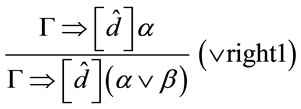

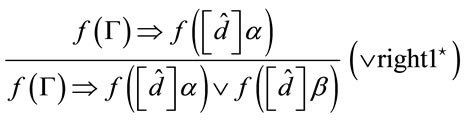

Case ( right1): The last inference of P is of the form:

right1): The last inference of P is of the form:

By induction hypothesis, we have

. Then, we obtain the required fact:

. Then, we obtain the required fact:

where  coincides with

coincides with

by the definition of f.

by the definition of f.

Case (; left): The last inference of P is of the form:

By induction hypothesis, we have

. Then, we obtain the required fact, since

. Then, we obtain the required fact, since  coincides with

coincides with  by the definition of f.

by the definition of f.

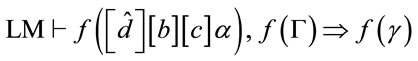

: By induction on the proofs Q of

: By induction on the proofs Q of  in LM. We distinguish the cases according to the last inference of Q. We show some cases.

in LM. We distinguish the cases according to the last inference of Q. We show some cases.

Case : The last inference of Q is of the form:

: The last inference of Q is of the form:

where  coincides with

coincides with

by the definition of f. By induction hypothesis, we have

by the definition of f. By induction hypothesis, we have  and

and

. Then, we obtain the required fact:

. Then, we obtain the required fact:

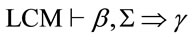

Case (cut): The last inference of Q is of the form:

Since  is in

is in , we can obtain

, we can obtain  by induction on

by induction on . Then, by induction hypothesis, we have

. Then, by induction hypothesis, we have  and

and . We then obtain the required fact

. We then obtain the required fact  by using (cut) in LCM. ■

by using (cut) in LCM. ■

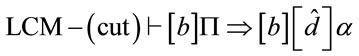

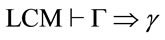

Theorem 3.4. (Cut-elimination) The rule (cut) is admissible in cut-free LCM.

Proof. We have the following modified statements of Theorem 3.3:

1) if , then

, then ;

;

2) if , then

, then  .

.

To show the second statement, we do not need to prove the case for (cut) as in Theorem 3.3.

We now prove the cut-elimination theorem for LCM as follows. Suppose . Then, we have

. Then, we have  by the modified statement 1) of Theorem 3.3, and hence

by the modified statement 1) of Theorem 3.3, and hence  by the cut-elimination theorem for LM. By the modified statement 2) of Theorem 3.3, we obtain

by the cut-elimination theorem for LM. By the modified statement 2) of Theorem 3.3, we obtain  . ■

. ■

Corollary 3.5. (Consistency) LCM is consistent, i.e., the empty sequent  is not provable in cut-free LCM.

is not provable in cut-free LCM.

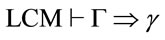

In the following, we show that the  -free fragment LCM

-free fragment LCM of LCM is decidable. Before to show the decidability of LCM

of LCM is decidable. Before to show the decidability of LCM , we mention that LCM is undecidable. ILL is known to be undecidable. The proof of the undecidability of ILL is carried out by encoding Minsky machine. LCM can encode Minsky machine in the same way as in ILL, since LCM is an extension of ILL.

, we mention that LCM is undecidable. ILL is known to be undecidable. The proof of the undecidability of ILL is carried out by encoding Minsky machine. LCM can encode Minsky machine in the same way as in ILL, since LCM is an extension of ILL.

Definition 3.6. LCM is obtained from LCM by deleting {(

is obtained from LCM by deleting {( left), (

left), ( right), (

right), ( co), (

co), ( we)}, i.e., LCM

we)}, i.e., LCM is the

is the  -free fragment of LCM.

-free fragment of LCM.

Definition 3.7. LM is obtained from LCM

is obtained from LCM by deleting {(;left), (;right)} and all the expressions as

by deleting {(;left), (;right)} and all the expressions as  appearing in the initial sequents and the logical inference rules.

appearing in the initial sequents and the logical inference rules.

Theorem 3.8. (Decidability) LCM is decidable.

is decidable.

Proof. The provability of LCM can be transformed into that of LM

can be transformed into that of LM by the restriction of Theorem 3.3. Since LM

by the restriction of Theorem 3.3. Since LM is decidable, LCM

is decidable, LCM is also decidable. ■

is also decidable. ■

4. Conclusions

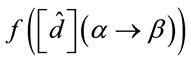

In this paper, the logic LCM of context-mixture, which can suitably express context information in cloud computting environments, was introduced. The cut-elimination and embedding theorems for LCM were proved, and the !-free fragment of LCM was shown to be decidable. LCM is based on an extended resource-sensitive (intuitionistic linear) logic with both the context-mixture rule (mixture) and the sequence modal operator [b]. The rule (mixture) of LCM can suitably represent a mechanism for merging formulas with context information, and the operator [b] of LCM can represent context information. A concrete logical foundation of reasoning about context information in cloud computing environments was thus obtained in this paper. Some technical remarks on LCM and some related works on context-aware modeling are addressed in the rest of this paper.

It is remarked that the sequence modal operator in LCM can be adapted to a wide range of non-classical logics. An extended intuitionistic linear logic with the sequence modal operator but without the context-mixture rule was shown to be useful for describing secure password authentication protocols [4]. An extended full computation-tree logic with the sequence modal operator was shown to be applicable to certain ontological descriptions [3]. An extended linear-time temporal logic with the sequence modal operator was shown to be useful for specifying some time-dependent secure authentication systems [22,23]. The sequence modal operator may be applicable to other useful non-classical logics, e.g., some extended linear logics [24,25].

The present paper was intended to provide a logical justification of context-aware cloud computing service models (such as a flowable service model) in cloud computing environments. We now give a survey of such context-aware model approaches. Context is used to challenge various issues in cloud and ubiquitous environments. Many context models have been proposed and developed: A key-value model, a markup model, an object-oriented model, and an ontology-based model (see [26] for a survey). Since location is one of the most typical context information, the location context involves special models: Geometric models, symbolic models and hybrid models. Wohltorf et al. [27] introduced a context-awareness module which combines three sub-modules: The location-based service module, the personalization module and the device and network independence module. This work introduced an agent-based serviceware framework to assist service providers in developing innovative services. Gu et al. [28] proposed an ontologybased context model which is based on the OWL inside the SOCAM (Service-Oriented Context-Aware Middleware) architecture. Coutaz et al. [19] proposed a conceptual framework for context-aware computing, including ontological and architectural foundations. In this method, the context is modeled as a directed state graph, where the nodes denote contexts and the edges denote the conditions for changes in contexts. Macedo et al. [29] developed a distributed information repository for automatic context-aware MANETs in order to adapt the multimedia context-rich application into service computing. Feug et al. [30] conceived a shared situation awareness model that supplies each user with agents for focused and customized decision support according to the user’s context.

REFERENCES

- J.-Y. Girard, “Linear Logic,” Theoretical Computer Science, Vol. 50, No. 1, 1987, pp. 1-102. doi:10.1016/0304-3975(87)90045-4

- A. S. Troelstra, “Lectures on Linear Logic,” CSLI Lecture Notes, CSLI, Stanford, 1992.

- N. Kamide and K. Kaneiwa, “Extended Full Computation-Tree Logic with Sequence Modal Operator: Representing Hierarchical Tree Structures,” Proceedings of the 22nd Australasian Joint Conference on Artificial Intelligence (AI’09), Lecture Notes in Artificial Intelligence 5866, Melbourne, 1-4 December 2009, pp. 485-494.

- N. Kamide and K. Kaneiwa, “Resource-Sensitive Reasoning with Sequential Information,” Proceedings of the 23rd Australasian Joint Conference on Artificial Intelligence (AI’10), Lecture Notes in Artificial Intelligence 6464, Adelaide, 7-10 December 2010, pp. 22-31.

- Y. Zhu, R. Y. Shtykh and Q. Jin, “Provision of Flowable Services in Cloud Computing Environments,” Proceedings of the 5th International Conference on Future Information Technology (FutureTech’2010), Busan, 21-23 May 2010, pp. 1-6.

- Y. Zhu, R. Y. Shtykh and Q. Jin, “A Human-Centric Framework for Context-Aware Flowable Services in Cloud Computing Environments,” Information Sciences, 2012, in Press.

- I. Foster, Y. Zhao, I. Raicu and S. Lu, “Cloud Computing and Grid Computing 360-Degree Compared,” Grid Computing Environments Workshop (GCE'08), Austin, 16 November 2008, pp. 1-10. doi:10.1109/GCE.2008.4738445

- P. Mell and T. Grance, “The NIST Definition of Cloud Computing,” 2011. http://csrc.nist.gov/publications/nistpubs/800-145/SP800-145.pdf

- G. D. Abowd, A. K. Dey, P. J. Brown, N. Davies, M. Smith and P. Steggles, “Towards Better Understanding of Context and Context-Awareness,” Proceedings of the 1st International Symposium on Handheld and Ubiquitous Computing (HUC’99), Lecture Notes in Computer Science 1707, Karlsruhe, 27-29 September 1999, pp. 304- 307.

- R. Y. Shtykh, Y. Zhu and Q. Jin, “A Context-Aware Framework for Flowable Services,” Proceedings of the 3rd International Conference on Multimedia and Ubiquitous Engineering (MUE’09), Qingdao, 4-6 June 2009, pp. 251- 256.

- Y. Zhu, R. Y. Shtykh, Q. Jin and J. Ma, “A Flowable Service Model for Seamless Integration of Services,” Proceedings of the 4th International Conference on Advances in Computer Science and Engineering (ACSE’2009), Phuket, 16-18 March 2009, pp. 199-204.

- Y. Zhu, R. Y. Shtykh and Q. Jin, “Harnessing User Contexts to Enable Flowable Services Model,” Proceedings of the 3rd International Conference on Human-Centric Computing (HumanCom-10), Cebu, 11-13 August, 2010, pp. 1-6.

- Y. Zhu and Q. Jin, “An Adaptively Emerging Mechanism for Context-Aware Service Selections Regulated by Feedback Distributions,” Human-Centric Computing and Information Sciences, 2012, in Press.

- Y. Zhu and Q. Jin, “An Adaptively Emerging Mechanism for Selection of Ambient Services,” Proceedings of the 2012 FTRA International Conference on Advanced IT, Engineering and Management (FTRA AIM’2012), Seoul, 6-8 February 2012, pp. 157-158.

- N. Kamide, “Linear Logics with Communication-Merge,” Journal of Logic and Computation, Vol. 15, No. 1, 2006, pp. 3-20. doi:10.1093/logcom/exh029

- M. Ohnishi and K. Matsumoto, “A System for Strict Implication,” Annals of the Japan Association for Philosophy of Science, Vol. 2, No. 4, 1964, pp. 183-188.

- A. R. Anderson and N. D. Belnap Jr., “Entailment: The Logic of Relevance and Necessity, Volume 1,” Princeton University Press, Princeton, 1975.

- N. Kamide, “Substructural Logic with Mingle,” Journal of Logic, Language and Information, Vol. 11, No. 2, 2002, pp. 227-249. doi:10.1023/A:1017586008091

- J. Coutaz, J. L. Crowley, S. Dobson and D. Garlan, “Context Is Key,” Communications of the ACM, Vol. 48, No. 3, 2005, pp. 49-53. doi:10.1145/1047671.1047703

- A. J. Younge, G. von Laszewski, L. Wang, S. LopezAlarcon and W. Carithers, “Efficient Resource Management for Cloud Computing Environments,” Proceedings of Green Computing Conference, Chicago, 15-18 August 2010, pp. 357-364. doi:10.1109/GREENCOMP.2010.5598294

- H. Wansing, “The Logic of Information Structures,” Lecture Notes in Artificial Intelligence 681, 1993, pp. 1-163.

- N. Kamide, “A Proof System for Temporal Reasoning with Sequential Information,” Proceedings of the 20th Brazilian Symposium on Artificial Intelligence (SBIA 2010), Lecture Notes in Artificial Intelligence 6404, São Bernardo do Campo, 23-28 October 2010, pp. 283-292.

- K. Kaneiwa and N. Kamide, “Sequence-Indexed LinearTime Temporal Logic: Proof System and Application,” Applied Artificial Intelligence, Vol. 24, No. 10, 2010, pp. 896-913. doi:10.1080/08839514.2010.514231

- N. Kamide, “Combining Soft Linear Logic and SpatioTemporal Operators,” Journal of Logic and Computation, Vol. 14, No. 5, 2004, pp. 625-650. doi:10.1093/logcom/14.5.625

- N. Kamide, “Linear and Affine Logics with Temporal, Spatial and Epistemic Operators,” Theoretical Computer Science, Vol. 353, No. 1-3, 2006, pp. 165-207.

- C. Hoareau and I. Satoh, “Modeling and Processing Information for Context-Aware Computing: A Survey,” New Generation Computing, Vol. 27, No. 3, 2009, pp. 177- 196. doi:10.1007/s00354-009-0060-5

- J. Wohltorf, R. Cissee and A. Rieger, “BerlinTainment: An Agent-Based Context-Aware Environment Planning System,” IEEE Communications Magazine, Vol. 43, No. 6, 2005, pp. 102-109. doi:10.1109/MCOM.2005.1452837

- T. Gu, H. K. Pung and D. Q. Zhang, “A Service-Oriented Middleware for Building Context-Aware Services,” Journal of Network and Computer Applications, Vol. 28, No. 1, 2005, pp. 1-18. doi:10.1016/j.jnca.2004.06.002

- D. Macedo, A. Dos Santos, J. M. S. Nogueira and G. Pujolle, “A Distributed Information Repository for Autonomic Context-Aware MANETs,” IEEE Transactions on Network and Service Management, Vol. 6, No. 1, 2009, pp. 45-55. doi:10.1109/TNSM.2009.090304

- Y. Feng, T. Teng and A. Tan, “Modeling Situation Awareness for Context-Aware Decision Support,” Expert System with Applications, Vol. 36, No. 1, 2009, pp. 455-463. doi:10.1016/j.eswa.2007.09.061