Applied Mathematics

Vol. 7, No. 16 (2016), Article ID: 71473, 27 pages

10.4236/am.2016.716162

On Optimal Sparse-Control Problems Governed by Jump-Diffusion Processes

Beatrice Gaviraghi1, Andreas Schindele1, Mario Annunziato2, Alfio Borzì1

1Institut für Mathematik, Universität Würzburg, Würzburg, Germany

2Dipartimento di Matematica, Università degli Studi di Salerno, Fisciano (SA), Italy

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: July 25, 2016; Accepted: October 22, 2016; Published: October 25, 2016

ABSTRACT

A framework for the optimal sparse-control of the probability density function of a jump-diffusion process is presented. This framework is based on the partial integro-differential Fokker-Planck (FP) equation that governs the time evolution of the probability density function of this process. In the stochastic process and, correspondingly, in the FP model, the control function enters as a time-dependent coefficient. The objectives of the control are to minimize a discrete-in-time or a continuous-in-time tracking functional together with L2- and L1-costs of the control, where the latter is included to promote control sparsity. An efficient proximal scheme for solving these optimal control problems is considered. Results of numerical experiments are presented to validate the theoretical results and the computational effectiveness of the proposed control framework.

Keywords:

Jump-Diffusion Processes, Partial Integro-Differential Fokker-Planck Equation, Optimal Control Theory, Nonsmooth Optimization, Proximal Methods

1. Introduction

Recently, largely motivated by applications in computational finance, there has been a growing interest in stochastic jump-diffusion processes. In fact, empirical evidence suggests that discontinuous paths may be more appropriate for describing the dynamics of stock prices; see [1] and references therein. Therefore, in many application models, the stock price is modeled by a jump-diffusion stochastic process rather than by an Itô diffusion process [2] [3] . In this framework, when option pricing models and portfolio optimization problems are considered, partial integro-differential equations (PIDEs) naturally arise; see [1] [4] and references therein. In the present paper, we focus on a stochastic jump-diffusion (JD) process whose jump component is given by a compound Poisson process subject to given barriers. Market models driven by Poisson processes have also been considered; see, e.g., [5] .

When decision-making problems involving random quantities are considered, stochastic optimization problems must be solved. Such problems have been widely studied in the scientific literature because of their numerous applications in, e.g., physics, biology, finance, and economics [6] - [8] . In these references, the usual procedure consists of minimizing a deterministic objective function that depends on the state and on the control variables. However, since the state evolution is subject to randomness, this approach requires objectives formulated in terms of statistical expectations.

In this work, we tackle the issue of controlling a stochastic process by following an alternative approach, already proposed in [9] - [11] , in which the problem is reformulated from a stochastic into a deterministic one. The key idea of this strategy is to focus on the probability density function (PDF) of the considered process, whose time evolution is modeled by the Fokker-Planck (FP) equation, also known as the Kolmogorov forward equation. The FP control approach is advantageous since it allows one to model the action of the control over the entire space-time range of the underlying process, which is characterized by the shape of its PDF.

In the case of our JD process, the FP equation takes the form of a PIDE endowed with initial and boundary conditions. While the Cauchy data is given by the initial distribution of the considered random variable, the boundary conditions of an FP problem depend on the considered model. For the derivation of the FP equation and a discussion about boundary conditions, see [12] - [14] . Starting from the controlled stochastic differential model, the coefficients of the FP equation, and thus the control mechanism, are automatically determined, and an infinite-dimensional optimal control problem governed by the FP PIDE related to a JD process is obtained. Since the control variable enters the state equation as a coefficient of the partial integro-differential operator, the resulting optimization problem is nonconvex.

Infinite-dimensional optimization is a very active research field, motivated by a broad range of applications such as fluid flow, space technology, heat phenomena, and image reconstruction; see, e.g., [15] - [17] . The main focus of this research has been on problems with smooth cost functionals governed by partial differential equations (PDEs) with linear control mechanisms [16] [17] . However, bilinear control problems governed by parabolic and elliptic PDEs have also been investigated recently; see, e.g., [9] [18] [19] and references therein. In these references, the purpose is often to compute optimal controls such that an appropriate norm of the difference between a given target and the resulting state is minimized. In the present paper, we consider tracking objectives that include mean expectation values as in [20] . Our framework aims at minimizing the difference between a known sequence of values and the first moment of a JD process, so that our formulation can also be viewed as a parameter estimation problem for stochastic processes. In the discrete-in-time case, the form of the cost functional gives rise to a finite number of discontinuities in time in the adjoint variable, and hence in the control. A similar situation has already been considered in [21] .

Very recently, PDE-based optimal control problems with sparsity-promoting L1-cost functionals have been investigated, starting with [22] . See [19] for a short survey and further references. Such formulations give rise to sparse optimal controls, and variants of the semismooth Newton (SSN) method [23] have been considered for their solution. An alternative to such techniques is represented by proximal iterative schemes, introduced in [24] and [25] and further developed in the framework of finite-dimensional optimization [26] [27] . Recent works have adapted the structure of these algorithms to infinite-dimensional PDE optimization problems [19] . Moreover, it has been shown in [19] that, for infinite-dimensional problems, proximal algorithms have a computational performance comparable to SSN methods while not requiring the construction of second-order derivatives. In the present paper, we consider an L1-cost functional and apply the proximal algorithm proposed in [19] [28] . One of the novelties of our work consists of combining recent techniques for nonsmooth problems with the control of the PDF of a JD process governed by an FP PIDE.

This paper is organized as follows. In the next section, we discuss the functional setting of the FP problem modeling the evolution of the PDF of a JD stochastic process. In Section 3, we formulate our optimal control problems. Section 4 is devoted to the formulation of the corresponding first-order optimality systems. In Section 5, we discuss the discretization of the state and adjoint equations of the optimality system. In Section 6, we illustrate a proximal method for solving our optimal control problems. Section 7 is devoted to presenting results of numerical tests, including a discussion on the robustness of the algorithm to the choice of the parameters of the optimization problem. A section of conclusions completes this work.

2. The Fokker-Planck Equation of a Jump-Diffusion Process

In this section, we introduce a JD process and the corresponding FP equation that models the time evolution of the PDF of this process. Further, we discuss well-posedness and regularity of solutions to our FP problem.

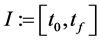

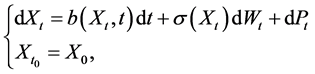

We consider a time interval  and a stochastic process

and a stochastic process  with range in a bounded domain

with range in a bounded domain . We assume that the set

. We assume that the set  is convex with Lipschitz boundary. The dynamic of X is governed by the following initial value problem

is convex with Lipschitz boundary. The dynamic of X is governed by the following initial value problem

(1)

(1)

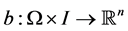

where  is a random variable with known distribution. The functions

is a random variable with known distribution. The functions  and

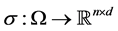

and  represent the drift and the diffusion coefficients, respectively. We assume that

represent the drift and the diffusion coefficients, respectively. We assume that  is full rank. Random increments to the process are given by a Wiener process

is full rank. Random increments to the process are given by a Wiener process  and a compound Poisson process

and a compound Poisson process . The rate of jumps and the jump distribution are denoted with

. The rate of jumps and the jump distribution are denoted with  and

and , respectively.

, respectively.

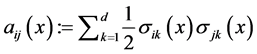

Define ,

, . Since

. Since  is full rank, a is posi-

is full rank, a is posi-

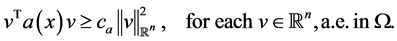

tive definite, and hence there exists  such that

such that

(2)

(2)
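To make the dynamics (1) concrete, the following Python sketch simulates a one-dimensional version of the process: an Euler-Maruyama step for the drift and diffusion parts, a compound Poisson jump part, and reflecting barriers enforced by mirroring samples back into the domain. All concrete choices are assumptions for illustration and are not taken from this paper: the domain $\Omega = (-1, 1)$, the drift $b(x,t) = -x$, a constant $\sigma$, Gaussian jump amplitudes with standard deviation `jump_std`, and the mirror rule in the hypothetical helper `reflect` (the kernel g in (5) encodes the paper's own treatment of jumps at the barriers). A histogram of the returned samples approximates the PDF whose evolution the FP equation describes.

```python
import numpy as np

def reflect(x, lo, hi):
    """Fold positions back into [lo, hi] by mirror reflection at the barriers.

    Works for overshoots of any size (e.g. large jumps) by folding with period 2*(hi - lo).
    """
    width = hi - lo
    y = np.mod(x - lo, 2.0 * width)
    return lo + np.where(y > width, 2.0 * width - y, y)

def simulate_jump_diffusion(x0, drift, sigma, lam, jump_std, lo, hi, T, n_steps, rng):
    """Euler-Maruyama sketch for dX = b dt + sigma dW + dP with reflecting barriers.

    All samples in x0 are advanced in parallel. P is a compound Poisson process
    with rate lam and (hypothetical) Gaussian jump amplitudes N(0, jump_std**2).
    """
    dt = T / n_steps
    x = np.array(x0, dtype=float)
    for k in range(n_steps):
        t = k * dt
        dw = rng.normal(0.0, np.sqrt(dt), size=x.shape)      # Wiener increments
        n_jumps = rng.poisson(lam * dt, size=x.shape)         # jumps in [t, t + dt]
        # the sum of n i.i.d. N(0, s^2) amplitudes is distributed as N(0, n s^2)
        jumps = rng.normal(0.0, 1.0, size=x.shape) * jump_std * np.sqrt(n_jumps)
        x = x + drift(x, t) * dt + sigma * dw + jumps
        x = reflect(x, lo, hi)                                # enforce the barriers
    return x

# Usage: 5000 paths started at 0 on (-1, 1) with mean-reverting drift b(x, t) = -x.
rng = np.random.default_rng(0)
samples = simulate_jump_diffusion(np.zeros(5000), lambda x, t: -x, 0.5, 2.0, 0.3,
                                  -1.0, 1.0, 1.0, 100, rng)
print(samples.mean(), samples.min(), samples.max())
```

Mirroring is only one simple way to realize a reflecting barrier; its purpose here is to show why the total probability stays in $\Omega$, which is what the zero-flux boundary conditions express at the PDF level.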

In this work, we consider a stochastic process with reflecting barriers. This assumption determines the boundary conditions for the FP equation corresponding to (1), see below. Define

where the differential operator

and

respectively. The definition of g in (5) takes into account the presence of reflecting barriers and the dependence on the jump amplitude

Notice that the differential operator

where

and

for each

The PDF f of X in (1) in the bounded domain

where

Notice that the flux F corresponds to the differential part of the FP equation, that is, to the drift and diffusion components of the stochastic process. In order to take into account the action of a reflecting barrier on the jumps, we consider a suitable definition of the kernel g, which can be conveniently illustrated in the one-dimensional case as follows.

Consider

where H is the Heaviside step function defined by

We normalize g and

The next remark motivates the choice of the boundary conditions (8) and of the condition (10).

Remark 2.1. Assume (8) and (10). Provided that

That is, the total probability over the space domain

Our FP problem is stated as follows

Next, we recall some definitions concerning the functional spaces needed to state the existence and uniqueness of solutions to (11). The space

These spaces are endowed with the following norms

where

We assume that the coefficients a and b in (4) satisfy the following conditions

for each

Notice that a and b must be defined on the closure

with

We have the following theorem [29] .

Theorem 2.1. Assume

Proof. See [29] .

Remark 2.2. Provided that

Consider the following spaces

Given the interval

which are also Banach spaces [17] equipped with the following norms

respectively. We consider the following space

which is a Hilbert space [17] with respect to the scalar product defined as follows

With this preparation, we can recall the following theorem [17] .

Theorem 2.2. The embedding

The following proposition provides a useful a priori estimate of the solution to (11).

Proposition 2.3. Let

Proof. Consider the H inner product of the equation in (11) with f. Exploiting the properties of the Gelfand triple, we have

We make use of the following fact [17] ,

First, we exploit the zero-flux boundary conditions in (11) and the coercivity of a as given in (2). Moreover, we make use of the following Cauchy inequality

which holds for each

We choose

We have

Therefore we have

Recalling the definition of I in (5) and defining

Since

Therefore,

Define

By applying the Gronwall inequality, we have

Next, we outline how to obtain an upper bound of

where we used the PIDE and the boundary condition of the FP problem in (11). Proceeding as above, we obtain

with

up to a redefinition of the constant

Proposition 2.4. Assume (13) and

Proof. The statement follows from the a priori estimates of Proposition 2.3 and Theorem 2.2.
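For reference, the proof of Proposition 2.3 relies on two standard inequalities. Their common textbook forms are recalled below; the specific constants and the choice of $\varepsilon$ used in the proof are not recoverable from this text, so this is only a reminder of the general statements.

```latex
% Cauchy inequality with a free parameter: for a, b >= 0 and any \varepsilon > 0,
% it follows from 0 \le (\sqrt{\varepsilon}\, a - b/\sqrt{\varepsilon})^2.
ab \le \frac{\varepsilon}{2}\, a^2 + \frac{1}{2\varepsilon}\, b^2 .

% Gronwall inequality (differential form): if \eta \ge 0 is absolutely continuous and
% \eta'(t) \le \phi(t)\,\eta(t) + \psi(t) with \phi, \psi \ge 0 integrable on (0, T), then
\eta(t) \le e^{\int_0^t \phi(s)\,ds} \Big( \eta(0) + \int_0^t \psi(s)\,ds \Big),
\qquad 0 \le t \le T .
```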

We define

with F and I defined in (6) and (5), respectively.

3. Two Fokker-Planck Optimal Control Problems

In this section, we define our optimal control problems governed by (23) and prove the existence of at least one optimal solution.

We consider a control mechanism that acts on the drift function

We assume the presence of control constraints given by

Remark 3.1. The subset

Let

The term

1) Given a set of values

2) Given a square-integrable function

The norms in (26) are defined as follows

Remark 3.2. The choice of a bounded time interval I ensures that the L1-norm is finite whenever

Remark 3.3. The functional

We investigate the following optimal control problem(s)

In order to discuss the existence and uniqueness of solutions to (29), we consider the control-to-state operator

The next proposition can be proved by using standard arguments [9] [17] .

Proposition 3.1. The mapping

where b is the drift in (1) and F is defined in (6).

The constrained optimization problem (29) can be transformed into an unconstrained one as follows

where

The solvability of (31) is ensured by the next theorem, whose proof adapts techniques given in [30] [31] and [17] .

Theorem 3.2. There exists at least one optimal pair

Proof. The functional

We have that

The weakly lower convergent sequence

compact, there exists a subsequence

pass to the limit in

Thanks to the estimate (18) in Proposition 2.3, the sequence

sures that

Moreover, the convexity of

and therefore the pair

Remark 3.4. The uniqueness of the control

4. Two First-Order Optimality Systems

We follow the standard approach [17] [23] [32] of characterizing the solution of our optimal control problem as the solution to first-order optimality conditions that constitute the optimality system.

Consider the reduced problem (31) and write the reduced functional

Remark 4.1. The functional

The following definitions are needed in order to determine the first-order optimality system. If

where

since

The following proposition gives a necessary condition for a local minimum of

Proposition 4.1. If

or equivalently

Proof. Since

for v sufficiently close to

Dividing by

Dividing by

we conclude that

By using results in [22] [32] , we have that (34) implies that each

Moreover, recalling the definition of

A pointwise analysis of (35), which takes into account the definition (25) of the admissible controls, ensures the existence of two nonnegative functions

Proposition 4.2. The optimal solution

We refer to the last three conditions in (37) for the pair

The differentiability of

By considering the total derivative of

Therefore, we obtain

Defining the adjoint variable p as the solution to the following adjoint problem

we obtain the following reduced gradient

After some calculation, we have that (38) can be rewritten as the following adjoint system

where

The operator

The terminal boundary-value problem (40) admits a unique solution

The reduced gradient in (39), for given u, f, and p, takes the following form

The complementarity conditions in (37) can be recast in a more compact form, as follows. We define

The complementarity conditions in (37) and the inequalities related to the Lagrange multipliers

The previous considerations can be summarized in the following propositions.

Proposition 4.3. (Optimality system for a discrete-in-time tracking functional)

A local solution

Proposition 4.4. (Optimality system for a continuous-in-time tracking functional)

A local solution

5. Numerical Approximation of the Optimality Systems

In this section, we discuss the discretization of the optimality systems given in (42) and (43). For simplicity, we focus on a one-dimensional case with

fine

are defined as follows

Notice that a cell-centered space discretization is considered with cells midpoints at

The approximation of the forward and backward FP PIDEs is based on a discretization method discussed in [33] , where a convergent and conservative numerical scheme for solving the FP problem of a JD process is presented. This discretization scheme is obtained based on the so-called method of lines (MOL) [34] . The differential operator in (11) is discretized by applying the Chang-Cooper (CC) scheme [35] [36] . Setting

of the differential operator is carried out as follows

where

The zero-flux boundary conditions are implemented referring to the points

where
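The Chang-Cooper discretization of the differential part can be illustrated in one dimension. The sketch below assumes the flux form $\partial_t f = \partial_x(B f + C\,\partial_x f)$, which for the FP operator with $a = \sigma^2/2$ corresponds to $B = \partial_x a - b$ and $C = a$; it uses the classical Chang-Cooper weights $\delta(w) = 1/w - 1/(e^w - 1)$ with $w = hB/C$ and imposes zero flux at the two boundary interfaces. The function names, the drift $b(x) = -x$, and the implicit Euler step in the usage lines are illustrative assumptions; the paper instead combines this space discretization with a splitting and predictor-corrector time integration, and the jump integral is omitted here.

```python
import numpy as np

def cc_delta(w):
    """Chang-Cooper weight delta(w) = 1/w - 1/(exp(w) - 1), with delta(0) = 1/2."""
    w = np.asarray(w, dtype=float)
    out = np.full_like(w, 0.5)
    nz = np.abs(w) > 1e-12
    out[nz] = 1.0 / w[nz] - 1.0 / np.expm1(w[nz])
    return out

def chang_cooper_matrix(B, C, h):
    """Assemble A such that df/dt = A f approximates df/dt = d/dx (B f + C df/dx).

    B, C: values at the N-1 interior cell interfaces x_{i+1/2} (cell-centered grid).
    The fluxes at the two boundary interfaces are set to zero (zero-flux conditions).
    """
    B = np.asarray(B, dtype=float)
    C = np.asarray(C, dtype=float)
    n = B.size + 1                         # number of cells
    d = cc_delta(h * B / C)
    up = (1.0 - d) * B + C / h             # F_{j+1/2} = up_j f_{j+1} - lo_j f_j
    lo = C / h - d * B
    A = np.zeros((n, n))
    for j in range(n - 1):                 # interface between cells j and j+1
        # the flux enters cell j with +1/h and cell j+1 with -1/h
        A[j, j + 1] += up[j] / h
        A[j, j] -= lo[j] / h
        A[j + 1, j + 1] -= up[j] / h
        A[j + 1, j] += lo[j] / h
    return A

# Usage: Omega = (-1, 1), sigma = 0.5, b(x) = -x, so B = -b = x and C = a = sigma^2 / 2.
N = 50
h = 2.0 / N
x_c = -1.0 + h * (np.arange(N) + 0.5)      # cell midpoints
x_faces = -1.0 + h * np.arange(1, N)       # interior interfaces
A = chang_cooper_matrix(B=x_faces, C=np.full(N - 1, 0.125), h=h)

f = np.exp(-((x_c - 0.5) / 0.1) ** 2)
f /= h * f.sum()                           # normalize to total probability 1
dt = 0.01
M = np.eye(N) - dt * A                     # implicit Euler (illustrative choice)
for _ in range(100):
    f = np.linalg.solve(M, f)
print(h * f.sum())                         # total probability is conserved
```

Because every interior flux leaves one cell and enters its neighbor, the columns of A sum to zero, so the discrete total probability $h\sum_i f_i$ is conserved, mirroring Remark 2.1. The Chang-Cooper weights also make the discrete flux vanish exactly on the discrete equilibrium $f_{i+1}/f_i = e^{-w_{i+1/2}}$.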

If we follow the optimize-before-discretize (OBD) approach, the optimality system is first derived at the continuous level, as in (42) and (43), and subsequently discretized. As a consequence, the OBD approach allows one to discretize the forward and adjoint FP problems with different numerical schemes. However, the OBD procedure may introduce an inconsistency between the discretized objective and the reduced gradient; see [15] and references therein. For this reason, the discretize-before-optimize (DBO) approach may be preferred, and we pursue it in this work.
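The consistency argument for DBO can be checked on a small example. The sketch below uses a hypothetical scalar bilinear model $x' = u\,x$ (the control enters as a coefficient, as in the FP problem), discretized by explicit Euler, with a tracking term plus an $L^2$-cost. The gradient obtained from the discrete adjoint recursion is the exact gradient of the discretized objective, so it matches central finite differences up to round-off; a gradient obtained by discretizing a continuous adjoint equation independently would, in general, agree only up to the discretization error. The model, the cost, and all names are assumptions chosen for illustration.

```python
import numpy as np

def forward(u, x0, dt):
    """Explicit Euler for the bilinear model x' = u x: x_{k+1} = x_k (1 + dt u_k)."""
    x = np.empty(u.size + 1)
    x[0] = x0
    for k in range(u.size):
        x[k + 1] = x[k] * (1.0 + dt * u[k])
    return x

def objective(u, x0, z, dt, nu):
    """Discrete cost: 1/2 dt sum_k (x_k - z_k)^2 + nu/2 dt sum_k u_k^2."""
    x = forward(u, x0, dt)
    return 0.5 * dt * np.sum((x[1:] - z) ** 2) + 0.5 * nu * dt * np.sum(u ** 2)

def gradient_dbo(u, x0, z, dt, nu):
    """Exact gradient of the *discrete* objective via the discrete adjoint recursion."""
    x = forward(u, x0, dt)
    K = u.size
    lam = np.zeros(K + 1)
    lam[K] = dt * (x[K] - z[K - 1])                 # terminal adjoint value
    for k in range(K - 1, 0, -1):                   # backward sweep
        lam[k] = dt * (x[k] - z[k - 1]) + lam[k + 1] * (1.0 + dt * u[k])
    # dJ/du_k = lam_{k+1} * d x_{k+1} / d u_k + nu dt u_k, with d x_{k+1} / d u_k = dt x_k
    return lam[1:] * dt * x[:-1] + nu * dt * u

# Usage: compare with central finite differences.
rng = np.random.default_rng(1)
K, dt, nu, x0 = 20, 0.05, 1e-2, 1.0
u = rng.normal(size=K)
z = np.linspace(1.0, 2.0, K)
g = gradient_dbo(u, x0, z, dt, nu)
eps = 1e-6
g_fd = np.array([(objective(u + eps * e, x0, z, dt, nu)
                  - objective(u - eps * e, x0, z, dt, nu)) / (2 * eps)
                 for e in np.eye(K)])
print(np.max(np.abs(g - g_fd)))
```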

The DBO approach results in the following approximations

together with the midpoint quadrature formula applied to

The time integration of (45) is carried out by combining the SM splitting with a predictor-corrector scheme, as in (44).
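The first moment entering the tracking functionals can be approximated with the midpoint rule on the cell-centered grid. The sketch below assumes, for illustration only, a discrete-in-time objective of the form $\frac{1}{2}\sum_k (\mathbb{E}[X(t_k)] - \xi_k)^2 + \frac{\nu}{2}\|u\|_{L^2}^2 + \beta\|u\|_{L^1}$ with a piecewise-constant control in time; the weights `nu` and `beta`, the target values `xi`, and the function names are hypothetical and need not coincide with the exact form of (27).

```python
import numpy as np

def first_moment(f, x_centers, h):
    """Midpoint rule for E[X] = int x f(x) dx on a cell-centered grid."""
    return h * np.sum(x_centers * f)

def discrete_tracking_cost(f_at_tk, xi, u, x_centers, h, dt, nu, beta):
    """Assumed form: 1/2 sum_k (E[X(t_k)] - xi_k)^2 + nu/2 ||u||_2^2 + beta ||u||_1.

    f_at_tk: densities at the observation times t_k; u: control values per time step.
    """
    means = np.array([first_moment(f, x_centers, h) for f in f_at_tk])
    tracking = 0.5 * np.sum((means - np.asarray(xi)) ** 2)
    l2 = 0.5 * nu * dt * np.sum(u ** 2)        # rectangle rule in time
    l1 = beta * dt * np.sum(np.abs(u))
    return tracking + l2 + l1

# Usage: uniform density on (-1, 1); its first moment is 0 by symmetry.
N = 100
h = 2.0 / N
x_c = -1.0 + h * (np.arange(N) + 0.5)
f_uniform = np.full(N, 0.5)
print(first_moment(f_uniform, x_c, h))
```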

6. A Proximal Optimization Scheme

In this section, we discuss a proximal optimization scheme for solving (31). This scheme and the related theoretical discussion follow the work in [19] [27] . Proximal methods conveniently exploit the additive structure of the reduced objective, and in our framework, we have that the reduced functional

For our discussion, we need the following definitions and properties.

Definition 6.1. Let Z be a Hilbert space and l a convex lower semicontinuous function,

Proposition 6.1. Let Z be a Hilbert space and l a convex lower semicontinuous function,

where

Proof. See [27] .

Proposition 6.2. The solution

for each

Proof. From Proposition 4.7 and by using (46), we have

The relation (47) suggests a solution procedure based on a fixed-point iteration. We discuss how such an algorithm can be implemented.

In the following, we assume that

for each

for each

Inequality (49) is the starting point for the formulation of a proximal scheme, whose strategy consists of minimizing the right-hand side in (49). One can prove the following equality

Recall the definition of

Lemma 6.3. Let

where the projected soft thresholding function

Proof. See [19] .
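For a box-constrained $L^1$ term, the proximal map acts pointwise and has a simple closed form: soft thresholding followed by projection onto the admissible interval. The following sketch implements this standard formula, which we assume corresponds to the projected soft thresholding function of Lemma 6.3; the names `tau`, `ua`, and `ub` are illustrative (in a proximal gradient step with step $1/L$ and $L^1$ weight $\beta$, one would take `tau` $= \beta/L$).

```python
import numpy as np

def projected_soft_threshold(v, tau, ua, ub):
    """Pointwise minimizer of tau*|u| + 1/2 (u - v)^2 subject to ua <= u <= ub.

    The unconstrained minimizer is the soft-thresholded value. Since each scalar
    problem is convex in u, clipping that value to [ua, ub] gives the constrained
    minimizer.
    """
    s = np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)
    return np.clip(s, ua, ub)

# Usage: small entries are set to zero (sparsity), large ones are shrunk and clipped.
v = np.array([-3.0, -0.5, 0.2, 2.0, 5.0])
print(projected_soft_threshold(v, 1.0, -1.5, 3.0))
```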

Based on this lemma, we conclude the following

which can be taken as starting point for a fixed-point algorithm as follows

where

with

Algorithm 1 (Inertial proximal method).

Input: initial guess

1) While

(a) Evaluate

(b) Update

where

(c) Set

(d) Compute E according to (42) or (43).

(e) If

(f)

Remark 6.1. The backtracking scheme in Algorithm 1 provides an estimate of an upper bound for the Lipschitz constant in (48), which is not known a priori. The initial guess for L is chosen as follows. Given a small variation
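The structure of Algorithm 1 can be sketched as an iPiano-type inertial proximal gradient iteration [28] with backtracking on the Lipschitz estimate L. In this sketch, a toy least-squares function stands in for the smooth part of the reduced functional; in the actual method, evaluating it and its gradient requires solving the forward FP problem and the adjoint problem, cf. Algorithm 2. The step rule $\alpha = (1-\text{inertia})/L$, the parameter values, the growth factor `eta`, and the unit-step proximal residual used as stopping criterion are assumptions for illustration, not the exact choices of Algorithm 1.

```python
import numpy as np

def soft_box(v, tau, ua, ub):
    """Prox of tau*||.||_1 plus the indicator of [ua, ub]: soft threshold, then clip."""
    return np.clip(np.sign(v) * np.maximum(np.abs(v) - tau, 0.0), ua, ub)

def ipiano(f, grad_f, u0, beta_l1, ua, ub, L0=1.0, inertia=0.5, eta=2.0,
           tol=1e-8, max_iter=500):
    """Inertial proximal (iPiano-type) iteration with backtracking on L."""
    u_prev = u0.copy()
    u = u0.copy()
    L = L0
    for _ in range(max_iter):
        g = grad_f(u)
        fu = f(u)
        while True:
            alpha = (1.0 - inertia) / L        # satisfies alpha < 2 (1 - inertia) / L
            u_new = soft_box(u - alpha * g + inertia * (u - u_prev),
                             alpha * beta_l1, ua, ub)
            d = u_new - u
            # sufficient-decrease test: local Lipschitz estimate for the smooth part
            if f(u_new) <= fu + g @ d + 0.5 * L * (d @ d) + 1e-14:
                break
            L *= eta                           # estimate too small: increase it
        u_prev, u = u, u_new
        # proximal residual with unit step as a stopping criterion
        r = u - soft_box(u - grad_f(u), beta_l1, ua, ub)
        if np.linalg.norm(r) < tol:
            break
    return u

# Usage on a toy problem f(u) = 1/2 ||u - y||^2 with L1 weight 0.5 and box [-2, 3].
y = np.array([2.0, -0.3, 0.7, -3.0, 5.0])
u_opt = ipiano(lambda u: 0.5 * np.sum((u - y) ** 2), lambda u: u - y,
               np.zeros(5), 0.5, -2.0, 3.0, L0=0.1)
print(u_opt)
```

For this toy problem the minimizer is known in closed form, namely the projected soft threshold of y, which makes the sketch easy to check; the initial guess L0 = 0.1 is deliberately too small so that the backtracking loop is exercised.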

Algorithm 2 (Evaluation of the gradient).

Input:

1) Compute

2) Compute

3) Evaluate

Next, we discuss the convergence of our algorithm, using some existing results [28] [41] .

Proposition 6.4. The sequence

・ The sequence

・ There exists a weakly convergent subsequence

Definition 6.2. The proximal residual r is defined as follows

Proposition 6.12 tells us that

Proposition 6.5. Let

7. Numerical Experiments

In this section, we present results of numerical experiments to validate the performance of our optimal control framework. Our purpose is to determine a sparse control

We implement the discretization scheme described in Section 5 and the algorithm described in Section 6. We take

In the first series of experiments, we consider the setting with

Also for the case

Figure 1. State variable in the case of the discrete-in-time tracking functional defined in (27), with

Figure 2. Adjoint variable in case of the discrete-in-time tracking functional defined in (27), with

Figure 3. State variable in the case of the continuous-in-time tracking functional defined in (28), with

Figure 4. Adjoint variable in case of the continuous-in-time tracking functional defined in (28), with

Table 2 the values of the tracking error for different values of the weight

Next, we investigate the behavior of the optimal solution considering the full optimization setting, that is, the case when the L1-cost actively enters in the optimization process, i.e.

In Figures 5-7, we depict the optimal controls for three different choices of values of

Finally, in Table 1 and Table 2, we also report values of the tracking error when both the L2- and L1-costs are considered. For a direct comparison with the first series of experiments, we consider an unconstrained control. We find that already with a small value of

Table 1. Tracking error of the discrete-in-time functional

Table 2. Tracking error of the continuous-in-time functional

Figure 5. Optimal control with

Figure 6. Optimal control with

Figure 7. Optimal control with

Figure 8. Optimal control with

Figure 9. Optimal control with

Figure 10. Optimal control with

8. Conclusion

A framework for the optimal control of probability density functions of jump-diffusion processes was discussed. In this framework, two tracking functionals, a discrete-in-time and a continuous-in-time one, were considered together with a sparsity-promoting L1-cost of the control. The resulting nonsmooth minimization problems governed by a Fokker-Planck partial integro-differential equation were investigated. The existence of at least one optimal control was proven. To characterize and compute the optimal controls, the corresponding first-order optimality systems were derived and their numerical approximation was discussed. These optimality systems, in combination with a proximal scheme, allowed us to formulate an efficient solution procedure, which was also analyzed theoretically. Results of numerical experiments were presented to validate the computational effectiveness of the proposed method.

Acknowledgements

Supported by the European Union under Grant Agreement No. 304617 “Multi-ITN STRIKE - Novel Methods in Computational Finance”. This publication was supported by the Open Access Publication Fund of the University of Würzburg. We thank the referee for helpful remarks.

Cite this paper

Gaviraghi, B., Schindele, A., Annunziato, M. and Borzì, A. (2016) On Optimal Sparse-Control Problems Governed by Jump-Diffusion Processes. Applied Mathematics, 7, 1978-2004. http://dx.doi.org/10.4236/am.2016.716162

References

- 1. Cont, R. and Tankov, P. (2004) Financial Modeling with Jump Processes. Chapman & Hall, London.

- 2. Kou, S.G. (2002) A Jump-Diffusion Model for Option Pricing. Management Science, 48, 1086-1101. http://dx.doi.org/10.1287/mnsc.48.8.1086.166

- 3. Merton, R.C. (1976) Option Pricing When Underlying Stock Returns Are Discontinuous. Journal of Financial Economics, 3, 125-144. http://dx.doi.org/10.1016/0304-405X(76)90022-2

- 4. Pascucci, A. (2011) PDE and Martingale Methods in Option Pricing. Springer, Berlin. http://dx.doi.org/10.1007/978-88-470-1781-8

- 5. Harrison, J.M. and Pliska, S.R. (1983) A Stochastic Calculus Model of Continuous Trading: Complete Markets. Stochastic Processes and Their Applications, 15, 313-316. http://dx.doi.org/10.1016/0304-4149(83)90038-8

- 6. Fleming, W.H. and Soner, H.M. (1993) Controlled Markov Processes and Viscosity Solutions. Springer, Berlin.

- 7. Pham, H. (2009) Continuous-Time Stochastic Control and Optimization with Financial Applications. Springer, Berlin. http://dx.doi.org/10.1007/978-3-540-89500-8

- 8. Yong, J. and Zhou, X.Y. (2000) Stochastic Controls, Hamiltonian Systems and Hamilton-Jacobi-Bellman Equations. Springer, Berlin.

- 9. Annunziato, M. and Borzì, A. (2013) A Fokker-Planck Control Framework for Multidimensional Stochastic Processes. Journal of Computational and Applied Mathematics, 237, 487-507. http://dx.doi.org/10.1016/j.cam.2012.06.019

- 10. Annunziato, M. and Borzì, A. (2010) Optimal Control of Probability Density Functions of Stochastic Processes. Mathematical Modelling and Analysis, 15, 393-407. http://dx.doi.org/10.3846/1392-6292.2010.15.393-407

- 11. Roy, S., Annunziato, M. and Borzì, A. (2016) A Fokker-Planck Feedback Control-Constrained Approach for Modelling Crowd Motion. Journal of Computational and Theoretical Transport, 442-458. http://dx.doi.org/10.1080/23324309.2016.1189435

- 12. Cox, D.R. and Miller, H.D. (1977) The Theory of Stochastic Processes. CRC Press, Boca Raton.

- 13. Schuss, Z. (2010) Theory and Applications of Stochastic Processes: An Analytical Approach. Springer, Berlin. http://dx.doi.org/10.1007/978-1-4419-1605-1

- 14. Stroock, D.W. (2003) Markov Processes from K. Itô’s Perspective. Princeton University Press, Princeton. http://dx.doi.org/10.1515/9781400835577

- 15. Borzì, A. and Schulz, V. (2012) Computational Optimization of Systems Governed by Partial Differential Equations. SIAM, Philadelphia.

- 16. Lions, J.L. (1971) Optimal Control of Systems Governed by Partial Differential Equations. Springer, Berlin.

- 17. Tröltzsch, F. (2010) Optimal Control of Partial Differential Equations: Theory, Methods and Applications. American Mathematical Society. http://dx.doi.org/10.1090/gsm/112

- 18. Borzì, A. and Gonzalez Andrade, S. (2015) Second-Order Approximation and Fast Multigrid Solution of Parabolic Bilinear Optimization Problems. Advances in Computational Mathematics, 41, 457-488. http://dx.doi.org/10.1007/s10444-014-9369-9

- 19. Schindele, A. and Borzì, A. (2016) Proximal Methods for Elliptic Optimal Control Problems with Sparsity Cost Functional. Applied Mathematics, 7, 967-992. http://dx.doi.org/10.4236/am.2016.79086

- 20. Jäger, S. and Kostina, E.A. (2005) Parameter Estimation for Forward Kolmogorov Equation with Application to Nonlinear Exchange Rate Dynamics. PAMM, 5, 745-746. http://dx.doi.org/10.1002/pamm.200510347

- 21. Borzì, A., Ito, K. and Kunisch, K. (2002) Optimal Control Formulation for Determining Optical Flow. SIAM Journal on Scientific Computing, 24, 818-847. http://dx.doi.org/10.1137/S1064827501386481

- 22. Stadler, G. (2009) Elliptic Optimal Control Problems with L1-Control Costs and Applications for the Placement of Control Devices. Computational Optimization and Applications, 44, 159-181. http://dx.doi.org/10.1007/s10589-007-9150-9

- 23. Ulbrich, M. (2011) Semismooth Newton Methods for Variational Inequalities and Constrained Optimization Problems in Function Spaces. SIAM, Philadelphia. http://dx.doi.org/10.1137/1.9781611970692

- 24. Nesterov, Y.E. (1983) A Method for Solving the Convex Programming Problem with Convergence Rate O(1/k^2). Soviet Mathematics Doklady, 27, 372-376.

- 25. Rockafellar, R.T. (1976) Monotone Operators and the Proximal Point Algorithm. SIAM Journal on Control and Optimization, 14, 877-898. http://dx.doi.org/10.1137/0314056

- 26. Bauschke, H.H., Burachik, R.S., Combettes, P.L., Elser, V., Luke, D.R. and Wolkowicz, H. (2011) Fixed-Point Algorithms for Inverse Problems in Science and Engineering. Springer, Berlin. http://dx.doi.org/10.1007/978-1-4419-9569-8

- 27. Combettes, P.L. and Wajs, V.R. (2005) Signal Recovery by Proximal Forward-Backward Splitting. Multiscale Modeling & Simulation, 4, 1168-1200. http://dx.doi.org/10.1137/050626090

- 28. Ochs, P., Chen, Y., Brox, T. and Pock, T. (2014) iPiano: Inertial Proximal Algorithm for Nonconvex Optimization. SIAM Journal on Imaging Sciences, 7, 1388-1419. http://dx.doi.org/10.1137/130942954

- 29. Garroni, M.G. and Menaldi, J.L. (1992) Green Functions for Second-Order Parabolic Integro-Differential Problems. Longman, London.

- 30. Addou, A. and Benbrik, A. (2002) Existence and Uniqueness of Optimal Control for a Distributed-Parameter Bilinear System. Journal of Dynamical and Control Systems, 8, 141-152. http://dx.doi.org/10.1023/A:1015372725255

- 31. Fursikov, A.V. (2000) Optimal Control of Distributed Systems. Theory and Applications. American Mathematical Society.

- 32. Ekeland, I. and Témam, R. (1999) Convex Analysis and Variational Problems. SIAM, Philadelphia.

- 33. Gaviraghi, B., Annunziato, M. and Borzì, A. (2015) Analysis of Splitting Methods for Solving a Partial Integro-Differential Fokker-Planck Equation. Applied Mathematics and Computation, 294, 1-17. http://dx.doi.org/10.1016/j.amc.2016.08.050

- 34. Schiesser, W.E. (1991) The Numerical Method of Lines. Academic Press, Pittsburgh.

- 35. Chang, J.S. and Cooper, G. (1970) A Practical Difference Scheme for Fokker-Planck Equations. Journal of Computational Physics, 6, 1-16. http://dx.doi.org/10.1016/0021-9991(70)90001-X

- 36. Mohammadi, M. and Borzì, A. (2015) Analysis of the Chang-Cooper Discretization Scheme for a Class of Fokker-Planck Equations. Journal of Numerical Mathematics, 23, 125-144. http://dx.doi.org/10.1515/jnma-2015-0018

- 37. Strang, G. (1968) On the Construction and Comparison of Difference Schemes. SIAM Journal on Numerical Analysis, 5, 506-517. http://dx.doi.org/10.1137/0705041

- 38. Marchuk, G.I. (1981) Methods of Numerical Mathematics. Springer, Berlin.

- 39. Hundsdorfer, W. and Verwer, J.G. (2010) Numerical Solution of Time-Dependent Advection-Diffusion-Reaction Equations. Springer, Berlin.

- 40. Geiser, J. (2009) Decomposition Methods for Differential Equations: Theory and Applications. Chapman & Hall, London. http://dx.doi.org/10.1201/9781439810972

- 41. Schindele, A. and Borzì, A. (2016) Proximal Schemes for Parabolic Optimal Control Problems with Sparsity Promoting Cost Functionals. International Journal of Control.