Applied Mathematics

Vol.05 No.16(2014), Article ID:49428,8 pages

10.4236/am.2014.516239

On the connection between the Hamilton-Jacobi-Bellman and the Fokker-Planck control frameworks

Mario Annunziato1, Alfio Borzì2, Fabio Nobile3, Raul Tempone4

1Dipartimento di Matematica, Università degli Studi di Salerno, Fisciano, Italy

2Institut für Mathematik, Universität Würzburg, Würzburg, Germany

3École Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

4King Abdullah University of Science and Technology, Thuwal, Kingdom of Saudi Arabia

Email: mannunzi@unisa.it, alfio.borzi@mathematik.uni-wuerzburg.de, fabio.nobile@epfl.ch, raul.tempone@kaust.edu.sa

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 28 June 2014; revised 1 August 2014; accepted 14 August 2014

ABSTRACT

In the framework of stochastic processes, the connection between the dynamic programming scheme given by the Hamilton-Jacobi-Bellman equation and a recently proposed control approach based on the Fokker-Planck equation is discussed. Under appropriate assumptions it is shown that the two strategies are equivalent in the case of expected cost functionals, while the Fokker-Planck formalism allows considering a larger class of objectives. To illustrate the connection between the two control strategies, the cases of an Itō stochastic process and of a piecewise-deterministic process are considered.

Keywords:

Hamilton-Jacobi-Bellman equation, Fokker-Planck equation, optimal control theory, stochastic differential equations, hybrid systems

1. Introduction

In the modelling of uncertainty, the theory of stochastic processes [1] provides established mathematical tools for the modelling and analysis of systems with random dynamics. In applications, the ability to control sequences of events subject to random disturbances is highly desirable. In this paper, we elucidate the connection between the well-established Hamilton-Jacobi-Bellman (HJB) control framework [2] [3] and a control strategy based on the Fokker-Planck (FP) equation [4] [5]. Illustrative examples provide additional insight into this connection.

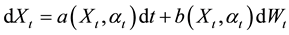

We focus on a representative n-dimensional continuous-time stochastic processes described by the following model

(1.1)

(1.1)

where  is a Lipschitz-continuous n-dimensional drift function and

is a Lipschitz-continuous n-dimensional drift function and  is a m-dimensional Wiener process with stochastically independent components. The dispersion function

is a m-dimensional Wiener process with stochastically independent components. The dispersion function  with values in

with values in  is assumed to be smooth and full rank; see [6] . This is the well-known Itō stochastic differential equation (SDE) [1] where we consider also the action of a d-components vector of controls

is assumed to be smooth and full rank; see [6] . This is the well-known Itō stochastic differential equation (SDE) [1] where we consider also the action of a d-components vector of controls , that allows driving the random process towards a certain goal [3] . We denote with

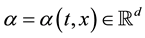

, that allows driving the random process towards a certain goal [3] . We denote with  the set of Markovian controls that contains

the set of Markovian controls that contains

all jointly measurable functions , where

, where  is a given compact set [3] . In determinis-

is a given compact set [3] . In determinis-

tic dynamics, the optimal control is achieved by finding a control law  that minimizes a given objective defined by a cost functional

that minimizes a given objective defined by a cost functional ; see, e.g., [2] .

; see, e.g., [2] .
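A controlled Itō SDE of the kind described above (a drift depending on state, time and control, plus a dispersion term multiplying Wiener increments) can be simulated with the Euler-Maruyama scheme. The following is a minimal scalar sketch; the function name `euler_maruyama`, the additive control in the drift, the linear feedback law and all numerical values are assumptions chosen for illustration only.

```python
import numpy as np

def euler_maruyama(b, sigma, u, x0, t0, T, n_steps, n_paths, seed=0):
    """Simulate dX = b(X, t, u(X, t)) dt + sigma(X, t) dW for a scalar state."""
    rng = np.random.default_rng(seed)
    dt = (T - t0) / n_steps
    x = np.full(n_paths, float(x0))
    t = t0
    for _ in range(n_steps):
        # Independent Wiener increments, one per sample path.
        dw = rng.normal(0.0, np.sqrt(dt), size=n_paths)
        x = x + b(x, t, u(x, t)) * dt + sigma(x, t) * dw
        t += dt
    return x

# Hypothetical model: the control enters the drift additively.
def drift(x, t, u):
    return u

def dispersion(x, t):
    return 0.5 * np.ones_like(x)

# Hypothetical Markovian (feedback) control law.
def feedback(x, t):
    return -2.0 * x

x_T = euler_maruyama(drift, dispersion, feedback, x0=1.0, t0=0.0, T=1.0,
                     n_steps=200, n_paths=20000)
print("sample mean and variance at T:", x_T.mean(), x_T.var())
```

With this feedback law the controlled process is of Ornstein-Uhlenbeck type, so the sample mean at the final time should be close to $e^{-2}$ times the initial state.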

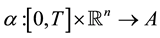

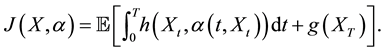

In the non-deterministic case, the state $X_t$ is random, so that inserting a stochastic process into a deterministic cost functional results in a random variable. Therefore, in stochastic optimal control problems the expected value of a given cost functional is considered [7]. In particular, we have

(1.2)

This is a Bolza type cost functional in the finite-horizon case, and it is assumed here that the controller knows the state of the system at each instant of time (complete observations). For this case, the method of dynamic programming can be applied [2] [7] [8] in order to derive the HJB equation with the control as the optimization function. Some other cases of the cost structure of

A control approach close to the HJB formulation consists in approximating the continuous stochastic process by a discrete Markov decision chain. In this approach the information of the controlled stochastic process, carried by the transition probability density function of the approximating Markov process, is utilized to solve the Bellman equation; for details see [10] .
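The Markov chain idea can be sketched in one space dimension as follows: the diffusion is replaced by a nearest-neighbour chain on a grid, and the Bellman equation is solved backward in time. The transition probabilities below follow the usual upwind construction of Kushner-type schemes; the function name `markov_chain_dp`, the reflecting treatment of the grid boundary, the finite control set and the quadratic costs are assumptions made for this example and are not taken from [10].

```python
import numpy as np

def markov_chain_dp(b, sigma, running_cost, terminal_cost, controls, x_grid, T):
    """Backward dynamic programming on an approximating Markov chain (1D sketch)."""
    h = x_grid[1] - x_grid[0]
    # Largest drift magnitude over grid and controls; it normalises the probabilities.
    b_max = max(np.max(np.abs(b(x_grid, u))) for u in controls)
    q = sigma**2 + h * b_max
    dt = h**2 / q                       # time step consistent with the chain
    n_steps = int(np.ceil(T / dt))      # simulated horizon n_steps * dt is >= T
    value = np.array(terminal_cost(x_grid), dtype=float)
    policy = np.zeros((n_steps, x_grid.size))
    for k in reversed(range(n_steps)):
        # Neighbour values; at the grid ends the chain stays in place instead of leaving.
        v_up = np.append(value[1:], value[-1])
        v_down = np.insert(value[:-1], 0, value[0])
        best = np.full(x_grid.size, np.inf)
        for u in controls:
            drift = b(x_grid, u)
            p_up = (0.5 * sigma**2 + h * np.maximum(drift, 0.0)) / q
            p_down = (0.5 * sigma**2 + h * np.maximum(-drift, 0.0)) / q
            p_stay = 1.0 - p_up - p_down
            cand = (running_cost(x_grid, u) * dt
                    + p_up * v_up + p_down * v_down + p_stay * value)
            improved = cand < best
            best = np.where(improved, cand, best)
            policy[k] = np.where(improved, u, policy[k])
        value = best
    return value, policy, dt

# Hypothetical problem data: additive control in the drift, quadratic costs.
b = lambda x, u: u * np.ones_like(x)
running = lambda x, u: 0.5 * u**2 + 0.5 * x**2
terminal = lambda x: np.zeros_like(x)
sigma = 0.5
controls = np.linspace(-1.0, 1.0, 9)
x_grid = np.linspace(-2.0, 2.0, 81)
T = 1.0

value, policy, dt = markov_chain_dp(b, sigma, running, terminal, controls, x_grid, T)
```

The array `policy[k]` holds the minimizing control at time level `k` for every grid point, that is, a discrete Markovian feedback law.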

However, the common methodology to find an optimal controller of random processes consists in reformulating the problem from stochastic to deterministic. This is a reasonable approach when we consider the problem from a statistical point of view, with the purpose of finding the collective “ensemble” behaviour of the process. In fact, the average

The value of the cost functional before averaging measures the cost of a single trajectory of the process. However, knowledge of a single realization is not useful for statistical analysis, which would require determining the average, the variance, and other properties associated with the state of the stochastic process.

On the other hand, a stochastic process is completely characterized by its law which, in many cases, can be represented by the probability density function (PDF). Therefore, a control methodology that employs the PDF would provide an accurate and flexible control strategy that could accommodate a wide class of objectives. For this reason, PDF control schemes were proposed in [11] - [14], where the cost functional depends, possibly nonlinearly, on the PDF of the stochastic state variable; see these references for specific applications.

The important step in the Fokker-Planck control framework proposed in [4] [5] is to recognize that the evolution of the PDF associated with the stochastic process (1.1) is characterized as the solution of the Fokker-Planck (also known as forward Kolmogorov) equation; see, e.g., [15] [16]. This is a partial differential equation of parabolic type with Cauchy data given by the initial PDF. Therefore, the formulation of objectives in terms of the PDF and the use of the Fokker-Planck equation provide a consistent framework to formulate an optimal control strategy of stochastic processes.
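As a rough illustration of this forward problem, the sketch below evolves a one-dimensional PDF under $\partial_t f + \partial_x(b f) = \tfrac{1}{2}\sigma^2 \partial_{xx} f$ with a conservative explicit upwind scheme and zero-flux boundaries. The scheme, the function name `solve_fokker_planck`, the feedback drift $-2x$ (a control law already inserted into the drift) and all parameter values are assumptions made for this example; this is not the discretization used in [4] [5].

```python
import numpy as np

def solve_fokker_planck(f0, drift, sigma, x, T):
    """Explicit conservative scheme for f_t + (b f)_x = 0.5 sigma^2 f_xx (1D sketch)."""
    h = x[1] - x[0]
    b_face = drift(0.5 * (x[1:] + x[:-1]))          # drift at interior cell faces
    # Time-step bound that keeps the explicit upwind update non-negative.
    dt_max = 0.9 * h**2 / (sigma**2 + 2.0 * h * np.max(np.abs(b_face)))
    n_steps = int(np.ceil(T / dt_max))
    dt = T / n_steps
    f = np.array(f0, dtype=float)
    for _ in range(n_steps):
        # Upwind advective flux minus central diffusive flux at interior faces.
        adv = np.where(b_face > 0.0, b_face * f[:-1], b_face * f[1:])
        diff = 0.5 * sigma**2 * (f[1:] - f[:-1]) / h
        flux = np.concatenate(([0.0], adv - diff, [0.0]))   # zero flux at both ends
        f = f - dt / h * (flux[1:] - flux[:-1])
    return f

# Hypothetical data: narrow Gaussian initial PDF centred at x = 1.
x = np.linspace(-3.0, 3.0, 301)
h = x[1] - x[0]
f0 = np.exp(-0.5 * ((x - 1.0) / 0.1) ** 2)
f0 /= f0.sum() * h                                   # normalise to unit mass

f_T = solve_fokker_planck(f0, lambda y: -2.0 * y, 0.5, x, 1.0)
mass_T = f_T.sum() * h
mean_T = (x * f_T).sum() * h
print("mass and mean at T:", mass_T, mean_T)
```

Because the update is written in flux form, the total probability mass is preserved up to rounding, which is the discrete counterpart of the conservation property of the FP equation.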

In this paper, we discuss the relationship between the HJB and the FP frameworks. We show that the FP control strategy provides the same optimal control as the HJB method for an appropriate choice of the objectives. Specifically, this is the case for objectives that are formulated as expected cost functionals and assuming that both the HJB equation and the FP equation admit a unique classical solution. The latter assumption is motivated by the purpose of this work to show the connection between the HJB and FP frameworks, without aiming at finding the most general setting, e.g. for viscosity solution of the HJB equation [9] [17] [18] or FP equation with irregular coefficients [19] , where this connection holds. Furthermore, we remark that the FP approach allows accommodating any desired functional of the stochastic state and its density, that is now represented by the PDF associated to the controlled stochastic process.

In the next section, we illustrate the HJB framework. In Section 3, we discuss the FP method. Section 4 is devoted to specific illustrative examples. A section of conclusions completes this paper.

2. The HJB framework

We consider the optimal control of the state

The control function

We define the expected cost for the admissible controls

which is an expectation conditional to the process

are smooth and bounded. We call

control

We assume that this control is unique. Further, we define the following value function, also known as the cost to go function,

It is well known [2] [3] that

In the following, to ease notation we use the Einstein summation convention: when an index variable appears twice in a single term, a summation over all possible values of the index takes place. For example, we have that

variable

Theorem 1. Assume that

with the Hamiltonian function

and

Notice that the optimal control satisfies at each time

Existence and uniqueness of solutions to the HJB equation often involve the concept of uniform parabolicity; see [3] [18] [20] . The HJB equation is called uniformly parabolic [3] if there exists a constant

where

If the non-degeneracy condition holds, results from the theory of PDEs of parabolic type imply existence and uniqueness of solutions to the HJB problem (1.7) with the properties required in the Verification Theorem [3] . In particular, we have the following theorem due to Krylov.

Theorem 2. If the non-degeneracy assumption holds, and in addition we have that
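For orientation, the objects named in this section take the following standard form in the setting of [3]. This is a sketch under assumed notation ($L$ running cost, $\Psi$ terminal cost, $v$ value function, $a = \sigma\sigma^{\top}$, $c$ the parabolicity constant) and is not a verbatim restatement of equations (1.4)-(1.9).

```latex
% Assumed notation: L running cost, \Psi terminal cost, a = \sigma\sigma^{T}.
% Repeated indices i, j are summed (Einstein convention).
\begin{align*}
  C_{t,x}(u) &= \mathbb{E}\Big[ \int_t^T L(X_s, s, u(X_s, s))\,ds + \Psi(X_T) \,\Big|\, X_t = x \Big], \\
  v(x,t) &= \min_{u \in \mathcal{U}} C_{t,x}(u), \\
  \partial_t v + H(x, t, Dv, D^2 v) &= 0, \qquad v(x,T) = \Psi(x), \\
  H(x, t, Dv, D^2 v) &= \min_{w \in U} \Big[ L(x,t,w) + b_i(x,t;w)\,\partial_{x_i} v
      + \tfrac{1}{2}\, a_{ij}(x,t)\,\partial^2_{x_i x_j} v \Big], \\
  a_{ij}(x,t)\,\xi_i \xi_j &\ge c\,|\xi|^2 \quad \text{for all } \xi \in \mathbb{R}^n, \quad c > 0 .
\end{align*}
```

The last line is the uniform parabolicity (non-degeneracy) condition; it holds, for instance, when the dispersion matrix has full rank with singular values bounded away from zero.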

3. The Fokker-Planck formulation

In this section, we discuss an alternative to the HJB approach that is based on the formulation of a Fokker-Planck optimal control problem. We suppose that the functions

The time evolution of the PDF

Also in this case, the FP problem can be defined in a bounded or unbounded set in

Now, we consider a cost functional

It is important to recognize that if

that gives (1.4) in terms of the probability measure,

To characterize the optimal solution to (1.11) where the cost functional (1.4) is considered, we introduce the Lagrange functional [22] .

where the function

We have that the optimal control solution is characterized as the solution to the following optimality system

where

Now, we illustrate the equivalence between the HJB and the FP formulation. The key point is to notice that (1.16) corresponds to the first-order necessary optimality condition (1.9) for the minimization of the Hamiltonian in the HJB formulation, once we identify the Lagrange multiplier

Notice that with the above setting, the optimal control

We also note that the solution to the adjoint FP equation (1.15) and to the optimality condition equation for the control function (1.16) do not depend on the initial condition
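The identification described in this section can be sketched as follows. The notation and sign conventions are assumptions ($f$ the PDF, $p$ the Lagrange multiplier, $L$ and $\Psi$ running and terminal costs, $a = \sigma\sigma^{\top}$, unconstrained controls, and sufficient decay of $f$ at infinity so that boundary terms vanish); they may differ from those behind equations (1.10)-(1.16).

```latex
% Assumed sign convention: the FP constraint is subtracted in the Lagrangian,
% so that the multiplier p can be identified with the value function.
\begin{align*}
  &\text{FP constraint:} &&
    \partial_t f - \tfrac{1}{2}\,\partial^2_{x_i x_j}(a_{ij} f) + \partial_{x_i}\big(b_i(x,t;u)\, f\big) = 0,
    \qquad f(x,t_0) = f_0(x), \\
  &\text{cost:} &&
    J(f,u) = \int_{t_0}^{T}\!\!\int_{\mathbb{R}^n} L(x,t,u)\, f \,dx\,dt
           + \int_{\mathbb{R}^n} \Psi(x)\, f(x,T)\,dx, \\
  &\text{Lagrangian:} &&
    \mathcal{L}(f,u,p) = J(f,u) - \int_{t_0}^{T}\!\!\int_{\mathbb{R}^n}
      p\,\Big[ \partial_t f - \tfrac{1}{2}\,\partial^2_{x_i x_j}(a_{ij} f) + \partial_{x_i}(b_i f) \Big] dx\,dt, \\
  &\text{adjoint } (\delta_f \mathcal{L} = 0): &&
    \partial_t p + \tfrac{1}{2}\, a_{ij}\,\partial^2_{x_i x_j} p + b_i\,\partial_{x_i} p + L = 0,
    \qquad p(x,T) = \Psi(x), \\
  &\text{optimality } (\delta_u \mathcal{L} = 0): &&
    f\,\Big( \partial_{u_k} L + \partial_{u_k} b_i\,\partial_{x_i} p \Big) = 0, \qquad k = 1,\dots,d .
\end{align*}
```

Wherever $f > 0$, the optimality condition is the stationarity condition of the expression $L + b_i\,\partial_{x_i} p + \tfrac{1}{2} a_{ij}\,\partial^2_{x_i x_j} p$ with respect to the control, and the adjoint equation evaluated at the minimizing control is a backward equation of HJB type for $p$. Neither of the two involves the initial density $f_0$.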

4. Illustrative examples

In this section, we consider two examples which illustrate that the FP optimal control formulation may provide the same control strategy as the HJB method. The first example refers to an Itō stochastic process, while the second example considers a piecewise deterministic process.

4.1. Controlled Itō stochastic process

We consider an optimal transport problem that is related to a model for mean-field games; see, e.g., [23] . It reflects the congestion situation, where the behaviour of the crowd depends on the form of the attractive strongly convex potential

where the velocity

where the PDF

The purpose of the optimal control is to determine a drift of minimal kinetic energy that moves a mass distribution from an initial location to a final destination. The corresponding objective is as follows [24]

In this functional, the kinetic energy term is augmented with the term

attractive potential of the final destination. It can be interpreted as the requirement that the crowd aims at reaching the region of low potential

The corresponding adjoint equation is given by the following backward evolution equation

and the optimality condition is given by

It is immediate to see that combining the adjoint equation and the optimality condition, we obtain the following HJB problem

4.2. Controlled piecewise deterministic process

Our second example refers to a class of piecewise deterministic processes (PDP). A first general formulation of these systems that switch randomly within a certain number of deterministic discrete states at random times is given in [25] . Specifically, we deal with a PDP model described by a state function that is continuous in time and is driven by a discrete S-state renewal Markov process denoted with

[28] . The state function

a) The state function satisfies the following equation

where

states

driven by the dynamics function

that all

this assumptions for fixed

reachable set of trajectories is a closed bounded subset of

b) The state function satisfies the initial condition

c) The process

for each state

When a transition event occurs, the PDP system switches instantaneously from a state

We have that the time evolution of the PDFs of the states of our PDP model is governed by the following Fokker-Planck system [26]

where

have

The initial conditions for the solution of the FP system are given as follows

Next, we consider an objective similar to (1.18) for all states of the system. We have

This objective corresponds to the expected functional (1.4) on the space

Now, consider the FP optimal control problem of finding

where (1.29)-(1.30) is the adjoint problem and (1.31) represents the optimality condition.

On the other hand, the HJB optimal control of our PDP model is considered in [29] , where the

Furthermore, in [29] it is proved that the corresponding HJB problem

admits a unique viscosity solution that is also the classical solution to the adjoint FP equation (1.29). Hence, also in this case the HJB formulation with (1.32) and (1.33) corresponds to the FP approach with (1.29) and (1.31), as much as the cost functions

5. Conclusion

In this paper, the connection between the Hamilton-Jacobi-Bellman dynamic programming scheme and a recently proposed Fokker-Planck control framework was discussed. It was shown that the two control strategies are equivalent in the case of mean cost functionals. To illustrate the connection between the two control strategies, the cases of an Itō stochastic process and of a piecewise-deterministic process were considered.

Acknowledgements

We would like to thank the Mathematisches Forschungsinstitut Oberwolfach for the kind hospitality and for inspiring this work.

M. Annunziato gratefully acknowledges the support of the European Science Foundation Exchange OPTPDE Grant.

A. Borzì acknowledges the support of the European Union Marie Curie Research Training Network “Multi-ITN STRIKE-Novel Methods in Computational Finance”.

R. Tempone is a member of the KAUST SRI Center for Uncertainty Quantification in Computational Science and Engineering.

F. Nobile acknowledges the support of CADMOS (Center for Advances Modeling and Science).

Funding

Supported in part by the European Union under Grant Agreement “Multi-ITN STRIKE-Novel Methods in Computational Finance”. Fund Project No. 304617 Marie Curie Research Training Network.

References

- Gihman, I.I. and Skorohod, A.V. (1972) Stochastic Differential Equations. Springer-Verlag, New York. http://dx.doi.org/10.1007/978-3-642-88264-7

- Bertsekas, D. (2007) Dynamic Programming and Optimal Control, Vols. I and II. Athena Scientific.

- Fleming, W.H. and Soner, H.M. (2006) Controlled Markov Processes and Viscosity Solutions. Springer-Verlag, Berlin.

- Annunziato, M. and Borzì, A. (2010) Optimal Control of Probability Density Functions of Stochastic Processes. Mathematical Modelling and Analysis, 15, 393-407. http://dx.doi.org/10.3846/1392-6292.2010.15.393-407

- Annunziato, M. and Borzì, A. (2013) A Fokker-Planck Control Framework for Multidimensional Stochastic Processes. Journal of Computational and Applied Mathematics, 237, 487-507. http://dx.doi.org/10.1016/j.cam.2012.06.019

- Cox, D.R. and Miller, H.D. (2001) The Theory of Stochastic Processes. Chapman & Hall/CRC, London.

- Fleming, W.H. and Rishel, R.W. (1975) Deterministic and Stochastic Optimal Control. Springer-Verlag, Berlin. http://dx.doi.org/10.1007/978-1-4612-6380-7

- Borkar, V.S. (2005) Controlled Diffusion Processes. Probability Surveys, 2, 213-244. http://dx.doi.org/10.1214/154957805100000131

- Falcone, M. (2008) Numerical Solution of Dynamic Programming Equations. In: Bardi, M. and Dolcetta, I.C., Eds., Optimal Control and Viscosity Solutions of Hamilton-Jacobi-Bellman Equations, Birkhäuser.

- Kushner, H.J. (1990) Numerical Methods for Stochastic Control Problems in Continuous Time. SIAM Journal on Control and Optimization, 28, 999-1048. http://dx.doi.org/10.1137/0328056

- Forbes, M.G., Guay, M. and Forbes, J.F. (2004) Control Design for First-Order Processes: Shaping the Probability Density of the Process State. Journal of Process Control, 14, 399-410. http://dx.doi.org/10.1016/j.jprocont.2003.07.002

- Jumarie, G. (1992) Tracking Control of Nonlinear Stochastic Systems by Using Path Cross-Entropy and Fokker-Planck Equation. International Journal of Systems Science, 23, 1101-1114. http://dx.doi.org/10.1080/00207729208949368

- Kárný, M. (1996) Towards Fully Probabilistic Control Design. Automatica, 32, 1719-1722. http://dx.doi.org/10.1016/S0005-1098(96)80009-4

- Wang, H. (1999) Robust Control of the Output Probability Density Functions for Multivariable Stochastic Systems with Guaranteed Stability. IEEE Transactions on Automatic Control, 44, 2103-2107. http://dx.doi.org/10.1109/9.802925

- Primak, S., Kontorovich, V. and Lyandres, V. (2004) Stochastic Methods and Their Applications to Communications. John Wiley & Sons, Chichester.

- Risken, H. (1996) The Fokker-Planck Equation: Methods of Solution and Applications. Springer, Berlin.

- Lions, J.L. (1983) On the Hamilton-Jacobi-Bellman Equations. Acta Applicandae Mathematica, 1, 17-41. http://dx.doi.org/10.1007/BF02433840

- Crandall, M., Ishii, H. and Lions, P.L. (1992) User’s Guide to Viscosity Solutions of Second Order Partial Differential Equations. Bulletin of the American Mathematical Society, 27, 1-67. http://dx.doi.org/10.1090/S0273-0979-1992-00266-5

- Le Bris, C. and Lions, P.L. (2008) Existence and Uniqueness of Solutions to Fokker-Planck Type Equations with Irregular Coefficients. Communications in Partial Differential Equations, 33, 1272-1317.

- Aronson, D.G. (1968) Non-Negative Solutions of Linear Parabolic Equations. Annali della Scuola Normale Superiore di Pisa. Classe di Scienze, 22, 607-694.

- Bogachev, V., Da Prato, G. and Röckner, M. (2010) Existence and Uniqueness of Solutions for Fokker-Planck Equations on Hilbert Spaces. Journal of Evolution Equations, 10, 487-509. http://dx.doi.org/10.1007/s00028-010-0058-y

- Lions, J.L. (1971) Optimal Control of Systems Governed by Partial Differential Equations. Springer, Berlin.

- Lachapelle, A., Salomon, J. and Turinici, G. (2010) Computation of Mean Field Equilibria in Economics. Mathematical Models and Methods in Applied Sciences, 20, 567-588. http://dx.doi.org/10.1142/S0218202510004349

- Carlier, G. and Salomon, J. (2008) A Monotonic Algorithm for the Optimal Control of the Fokker-Planck Equation. IEEE Conference on Decision and Control, CDC 2008, Cancun, 9-11 December 2008, 269-273.

- Davis, M.H.A. (1984) Piecewise-Deterministic Markov Processes: A General Class of Non-Diffusion Stochastic Models. Journal of the Royal Statistical Society. Series B (Methodological), 46, 353-388.

- Annunziato, M. (2008) Analysis of Upwind Method for Piecewise Deterministic Markov Processes. Computational Methods in Applied Mathematics, 8, 3-20.

- Capuzzo Dolcetta, I. and Evans, L.C. (1984) Optimal Switching for Ordinary Differential Equations. SIAM Journal on Control and Optimization, 22, 143-161. http://dx.doi.org/10.1137/0322011

- Annunziato, M. and Borzì, A. (2014) Optimal Control of a Class of Piecewise Deterministic Processes. European Journal of Applied Mathematics, 25, 1-25. http://dx.doi.org/10.1017/S0956792513000259

- Moresino, F., Pourtallier, O. and Tidball, M. (1988) Using Viscosity Solution for Approximations in Piecewise Deterministic Control Systems. Report RR-3687-HAL-INRIA, Sophia-Antipolis.