World Journal of Neuroscience

Vol. 3 No. 4 (2013), Article ID: 37035, 6 pages, DOI: 10.4236/wjns.2013.34031

Implications of rare neurological disorders and perceptual errors in natural and synthetic consciousness


Medical Research Division, New Terra Enterprises, Los Angeles, USA

Email: allend.allen@yahoo.com

Copyright © 2013 Allen D. Allen. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received 27 August 2013; revised 12 September 2013; accepted 18 September 2013

Keywords: Human Brain; Consciousness; Artificial Consciousness; Clinical Neurology; Fractal Cortex; Perceptual Errors; Neurological Time; Emotional Precognition; Artificial Sociopath; Quantum Mechanical Measurement

ABSTRACT

Recent theories on natural and synthetic consciousness overlook the geometric structure necessary for awareness of 3-dimensional space, as strikingly illustrated by left-neglect disorder. Furthermore, awareness of 3-dimensional space entails some surprisingly tenacious optical illusions, as demonstrated by an experiment in the text. Awareness of linear time is also crucial and complex. As a consequence, synthetic consciousness cannot be realized by simply interconnecting a large number of electronic circuits constructed from ordinary chips and transistors. Since consciousness is a subjective experience, there is no sufficient condition for consciousness that can be experimentally confirmed. The most we can hope for is agreement on the necessary conditions for consciousness. Toward that end, this paper reviews some relevant clinical phenomena.

1. INTRODUCTION

Advances in functional magnetic resonance imaging (fMRI) have made it possible to observe the reaction of the human brain to a given stimulus during both waking consciousness and deep unconsciousness, e.g., unconsciousness induced by the administration of propofol [1-11]. During waking consciousness, but not otherwise, a stimulus generates integrated global activity across specialized regions of the brain. This has led to the conjecture that such an information-processing methodology may be a sufficient condition for consciousness, which would admit the possibility of synthetic or non-biologic consciousness. However, this theory is contradicted by rare neurological disorders that provide empirical evidence as to which conditions are, and are not, necessary for consciousness. The oversight is no doubt due, at least in part, to the fact that only a small number of clinical neurologists have ever seen the disorders in question. There is also a bias toward the view that only large controlled studies provide reliable information. While this is true for clinical trials of therapeutic and diagnostic methods, the first law of information theory tells us that rare events are precisely the ones that provide the most information, since the information carried by an event increases as its probability decreases [12,13].
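The information-theoretic point can be made concrete with Shannon self-information (surprisal), I(x) = -log2 p(x), measured in bits: the smaller the probability of an observation, the more information it carries. The following minimal Python sketch assumes hypothetical probabilities chosen only to show the trend; they are not prevalence figures for any disorder, and the function name is illustrative.

```python
import math


def self_information_bits(p: float) -> float:
    """Shannon self-information (surprisal) of an event with probability p, in bits."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


# Hypothetical probabilities, chosen only to illustrate the trend.
common_event = 0.5        # e.g., a fair coin landing heads
rare_event = 1 / 100_000  # a hypothetical rare clinical observation

print(self_information_bits(common_event))           # 1.0
print(round(self_information_bits(rare_event), 2))   # 16.61
```

Observing the rare event conveys roughly sixteen times as much information as observing the common one, which is the sense in which a single rare case can outweigh many routine ones.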

Since consciousness is a subjective experience, there is no sufficient condition for consciousness that can be experimentally tested. On the contrary, the mimicking of conscious behavior by an artificial brain would only tempt us to assume that it is experiencing consciousness based upon, say, an analog of the Glasgow Coma Score [14,15], when there is no scientific basis for this assumption. The most we can hope to achieve is agreement on the characteristics of the human brain that are necessary for consciousness. We could then assume that an artificial brain satisfying all of these criteria would be capable of consciousness under the general rule that like causes produce like effects. Toward that end, the present paper reviews the implications of some real clinical phenomena as a guide to recognizing the necessary conditions for consciousness.

2. AWARENESS OF 3-DIMENSIONAL SPACE

2.1. Cerebral Geometry

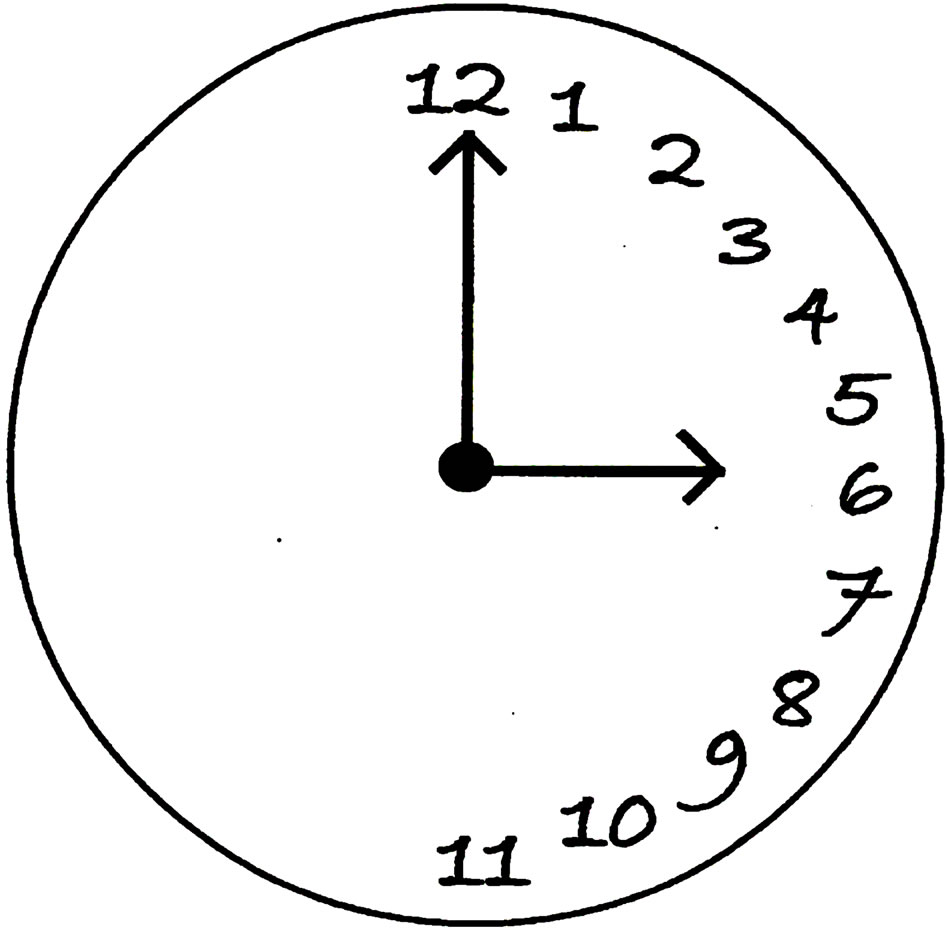

Figure 1. Wall clock drawn by a patient with left-neglect disorder who was asked to draw a wall clock without any particular time being specified. These patients cannot conceive of leftness.

The rare condition known as left-neglect disorder is caused by a lesion in the right hemisphere of the brain [16-18]. Patients with this condition cannot perceive anything to the left of a reference point, including, but not limited to, the left side of their own bodies. Indeed, they cannot even conceive of leftness (see Figure 1). This implies that the anatomy of the cerebral cortex must be intact in order for a person to be aware of 3-dimensional space. Moreover, the anatomy of the human brain contains fractal structures [19]. This is to be expected, since the cerebral cortex packs a large surface into a small volume by being convoluted. Hence, it is unlikely that synthetic consciousness can be achieved by simply interconnecting a large number of chips and transistors. Rather, realizing the geometric structure needed for consciousness suggests a need to grow biologic materials. This raises a question as to just how “synthetic” a synthetic brain could be.
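As a rough geometric analogy for the space-packing argument above, the Koch curve, a textbook fractal, shows how repeated folding can pack ever more length into a bounded region: each refinement replaces every segment with four segments one third as long, multiplying the total length by 4/3 while the curve stays within the same bounded region. The sketch below is a toy illustration under that assumption, not a model of cortical anatomy; the function name is hypothetical.

```python
import math


def koch_length(initial_length: float, iterations: int) -> float:
    """Length of a Koch curve after a given number of refinement steps.

    Each step replaces every segment with 4 segments, each 1/3 as long,
    so the total length is multiplied by 4/3 per step.
    """
    if iterations < 0:
        raise ValueError("iterations must be non-negative")
    return initial_length * (4 / 3) ** iterations


# Similarity dimension: N = 4 self-similar pieces, each scaled by r = 1/3.
koch_dimension = math.log(4) / math.log(3)

for n in (0, 1, 5, 10):
    # The endpoints and bounding region never change; only the length grows.
    print(n, round(koch_length(1.0, n), 3))

print(round(koch_dimension, 4))  # 1.2619
```

Because the length grows without bound while the extent stays fixed, the curve's dimension lies between 1 and 2, which is the sense in which a convoluted sheet can pack "more surface" than its enclosing volume would suggest.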

2.2. Optical Illusions

Information processing by the human brain is not intended to be accurate, nor is it accurate. Rather, the human brain evolved to be biologically adaptive. As a consequence, waking consciousness necessarily includes such errors as optical illusions [20-23], pareidolia [24-26], change blindness [27-32] and plastic (malleable) memories [33-36]. Indeed, even blind people experience optical illusions [23]. Some surprisingly tenacious optical illusions arise as part of an awareness of 3-dimensional space. This is illustrated below by a simple, do-it-yourself experiment well known to psychologists. Because there is a big difference between learning something by reading about it and actually experiencing it, the reader is encouraged to take a few minutes to conduct the experiment below.

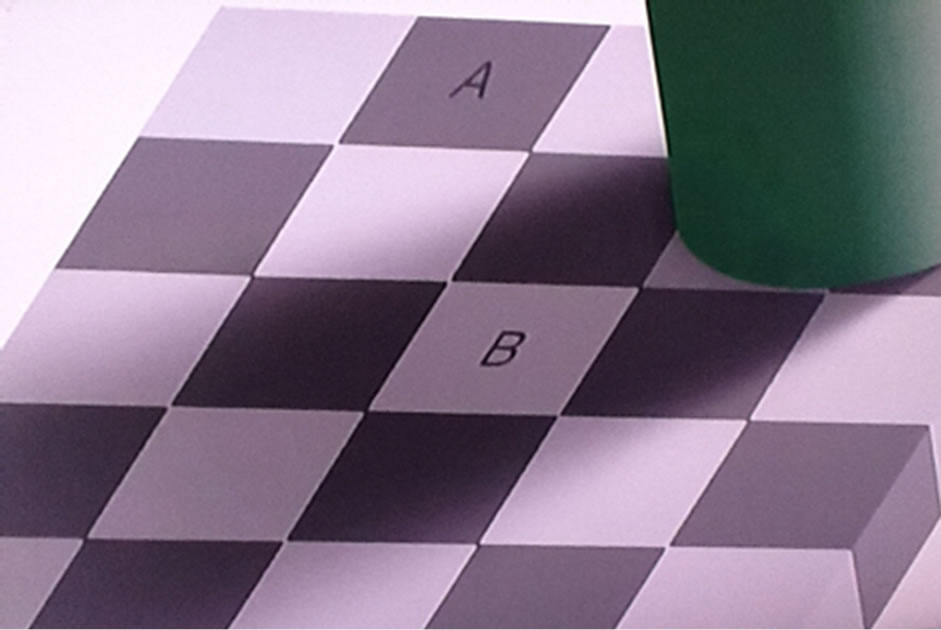

Figure 2. An illustration of the tenacity and purpose of optical illusions.

In looking at Figure 2, it seems obvious that the square labeled A is much darker than the square labeled B. Now conduct the following experiment.

1) Print two copies of the page containing Figure 2.

2) Use one copy to cut out the two squares labeled A and B.

3) Place the cutout squares labeled A and B next to each other. It will be seen that, despite appearances that seem certain, they are exactly the same color.

4) Place the cutout square labeled B over the square labeled A in Figure 2 on the intact page. When you do so, the cutout square labeled B will suddenly become much darker.

Even after you have learned that the two labeled squares are the same color, and that moving square B to the position of square A makes it appear much darker, you will not be able to see Figure 2 with the correct colors. The brain changes what you are seeing so that you can navigate through 3-dimensional space, using shadows to determine the shapes and locations of objects.
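Figure 2 is not reproduced in code here, so the sketch below swaps in a simpler, related stimulus (simultaneous brightness contrast): two patches with exactly the same gray level placed on a dark and a light surround. Sampling the pixel values is the numerical counterpart of step 3, cutting the squares out and comparing them directly. The image dimensions, gray levels, and function names are assumptions made for illustration.

```python
def make_contrast_stimulus(size=9, patch=3, target_gray=128,
                           left_surround=40, right_surround=215):
    """Build a hypothetical two-panel grayscale image (0 = black, 255 = white).

    Left panel: dark surround. Right panel: light surround.
    Both panels contain a centred square patch with exactly the same gray level.
    """
    def panel(surround):
        rows = [[surround] * size for _ in range(size)]
        start = (size - patch) // 2
        for r in range(start, start + patch):
            for c in range(start, start + patch):
                rows[r][c] = target_gray
        return rows

    left, right = panel(left_surround), panel(right_surround)
    # Join the two panels side by side, row by row.
    return [l_row + r_row for l_row, r_row in zip(left, right)]


def patch_mean(image, top, left, patch):
    """Average gray level of a square region, i.e. 'cutting out' that square."""
    values = [image[r][c]
              for r in range(top, top + patch)
              for c in range(left, left + patch)]
    return sum(values) / len(values)


SIZE, PATCH = 9, 3
img = make_contrast_stimulus(SIZE, PATCH)
start = (SIZE - PATCH) // 2
left_mean = patch_mean(img, start, start, PATCH)          # patch on dark surround
right_mean = patch_mean(img, start, SIZE + start, PATCH)  # patch on light surround
print(left_mean, right_mean)  # 128.0 128.0
```

Rendered on screen, the patch on the dark surround typically looks lighter than the one on the light surround, even though the program confirms that their values are identical.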

3. AWARENESS OF LINEAR TIME

3.1. Waking Consciousness

Orientation in linear time is limited to waking consciousness [37-40]. Indeed, when awake, a person is conscious only of the neurological present. From a neurologic standpoint, “nowness” can be defined as that which is perceived when a person is awake (although the physical definition is different). A person may remember the past, and can anticipate the future, for example by remembering that tomorrow is a holiday or that he has an appointment next week. But these recollections are not perceived in the same way that the present is perceived when a person is awake.

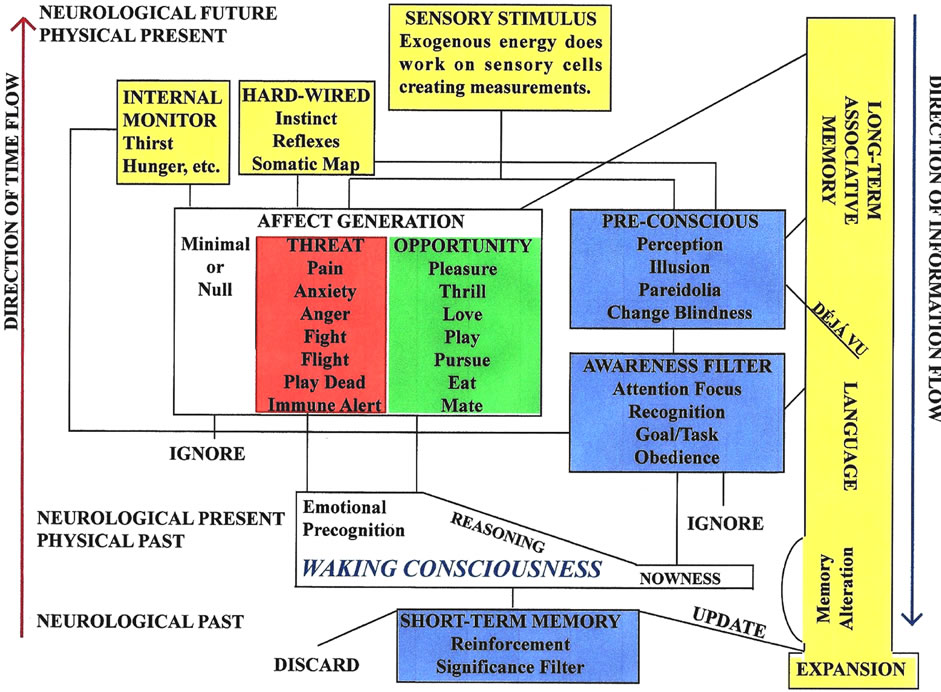

Figure 3 illustrates the flow of time in relation to the flow of exogenous and endogenous information in the fully awake human brain and is based upon a large body of interdisciplinary knowledge from psychology, psychiatry, and neurology.

Figure 3. Functional elements and pathways of the fully awake human brain based upon current knowledge and theories from the fields of psychology, psychiatry and neurology.

For the present purposes, the main point of Figure 3 concerns time. In keeping with the convention of physics, time advances in Figure 3 in the positive-y direction (up the vertical axis). It becomes immediately apparent that information flows in the opposite, or negative-y, direction (down the vertical axis). Note that Figure 3 necessarily uses some oblique pathways in order to fit into a 2-dimensional graphic. These oblique pathways have both a vertical and a horizontal component, and information flows down the vertical component. The horizontal axis represents parallel information processing across specialized regions of the brain.

It is also apparent from Figure 3 that neurological time is not the same as physical time. The physical present lies in the neurological future and the neurological present lies in the physical past. This refutes the conjecture that the consciousness of the observer causes the quantum mechanical wave function to collapse [41-48] since a measurement that creates a sensory stimulus predates conscious awareness of the stimulus. Indeed, as in change blindness [27-32], conscious awareness may never occur if there is no focus on the stimulus and it is discarded by the awareness filter as irrelevant. Since the human brain operates on a limited amount of power, efficiency is important and leaves no room for the processing of overwhelming amounts of information deemed irrelevant to the individual.
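The claim that the neurological present lies in the physical past can be illustrated with a toy serial pipeline. The sketch assumes fixed latencies for two stages loosely corresponding to pre-conscious processing and the awareness filter of Figure 3; the latency values, the `relevant` flag, and all names are hypothetical, not measured quantities.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical stage latencies in milliseconds: illustrative only, not measured values.
PRECONSCIOUS_MS = 150
FILTER_MS = 100


@dataclass
class Stimulus:
    label: str
    physical_time_ms: int  # when the measurement / sensory event occurs
    relevant: bool         # whether the (hypothetical) awareness filter passes it


def awareness_time(stim: Stimulus) -> Optional[int]:
    """Physical time at which a stimulus reaches awareness, or None if filtered out."""
    after_preconscious = stim.physical_time_ms + PRECONSCIOUS_MS
    if not stim.relevant:
        # Discarded as irrelevant: never becomes conscious (cf. change blindness).
        return None
    return after_preconscious + FILTER_MS


def neurological_present(physical_now_ms: int) -> int:
    """Physical time of the events a person is aware of at physical time `physical_now_ms`."""
    return physical_now_ms - (PRECONSCIOUS_MS + FILTER_MS)


events = [Stimulus("flash", 0, True), Stimulus("background change", 0, False)]
for e in events:
    print(e.label, awareness_time(e))
# flash 250
# background change None

print(neurological_present(1000))  # 750: the neurological present lies in the physical past
```

Whatever latencies are assumed, any positive total delay places the neurological present earlier than the physical present, and a stimulus rejected by the filter never reaches awareness at all.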

3.2. Emotional Precognition

Synchronization during information processing may occasionally be inexact. Déjà vu, for example, occurs when a perception formed in pre-conscious processing enters long-term memory before the awareness filter accesses long-term memory [49]. Under nominal conditions, the affective content of a stimulus will enter waking consciousness before awareness of the stimulus itself because the latter is first formed in pre-conscious processing and then evaluated by the awareness filter. This results in what may be called “emotional precognition”. An example is when human subjects are aware of the affect inherent in photographs of faces that are threatening, inviting, or neutral, before being aware of the photographs themselves [50,51].
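The timing argument for emotional precognition can be sketched the same way, as two routes of different length from the same stimulus onset. The sketch assumes, for illustration only, that the affective content takes a shorter route than the percept, which must pass through pre-conscious processing and the awareness filter; the stage names and latencies are hypothetical.

```python
# Hypothetical per-stage latencies in ms (illustrative assumptions only).
STAGE_MS = {
    "sensory_intake": 50,
    "affect_tagging": 30,
    "preconscious_processing": 150,
    "awareness_filter": 100,
}

# Assumed routes to waking consciousness, following the description in the text:
# the percept itself must pass through pre-conscious processing and the awareness
# filter, whereas its affective content is assumed to take a shorter route.
AFFECT_PATH = ["sensory_intake", "affect_tagging"]
PERCEPT_PATH = ["sensory_intake", "preconscious_processing", "awareness_filter"]


def arrival_ms(path, onset_ms=0):
    """Physical time at which content travelling along `path` reaches awareness."""
    return onset_ms + sum(STAGE_MS[stage] for stage in path)


affect = arrival_ms(AFFECT_PATH)
percept = arrival_ms(PERCEPT_PATH)
print(affect, percept, percept - affect)  # 80 300 220
```

Under these assumptions the affect reaches awareness before the percept that carries it; if the two routes were equally long, the effect would disappear.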

Pairing of emotional precognition with awareness of the stimulus itself may be learned from the repeated pairing of sequential events, much as associative memory is formed [52-54]. For example, even a prehistoric human with no scientific knowledge of thunderstorms would soon learn to expect a thunderclap some seconds after seeing a lightning flash. On the other hand, when events are rare, the pairing of the emotional precognition with the perception of the stimulus itself is implied by the low probability of having both in quick succession by chance: under the product rule for independent events, the joint probability is the product of the two individual probabilities, so such a coincidence carries a large amount of information, as per the first law of information theory [12,13].
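The product-rule step can be written out numerically. If two events are independent, the probability of both occurring in the same short window is P(A and B) = P(A)P(B), which is smaller than either probability alone; its self-information, -log2 P(A and B), is correspondingly large. The probabilities below are hypothetical placeholders, not estimates from the cited literature.

```python
import math


def joint_probability_independent(p_a: float, p_b: float) -> float:
    """Product rule: P(A and B) = P(A) * P(B) when A and B are independent."""
    return p_a * p_b


# Hypothetical probabilities that each event occurs within the same short window.
p_affect_surge = 0.01     # an unexplained emotional surge
p_rare_stimulus = 0.001   # a rare stimulus

p_both = joint_probability_independent(p_affect_surge, p_rare_stimulus)
print(p_both)                        # approximately 1e-05
print(round(-math.log2(p_both), 2))  # 16.61 bits of self-information
```

A coincidence this improbable under independence is what makes the pairing informative even when it is observed only once.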

4. NECESSARY AND SUFFICIENT CONDITIONS FOR CONSCIOUSNESS

As noted in the Introduction, consciousness is a subjective experience. As a result, there is no sufficient condition for consciousness that can be experimentally confirmed. The most we can hope to achieve is agreement on the characteristics of the human brain that are necessary to experience consciousness. Rare neurological disorders can rule out some capabilities of the human brain as necessary for consciousness. Remarkably, pain perception can be ruled out, since children born with congenital insensitivity to pain are surely conscious [55-59]. This suggests that the affect generated by threat detection may not be necessary for consciousness, just as thrill seekers with unusually low anxiety are no less conscious than others. However, congenital insensitivity to pain is biologically maladaptive. Children born with this defect will chew off their fingertips and gouge their eyes out if not carefully monitored. Hence, pre-conscious processing need not be adaptive for consciousness to be present. Nonetheless, pre-conscious perception must permit errors if the cognitive system is to operate in 3-dimensional space, as illustrated by the experiment using Figure 2 in Section 2.2 above.

Likewise, learning and expansion of long-term memory are not necessary for consciousness. Individuals who have suffered permanent brain damage that precludes forming new long-term memories are nonetheless conscious [60-62]. For that matter, so are individuals whose ability to experience emotion is impaired [63-65], as suggested above. However, some pre-existing long-term memory is necessary since, as shown in Figure 3, it is interrogated by pre-conscious processing and the awareness filter that is necessary to conserve limited energy and prevent the brain from being overwhelmed with information. In this case, however, fixed long-term memory would play the same role as the hard-wired module that provides instinct, reflexes, and a somatic map. In other words, it would be akin to a computer program.
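The elimination logic used in this section can be expressed as set intersection: a capability remains a candidate necessary condition only if every individual known to be conscious retains it, so one conscious individual who lacks it is enough to rule it out. In the minimal sketch below, the capability labels and case profiles are simplified, hypothetical encodings of the disorders discussed above (impaired emotion, for instance, is treated as absent); surviving the test does not prove that a capability is necessary.

```python
CAPABILITIES = frozenset({
    "pain_perception",
    "new_long_term_memory",
    "emotion",
    "existing_long_term_memory",
    "spatial_awareness",
})

# Hypothetical profiles of conscious individuals: each maps a condition to the
# capabilities that individual is assumed to retain (a simplification).
conscious_cases = {
    "congenital insensitivity to pain": CAPABILITIES - {"pain_perception"},
    "anterograde amnesia": CAPABILITIES - {"new_long_term_memory"},
    "impaired emotion": CAPABILITIES - {"emotion"},
}


def surviving_candidates(candidates, cases):
    """Return the capabilities retained by every conscious case.

    A capability missing from any conscious case is ruled out as necessary;
    the survivors are only candidates, not proven necessary conditions.
    """
    survivors = set(candidates)
    for retained in cases.values():
        survivors &= retained
    return survivors


remaining = surviving_candidates(CAPABILITIES, conscious_cases)
print(sorted(remaining))                 # ['existing_long_term_memory', 'spatial_awareness']
print(sorted(CAPABILITIES - remaining))  # ['emotion', 'new_long_term_memory', 'pain_perception']
```

Adding another well-documented conscious case can only shrink the surviving set, which mirrors how each rare disorder narrows the list of candidate necessary conditions.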

Unfortunately, this all means that an artificial sociopath would be the easiest form of synthetic consciousness to achieve, since the considerable burden of reproducing human emotion and learning would be absent. In other words, a synthetic, non-learning brain that is unfeeling, as well as amoral and anti-social [64], would be the simplest form of artificial consciousness to achieve. Clearly, this outcome is to be avoided in any serious effort to realize artificial consciousness, even though doing so would make that goal still more daunting.

5. CONCLUSIONS

The conclusions of the present investigation and review can be enumerated as follows:

1) In addition to functional features, the sentient brain depends upon structure and architecture, such as fractal geometry and the convolutions of the cerebral cortex, in order to be aware of 3-dimensional space, as illustrated by left-neglect disorder. This raises a question as to how “synthetic” a synthetic sentient brain could be.

2) Time is crucial to waking consciousness.

a) Time flows in the direction opposite to that of information flow.

b) Neurological time is distinct from physical time. The neurological present lies in the physical past. The physical present lies in the neurological future.

c) This refutes the conjecture that the consciousness of the observer causes the quantum-mechanical wave function to collapse because a measurement resulting in a sensory stimulus predates conscious awareness of the stimulus.

d) The neurological present is the only thing a person can perceive while awake.

e) The affective content of a stimulus arrives in the neurological present before the stimulus itself, thus giving rise to emotional precognition. This is because the stimulus itself is first processed by pre-conscious perception and an awareness filter before it reaches awareness.

f) Pre-conscious perceptions are prone to errors because they need to be adaptive if a sentient system is to operate in a 3-dimensional environment.

3) Because consciousness is a subjective experience, there is no sufficient condition for consciousness that can be experimentally tested.

a) However, we can elucidate necessary conditions for consciousness.

b) Certain rare neurological disorders that occur in conscious individuals eliminate certain mental capabilities as necessary for consciousness. Capabilities not necessary for consciousness include, without limitation:

i) the ability to perceive pain, and

ii) the ability to form new long-term memories.

c) Since an unfeeling, amoral and non-learning brain may be sentient, it would be easier to engineer this type of synthetic brain. However, the result would be an artificial sociopath. Hence, such an effort should be avoided.

REFERENCES

- Tononi, G. (2012) Phi: A voyage from the brain to the soul. Random House, New York.

- Seth, A.K., Izhikevich, E., Reeke, G.N. and Edelman, G.M. (2006) Theories and measures of consciousness: An extended framework. Proceedings of the National Academy of Sciences, 103, 10799-10804. http://dx.doi.org/10.1073/pnas.0604347103

- Seth, A.K., Dienes, Z., Cleeremans, A., Overgaard, M. and Pessoa, L. (2008) Measuring consciousness: Relating behavioural and neurophysiological approaches. Trends in Cognitive Sciences, 12, 314-321. http://dx.doi.org/10.1016/j.tics.2008.04.008

- Seth, A.K., Baars, B.J. and Edelman, D.B. (2005) Criteria for consciousness in humans and other mammals. Consciousness and Cognition, 14, 119-139. http://dx.doi.org/10.1016/j.concog.2004.08.006

- Seth, A.K. (2009) Explanatory correlates of consciousness: Theoretical and computational challenges. Cognitive Computation, 1, 50-63. http://dx.doi.org/10.1007/s12559-009-9007-x

- Edelman, D.B. and Seth, A.K. (2009) Animal consciousness: A synthetic approach. Trends in Neurosciences, 32, 476-484. http://dx.doi.org/10.1016/j.tins.2009.05.008

- Seth, A.K. and Baars, B.J. (2005) Neural Darwinism and consciousness. Consciousness and Cognition, 14, 140-168. http://dx.doi.org/10.1016/j.concog.2004.08.008

- Seth, A.K. (2010) The grand challenge of consciousness. Frontiers in Psychology, 1, 5. http://dx.doi.org/10.3389/fpsyg.2010.00005

- Boly, M., Moran, R., Murphy, M., Boveroux, P., Bruno, M.-A., Noirhomme, Q., Ledoux, D., Bonhomme, V., Brichant, J.-F., Tononi, G., Laureys, S. and Friston, K. (2012) Connectivity changes underlying spectral EEG changes during propofol-induced loss of consciousness. The Journal of Neuroscience, 32, 7082-7090. http://dx.doi.org/10.1523/JNEUROSCI.3769-11.2012

- Tononi, G. (2004) An information integration theory of consciousness. BMC Neuroscience, 5, 42. http://dx.doi.org/10.1186/1471-2202-5-42

- Tononi, G. and Laureys, S. (2009) The neurology of consciousness: An overview. In: Laureys, S. and Tononi, G., Eds., The Neurology of Consciousness, Academic Press, London, 375-412. http://dx.doi.org/10.1016/B978-0-12-374168-4.00028-9

- Shannon, C.E. and Weaver, W. (1998) The mathematical theory of communication. University of Illinois Press, Urbana-Champaign.

- Yeung, R.W. (2002) A first course in information theory. Kluwer Academic/Plenum Publishers, New York.

- Jones, C. (1979) Glasgow coma scale. American Journal of Nursing, 79, 1551-1557.

- Teasdale, G.M. and Murray, L. (2000) Revisiting the Glasgow coma scale and coma score. Intensive Care Medicine, 26, 153-154. http://dx.doi.org/10.1007/s001340050037

- Driver, J. and Halligan, P.W. (1991) Can visual neglect operate in object-centred coordinates? An affirmative single-case study. Cognitive Neuropsychology, 8, 465. http://dx.doi.org/10.1080/02643299108253384

- Farah, M.J., Brunn, J.L., Wong, A.B., Wallace, M.A. and Carpenter, P.A. (1990) Frames of reference for allocating attention to space: Evidence from the neglect syndrome. Neuropsychologia, 28, 335-347.

- Calvanio, R., Petrone, P.N. and Levine, D.N. (1987) Left visual spatial neglect is both environment-centered and body-centered. Neurology, 37, 1179. http://dx.doi.org/10.1212/WNL.37.7.1179

- Bassett, D.S., Meyer-Lindenberg, A., Achard, S., Duke, T. and Bullmore, E. (2006) Adaptive reconfiguration of fractal small-world human brain functional networks. Proceedings of the National Academy of Sciences, 103, 19518-19523. http://dx.doi.org/10.1073/pnas.0606005103

- Motokawa, K. (1950) Field of retinal induction and optical illusion. Journal of Neurophysiology, 13, 413-426.

- Oyama, T. (1960) Japanese studies on the so-called geometrical-optical illusions. Psychologia, 3, 7-20.

- Glover, S. and Dixon, P. (2001) Motor adaptation to an optical illusion. Experimental Brain Research, 137, 254-258. http://dx.doi.org/10.1007/s002210000651

- Bean, C.H. (1938) The blind have “optical illusions”. Journal of Experimental Psychology, 22, 283-289. http://dx.doi.org/10.1037/h0061244

- O’Connell (2002) Pareidolia. iUniverse, Lincoln.

- Maranhão-Filho, P. and Vincent, M.B. (2009) Neuropareidolia: Pista diagnóstica a partir de uma ilusão visual. Arquivos de Neuro-Psiquiatria, 67, 1117-1123.

- Dunning, B. (2008) The face on Mars revealed. http://www.Skeptoid.com

- Simons, D.J. and Levin, D.T. (1997) Change blindness. Trends in Cognitive Sciences, 1, 261-267.

- Simons, D.J. and Rensink, R.A. (2005) Change blindness: Past, present, and future. Trends in Cognitive Sciences, 9, 16-20. http://dx.doi.org/10.1016/j.tics.2004.11.006

- Simons, D.J. (2000) Current approaches to change blindness. Visual Cognition, 7, 1-15. http://dx.doi.org/10.1080/135062800394658

- Beck, D.M., Rees, G., Frith, C.D. and Lavie, N. (2001) Neural correlates of change detection and change blindness. Nature Neuroscience, 4, 645-650. http://dx.doi.org/10.1038/88477

- Simons, D.J. and Ambinder, M.S. (2005) Change blindness: Theory and consequences. Current Directions in Psychological Science, 14, 44-48.

- Levin, D.T., Momen, N., Drivdahl IV, S.B. and Simons, D.J. (2000) Change blindness: The metacognitive error of overestimating change-detection ability. Visual Cognition, 7, 397-412. http://dx.doi.org/10.1080/135062800394865

- Tourangeau, R. (2000) Remembering what happened: Memory errors and survey reports. In: Stone, A.A., Bachrach, C.A., Jobe, J.B., Kurtzman, H.S. and Cain, V.S. Eds., The Science of Self-Report: Implications for Research and Practice, Lawrence Erlbaum Assoc. Inc., Mahwah.

- Hyman Jr., I.E. and Loftus, E.F. (1998) Errors in autobiographical memory. Clinical Psychology Review, 18, 933-947.

- Squire, L.R. (1982) The neuropsychology of human memory. Annual Review of Neuroscience, 5, 241-273. http://dx.doi.org/10.1146/annurev.ne.05.030182.001325

- Freeman, L.C. and Romney, A.K. (1987) Words, deeds and social structure: A preliminary study of the reliability of informants. Human Organization, 46, 330-334.

- Farthing, G.W. (1992) The psychology of consciousness. Prentice-Hall, Englewood Cliffs.

- Block, R.A. (1979) Chapter 8. Time and consciousness. In: Underwood, G. and Stevens, R., Eds., Aspects of Consciousness, Psychological Issues, vol. 1. Academic Press, London, 179-217.

- Chafe, W. (1994) Discourse, consciousness and time. In: Chafe, W., Ed., The Flow and Displacement of Conscious Experience in Reading and Writing. University of Chicago Press, Chicago.

- Lewis, A. (1932) The experience of time in mental disorder. Proceedings of the Royal Society of Medicine, 25, 611-620.

- Radin, D., Galdamez, K., Wendland, P., Rickenbach, R. and Delorme, A. (2012) Consciousness and the double-slit interference pattern: Six experiments. Physics Essays, 25, 157-171. http://dx.doi.org/10.4006/0836-1398-25.2.157

- Mermin, N.D. (1990) Boojums all the way through: Communicating science in a prosaic age. Cambridge University Press, Cambridge. http://dx.doi.org/10.1017/CBO9780511608216

- von Neumann, J. (1955) Mathematical foundations of quantum mechanics. Princeton University Press, Princeton.

- Stapp, H. (2007) Mindful universe: Quantum mechanics and the participating observer. Springer, New York.

- Squires, E.J. (1987) Many views of one world: An interpretation of quantum theory. European Journal of Physics, 8, 171. http://dx.doi.org/10.1088/0143-0807/8/3/003

- Machida, S. and Namiki, M. (1980) Theory of measurement of quantum mechanics: Mechanism of reduction of wave packet. I. Progress of Theoretical Physics, 63, 1457-1473. http://dx.doi.org/10.1143/PTP.63.1457

- Lockwood, M. (1996) “Many minds” interpretation of quantum mechanics. British Journal for the Philosophy of Science, 47, 159-188. http://dx.doi.org/10.1093/bjps/47.2.159

- Hartle, J.B. (1968) Quantum mechanics of individual systems. American Journal of Physics, 36, 704. http://dx.doi.org/10.1119/1.1975096

- Brown, A.S. (2003) A review of the déjà vu experience. Psychological Bulletin, 129, 394-413. http://dx.doi.org/10.1037/0033-2909.129.3.394

- Etcoff, N.L. and Magee, J.J. (1992) Categorical perception of facial expressions. Cognition, 44, 227-240. http://dx.doi.org/10.1016/0010-0277(92)90002-Y

- Vuilleumier, P. and Pourtois, G. (2007) Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia, 45, 174-194. http://dx.doi.org/10.1016/j.neuropsychologia.2006.06.003

- Anderson, J.R. and Bower, G.H. (1980) Human associative memory. Lawrence Erlbaum Assoc., Hillsdale.

- Palm, G. (1980) On associative memory. Biological Cybernetics, 36, 19-31. http://dx.doi.org/10.1007/BF00337019

- Leuner, B., Falduto, J. and Shors, T.J. (2003) Associative memory formation increases the observation of dendritic spines in the hippocampus. The Journal of Neuroscience, 23, 659-665.

- Praveen-Kumar, B., Sudhakar, S. and Prabhat, M.P.V. (2010) Congenital insensitivity to pain. Online Journal of Health and Allied Sciences, 9, 29.

- Willer, J.C. (2009) Insensibilité congénitale à la douleur (congenital insensitivity to pain). Revue Neurologique, 165, 129-136. http://dx.doi.org/10.1016/j.neurol.2008.05.003

- Protheroe, S.M. (1991) Congenital insensitivity to pain. Journal of the Royal Society of Medicine, 84, 558-559.

- Dehen, H., Willer, J.C., Boureau, F. and Cambier, J. (1977) Congenital insensitivity to pain, and endogenous morphine-like substances. The Lancet, 2, 293-294. http://dx.doi.org/10.1016/S0140-6736(77)90970-9

- Sternbach, R.A. (1963) Congenital insensitivity to pain: A critique. Psychological Bulletin, 60, 252-264. http://dx.doi.org/10.1037/h0042959

- Zola-Morgan, S., Squire, L.R. and Amaral, D.G. (1986) Human amnesia and the medial temporal region: Enduring memory impairment following a bilateral lesion limited to field CA1 of the hippocampus. The Journal of Neuroscience, 6, 2950-2967.

- Zola-Morgan, S., Squire, L.R., Amaral, D.G. and Suzuki, W.A. (1989) Lesions of perirhinal and parahippocampal cortex that spare the amygdala and hippocampal formation produce severe memory impairment. The Journal of Neuroscience, 9, 4335-4370.

- Penfield, W. and Milner, B. (1958) Memory deficit produced by bilateral lesions in the hippocampal zone. Archives of Neurology and Psychiatry, 79, 475-497. http://dx.doi.org/10.1001/archneurpsyc.1958.02340050003001

- Adolphs, R., Cahill, L., Schul, R. and Babinsky, R. (1997) Impaired declarative memory for emotional material following bilateral amygdala damage in humans. Learning & Memory, 4, 291-300. http://dx.doi.org/10.1101/lm.4.3.291

- Anderson, S.W., Bechara, A., Damasio, H., Tranel, D. and Damasio, A.R. (1999) Impairment of social and moral behavior related to early damage in human prefrontal cortex. Nature Neuroscience, 2, 1032-1037.

- Cahill, L., Babinsky, R., Markowitsch, H. and McGaugh, J.L. (1995) The amygdala and emotional memory. Nature, 377, 295-296. http://dx.doi.org/10.1038/377295a0