Open Journal of Statistics

Vol.04 No.10(2014), Article ID:52710,10 pages

10.4236/ojs.2014.410082

Model Detection for Additive Models with Longitudinal Data

Jian Wu, Liugen Xue

1College of Applied Sciences, Beijing University of Technology, Beijing, China

2College of Science, Northeastern University, Shenyang, China

Email: wujian@emails.bjut.edu.cn, mbaron@utdallas.edu

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 1 October 2014; revised 28 October 2014; accepted 15 November 2014

ABSTRACT

In this paper, we consider the problem of variable selection and model detection in additive models with longitudinal data. Our approach is based on spline approximation for the components aided by two Smoothly Clipped Absolute Deviation (SCAD) penalty terms. It can perform model selection (finding both zero and linear components) and estimation simultaneously. With appropriate selection of the tuning parameters, we show that the proposed procedure is consistent in both variable selection and linear components selection. Besides, being theoretically justified, the proposed method is easy to understand and straightforward to implement. Extensive simulation studies as well as a real dataset are used to illustrate the performances.

Keywords:

Additive Model, Model Detection, Variable Selection, SCAD Penalty

1. Introduction

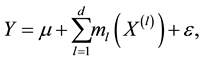

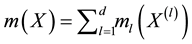

Longitudinal data arise frequently in biological and economic applications. The challenge in analyzing longitudinal data is that the likelihood function is difficult to specify or formulate for non-normal responses with large cluster size. To allow richer and more flexible model structures, an effective semi-parametric regression tool is the additive model introduced by [1] , which stipulates that

(1)

(1)

where  is a varaible of interest and

is a varaible of interest and  is a vector of predictor variables,

is a vector of predictor variables,  is a unknown constant, and

is a unknown constant, and  are unknown nonparametric functions. As in most work on nonparametric smoothing, estimation of the non-parametric functions

are unknown nonparametric functions. As in most work on nonparametric smoothing, estimation of the non-parametric functions  is conducted on a compact support. Without loss of generality, let the compact set be

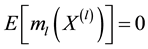

is conducted on a compact support. Without loss of generality, let the compact set be  and also impose the condition

and also impose the condition  which is required for identifiability of model (1.1),

which is required for identifiability of model (1.1), . We propose a penalized

. We propose a penalized

method for variable selection and model detection in model (1.1) and show that the proposed method can correctly select the nonzero components with probability approaching one as the sample size goes to infinity.

Statistical inference for additive models with longitudinal data has been considered by several authors. By extending the generalized estimating equations approach, [2] studied the estimation of additive models with longitudinal data. [3] focused on a nonparametric additive time-varying regression model for longitudinal data. [4] considered the generalized additive model when responses from the same cluster are correlated. However, in semiparametric regression modeling, it is generally difficult to determine which covariates should enter as nonparametric components and which should enter as linear components. The commonly adopted strategy in practice is simply to let continuous covariates enter as nonparametric components and discrete covariates enter as parametric components. The traditional method uses hypothesis testing to identify the linear and zero components, but this can be cumbersome in practice when there are more than a few predictors to test. [5] proposed a penalized procedure via the LASSO penalty, and [6] presented a unified variable selection method via the adaptive LASSO, but these methods are for varying coefficient models. [7] established a model selection and semiparametric estimation method for additive quantile regression models using a two-fold penalty. To our knowledge, simultaneous model selection and variable selection with longitudinal data has not been investigated. We make several novel contributions: 1) we develop a new strategy for model selection and variable selection in additive models with longitudinal data; 2) we develop theoretical properties for our procedure.

In the next section, we propose the two-fold SCAD penalization procedure based on quadratic inference functions (QIF) [8] together with a computational algorithm, and we present its theoretical properties. In particular, we show that the procedure can select the true model with probability approaching one, and that the newly proposed method estimates the nonzero function components in the model with the same optimal mean square convergence rate as the standard spline estimators. Simulation studies and an application of the proposed method to a real data example are included in Sections 3 and 4, respectively. Technical lemmas and proofs are given in the Appendix.

2. Methodology and Asymptotic Properties

2.1. Additive Models with Two Fold Penalized Splines

Consider a longitudinal study with $n$ subjects and $m_i$ observations over time for the $i$th subject ($i = 1, \ldots, n$), for a total of $N = \sum_{i=1}^{n} m_i$ observations. Each observation consists of a response variable $Y_{ij}$ and a covariate vector $X_{ij}$ taken from the $i$th subject at time $t_{ij}$. We assume that the full data set $\{(X_{ij}, Y_{ij}),\ i = 1, \ldots, n,\ j = 1, \ldots, m_i\}$ is observed and can be modelled as

where

At the start of the analysis, we do not know which component functions in model (1.1) are linear or actually zero. We adopt the centered B-spline basis, where

article for simplicity of proof. Other regular knot sequences can also be used, with similar asymptotic results. Suppose that

To simplify notation, we first assume equal cluster size

where

where

The vector

where
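As a rough illustration of the QIF construction of [8] that this subsection builds on, the sketch below evaluates a quadratic inference function for a linear working model with equal cluster size and identity link. It assumes that the inverse working correlation is approximated by a linear combination of known basis matrices (here the identity and the off-diagonal matrix that corresponds to an exchangeable structure). The function names, array shapes, and the use of a generic design array `X` in place of the spline design matrix are assumptions made for illustration only, not the exact estimator of this paper.

```python
import numpy as np

def exchangeable_basis(m):
    """Basis matrices commonly used for an exchangeable working correlation:
    the identity and the matrix with 0 on the diagonal and 1 elsewhere."""
    return [np.eye(m), np.ones((m, m)) - np.eye(m)]

def qif(beta, y, X, basis_matrices):
    """Quadratic inference function for a linear model with identity link.

    y: (n, m) responses, X: (n, m, p) design array (hypothetical layout),
    basis_matrices: list of (m, m) matrices.
    """
    n = y.shape[0]
    resid = y - X @ beta  # residuals, shape (n, m)
    # Extended score for subject i: stack X_i^T M_k r_i over the basis matrices M_k.
    g = np.concatenate(
        [np.einsum("imp,mq,iq->ip", X, M, resid) for M in basis_matrices],
        axis=1,
    )
    g_bar = g.mean(axis=0)
    C = g.T @ g / n  # sample second-moment matrix of the extended scores
    # A pseudo-inverse is used here to guard against a singular C (a design choice).
    return float(n * g_bar @ np.linalg.pinv(C) @ g_bar)
```

In a spline-based fit, `X` would hold the basis functions evaluated at the covariates, and the penalized criterion would add the penalty terms to the value returned by `qif`.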

Our main goal is to find both zero components (i.e.,

function). The former can be achieved by shrinking

where
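The SCAD penalty used in both penalty terms can be written down compactly. The sketch below gives the standard SCAD penalty and its derivative, with the conventional default $a = 3.7$, and then combines two SCAD terms per component: one applied to a norm of the component (shrinking it to zero) and one applied to a norm of its curvature part (shrinking it to a linear function). The helper `two_fold_penalty` and its inputs `func_norms` and `curv_norms` are hypothetical names; how these norms are computed from the spline coefficients is left open here.

```python
import numpy as np

def scad_penalty(theta, lam, a=3.7):
    """Standard SCAD penalty evaluated at |theta| (elementwise)."""
    t = np.abs(np.asarray(theta, dtype=float))
    linear = lam * t
    quad = (2 * a * lam * t - t**2 - lam**2) / (2 * (a - 1))
    const = (a + 1) * lam**2 / 2
    return np.where(t <= lam, linear, np.where(t <= a * lam, quad, const))

def scad_derivative(theta, lam, a=3.7):
    """Derivative of the SCAD penalty with respect to |theta|."""
    t = np.abs(np.asarray(theta, dtype=float))
    tail = np.maximum(a * lam - t, 0.0) / (a - 1)
    return np.where(t <= lam, lam, tail)

def two_fold_penalty(func_norms, curv_norms, lam1, lam2, a=3.7):
    """Sum of two SCAD terms per component (illustrative assumption):
    func_norms[l] measures the size of component l,
    curv_norms[l] measures its departure from linearity."""
    return float(
        np.sum(scad_penalty(func_norms, lam1, a))
        + np.sum(scad_penalty(curv_norms, lam2, a))
    )
```

Because the SCAD derivative vanishes beyond $a\lambda$, large components are left essentially unpenalized, which is the property behind the oracle-type results for SCAD.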

2.2. Asymptotic Properties

To study the rate of convergence for

for

(A1) The covariates

(A2) Let

(A3) For each

(A4)

(A5) Let

(A6) The matrix

Theorem 1. Suppose that the regularity conditions A1-A5 hold and the number of knots

For

Theorem 2. Under the same assumptions of Theorem 1, and if the tuning parameter

a)

b)

Theorem 2 also implies that the above additive model selection procedure possesses the consistency property. The results in Theorem 2 are similar to those for semiparametric estimation of the additive quantile regression model in [7]. However, the theoretical proof is very different from that for the penalized quantile loss function because of the two-fold penalty and the longitudinal data.

Finally, in the same spirit as [11], we come to the question of whether the SIC can identify the true model in our setting.

Theorem 3. Suppose that the regularity conditions A1-A5 hold and the number of knots

as assumed in Theorem 1, The parameters

3. Simulation Study

In this section, we conduct Monte Carlo studies for the following longitudinal data and additive model. The continuous responses

where

tion with mean 0, a common marginal variance

The predictors
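For readers who wish to set up a comparable experiment, the following sketch generates within-subject errors with mean 0 and a common marginal variance. The exchangeable correlation structure, the Gaussian distribution, and the parameter names `sigma2` and `rho` are assumptions chosen for illustration; they are not taken from the simulation design described here.

```python
import numpy as np

def exchangeable_cov(m, sigma2, rho):
    """Covariance matrix with common marginal variance sigma2 and
    common within-subject correlation rho (assumed structure)."""
    corr = (1.0 - rho) * np.eye(m) + rho * np.ones((m, m))
    return sigma2 * corr

def simulate_errors(n, m, sigma2=1.0, rho=0.5, seed=None):
    """Draw n independent subjects, each with m correlated Gaussian errors."""
    rng = np.random.default_rng(seed)
    cov = exchangeable_cov(m, sigma2, rho)
    return rng.multivariate_normal(np.zeros(m), cov, size=n)
```

An AR(1) structure could be substituted by replacing the correlation matrix with one whose $(j, k)$ entry is $\rho^{|j-k|}$.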

To illustrate the effect on estimation efficiency, we compare our procedure (TFPQIF) with the penalized QIF approach in [4] (PQIF) and an oracle model (ORACLE). Here the full model consists of all ten variables, whereas the oracle model contains only the first five relevant variables and is known to be a partially linear additive model. The oracle model is only available in simulation studies, where the true information is known. In all simulations, the number of replications is 100, and the results are summarized in Table 1 and Table 2. In Table 1, we report the model selection results for both our procedure and the one-penalty QIF when the errors are Gaussian, and we also list the oracle model as a benchmark. The column labeled "NNC" presents the average number of nonparametric components selected, the column "NNT" gives the average number of nonparametric components selected that are truly nonparametric (truly nonzero for the one-penalty QIF), "NLC" presents the average number of linear components selected, and "NLT" gives the average number of linear components selected that are truly linear.

Table 1. The estimation results for our estimator (TFPQIF), the sparse additive estimator (PQIF) and the ORACLE estimator.

Table 2. Model selection results for our estimator (TFPQIF), the sparse additive estimator (PQIF) and the ORACLE estimator.
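The four selection summaries NNC, NNT, NLC and NLT are simple counts averaged over replications. A minimal sketch of how they could be computed is given below; the function names and the representation of each fitted model as sets of component indices are assumptions made for illustration.

```python
def selection_counts(sel_nonparam, sel_linear, true_nonparam, true_linear):
    """Counts for one replication, given index sets of selected and true components."""
    sel_np, sel_lin = set(sel_nonparam), set(sel_linear)
    return {
        "NNC": len(sel_np),                        # nonparametric components selected
        "NNT": len(sel_np & set(true_nonparam)),   # ... that are truly nonparametric
        "NLC": len(sel_lin),                       # linear components selected
        "NLT": len(sel_lin & set(true_linear)),    # ... that are truly linear
    }

def average_counts(per_replication):
    """Average each count over a list of per-replication dictionaries."""
    keys = ["NNC", "NNT", "NLC", "NLT"]
    n_rep = len(per_replication)
    return {k: sum(r[k] for r in per_replication) / n_rep for k in keys}
```

For example, a replication that selects components {1, 2, 6} as nonparametric and {3} as linear, when the truth is {1, 2} nonparametric and {3, 4, 5} linear, gives NNC = 3, NNT = 2, NLC = 1 and NLT = 1.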

In Table 2, we conduct some simulations to evaluate the finite sample performance of the proposed method. Let

estimator

4. Real Data Analysis

In this section, we analyze data from the Multi-Center AIDS Cohort Study. The dataset contains the human immunodeficiency virus (HIV) status of 283 homosexual men who were infected with HIV during the follow-up period between 1984 and 1991. All individuals were scheduled to have their measurements made during semiannual visits. Here

In our analysis, the response variable is the CD4 cell percentage of a subject at distinct time points after HIV infection. We take four covariates for this study:

the partially linear additive model instead of the additive model because of the binary variable

Figure 1. The estimator of

depletes rather quickly at the beginning of HIV infection, but the rate of depletion appears to slow down four years after the infection. This result agrees with the finding reported in [13].

5. Concluding Remark

In summary, we present a two-fold penalty variable selection procedure that can select the linear components and the significant covariates and estimate the unknown component functions simultaneously. The simulation study shows that the proposed model selection method performs consistently in both variable selection and linear component selection. Besides being theoretically justified, the proposed method is easy to understand and straightforward to implement. A direction for further study is how to use a multi-fold penalty for model selection and variable selection in generalized additive partial linear models with longitudinal data.

Acknowledgements

Liugen Xue’s research was supported by the National Natural Science Foundation of China (11171012), the Science and Technology Project of the Faculty Adviser of Excellent PhD Degree Thesis of Beijing (20111000503) and the Beijing Municipal Education Commission Foundation (KM201110005029).

References

[1] Hastie, T.J. and Tibshirani, R.J. (1990) Generalized Additive Models. Chapman and Hall, London.

[2] Berhane, K. and Tibshirani, R.J. (1998) Generalized Additive Models for Longitudinal Data. The Canadian Journal of Statistics, 26, 517-535. http://dx.doi.org/10.2307/3315715

[3] Martinussen, T. and Scheike, T.H. (1999) A Semiparametric Additive Regression Model for Longitudinal Data. Biometrika, 86, 691-702. http://dx.doi.org/10.1093/biomet/86.3.691

[4] Xue, L. (2010) Consistent Model Selection for Marginal Generalized Additive Model for Correlated Data. Journal of the American Statistical Association, 105, 1518-1530. http://dx.doi.org/10.1198/jasa.2010.tm10128

[5] Hu, T. and Xia, Y.C. (2012) Adaptive Semi-Varying Coefficient Model Selection. Statistica Sinica, 22, 575-599. http://dx.doi.org/10.5705/ss.2010.105

[6] Tang, Y.L., Wang, H.X., Zhu, Z.Y. and Song, X.Y. (2012) A Unified Variable Selection Approach for Varying Coefficient Models. Statistica Sinica, 22, 601-628. http://dx.doi.org/10.5705/ss.2010.121

[7] Lian, H. (2012) Shrinkage Estimation for Identification of Linear Components in Additive Models. Statistics and Probability Letters, 82, 225-231. http://dx.doi.org/10.1016/j.spl.2011.10.009

[8] Qu, A., Lindsay, B.G. and Li, B. (2000) Improving Generalised Estimating Equations Using Quadratic Inference Functions. Biometrika, 87, 823-836. http://dx.doi.org/10.1093/biomet/87.4.823

[9] Huang, J.Z. (1998) Projection Estimation in Multiple Regression with Application to Functional ANOVA Models. The Annals of Statistics, 26, 242-272. http://dx.doi.org/10.1214/aos/1030563984

[10] Xue, L. (2009) A Root-N Consistent Backfitting Estimator for Semiparametric Additive Modeling. Statistica Sinica, 19, 1281-1296.

[11] Wang, H., Li, R. and Tsai, C.L. (2007) Tuning Parameter Selectors for the Smoothly Clipped Absolute Deviation Method. Biometrika, 94, 553-568. http://dx.doi.org/10.1093/biomet/asm053

[12] Xue, L.G. and Zhu, L.X. (2007) Empirical Likelihood for a Varying Coefficient Model with Longitudinal Data. Journal of the American Statistical Association, 102, 642-654.

[13] Wu, C.O., Chiang, C.T. and Hoover, D.R. (1998) Asymptotic Confidence Regions for Kernel Smoothing of a Varying-Coefficient Model with Longitudinal Data. Journal of the American Statistical Association, 93, 1388-1402.

[14] De Boor, C. (2001) A Practical Guide to Splines. Springer, New York.

Appendix: Proofs of Theorems

For convenience and simplicity, let

Lemma 1. Under the conditions (A1)-(A6), minimizing the unpenalized QIF

Proof: According to [14] , for each

Proof of Theorem 1. Let

be the objective function in (2.7), where

As a result, this implies that

Since

By the definition of SCAD penalty function, removing the regularizing terms in (A2)

with

and

where

Proof of Theorem 2. We only show part (b) as an illustration; the proof of part (a) is similar. Suppose for some

As in the proof of Theorem 1, we have

Proof of Theorem 3. For any regularization parameters

CASE 1:

Since true

CASE 2:

CASE 3:

QIF (2.6)

CASE 4: