Open Access Library Journal

Vol.03 No.02(2016), Article ID:68244,9 pages

10.4236/oalib.1102380

Utilitarian Moral Judgments Are Cognitively Too Demanding

Sergio Da Silva1*, Raul Matsushita2, Maicon De Sousa1

1Department of Economics, Federal University of Santa Catarina, Florianopolis, Brazil

2Department of Statistics, University of Brasilia, Brasilia, Brazil

Copyright © 2016 by authors and OALib.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 15 January 2016; accepted 31 January 2016; published 4 February 2016

ABSTRACT

We evaluate utilitarian judgments under the dual-system approach of the mind. In the study, participants respond to a cognitive reflection test and five (sacrificial and greater good) dilemmas that pit utilitarian and non-utilitarian options against each other. There is judgment reversal across the dilemmas, a result that casts doubt on treating utilitarianism as a stable ethical standard for evaluating the quality of moral judgments. In all the dilemmas, participants find the utilitarian judgment too demanding in terms of cognitive currency because it requires non-automatic, deliberative thinking. In turn, their moral intuitions related to the automatic mind are frame dependent, and thus can be either utilitarian or non-utilitarian. This suggests that automatic moral judgments are about descriptions, not about substance.

Keywords:

Cognitive Reflection, Utilitarianism, Moral Judgment

Subject Areas: Philosophy, Psychology

1. Introduction

The ethical theory of utilitarianism [1] [2] is summarized in Bentham’s aphorism that “the greatest happiness of the greatest number is the basis of morals and legislation.” However simple it may appear to maximize the welfare of the group, this proposition allows for more than one interpretation. These will be discussed later. The major alternatives to utilitarian ethics are the Kantian deontological and the Aristotelian virtue-based ones. However, many favor the utilitarian perspective as the ethical yardstick against which the quality of people’s moral judgment should be evaluated [3] - [5] .

Current moral psychology is inclined to the type of interpretation called act utilitarianism [6] - [8]. However, there is also rule utilitarianism [9]. Killing one person to save five may be justified under act utilitarianism, but not under rule utilitarianism. Act utilitarianism thus assesses utilitarian judgments through “sacrificial moral dilemmas,” such as that of a runaway trolley that hurtles toward five unaware workers. The only way to save them is to push a heavy man, standing nearby on a footbridge, onto the track, where he will die stopping the trolley [10]. Facing such a dilemma, most people make the non-utilitarian choice. Worse, those who adopt the utilitarian judgment paradoxically exhibit antisocial characteristics that are at odds with the very social content of utilitarian ethics [11]. For this reason, that way of representing utilitarianism has been criticized and “greater good” dilemmas have been proposed instead as a “genuine” utilitarian approach [12].

Automatic and deliberative cognitive processes should mediate moral judgments [13] . Most cognitive psychologists currently favor a dual-system approach [14] in which two systems compete for control of our inferences, actions and judgments. Intuitive decisions require little reflection and use “System 1.” By scanning associative memory, we usually base our actions on experiences that worked well in the past. However, whenever we make decisions by constructing mental models or simulations of future possibilities, we engage “System 2.” Individuals differ in their cognitive abilities, but a simple test can assess their differences. The cognitive reflection test (CRT) [15] gauges the relative powers of Systems 1 and 2: the individual ability to think quickly with little conscious deliberation (using System 1) and to think in a slower and more reflective way (using System 2). High scores on the CRT track how strong System 2 is for one individual.

Here, we evaluate utilitarian judgments under the dual-system approach in a study where participants respond to a CRT and to sacrificial and greater good dilemmas that pit utilitarian and non-utilitarian options against each other.

The next section describes the experiment details. Section 3 presents results and discusses them, and Section 4 concludes this report.

2. Materials and Methods

The experimenter (MDS) sent Google Docs questionnaires to students from four large Brazilian universities: University of Brasilia, Federal University of Santa Catarina, Federal University of Rio Grande do Sul and Federal Institute of Minas Gerais. The students were enrolled in several disciplines, including physics, chemistry, civil engineering, computer science, statistics, business administration, accounting, economics, physiotherapy, nutrition, design and pedagogy. The questionnaires were administered to the students by their own teachers using university intranet pages. The questionnaires were also sent to participants not related to the universities. A total of 468 individuals participated (235 males, 233 females; age: mean = 24.21, standard deviation = 4.81). The participants were 393 university students and 75 young adults unaffiliated with the universities. All participants were instructed to respond to the CRT in Table 1 and to the utilitarian moral dilemmas displayed in Table 2. Two types of questionnaire were presented. In the type 1 questionnaires (204 respondents), the CRT came first, whereas in the type 2 questionnaires (264 respondents), the moral dilemmas came first.

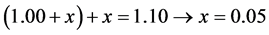

Table 1. Cognitive reflection test.

Source: Ref. [15].

Table 2. Utilitarian moral dilemmas.

The three simple questions of the CRT were originally designed to elicit automatic responses that are wrong [15]. The questionnaires instructed the participants to respond to the three questions in Table 1 in less than 30 seconds; this was to ensure an automatic choice. The correct answers in Table 1 are 5, 5 and 47. However, the most common, intuitive answers are 10, 100 and 24. The intuitive answer that springs quickly to mind in question 1 is “10 cents.” This is wrong because if $x$ is the ball cost, then $x + (x + 1.00) = 1.10$, so $x = 0.05$. The automatic answer to question 2 is 100. To see why this is wrong, take the first sentence and multiply 5 machines by 20; thus, it would take the same 5 minutes to make 100 widgets. The intuitive answer in question 3 is 24, but if the exponential $2^{t}$ represents the function where $t$ is the number of days it takes the patch to cover the entire lake, then half is always $2^{t-1}$. Then, the patch will cover half the lake in 48 − 1 = 47 days. The three questions on the CRT are easy because their solutions are easily understood when explained [15]. However, reaching one correct answer requires the suppression of an erroneous answer that impulsively comes to mind.
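The arithmetic behind the three correct answers can be checked with a short Python sketch. It assumes the standard wording of the CRT items in Ref. [15]: a bat and a ball that cost $1.10 in total, with the bat costing $1.00 more than the ball; 5 machines that make 5 widgets in 5 minutes; and a patch that doubles in size every day and covers the lake in 48 days. The function names and the `score_crt` helper are illustrative and are not part of the original study.

```python
from fractions import Fraction

# Correct and typical intuitive answers as stated in the text
# (units: cents, minutes, days).
CORRECT = (5, 5, 47)
INTUITIVE = (10, 100, 24)


def ball_cost_cents(total_cents=110, difference_cents=100):
    """Solve x + (x + difference) = total for the ball cost x."""
    return Fraction(total_cents - difference_cents, 2)


def minutes_needed(machines=100, widgets=100):
    """Time for `machines` machines to make `widgets` widgets.

    Assumes the rate implied by 5 machines making 5 widgets in 5 minutes,
    that is, each machine makes one widget every 5 minutes.
    """
    widgets_per_machine_minute = Fraction(5, 5 * 5)
    return Fraction(widgets) / (widgets_per_machine_minute * machines)


def days_to_half(days_to_full=48):
    """A patch that doubles daily covers half the lake one day before full cover."""
    return days_to_full - 1


def score_crt(answers):
    """Hypothetical scoring helper: number of correct answers (0 to 3)."""
    return sum(given == right for given, right in zip(answers, CORRECT))


if __name__ == "__main__":
    print(ball_cost_cents(), minutes_needed(), days_to_half())
    print(score_crt(INTUITIVE), score_crt(CORRECT))
```

Under these assumptions, a participant who gives the three intuitive answers scores zero, which is the group whose share differs most between the two questionnaire types.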

At the end of the questionnaires, participants were asked whether they had really spent less than 30 seconds answering the CRT. There was also a question asking whether they already knew any of the three questions. We removed from the sample those who reported having taken more than 30 seconds or having known at least one of the questions. As a result, the sample was reduced to 398 participants (type 1 questionnaire: N = 173; type 2 questionnaire: N = 225).

The utilitarian moral dilemmas in Table 2 were selected to purposely contrast circumstantial or parochial dilemmas with humanity dilemmas. Personal dilemmas are associated with egocentric attitudes and no identification with the whole of humanity [12] . “Greater good” dilemmas consider the latter.

The benchmark sacrificial dilemma is that of the trolley (Table 2). We consider both the personal [10] and impersonal [16] versions because this distinction matters [17]. A further justification for administering two types of questionnaire is precisely to consider this difference. Type 1 questionnaires involve the personal sacrificial dilemma [10], and type 2 questionnaires present the impersonal sacrificial dilemma [16]. In the personal version, the trolley hurtles toward five unaware workers and the only way to save them is to push a heavy man, standing nearby on a footbridge, onto the track. In the impersonal version, throwing a switch diverts the trolley onto another track, killing one worker. In terms of simple utilitarian arithmetic, this sacrificial dilemma is still utilitarian (but see [12]). After all, one should consider the “greater good” of killing just one man rather than killing five. Although the logic of choice in both personal and impersonal versions is the same, its architecture changes. This fact usually causes preference reversals on this moral judgment. Indeed, most people choose the utilitarian action in the impersonal version and the non-utilitarian one in the personal version [18]. The next section will show this finding is replicated in our own questionnaires. Moreover, we will provide an explanation for such moral preference reversals based on the model of two minds.

The previous sacrificial dilemmas, in which one is asked whether to sacrifice a single individual to save a group of strangers, are referred to as “other-benefit dilemmas.” In contrast, whenever the sacrifice also benefits the participant, he or she faces a “self-benefit dilemma” [17]. Dilemma D2 is of the self-benefit variety (Table 2). It is equally criticized on the basis that it is not genuinely utilitarian, because it lacks the identification with the whole of humanity [12]. However, this standpoint has not reached a consensus in the literature, partially because it is too new. We then decided to include the self-benefit dilemma D2 in the questionnaires for the same reason we included the other-benefit dilemma D1. This will prove the right thing to do because our results will apply to utilitarian dilemmas of all types. In a broader sense, D2 is still utilitarian because it considers an arithmetic that pits the options of having fewer or more harmed individuals against each other. In particular, D2 is more akin to the D1 of type 1 questionnaires, in that D2 is personal.

In the three greater good dilemmas in Table 2, participants are left a choice to identify themselves with humanity as a whole. This contrasts with either a parochial attachment to one’s own community or country [12] , or a focus on one’s particular circumstance. It is argued that such all-encompassing, impartial concern is a core feature of classical utilitarianism [19] .

For each dilemma D1-D5, participants were also asked to rate their perceived wrongness of not adopting the utilitarian action (“a little wrong” = 1 to “very wrong” = 5) [12] . This question thus asks for a conscious, deliberate response that engages System 2.

3. Results and Discussion

Figure 1 shows the results regarding CRT performance. The marked difference between the two types of questionnaire occurs for those who do not score at all on the test. In type 2 questionnaires, where the CRT is posted after the moral dilemmas, the share of participants who answered none of the questions correctly fell by nearly 8 percent. This result is statistically significant. A one-sided, two-sample bootstrap t-test with 10,000 replicates shows a p-value less than 0.03, and thus we conclude the two distributions are not identical. We explain this improved performance by the fact that in type 2 questionnaires, participants became more cognitively alert as a consequence of previously answering the questions related to the moral dilemmas.

Indeed, Table 3 shows the average CRT performance for type 2 questionnaires was higher (1.06) than that for type 1 questionnaires (0.85) (p-value < 0.03). University students scored higher than non-students did on both types of questionnaire (statistically significant for type 2 questionnaires). Moreover, in both types of questionnaire, those answering within 30 seconds scored higher on the CRT than those answering in more than 30 seconds, but the difference in performance between the two groups was not statistically significant. Those who provided non-utilitarian answers considering the five dilemmas scored higher on the CRT, but this result is to be taken with caution because the dilemmas are not cognitively similar. For example, in D1 and D5 non-utilitarians beat utilitarians on CRT performance in both types of questionnaire, but the reverse is true considering dilemmas D2, D3 and D4 (Figure 2).
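The text does not spell out which bootstrap variant was used, so the following Python sketch shows only one common form of a one-sided, two-sample bootstrap t-test, under stated assumptions: both samples are shifted to the pooled mean to impose the null hypothesis of equal means, each is resampled with replacement, and a Welch-type t statistic is recomputed for every replicate. The function names, the seed and any scores passed in are hypothetical and are not the study's data.

```python
import random
from math import sqrt


def _mean(values):
    return sum(values) / len(values)


def _var(values):
    """Unbiased sample variance."""
    m = _mean(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)


def welch_t(x, y):
    """Welch-type t statistic for mean(x) - mean(y)."""
    se = sqrt(_var(x) / len(x) + _var(y) / len(y))
    # A resample with zero spread in both groups is treated as uninformative.
    return (_mean(x) - _mean(y)) / se if se > 0 else 0.0


def bootstrap_t_test(x, y, replicates=10_000, seed=0):
    """One-sided bootstrap p-value for the alternative mean(x) > mean(y)."""
    rng = random.Random(seed)
    t_observed = welch_t(x, y)

    # Impose the null hypothesis by shifting both samples to the pooled mean
    # (an assumption of this sketch, not a detail taken from the paper).
    pooled = _mean(list(x) + list(y))
    mean_x, mean_y = _mean(x), _mean(y)
    x_null = [v - mean_x + pooled for v in x]
    y_null = [v - mean_y + pooled for v in y]

    exceed = 0
    for _ in range(replicates):
        x_star = rng.choices(x_null, k=len(x_null))
        y_star = rng.choices(y_null, k=len(y_null))
        if welch_t(x_star, y_star) >= t_observed:
            exceed += 1
    # The add-one correction keeps the estimated p-value strictly positive.
    return (exceed + 1) / (replicates + 1)
```

With per-participant CRT scores for the two questionnaire types held in two lists, a call such as `bootstrap_t_test(type2_scores, type1_scores)` would estimate the one-sided p-value for type 2 scoring higher; both list names are placeholders.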

Figure 1. CRT performance. Left: type 1 questionnaires; right: type 2 questionnaires. Those answering type 2 questionnaires became more cognitively alert after deciding on the moral dilemmas, and thus improved the performance on the CRT.

Figure 2. Average CRT for utilitarian (blue) and non-utilitarian (red) answers given to the five moral dilemmas. Left: type 1 questionnaires; right: type 2 questionnaires. The different dilemmas are not cognitively similar in that in D1 and D5 non-utilitarians beat utilitarians on CRT performance, but the reverse is true considering dilemmas D2, D3 and D4. This occurs for both types of questionnaire (Table 4).

Table 3. Average CRT and type of questionnaire.

*p-values from one-sided, two-sample bootstrap t-test with 10,000 replicates.

Figure 3 displays the utilitarian and non-utilitarian answers considering the five dilemmas. As for the sacrificial dilemma D1, in its personal version (type 1 questionnaires) most respondents made the non-utilitarian choice. There was reversal in the impersonal version of D1 (type 2 questionnaires): most respondents now favored the utilitarian choice. In other words, most respondents were non-utilitarians when the dilemma was framed as pushing a person, but they reversed their judgment and became utilitarians when the dilemma was presented as moving an object. The p-values in Table 3 show this result is statistically significant for both types of questionnaire. This result is in line with the previous literature [18].

In both types of questionnaire, respondents found it more wrong not to make the utilitarian choice in dilemma D1 (Figure 4). When they deliberately think (using System 2), they are capable of performing the utilitarian arithmetic. This does not mean, however, that participants are automatic non-utilitarians in dilemma D1, because the default, automatic decision using System 1 is frame dependent. This is compatible with the view that people do not carry any predetermined moral preferences. Indeed, a result from the literature on framing effects is that “there is no underlying preference that is masked or distorted by a frame. Our preferences are about framed problems, and our moral intuitions are about descriptions, not about substance” [20]. Moreover, we found no correlation between perceived wrongness and CRT (available upon request), which means perceived wrongness carries independent information not already conveyed in the CRT.
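A check for correlation between perceived wrongness and CRT score could be run along the following lines. The text does not say which coefficient was computed, so this Python sketch assumes a Pearson correlation; the function name and the toy ratings are hypothetical and are not the study's data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


# Toy data (hypothetical): CRT scores (0 to 3) and wrongness ratings (1 to 5),
# one pair per participant.
crt_scores = [0, 1, 2, 3, 0, 1, 2, 3]
wrongness = [4, 2, 3, 5, 5, 3, 2, 4]

if __name__ == "__main__":
    print(round(pearson_r(crt_scores, wrongness), 3))
```

A coefficient close to zero, as in this toy example, is what "no correlation" would look like; because the ratings are ordinal, a rank-based coefficient would be an equally reasonable choice.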

There was also judgment reversal in the self-benefit dilemma D2 across the two types of questionnaire. In type 1 questionnaires, most respondents adopted the non-utilitarian choice, and in type 2 questionnaires, most respondents were utilitarians (which meant killing a man to save oneself and the others). However, the p-values in Table 3 show this result is statistically significant for type 1 questionnaires only. This judgment reversal may be related to the architecture of choice participants faced. In type 1 questionnaires, the CRT was answered prior to the moral dilemmas. We speculate that this may have generated “ego depletion” [21], in which case System 1 takes control. Killing a man is bad, and for System 1 “What You See Is All There Is” [20]. Performing the utilitarian arithmetic (killing one to save oneself and the others) is cognitively demanding. In type 2 questionnaires, there was no possibility of ego depletion because the CRT was posted at the end. This may have freed up System 2 to perform the utilitarian arithmetic and may explain why most respondents adopted the utilitarian judgment. Interestingly, in both types of questionnaire, respondents found it more wrong not to make the utilitarian choice of killing a man. Here, the utilitarian choice is difficult and requires the use of System 2. However, as in the previous dilemma D1, the automatic choice was also frame dependent in dilemma D2.

Figure 3. Utilitarian (blue) and non-utilitarian (red) answers given to the five moral dilemmas changed across the dilemmas.

Figure 4. Average perceived wrongness of not adopting the utilitarian action for utilitarian (blue) and non-utilitarian (red) answers given to the five moral dilemmas. Left: type 1 questionnaires; right: type 2 questionnaires.

As for the greater good dilemmas D3, D4 and D5, the type of questionnaire did not matter for the judgments made by the participants. However, most respondents were non-utilitarians in D3 and D5, and adopted a utilitarian judgment in D4. There was judgment reversal even across the three greater good dilemmas. This may be related to the roles System 1 and System 2 played in each.

In dilemma D3, only 14 percent (in type 1 questionnaires) and 6 percent (in type 2 questionnaires) made the utilitarian choice (Table 4). Most respondents were not prepared to save the peace negotiator rather than Alberto’s mother. They did not identify themselves with humanity as a whole, and preferred to make a judgment based on genetic ties. This strongly suggests the involvement of System 1 in mediating the non-utilitarian answers. Most respondents preferred saving the mother despite perceiving the action of not saving the peace negotiator as more wrong (Figure 4). This shows a conflict between the two minds in the judgment making of dilemma D3. Being utilitarian in this dilemma D3 was highly costly in terms of cognitive currency. Incidentally, those giving the utilitarian answer scored slightly higher on the CRT than non-utilitarians did (Table 4), but the difference between the groups was not statistically significant.

The great majority of participants chose the utilitarian answer for dilemma D4, regardless of questionnaire type. Greater good dilemma D4 is conceived to allow for less personal involvement and emotional load, and for more deliberative control in moral judgments [12] . Thus, System 2 judgments are more likely to rule and it is not so surprising that respondents favored the utilitarian choice in dilemma D4. Most participants judged it right for an American executive to donate to a charity in a foreign country rather than one in his own country. The emotional distance was even greater for our sample of Brazilian respondents evaluating the moral attitude of an American individual. Utilitarians in dilemma D4 scored higher on the CRT in our sample (Table 4 and Figure 2), though this difference was not statistically significant (Table 4). However, the role System 2 played in this decision can be evaluated by considering the perceived wrongness of not making the utilitarian choice. Not giving the utilitarian answer was more heavily perceived as wrong for this dilemma D4 (statistically significant for type 1 questionnaires).

Most respondents rejected the utilitarian choice in greater good dilemma D5 (either donate money or buy oneself a car; Figure 3), despite perceiving the wrongness of doing that (statistically significant for type 1 questionnaires). Again, being utilitarian is cognitively too demanding due to the self-interest component of D5 [12] . Judgments aimed at maximizing the greater good are thus weakened by considerations of self-interest. However, self-interest is supposed to be out of place if one were genuinely aiming to promote the greater good from an impartial utilitarian standpoint [12] . The results on the CRT even show the choice of maximizing self-interest over maximizing the greater good is thoughtful, in that non-utilitarians for this dilemma D5 scored higher on the test (statistically significant for type 1 questionnaires).

Table 4. Answers given to the five utilitarian moral dilemmas.

*p-value from one-sided, two-sample bootstrap t-test with 10,000 replicates.

Data from an fMRI (functional magnetic resonance imaging) study provide evidence for the conflicting roles System 1 and System 2 play in utilitarian moral judgments [22]. The dorsolateral prefrontal cortex and anterior cingulate cortex are brain regions associated with cognitive control, and therefore with the role System 2 plays. Such areas are recruited to resolve moral dilemmas in which a utilitarian judgment has to overcome a personal, automatic judgment. When System 1 rules in personal judgments, there is an increase in amygdala activity. This neuroscientific evidence matches our experimental evidence that utilitarian judgments are mediated by an underlying tension between System 1 and System 2.

4. Conclusions

This study experimentally evaluated utilitarian judgments under the dual-system approach. Participants responded to a cognitive reflection test as well as to sacrificial and greater good dilemmas that pit utilitarian and non-utilitarian options against each other. We considered utilitarian moral dilemmas that purposely contrast circumstantial or parochial dilemmas with humanity dilemmas. While personal dilemmas are associated with egocentric attitudes, greater good dilemmas consider the whole of humanity.

Performance on the cognitive reflection test improved when participants responded to questionnaires that posted the moral dilemmas at the beginning. This possibly has occurred because participants became more cognitively alert after answering the questions related to the dilemmas.

The different dilemmas D1-D5 are not cognitively similar. Non-utilitarians beat utilitarians on CRT performance in dilemmas D1 and D5, but the reverse is true considering dilemmas D2, D3 and D4.

Most respondents were non-utilitarians when the sacrificial moral dilemma D1 was framed as pushing a person, but they reversed their judgment and became utilitarians when the dilemma was framed as moving an object. This moral judgment reversal replicated previous findings in the literature [18].

Respondents found it more wrong not to make the utilitarian choice in D1. When they deliberately thought (using System 2), they were capable of performing the utilitarian arithmetic, though this does not mean participants were automatic non-utilitarians. The default, automatic decision using System 1 was frame dependent. This is compatible with the view that people do not carry any predetermined moral preferences. Moral intuitions are about descriptions, not about substance.

Respondents also found it more wrong not to make the utilitarian choice of killing a man in the self-benefit dilemma D2. However, their moral judgment was also frame dependent. Being utilitarian was costly in cognitive currency. Performing the utilitarian arithmetic required the use of System 2, and participants made the utilitarian judgment in D2 because System 2 was at work as the arithmetic involved protecting oneself. However, under ego depletion, System 1 ruled and participants reversed their moral judgments and became non-utilitarians. After all, for System 1 killing is bad and WYSIATI (What You See Is All There Is).

Most participants were not utilitarians when facing the greater good dilemma D3. Most respondents were not prepared to save a peace negotiator rather than one’s mother. They did not identify themselves with humanity as a whole, and preferred to make a judgment based on genetic ties. Being utilitarian by using System 2 in this dilemma D3 was considered by the participants of the experiment as highly costly in terms of cognitive currency.

Not choosing the utilitarian answer was perceived as much more wrong for the greater good dilemma D4. This was not so surprising because the design of the dilemma makes room for System 2 to take control. D4 allows for less personal involvement and emotional load and for more deliberative control in moral judgments. For this reason, most participants judged it right for an American executive to donate to a charity in a foreign country rather than to one in his own country.

Most respondents facing the dilemma to either donate money or buy oneself a car made the non-utilitarian choice despite perceiving the wrongness of doing that. As before, being utilitarian here was cognitively too demanding and self-interest prevailed over the greater good.

Taken as a whole, all the utilitarian dilemmas showed the utilitarian judgment was too demanding in terms of cognitive currency. The moral intuitions related to System 1 were sometimes non-utilitarian and sometimes utilitarian, depending on how a dilemma was framed. Automatic moral judgments are about descriptions, not about substance.

There is fMRI neuroscientific evidence for our experimental results [22] . Judgments in moral utilitarian dilemmas are mediated by a tension between System 1 and System 2. Being utilitarian by using System 2 is too demanding because the brain areas associated with cognitive control (the dorsolateral prefrontal cortex and anterior cingulate cortex) increase activity. In contrast, when System 1 moral judgments occur, the amygdala shows greater activity.

Cite this paper

Sergio Da Silva, Raul Matsushita, Maicon De Sousa (2016) Utilitarian Moral Judgments Are Cognitively Too Demanding. Open Access Library Journal, 03, 1-9. doi: 10.4236/oalib.1102380

References

1. Bentham, J. (1789) An Introduction to the Principles of Morals and Legislation. Clarendon Press, Oxford. http://dx.doi.org/10.1093/oseo/instance.00077240

2. Mill, J.S. (1861) Utilitarianism and Other Essays. Penguin Books, London.

3. Baron, J. and Ritov, I. (2009) Protected Values and Omission Bias as Deontological Judgments. In: Bartels, D.M., Bauman, C.W., Skitka, L.J. and Medin, D.L., Eds., Moral Judgment and Decision Making: The Psychology of Learning and Motivation, Elsevier, San Diego, 133-167. http://dx.doi.org/10.1016/s0079-7421(08)00404-0

4. Greene, J.D., Cushman, F.A., Stewart, L.E., Lowenberg, K., Nystrom, L.E. and Cohen, J.D. (2009) Pushing Moral Buttons: The Interaction between Personal Force and Intention in Moral Judgment. Cognition, 111, 364-371. http://dx.doi.org/10.1016/j.cognition.2009.02.001

5. Sunstein, C.R. (2005) Moral Heuristics. Behavioral and Brain Sciences, 28, 531-542. http://dx.doi.org/10.1017/S0140525X05000099

6. Singer, P. (1979) Practical Ethics. Cambridge University Press, Cambridge.

7. Cushman, F., Young, L. and Greene, J.D. (2010) Our Multi-System Moral Psychology: Towards a Consensus View. In: Doris, J.M., Ed., Oxford Handbook of Moral Psychology, Oxford University Press, Oxford. http://dx.doi.org/10.1093/acprof:oso/9780199582143.003.0003

8. Greene, J.D. (2008) The Secret Joke of Kant’s Soul. In: Sinnott-Armstrong, W., Ed., Moral Psychology: The Neuroscience of Morality, MIT Press, Cambridge, 35-79.

9. Kahane, G. and Shackel, N. (2010) Methodological Issues in the Neuroscience of Moral Judgment. Mind and Language, 25, 561-582. http://dx.doi.org/10.1111/j.1468-0017.2010.01401.x

10. Thomson, J.J. (1985) The Trolley Problem. Yale Law Journal, 94, 1395-1415. http://dx.doi.org/10.2307/796133

11. Bartels, D.M. and Pizarro, D.A. (2011) The Mismeasure of Morals: Antisocial Personality Traits Predict Utilitarian Responses to Moral Dilemmas. Cognition, 121, 154-161. http://dx.doi.org/10.1016/j.cognition.2011.05.010

12. Kahane, G., Everett, J.A.C., Earp, B.D., Farias, M. and Savulescu, J. (2015) “Utilitarian” Judgments in Sacrificial Moral Dilemmas Do Not Reflect Impartial Concern for the Greater Good. Cognition, 134, 193-209. http://dx.doi.org/10.1016/j.cognition.2014.10.005

13. Greene, J.D., Morelli, S.A., Lowenberg, K., Nystrom, L.E. and Cohen, J.D. (2008) Cognitive Load Selectively Interferes with Utilitarian Moral Judgment. Cognition, 107, 1144-1154. http://dx.doi.org/10.1016/j.cognition.2007.11.004

14. Evans, J.S.B.T. (2008) Dual-Processing Accounts of Reasoning, Judgment, and Social Cognition. Annual Review of Psychology, 59, 255-278. http://dx.doi.org/10.1146/annurev.psych.59.103006.093629

15. Frederick, S. (2005) Cognitive Reflection and Decision Making. Journal of Economic Perspectives, 19, 25-42. http://dx.doi.org/10.1257/089533005775196732

16. Foot, P. (1967) The Problem of Abortion and the Doctrine of Double Effect. Oxford Review, 5, 5-15.

17. Moore, A.B., Clark, B.A. and Kane, M.J. (2008) Who Shalt Not Kill? Individual Differences in Working Memory Capacity, Executive Control, and Moral Judgment. Psychological Science, 19, 549-557. http://dx.doi.org/10.1111/j.1467-9280.2008.02122.x

18. Hauser, M., Cushman, F., Young, L., Jin, R. and Mikhail, J. (2007) A Dissociation between Moral Judgments and Justifications. Mind & Language, 22, 1-21. http://dx.doi.org/10.1111/j.1468-0017.2006.00297.x

19. Hare, R.M. (1981) Moral Thinking: Its Levels, Method, and Point. Oxford University Press, Oxford. http://dx.doi.org/10.1093/0198246609.001.0001

20. Kahneman, D. (2011) Thinking, Fast and Slow. Farrar, Straus and Giroux, New York.

21. Hagger, M.S., Wood, C., Stiff, C. and Chatzisarantis, N.L. (2010) Ego Depletion and the Strength Model of Self-Control: A Meta-Analysis. Psychological Bulletin, 136, 495-525. http://dx.doi.org/10.1037/a0019486

22. Greene, J.D., Nystrom, L.E., Engell, A.D., Darley, J.M. and Cohen, J.D. (2004) The Neural Bases of Cognitive Conflict and Control in Moral Judgment. Neuron, 44, 389-400. http://dx.doi.org/10.1016/j.neuron.2004.09.027

NOTES

*Corresponding author.