Journal of Intelligent Learning Systems and Applications, 2011, 3, 113-121 doi:10.4236/jilsa.2011.33013 Published Online August 2011 (http://www.scirp.org/journal/jilsa) Copyright © 2011 SciRes. JILSA 113 A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM Morteza Farrokhsiar, Homayoun Najjaran School of Engineering, University of British Columbia, Kelowna, Canada. Email: {morteza.farrokhsiar, homayoun.najjaran}@ubc.ca Received Jaunary 6th, 2011; revised May 6th, 2011; accepted May 13th, 2011. ABSTRACT This paper presents a modified Rao-Blackwellized Particle Filter (RBPF) approach for the bearing-only monocular SLAM problem. While FastSLAM 2.0 is known to be one of the most computationally efficient SLAM approaches; it is not applicable to certain formulations of the SLAM problem in which some of the states are not explicitly expressed in the measurement equation. This constraint impacts the versatility of the FastSLAM 2.0 in dealing with partially ob- servable systems, especially in dynamic environments where inclusion of higher order but unobservable states such as velocity and acceleration in the filtering process is highly desirable. In this paper, the formulation of an enhanced RBPF-based SLAM with proper sampling and importance weights calculation for resampling distributions is presented. As an example, the new formulation uses the higher order states of the pose of a monocular camera to carry out SLAM for a mobile robot. The results of the experiments on the robot verify the improved performance of the higher order RBPF under low parallax angles conditions. Keywords: Filtering, Higher Order Filter, Rao-Blackwellized Particle Filter, Bearing-Only Systems, Visual SLAM 1. Introduction The basic idea of simultaneous localization and mapping (SLAM) was originally discussed for autonomous robots mainly because of the need to locate the robot in real time in a map which is incrementally built using the in- formation obtained from the robot control and measure- ment systems. Smith et al. [1] established a probabilistic approach to model the uncertainty in the control and measurement signals. In essence, the SLAM provides a suitable framework for describing robot motion and landmark locations together with the underlying uncer- tainties associated with both of them. In this approach, motion model and observation model are described as the first-order and zero-order Markov processes, respec- tively. In the probabilistic approach, the SLAM problem is considered as a filtering problem in which the control input and robot observations are known and the robot pose and landmark locations are the desired unknown states. Although other approaches have been used to solve the SLAM problem, probabilistic approach has been re- mained as the mainstream of the SLAM literature [2]. In order to solve the SLAM problem, Csorba and Durrant-Whyte [3] proposed the use of the Extended Kalman Filter (EKF) which is capable of solving non- linear filtering problems. Their work was further ex- tended in [4,5] where convergence and performance of the EKF solution were discussed, and successful experi- mental results were reported. A major limitation of the EKF solution is that the co- variance matrix must maintain the information of all landmarks of the map, and hence the state vector is at least an space, where denotes the number of landmarks. Clearly, the covariance matrix has to be up- dated in each iteration at a cost proportional to . This computational cost limits the real-time implementation of the EKF SLAM to simple environments and sparse mapping. As a result, reducing th e computational load of the EKF-based SLAM solutions has been a major focus for the researchers working on th e SLAM problem [6-8]. 2 N N 2 N Liu and Thrun proposed a new solution for the SLAM problem using the Extended Information Filter (EIF) [9]. In their solution, the information matrix is sparsified and therefore, memory and computation load have been re- duced significantly. Another solution for the SLAM problem, FastSLAM, based on the Rao-Blackwellized particle filtering (RBPF) was presented by [10]. Fast- SLAM is based on factorization of the robot path from landmark locations in which observation of each land-  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM 114 mark is independent of any other landmark position [11,12]. The use of well-tuned particle filters instead of Kalman-type filters can result in less computational complexity and more robustness in data association [13]. Also, unlike the EKF, there is no need for the lineariza- tion of the motion model. The main shortcoming of FastSLAM was the large number of samples that are needed to avoid the degeneracy of the filter. This prob- lem has been addressed in FastSLAM 2.0 in which both control inputs and observations are used to obtain the sampling distribution. The use of the observations in obtaining the sampling distribution not only reduces the number of samples required for the filtering process but also increases the overall efficiency of the filter [14]. However, it should be noted that in the original form of FastSLAM 2.0, the measurements are only useful for the sampling distribution of the states which are explicitly used in the measurement model. A goal of the algorithm proposed in this work is to eliminate this limitation. Recent research on the SLAM problem focuses on finding more computationally efficient solutions using vision-based approaches, referred to as vSLAM. Typi- cally, it is desirable to adopt a monocular vision system i.e., a single camera, for generating the map [15-18]. The use of a single camera, which is an example of a bearing only device when used as the only source of information, causes the problem of partial observability [17, 19] that is attributed to the lack information about the depth of the landmarks. However, the standard SLAM solutions are based on a fully observable system, and hence the main motivation of th is work is to introduce an appropri- ate method for monocular vSLAM. It is well-known that similar to any other filtering and estimation problem, the performance of the bearing-only SLAM systems is strongly influenced by the system or- der [20]. For example, higher order systems may be able to track more complicated maneuvers and abrupt changes of robot trajectories but they can also increase the esti- mation error unnecessarily for simple motion patterns. In the EKF-based SLAM framework, the motion model order can be modified easily and additional states can be added to the state vector and covariance matrix, but such modification for Rao-Blackwellized SLAM framework is not trivial since higher order states such as velocity and acceleration have no effect in the observation in the original formulation. Although FastSLAM 2.0 is superior to FastSLAM in terms of performance and convergence, it is not applicable to the bearing-only systems with higher order states. The idea of the use of higher order state such as veloc- ity in an RBPF-based SLAM was introduced and briefly discussed for bearing-only systems in [21]. Here, de- tailed formulation as well as a thorough analysis of the estimation performance using experimental results is presented for the proposed approach. In this approach, first the robot pose is updated by sampling velocity from the proposal distribution. Similar to FastSLAM 2.0, the proposed approach incorporates the information obtained from both the control input and observations to obtain the proposal distribution. However, the can also adopt the information obtained the observations for the pro- posal distribution of a state which is of higher order than the observed state (i.e. velocity where the displacement is measured). In essence, this improves the versatility of the proposed approach with respect to the original for- mulation of FastSLAM 2.0. Considering the updated robot pose and last observation, the landmark locations are updated using the EKF. In the next stage, the impor- tance weight of each particle is calculated. Finally, a set of particles is resampled with respect to the weight of the particles calculated in the prev ious step. In ord er to solve the problem of partial observability, each feature is ini- tialized using a modified scheme in which the landmarks are defined using their inverse depth to decrease the nonlinearity of the measuremen t equation in low parallax angles [22]. Another implementation of the higher order Rao- Blackwellized SLAM has been introduced by Törnqvist et al. [23]. However, their approach is based on Fast- SLAM in which sampling distribution is independent of observations whereas the approach proposed in this pa- per proposes an extension of FastSLAM 2.0 in which sampling distribution relies on the control input as well as the observations. More precisely, they have focused on reducing the computational load of FastSLAM by introducing a new factorization technique, whereas in this paper the main concern is to extend FastSLAM 2.0 so that it incorporates observations in obtain ing the sam- pling distribution of the states ev en if they are not explic- itly used in the measurement model. Another difference between this work and the one presented in [23] is that their approach has been implemented into an unmanned aerial vehicle (AUV) equipped with multiple sensors including inertial navigation system (INS) and cameras whereas the main focus of this paper is on bearing-only systems and tackling the partial observ ability problem. The detailed formulation of the proposed estimation approach including sampling and importance weight distributions are presented in Section 2. In Section 3, the motion and observation models of a bearing-only system are discussed. Section 4 presents another important fea- ture of the proposed SLAM method that is the initializa- tion scheme required to tackle the partial observability problem. Section 5 presents the results of a SLAM ex- periment carried out using a digital camera in a labora- tory environment. C opyright © 2011 SciRes. JILSA  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM 115 2. Velocity Based RBPF Approach In the SLAM problem, the only available information for the observer is the measurements, 1:t and the control input 1:t, e.g. acceleration, as they become available. The index 1:t refers to the time period between the first iteration and the iteration at time In the vision-based SLAM, it can be assumed that the data association prob- lem [24] is solved using image processing methods such as the SIFT algorithm [25-27]. The bearing-only system is modeled using a co nstant velocity model which means that both position and velocity are included in the state vector. In this way, the accelerations are characterized using a Gaussian distribution. The approximated accel- eration is assumed as the control input. The velocity dy- namics can be presented as the first order Markov chain: z u 11 ,;, ttttt t t pxu xNxfxuR , (1) where t is the velocity in time t. The symbol N de- notes the normal distribution with the mean of 1 (,) tt xu and covariance of t. The relationship be- tween the velocity and pose is a deterministic function presented as: R 1, ttt gy x (2) which means that the current pose, is determined based on the previous pose, and the current veloc- ity, t y 1t y t . The observation equation is a zero order Markov chain: ,, t ,, t tc ttt zmyc N c my t c ; t zh , t t cQ hm m p where is the observation in time t. The symbol N stands for the normal distribution with the mean of and covariance of . The data association decision and landmark location are shown by and respec- tively. In the full SLAM problem formulation, the final objective is to find the posterior of the entire path and the map [13]: t z ,, t ctt y c t c t Q 1:1:1: 1: ,,, ttt ps mu z c t (3) where s is the robot pose state vector, , xy m, stands for known data association and is the map which is the set of landmarks: t c:1 1,, N mm m (4) It is shown that this problem can be written in the fac- tored form [28]: 1:1:1: 1:1: 1:1: 1: 1: 1: 1: 1 ,,, ,, ,, t ttttttt N ittt i ps mu z cpsuz c pmzs c (5) This factored form can be solved using one of the RBPF based approaches discussed in [10,14]. The local- ization problem is solved using the particle filter. Each particle, t, in this approach consists of a robot trajec- tory estimate and a set of estimators of the individual landmark locations. In order to use the Rao-Blackwel- lized Particle Filter (RBPF) approach, proper proposal and importance weight distributions have to be deter- mined y 2.1. Proposal Distribution Based on the idea discussed in [14], the proposal distri- bution is chosen as: [][] [] 1: 11:11:1:1: ~,,,, kkk ttttttt psxyuz c (6) This distribution includes all information related to the path, [] 1: 1 k t and [] 1: 1 k t controls, 1:t u, observations, 1:t, and data associations, 1:t. Considering the fact that z c , xy, this distribution can be expanded as: [] [] 1: 11: 11:1:1: [] [] 1: 11: 11:1:1: [] [] 1: 11: 11:1:1: ,,,, ,,,, ,,,,, kk tttttt kk tttttt kk ttttt t t ps xyuzc pxxyuzc pyxxyuz c (7) Using Equation (2) and the Markov rule: [] [] 1: 11: 11:1:1: [] 1: 1, ,,,,, ,d tt kk ttttt t t k tt tyy pyxxyuz c pyx y (8) where is Kronecker delta and d t is the determinis- tic result of the Equation (3) when t and [] 1: 1 k t are given. Ther efor e, the propo sal distr ibution of Equa tion (6) is simplified as: [] [] 1: 11:11:1:1: [] [] 1: 11:11:1:1:, ,,,, ,,,,d tt kk tttttt kk tttttt y psxyuz c pxxyuz c (9) Thus, sampling must occur in two separate stages. The velocity states, t has to be drawn from the following probability distribution: [] [] 1: 11: 11:1:1: ~,,,, kkk ttttttt [] (10) pxxyuz c whereas the states related to the robot pose, have to be calculated using Equation (2). Using the Bayesian formulation, the probability distribution of Equation (11) can be presented as: [][][] [] 1: 11: 11:1: 11: [] [] 1: 11: 11:1: 11: ~,,,, ,,,, kk kk ttttttt kk ttttt t , t pzx xyuzc pxxyu zc (11) and using Markov ch ain: [][][] [][] 1: 11:11:1: 11:1 ~,,,,, kk kkk tttttttttt , t pzx xyuzcpxxu (12) Considering the observation model, the posterior in Equation (12) can be presented as: C opyright © 2011 SciRes. JILSA  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM 116 [] 1 [] [] ,, ,,, , 1:1:1 1: 1: 11: 1 [] |, [] [] ,,,,, 1:1:11: 1:1 1:1dk tt t t kk pzx mxyuzc tt cttt tt ktmpxx u c kk t pmcx xyuzc tttt tt (13) A general closed form solution of Equation (13) is not available unless the linearizability assumption [14] is imposed on the system. Using these assumptions the closed form of the Equation (13) can be obtained as the: [] [] 1: 11: 11:1:1: [] [] ,,,, exp kk tttttt kk t pxxyuz c P (14) where [][][] []1 [] [] [] 1[] 1ˆ ˆ 2 ˆ ˆ ˆˆ T kk k tttytt kk ttyt t T kk ttt tt PzzHyyQ zz Hyy xxRxx k (15) where , [] ˆk t z[] ˆk t and [] ˆk t are prediction of the obser- vation, velocity states and pose states, respectively. Also is the Jacobian of the measurement model with re- spect to the pose states. In addition, is defined as: []k t Q [] [] ,1 t kTk ttmct QQH H m (16) where m is the Jacobian of the measurement model with respect to the landmark location and [] ,1 t k ct is the covariance of the observed landmark location estimation. Considering the fact that the covariance of the Gaussian function is the inverse of the second derivative, and also invoking Equation (16), the sampling distribution co- variance is obtained from : 1 [] []1 t T kk yx tyxt YQHY R (17) where Y is the Jacobian of the Equation (2) with re- spect to the robot velocity states. Also, the mean value which is the root of the first order derivative equals to: 1 [] []1 t T kk yx tyxt YQHY R (18) In summary, to sample from the trajectory, the first velocity states have to be drawn from the Gaussian dis- tribution with the covariance and mean indicated in Equ- ations (17) and (18), respectively. Then, the pose states have to be calculated using Equation ( 2). 2.2. Importance Weight As it is discussed in [14] in the particle filtering and also RBPF, resampling is the crucial stage of the filtering process. In order to resample the particle, a proper im- portance weight has to be assigned to each particle. As the other particle filter approach, the importance weight [] target distribution roposal distribution t (19) The target distribution is the robot trajectory posterior, k 1:1: 1: 1: (|,,) tttt suz c. The proposal distribution is (| t ps p [] 1: 11:1:1: ,,,) k tttt uzc gi previous stages, ri ven the correct posterior from [] 1: 11:1:1: (|,,) k tttt psuz c . Therefore, Equa- tion (19) can be w the tten as: 1:1: 1: 1: 1: 11:1:1:1: 11:1:1: ,, ,,, ,, [k] t ω tt tt [k] [k] tt tttt ttt ps u zc pssu z cpsuz c (20) which can be presented as: [] 1: 11:1:1: ,,, k ttttt zsuz c (21) This equation can be transformed as: []k t p is defined by the following quotient: 1: 1 1:1:1: [] 1: 11:1: 11: , ,, ,d tt ttttt k tttttt c pssu zcs (22) Using Markov and a similar procedure to Equations (9 [] [] ,,, kk pzssuz )-(13): [] [] 1: 11:1:1: [] [] 1: 11:11:1: 11: [] 1: 11:1: 11: ,,,,, ,,,,, ,,,dd t t t kk ttttctt kk ctttt tt k tttttc pzssm uzc pmx xyuzc pxsuzcms t (23) This equation can be simplified further using Markov ch ain to: [k tt ]1 [] 1: 11:1: 11: ,d ,,,,d tt ttt k tt ccttttct pxx ux pz ympmsuzcm (24) Using the system and measurement model, Equations (1)-(3), and linearizing Equations (2)-(3), the closed form of the Equation (24), can be obtained as the Gaussian distribution: 1 [][] 2 [] []1[] 2 1ˆ exp 2 kk tt kk k tt ttt L zz Lzz ˆ (25) where [] [] [] ,1 t T kk tyxty Tk mct m LHYQHY HHR x (26) The higher order RBPF for M particles can be summa- riz ents Model g the constant veloc- ed as it is shown in Table 1. 3. Motion and Measurem 3.1. Camera Motion Model The camera motion is modeled usin C opyright © 2011 SciRes. JILSA  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM 117 Table 1. Pseudo-code of higher order RBPF approach. Do the following M times Retrieve the previous trajectory information [] 1 k t from the kth particle set of the tr ). previous iteration, [] 1 k t Y (Re ieval Sample a new velocity []k t ased on the proposal distribution (Prediction). Update the po s e ba s e d o n t h e s a mpled velocity (Prediction cont’d) Update the mean and covariance of the landmark distribution using the predicted pose and the observation of the landmark (Measure- ment update). Calculate the importance weight []k t for the new particle (Im- portance weight). bability proportional to Sample, with replacement, M particles, where each particle is sampled with a pro[]k t (Resampling) ity input assumption in which the velocity update equa- on can be expressed as [29]: (27) where and are the velocity velocity of the camera in the world coordinates, respec- lso (28) where ti WWW t W vvV ww t W W ttt W t vW t wand rotational tively. A WW at V WW ut W a, W and are accelerati me respectively. In the bear- where is the quaternion defined dopted from [30]. every point i from a ca t interval, on, angular ac- celeration and ti ing-only situation acceleration and angular acceleration can be estimated by a Gaussian white noise. The camera pose can be defined by camera optical center, W r, and the quaternion, W q, defining the position and orienta- tion of the camera in the global coordinates, respectively: 1 WW Wtt WW W rt r gqq t q v w (29) 1tt t W t qt tor W t by the rota- tion vect. 3.2. Measurement Model The measurement model has been a a 3D scene, the observation ofIn m- era is defined by a vector consisting of six states: ,,,,, T iiiiiii mxyz (30) This vector determines the point location as: 1, i iii Yy n i i x z (31) where is the location of the optical center of the camera at the first observation, i g ray,is the inverse of the point depth along the carryin (,,) T iii yz i d, i and i tional vector are azimuth and elevation angles of ray at the global coordinate frame. Thdirec diree rayctional vector, (, ii n) is defined as: ,cossinsin coscos iii iii ni (32) Each observation can be interpreted as a constraint between the camera pose and the corresponding land- ing the pin-holemark. Us landm the projectio model ark in the camera coordinate is gi [31], the location of the note ven by: e cam de CCWW hRYr (33) where CW R is the rotation matrix from the global coor- dinate frame to the camera coordinate frame. The last equation can be expanded as: , C W W ii ii i hR yr n z i C x h age plane According to the pinhole model, t n of into the im (34) era observes d by: C h 0 0 (,) y zh smf d x f ud (35) and Where 0 u0 are the camera center in pixels, f is the focal length, and d and d are the pixel sizes. Also, and ad as: re define C x C z C y C h h h (36) z h Feature Initialization itialization process includes state values and assigning te relate landmark is EK 4. The in of the matrix. Once a both initial estimation h initialized, t F. T d c e estim o ova a posed itialization ap- riance tion ini- h he pr n process is carried out using an tialization approach is similar to the i proach discussed by [30,32] but modified according to the RBPF approach used here. The initial state values for the landmark, ˆi m can be expressed as: ˆˆˆ ˆˆˆ ,,,,, T iiiiiii mxyz (37) These values have to be determined using the location information, observation of new landmarks and the ini- tial estimation h inverse, of the dept0 . The optic center C opyright © 2011 SciRes. JILSA  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM Copyright © 2011 SciRes. JILSA 118 of the camera is directly taken from the tion of the camera location. The azimuth an by [25,33]. current estima- and elevation A digital camera is installed on an Amigobot robot de- signed by Mobile Robotic Inc. The robot is programmed to move on a straight line with constant velocity of 0.6 m/sec while capturing a video from the laboratory envi- ronment. The robot navigation system equipped with wheel encoders indicates that the robot has been moved 2198 mm in x-direction and –121 mm in y-direction. gles of the projection ray are obtained from inverse of the camera pin-hole model. In the range min 1 0, d , the inverse dept h, 0 , can be estimated as: 0min 1 ˆ2d (38) higher order RB approach and initiali The lization scheme of the zation discussed in [32] lies in itial covariance matrix used. In the higher order R covarian tialization is: ovariance of camera, and the Jaco is giv difference between initia nhe noise c : PF the i BPF, t the en by ce matrix after this ini- 2 00 00 00 t T t D Q PJ J (39) where t Q is bian ,,, iiii WW kk mmmm h rq x (41) where In this experiment, the RBPF consisting of 40 particles initializes and filters the features extracted using the SIFT algorithm from the video frames. The calibration parameters of the camera play a crucial role in initializa- tion and filtering processes [29]. The camera has been calibrated based on the open access Matlab toolbox pro- vided in [34]. Sample frame of the video and detected SIFT features are shown in Figure 1 In addition to the core RBPF algorithm used in the simulation program, additional subroutines have been added to the filtering algorithm to make it more robust in real world imple- mentations. First, a probabilistic scheme has been added to the filter to evaluate the probability of the SIFT matching results and remove possible outliers. Specifi- cally, RBPF will ignore the observation if the matching probability is smaller than a specific threshold value. The matching probability is computed based on the corre- spondence likelihood discussed in [13]. Also, the nega- tive inverse depth which is an important issue in the in- verse depth bearing-only SLAM [35] has been avoided using the ability of the particle filter to fuse the hierar- chical knowledge with the positive information proc- essed by the probabilistic approach [13]. The robot path and estimated localization results are shown in the Fig- ure 2. The time evolutions of the robot pose states are shown in Figures 3 and 4. Also estimation of cosine of the robot heading angle has been shown in the Figure 5. (40) J Also, T txt DHRH isbian of the pose s spect to theand is the . Thovariandefined as: the J e de aco velocity states pth c tates with re- control input t R ce is covariance min 1 4d d n carried out to evaluateposed RBPF tion with comre extrac (42) 5. Experimental Results an Discussion An offline experiment using a video clip has beeThe RBPF estimated path which has been shown in Figure 2 follows the true robot path with slight deviation in the y-direction. This deviation can be explained by basic experimental errors, such as the robot control sys- the mpro on featu approach in combina- tion methods discussed Figure 1. Robot path; true value (solid line) estimation (dashed line).  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM 119 Figure 2. Robot path; true value (solid line), estimation (dashed line). Figure 3. Estimation of the robot in the x-direction versus time. tem error, the camera-robot alignment and also oversim- plification of the measurement model e.g., the radial dis- tortion of the camera lens has been totally ignored in this research. Robot motion estimation in the x and y direc- tions are shown in Figures 3 and 4. The estimation of the heading angle cosine is shown in Figure 5. Although the major objective in higher order monocu- lar vSLAM is to solve the localization problem and pro- duced sparse cannot be used directly, mapping results is able to provide better insight to the proposed approach. The Monte Carlo simulations of the spatial distributions of a sample landmark, after the first, fourth and seventh frame are shown in Figure 6. As it is shown in Figure 6 sed. Also, the Euclidian norm of the landmark , the spatial volume which represents the uncertainty in the location of landmark, reduces as more frames are proces Figure 4. Estimation of the robot in the x-direction versus time. Figure 5. The robot velocity in the x-direction; nominal (solid line), estimated (dashed line). location covariance matrix has been calculated for dif- ferent frames. The evolution of this norm versus number of frames in which the landmark has been observed is shown in Figure 7. As it is shown in Figure 7, the co- variance norm decreases drastically after a few frames and then converges to a final value. The trend of the co- variance norm is compatible with the Monte Carlo simu- lation results. 6. Conclusions In this paper a new Rao-Blackwellized particle filte formulation where the velocity is considered a constant, filtering is performed under the constant acce- leration assumption meaning that the velocity is a state r suitable for monocular vision (i.e., bearing-only) systems is proposed. In the proposed approach, unlike the tradi- tional C opyright © 2011 SciRes. JILSA  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM 120 Figure 6. The Monte Carlo simulations of the spatial distribution seventh frame. s of a landmark; (from left to right), the first, fourth and Figure 7. EuclidSystems, Vol. 3, No. 1, 2006, pp. 79-84. ian norm of the landmark location covari- ance matrix versus time. variable estimated by the filter. Although the inclusion of higher order terms in a parameterized filter such as an EKF may be considered straightforward, the entire for- mulation must be reworked for such modification of RPBF. New sampling and importance weight distribution based on the constant acceleration assumption have been derived. Also to solve the bearing only problem, a modi- fied initialization scheme has been proposed. Numerical simulations have been carried out to examine the perfor- mance of the proposed system. The higher order RBPF approach has been compared to a lower order RBPF approach in similar conditions. Simulation results verify not only the feasibility of the proposed higher order RBPF approach but also its superiority to the lower orde RBPF approach estimates the path successfully. It is also MITACS Inc., Canada. 8. References [1] R. Smith, M. Self and P. Cheeseman, “A Stochastic Map for Uncertain Spatial Relationships,” In: R. Bolles and B. Roth, Eds., The Fourth International Symposium of Ro- botics Research, The MIT Press, Cambridge, 1988, pp. 467-474. [2] D. Micucci, D. G. Sorrenti, F. Tisato an d F. M. Marchese, “Localisation and World Modelling: An Architectura Perspective,” International Journal of Advanced Robotic [3] M. Csorba and H. F. Durrant-Whyte, “New Approach to Map Building Using Relative Position Estimates,” Navi- r RBPF approach for high parallax angle. Also, an offline experiment has been conducted in which the higher order shown that the proposed filter requires large parallax angle to track the velocity accurately. It is also noted that the generalized higher order formulation is of great importance where there is a need to estimate the velocity as one of the states in the filtering process. gation and Control Technologies for Unmanned Systems II, SPIE, Bellingham, 1997, pp. 115-125. [4] M. W. M. G. Dissanayake, et al., “A Solution to the Si- mulaneous Localization and Map Building (SLAM) Problem,” IEEE Transactions on Robotics and Automa- tion, Vol. 17, No. 3, 2001, pp. 229-241. doi:10.1109/70.938381 7. Acknowledgements This work was support ed in part by the Natural Sciences and Engineering Research Council of Canada (NSERC), nuktun Services Ltd., Nanaimo, BC, Canada, and I l [5] G. Dissanayake, S. B. Williams, H. Durrant-Whyte and T. Bailey, “Map Management for Efficient Simultaneous Localization and Mapping (SLAM),” Autonomous Robots, Vol. 12, No. 3, 2002, pp. 267-286. doi:10.1023/A:1015217631658 [6] S. Ahn, J. Choi, N. L. Doh and W. K. Chung, “A Practi- cal Approach for EKF-SLAM in an Indoor Environment: Fusing Ultrasonic Sensors and Stereo Camera,” Autono- mous Robots, Vol. 24, No. 3, 2008, pp. 315-335. doi:10.1007/s10514-007-9083-2 [7] U. Frese, “Treemap: An O (log n) Algori and Mapping,” thm for Indoor Autonomous 6, pp. 103-122. Simultaneous Localization Robots, Vol. 21, No. 2, 200 doi:10.1007/s10514-006-9043-2 [8] S. Huang, Z. Wang, G. Dissanayake and U. Frese, “Iter- ated D-SLAM Map Joining: Evaluating Its Performance in Terms of Consistency, Accuracy and Efficiency,” Au- tonomous Robots, Vol. 27, No. 4, 2009, pp. 409-429. C opyright © 2011 SciRes. JILSA  A Velocity-Based Rao-Blackwellized Particle Filter Approach to Monocular vSLAM 121 doi:10.1007/s10514-009-9153-8 erlo, S. Thrun, D. Koller and B. Wegbreit, . Ruhnke, G. abilistic Robo- mbridge, 2006. Joint Conference on 1156. 654 [9] Y. F. Liu and S. Thrun, “Results for Outdoor-SLAM Using Sparse Extended Information Filters,” Proceedings of the 2003 IEEE International Conference on Robotics and Automation, Taipei, 2003, pp. 1227-1233. [10] M. Montem “Fast SLAM: A Factored Solution to the Simultaneous Localization and Mapping Problem,” Proceedings of 18th National Conference on Artificial Intelligence (AAAI-02), Edmonton, 2002, pp. 593-598. [11] K. P. Murphy, “Bayesian Map Learning in Dynamic En- vironments,” Advances in Neural Information Processing Systems, Denver, 1999, pp. 1015-1021. [12] R. Kümmerle, B. Steder, C. Dornhege, M Grisetti, C. Stachniss and A. Kleiner, “On Measuring the Accuracy of SLAM Algorithms,” Autonomous Robots, Vol. 27, No. 4, 2009, pp. 387-407. [13] S. Thrun, W. Burgard and D. Fox, “Prob tics,” The MIT press, Ca [14] M. Montemerlo, S. Thrun, D. Koller and B. Wegbreit, “Fast SLAM 2.0: An Improved Particle Filtering Algo- rithm for Simultaneous Localization and Mapping that Provably Converges,” International Artificial Intelligence, Las Vegas, 2003, pp. 1151- [15] A. J. Davison, “Real-Time Simultaneous Localisation and Mapping with a Single Camera,” Proceedings of Ninth IEEE International Conference on Computer Vision, Nice, 2003, pp. 1403-1410. doi:10.1109/ICCV.2003.1238 Particle Filter [16] E. Eade, “Scalable Monocular SLAM,” 2006 IEEE Com- puter Society Conference on Computer Vision and Pat- tern Recognition, Vol. 1, New York, 2006, pp. 469-476. [17] N. M. Kwok and A. B. Rad, “A Modified for Simultaneous Localization and Mapping,” Journal of Intelligent and Robotic Systems, Vol. 46, No. 4, 2006, pp. 365-382. doi:10.1007/s10846-006-9066-0 [18] A. Cesetti, E. Frontoni, A. Mancini, P. Zingaretti and S. Longhi, “A Vision-Based Guidance System for UAV Navigation and Safe Landing Using Natural Landmarks,” Journal of Intelligent and Robotic Systems, Vol. 57, No. 1-4, 2010, pp. 233-257. doi:10.1007/s10846-009-9373-3 [19] T. Bailey and H. Durrant-Whyte, “Simultaneous Locali- sation and Mapping (SLAM): Part II-State of the Art,” Robotics and Automation Magazine, Vol. 13, 2006, pp. 108-117. doi:10.1109/MRA.2006.1678144 [20] Y. Bar-Shalom, X. R. Li, T. Kirubarajan and J. Wiley, “Estimation with Applications to Tracking and Naviga- tion,” Wiley, New York, 2001. doi:10.1002/0471221279 [21] M. Farrokhsiar and H. Najjaran, “A Higher Order Rao- Blackwellized Particle Filter for Monocular vSLAM,” American Control Conference 2010 (ACC-2010), Balti- more, 2010, pp. 6987-6992. [22] J. M. M. Montiel, J. Civera and A. J. Davison, “Unified Inverse Depth Parametrization for Monocular SLAM,” Proceedings of Robotics: Science and Systems, 2006. [23] D. Törnqvist, T. B. Schön, R. Karlsson and F. Gustafsson, “Particle Filter SLAM with High Dimensional Vehicle Model,” Journal of Intelligent and Robotic Systems, Vol. 55, No. 4-5, 2009, pp. 249-266. doi:10.1007/s10846-008-9301-y [24] H. F. Durrant-Whyte and T. Bailey, “Simultaneous Loca- lization and Mapping: Part I,” IEEE Robotics & Automa- tion Magazine, Vol. 13, No. 2, 2006, pp. 99-110. doi:10.1109/MRA.2006.1638022 [25] D. G. Lowe, “Distinctive Image Features from Scale- Invariant Keypoints,” International Journal of Computer Vision, Vol. 60, No. 2, 2004, pp. 91-110. doi:10.1023/B:VISI.0000029664.99615.94 [26] S. Se, D. Lowe and J. Little, “Mobile Robot Localization and Mapping with Uncertainty Using Scale-Invariant Visual Landmarks,” The International Journal of Robot- ics Research, Vol. 21, No. 8, 2002, p. 735. doi:10.1177/027836402761412467 [27] F. Caballero, L. Merino, J. Ferruz and A. Ollero, “Un- manned Aerial Vehicle Localization Based on Monocular Vision and Online Mosaicking,” Journal of Intelligent and Robotic Systems, Vol. 55, No. 4-5, 2009, pp. 323-343. doi:10.1007/s10846-008-9305-7 [28] M. Montemerlo and S. Thrun, “FastSLAM, A Scalable Method for the Simultaneous Localization and Mapping Camera SLAM,” IEEE ysis and Machine Intelli- Problem in Robotics,” Springer, Berlin, 2007. [29] A. J. Davison, I. D. Reid, N. D. Molton and O. Stasse, “MonoSLAM: Real-Time Single Transactions on Pattern Anal gence, Vol. 29, No. 6, pp. 1052-1067. doi:10.1109/TPAMI.2007.1049 [30] J. Civera, A. J. Davison and J. M. M. Montiel, “Inverse Depth Parametrization for Monocular SLAM,” IEEE Transactions on Robotics, Vol. 24, No. 5, 2008, pp. 932-945. doi:10.1109/TRO.2008.2003276 [31] K. S. Fu, R. C. Gonzalez and C. S. G. Lee, “Robotics: ocular vSLAM with a ,” Proceedings of 5 th atures to Track,” Pro- Calibration oceedings of on Intelligent Robots Control, Sensing, Vision, and Intelligence,” McGraw-Hill, New York, 1987. [32] M. Farrokhsiar and H. Najjaran, “Rao-Blackwellized Particle Filter Approach to Mon Modified Initialization Scheme ASME/IEEE International Conference on Mechatronic and Embedded Systems and Applications, San Diego, 2009, pp. 427-433. [33] J. Shi and C. Tomasi, “Good Fe ceed- ings of 1994 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, 1994, pp. 593-600. [34] J. Y. Bouguet, “A Release of a Camera Toolbox for Matlab, ” 2008. http://www.vision.caltech.edu/bouguetj/calib_doc/ [35] M. P. Parsley and S. J. Julier, “Avoiding Negative Depth in Inverse Depth Bearing-Only SLAM,” Pr IEEE/RSJ International Conference and Systems, 2008, pp. 2066-2071. C opyright © 2011 SciRes. JILSA

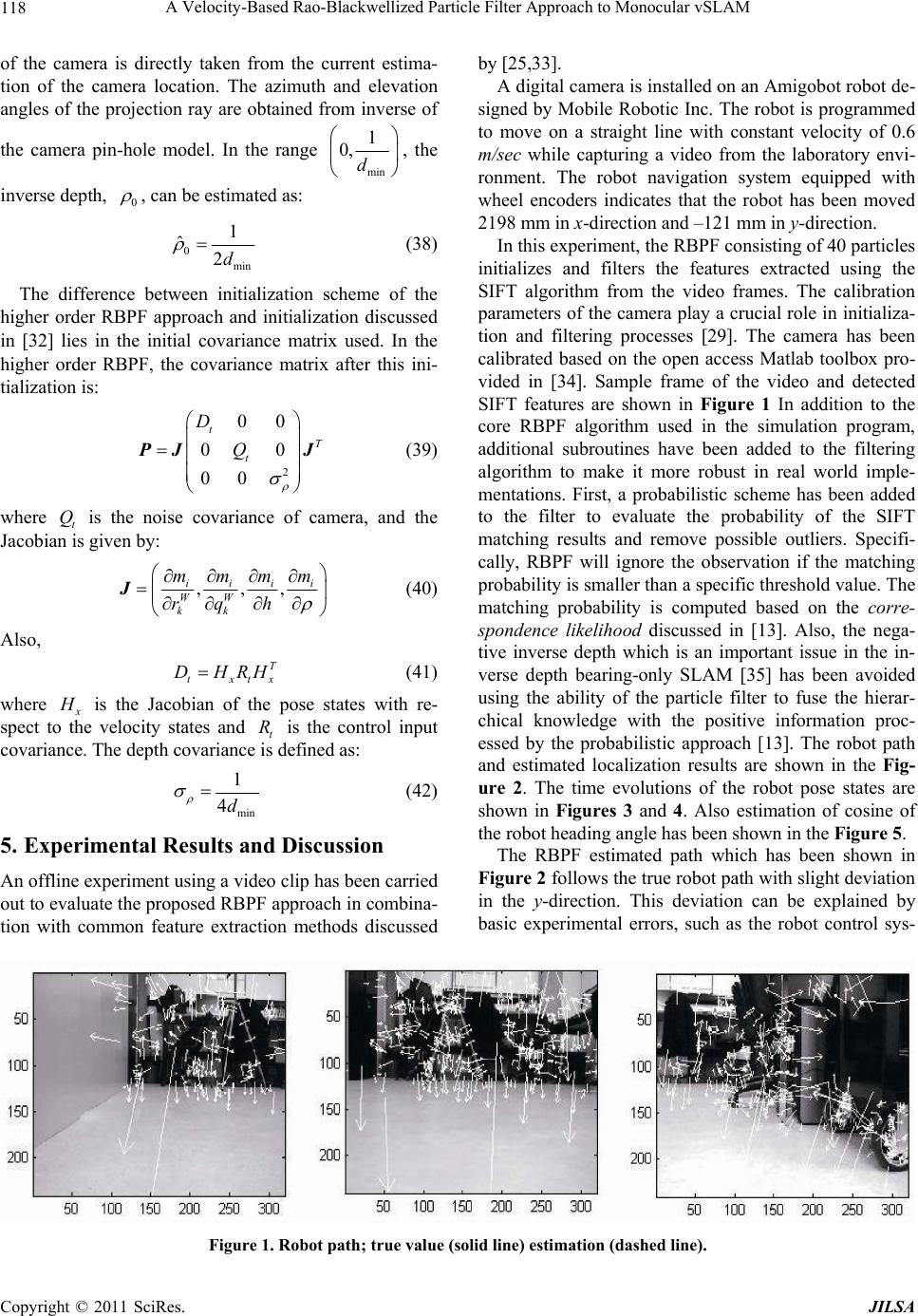

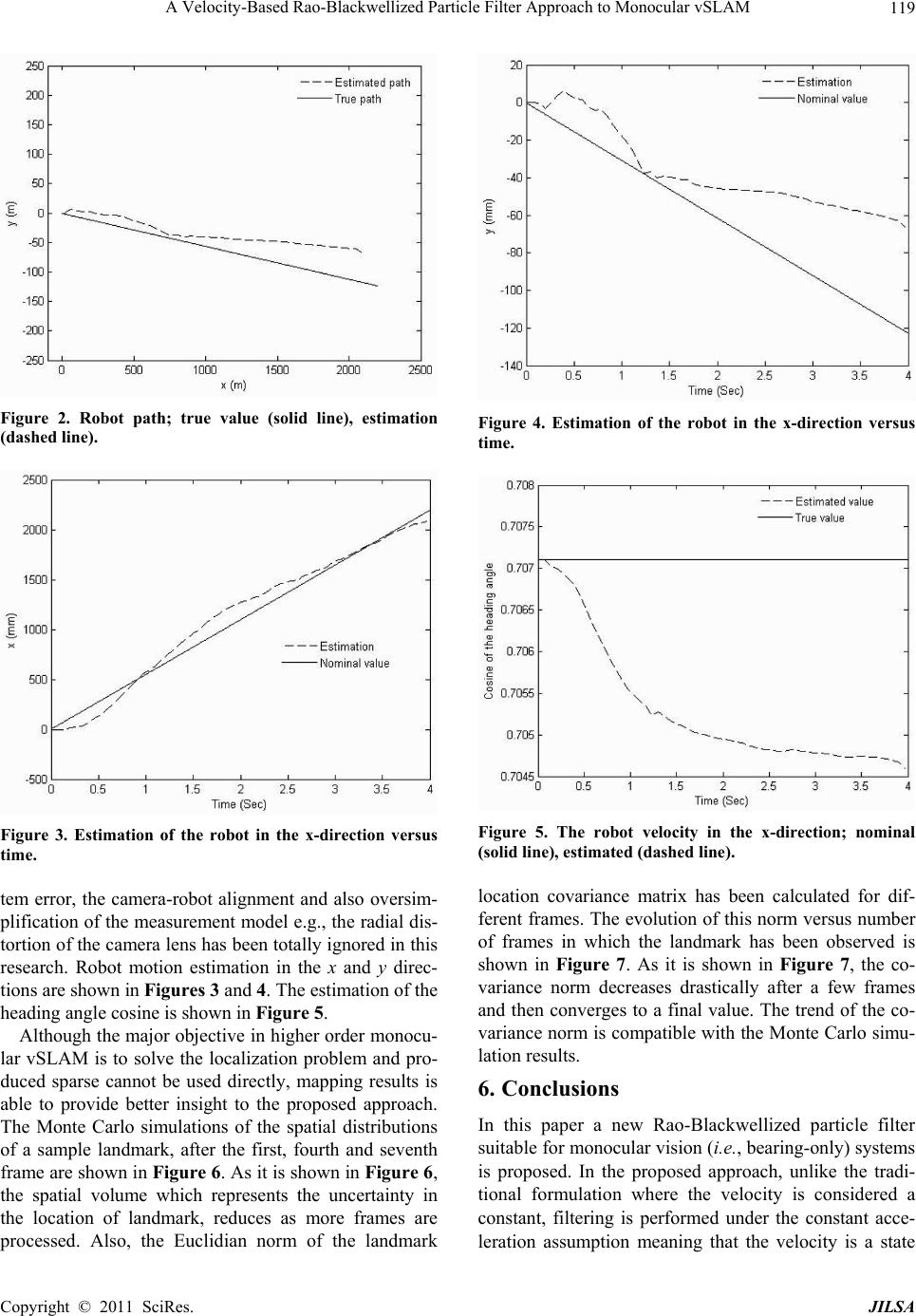

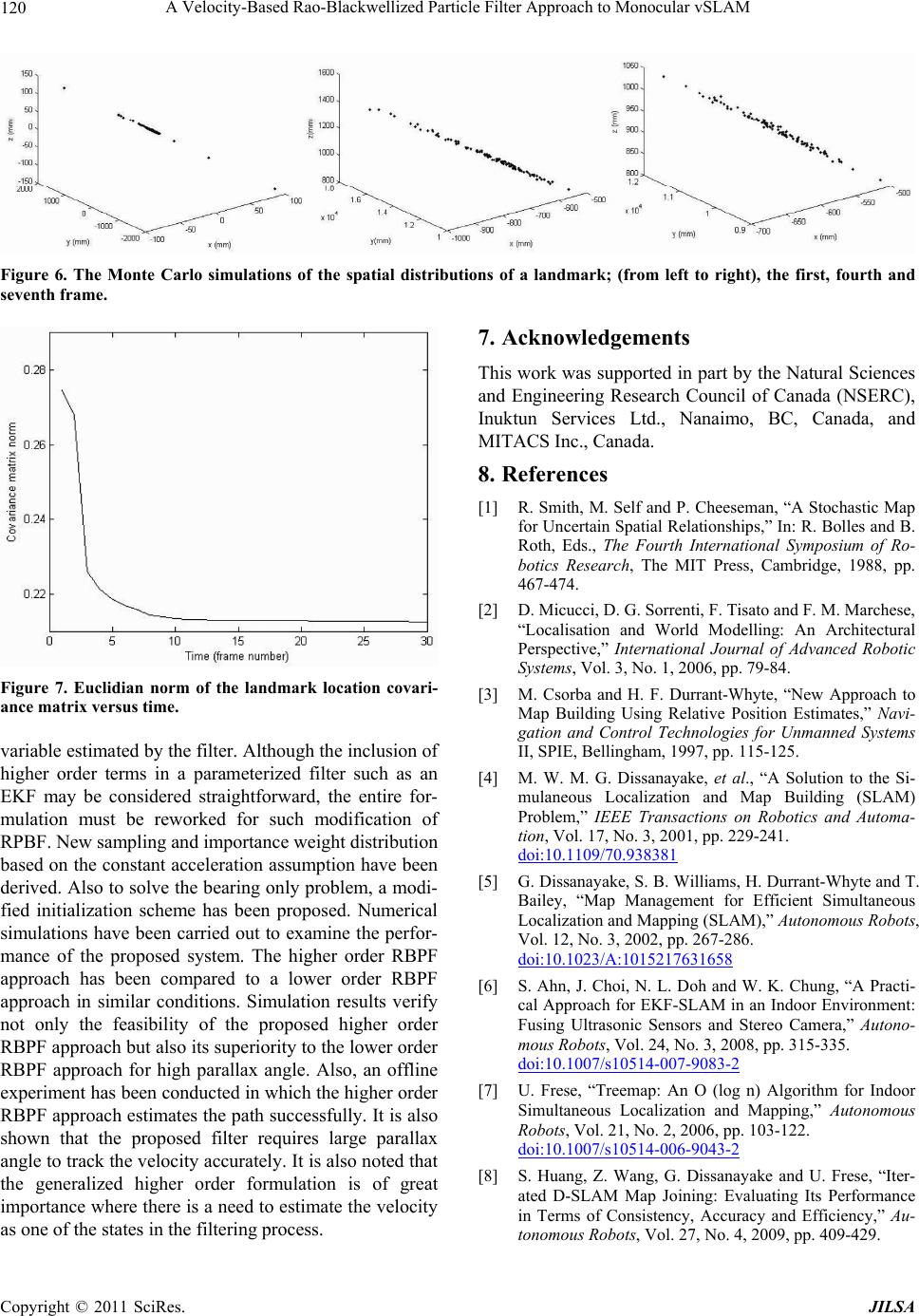

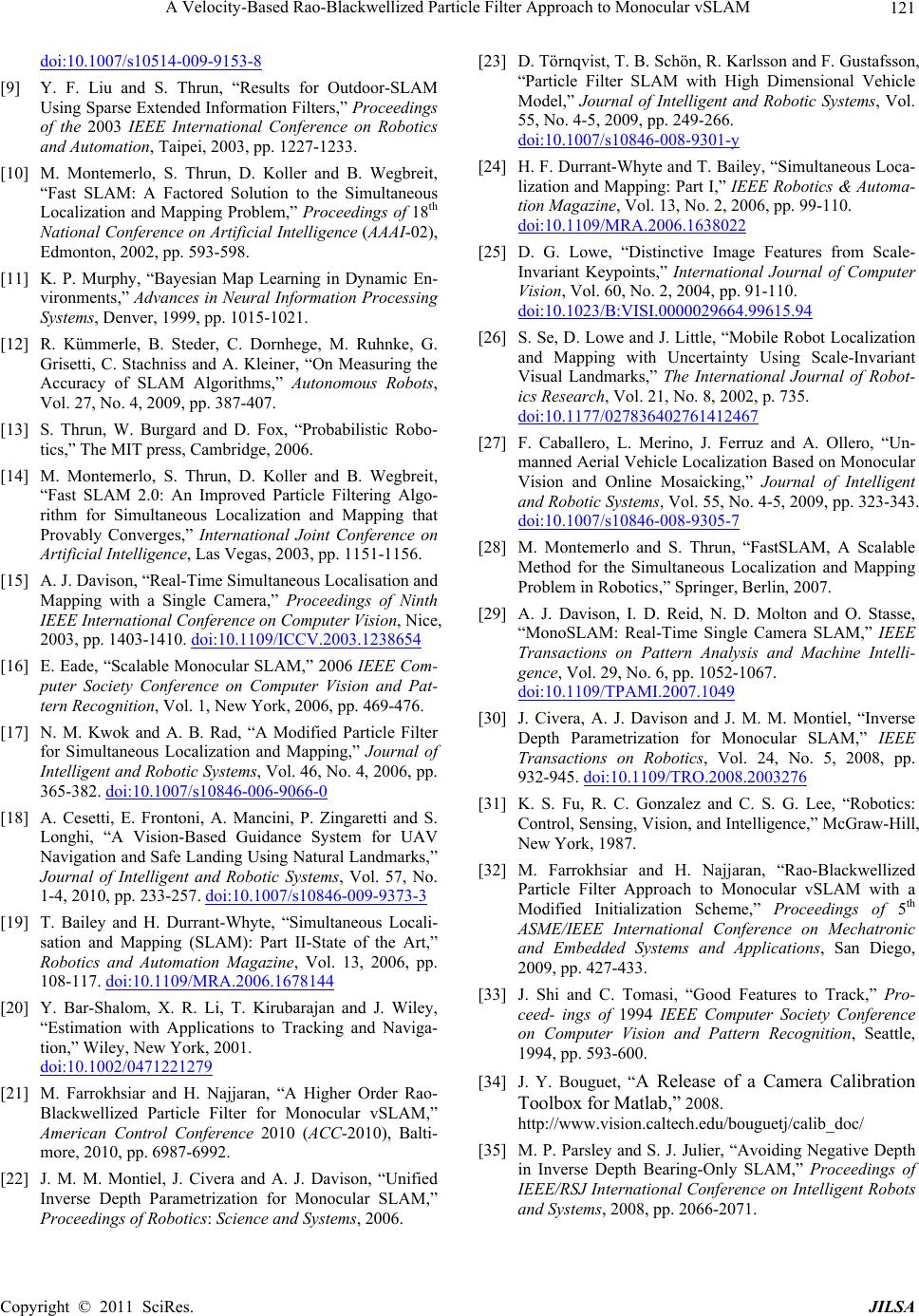

|