J. Service Science & Management, 2009, 3: 168-180
doi:10.4236/jssm.2009.23020 Published Online September 2009 (www.SciRP.org/journal/jssm)
Copyright © 2009 SciRes JSSM

AHP and CA Based Evaluation of Website Information Service Quality: An Empirical Study on High-Tech Industry Information Center Web Portals

Changping HU1, Yang ZHAO1, Mingjing GUO1,2
1School of Information Management, Wuhan University, Wuhan, China; 2Faculty of Economy and Management of China University of Geosciences, Wuhan, China.
Email: zyaxjlgg_0813@hotmail.com
Received January 18th, 2009; revised April 15th, 2009; accepted July 20th, 2009.

ABSTRACT
Web portals are a useful way for high-tech information centers to provide information services to high-tech enterprises. The importance of evaluating the information service quality of such web portals has been recognized by both information service researchers and practitioners. By applying an analytic hierarchy process (AHP) approach, this study constructs a hierarchical evaluation criteria model and determines the global priority weight of each criterion in the model. Based on the model, two different methods, the comprehensive score method and correspondence analysis (CA), are used to analyze and evaluate, from different aspects, the information service quality of ten primary information center web portals in China's high-tech industry. The findings provide a useful instrument for researchers who wish to measure the information service quality of web portals, as well as for high-tech information center managers who want to enhance the service performance of their web portals.

Keywords: information service, web portal, high-tech industry, AHP, correspondence analysis

1. Introduction
High-tech industry is a knowledge- and technology-intensive industry that needs high-quality and diversified information services.
In China, information services for high-tech industry are usually provided by specialized information centers, such as the China National Chemical Information Center and the State Food and Drug Administration Information Center. These centers mainly provide information services through their web portals. A high-tech industry information center web portal (HIIC web portal) is a website that provides high-tech enterprises with online information and information-related services for their decision-making, such as information retrieval, information publication, and business consulting; it is also a good channel for enterprises to communicate with their business partners.

With the rapid development of high-tech industry in China, the industry's information quality requirements have become higher and higher. As a result, analyzing, evaluating, and then improving the information service quality of web portals has become a very important task for information centers. In recent years, the evaluation of website information service quality has attracted considerable attention, and relevant studies [1-3] have been carried out on this issue. In these studies, different scholars have identified different evaluation criteria and proposed various evaluation methods and models, such as the website flux index statistical method [2], expert assessment, questionnaire investigation [1], and the comprehensive evaluation method [3]. However, most of these studies only introduce the model construction process or application fields, without producing specific evaluation results for the websites. In addition, with respect to the types of websites evaluated, the majority concentrate on e-commerce websites [4] and library websites [5], with little focus on information center web portals.
Therefore, there is an urgent need for an effective method to evaluate the information service quality of HIIC web portals and to help their owners improve it.

This study first identifies the information service quality evaluation criteria and sub-criteria of HIIC web portals according to their characteristics. Then, by applying an analytic hierarchy process (AHP) approach [6], it constructs a hierarchical evaluation criteria model and determines the global priority weight of each criterion. Second, based on the evaluation criteria model, two different methods, the comprehensive score method and correspondence analysis (CA), are used to analyze and evaluate the information service quality of 10 samples from different aspects. Specifically, the comprehensive score method is used to compare information service quality by calculating the final scores of the 10 HIIC web portals, while CA is used to reveal their advantages and disadvantages on each factor. Finally, based on the evaluation results, this study proposes suggestions for high-tech industry information center owners to improve the quality of their web portals and provide better information services for users.

2. Related Researches

2.1 Research on Information Service Quality Evaluation
In many studies, information service quality is generally regarded as one aspect of service quality; few studies focus specifically on this issue. Levjakangas [7] defined information service as "a useful set of refined data provided to the user of information that supports the user's decision making as planning and execution of efficient operations." This definition covers the right content, right timing, right formatting and right channeling of information, which can be used as indicators for information service quality evaluation.
S. Feindt [8] held that, in the e-business context, website information service quality means delivering relevant, updated, and easy-to-understand information so as to significantly influence online users' attitudes, satisfaction, and purchases. Delone and McLean [9] established a well-known model to measure information service quality; they highlighted the importance of the relevance, timeliness, and accuracy of information. Beyond these studies, information service quality evaluation is usually studied as part of service evaluation, alongside related issues such as website design quality and website security quality. For example, the best-known service quality instrument, SERVQUAL, was used to measure customers' expectations and perceptions of service quality [10,11], and has been adopted successfully in the information systems field to measure information system service quality. SERVQUAL consists of reliability, responsiveness, empathy, assurance, and tangibility, among which reliability, responsiveness, and empathy are considered the factors relating to information service quality evaluation. The .comQ (dotcom service quality) scale established by Wolfinbarger and Gilly [12] also considers information service quality; it includes four major factors: website design, information reliability, privacy/security, and customer service. To measure e-business success and develop a high service quality website, Lee and Kozar [4] proposed a comprehensive research model covering two aspects, information quality and service quality.

2.2 Research on Web Portal Information Service Quality Evaluation
With the rapid development of the Internet, the evaluation of website information service has become a key issue, and more and more organizations and researchers have developed different methods for evaluating the information service quality of various websites.
Gomez.com developed different scorecard indices to evaluate website information service quality in different industries such as banking, mortgage, insurance, and retail [13]. Its evaluation of a website combines results from both consumer surveys and expert judgments based on factors such as usage, web resources, information reliability, response speed and personalized service. BizRate.com established an index system for evaluating the information service of e-commerce websites based on dimensions such as product representation, product information, on-time delivery, and customer support [14].

Web portal information service is more diversified than general website information service, because a web portal presents information from diverse sources in a unified way. It is therefore more complicated and difficult to evaluate its quality. There is currently no established conceptual foundation for developing and measuring the information service quality of web portals in general. The only two published studies we could find that address this issue were conducted by Van Riel et al. [15] and Yang et al. [2]. Van Riel et al. employed exploratory factor analysis (EFA) to identify underlying dimensions. Based on a sample of 52 subscribers to a portal that publishes a weekly medical newsletter, they found three key aspects of portal information service: core service, supporting services, and user interface. Yang et al. employed a rigorous scale development procedure to establish an instrument that measures the information service quality of information presenting web portals (IP web portals). They determined that users of an IP web portal perceive five service quality dimensions: usability, usefulness of content, adequacy of information, accessibility, and interaction. The five-dimension measurement scales added to the extant literature by establishing a basis for further theoretical advances on the information service quality of web portals.

Figure 1. Research foundations and proposed information service quality criteria of HIIC web portals

In sum, prior research from different perspectives has developed some fundamental knowledge about information service quality evaluation, but most of it concerns general websites rather than web portals, and HIIC web portals in particular. HIIC web portals help high-tech information centers provide a consistent look and feel, with access control and procedures, for multiple applications that would otherwise have been separate entities. Compared with general websites, HIIC web portals are more authoritative and information-oriented. Therefore, a general information service quality evaluation index system or criteria model may not be suitable for HIIC web portals. Related evaluation criteria (or factors) need to be identified according to the characteristics of HIIC web portals, and each criterion should have a significant impact on the overall information service quality of a web portal, so that the criteria can be used to formulate a model for evaluating HIIC web portal information service quality.

3. Evaluation Criteria Model

3.1 Identifying the Criteria and Sub-Criteria
There is currently no established evaluation criteria model for measuring the information service quality of HIIC web portals. Thus, we integrated several conceptual methods to identify important service quality dimensions related to HIIC web portals. Before identifying the criteria, we note that a user must have a reason to adopt the website as an information and communication channel. The well-known technology acceptance model (TAM) is thus embraced.
In addition, an HIIC web portal is essentially also an information system, consisting of digital information and an information delivery infrastructure (browsers, search engines, encryption, networking systems, etc.). Accordingly, information quality and system quality are important to HIIC web portal users. Based on these foundations, and after analyzing previous models of general websites and considering the feasibility of our research, we take the model proposed by Yang et al. [2] as a reference to identify the information service quality criteria related to HIIC web portals. Considering the particular nature of HIIC web portals, two criteria and the wording of some items have been changed. Finally, this study proposes a modified evaluation model consisting of five criteria for measuring the information service quality of HIIC web portals: usefulness of content, adequacy of information, specialization, ease of use and interaction. Each of the five criteria has a significant impact on overall information service quality. Figure 1 lists the foundations and their relationships to the proposed information service quality criteria.

3.1.1 Usefulness of Content
The content of an HIIC web portal is of great importance since it directly influences the user's perception of the destination [16]. Usefulness of content examines whether an HIIC web portal can provide reliable, up-to-date, relevant and accurate information. Specifically, content reliability refers to its dependability and consistency. Content currency is concerned with information timeliness and continuous updating. Content relevance includes relevant depth and scope, and completeness of the information [4]. Content accuracy describes the degree to which the web portal information is free of error [2].

3.1.2 Adequacy of Information
High-tech enterprises usually need HIIC web portals to provide information that is as complete as possible.
Adequacy refers to the extent of completeness of information. It can be measured by complete product/service description, complete content, detailed contact information and a rich variety of information. Complete product/service description facilitates users' understanding of the products and personalized services. Detailed contact information refers to whether an HIIC web portal contains information such as contact addresses, telephone numbers, email addresses and Yellow Pages of relevant high-tech enterprises. In addition, web portals should provide complete and highly varied information to satisfy the demands of users in different departments [15,17,18].

3.1.3 Specialization
Compared with other industries, high-tech industry has its own features: it is knowledge-intensive, market-oriented and highly innovative, and it needs specialized information and services in its development process. Specialization is a critical factor that distinguishes an HIIC web portal from general information center websites. It can be measured by examining whether an HIIC web portal has special databases, industry research reports and supply-demand information. Special databases can provide high-tech enterprises with rich product information, technical standards and frontier knowledge. Industry research reports can help enterprises better understand the development status and trends of the industry. In addition, a main purpose of high-tech enterprises is to bring their products to market quickly, so they also need a large amount of supply-demand information.

3.1.4 Ease of Use
In the website context, ease of use has been regarded as the most frequently used factor in measuring information quality or user satisfaction [19,20]. It involves five aspects: search function, navigation, personalization, hyperlink structure and speed of page loading.
Search function refers to the website's capability to provide diverse retrieval methods that help users quickly find and select the products or services they need [21]. Good navigation allows users to stay oriented during their visits and easily locate the information or products they need. Personalization services can provide online users with an individualized interface, effective one-to-one service and customized information. An effective hyperlink structure and a high page loading speed can enhance the user experience.

3.1.5 Interaction
Interaction is a kind of action that occurs as two or more objects have an effect upon one another [22]. Interaction on an HIIC web portal can be divided into three categories: enterprise-information centre interaction, enterprise-enterprise interaction and enterprise-website interaction. Although using an HIIC web portal is primarily a self-service process, high-tech enterprise staff may still expect the website to respond to them quickly and to offer professional follow-up services from knowledgeable and caring contact persons working in information centers. An HIIC web portal is a platform for information communication between users and service providers; therefore, it should provide contact channels for business-to-business and business-to-information-centre communication, such as email, message boards, chat rooms and discussion forums.

3.2 Model Construction
To enable those engaged in the evaluation work to view the problem systematically in terms of relevant criteria and sub-criteria, we adopt the AHP to construct an evaluation criteria model. The AHP, developed by Saaty (1980), is designed to solve complex multi-criteria decision problems [23]. The hierarchical structure used in formulating the AHP model shows the relationships between different factors more clearly.
Furthermore, AHP has an inherent capability to handle both the qualitative and the quantitative criteria used in information service quality evaluation problems. The criteria and sub-criteria we have identified include both qualitative components, such as usefulness of content, and quantitative ones, such as the number of special databases. Therefore, AHP is well suited to constructing the evaluation criteria model in this study.

The common AHP process involves three phases: constructing a hierarchical structure of the AHP model to present the problem; performing pairwise comparisons of the criteria at the same level to determine their weights; and synthesizing these to obtain the global weights of the criteria [24]. The consistency of the results is measured using a consistency ratio (CR). A CR of less than 0.1 is considered adequate to interpret the results [25]. Using this three-phase approach, this study first formulates a three-level hierarchical criteria model for HIIC web portal evaluation, as shown in Figure 2. The goal of our problem, evaluating the information service quality of HIIC web portals, is placed on the first level of the hierarchy. The five factors described in the preceding section are identified to achieve the goal and form the second level of the hierarchy. The third level contains the sub-criteria defining the five factors of the second level.

Figure 2. The evaluation criteria model

Table 1. Global weights of the sub-criteria

Criteria                  Local weight   Sub-criteria                                      Local weight   Global weight
Usefulness of content     0.2048         Reliable information                              0.2815         0.0577
                                         Up-to-date information                            0.2089         0.0428
                                         Relevant information                              0.2124         0.0435
                                         Accurate information                              0.2972         0.0609
Adequacy of information   0.1721         Complete product/service description              0.2460         0.0423
                                         Complete content                                  0.2967         0.0510
                                         Detailed contact information                      0.1422         0.0245
                                         Rich variety of information                       0.3151         0.0542
Specialization            0.2239         Special databases                                 0.4600         0.1030
                                         Industry research reports                         0.2211         0.0495
                                         Supply-demand information                         0.3189         0.0714
Ease of use               0.2371         Strong search functions                           0.2361         0.0560
                                         Good navigation                                   0.1541         0.0365
                                         Personalized services                             0.3018         0.0716
                                         Effective hyperlinks structure                    0.1540         0.0365
                                         High speed of page loading                        0.1540         0.0365
Interaction               0.1621         Quick responsiveness to enterprises               0.4639         0.0752
                                         Follow-up services to enterprises                 0.2786         0.0452
                                         Contact channels for B to B/information centers   0.2575         0.0417
Total                                                                                                     1.0000

In addition, using the AHP, we can also determine the weights of each criterion and sub-criterion, which will be used to calculate the total score of each HIIC web portal in the information service quality evaluation that follows. Based on the AHP hierarchy model, five experts were invited to make pairwise comparisons of the criteria and sub-criteria at each level of the hierarchy by scoring the comparison matrices. They used the nine-point scale suggested by Saaty to make their pairwise comparisons of all elements. Of these five experts, one is a senior engineer from a website design department, two are information consultants at well-known high-tech enterprises and the other two are professors of information management. All of these experts have sufficient experience in web information service quality evaluation and are qualified to provide pairwise comparison judgment matrices for the proposed AHP model, as shown in Appendix A.
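The weighting procedure can be illustrated with a minimal Python sketch. It is not the authors' Matlab code: it assumes the common principal-eigenvector prioritization and Saaty's standard random index values, and the two 3×3 expert matrices are hypothetical (the real judgment matrices are in Appendix A). It aggregates the experts' judgments by element-wise geometric mean, derives normalized weights, checks the consistency ratio against the 0.1 threshold, and shows how a Table 1 global weight arises as the product of two local weights.

```python
import numpy as np

# Saaty's random consistency index (RI) for matrix orders 1 to 9.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def aggregate_judgments(matrices):
    """Combine several experts' pairwise matrices by element-wise geometric mean."""
    stacked = np.asarray(matrices, dtype=float)
    return np.exp(np.log(stacked).mean(axis=0))


def priority_weights(matrix):
    """Return (normalized weights, consistency ratio) for one pairwise matrix."""
    a = np.asarray(matrix, dtype=float)
    n = a.shape[0]
    eigenvalues, eigenvectors = np.linalg.eig(a)
    k = int(np.argmax(eigenvalues.real))      # index of the principal eigenvalue
    lambda_max = eigenvalues[k].real
    w = np.abs(eigenvectors[:, k].real)       # principal eigenvector, sign removed
    w = w / w.sum()                           # normalize so the weights sum to 1
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0   # consistency index
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0           # consistency ratio
    return w, cr


# Hypothetical judgments from two experts on three criteria (illustration only).
expert_1 = [[1, 2, 4], [1/2, 1, 2], [1/4, 1/2, 1]]
expert_2 = [[1, 3, 5], [1/3, 1, 2], [1/5, 1/2, 1]]
group = aggregate_judgments([expert_1, expert_2])
weights, cr = priority_weights(group)
acceptable = cr < 0.1                         # threshold used in the study

# Global weight = criterion local weight * sub-criterion local weight, e.g.
# "Reliable information" under "Usefulness of content" in Table 1.
global_reliable = round(0.2048 * 0.2815, 4)
```

The geometric mean is used for aggregation because it keeps the group matrix reciprocal, which an arithmetic mean would not.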
Based on the pairwise comparison judgment matrices obtained from the five experts, we collected the valid data, calculated the geometric mean and checked the integrated CR. Matlab was used to determine the normalized priority weights of each criterion. All the resulting CRs are less than 0.1, so the matrices satisfy the consistency condition. Based on these results, the global weight of each level-three sub-criterion was also calculated with Matlab; the results are shown in Table 1.

4. Method
A questionnaire consisting of 19 items, shown in Appendix B, was used in the study. The items were in one-to-one correspondence with the sub-criteria of the evaluation model and were measured on a 5-point Likert scale ranging from low to high. The questionnaires were then sent by email to the participants in the evaluation.

4.1 Evaluation Samples
According to the industry classification standard of the Organisation for Economic Co-operation and Development (OECD), high-tech industry is divided into four major categories: aerospace manufacturing, computer and office equipment manufacturing, electronics and telecommunications equipment manufacturing, and pharmaceutical manufacturing [26]. At present, China basically follows the OECD's high-tech classification standard to define the statistical scope of high-tech industry. However, in view of China's National Economic Industry Classification Standard (GB/T4745-2002), another four categories have been added to the OECD's categories in China: nuclear fuel processing, information chemicals manufacturing, public software services, and medical equipment and instrument manufacturing. To accurately reflect the level and current state of the information service quality of China's HIIC web portals, 10 information center web portals from different high-tech industry categories were selected as the samples in this study according to China's high-tech industry statistical classification standard, as shown in Table 2. These 10 websites are run by the relevant government departments or industry associations and are the most authoritative web portals of each high-tech sub-industry in China.

Table 2. Samples of high-tech industry information center web portals

Code   Information center web portal                              Website
w1     China atomic information Network                           http://www.atominfo.com.cn
w2     China chemical information center Network                  http://www.cheminfo.gov.cn/
w3     China medical information network                          http://www.cpi.gov.cn/
w4     China aviation information network                         http://www.aeroinfo.com.cn
w5     China electronics industry information network             http://www.ceic.gov.cn/
w6     China computer industry association network                http://www.chinaccia.org.cn/
w7     China culture and office equipment manufacturing network   http://www.ccoea.org.cn
w8     China medical devices information network                  http://www.cmdi.gov.cn/
w9     China instrument network                                   http://www.yibiao.com/
w10    China network software services network                    http://www.mycnsoft.com/

4.2 Participant
Thirty CIOs from different high-tech enterprises agreed to our cooperation request and participated in this study. The enterprises were selected from the "China High-tech Enterprise Directory", and all of them have a specialized information technology department. Generally speaking, the CIO is the person in an enterprise who is responsible for information technology and computer systems, and so understands the enterprise's information service demands better than general employees do. 75% of the participants were male and 25% were female. All participants were experienced HIIC web portal users: 40% of them had visited more than 8 HIIC web portals and 80% had visited more than 5.
Regarding usage frequency, over 80% of them used the services provided by HIIC web portals in support of enterprise goals several times a day, while the others did so several times a week.

Every CIO had to fill in 10 questionnaires, one for each of the 10 samples. Before filling in the questionnaires, all participants were asked to visit the samples and experience their information services. Each participant then scored all items for each HIIC web portal according to that experience. To ensure the validity of the responses, follow-up calls were made to the participants. In the end, all questionnaires were returned with complete data.

4.3 Data Analysis Methods
The data collected from the 300 (30×10) questionnaires were coded and analyzed statistically, and we then calculated the average score of each sample on each item. To analyze and compare the information service quality of the samples, two data analysis methods, the comprehensive score method and correspondence analysis (CA), were used in this study. They evaluate the information service quality of HIIC web portals from different aspects.

4.3.1 Comprehensive Score Method
In this study, the evaluation samples (alternatives) are not placed in a separate level as in the usual AHP approach; instead of assessing pairwise comparisons among the evaluation objects, an improved method, the comprehensive score method, is used. As described in Subsection 4.2, thirty CIOs from different enterprises scored each sub-criterion of each HIIC web portal sample on a 5-point Likert scale according to their experience, and we then calculated the average score of each HIIC web portal sample on each sub-criterion.
The comprehensive score method calculates the final score of each sample by multiplying the global weights of the third-level criteria by the average 5-point Likert scores of the corresponding criteria obtained from the questionnaires. Thus, assuming the average scores of sample $i$ on the 19 items (sub-criteria) are $(a_{1i}, a_{2i}, \ldots, a_{18i}, a_{19i})$, the final score $S_i$ ($i = 1, 2, \ldots, 10$) of each sample can be calculated by the comprehensive score method as follows:

$$S_i = (w_1, w_2, \ldots, w_{18}, w_{19})\,(a_{1i}, a_{2i}, \ldots, a_{18i}, a_{19i})' = \sum_{j=1}^{19} w_j a_{ji}$$

where $(w_1, w_2, \ldots, w_{18}, w_{19}) = (0.0577, 0.0428, \ldots, 0.0452, 0.0417)$ are the global priority weights of the sub-criteria. We can then compare the information service quality of the 10 HIIC web portals by their final scores.

It should be noted that this study does not calculate weights for the evaluation samples as the traditional AHP method does. Traditional AHP places the evaluation samples (called alternatives) at the lowest level of the hierarchy and obtains their global weights from pairwise comparison judgment matrices. This study only calculates the global priority weights of the sub-criteria and then uses them to obtain the final scores of the 10 samples. The reason is that if we adopted the traditional AHP method to compare 10 samples (alternatives), the number of pairwise comparisons required for each of the 19 sub-criteria would be n(n-1)/2 = 45 (with n = 10), which becomes computationally difficult and sometimes infeasible. The major advantage of the comprehensive score method is that it avoids the explosion in the number of required comparisons when the number of samples is large, and shortens the evaluation time.

4.3.2 Correspondence Analysis
To further explore the relationships among the 10 HIIC web portals on different criteria and then classify them according to their similarities, this study adopts correspondence analysis to process the collected data. Correspondence analysis is a powerful method for the multivariate exploration of large-scale data. It reveals correlations between variables by analyzing cross-tabulations of qualitative variables, thus presenting differences between categories of the same variable as well as between categories of different variables. The primary idea of correspondence analysis is to present the proportional structure of the elements in the rows and columns of a contingency table as points in a space of lower dimensionality [5]. Mathematically, correspondence analysis can be regarded as either:
1) A method for decomposing the chi-squared statistic for a contingency table into components corresponding to different dimensions of the heterogeneity between its rows and columns, or
2) A method for simultaneously assigning a scale to rows and a separate scale to columns so as to maximize the correlation between the resulting pair of variables.
Quintessentially, however, correspondence analysis is a technique for displaying multivariate categorical data graphically, by providing coordinates to represent the categories of the variables involved; these may then be plotted to provide a "picture" of the data. Therefore, with CA we can present the evaluation samples and criteria in a single figure and directly and clearly observe the categories and attributes of the samples.

5. Results

5.1 Comprehensive Score Method Result

Figure 3. Comprehensive score of each HIIC W
Figure 4. Score of each HIIC web portal in five factors

According to the equation given above, we calculated the final total score of each HIIC web portal by multiplying the global priority weight of each criterion by the CIOs' average score and summing the resulting values.
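As a rough illustration of this calculation, the following Python sketch computes a comprehensive score as a weighted sum. The weights are the 19 global weights from Table 1, in table order; the average scores are hypothetical placeholders, not the study's questionnaire data, and the function name is our own.

```python
from math import comb

# Global weights of the 19 sub-criteria, in the order listed in Table 1.
GLOBAL_WEIGHTS = [
    0.0577, 0.0428, 0.0435, 0.0609,          # usefulness of content
    0.0423, 0.0510, 0.0245, 0.0542,          # adequacy of information
    0.1030, 0.0495, 0.0714,                  # specialization
    0.0560, 0.0365, 0.0716, 0.0365, 0.0365,  # ease of use
    0.0752, 0.0452, 0.0417,                  # interaction
]


def comprehensive_score(avg_scores, weights=GLOBAL_WEIGHTS):
    """Weighted sum S_i = sum_j w_j * a_ji over one portal's average item scores."""
    if len(avg_scores) != len(weights):
        raise ValueError("expected one average score per sub-criterion")
    return sum(w * a for w, a in zip(weights, avg_scores))


# Hypothetical average Likert scores (1 to 5) for one portal; not the study's data.
example_scores = [4.0] * 19
example_total = comprehensive_score(example_scores)

# Pairwise comparisons that traditional AHP would need per sub-criterion
# for n = 10 alternatives: n(n-1)/2.
comparisons_per_subcriterion = comb(10, 2)
```

Because the global weights sum to 1.0000, a portal rated 4 on every item receives a comprehensive score of 4 (up to floating-point rounding), so final scores stay on the same 1-to-5 scale as the questionnaire.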
Comparing the total scores of the HIIC web portals shown in Figure 3, we find that W8 has the highest score, 3.8296, which means its overall information service quality is the best, especially in adequacy of information, specialization and ease of use. This web portal has a clear navigation system: when users visit it, they can easily find what they need and obtain specialized online services and consultation. W2 and W5 obtained the second and third highest scores, 3.8254 and 3.6956 respectively, so their service quality is satisfactory. By comparison, W7, W1 and W10 have lower comprehensive scores than the other portals. They share some common problems, such as slow information updates, limited information content, an imperfect search function, and poor interaction, so they should make great efforts to improve their information services to better satisfy the needs of high-tech enterprises. The other HIIC web portals are at an average level of information service quality.

Figure 4 further compares information service quality on the five factors: usefulness of content, adequacy of information, specialization, ease of use and interaction. W4 has the highest usefulness of content, because it usually provides the latest official policies on aerospace manufacturing and updates its content every day. W2 has the highest adequacy of information and specialization; it has a quotation center that helps users follow chemical product price trends and market developments in a timely manner. In addition, it has detailed industry statistics and special reports that provide users with abundant useful information. W8 has the highest ease of use; users can search for the information they need by keyword or full text.
The navigation of W8 is also very good: it divides the website content into fourteen sections and puts the most important information in a prominent location, so users can find it quickly. W10 has the highest interaction; it provides online consultation services and responds to users’ needs quickly, and it also provides a chat room for business negotiations between enterprises. However, we also find that W10 has the lowest usefulness of content and adequacy of information, W7 has the lowest easy of use and interaction, while W1 has the lowest easy of use. Based on these comparisons, we can clearly identify the advantages and disadvantages of each HIIC web portal and then propose feasible suggestions to improve its information service quality.

5.2 CA Result

To analyze further on which criteria these HIIC web portals have advantages and disadvantages, the average score of each HIIC web portal sample on each criterion was entered into SPSS, a data analysis tool, and analyzed. The results of the correspondence analysis appear in Figure 5. The association graph was analyzed further, and the points representing criteria and HIIC web portals in Figure 5 were classified into four groups.

The first grouping includes W1 and W4. What these two HIIC web portals have in common is reliable information (C11), rapid information updating (C12), complete product/service description (C21), complete content (C22), detailed contact information (C23) and good personalized services (C43); they get high scores on these criteria. For example, W1 updates news of the nuclear fuel processing industry every day and provides detailed contact information for companies of this kind. However, Figure 5 also shows that the search function (C41) and supply-demand information (C33) of the two web portals are not very good: these two criteria points lie a long distance from the two portals.
Therefore, much attention must be paid to the search function and supply-demand information in the future to improve their information service quality.

The second grouping includes W2, W3, W5 and W8. The advantages of these web portals lie in relevant information (C13), rich variety of information (C24) and industry research reports (C32). However, among these four web portals, W2 is not near the other three points and is far from many criteria. The average scores given by participants show that W2 received similarly high scores on these criteria, which indicates that W2 performs better in these aspects than other web portals.

The third grouping includes W6 and W7. Their advantages are accurate information (C14) and high speed of page loading (C45). But they are not very successful: they offer little contact information and little specialized information such as special databases and industry research reports, which reveals that their information service quality is at a lower level. Therefore, such web portals should make great efforts to improve their information abundance and specialization.

The fourth grouping includes W9 and W10. They provide users with rich supply-demand information (C33) and good interactive services such as quick responsiveness (C51), follow-up services to enterprises (C52) and contact channels for business-to-business/information centre (C53). They also have good navigation (C42) and an effective hyperlinks structure (C44). This indicates that the overall performance of such web portals is at a normal level. Therefore, such web portals should find their proper positions in the high-tech industry according to their own situation and advantages.
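The "distances" read off a correspondence analysis map approximate chi-square distances between profiles, and the total inertia being decomposed equals the chi-squared statistic divided by the table total. The following is a minimal pure-Python sketch of those two quantities, under stated assumptions: it is not the SPSS procedure used in the study, it treats a table of non-negative scores as a contingency table, and the 3 × 3 `hypothetical_table` is invented for illustration. Producing the actual map coordinates would additionally require a singular value decomposition of the standardized residuals, which is omitted here.

```python
import math


def correspondence_matrix(table):
    """Divide every cell by the grand total so that all cells sum to one."""
    total = sum(sum(row) for row in table)
    return [[x / total for x in row] for row in table]


def masses(P):
    """Return (row masses, column masses) of a correspondence matrix."""
    row_mass = [sum(row) for row in P]
    col_mass = [sum(col) for col in zip(*P)]
    return row_mass, col_mass


def total_inertia(table):
    """Total inertia = chi-squared statistic / grand total."""
    P = correspondence_matrix(table)
    r, c = masses(P)
    return sum(
        (P[i][j] - r[i] * c[j]) ** 2 / (r[i] * c[j])
        for i in range(len(r))
        for j in range(len(c))
    )


def chi_square_distance(table, i, k):
    """Chi-square distance between the profiles of rows i and k."""
    P = correspondence_matrix(table)
    r, c = masses(P)
    return math.sqrt(
        sum((P[i][j] / r[i] - P[k][j] / r[k]) ** 2 / c[j] for j in range(len(c)))
    )


# Hypothetical average scores: rows are portals, columns are criteria (not study data).
hypothetical_table = [
    [4.2, 3.1, 2.5],
    [4.0, 3.3, 2.4],
    [2.6, 3.0, 4.1],
]
print(chi_square_distance(hypothetical_table, 0, 1))  # similar profiles
print(chi_square_distance(hypothetical_table, 0, 2))  # dissimilar profiles
print(total_inertia(hypothetical_table))
```

Rows with similar score profiles yield a small distance and would be plotted close together, which is the basis on which portals and criteria are grouped in a positioning map.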
Two further criteria points, special databases (C31) and strong search functions (C41), do not belong to any of the above groupings. This shows that, compared with other criteria, they are not unique attributes of a particular web portal, but they have a great influence on the information service quality of some HIIC web portals such as W1, W4, W6, W7, W9 and W10. These web portals do not get high scores on the two criteria, so they should attach much importance to these two aspects.

6. Discussion and Conclusions

Information service quality evaluation of a HIIC web portal is an important problem for both the information centre itself and high-tech enterprises. By improving the model proposed by Zhilin Yang et al., this study identified five information service quality criteria, namely usefulness of content, adequacy of information, specialization, easy of use and interaction, which comprise 19 sub-criteria. Then, using an AHP approach, this study constructed a hierarchical evaluation criteria model and determined the global priority weight of each criterion and sub-criterion. Based on this evaluation criteria model, the study applied the comprehensive score method and CA to analyze the information service quality of ten typical information center web portals in the high-tech industry and obtained several useful results. From the research results we find the following.

First, using the AHP approach proposed in this study, the criteria for information service quality evaluation were clearly defined and the problem was structured systematically. This enabled executives of HIIC to examine the strengths and weaknesses of their web portals by comparing them with respect to appropriate criteria. The weights of the five criteria showed that specialization and easy of use carry more weight in the overall evaluation of a HIIC web portal than the other factors.
Thus, executives should expend more effort to make their web portals more professional and easy to use.

Second, the study found that the comprehensive scores of the HIIC web portals, calculated by the equation proposed in this paper, are all not very high (less than 4), especially in two aspects: easy of use and interaction. This indicates that the overall information service quality of these web portals is at a lower level and that the main shortcomings lie in easy of use and interaction. For a high-tech enterprise, it is important to be able to locate the needed information without difficulty. The information center should therefore design a user-friendly website, with strong search functions, good navigation, personalized services, an effective hyperlinks structure and high speed of page loading. At the same time, the information center should strengthen the interaction between the web portal and its users by using facilities such as a user chat room, message board and reputation system, and by providing online consultation and training services.

Figure 5. Positioning maps (legend: HIIC web portal; criteria)

Finally, according to the CA results, the 10 samples have been divided into four groupings by their similarities on different criteria, which can help such web portals position themselves properly and provide extra specialized services for enterprise users. It can also urge the web portals to compare themselves with those that perform best on different criteria, so that they can improve their information services.

At the same time, this study has several limitations that should be revisited in future studies. First, the study chose only CIOs in high-tech enterprises as participants, who could not represent the views of all kinds of users.
Second, this study was conducted with a relatively small sample: 10 official HIIC web portals might not give a comprehensive reflection of the overall situation of HIIC web portals in China. Finally, the evaluation criteria were selected based on a previous model for general websites, which could have excluded some criteria that strongly influence website information service quality. Future research needs to involve more kinds of users, such as managers and staff in high-tech enterprises, in the evaluation process. Furthermore, it should also increase the number of website samples and collect more data to better examine the validity of the proposed model.

7. Acknowledgement

This work was supported by grants from the China National Social Science Foundation Major Project “Study of Information Services in China National Innovation System” (No. 06&ZD031) and Key Project of Philosophy and Social Sciences Research “Education of Ministry of Knowledge Information Service System of Innovation-Oriented Country” (No. 06JZD0032).

REFERENCES

[1] H. Landrum and V. R. Prybutok, “A service quality and success model for the information service industry,” European Journal of Operational Research, Vol. 156, No. 3, pp. 628–642, August 2004.
[2] Z. L. Yang, S. H. Cai, Z. Zhou, et al., “Development and validation of an instrument to measure user perceived service quality of information presenting web portals,” Information & Management, Vol. 42, No. 4, pp. 575–589, May 2005.
[3] L. Leiming and W. Y., “Comparative study of Web site evaluation pattern,” Qingbao Xuebao, Vol. 23, No. 2, pp. 198–203, 2004.
[4] Y. Lee and K. A. Kozar, “Investigating the effect of web site quality on e-business success: An analytic hierarchy process (AHP) approach,” Decision Support Systems, Vol. 42, No. 3, pp. 1383–1401, December 2006.
[5] X. Shen, D. Li, and C. Shen, “Evaluating China’s university library web sites using correspondence analysis,” Journal of the American Society for Information Science and Technology, Vol. 57, No. 4, pp. 493–500, 2006.
[6] T. Saaty, “The analytic hierarchy process,” McGraw-Hill, New York, 1980.
[7] P. Levjakangas, J. Haajanen, and A. M. Alaruikka, “Information service architecture for international multimodal logistic corridor,” IEEE Transactions on Intelligent Transportation Systems, Vol. 8, No. 4, pp. 565–574, December 2007.
[8] S. Feindt, J. Jeffcoate, and C. Chappell, “Identifying success factors for rapid growth in SME e-commerce,” Small Business Economics, Vol. 19, pp. 51–62, 2002.
[9] W. H. DeLone and E. R. McLean, “Information systems success: The quest for the dependent variable,” Information Systems Research, Vol. 3, No. 1, pp. 60–95, 1992.
[10] E. Babakus and G. W. Boller, “An empirical assessment of the SERVQUAL scale,” Journal of Business Research, Vol. 24, No. 3, pp. 253–268, 1992.
[11] T. P. Van Dyke, L. A. Kappelman, and V. R. Prybutok, “Measuring information systems service quality: Concerns on the use of the SERVQUAL questionnaire,” MIS Quarterly, Vol. 21, No. 2, pp. 195–208, 1997.
[12] M. F. Wolfinbarger and M. C. Gilly, “.ComQ: Dimensionalizing, measuring and predicting quality of the etailing experience,” MSI Working Paper Series, pp. 2–100, 2002.
[13] S. A. Kaynama and C. I. Black, “A proposal to assess the service quality of online travel agencies: An exploratory study,” Journal of Professional Services Marketing, Vol. 21, No. 1, pp. 63–88, 2000.
[14] V. A. Zeithaml, A. Parasuraman, and A. Malhotra, “Service quality delivery through web sites: A critical review of extant knowledge,” Journal of the Academy of Marketing Science, Vol. 30, No. 4, pp. 362–375, 2002.
[15] A. C. R. V. Riel, V. Liljander, and P. Jurriens, “Exploring consumer evaluations of e-services: A portal site,” International Journal of Service Industry Management, Vol. 12, No. 4, pp. 359–377, 2001.
[16] C. Zafiropoulos, V. Vrana, and D. Paschaloudis, “An evaluation of the performance of hotel web site using the managers’ views about online information services,” Proceedings of the 13th European Conference on Information Systems, Information Systems in a Rapidly Changing Economy, 2005.
[17] N. Cho and S. Park, “Development of electronic commerce user-consumer satisfaction index (ECUSI) for internet shopping,” Industrial Management & Data Systems, Vol. 101, No. 8, pp. 400–405, 2001.
[18] K. V. La and J. Kandampully, “Electronic retailing and distribution of services: Cyber intermediaries that serve customers and service providers,” Managing Service Quality, Vol. 12, No. 2, pp. 100–116, 2002.
[19] D. L. Hoffman and T. P. Novak, “Marketing in hypermedia computer-mediated environments: Conceptual foundations,” Journal of Marketing, Vol. 60, pp. 50–68, 1996.
[20] M. M. Misic and K. L. Johnson, “Benchmarking: A tool for web site evaluation and improvement,” Internet Research: Electronic Networking Applications and Policy, Vol. 9, No. 5, pp. 383–392, 1999.
[21] B. Shneiderman, “Designing the user interface: Strategies for effective human-computer interaction,” Addison-Wesley, 1998.
[22] A. K. Shiau, D. Barstad, P. M. Loria, et al., “The structural basis of estrogen receptor/coactivator recognition and the antagonism of this interaction by tamoxifen,” Cell, Vol. 95, No. 7, pp. 927–937, December 1998.
[23] E. W. T. Ngai and E. W. C. Chan, “Evaluation of knowledge management tools using AHP,” Expert Systems with Applications, Vol. 29, No. 4, pp. 889–899, November 2005.
[24] T. Saaty and L. Vargas, “Decision making in economic, political, social, and technological environments with the analytic hierarchy process,” RWS Publications, Pittsburgh, 1994.
[25] M. C. Carnero, “Selection of diagnostic techniques and instrumentation in a predictive maintenance program: A case study,” Decision Support Systems, Vol. 38, No. 4, pp. 539–555, 2005.
[26] S. Juan, X. Dai, and J. Yang, “Thinking about China high-tech industry statistics definition,” Statistics and Decision, Vol. 256, No. 4, pp. 4–6, 2008.

Appendix

A. Pair Wise Comparison Judgments Matrices

Table A1 shows the pair wise comparison judgment matrices for comparing the criteria and sub-criteria, as assigned by the 5 experts.

B. Questionnaire Directions

We need your impressions about the performance of the high-tech information center web portals that provide information services for your enterprise. Before you fill in this questionnaire, please visit the following 10 web portals (Table A2) and experience their various information services. For each of the items in the questionnaire, please indicate your perception of the web portal’s information service quality by circling a number in the column (Table A3). There is no right or wrong answer. Please do not omit any feature. Each questionnaire corresponds to one web portal.

Table A1.
Pair wise comparison judgments matrices

| Goal | B1 | B2 | B3 | B4 | B5 | Priority |
|---|---|---|---|---|---|---|
| B1 | 1 | 1.2 | 1/1.5 | 1 | 1.5 | 0.2048 |
| B2 | 1/1.2 | 1 | 1/1.6 | 1/1.2 | 1.2 | 0.1721 |
| B3 | 1.5 | 1.6 | 1 | 1/1.4 | 1 | 0.2239 |
| B4 | 1 | 1.2 | 1.4 | 1 | 1.5 | 0.2371 |
| B5 | 1/1.5 | 1/1.2 | 1 | 1/1.5 | 1 | 0.1621 |

CR=0.02

| Usefulness of content | C11 | C12 | C13 | C14 | Priority |
|---|---|---|---|---|---|
| C11 | 1 | 1.3 | 1.3 | 1 | 0.2815 |
| C12 | 1/1.3 | 1 | 1 | 1/1.5 | 0.2089 |
| C13 | 1/1.3 | 1 | 1 | 1/1.4 | 0.2124 |
| C14 | 1 | 1.5 | 1.4 | 1 | 0.2972 |

CR=0.0

| Adequacy of information | C21 | C22 | C23 | C24 | Priority |
|---|---|---|---|---|---|
| C21 | 1 | 1/1.2 | 2 | 1/1.5 | 0.246 |
| C22 | 1.2 | 1 | 2 | 1 | 0.2967 |
| C23 | 1/2 | 1/2 | 1 | 1/2 | 0.1422 |
| C24 | 1.5 | 1 | 2 | 1 | 0.3151 |

CR=0.01

| Specialization | C31 | C32 | C33 | Priority |
|---|---|---|---|---|
| C31 | 1 | 1.5 | 2 | 0.4600 |
| C32 | 1/1.5 | 1 | 1/2 | 0.2211 |
| C33 | 1/2 | 2 | 1 | 0.3189 |

CR=0.09

| Easy of use | C41 | C42 | C43 | C44 | C45 | Priority |
|---|---|---|---|---|---|---|
| C41 | 1 | 1.5 | 1/1.2 | 1.5 | 1.5 | 0.2361 |
| C42 | 1/1.5 | 1 | 1/2 | 1 | 1 | 0.1541 |
| C43 | 1.2 | 2 | 1 | 2 | 2 | 0.3018 |
| C44 | 1/1.5 | 1 | 1/2 | 1 | 1 | 0.1540 |
| C45 | 1/1.5 | 1 | 1/2 | 1 | 1 | 0.1540 |

CR=0.00

| Interaction | C51 | C52 | C53 | Priority |
|---|---|---|---|---|
| C51 | 1 | 2 | 1.5 | 0.4639 |
| C52 | 1/2 | 1 | 1.3 | 0.2786 |
| C53 | 1/1.5 | 1/1.3 | 1 | 0.2575 |

CR=0.03

Table A2. High-tech information center web portals list

| Information center web portals | Web site |
|---|---|
| China atomic information network | http://www.atominfo.com.cn |
| China chemical information center network | http://www.cheminfo.gov.cn |
| China medical information network | http://www.cpi.gov.cn |
| China aviation information network | http://www.aeroinfo.com.cn |
| China electronics industry information network | http://www.ceic.gov.cn |
| China computer industry association network | http://www.chinaccia.org.cn |
| China culture and office equipment manufacturing network | http://www.ccoea.org.cn |
| China medical devices information network | http://www.cmdi.gov.cn |
| China instrument network | http://www.yibiao.com |
| China network software services network | http://www.mycnsoft.com |

Table A3. Questionnaire

Name of web portal:

| How the web portal performs here | Low to High |
|---|---|
| Quality of usefulness of content | 1 2 3 4 5 |
| 1. Reliability of information received | 1 2 3 4 5 |
| 2. Up-to-date information received | 1 2 3 4 5 |
| 3. Relevance of information received | 1 2 3 4 5 |
| 4. Accuracy of information received | 1 2 3 4 5 |
| Quality of adequacy of information | 1 2 3 4 5 |
| 5. Complete product/service description | 1 2 3 4 5 |
| 6. Complete content | 1 2 3 4 5 |
| 7. Detailed contact information | 1 2 3 4 5 |
| 8. Rich variety of information | 1 2 3 4 5 |
| Quality of specialization | 1 2 3 4 5 |
| 9. Special databases | 1 2 3 4 5 |
| 10. Industry research reports | 1 2 3 4 5 |
| 11. Supply-demand information | 1 2 3 4 5 |
| Quality of easy of use | 1 2 3 4 5 |
| 12. Search functions are easy to use | 1 2 3 4 5 |
| 13. A navigation that is easy to use | 1 2 3 4 5 |
| 14. Personalized service | 1 2 3 4 5 |
| 15. Effective hyperlinks structure | 1 2 3 4 5 |
| 16. Quick speed of page loading | 1 2 3 4 5 |
| Quality of interaction | 1 2 3 4 5 |
| 17. Quick responsiveness to enterprises | 1 2 3 4 5 |
| 18. Follow-up services to enterprises | 1 2 3 4 5 |
| 19. Contact channels for business-to-business/information centre | 1 2 3 4 5 |
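The priorities and consistency ratios in Table A1 can be checked with a short script. The sketch below is an illustration under stated assumptions rather than the authors' procedure: it derives priorities with the row geometric mean method (one common approximation of the principal eigenvector), and the random index values in `RANDOM_INDEX` are the commonly cited Saaty values, which are not given in this paper. Applied to the Interaction matrix, it should reproduce the reported priorities (0.4639, 0.2786, 0.2575) to about four decimals and a consistency ratio close to 0.03.

```python
import math

# Commonly cited Saaty random consistency index values (assumption; not from the paper).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12}


def ahp_priorities(matrix):
    """Priority vector from the normalized geometric mean of each row."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency_ratio(matrix, weights):
    """CR = CI / RI, with lambda_max estimated as the mean of (A w)_i / w_i."""
    n = len(matrix)
    if n < 3:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    lambda_max = sum(
        sum(a * w for a, w in zip(row, weights)) / weights[i]
        for i, row in enumerate(matrix)
    ) / n
    consistency_index = (lambda_max - n) / (n - 1)
    return consistency_index / RANDOM_INDEX[n]


# Interaction matrix (C51, C52, C53) copied from Table A1.
interaction = [
    [1.0, 2.0, 1.5],
    [1 / 2, 1.0, 1.3],
    [1 / 1.5, 1 / 1.3, 1.0],
]

w = ahp_priorities(interaction)
cr = consistency_ratio(interaction, w)
print([round(x, 4) for x in w], round(cr, 2))
```

A consistency ratio below 0.10 is the conventional threshold for accepting a judgment matrix, which all six matrices in Table A1 satisfy according to the reported values.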

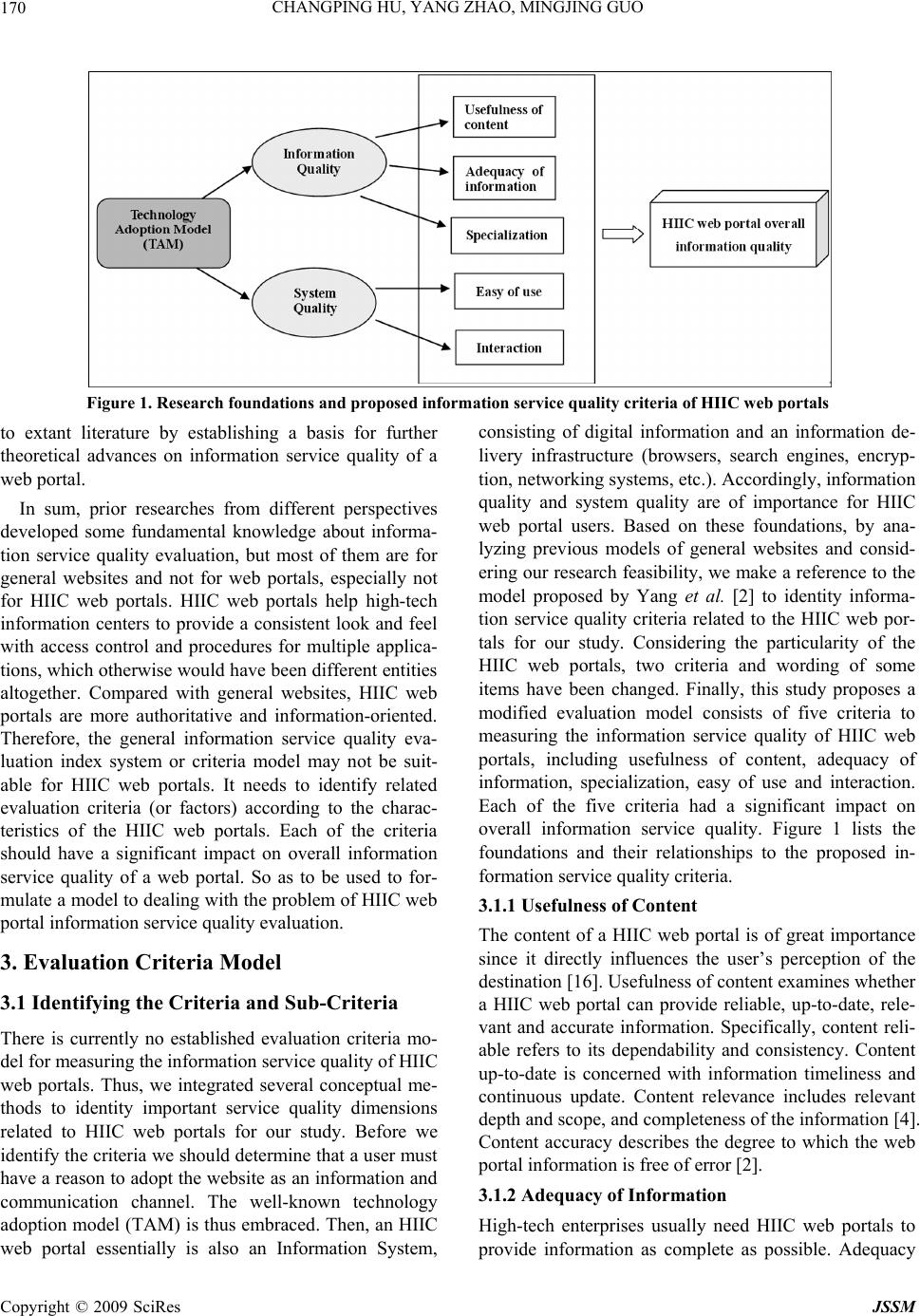

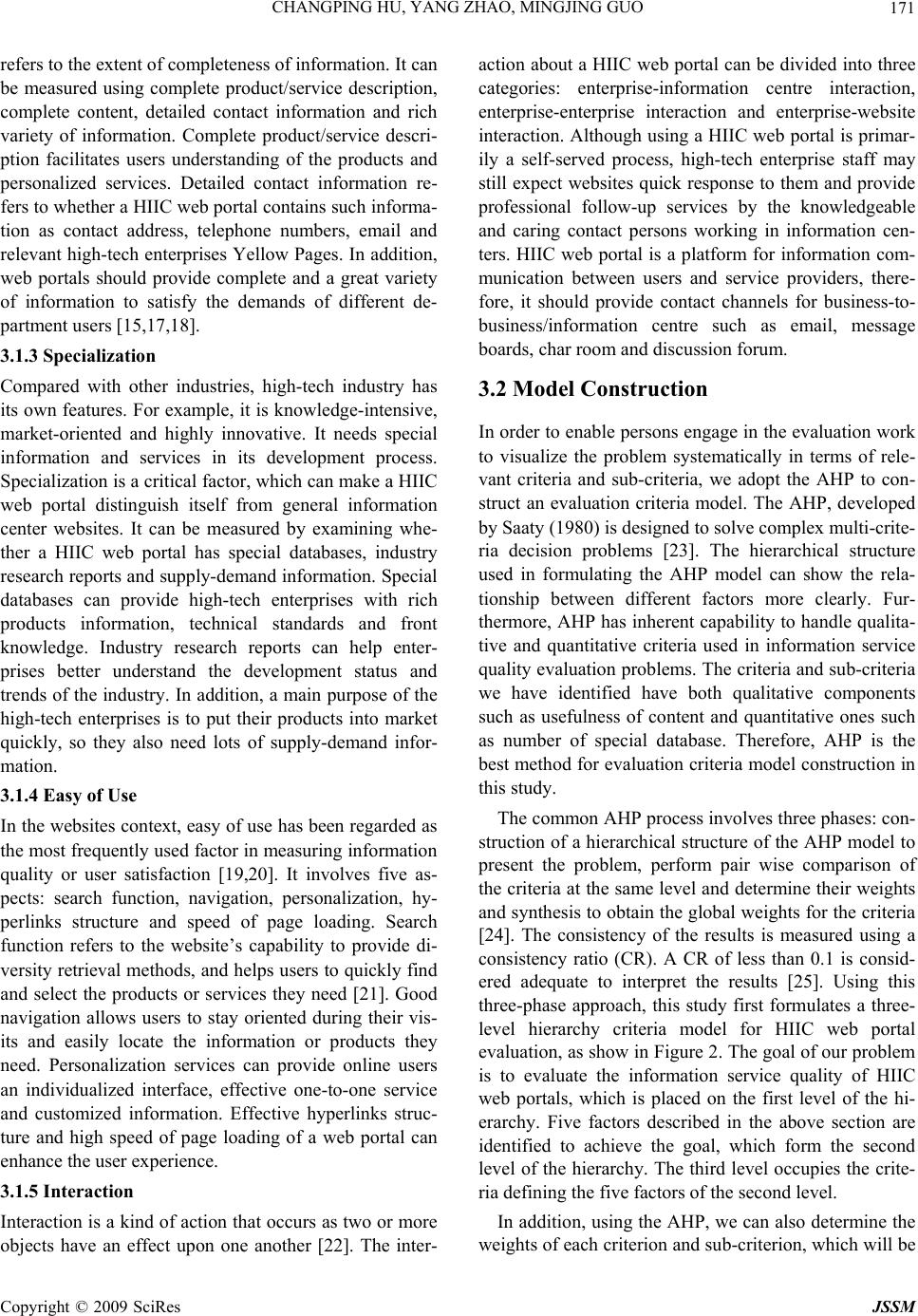

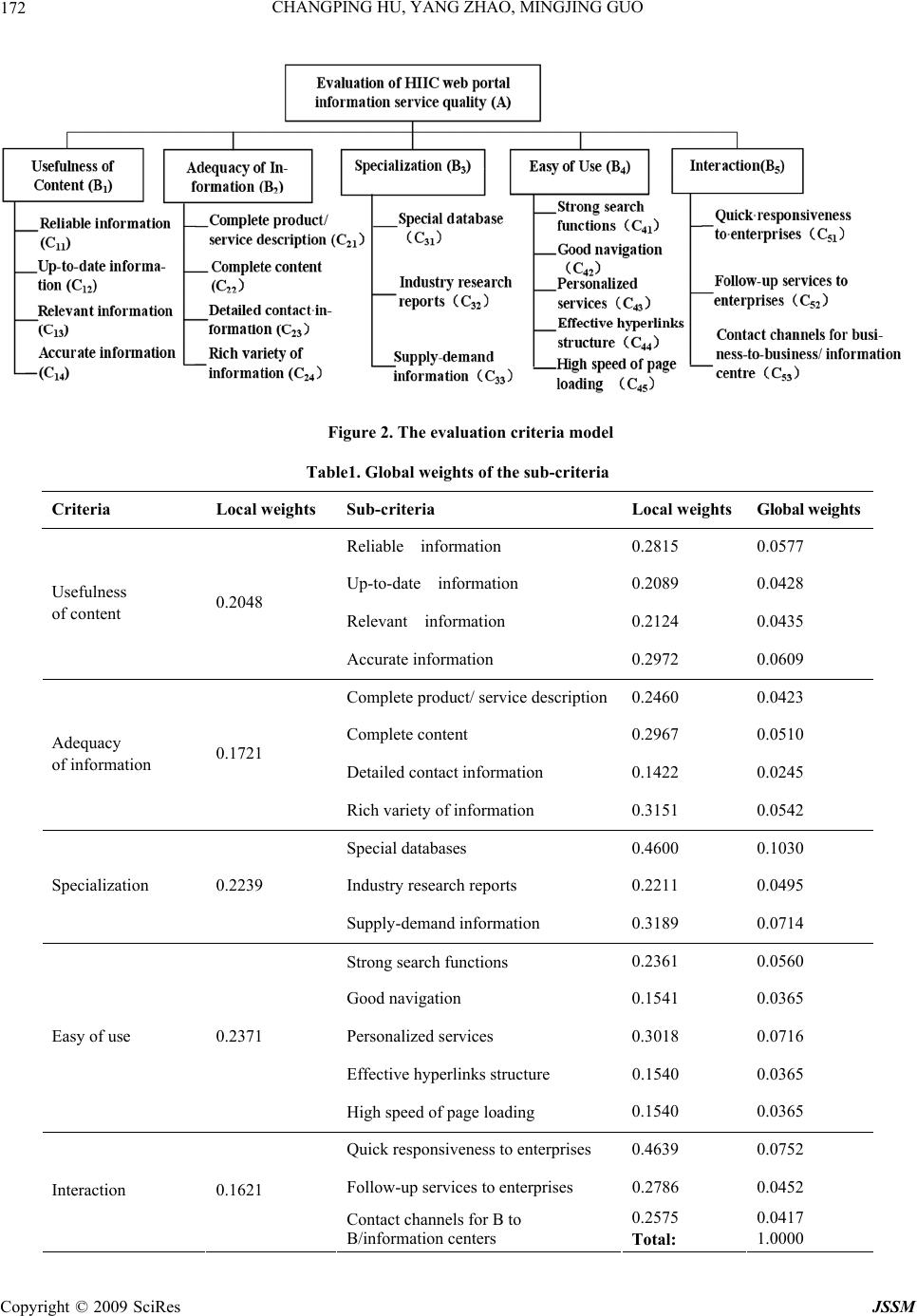

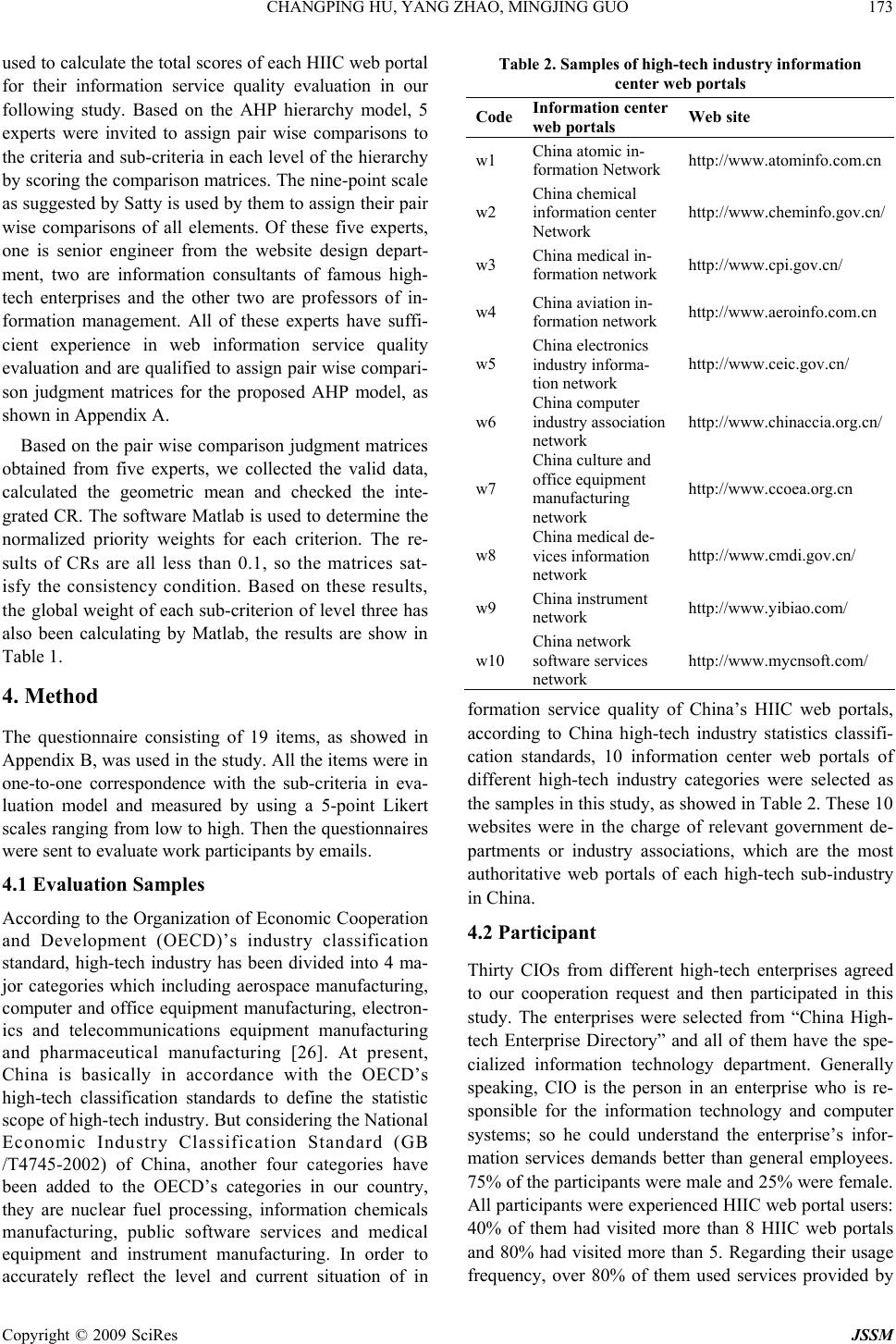

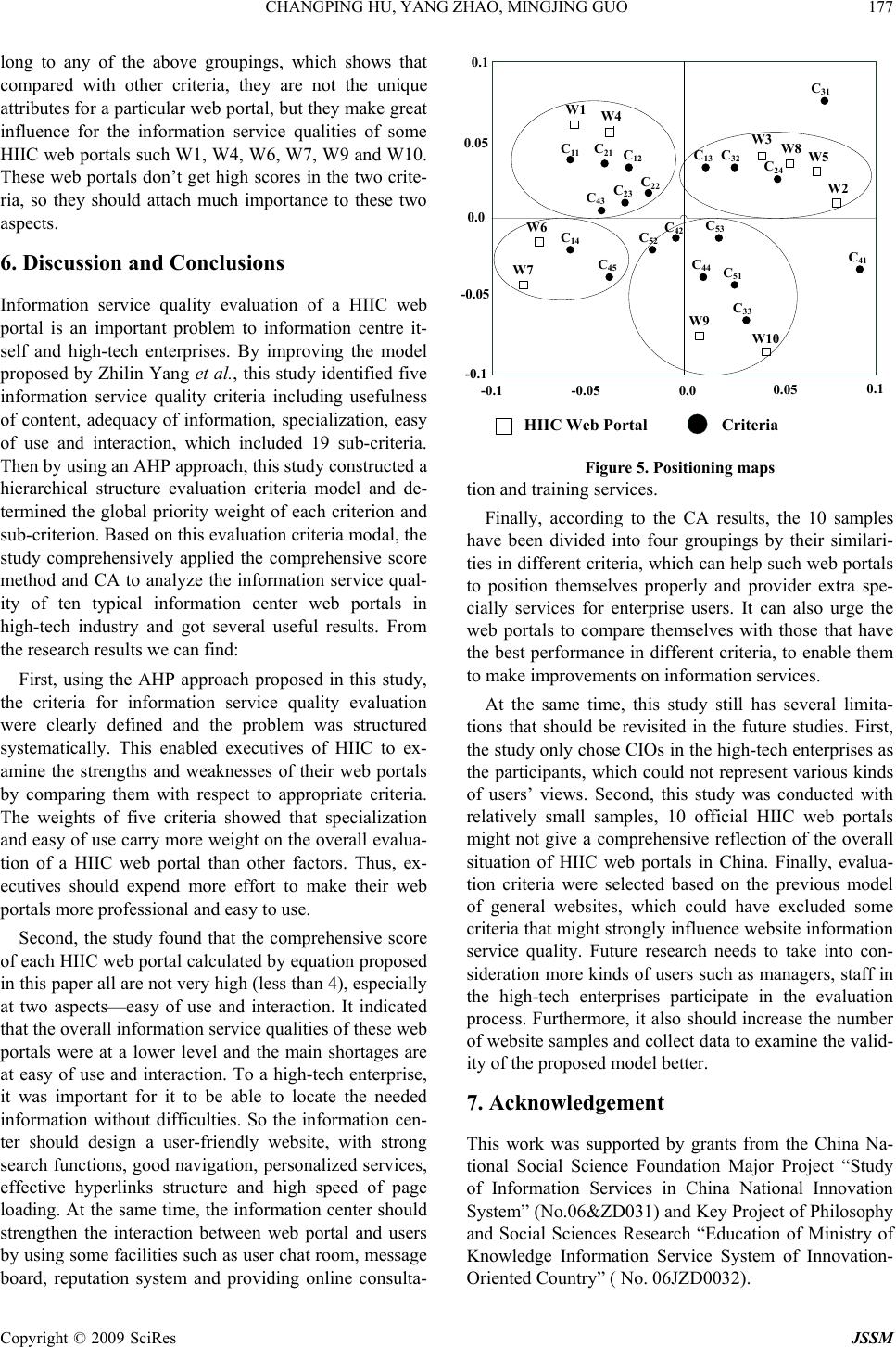

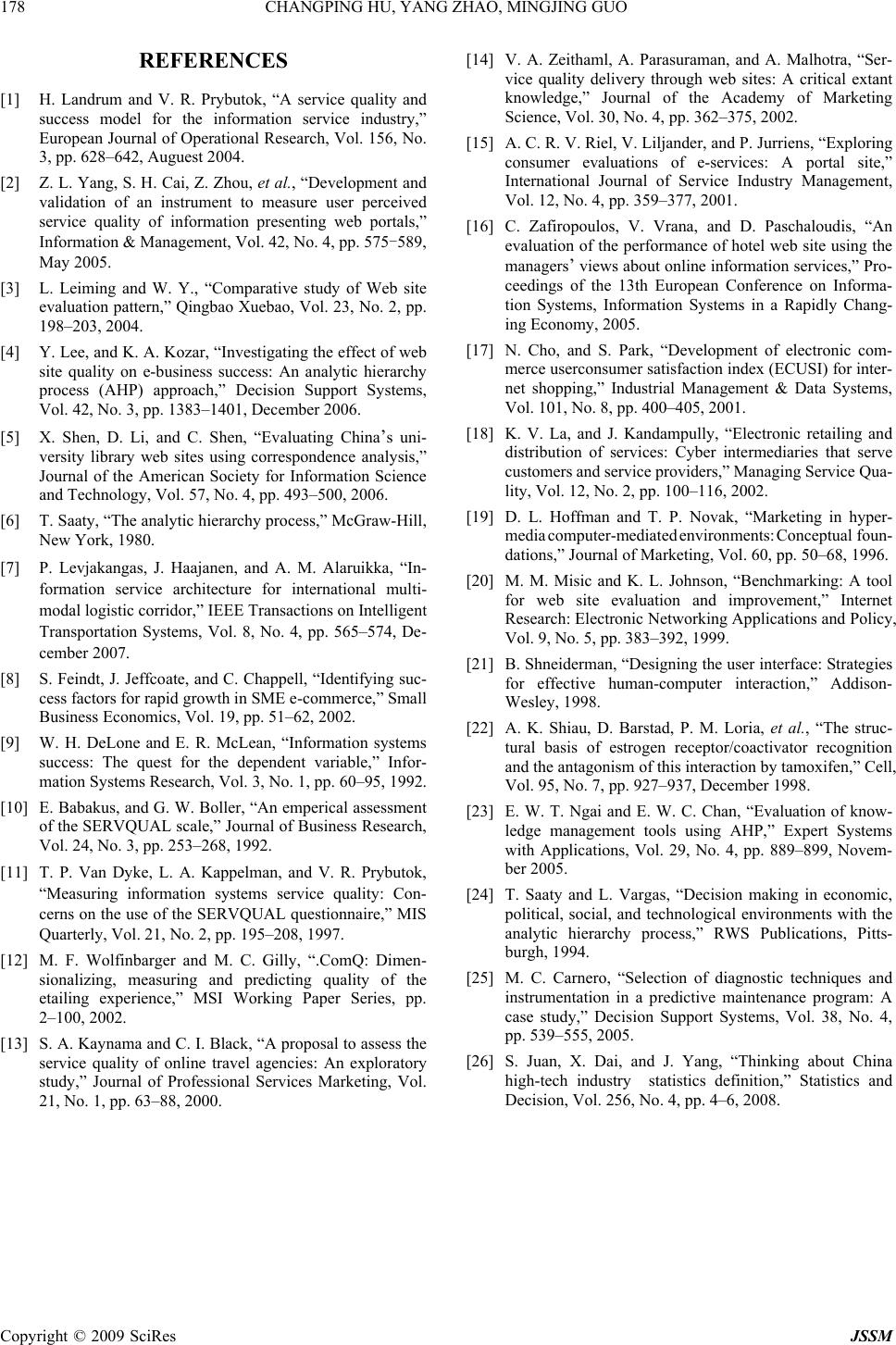

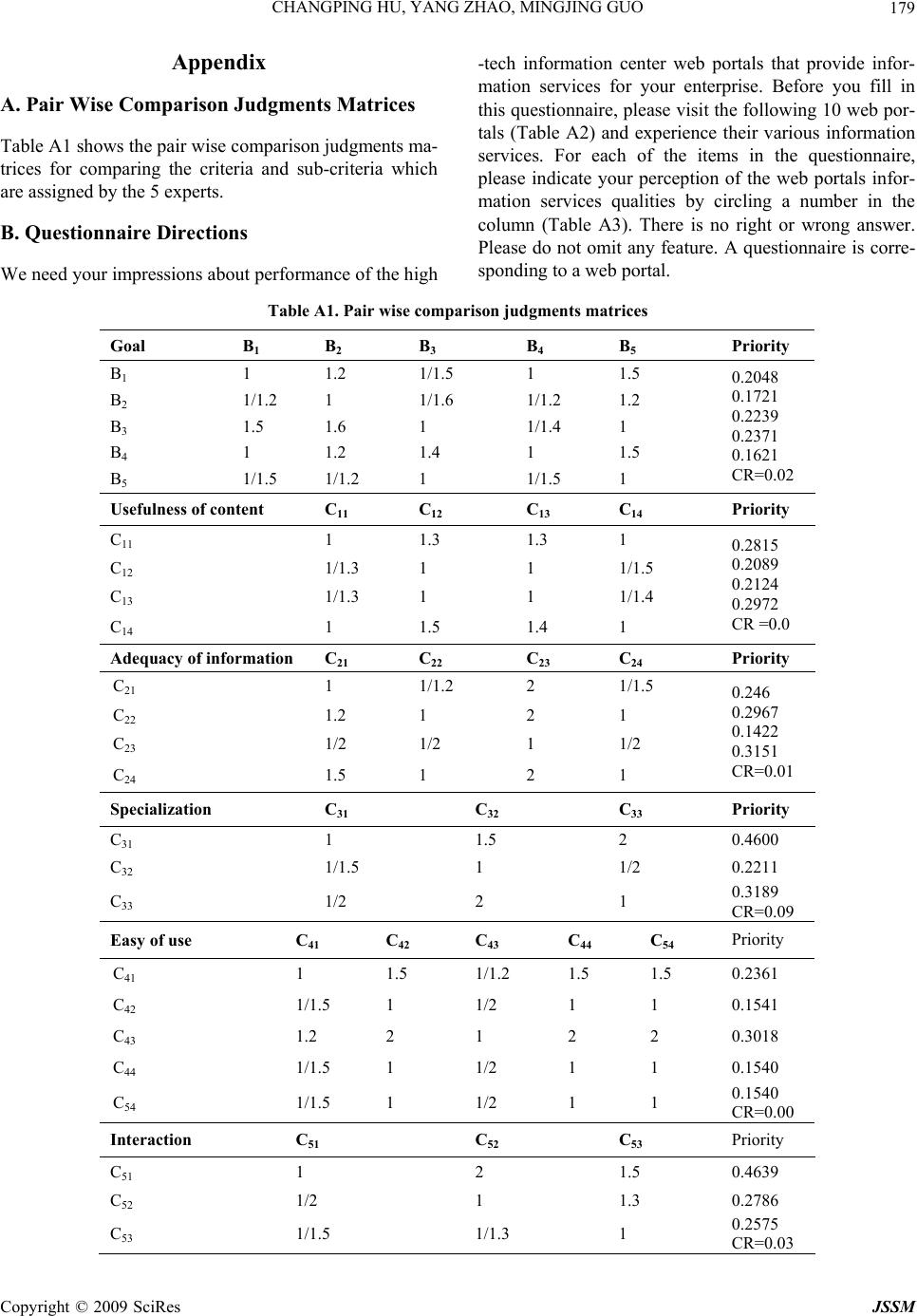

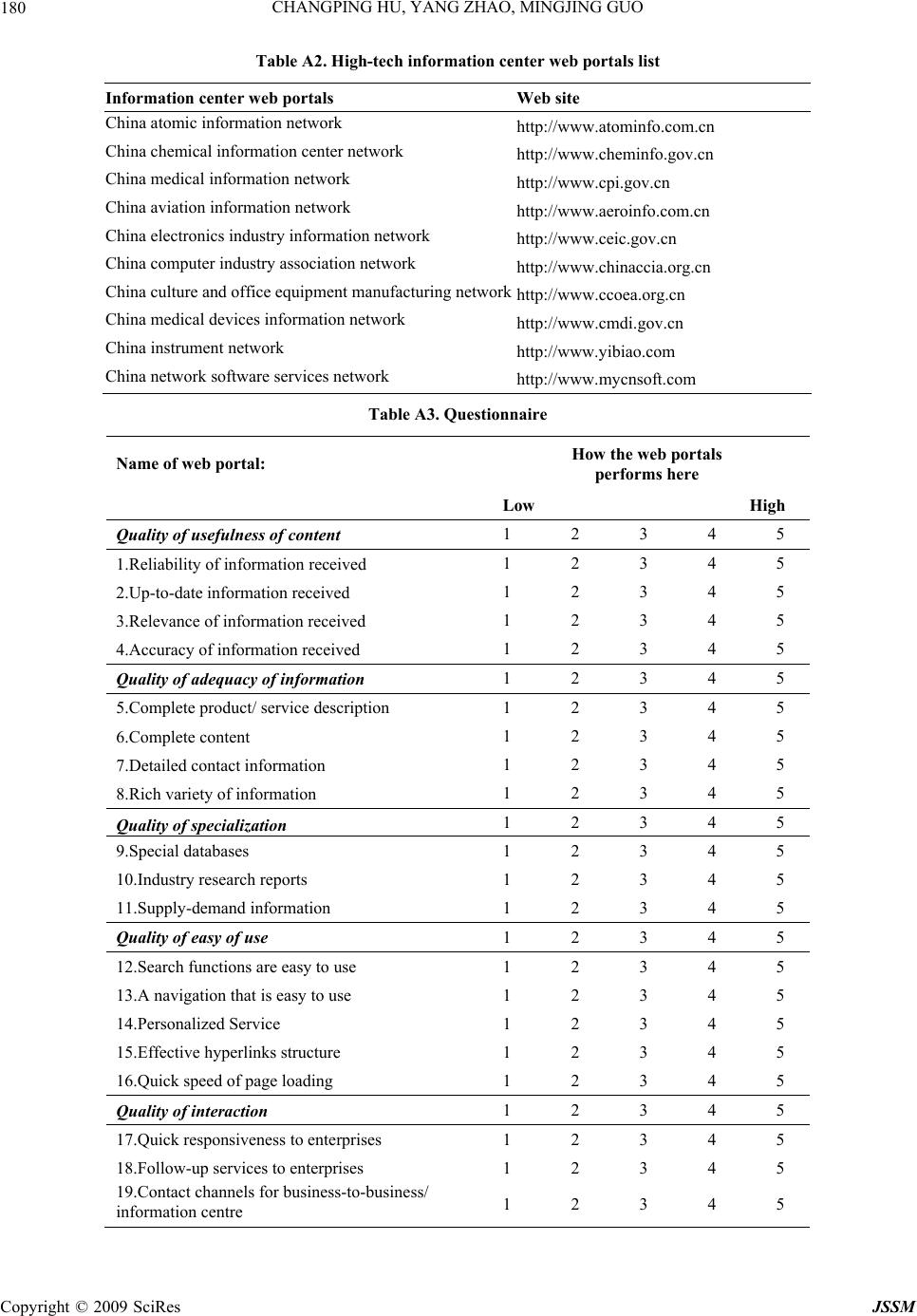
