Advances in Pure Mathematics

Vol.06 No.08(2016), Article ID:68953,9 pages

10.4236/apm.2016.68044

Selecting the Quantity of Models in Mixture Regression

Dawei Lang, Wanzhou Ye

College of Science, Shanghai University, Shanghai, China

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 14 June 2016; accepted 22 July 2016; published 25 July 2016

ABSTRACT

Mixture regression is a regression problem with mixed data: some observations come from one model, while others come from other models. EM or other algorithms can solve this problem only once the quantity of models is given. In this paper, we propose an information criterion for the mixture regression model. Data simulations comparing it with ordinary information criteria show that our criterion performs better at choosing the correct quantity of models.

Keywords:

Mixture Regression, Model Based Clustering, Information Criterion, AIC, BIC

1. Introduction

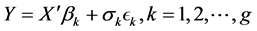

Mixture regression is a special case of the regression problem. Rather than coming from one distribution, the data in mixture regression come from multiple distributions (which distribution each observation comes from is unknown), which degrades parameter estimation. The mixture regression problem can be described as follows [1]:

(1)

(1)

(2)

(2)

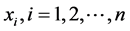

is independent observation matrix with n observations with p variables.

is independent observation matrix with n observations with p variables.  means ith observation vector from n observations. The length of

means ith observation vector from n observations. The length of  is p.

is p.  is response variable from observation data with the length of n.

is response variable from observation data with the length of n.  and

and  is the unknown parameters (weight) of the variable and scale parameter in different models.

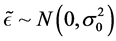

is the unknown parameters (weight) of the variable and scale parameter in different models.  is a random error independent from

is a random error independent from .

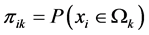

.  is the probability of ith observation is from the kth distribution

is the probability of ith observation is from the kth distribution . (i.e.

. (i.e. ). To solve the mixture regression problem, it need two parts. Firstly, confirming which model every sample is from is required. Secondly, parameters in each model should be estimated. That is the reason to call mixture regression model as model-based clustering [2] [3] .

). To solve the mixture regression problem, it need two parts. Firstly, confirming which model every sample is from is required. Secondly, parameters in each model should be estimated. That is the reason to call mixture regression model as model-based clustering [2] [3] .
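As a reference point, a standard formulation of a g-component mixture of linear regressions that is consistent with the symbol definitions above can be sketched as follows. The subscript conventions, the standard normal error, and the density form are assumptions here, not necessarily the authors' exact expressions (1) and (2).

```latex
% Assumed component form: observation i follows model k with probability \pi_k
y_i = x_i^{\top}\beta_k + \sigma_k\,\varepsilon_i
\quad \text{with probability } \pi_k, \qquad k = 1,\dots,g,
\qquad \sum_{k=1}^{g}\pi_k = 1 .

% Implied conditional density when \varepsilon_i \sim N(0,1);
% \phi denotes the standard normal density
f(y_i \mid x_i) = \sum_{k=1}^{g} \frac{\pi_k}{\sigma_k}\,
\phi\!\left(\frac{y_i - x_i^{\top}\beta_k}{\sigma_k}\right).
```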

For every mixture regression problem,  is unknown, which has:

Furthermore,

Parameter estimates can be obtained with the EM algorithm. Fraley et al. [4] [5] describe the EM algorithm for the ordinary mixture regression model, in which every component model is an ordinary linear regression. The EM algorithm for ordinary mixture regression is as follows:

Column vector

E-step in mixture regression model can be obtained by:

When

As every observation is independent, covariance matrix can be defined as

Song et al. [6] developed an EM algorithm for robust mixture regression. Wu et al. [7] proposed an EM algorithm for mixtures of quantile regressions. Furthermore, Lang et al. [8] described a fast iteration method for the mixture regression problem that can handle random errors from different distributions.
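The EM iteration for an ordinary (Gaussian) mixture of linear regressions can be sketched in a few lines. The following is a minimal illustration, not the authors' implementation: it assumes a single predictor plus an intercept, normal errors, a random soft initialisation, and a fixed iteration count. The function names `weighted_line_fit`, `normal_pdf`, and `em_mixture_regression` are hypothetical.

```python
import math
import random


def weighted_line_fit(x, y, w):
    """Weighted least squares for y = b0 + b1 * x; returns (b0, b1)."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    b1 = sxy / sxx if sxx > 0 else 0.0
    return my - b1 * mx, b1


def normal_pdf(r, sigma):
    """Density of N(0, sigma^2) evaluated at the residual r."""
    return math.exp(-0.5 * (r / sigma) ** 2) / (math.sqrt(2 * math.pi) * sigma)


def em_mixture_regression(x, y, g, n_iter=200, seed=0):
    """EM for a g-component Gaussian mixture of simple linear regressions.

    Returns (pis, betas, sigmas, loglik)."""
    rng = random.Random(seed)
    n = len(x)
    # Random soft start (an assumption): each row of responsibilities sums to 1.
    resp = []
    for _ in range(n):
        row = [rng.random() + 1e-3 for _ in range(g)]
        total = sum(row)
        resp.append([r / total for r in row])
    for _ in range(n_iter):
        # M-step: weighted regression, scale and mixing proportion per component.
        pis, betas, sigmas = [], [], []
        for k in range(g):
            w = [max(resp[i][k], 1e-12) for i in range(n)]
            b0, b1 = weighted_line_fit(x, y, w)
            var = sum(wi * (yi - b0 - b1 * xi) ** 2
                      for wi, xi, yi in zip(w, x, y)) / sum(w)
            pis.append(sum(resp[i][k] for i in range(n)) / n)
            betas.append((b0, b1))
            sigmas.append(max(math.sqrt(var), 1e-6))  # floor avoids sigma = 0
        # E-step: posterior probability that observation i belongs to component k.
        loglik = 0.0
        for i in range(n):
            dens = [pis[k] * normal_pdf(y[i] - betas[k][0] - betas[k][1] * x[i],
                                        sigmas[k]) for k in range(g)]
            total = sum(dens)
            if total > 0:
                resp[i] = [d / total for d in dens]
                loglik += math.log(total)
            else:  # numerical underflow: fall back to equal responsibilities
                resp[i] = [1.0 / g] * g
                loglik += math.log(1e-300)
    return pis, betas, sigmas, loglik
```

The returned log-likelihood is what an information criterion would consume when several candidate values of g are compared. EM can converge to a local optimum, so in practice several seeds would usually be tried.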

Moreover, all the algorithms mentioned above assume that the quantity of models g is known. However, this is not always the case: g needs to be chosen before the algorithm is run. When X is a low-dimensional matrix, a scatter plot can be drawn to choose g, but inspecting a scatter plot and drawing a conclusion is not suitable in a high-dimensional situation. It is therefore meaningful to discuss how to create a proper method for choosing the right quantity of models in a mixture regression problem.

The rest of the paper is organized as follows. Section 2 discusses the equivalence between mixture regression and ordinary regression when the classification matrix is fixed. Section 3 extends a method based on information criteria. Section 4 presents data simulations of the different information criteria. The proof of the theorem is in the Appendix.

2. Equivalence of Linear Regression

Unsupervised learning has its own methods for choosing the quantity of clusters, such as the gap statistic in K-means [9]. Mixture regression can be regarded as model-based clustering that includes both deciding which cluster each observation belongs to and estimating the parameters.

To find a proper method for choosing the quantity of models, we need to find the relationships between mixture regression and other algorithms. Under some conditions, such as when the classification matrix Z is fixed and the random errors have the same variance, mixture regression can be written as a linear regression.

Theorem 1 (Equivalence between Mixture Regression and Linear Regression) If the estimator of

When random error in every model is independent and identically distributed from a normal distribution (

The proof can be found in the Appendix.

Having proved this theorem, we can use the evaluation methodology of regression to choose the quantity of models in mixture regression.
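The idea behind the equivalence can be illustrated numerically. The sketch below assumes a hard (0/1) classification that is held fixed and builds a block design matrix in which each observation's predictors are placed in the block of its own cluster. Ordinary least squares on that single design then reproduces the cluster-by-cluster fits. This construction is an assumption chosen for illustration and is not taken from the authors' proof; `solve`, `ols`, `block_design`, and `demo` are hypothetical helper names.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def ols(X, y):
    """Ordinary least squares via the normal equations X'X b = X'y."""
    p = len(X[0])
    XtX = [[sum(row[a] * row[b] for row in X) for b in range(p)] for a in range(p)]
    Xty = [sum(row[a] * yi for row, yi in zip(X, y)) for a in range(p)]
    return solve(XtX, Xty)


def block_design(X, labels, g):
    """Row i holds x_i in the block of its cluster and zeros elsewhere."""
    p = len(X[0])
    rows = []
    for xi, k in zip(X, labels):
        row = [0.0] * (g * p)
        row[k * p:(k + 1) * p] = xi
        rows.append(row)
    return rows


def demo():
    """Compare one regression on the block design with per-cluster fits."""
    # Fixed hard classification: first six points in cluster 0, last six in cluster 1.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0] * 2
    labels = [0] * 6 + [1] * 6
    noise = [0.1, -0.2, 0.05, 0.0, -0.1, 0.15] * 2  # made-up perturbations
    y = [(1 + 2 * x if k == 0 else 10 - 3 * x) + e
         for x, k, e in zip(xs, labels, noise)]
    X = [[1.0, x] for x in xs]  # intercept column plus one predictor
    joint = ols(block_design(X, labels, 2), y)
    separate = []
    for k in range(2):
        idx = [i for i, lab in enumerate(labels) if lab == k]
        separate += ols([X[i] for i in idx], [y[i] for i in idx])
    return joint, separate
```

Calling `demo()` returns two coefficient lists of length four that agree up to floating-point error, which is why regression model-evaluation tools can be reused once the classification is fixed.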

3. Information Criterion for Choosing the Quantity of Clusters

3.1. Information Criterion

For a regression problem, the Akaike information criterion (AIC) or the Bayesian information criterion (BIC) [10] is commonly used to evaluate a regression model [11]. An information criterion is based on information theory and measures the information lost by a specific model. It considers a trade-off between goodness of fit and model complexity:

$$\mathrm{AIC} = 2k - 2\ln L, \qquad \mathrm{BIC} = k\ln n - 2\ln L$$

The best model is the one with the minimum AIC (BIC). L is the likelihood function, which states the goodness of fit (expression (3)). k is the penalty term of the information criterion and stands for the number of unknown parameters in the model. In linear regression, k is the number of independent variables. For BIC the penalty is larger: the weight of the penalty becomes $\ln n$ instead of 2.
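The standard definitions of the two criteria are short enough to write out directly. The sketch below assumes the usual forms AIC = 2k - 2 ln L and BIC = k ln n - 2 ln L, together with the Gaussian log-likelihood of a linear model evaluated at its maximum-likelihood variance estimate RSS / n. The function names are hypothetical, and whether k also counts the error variance is left to the caller.

```python
import math


def gaussian_loglik(rss, n):
    """Maximised Gaussian log-likelihood of a linear model with residual sum of squares rss."""
    sigma2 = rss / n  # maximum-likelihood estimate of the error variance
    return -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)


def aic(loglik, k):
    """Akaike information criterion: penalty weight 2 per parameter."""
    return 2 * k - 2 * loglik


def bic(loglik, k, n):
    """Bayesian information criterion: penalty weight ln(n) per parameter."""
    return k * math.log(n) - 2 * loglik
```

Because ln n exceeds 2 once n is at least 8, BIC penalises each extra parameter more heavily than AIC for all but very small samples.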

3.2. Information Criterion in Mixture Regression

In mixture regression, the parameters in the classification matrix should be counted among the estimated variables. If these variables are ignored, the criterion will tend to choose a larger quantity of models, which is itself an overfitting problem.

For every observation,

The Akaike information criterion for mixture regression (AICM) and the Bayesian information criterion for mixture regression (BICM) are:

AICM and BICM can be used to select the quantity of models in a mixture regression problem. However, the penalty weight for g in BICM is

4. Data Simulation

To validate the rationality of the model, we designed numerical simulations and generated sample data

• Simulation I: 100 samples from 2 distributions. (

• Simulation II: 200 samples from 2 distributions. (

• Simulation III: 150 samples from 3 distributions. (
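Data with the sample sizes listed above can be generated with a short helper. This is only a sketch: the regression coefficients, noise scales, and the uniform range of the predictor are made-up placeholders, because the paper's actual simulation parameters are not reproduced here, and the equal 50/50 split for Simulation I is an assumption. `simulate_mixture` is a hypothetical name.

```python
import random


def simulate_mixture(sizes, coefs, sigmas, seed=0):
    """Draw (x, y, label) triples from a mixture of simple linear regressions.

    sizes[k]  : number of samples from component k
    coefs[k]  : (intercept, slope) of component k (placeholder values)
    sigmas[k] : error standard deviation of component k (placeholder values)
    """
    rng = random.Random(seed)
    data = []
    for k, (n_k, (b0, b1), s) in enumerate(zip(sizes, coefs, sigmas)):
        for _ in range(n_k):
            x = rng.uniform(0.0, 10.0)  # assumed predictor range
            y = b0 + b1 * x + rng.gauss(0.0, s)
            data.append((x, y, k))
    rng.shuffle(data)  # hide the component order, as in an unlabeled sample
    return data


# Sample sizes follow the three simulations; all other numbers are hypothetical.
sim1 = simulate_mixture([50, 50], [(1.0, 2.0), (20.0, -1.5)], [1.0, 1.0])
sim2 = simulate_mixture([100, 100], [(1.0, 2.0), (20.0, -1.5)], [1.0, 1.0])
sim3 = simulate_mixture([50, 50, 50], [(1.0, 2.0), (20.0, -1.5), (40.0, 0.5)],
                        [1.0, 1.0, 1.0])
```

The labels are kept in the output only so that a fitted classification can be checked against the truth; a selection procedure would see just the (x, y) pairs.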

4.1. Simulation I

The models in Simulation I are:

where

Figure 1. Mixture regression when

Table 1. Simulation I of selecting quantity of models.

4.2. Simulation II

The models in Simulation II are the same as in Simulation I, but Simulation II has 100 samples from each distribution.

Figure 2 for Simulation II can be found in the Appendix. Table 2 below shows the results of repeating the simulation 100 times.

4.3. Simulation III

Simulation III has three distributions with 50 samples in each distribution.

See Figure 3 in the Appendix for Simulation III; the results are shown in Table 3.

Figure 2. Mixture regression when

Table 2. Simulation II of selecting quantity of models.

Figure 3. Mixture regression when

Table 3. Simulation III of selecting quantity of models.

5. Conclusion

According to the results of the three simulations, AICM and BICM perform well for small g (

Cite this paper

Dawei Lang, Wanzhou Ye (2016) Selecting the Quantity of Models in Mixture Regression. Advances in Pure Mathematics, 06, 555-563. doi: 10.4236/apm.2016.68044

References

- 1. McLachlan, G. and Peel, D. (2004) Finite Mixture Models. John Wiley & Sons, Hoboken.

- 2. Fraley, C. and Raftery, A.E. (2002) Model-Based Clustering, Discriminant Analysis, and Density Estimation. Journal of the American Statistical Association, 97, 611-631.

http://dx.doi.org/10.1198/016214502760047131

- 3. Ingrassia, S., Minotti, S.C. and Punzo, A. (2014) Model-Based Clustering via Linear Cluster-Weighted Models. Computational Statistics and Data Analysis, 71, 159-182.

http://dx.doi.org/10.1016/j.csda.2013.02.012

- 4. Fraley, C. and Raftery, A.E. (1998) How Many Clusters? Which Clustering Method? Answers via Model-Based Cluster Analysis. The Computer Journal, 41, 578-588.

http://dx.doi.org/10.1093/comjnl/41.8.578

- 5. Fraley, C. and Raftery, A.E. (2002) Model-Based Clustering, Discriminant Analysis, and Density Estimation. Journal of the American Statistical Association, 97, 611-631.

http://dx.doi.org/10.1198/016214502760047131

- 6. Song, W.X., Yao, W.X. and Xing, Y.R. (2014) Robust Mixture Regression Model Fitting by Laplace Distribution. Computational Statistics and Data Analysis, 71, 128-137.

http://dx.doi.org/10.1016/j.csda.2013.06.022

- 7. Wu, Q. and Yao, W. (2016) Mixtures of Quantile Regressions. Computational Statistics and Data Analysis, 93, 162-176.

http://dx.doi.org/10.1016/j.csda.2014.04.014

- 8. Lang, D.W. and Ye, W.Z. (2015) A Fast Iteration Method for Mixture Regression Problem. Journal of Applied Mathematics and Physics, 3.

http://dx.doi.org/10.4236/jamp.2015.39136

- 9. Tibshirani, R., Walther, G. and Hastie, T. (2002) Estimating the Number of Clusters in a Data Set via the Gap Statistic. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 63, 411-423.

- 10. Aho, K., Derryberry, D. and Peterson, T. (2014) Model Selection for Ecologists: The Worldviews of AIC and BIC. Ecology, 95, 631-636.

http://dx.doi.org/10.1890/13-1452.1

- 11. Naik, P.A., Shi, P. and Tsai, C.-L. (2007) Extending the Akaike Information Criterion to Mixture Regression Models. Journal of the American Statistical Association, 102.

- 12. Mixreg, R.T. Functions to Fit Mixtures of Regressions. R Package Version 0.0-5.

Appendix

Proof of theorem 1

Proof. Linear regression has the form of:

To prove this theorem, mixture regression needs to be written in the form above. When every random error has the same variance, the random error in mixture regression also follows a normal distribution.

In mixture regression problem, ith observation

We have:

Because ith observation can be written as a product of vectors, population of observation can be written as

For the observation

In the distribution of variable

so
