Journal of Signal and Information Processing

Vol. 07, No. 01 (2016), Article ID: 63986, 10 pages

DOI: 10.4236/jsip.2016.71006

Sparse Representation by Frames with Signal Analysis

Christopher Baker

Department of EE & CS, University of Wisconsin-Milwaukee, Milwaukee, WI, USA

Copyright © 2016 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 3 February 2016; accepted 26 February 2016; published 29 February 2016

ABSTRACT

The use of frames in Compressed Sensing (CS) is analyzed through proofs and experiments. First, a new generalized Dictionary-Restricted Isometry Property (D-RIP) sparsity bound constant for CS is established. Second, experiments are presented that use a tight frame to analyze sparsity and reconstruction quality for several signal and image types. The constant  is used in fulfilling the definition of D-RIP. It is proved that k-sparse signals can be reconstructed if  by using a concise and transparent argument¹. The approach could be extended to obtain other D-RIP bounds (i.e. ). Experiments contrast the results of a Gabor tight frame with Total Variation minimization. In cases of practical interest, the Gabor dictionary performs well when it achieves a highly sparse representation and poorly when this sparsity is not achieved.

Keywords:

Compressed Sensing, Total Variation Minimization, l1-Analysis, D-Restricted Isometry Property, Tight Frames

1. Introduction

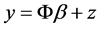

Let $A \in \mathbb{R}^{m \times n}$ and let $x \in \mathbb{R}^n$ be a signal such that

$$y = Ax + z, \qquad (1)$$

with $\|z\|_2 \le \varepsilon$. In compressed sensing, one can find a good stable approximation of $x$ (in terms of $\varepsilon$ and the tail of $x$ consisting of its $n-k$ smallest entries) from the measurement matrix $A$ and the measurement $y$ by solving an $\ell_1$-minimization problem, provided that $A$ belongs to a family of well-behaved matrices. A subclass of this family can be characterized by the well-known Restricted Isometry Property (RIP) of Candès, Romberg, and Tao [3] [4]. This property requires the relation

$$(1-\delta_k)\|c\|_2^2 \le \|Ac\|_2^2 \le (1+\delta_k)\|c\|_2^2$$

for every k-sparse vector c (namely, c has at most k non-zero components), for some small constant $\delta_k \in (0,1)$.

several sharp RIP bounds that cover the most interesting cases of δk and
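Verifying the RIP exactly means examining every support of size k, which is combinatorial. The Python sketch below, an illustration rather than part of the original analysis, only samples random supports: for c supported on a set S, ‖Ac‖²/‖c‖² lies between the squared smallest and largest singular values of the column submatrix A_S, so the sampled worst case is a lower estimate of δk. The Gaussian matrix, its size, the sparsity level and the trial count are hypothetical choices.

```python
import numpy as np

def rip_constant_lower_estimate(A, k, trials=2000, seed=0):
    """Lower estimate of the RIP constant delta_k of A from random supports.

    For c supported on S, ||A c||^2 / ||c||^2 lies between the squared smallest
    and largest singular values of A[:, S]. delta_k is the worst case over all
    supports, so sampling only some of them can under-estimate it.
    """
    rng = np.random.default_rng(seed)
    n = A.shape[1]
    delta = 0.0
    for _ in range(trials):
        S = rng.choice(n, size=k, replace=False)          # random support of size k
        s = np.linalg.svd(A[:, S], compute_uv=False)      # singular values, descending
        delta = max(delta, s[0] ** 2 - 1.0, 1.0 - s[-1] ** 2)
    return delta

# Hypothetical example: Gaussian matrix with N(0, 1/m) entries.
m, n, k = 128, 512, 5
A = np.random.default_rng(1).standard_normal((m, n)) / np.sqrt(m)
delta_hat = rip_constant_lower_estimate(A, k)
```

A matrix with orthonormal columns, such as the identity, gives an estimate of zero, since every column submatrix is then an exact isometry.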

A key requirement in this setting is that the signal be sparse or approximately sparse. Indeed, many families of signals of interest have sparse representations under suitable bases. Recently, an interesting sparsifying scheme was proposed by Candès et al. [9]: instead of bases, tight frames are used to sparsify signals.

Let

The traditional RIP is no longer effective in the generalized setting. Candès et al. defined the D-restricted isometry property which extends RIP [9] . Here the formulation of D-RIP is used as in Lin et al. [10] .
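The defining property of a tight frame can be checked numerically. The Python sketch below assumes, for illustration only, a simple tight frame formed by the union of the identity and an orthonormal DCT basis scaled by 1/√2 (this is not the Gabor frame used in the experiments). It checks D Dᵀ = I, that the analysis operator Dᵀ preserves energy, and that f = D Dᵀ f.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    t = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * t + 1) * k / (2 * n))
    C[0, :] /= np.sqrt(2.0)          # DC row gets weight sqrt(1/n)
    return C

def union_tight_frame(n):
    """D = [I, C^T] / sqrt(2): an n x 2n frame with D D^T = (I + C^T C) / 2 = I."""
    return np.hstack([np.eye(n), dct_matrix(n).T]) / np.sqrt(2.0)

n = 64
D = union_tight_frame(n)
f = np.random.default_rng(1).standard_normal(n)
coeffs = D.T @ f                      # analysis coefficients D^* f (2n of them)
f_rec = D @ coeffs                    # synthesis: D D^* f
```

The frame is redundant by a factor of two, yet reconstruction from the analysis coefficients is exact, which is the property the D-RIP setting relies on.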

Definition 1. The measurement matrix

holds for all k-sparse vectors

The RIP is now a special case of D-RIP (when the dictionary D is the identity matrix). For D a tight frame, Candès et al. [9] proved that if
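For reference, the D-RIP inequality and the ℓ1-analysis recovery program are sketched below in the standard form of Candès et al. [9]. The symbols A (measurement matrix), f (signal), v (coefficient vector) and ε (noise bound) are assumed notation. Setting D = I recovers the ordinary RIP, consistent with the remark above.

```latex
% D-RIP of order k for a dictionary D (reduces to the RIP when D = I)
(1-\delta_k)\,\|Dv\|_2^2 \;\le\; \|ADv\|_2^2 \;\le\; (1+\delta_k)\,\|Dv\|_2^2
\qquad \text{for all $k$-sparse } v,

% l1-analysis recovery from y = Af + z with \|z\|_2 \le \varepsilon
\hat f \;=\; \operatorname*{arg\,min}_{\tilde f}\ \|D^{*}\tilde f\|_1
\quad \text{subject to} \quad \|y - A\tilde f\|_2 \le \varepsilon .
```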

Contribution

The proof in Section 2 establishes an improved D-RIP bound which states that

previously available [1], and it has since been improved upon by Wu and Li [2]. The main ingredient of the proof in Section 2 is a tool developed by Xu and Xu [11]. This approach takes its inspiration from the clever ideas of Cai and Zhang [7] [8].

The practical side of this work consists of experiments testing the theory of tight frames in this CS setting satisfying D-RIP. Experimental methods similar to those of Candès et al. [9] [12] are followed. However, an expanded variety of relevant sparse and non-sparse signals is used to test the robustness of the Gabor transform. Additionally, these experiments analyze sparsity in the coefficient domain and show that a highly sparse representation is a good indicator of reconstruction quality. These results are contrasted with a commonly used CS approach of Total Variation (TV)

This paper is organized into four main sections. Background information is presented in Section 1. Section 2 describes a proof of an improved D-RIP sparsity bound using a concise and transparent approach. Section 3 details the experimental methods used to apply the tight frame to signal and image recovery. Section 4 presents and discusses the reconstruction results, with an analysis of the robustness and shortcomings of using tight frames in CS.

2. New D-RIP Bounds

Theorem 2. Let D be an arbitrary tight frame and let

where the constants

components (in magnitude) set to zero.
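Assuming Theorem 2 bounds the error in the form used by Candès et al. [9], ‖f̂ − f‖₂ ≤ C0 ε + C1 ‖D*f − (D*f)_k‖₁/√k, the tail term can be computed as in the Python sketch below. Here (D*f)_k keeps the k largest-magnitude entries of D*f and zeroes the rest. The function names are hypothetical, and D* is taken to be the conjugate transpose.

```python
import numpy as np

def best_k_term(x, k):
    """(x)_k: keep the k largest-magnitude entries of x and set the rest to zero."""
    x = np.asarray(x)
    out = np.zeros_like(x)
    if k > 0:
        idx = np.argsort(np.abs(x))[-k:]   # indices of the k largest magnitudes
        out[idx] = x[idx]
    return out

def tail_term(D, f, k):
    """||D^* f - (D^* f)_k||_1 / sqrt(k), the approximation term of the bound."""
    a = D.conj().T @ f                     # analysis coefficients D^* f
    return np.sum(np.abs(a - best_k_term(a, k))) / np.sqrt(k)
```

When D*f is exactly k-sparse, the tail term vanishes and the bound reduces to the noise term C0 ε.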

Before proving this theorem, some remarks are helpful. Firstly, Cai and Zhang have obtained a sharp bound

the ideas of Cai and Zhang [7] [8] , more general results (other D-RIP bounds) can be obtained in parallel.

In order to prove Theorem 2, the following

The following description is taken from Xu and Xu [11].

Lemma 1. For positive integers

such that

for some nonnegative real numbers
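Lemma 1 is a convex k-sparse decomposition that keeps the ℓ1 norm invariant, in the spirit of the polytope representation of Cai and Zhang [7] [8]. The Python sketch below illustrates the idea only in an assumed special case, ‖v‖∞ ≤ C and ‖v‖₁ = kC, using a systematic-sampling construction. Entry i owns an interval of length |v_i|/C in [0, k), and each offset t in [0, 1) selects the k entries whose intervals contain one of t, t+1, ..., t+k−1. This is not claimed to be the construction used in [11].

```python
import numpy as np

def convex_k_sparse_decomposition(v, k, C):
    """Write v = sum_i w_i * u_i with w_i >= 0, sum w_i = 1, each u_i k-sparse,
    ||u_i||_1 = ||v||_1 and ||u_i||_inf = C.
    Special case handled here: ||v||_inf <= C and ||v||_1 == k * C."""
    v = np.asarray(v, dtype=float)
    a = np.abs(v) / C                           # entries in [0, 1] summing to k
    if np.any(a > 1 + 1e-12) or not np.isclose(a.sum(), k):
        raise ValueError("need ||v||_inf <= C and ||v||_1 == k*C")
    ends = np.cumsum(a)                         # entry i owns [starts[i], ends[i])
    starts = np.concatenate(([0.0], ends[:-1]))
    # The selected support only changes when the offset t crosses one of these points.
    cuts = np.unique(np.concatenate(([0.0, 1.0], np.mod(ends, 1.0))))
    weights, atoms = [], []
    for lo, hi in zip(cuts[:-1], cuts[1:]):
        t = 0.5 * (lo + hi)                     # representative offset in (lo, hi)
        j = np.ceil(starts - t)                 # smallest integer j with t + j >= starts[i]
        hit = (t + j < ends) & (a > 0)          # grid t, t+1, ..., t+k-1 hits exactly k intervals
        atoms.append(np.where(hit, C * np.sign(v), 0.0))
        weights.append(hi - lo)                 # probability of this offset range
    return np.array(weights), np.array(atoms)

v = np.array([0.5, -1.0, 0.25, 0.75, 0.5])      # ||v||_inf = 1, ||v||_1 = 3
w, U = convex_k_sparse_decomposition(v, k=3, C=1.0)
```

Each weight is the length of an offset range, so the weights sum to 1, and entry i is selected with probability |v_i|/C, which is why the weighted sum of the atoms returns v.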

Now Theorem 2 is proven.

Proof. This proof follows some ideas in the proofs of Theorems 1.1 and 2.1 by Cai and Zhang [8], incorporating some simplified steps. Some strategies from Cai et al. [5] [13] are also used. This proof deals only with the

Let

For a subset

magnitude), i.e.,

[9] , one can easily verify the following:

Denote

By rearranging the columns of D if necessary, assume

Assume that the tight frame D is normalized, i.e.,

From the facts

Since

k-sparse decomposition of

with each

Cauchy-Schwarz inequality, one has

By the triangle inequality,

Note that

the theorem, it suffices to show that there are constants

In fact, assuming (17), one has

It remains to prove (17). Denote

First, as

On the other hand, as each

Combining this with (23) shows

By completing the square, one obtains

which implies that

and (17) finally follows:

This demonstrates how Lemma 1 leads to a good result. The argument could be pursued further in more general cases to obtain an even better bound; indeed, Wu and Li [2] have recently done so, improving the result of this proof, which was previously made available in [1].

3. Experiments

The focus in these experiments is to show practical applications of a sparsifying frame that satisfies the new lower bound proven in the previous section. A time-frequency Gabor dictionary is used as a sparsifying transform in re-weighted

One advantage of a Gabor dictionary is its translational invariance in both time and frequency. In a similar context, a translational-invariant wavelet transform was used with good results in MRI reconstruction [14]. Invariance in the

Here, sparsity measurements of the Gabor and TV coefficients of various signals are compared to verify whether good and robust results can be achieved. A selection of five different real-valued signals and images, with variants, is used to simulate the complexity of practical signals:

・ Sinusoidal pulses (GHz range)

・ Shepp-Logan phantom image

・ Penguin image

・ T1 weighted MRI image

・ Time of Flight (TOF) Maximum Intensity Projection (MIP) MRI image

The original images and signals each have a total length of 16k values; for images, this corresponds to a 128 × 128 gray-scale image, shown in part (a) of each figure. These signals are not sparse in their native domain, but can become sparse when a transform is applied.
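The Gabor dictionary parameters are not stated in this section. The Python sketch below builds one simple discrete Gabor tight frame under hypothetical choices: a square-root periodic Hann window of length L, a time hop of L/2, and all N modulations. It illustrates two properties the text relies on. The frame is tight (D D* = I), and circularly shifting a signal by one hop only moves the coefficient magnitudes by one block, a discrete form of translational invariance in time.

```python
import numpy as np

def gabor_tight_frame(N, L):
    """Explicit N x (2N^2/L) Gabor frame for length-N signals (small N only).

    Atoms are g(t - n*a) * exp(2j*pi*m*t/N) / sqrt(N) for hop a = L/2,
    n = 0..N/a - 1 and m = 0..N-1, where g is the square root of a periodic
    Hann window of length L. Because g^2 shifted by L/2 sums to 1, D D^* = I.
    """
    if N % L or L % 2:
        raise ValueError("need L even and dividing N")
    a = L // 2
    g = np.zeros(N)
    # sin^2(pi t/L) + sin^2(pi (t + L/2)/L) = 1, so overlapping squared windows sum to 1
    g[:L] = np.sin(np.pi * np.arange(L) / L)
    t = np.arange(N)
    F = np.exp(2j * np.pi * np.outer(t, t) / N)    # F[t, m] = exp(2j*pi*m*t/N)
    blocks = [np.roll(g, n * a)[:, None] * F for n in range(N // a)]
    return np.hstack(blocks) / np.sqrt(N)

D = gabor_tight_frame(64, 16)                      # 64 x 512, redundancy 8
f = np.random.default_rng(0).standard_normal(64)
c1 = np.abs(D.conj().T @ f)                        # coefficient magnitudes of f
c2 = np.abs(D.conj().T @ np.roll(f, 8))            # same signal shifted by one hop (8 samples)
```

An explicit matrix is only practical for small N; at the 16k-sample sizes used here, an FFT-based implementation would be needed.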

Reconstruction with the Gabor dictionary uses the core optimization solver for the primal-dual interior point method provided by ℓ1-Magic [15].
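The primal-dual interior point solver named in the text is not reproduced here. As a swapped-in illustration of re-weighted ℓ1 recovery with a tight frame, the Python sketch below uses FISTA, an accelerated proximal-gradient method, on the synthesis-form problem min ½‖y − ADc‖² + λ Σ wᵢ|cᵢ|. Between passes it applies the reweighting rule wᵢ = 1/(|cᵢ| + ε) of Candès, Wakin and Boyd [12]. The identity-plus-DCT frame, the Gaussian measurement matrix, the sizes, λ and ε are all hypothetical choices. The experiments here use a Gabor frame instead.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    t = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * t + 1) * k / (2 * n))
    C[0, :] /= np.sqrt(2.0)
    return C

def soft(z, thr):
    """Soft thresholding: the proximal map of thr * |.| applied entrywise."""
    return np.sign(z) * np.maximum(np.abs(z) - thr, 0.0)

def reweighted_fista(y, M, lam=5e-3, outer=3, inner=500, eps=0.1):
    """Approximately minimize 0.5*||y - M c||^2 + lam * sum_i w_i |c_i| with FISTA,
    refreshing w_i = 1 / (|c_i| + eps) between passes (reweighted l1)."""
    L = np.linalg.norm(M, 2) ** 2               # Lipschitz constant of the data-term gradient
    c = np.zeros(M.shape[1])
    w = np.ones_like(c)
    for _ in range(outer):
        z, t = c.copy(), 1.0                    # restart momentum for each weighted problem
        for _ in range(inner):
            c_new = soft(z - M.T @ (M @ z - y) / L, lam * w / L)
            t_new = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t * t))
            z = c_new + ((t - 1.0) / t_new) * (c_new - c)
            c, t = c_new, t_new
        w = 1.0 / (np.abs(c) + eps)             # small coefficients are penalized more next pass
    return c

# Hypothetical test problem: a signal that is 4-sparse in D = [I, DCT^T] / sqrt(2).
rng = np.random.default_rng(0)
n, m = 128, 64
D = np.hstack([np.eye(n), dct_matrix(n).T]) / np.sqrt(2.0)
c0 = np.zeros(2 * n)
c0[[10, 70, n + 5, n + 20]] = [1.0, -1.0, 1.0, 0.8]
f = D @ c0
A = rng.standard_normal((m, n)) / np.sqrt(m)
y = A @ f                                       # noiseless measurements
f_hat = D @ reweighted_fista(y, A @ D)
rel_err = np.linalg.norm(f_hat - f) / np.linalg.norm(f)
```

FISTA is chosen only because it fits in a few lines. An interior point method solves the same kind of convex program to higher accuracy at a higher cost per iteration.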

Table 1. Sparsity and compressed sensing reconstruction errors of various signals (L: Linear, G: Gabor, TV: Total Variation, MSE: Mean Square Error).

Table 1 compares two measures for this analysis: the Mean Square Error (MSE) and sparsity. Measurements of normalized MSE are taken, where

The MSE of a Linear (L) reconstruction is used as a reference, shown in part (b) of each figure; it is calculated using the transpose of the measurement matrix as the pseudo-inverse. The Gabor (G) MSE is the error when that dictionary is used in the CS reconstruction, shown in part (c) of each figure. The TV MSE measures the error when TV weighted
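The linear reference can be reproduced in a few lines under an illustrative assumption: the measurement matrix has orthonormal rows. Then A Aᵀ = I, so the transpose is exactly the Moore-Penrose pseudo-inverse. The normalized MSE is also assumed to be ‖x − x̂‖²/‖x‖². The sizes and helper names in the Python sketch below are hypothetical.

```python
import numpy as np

def normalized_mse(x, x_hat):
    """Normalized MSE ||x - x_hat||^2 / ||x||^2 (assumed definition)."""
    return np.sum(np.abs(x - x_hat) ** 2) / np.sum(np.abs(x) ** 2)

def orthonormal_rows(m, n, seed=0):
    """Random m x n matrix with orthonormal rows, so A @ A.T = I and pinv(A) = A.T."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((n, m)))   # reduced QR: Q is n x m, orthonormal columns
    return Q.T

n, m = 256, 64
A = orthonormal_rows(m, n)
x = np.random.default_rng(1).standard_normal(n)
x_lin = A.T @ (A @ x)            # linear reconstruction: transpose used as pseudo-inverse
nmse = normalized_mse(x, x_lin)
```

With orthonormal rows, x_lin is the orthogonal projection of x onto the row space of A. For a fixed signal and a uniformly random row space, the expected normalized MSE is therefore 1 − m/n whatever the signal. This is one possible reading of the consistent linear MSE noted in the discussion, valid only under the orthonormal-rows assumption.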

Sparsity in the coefficient domain is measured as the number of coefficients whose magnitude is less than 1/256 of the maximum coefficient, divided by the total number of coefficients, and expressed as a percentage. Two sparsity measures are taken:

・ % G Sparse: sparsity of the Gabor transform coefficients of the fully sampled signal

・ % TV Sparse: sparsity of the TV calculation of the fully sampled signal
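The sparsity percentage described above can be written directly. The Python sketch below assumes that the TV coefficients are finite differences, taken along both axes for images, because the exact TV calculation is not specified. The helper names are hypothetical.

```python
import numpy as np

def percent_sparse(coeffs, ratio=1.0 / 256):
    """Percentage of coefficients whose magnitude is below ratio * max magnitude."""
    mag = np.abs(np.ravel(coeffs))
    return 100.0 * np.count_nonzero(mag < ratio * mag.max()) / mag.size

def tv_coefficients(x):
    """Finite differences used as TV coefficients (assumption): 1-D diff, or both image axes."""
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        return np.diff(x)
    return np.concatenate([np.diff(x, axis=0).ravel(), np.diff(x, axis=1).ravel()])

step = np.r_[np.zeros(50), np.ones(50)]        # piecewise-constant: sparse in TV
img = np.zeros((8, 8))
img[:4, :4] = 1.0                              # a square block: sparse in TV
sine = np.sin(2 * np.pi * 5 * np.arange(1000) / 1000)   # smooth oscillation: not TV-sparse
```

A piecewise-constant signal gives a high TV sparsity percentage, while a sinusoid gives a very low one. This matches the contrast reported for the pulse signals and the phantom.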

4. Results and Discussion

The goal is to show how well a sparse tight frame representation of various signals performs in CS reconstruction. The Gabor dictionary is analyzed as a sparsifying transform on non-sparse signals and images. A large range of reconstruction errors and sparsity levels is observed for different image types and signals. The use of the Gabor frame with a reference of TV weighted

According to these measurements, sparsity in the coefficient domain correlates with reconstruction success. For example, in test 1 the Gabor coefficients are over 99% sparse, and the Gabor reconstruction reduces the MSE by a factor of 36 compared with the linear reconstruction. In contrast, the same signal is not sparse at all in the TV domain, and TV minimization actually increases the MSE relative to the linear reconstruction (see Figure 1). It is important to note that the Gabor sparsity is calculated over a much larger, redundant set of coefficients than the non-redundant TV coefficients.

Figure 1. Signal reconstruction test 1, one long pulse (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

Figure 2. Reconstruction test 2, 20 short pulses (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

Figure 3. Reconstruction test 3, Shepp-Logan phantom (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

Figure 4. Reconstruction test 4, penguin (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

Figure 5. Signal reconstruction test 5, one long pulse + Shepp-Logan phantom (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

Figure 6. Image reconstruction test 5, one long pulse + Shepp-Logan phantom (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

Figure 7. Reconstruction test 6, T1 MRI, (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

Figure 8. Reconstruction test 7, MIP MRI, (a) original; (b) linear pseudo inverse; (c) CS Gabor; (d) CS TV.

In test 2, the complexity and sparsity are adjusted by adding further sinusoidal pulses, which may overlap. The number of pulses increases significantly, to 20, and the Gabor dictionary still represents the signal very sparsely compared with TV minimization (see Figure 2). As in test 1, the reconstruction MSE is reduced by a factor of 36.

In tests 3 and 4, images are chosen that are sparse in the TV domain but not in the Gabor domain. The TV reconstruction reduces the MSE to zero, whereas the Gabor reconstruction reduces it by only a factor of 2.6 and 2.4, respectively. The penguin image differs from the Shepp-Logan phantom in its background magnitude (see Figure 3 and Figure 4).

The signal in test 5 is a combination of a pulse from test 1 with the Shepp-Logan image from test 3. The same signal is plotted in the time domain for Figure 5 and in the image domain for Figure 6. Although both Gabor and TV CS reconstructions improve the result over the linear calculation, the error still remains quite large. It is noteworthy that the sparsity percentages are much lower for this case in both the TV and Gabor domains. This underscores the important connection between having a sparse representation and making a good reconstruction.

In the last experiments, tests 6 and 7, MRI images of the brain, either T1 weighted or TOF MIP, are used. Neither appears to be sparsely represented in the Gabor domain or in TV, and the MSE is poor for both reconstruction algorithms (see Figure 7 and Figure 8). It is important to note that under-sampling is performed in the image domain and not in the native MRI signal domain of k-space. However, this is still an equivalent comparison for cases where the under-sampled k-space produces incoherent artifacts, as in this experiment. A requirement of CS reconstruction is that artifacts due to sampling resemble uniform noise distributed evenly across the image.

It is also important to point out that the linear reconstructions, calculated with a pseudo-inverse, have a consistent MSE across all experiments (see L MSE in Table 1). This is not the case for the tight frame. These findings show remarkably good results for some periodic signals; however, the Gabor tight frame does not appear to be advantageous for the images investigated here. The ability of the sparsifying transform to produce a high percentage of sparsity contributes greatly to the reduction of reconstruction error. When using CS in these cases, it is vital to pick a dictionary that represents the signal of interest effectively and sparsely.

5. Conclusion

A new D-RIP sparsity bound constant for compressed sensing is proven using a transparent and concise approach, and practical numerical experiments are performed for this setting. The use of a Gabor tight frame in CS is contrasted with TV weighted

Acknowledgements

The author wishes to thank several people: Professor Guangwu Xu, academic supervisor, for his assistance with the proofs of the results in Section 2, suggestions on setting up the experiments, and help with the analysis; Bing Gao, for pointing out an error in an earlier version of the proof [1]; Dr. Kevin King of GE Healthcare, for providing the MRI images and suggestions for improvement; and Dr. Michael Wakin [12], who provided code useful for the experimental comparison.

Cite this paper

Christopher Baker (2016) Sparse Representation by Frames with Signal Analysis. Journal of Signal and Information Processing, 07, 39-48. doi: 10.4236/jsip.2016.71006

References

- 1. Baker, C.A. (2014) A Note on Sparsification by Frames. arXiv:1308.5249v2 [cs.IT].

- 2. Wu, F. and Li, D. (2015) The Restricted Isometry Property for Signal Recovery with Coherent Tight Frames. Bulletin of the Australian Mathematical Society, 92, 496-507. http://dx.doi.org/10.1017/S0004972715000933

- 3. Candès, E.J., Romberg, J. and Tao, T. (2006) Stable Signal Recovery from Incomplete and Inaccurate Measurements. Communications on Pure and Applied Mathematics, 59, 1207-1223. http://dx.doi.org/10.1002/cpa.20124

- 4. Candès, E.J. and Tao, T. (2005) Decoding by Linear Programming. IEEE Transactions on Information Theory, 51, 4203-4215. http://dx.doi.org/10.1109/TIT.2005.858979

- 5. Cai, T., Wang, L. and Xu, G. (2010) New Bounds for Restricted Isometry Constants. IEEE Transactions on Information Theory, 56, 4388-4394. http://dx.doi.org/10.1109/TIT.2010.2054730

- 6. Candès, E.J. (2008) The Restricted Isometry Property and Its Implications for Compressed Sensing. Comptes Rendus Mathematique, 346, 589-592. http://dx.doi.org/10.1016/j.crma.2008.03.014

- 7. Cai, T. and Zhang, A. (2013) Sharp RIP Bound for Sparse Signal and Low-Rank Matrix Recovery. Applied and Computational Harmonic Analysis, 35, 74-93. http://dx.doi.org/10.1016/j.acha.2012.07.010

- 8. Cai, T. and Zhang, A. (2014) Sparse Representation of a Polytope and Recovery of Sparse Signals and Low-Rank Matrices. IEEE Transactions on Information Theory, 60, 122-132. http://dx.doi.org/10.1109/TIT.2013.2288639

- 9. Candès, E.J., Eldar, Y., Needell, D. and Randall, P. (2010) Compressed Sensing with Coherent and Redundant Dictionaries. Applied and Computational Harmonic Analysis, 31, 59-73. http://dx.doi.org/10.1016/j.acha.2010.10.002

- 10. Lin, J., Li, S. and Shen, Y. (2013) New Bounds for Restricted Isometry Constants with Coherent Tight Frames. IEEE Transactions on Signal Processing, 61, 611-621. http://dx.doi.org/10.1109/TSP.2012.2226171

- 11. Xu, G. and Xu, Z. (2013) On the ℓ1-Norm Invariant Convex k-Sparse Decomposition of Signals. Journal of Operations Research Society of China, 1, 537-541. http://dx.doi.org/10.1007/s40305-013-0030-y

- 12. Candès, E.J., Wakin, M.B. and Boyd, S. (2008) Enhancing Sparsity by Reweighted ℓ1 Minimization. Journal of Fourier Analysis and Applications, 14, 877-905. http://dx.doi.org/10.1007/s00041-008-9045-x

- 13. Cai, T., Xu, G. and Zhang, J. (2009) On Recovery of Sparse Signals via ℓ1 Minimization. IEEE Transactions on Information Theory, 55, 3388-3397. http://dx.doi.org/10.1109/TIT.2009.2021377

- 14. Baker, C.A., King, K., Liang, D. and Ying, L. (2011) Translational-Invariant Dictionaries for Compressed Sensing in Magnetic Resonance Imaging. 2011 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, 1602-1605. http://dx.doi.org/10.1109/isbi.2011.5872709

- 15. Candès, E.J. and Romberg, J. (2005) ℓ1-MAGIC: Recovery of Sparse Signals via Convex Programming. http://users.ece.gatech.edu/justin/l1magic/downloads/l1magic.pdf

NOTES

¹This was proven in 2014 [1]; the result has since been improved upon [2].