American Journal of Computational Mathematics

Vol.04 No.04(2014), Article ID:49504,12 pages

10.4236/ajcm.2014.44028

On Generalized High Order Derivatives of Nonsmooth Functions

Samaneh Soradi Zeid, Ali Vahidian Kamyad

Department of Mathematics, Ferdowsi University of Mashhad, Mashhad, Iran

Email: s_soradi@yahoo.com, avkamyad@yahoo.com

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 3 July 2014; revised 5 August 2014; accepted 12 August 2014

ABSTRACT

In this paper, we propose an extended definition to derive, simultaneously, the first-, second- and higher-order generalized derivatives of non-smooth functions, where the functions involved are Riemann integrable but not necessarily locally Lipschitz or continuous. Specifically, we define a functional optimization problem for smooth functions whose optimal solutions are the first and second derivatives of these functions on a domain. Applying this functional optimization problem to non-smooth functions then yields the generalized first derivative (GFD) and the generalized second derivative (GSD). The optimization problem is approximated by a linear programming problem, whose solution provides these derivatives in a simple way. We extend this approach to obtain generalized high order derivatives (GHODs) of non-smooth functions simultaneously. Finally, some numerical examples are presented to demonstrate the efficiency of our approach.

Keywords:

Generalized Derivative, Smooth and Nonsmooth Functions, Nonsmooth Optimization Problem, Linear Programming

1. Introduction

A function is smooth (of the first order) if it is differentiable and its derivatives are continuous. The nth-order smoothness is defined analogously, that is, a function is nth-order smooth if its $(n-1)$th-order derivatives are smooth. Thus, infinite smoothness refers to continuous derivatives of all orders. From this perspective a non-smooth function only has a negative description: it lacks some degree of the properties traditionally relied upon in analysis. One could get the impression that non-smooth optimization is a subject dedicated to overcoming handicaps that arise in miscellaneous circumstances where the mathematical structure is poorer than one would like, but this is far from the truth. Instead, non-smooth optimization typically deals with highly structured problems, but problems which arise differently, or are modeled or cast differently, from those for which many of the mainline numerical methods, involving gradient vectors and Hessian matrices, have been designed. Moreover, non-smooth analysis, which refers to differential analysis in the absence of differentiability and can be regarded as a subfield of nonlinear analysis, has grown rapidly in the past decades. In fact, in recent years, non-smooth analysis has come to play a vital role in functional analysis, optimization, mechanics, differential equations, etc.

Among those who have participated in its development are Clarke [1], Ioffe [2], Mordukhovich [3] and Rockafellar [4], but many more have contributed as well. During the early 1960s there was a growing realization that a large number of optimization problems arising in applications involved the minimization of non-differentiable functions. One of the important areas where such problems appear is optimal control. The subject of non-smooth analysis arose out of the need to develop a theory to deal with the minimization of non-smooth functions.

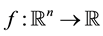

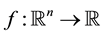

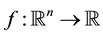

Recent research on non-smooth analysis mainly focuses on Lipschitz functions. Properties of the generalized derivatives of Lipschitz functions are summarized in the following results of Clarke, 1983 [1]. A function $f$ is said to be Lipschitz on a set U if there is a positive real number K such that

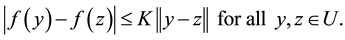

A function f that is Lipschitz in a neighborhood of a point x is not necessarily differentiable at x, but for locally Lipschitz functions the following expressions exist:

$$f^{+}(x; v) = \limsup_{t \downarrow 0} \frac{f(x + tv) - f(x)}{t}$$

and

$$f^{-}(x; v) = \liminf_{t \downarrow 0} \frac{f(x + tv) - f(x)}{t}.$$

The symbols $f^{+}(x; v)$ and $f^{-}(x; v)$ denote the upper Dini and lower Dini directional derivatives of f at x in the direction v. Hence, we may consider either of these derivatives as a generalized derivative for locally Lipschitz functions. However, they suffer from an important drawback. Both of them are in general not convex in the direction, and simple examples (which are left to the reader) can be constructed to demonstrate this. Thus they lack the most important property of the directional derivative of a convex function. Clarke observed that if, in the definition of the upper Dini derivative, one also moves the point x, i.e., moves through points that converge to x, then one can generate a generalized directional derivative which is sublinear in the direction. Thus we arrive at the definition of the Clarke generalized directional derivative.
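As one instance of the simple examples mentioned above, consider $f(x) = -|x|$ on $\mathbb{R}$ at the point $x = 0$. The notation $f^{+}(x; v)$ and $f^{-}(x; v)$ for the upper and lower Dini derivatives is an assumption made here for illustration. A short computation suggests that both Dini derivatives equal $-|v|$, which is concave rather than convex in $v$, whereas moving the base point yields the sublinear function $|v|$:

```latex
% Dini derivatives of f(x) = -|x| at x = 0 (difference quotient is constant in t > 0)
\frac{f(0 + tv) - f(0)}{t} = \frac{-|tv|}{t} = -|v|
\quad\Longrightarrow\quad
f^{+}(0; v) = f^{-}(0; v) = -|v|.

% Moving the base point y -> 0: the bound |y| - |y + tv| <= t|v| is attained at y = -tv
\limsup_{y \to 0,\; t \downarrow 0} \frac{f(y + tv) - f(y)}{t}
= \limsup_{y \to 0,\; t \downarrow 0} \frac{|y| - |y + tv|}{t} = |v|.
```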

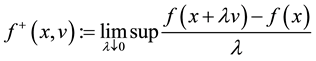

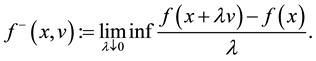

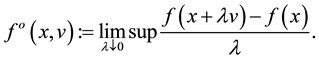

Definition 1.1. Let $f:\mathbb{R}^n \to \mathbb{R}$ be a locally Lipschitz function. Then the Clarke generalized directional derivative of f at x in the direction v is given by

$$f^{\circ}(x; v) = \limsup_{y \to x,\; t \downarrow 0} \frac{f(y + tv) - f(y)}{t}.$$

Clarke also defined the following notion of generalized gradient or subdifferential [1] .

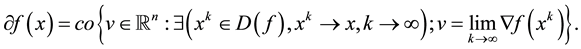

Definition 1.2. Let $f:\mathbb{R}^n \to \mathbb{R}$ be locally Lipschitz. Then the Clarke generalized gradient or the subdifferential of f at x, denoted by $\partial f(x)$, is given by

$$\partial f(x) = \operatorname{co}\left\{ \lim_{i \to \infty} \nabla f(x_i) : x_i \to x,\; x_i \in D_f \right\}.$$

Here $\nabla f$ denotes the gradient of f; $D_f$ denotes the set on which f is differentiable and co denotes the convex hull of a set. It is shown in [5] that the mapping $\partial f$ is upper semicontinuous and bounded on every bounded set.
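To make the definition of the generalized gradient concrete in one dimension, the following is a minimal sketch that estimates it as the interval spanned by slopes sampled near the point. The function name `clarke_interval_1d` and the parameters `radius`, `samples` and `h` are hypothetical choices made for illustration, and central differences stand in for exact gradients, so this is a heuristic and not a method taken from the paper.

```python
def clarke_interval_1d(f, x, radius=1e-3, samples=201, h=1e-7):
    """Heuristic estimate of the Clarke generalized gradient of f at x (1D).

    Samples points y in [x - radius, x + radius], approximates f'(y) by a
    central difference, and returns the convex hull (min, max) of the slopes.
    All numerical parameters are illustrative assumptions.
    """
    slopes = []
    for i in range(samples):
        y = x - radius + 2.0 * radius * i / (samples - 1)
        slopes.append((f(y + h) - f(y - h)) / (2.0 * h))
    return min(slopes), max(slopes)


# f(x) = |x| is locally Lipschitz but not differentiable at 0.
lo, hi = clarke_interval_1d(abs, 0.0)
print("estimate at 0:", lo, hi)

# Away from the kink the estimate collapses to (almost) a single slope.
lo1, hi1 = clarke_interval_1d(abs, 1.0)
print("estimate at 1:", lo1, hi1)
```

For $f(x) = |x|$ the sampled slopes near 0 are close to $-1$ on the left and $+1$ on the right, so the hull approximates the interval $[-1, 1]$, which is the known generalized gradient of the absolute value at the origin.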

However, one can realize that calculating the Clarke subdifferential from first principles is not always a simple task. In this manuscript, we develop the definition for GFD of non-smooth functions which will be seen as a refinement of what is given in [6] , to derive GHODs of non-smooth functions, simultaneously.

The paper is organized as follows. Section 2 presents the basic definitions and facts needed in what follows. Our results are then stated and proved in Section 3. We develop the definition of the GFD and GSD of non-smooth functions, which can be seen as a refinement of what is given in Theorems 2.1 and 2.7, to derive GHODs of non-smooth functions simultaneously (Theorem 3.5). Section 4 presents some illustrative examples of the results of the paper.

2. GFD and GSD of Nonsmooth Functions

In this section, we present definitions and results concerning the GFD and GSD, which are needed in the remainder of this paper. This approach uses some tools from nonsmooth analysis, especially generalized derivatives. Since the early 1960s, many different generalized derivatives have been proposed, for instance by Rockafellar [4] [7], Clarke [1], Clarke et al. [8] and Mordukhovich [3] [9]-[12]. These works and their results involve some restrictions, for example:

1) The function $f$ must be locally Lipschitz or convex.

2) We must know that the function

3) The generalized derivatives of

4) The directional derivative is used to introduce generalized derivative.

5) The concepts limsup and liminf are used to obtain the generalized derivative, and calculating them is usually hard and complicated.

6) To obtain the second derivative, the gradient of the function must be computed, which is hard in some cases.

It is commonly recognized that these generalized derivatives (GDs) are not practical for solving applied problems. We mainly use the new GD of Kamyad et al. [6] for nonsmooth functions. This kind of GD is particularly helpful and practical when dealing with nonsmooth continuous and discontinuous functions, and it does not have the above restrictions and difficulties. Let

Given a function

where

and

Theorem 2.1. (Kamyad et al., 2011) Let

Definition 2.2. (Kamyad et al., 2011) Let

Remark 2.3. Note that if

In what follows, the problem (1) is approximated by the following finite dimensional problem (Kamyad et al. [6]):

where N is a given large number. We assume that

for all

By trapezoidal and midpoint integration rules, the last problem can be written as the following problem in which
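The two quadrature rules named here can be sketched as follows. This is a generic illustration of the composite trapezoidal and midpoint rules on a uniform grid, not the paper's discretized problem; the function names `trapezoid` and `midpoint` and the test integrand are assumptions introduced for the example.

```python
def trapezoid(g, a, b, n):
    """Composite trapezoidal rule for the integral of g over [a, b] with n subintervals."""
    h = (b - a) / n
    interior = sum(g(a + i * h) for i in range(1, n))
    return h * (0.5 * g(a) + interior + 0.5 * g(b))


def midpoint(g, a, b, n):
    """Composite midpoint rule for the integral of g over [a, b] with n subintervals."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


def kink(x):
    # Nonsmooth integrand with a kink at x = 0.5; its exact integral over [0, 1] is 0.25.
    return abs(x - 0.5)


t_val = trapezoid(kink, 0.0, 1.0, 10)
m_val = midpoint(kink, 0.0, 1.0, 10)
print(t_val, m_val)
```

Because the integrand is piecewise linear and its kink falls on a grid node when `n` is even, both rules reproduce the exact value 0.25 up to rounding in this particular case; for general integrands both rules have second-order accuracy in the step size.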

Lemma 2.4. Let the pairs

Then

where I is a compact set.

Proof. See [13] .
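Conversions of an objective containing absolute values into a linear program typically rest on the following standard device. It is stated here in generic notation: the affine residuals $r_i(x)$, the feasible polyhedron $X$ and the auxiliary variables $z_i$ are assumptions for illustration and are not the paper's symbols.

```latex
% Nonsmooth problem: sum of absolute values of affine residuals
\min_{x \in X} \; \sum_{i=1}^{m} \left| r_i(x) \right|

% Equivalent linear program with auxiliary variables z_i
\min_{x \in X,\; z \in \mathbb{R}^m} \; \sum_{i=1}^{m} z_i
\quad \text{subject to} \quad
-z_i \le r_i(x) \le z_i, \qquad i = 1, \dots, m.

% At any optimal solution (x^*, z^*) one has z_i^* = |r_i(x^*)|,
% since lowering z_i to |r_i(x^*)| stays feasible and does not increase the objective.
```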

Now, by Lemma 2.4, the problem (3) can be converted to the following equivalent LP problem (see [14] [15] )

where

and

Remark 2.5. Note that

chosen as arbitrary numbers for

Remark 2.6. Let

Based on the GFD of nonsmooth functions, Kamyad et al. [6] introduced an optimization problem, similar to the problem (1), whose optimal solution is the second order derivative of a smooth function on an interval. To attain this goal they define

In addition, suppose

where

Theorem 2.7. Let

Proof. See [16] .

Definition 2.8. Let

Note that if

where

Remark 2.9. Let

3. Main Approach

In this section, we propose a method based on GD of Kamyad et al. to obtain simultaneously GFD and GSD for smooth and nonsmooth functions in which the involved functions are Riemann integrable.

Theorem 3.1. Let

for any

Proof. By Theorem 2.2 in [6] we have

Since by assumption

and

Again by using integration by parts and rule for

where

tion

By Lemma 2.1 of [6] it follows that

Corollary 3.2. Suppose that the conditions of Theorem 3.1 hold. Then

Let

Then

Theorem 3.3. Let

for any

Theorem 3.4. Let

for all

Proof. The result is clear by Theorem 2.4 of [6] and the integral properties.
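The integration by parts invoked in the proofs of this section can be checked numerically on a simple nonsmooth function. The sketch below assumes the interval $[0, 1]$, the function $f(x) = |x - 0.5|$ and test functions $v_k(x) = \sin(k\pi x)$ that vanish at the end points; these choices, and the names `residual` and `midpoint_integral`, are illustrative assumptions and not the paper's functional. For a candidate derivative $g$, the quantity $\int_0^1 (v_k g + v_k' f)\,dx$ should vanish when $g$ agrees with the derivative of $f$ almost everywhere.

```python
import math


def midpoint_integral(g, a, b, n=2000):
    """Composite midpoint rule; n is even so that no node hits the kink at 0.5."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


def f(x):
    # Nonsmooth test function with a kink at x = 0.5.
    return abs(x - 0.5)


def sign_candidate(x):
    # Derivative of f away from the kink.
    return -1.0 if x < 0.5 else 1.0


def zero_candidate(x):
    # Deliberately wrong candidate derivative.
    return 0.0


def residual(k, g):
    """Integral over [0, 1] of v_k * g + v_k' * f with v_k(x) = sin(k*pi*x)."""
    def integrand(x):
        v = math.sin(k * math.pi * x)
        dv = k * math.pi * math.cos(k * math.pi * x)
        return v * g(x) + dv * f(x)
    return midpoint_integral(integrand, 0.0, 1.0)


for k in (1, 2, 3):
    print(k, residual(k, sign_candidate), residual(k, zero_candidate))
```

The residual is close to zero for the piecewise-constant candidate at every `k`, while the zero candidate leaves a clearly nonzero residual for `k = 2`, which is the kind of discrepancy a minimization over candidates can penalize.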

Let

where

Theorem 3.5. Let

Proof. The result follows from Theorems 3.3, 3.4.

Definition 3.6. Let

Definition 3.7. Let

Note that if

We approximate the obtained generalized derivatives of nonsmooth function

where the coefficients

Note that, we can approximate the generalized derivatives of nonsmooth function

and

Hence, in what follows, by using the methods of [6], we will convert the problem (8) to the corresponding finite dimensional problem,

where N is a given large number. Let

and

problem 9 can be written as the following problem:

Now, problem (9) can be converted to the following equivalent linear programming problem (see [14] [15] ):

where

for

Remark 3.8. Let

Remark 3.9. By looking at the definition of EGD, for a function as

4. Numerical Results

In this section, we find the GFD and GSD of smooth and nonsmooth functions in several examples using problem (11). Here we assume that

Example 4.1. Consider the nonsmooth function

Example 4.2. Consider the smooth function

Figure 1.

Figure 2. GFD of Example 4.1 by using the problem (4).

Figure 3.

Example 4.3. Consider the nonsmooth function

Example 4.4. Consider the nonsmooth function

Example 4.5. Consider function

in points

Figure 4. GSD of Example 4.2 by using the problem (6).

Figure 5. EGD of Example 4.3 by using the problem (11).

Figure 6. EGD of Example 4.4 by using the problem (11).

Figure 7. EGD of Example 4.5 by using the problem (11).

5. Conclusions

We propose a strategy for approximating generalized first and second derivatives of a nonsmooth function. The assumption placed on the function of interest differs from what is usually found in the literature, since the function is not required to be locally Lipschitz or convex. These generalized derivatives are computed by solving a linear programming problem, whose solution provides the first and second order derivatives simultaneously. Besides simplicity and practicality, the advantages of our generalized derivatives over other approaches are as follows:

1) The generalized derivative of a non-smooth function obtained by our approach does not depend on the points of non-smoothness of the function. Thus we can use this GD in the many cases where the points of non-differentiability of the function are unknown.

2) The generalized derivative of a non-smooth function obtained by our approach gives a good global approximate derivative over the whole domain of the function, whereas in the other approaches the GDs are calculated at one given point.

3) The generalized derivative by our approach is defined for non-smooth piecewise continuous functions, whereas the other approaches are defined usually for locally Lipschitz or convex functions.

4) Our approach simultaneously gives the first and second derivatives of a nonsmooth function; moreover, the second derivative is obtained directly from the original function, without the need to compute the first derivative.

5) The generalized derivative obtained by our approach is valid for functions that are only integrable; continuity and local Lipschitz continuity need not be assumed.

Indeed, we show that if

nishes at the end points of the interval, then

continuity need not be assumed: if

ferentiable, or smooth, functions

Cite this paper

Soradi Zeid, S. and Vahidian Kamyad, A. (2014) On Generalized High Order Derivatives of Nonsmooth Functions. American Journal of Computational Mathematics, 4, 317-328. doi: 10.4236/ajcm.2014.44028

References

- 1. Clarke, F.H. (1983) Optimization and Non-Smooth Analysis. Wiley, New York.

- 2. Ioffe, A.D. (1993) A Lagrange Multiplier Rule with Small Convex-Valued Subdifferentials for Nonsmooth Problems of Mathematical Programming Involving Equality and Nonfunctional Constraints. Mathematical Programming, 588, 137-145. http://dx.doi.org/10.1007/BF01581262

- 3. Mordukhovich, B.S. (1994) Generalized Differential Calculus for Nonsmooth and Set-Valued Mappings. Journal of Mathematical Analysis and Applications, 183, 250-288. http://dx.doi.org/10.1006/jmaa.1994.1144

- 4. Rockafellar, T. (1994) Nonsmooth Optimization, Mathematical Programming: State of the Art 1994. University of Michigan Press, Ann Arbor, 248-258.

- 5. Mahdavi-Amiri, N. and Yousefpour, R. (2011) An Effective Optimization Algorithm for Locally Nonconvex Lipschitz Functions Based on Mollifier Subgradients. Bulletin of the Iranian Mathematical Society, 37, 171-198.

- 6. Kamyad, A.V., Noori Skandari, M.H. and Erfanian, H.R. (2011) A New Definition for Generalized First Derivative of Nonsmooth Functions. Applied Mathematics, 2, 1252-1257. http://dx.doi.org/10.4236/am.2011.210174

- 7. Rockafellar, R.T. and Wets, R.J. (1997) Variational Analysis. Springer, New York.

- 8. Clarke, F.H., Ledyaev, Yu.S., Stern, R.J. and Wolenski, P.R. (1998) Nonsmooth Analysis and Control Theory, Graduate Texts in Mathematics, Vol. 178. Springer-Verlag, New York.

- 9. Mordukhovich, B. (1988) Approximation Methods in Problems of Optimization and Control. Nauka, Moscow.

- 10. Mordukhovich, B. (1993) Complete Characterizations of Openness, Metric Regularity, and Lipschitzian Properties of Multifunctions. Transactions of the American Mathematical Society, 340, 1-35. http://dx.doi.org/10.1090/S0002-9947-1993-1156300-4

- 11. Mordukhovich, B. (2006) Variational Analysis and Generalized Differentiation, Vol. 1-2. Springer, New York.

- 12. Mordukhovich, B., Treiman, J.S. and Zhu, Q.J. (2003) An Extended Extremal Principle with Applications to Multi-Objective Optimization. SIAM Journal on Optimization, 14, 359-379. http://dx.doi.org/10.1137/S1052623402414701

- 13. Erfanian, H., Noori Skandari, M.H. and Kamyad, A.V. (2012) A Numerical Approach for Nonsmooth Ordinary Differential Equations. Journal of Vibration and Control.

- 14. Bazaraa, M.S., Jarvis, J.J. and Sherali, H.D. (1990) Linear Programming. Wiley and Sons, New York.

- 15. Bazaraa, M.S., Sherali, H.D. and Shetty, C.M. (2006) Nonlinear Programming: Theory and Application. Wiley and Sons, New York. http://dx.doi.org/10.1002/0471787779

- 16. Erfanian, H., Noori Skandari, M.H. and Kamyad, A.V. (2013) A New Approach for the Generalized First Derivative and Extension It to the Generalized Second Derivative of Nonsmooth Functions. International Journal of Intelligent Systems and Applications, 4, 100-107. http://dx.doi.org/10.5815/ijisa.2013.04.10

- 17. Stade, E. (2005) Fourier Analysis. Wiley, New York. http://dx.doi.org/10.1002/9781118165508