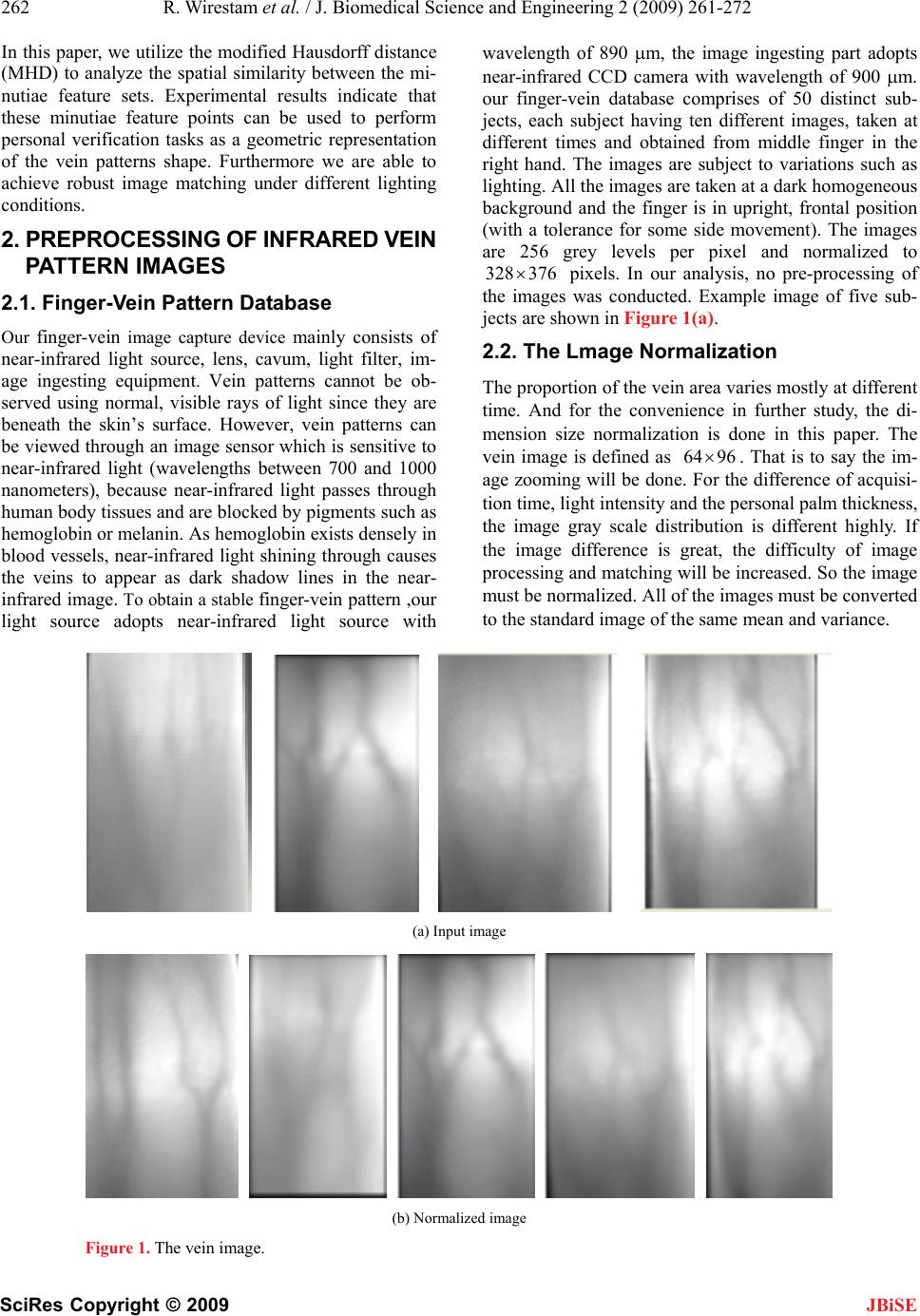

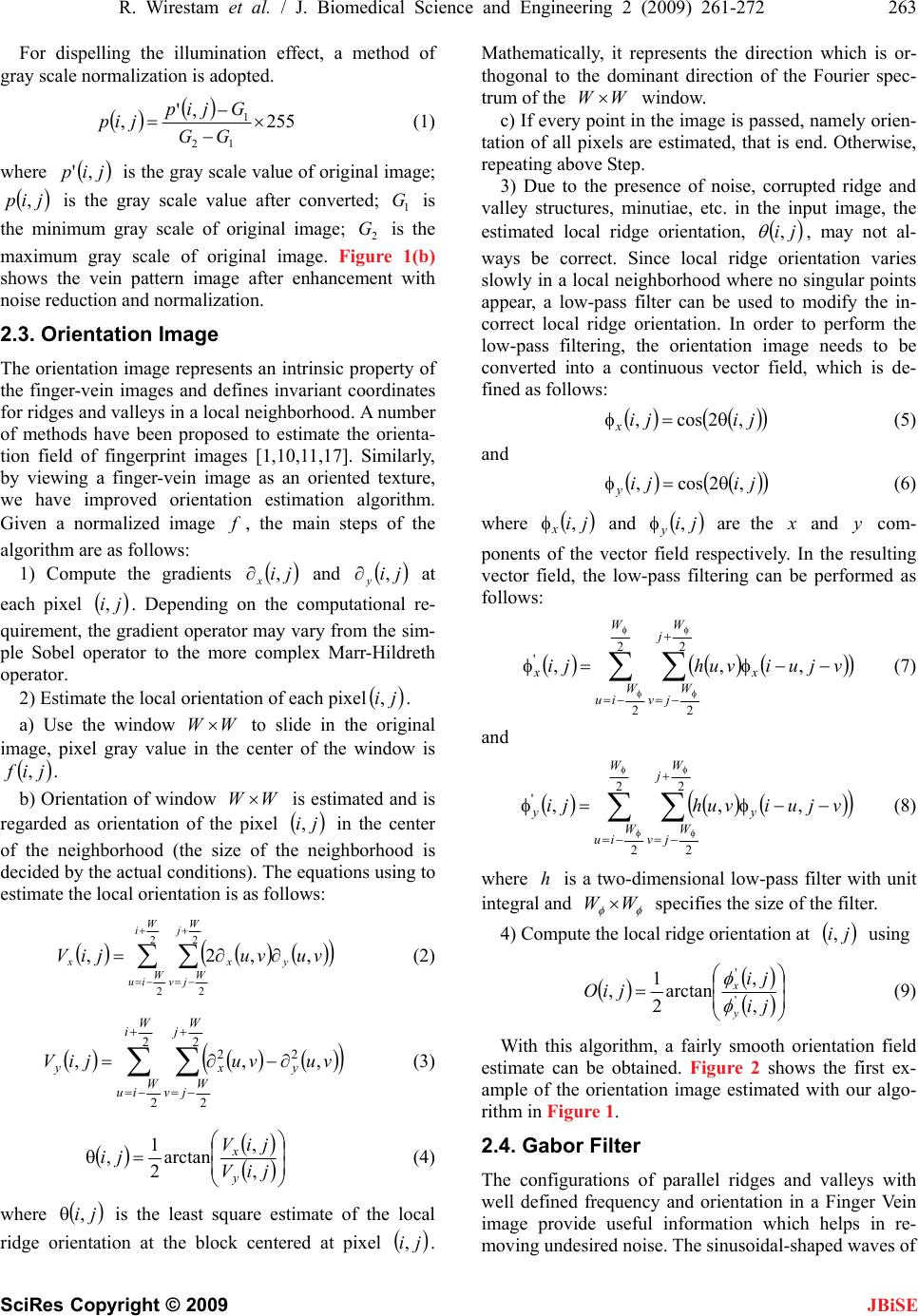

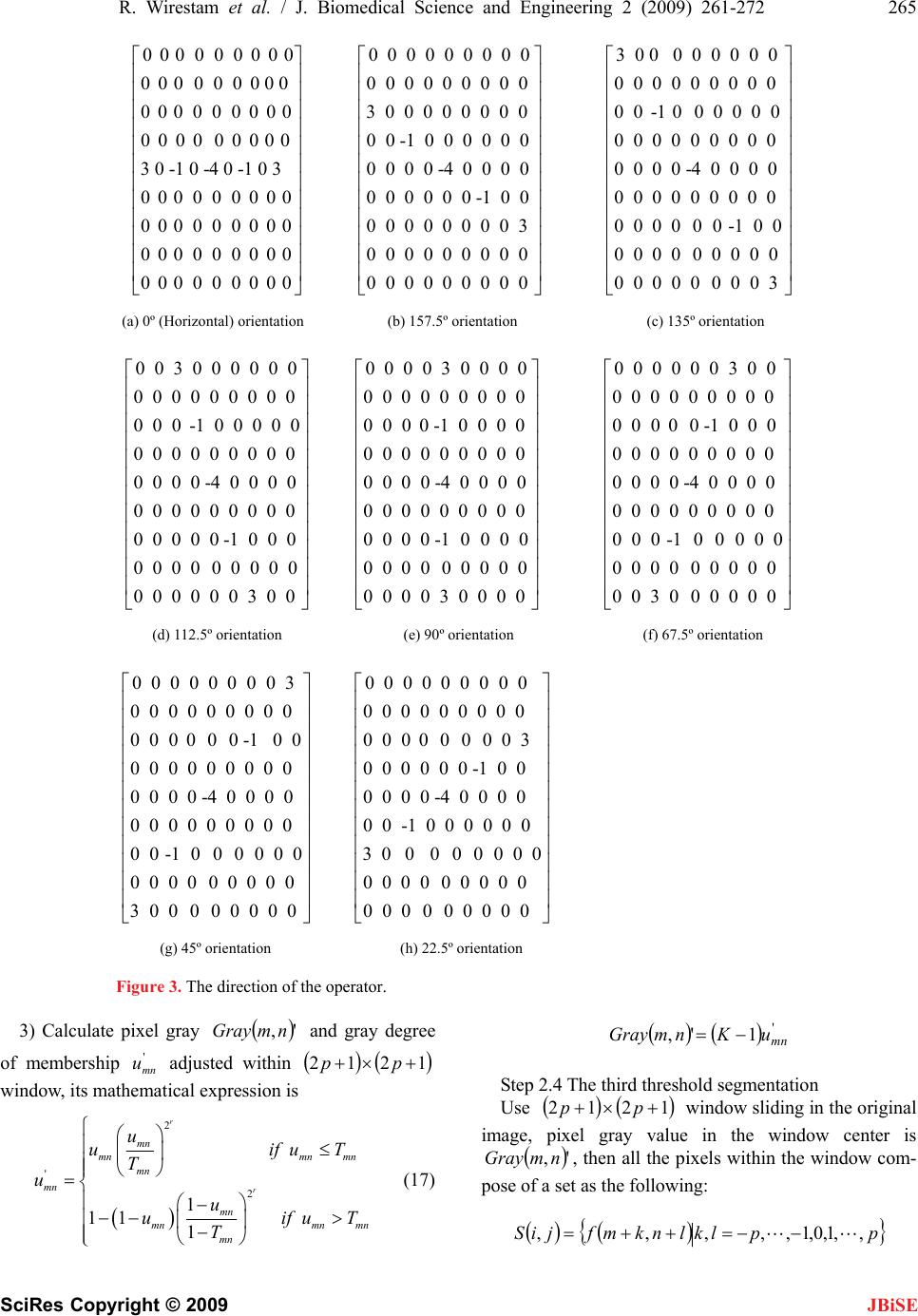

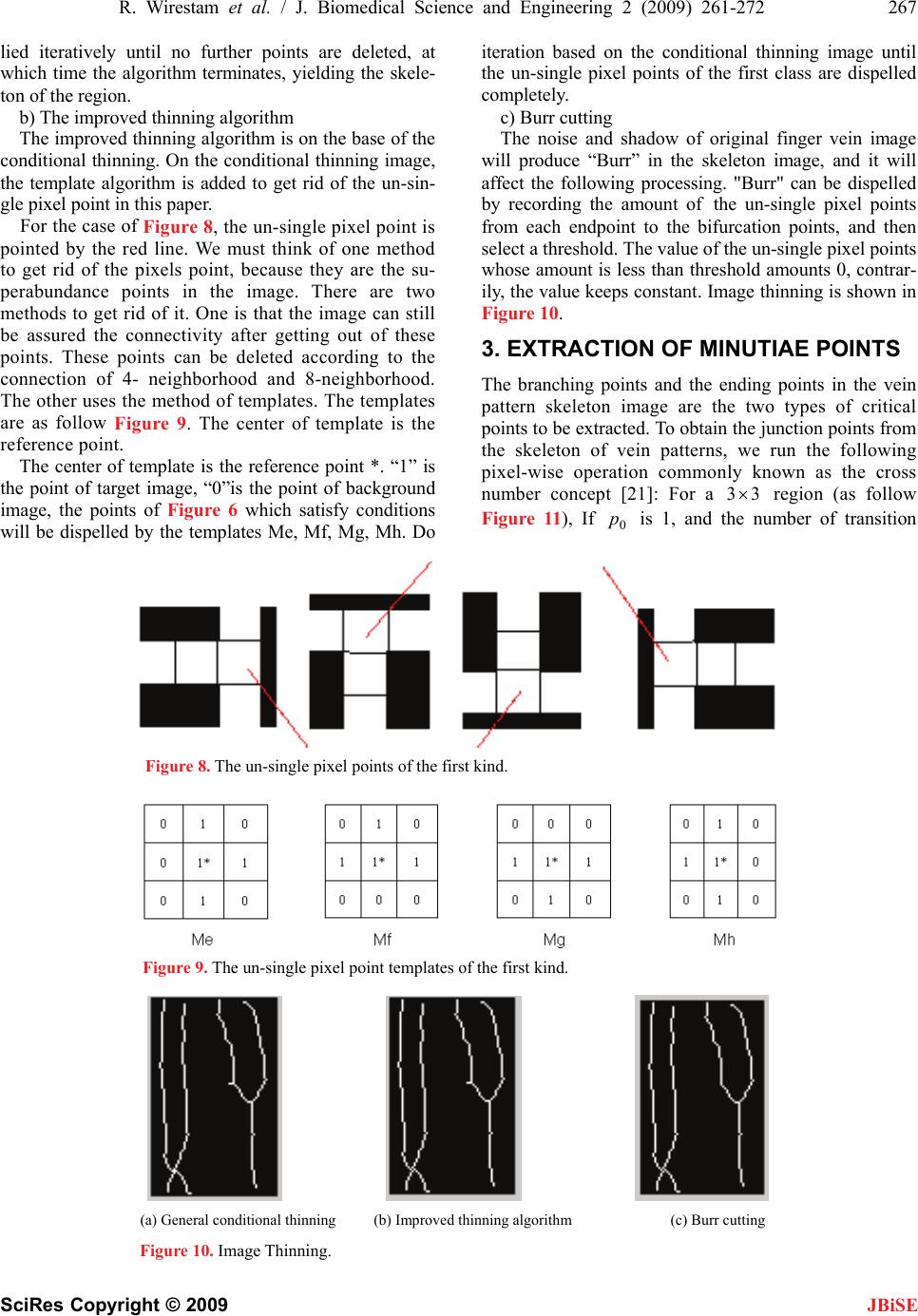

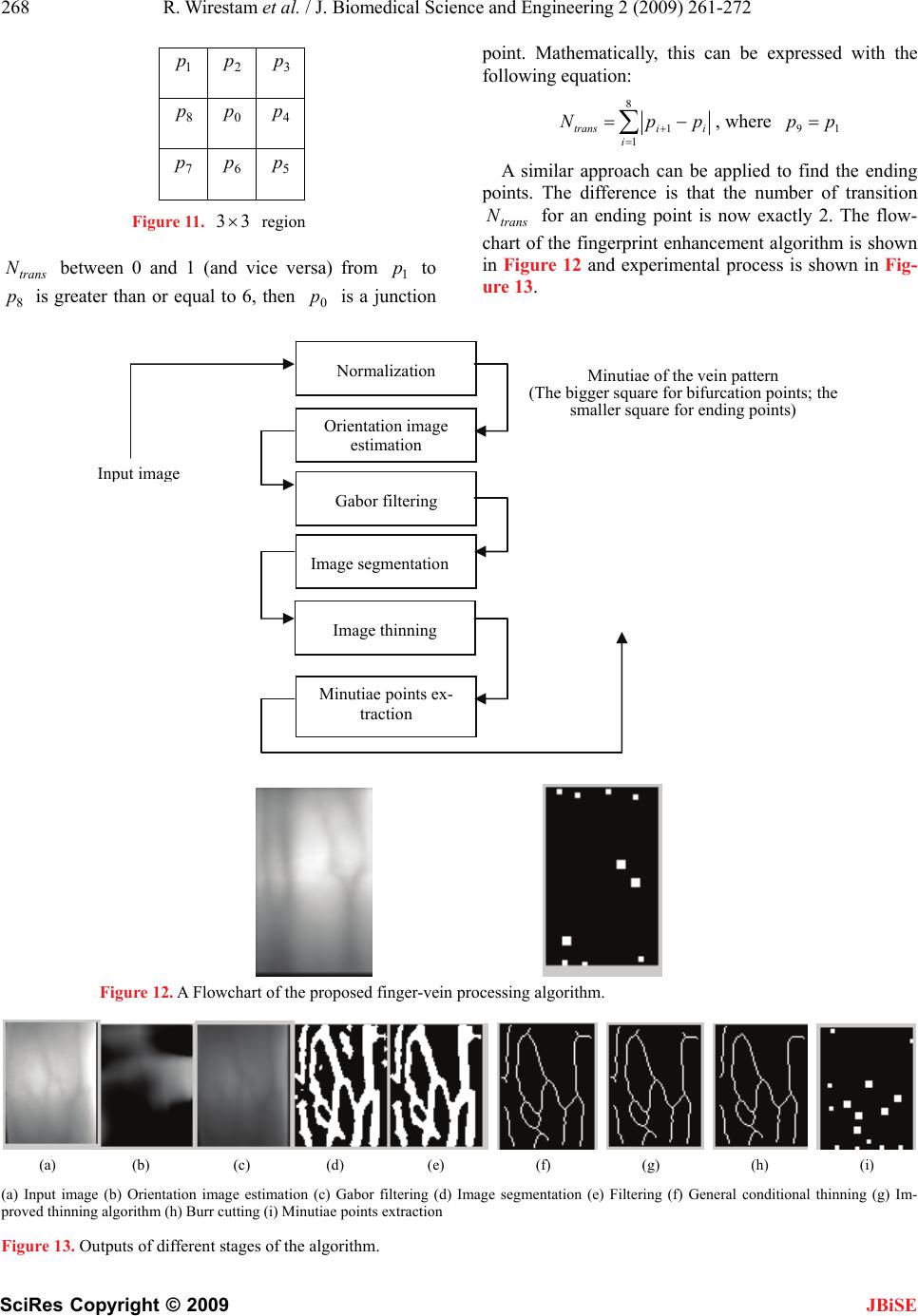

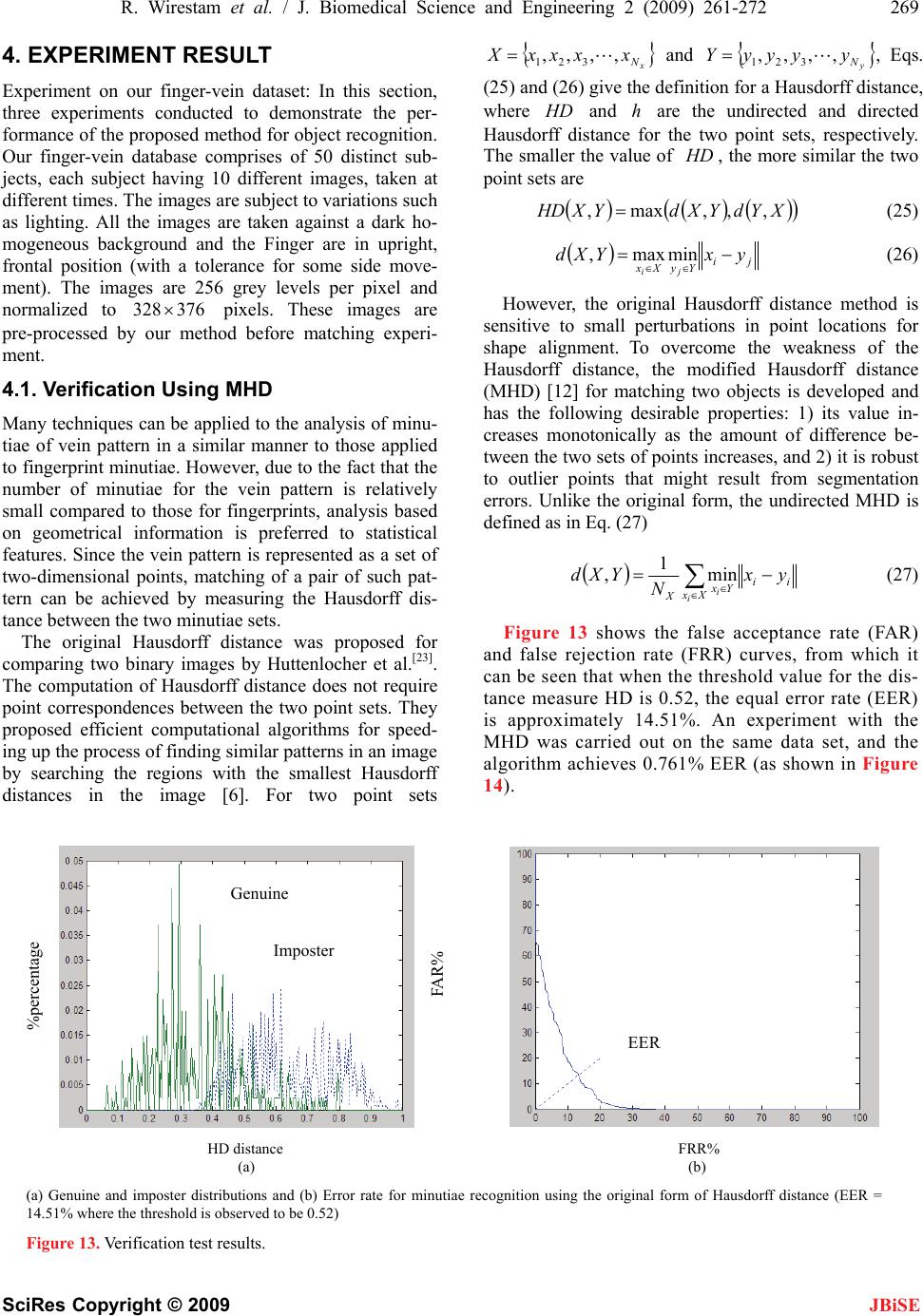

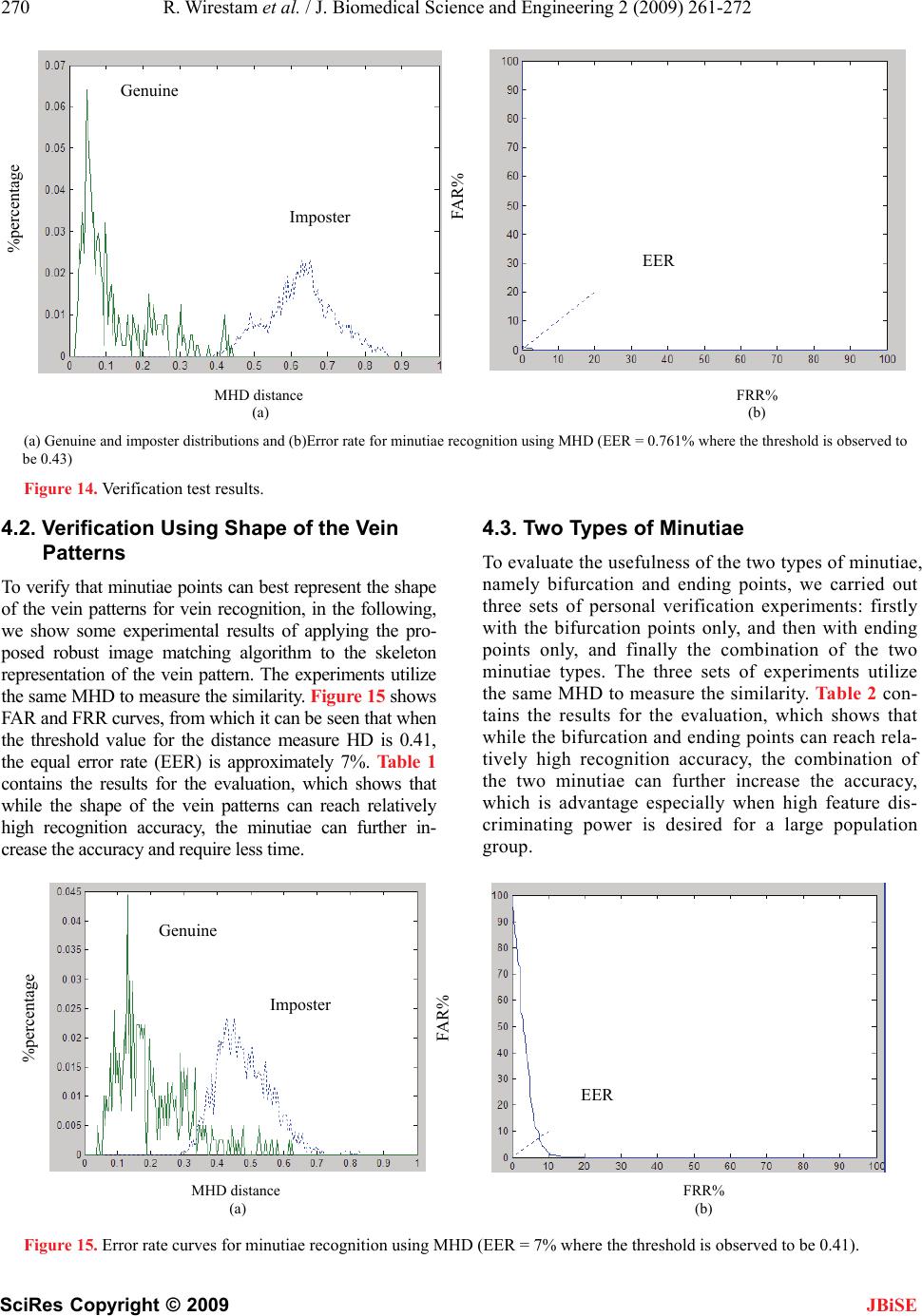

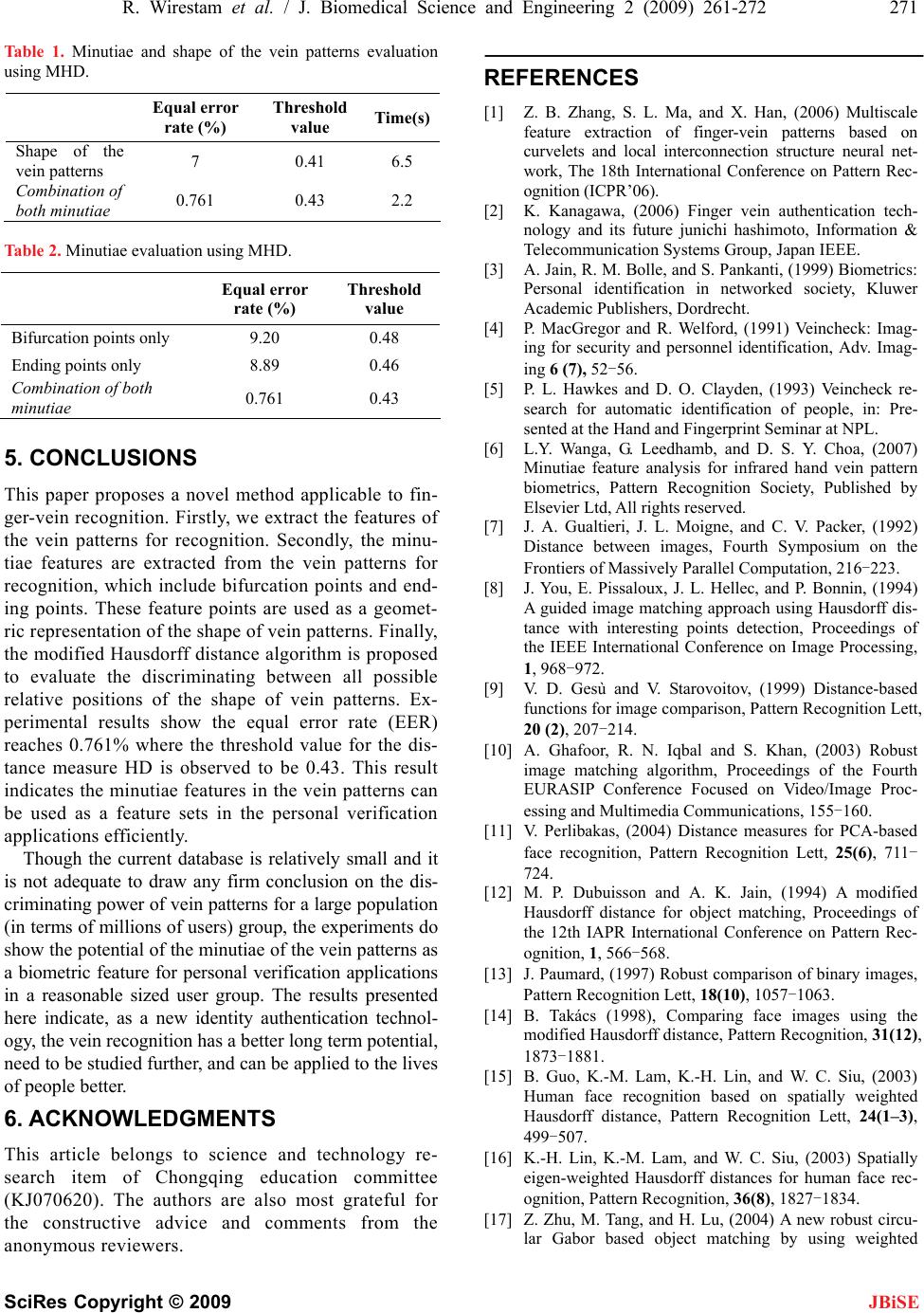

J. Biomedical Science and Engineering, 2009, 2, 261-272
doi: 10.4236/jbise.2009.24040
Published Online August 2009 (http://www.SciRP.org/journal/jbise/ JBiSE).

Finger-vein image recognition combining modified Hausdorff distance with minutiae feature matching

Cheng-Bo Yu, Hua-Feng Qin, Lian Zhang, Yan-Zhe Cui
Chongqing Institute of Technology, Chongqing 400050, China
Email: yuchengbo@cqit.edu.cn
Received 6 January 2009; revised 22 February 2009; accepted 26 February 2009.

ABSTRACT

In this paper, we propose a novel method for finger-vein recognition. We first extract the vein patterns for recognition. Then, the minutiae features, which include bifurcation points and ending points, are extracted from these vein patterns. These feature points are used as a geometric representation of the shape of the vein patterns. Finally, the modified Hausdorff distance is used to evaluate the discriminating ability over all possible relative positions of the vein pattern shapes. This distance has been widely used for comparing point sets or edge maps because it does not require point correspondence. Experimental results show that these minutiae feature points can be used to perform personal verification tasks as a geometric representation of the shape of the vein patterns. Furthermore, the developed method achieves robust image matching under different lighting conditions.

Keywords: Biometrics; Finger-Vein Verification; Gabor Enhancement; Minutiae Matching; Modified Hausdorff Distance

1. INTRODUCTION

Biometrics is the science of identifying a person using their physiological or behavioral features. Recently, vein pattern biometrics has attracted increasing interest from many research communities. Finger-vein recognition is a new biometric identification technology that relies on the fact that different persons have different finger-vein patterns [2,3,4,5].
Compared with fingerprint recognition, finger-vein recognition has the following advantages [1]:

1) It does not depend on the condition of the skin surface and can reject artificial fingers;
2) It makes forgery more difficult, because it uses features inside the human body that appear only under infrared light;
3) Recognition is contactless and therefore has no adverse effect on public health.

The uniqueness, stability and strong immunity to forgery of the vein pattern make it a potentially good biometric that offers secure and reliable personal identification.

A typical vein pattern biometric system consists of five processing stages [5]: image acquisition, image enhancement, vein pattern segmentation, feature extraction and matching. During the image acquisition stage, vein patterns are usually obtained using infrared imaging technologies. One method is to use a far-infrared camera to acquire the finger-vein pattern images [1,2,6]. After the images are obtained, the vein pattern is separated from the background to get a shape representation of the pattern. Finally, a robust image similarity measure is needed to match images acquired under different conditions.

A number of previous image matching methods are based on the Hausdorff distance [7,8,9,10,11]. One of the main benefits of the Hausdorff distance is that it does not require point correspondences between two objects or two images. Dubuisson and Jain [12] developed several modified Hausdorff distances (MHD) for comparing the edge maps computed from gray-scale images. Paumard proposed a censored Hausdorff distance (CHD) for comparing binary images [13]. Takacs introduced a neighborhood function and associated penalties to extend the MHD for face recognition [14,24,25]. Guo et al. proposed a new modified Hausdorff distance weighted by a function derived from the spatial information of the human face [15].
Furthermore, Lin et al. proposed modified Hausdorff distances with spatial weighting determined by eigenface features [16]. The eigenface-based weighting function gives more weight to important facial features, such as the eyes, mouth, and face contour. Zhu et al. employed an improved Gabor filter for computing edge maps and applied a weighted modified Hausdorff distance (WMHD) in a circular Gabor feature space for comparing images [17]. Lingyu Wang et al. applied a matching scheme based on the modified Hausdorff distance to the interesting points for comparing images [6]. This research is motivated by P. L. Hawkes et al. [6].

262 R. Wirestam et al. / J. Biomedical Science and Engineering 2 (2009) 261-272 SciRes Copyright © 2009 JBiSE

In this paper, we utilize the modified Hausdorff distance (MHD) to analyze the spatial similarity between the minutiae feature sets. Experimental results indicate that these minutiae feature points can be used to perform personal verification tasks as a geometric representation of the shape of the vein patterns. Furthermore, we are able to achieve robust image matching under different lighting conditions.

2. PREPROCESSING OF INFRARED VEIN PATTERN IMAGES

2.1. Finger-Vein Pattern Database

Our finger-vein image capture device mainly consists of a near-infrared light source, a lens, a cavity, a light filter, and image acquisition equipment. Vein patterns cannot be observed using normal, visible light since they lie beneath the skin's surface. However, vein patterns can be viewed through an image sensor that is sensitive to near-infrared light (wavelengths between 700 and 1000 nanometers), because near-infrared light passes through human body tissues and is blocked by pigments such as hemoglobin or melanin. As hemoglobin exists densely in blood vessels, near-infrared light shining through the finger causes the veins to appear as dark shadow lines in the near-infrared image.
To obtain a stable finger-vein pattern, our light source is a near-infrared light source with a wavelength of 890 nm, and the image acquisition part is a near-infrared CCD camera with a wavelength of 900 nm. Our finger-vein database comprises 50 distinct subjects, each subject having ten different images, taken at different times and obtained from the middle finger of the right hand. The images are subject to variations such as lighting. All the images are taken against a dark homogeneous background with the finger in an upright, frontal position (with a tolerance for some side movement). The images have 256 grey levels per pixel and are normalized to 376 × 328 pixels. In our analysis, no pre-processing of the images was conducted. Example images of five subjects are shown in Figure 1(a).

2.2. Image Normalization

The proportion of the image occupied by the vein area varies considerably between acquisitions. For convenience in the later processing, the image size is normalized in this paper: the vein image is rescaled to $96 \times 64$. Because of differences in acquisition time, light intensity and the palm thickness of each person, the gray-scale distribution also differs strongly from image to image. Large differences between images make processing and matching more difficult, so the images must be normalized: all images are converted to a standard image with the same mean and variance.

Figure 1. The vein image: (a) input image; (b) normalized image.

To remove the effect of illumination, a gray-scale normalization is adopted:

$$p'(i,j) = \frac{p(i,j) - G_1}{G_2 - G_1} \times 255 \tag{1}$$

where $p(i,j)$ is the gray-scale value of the original image; $p'(i,j)$ is the gray-scale value after conversion; $G_1$ is the minimum gray scale of the original image; and $G_2$ is the maximum gray scale of the original image.
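The gray-scale stretch of Eq. (1) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function name `normalize_gray`, the rounding to 8-bit values and the handling of a perfectly flat image are assumptions made here.

```python
import numpy as np

def normalize_gray(image: np.ndarray) -> np.ndarray:
    """Linearly stretch gray levels to [0, 255] following Eq. (1)."""
    img = image.astype(np.float64)
    g1, g2 = img.min(), img.max()          # G1 and G2 of Eq. (1)
    if g2 == g1:
        # Flat image: Eq. (1) is undefined, so return zeros (assumption).
        return np.zeros(img.shape, dtype=np.uint8)
    stretched = (img - g1) / (g2 - g1) * 255.0
    return np.round(stretched).astype(np.uint8)

# Small hypothetical patch with gray levels between 50 and 200.
sample = np.array([[50, 100], [150, 200]])
normalized = normalize_gray(sample)
```

After the stretch the darkest pixel maps to 0 and the brightest to 255, so images captured under different illumination share the same dynamic range.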
Figure 1(b) shows the vein pattern image after enhancement with noise reduction and normalization.

2.3. Orientation Image

The orientation image represents an intrinsic property of the finger-vein images and defines invariant coordinates for ridges and valleys in a local neighborhood. A number of methods have been proposed to estimate the orientation field of fingerprint images [1,10,11,17]. Similarly, by viewing a finger-vein image as an oriented texture, we use an improved orientation estimation algorithm. Given a normalized image $f$, the main steps of the algorithm are as follows:

1) Compute the gradients $\partial_x(i,j)$ and $\partial_y(i,j)$ at each pixel $(i,j)$. Depending on the computational requirement, the gradient operator may vary from the simple Sobel operator to the more complex Marr-Hildreth operator.

2) Estimate the local orientation of each pixel $(i,j)$.

a) Slide a $W \times W$ window over the original image; the gray value of the pixel at the center of the window is $f(i,j)$.

b) The orientation of the $W \times W$ window is estimated and taken as the orientation of the pixel $(i,j)$ at the center of the neighborhood (the size of the neighborhood is chosen according to the actual conditions). The local orientation is estimated with the following equations:

$$V_x(i,j) = \sum_{u=i-W/2}^{i+W/2} \sum_{v=j-W/2}^{j+W/2} 2\,\partial_x(u,v)\,\partial_y(u,v) \tag{2}$$

$$V_y(i,j) = \sum_{u=i-W/2}^{i+W/2} \sum_{v=j-W/2}^{j+W/2} \left(\partial_x^2(u,v) - \partial_y^2(u,v)\right) \tag{3}$$

$$\theta(i,j) = \frac{1}{2}\arctan\frac{V_x(i,j)}{V_y(i,j)} \tag{4}$$

where $\theta(i,j)$ is the least square estimate of the local ridge orientation at the block centered at pixel $(i,j)$. Mathematically, it represents the direction that is orthogonal to the dominant direction of the Fourier spectrum of the $W \times W$ window.

c) If every point in the image has been visited, that is, the orientation of all pixels has been estimated, stop. Otherwise, repeat the step above.

3) Due to the presence of noise, corrupted ridge and valley structures, minutiae, etc. in the input image, the estimated local ridge orientation, $\theta(i,j)$, may not always be correct.
Since local ridge orientation varies slowly in a local neighborhood where no singular points appear, a low-pass filter can be used to correct the incorrect local ridge orientation. In order to perform the low-pass filtering, the orientation image needs to be converted into a continuous vector field, which is defined as follows:

$$\Phi_x(i,j) = \cos\left(2\theta(i,j)\right) \tag{5}$$

and

$$\Phi_y(i,j) = \sin\left(2\theta(i,j)\right) \tag{6}$$

where $\Phi_x(i,j)$ and $\Phi_y(i,j)$ are the $x$ and $y$ components of the vector field, respectively. On the resulting vector field, the low-pass filtering can be performed as follows:

$$\Phi'_x(i,j) = \sum_{u=-W/2}^{W/2} \sum_{v=-W/2}^{W/2} h(u,v)\,\Phi_x(i-u,\,j-v) \tag{7}$$

and

$$\Phi'_y(i,j) = \sum_{u=-W/2}^{W/2} \sum_{v=-W/2}^{W/2} h(u,v)\,\Phi_y(i-u,\,j-v) \tag{8}$$

where $h$ is a two-dimensional low-pass filter with unit integral and $W \times W$ specifies the size of the filter.

4) Compute the local ridge orientation at $(i,j)$ using

$$O(i,j) = \frac{1}{2}\arctan\frac{\Phi'_y(i,j)}{\Phi'_x(i,j)} \tag{9}$$

With this algorithm, a fairly smooth orientation field estimate can be obtained. Figure 2 shows the orientation image estimated with our algorithm for the first example in Figure 1.

Figure 2. Orientation fields using our method ($W = 20$ and $W = 10$).

2.4. Gabor Filter

The configurations of parallel ridges and valleys with well-defined frequency and orientation in a finger-vein image provide useful information that helps in removing undesired noise. The sinusoidal-shaped waves of ridges and valleys vary slowly in a locally constant orientation. Therefore, a band-pass filter is used to tune to the corresponding frequency. Moreover, tuning to the local orientation efficiently removes the undesired noise and preserves the true ridge and valley structures. Gabor filters have both frequency-selective and orientation-selective properties and have optimal joint resolution in both the spatial and frequency domains. Therefore, it is appropriate to use Gabor filters as band-pass filters to remove the noise and preserve true ridge/valley structures.
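The orientation estimation of Section 2.3 (Eqs. 2 to 9) can be sketched as follows. This is a rough illustration under several assumptions that are not stated in the paper: gradients come from `np.gradient` rather than a Sobel operator, the window sums are replaced by window means (which leaves the ratios unchanged), the low-pass filter $h$ is a plain box filter, the quadrant-aware `arctan2` is used, and a rotation by $\pi/2$ is added explicitly to turn the dominant gradient direction into the ridge direction. The names `box_mean` and `orientation_field` are hypothetical, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_mean(a, w):
    """Mean over a w x w window centred on each pixel (w odd, edges replicated)."""
    pad = w // 2
    padded = np.pad(a, pad, mode="edge")
    return sliding_window_view(padded, (w, w)).mean(axis=(2, 3))

def orientation_field(image, w=9, w_smooth=5):
    img = np.asarray(image, dtype=np.float64)
    gy, gx = np.gradient(img)                 # derivatives along rows (y) and columns (x)
    v_x = box_mean(2.0 * gx * gy, w)          # Eq. (2), up to a constant factor
    v_y = box_mean(gx ** 2 - gy ** 2, w)      # Eq. (3), up to the same factor
    # Eq. (4) with arctan2; the +pi/2 rotation to the ridge direction is an assumption.
    theta = 0.5 * np.arctan2(v_x, v_y) + np.pi / 2
    phi_x = box_mean(np.cos(2 * theta), w_smooth)   # Eqs. (5) and (7), box filter as h
    phi_y = box_mean(np.sin(2 * theta), w_smooth)   # Eqs. (6) and (8)
    # Eq. (9), wrapped to [0, pi) because an orientation has no sign.
    return np.mod(0.5 * np.arctan2(phi_y, phi_x), np.pi)

# Synthetic test pattern: vertical stripes, so the expected orientation is pi/2.
cols = np.arange(32)
stripes = np.tile(np.sin(2 * np.pi * cols / 8.0), (32, 1))
field = orientation_field(stripes)
```

Smoothing the doubled-angle components rather than the angle itself avoids averaging across the wrap-around between 0 and $\pi$, which is the reason for the vector-field conversion in Eqs. (5) and (6).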
The circular Gabor filter is an effective tool for texture analysis [20], and has the following general form:

$$G(x,y,\theta,u,\sigma) = \frac{1}{2\pi\sigma^2}\exp\left\{-\frac{x^2+y^2}{2\sigma^2}\right\}\exp\left\{2\pi i\,(ux\cos\theta + uy\sin\theta)\right\} \tag{10}$$

where $i = \sqrt{-1}$, $u$ is the frequency of the sinusoidal wave, $\theta$ controls the orientation of the function, and $\sigma$ is the standard deviation of the Gaussian envelope. To make it more robust against brightness, a discrete Gabor filter, $G[x,y,\theta,u,\sigma]$, is turned to zero DC (direct current) with the following formula:

$$\tilde{G}[x,y,\theta,u,\sigma] = G[x,y,\theta,u,\sigma] - \frac{\sum_{i=-n}^{n}\sum_{j=-n}^{n} G[i,j,\theta,u,\sigma]}{(2n+1)^2} \tag{11}$$

Here $(2n+1)^2$ is the size of the filter. In fact, the imaginary part of the Gabor filter automatically has zero DC because of odd symmetry. The adjusted Gabor filter is used to filter the preprocessed images. In our system, we applied a tuning process to optimize the selection of the three parameters: $\theta = O$, $\sigma = 1.5179$, $u = 0.1116$.

2.5. The Vein Extraction

Image segmentation is a very important and very difficult part of the whole finger-vein recognition process. There are several image segmentation methods. The classic methods include the threshold method [26], the region growing method [27], the relaxation method [28], the edge detection method [29], the split-merge algorithm [31] and so on. The modern methods include the neural network (NN) method [30], the fuzzy clustering method [32] and so on. Different methods suit different application fields, and no segmentation method fits all images, so selecting a suitable segmentation method is very important. We therefore propose a new method to segment the vein image. The principle of the algorithm is as follows:

Step 1: The convolutions $F_i^{gray}$ ($i = 1, 2, \dots, 8$) of each pixel within the $9 \times 9$ window in the image are calculated with the corresponding eight directional operators (shown in Figure 3). Then the largest convolution over the eight directions is taken.
$$G_{\max} = \max_{i}\left(F_i^{gray}\right) \tag{12}$$

The maximum $G_{\max}$ is then used as the gray value of the point:

$$Gray(m,n) = G_{\max} \tag{13}$$

Step 2: Threshold segmentation

Step 2.1: The first threshold segmentation

$$Gray(m,n) = \begin{cases} Gray(m,n) & \text{if } Gray(m,n) > 0 \\ 0 & \text{otherwise} \end{cases} \tag{14}$$

Step 2.2: The second threshold segmentation

$$Gmean = \frac{Graysum}{Num}, \qquad Gray(m,n) = \begin{cases} Gmean & \text{if } Gray(m,n) > Gmean \\ Gray(m,n) & \text{otherwise} \end{cases} \tag{15}$$

$Gmean$ is the average of the non-zero elements in the image. $Graysum$ and $Num$ are the sum and the number of the non-zero elements, respectively.

Step 2.3: Fuzzy enhancement

After the two previous segmentations, the gray values lie in the range $[0, Gmean]$. Pseudo-vein characteristics and noise are still likely to exist in this range, so the fuzzy part is cleared with a fuzzy enhancement operator. This further reduces the noise and removes pseudo-veins. The algorithm is as follows:

1) Calculate the degree of membership, namely:

$$u_{mn} = G\left(Gray(m,n)\right) = \frac{Gray(m,n)}{K-1} \tag{16}$$

2) Calculate the mean $T_{mn}$ of all the elements within the $(2p+1) \times (2p+1)$ window.

3) Calculate the adjusted degree of membership $u'_{mn}$ and the adjusted pixel gray value $Gray'(m,n)$ within the $(2p+1) \times (2p+1)$ window. The enhancement operator is piecewise: one branch applies when $u_{mn} \le T_{mn}$ and the other when $u_{mn} > T_{mn}$. The adjusted gray value is then recovered from the adjusted membership:

$$Gray'(m,n) = (K-1)\,u'_{mn} \tag{17}$$

Step 2.4: The third threshold segmentation

Slide a $(2p+1) \times (2p+1)$ window over the image; the gray value of the pixel at the window center is $Gray'(m,n)$. All the pixels within the window form the set

$$S = \{\, f(i,j) \mid i = m+k,\; j = n+l,\; k,l = -p, \dots, -1, 0, 1, \dots, p \,\}$$

The average $SAverage(m,n)$ of all pixels in the window is obtained as follows:

$$SAverage(m,n) = \frac{1}{(2p+1)(2p+1)} \sum_{k=-p}^{p} \sum_{l=-p}^{p} Gray'(m+k,\,n+l) \tag{18}$$

$$\sigma(m,n) = \frac{1}{(2p+1)(2p+1)} \sum_{k=-p}^{p} \sum_{l=-p}^{p} \left[Gray'(m+k,\,n+l) - SAverage(m,n)\right]^2 \tag{19}$$

$$T(m,n) = SAverage(m,n) + \sigma(m,n) \tag{20}$$

So each pixel of the image has its own threshold value. The binary image obtained with the respective threshold values is

$$Gray'(m,n) = \begin{cases} 1 & \text{if } Gray'(m,n) > T(m,n) \\ 0 & \text{otherwise} \end{cases} \tag{21}$$

The final image $Gray'(m,n)$ obtained in this way contains only the vein characteristics.

Figure 3. The direction of the operator: (a) 0° (horizontal) orientation; (b) 157.5° orientation; (c) 135° orientation; (d) 112.5° orientation; (e) 90° orientation; (f) 67.5° orientation; (g) 45° orientation; (h) 22.5° orientation. Each operator is a $9 \times 9$ matrix that is zero except for the values 3, -1, -4, -1, 3 placed along a line through the center; in the 0° operator they occupy the center row, $(3, 0, -1, 0, -4, 0, -1, 0, 3)$.

As Figure 4 shows, the vein texture of the original image is extracted, and the effect is comparatively ideal. Median filtering can eliminate burrs and smooth the borderline. In addition, because the new threshold algorithm introduces a large amount of noise, the noise regions are removed according to their size in this paper.
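Step 1 and the first two thresholds of the segmentation (Eqs. 12 to 15) can be sketched as below. This is a minimal sketch, not the authors' implementation. Only the 0° operator of Figure 3 is reproduced exactly; for the other seven directions the same profile $(3, -1, -4, -1, 3)$ is placed along a rounded line through the kernel center, which is an assumption about Figure 3. The names `directional_kernel`, `correlate_same` and `segment_veins` are hypothetical, and the fuzzy enhancement and local threshold of Steps 2.3 and 2.4 are not included.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

PROFILE = (3, -1, -4, -1, 3)      # non-zero operator values
OFFSETS = (-4, -2, 0, 2, 4)       # their positions along the line

def directional_kernel(angle_deg):
    """9 x 9 operator with PROFILE laid along the given direction (assumed layout)."""
    kernel = np.zeros((9, 9))
    a = np.deg2rad(angle_deg)
    dx, dy = np.cos(a), np.sin(a)
    scale = max(abs(dx), abs(dy))             # so the line spans the full 9 x 9 window
    dx, dy = dx / scale, dy / scale
    for value, t in zip(PROFILE, OFFSETS):
        row = 4 - int(round(t * dy))          # image rows grow downwards
        col = 4 + int(round(t * dx))
        kernel[row, col] = value
    return kernel

def correlate_same(img, kernel):
    """Same-size correlation; equals convolution here since kernels are point-symmetric."""
    pad = kernel.shape[0] // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def segment_veins(image):
    img = np.asarray(image, dtype=np.float64)
    responses = [correlate_same(img, directional_kernel(a)) for a in np.arange(8) * 22.5]
    gray = np.max(responses, axis=0)          # Eqs. (12)-(13): strongest direction
    gray = np.where(gray > 0, gray, 0.0)      # Eq. (14): drop non-positive responses
    nonzero = gray[gray > 0]
    if nonzero.size:
        gmean = nonzero.mean()                # Gmean = Graysum / Num
        gray = np.minimum(gray, gmean)        # Eq. (15): clip at Gmean
    return gray

# Hypothetical test image: bright background with a dark vertical "vein".
demo = np.full((32, 32), 200.0)
demo[:, 15:18] = 50.0
segmented = segment_veins(demo)
```

Because every operator sums to zero, flat regions give a zero response and only dark, line-like structures survive the first threshold.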
The effect of filtering is shown in Figure 5.

Figure 4. Segmentation.

Figure 5. Filtering.

2.6. Image Thinning

In this paper, we thin the vein image by combining general conditional thinning with templates. After the general conditional thinning, the remaining special points that make the skeleton more than one pixel wide ("un-single pixel points") are removed.

a) The conditional thinning algorithm [22]

Region points are assumed to have value 1 and background points to have value 0. The method consists of successive passes of two basic steps applied to the contour points of the given region, where a contour point is any pixel with value 1 that has at least one 8-neighbor valued 0. With reference to the 8-neighborhood notation shown in Figure 6, Step 1 flags a contour point $p_1$ for deletion if the following conditions are satisfied:

$$\begin{aligned} &(a)\quad 2 \le N(p_1) \le 6 \\ &(b)\quad T(p_1) = 1 \\ &(c)\quad p_2 \cdot p_4 \cdot p_6 = 0 \\ &(d)\quad p_4 \cdot p_6 \cdot p_8 = 0 \end{aligned} \tag{22}$$

where $N(p_1)$ is the number of nonzero neighbors of $p_1$, that is,

$$N(p_1) = p_2 + p_3 + \dots + p_8 + p_9 \tag{23}$$

and $T(p_1)$ is the number of 0-1 transitions in the ordered sequence $p_2, p_3, \dots, p_8, p_9, p_2$. For example, $N(p_1) = 4$ and $T(p_1) = 3$ in Figure 7.

In Step 2, conditions (a) and (b) remain the same, but conditions (c) and (d) are changed to

$$\begin{aligned} &(c')\quad p_2 \cdot p_4 \cdot p_8 = 0 \\ &(d')\quad p_2 \cdot p_6 \cdot p_8 = 0 \end{aligned} \tag{24}$$

Thus one iteration of the thinning algorithm consists of: 1) applying Step 1 to flag border points for deletion; 2) deleting the flagged points; 3) applying Step 2 to flag the remaining border points for deletion; 4) deleting the flagged points. This basic procedure is applied iteratively until no further points are deleted, at which time the algorithm terminates, yielding the skeleton of the region.

Figure 6. Neighborhood arrangement used by the thinning algorithm.

$$\begin{matrix} p_9 & p_2 & p_3 \\ p_8 & p_1 & p_4 \\ p_7 & p_6 & p_5 \end{matrix}$$

Figure 7. Illustration of conditions (a) and (b) in Eq. (22) (in this case, $N(p_1) = 4$, $T(p_1) = 3$).

$$\begin{matrix} 0 & 0 & 1 \\ 1 & p_1 & 0 \\ 1 & 0 & 1 \end{matrix}$$
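The two-step conditional thinning described above can be sketched as follows. This is a minimal, unoptimized illustration that assumes the image is a NumPy array of 0/1 values and uses the standard conditions from [22], with $p_2 p_4 p_8 = 0$ and $p_2 p_6 p_8 = 0$ in the second step. The function names are hypothetical, and the template-based clean-up and burr cutting are not included.

```python
import numpy as np

def ring(img, r, c):
    """Neighbours p2..p9 of pixel (r, c), clockwise from the pixel above (Figure 6)."""
    return [int(img[r - 1, c]), int(img[r - 1, c + 1]), int(img[r, c + 1]),
            int(img[r + 1, c + 1]), int(img[r + 1, c]), int(img[r + 1, c - 1]),
            int(img[r, c - 1]), int(img[r - 1, c - 1])]

def thin(binary):
    """Iterate Step 1 and Step 2 until no pixel is deleted."""
    img = np.pad(np.asarray(binary, dtype=np.uint8), 1)   # zero border simplifies indexing
    changed = True
    while changed:
        changed = False
        for step in (1, 2):
            flagged = []
            for r, c in zip(*np.nonzero(img)):
                p = ring(img, r, c)
                n = sum(p)                                              # N(p1), Eq. (23)
                t = sum(a == 0 and b == 1 for a, b in zip(p, p[1:] + p[:1]))  # T(p1)
                p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                if step == 1:
                    ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0        # (c), (d)
                else:
                    ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0        # (c'), (d')
                if 2 <= n <= 6 and t == 1 and ok:
                    flagged.append((r, c))
            for r, c in flagged:            # delete only after the whole pass
                img[r, c] = 0
            changed = changed or bool(flagged)
    return img[1:-1, 1:-1]

# Hypothetical example: a 5-pixel-thick horizontal bar.
bar = np.zeros((11, 21), dtype=np.uint8)
bar[3:8, 3:18] = 1
skeleton = thin(bar)
```

Flagging first and deleting afterwards keeps each pass parallel, so the result does not depend on the order in which pixels are visited.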
b) The improved thinning algorithm

The improved thinning algorithm builds on conditional thinning. In this paper, a template algorithm is applied to the conditionally thinned image to remove the un-single pixel points.

In the case of Figure 8, the un-single pixel points are marked in red. These pixels must be removed because they are redundant points in the image. There are two ways to remove them. The first deletes the points for which the connectivity of the image is still assured after their removal; these points can be identified from the 4-neighborhood and 8-neighborhood connections. The second uses templates, which are shown in Figure 9. The center of the template is the reference point *. "1" denotes a point of the target image and "0" a point of the background image. The points of Figure 6 that satisfy the conditions are removed by the templates Me, Mf, Mg, Mh. The procedure is iterated on the conditionally thinned image until the un-single pixel points of the first kind are removed completely.

c) Burr cutting

The noise and shadows of the original finger-vein image produce "burrs" in the skeleton image, which affect the subsequent processing. Burrs can be removed by counting the pixels from each endpoint to the nearest bifurcation point and then selecting a threshold. Branches whose pixel count is below the threshold are set to 0; otherwise their values are left unchanged. Image thinning is shown in Figure 10.

3. EXTRACTION OF MINUTIAE POINTS

The branching points and the ending points in the vein pattern skeleton image are the two types of critical points to be extracted.
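As a minimal sketch of how these two kinds of points might be located on a one-pixel-wide skeleton, the following counts the 0/1 transitions around each skeleton pixel in its 3 × 3 neighborhood (the crossing-number test): a count of exactly 2 is treated as an ending point and a count of 6 or more as a bifurcation. The skeleton is assumed to be a NumPy array of 0/1 values, and the function name `find_minutiae` is hypothetical.

```python
import numpy as np

# Offsets of the eight neighbours p1..p8, clockwise from the top-left pixel.
RING = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def find_minutiae(skeleton):
    """Return (endings, bifurcations) as lists of (row, col) tuples."""
    sk = np.pad(np.asarray(skeleton, dtype=int), 1)   # zero border for edge pixels
    endings, bifurcations = [], []
    for r, c in zip(*np.nonzero(sk)):
        p = [sk[r + dr, c + dc] for dr, dc in RING]
        # Number of 0->1 and 1->0 transitions around the closed ring p1..p8, p1.
        n_trans = sum(abs(int(p[(i + 1) % 8]) - int(p[i])) for i in range(8))
        point = (int(r) - 1, int(c) - 1)              # undo the padding offset
        if n_trans == 2:
            endings.append(point)
        elif n_trans >= 6:
            bifurcations.append(point)
    return endings, bifurcations

# Hypothetical T-shaped skeleton: a horizontal line with one branch going down.
skel = np.zeros((7, 7), dtype=np.uint8)
skel[3, :] = 1
skel[4:, 3] = 1
endings, bifurcations = find_minutiae(skel)
```

On this example the three free line ends are reported as ending points and the pixel where the branch leaves the horizontal line as the single bifurcation.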
To obtain the junction points from the skeleton of vein patterns, we run the following pixel-wise operation, commonly known as the cross number concept [21]. For a $3 \times 3$ region (see Figure 11), if $p_0$ is 1 and the number of transitions $N_{trans}$ between 0 and 1 (and vice versa) from $p_1$ to $p_8$ is greater than or equal to 6, then $p_0$ is a junction point. Mathematically, this can be expressed with the following equation:

$$N_{trans} = \sum_{i=1}^{8} \left| p_{i+1} - p_i \right|, \quad \text{where } p_9 = p_1$$

A similar approach can be applied to find the ending points. The difference is that the number of transitions $N_{trans}$ for an ending point is exactly 2. The flowchart of the finger-vein processing algorithm is shown in Figure 12 and the experimental process is shown in Figure 13.

Figure 8. The un-single pixel points of the first kind.

Figure 9. The un-single pixel point templates of the first kind.

Figure 10. Image thinning: (a) general conditional thinning; (b) improved thinning algorithm; (c) burr cutting.

Figure 11. $3 \times 3$ region.

$$\begin{matrix} p_1 & p_2 & p_3 \\ p_8 & p_0 & p_4 \\ p_7 & p_6 & p_5 \end{matrix}$$

Figure 12. A flowchart of the proposed finger-vein processing algorithm: input image, normalization, orientation image estimation, Gabor filtering, image segmentation, image thinning, minutiae points extraction.

Figure 13. Outputs of different stages of the algorithm: (a) input image; (b) orientation image estimation; (c) Gabor filtering; (d) image segmentation; (e) filtering; (f) general conditional thinning; (g) improved thinning algorithm; (h) burr cutting; (i) minutiae points extraction. Minutiae of the vein pattern are marked with squares (the bigger square for bifurcation points; the smaller square for ending points).

4. EXPERIMENT RESULT

Experiment on our finger-vein dataset: in this section, three experiments are conducted to demonstrate the performance of the proposed method for recognition. Our finger-vein database comprises 50 distinct subjects, each subject having 10 different images, taken at different times. The images are subject to variations such as lighting. All the images are taken against a dark homogeneous background with the finger in an upright, frontal position (with a tolerance for some side movement). The images have 256 grey levels per pixel and are normalized to 376 × 328 pixels. These images are pre-processed by our method before the matching experiments.

4.1. Verification Using MHD

Many techniques can be applied to the analysis of the minutiae of vein patterns in a similar manner to those applied to fingerprint minutiae. However, because the number of minutiae in a vein pattern is relatively small compared with that in a fingerprint, analysis based on geometrical information is preferred to statistical features. Since the vein pattern is represented as a set of two-dimensional points, a pair of such patterns can be matched by measuring the Hausdorff distance between the two minutiae sets.

The original Hausdorff distance was proposed for comparing two binary images by Huttenlocher et al. [23]. The computation of the Hausdorff distance does not require point correspondences between the two point sets. They proposed efficient computational algorithms for speeding up the process of finding similar patterns in an image by searching the regions with the smallest Hausdorff distances in the image [6]. For two point sets $X = \{x_1, x_2, x_3, \dots, x_{N_x}\}$ and $Y = \{y_1, y_2, y_3, \dots, y_{N_y}\}$, Eqs. (25) and (26) give the definition of the Hausdorff distance, where $HD$ and $d$ are the undirected and directed Hausdorff distances for the two point sets, respectively.
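As a rough sketch of how the directed and undirected distances introduced here can be computed for two minutiae sets, the following compares the classical max-based directed distance with the mean-based directed distance used by the modified Hausdorff distance of Dubuisson and Jain [12]. Point sets are assumed to be sequences of $(x, y)$ coordinates, and all function names are hypothetical.

```python
import numpy as np

def pairwise_distances(x, y):
    """Euclidean distance between every point of x and every point of y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.linalg.norm(x[:, None, :] - y[None, :, :], axis=2)

def directed_hd(x, y):
    """Classical directed distance: worst nearest-neighbour distance from x to y."""
    return pairwise_distances(x, y).min(axis=1).max()

def directed_mhd(x, y):
    """Modified directed distance: mean nearest-neighbour distance from x to y."""
    return pairwise_distances(x, y).min(axis=1).mean()

def undirected(x, y, directed):
    """Undirected distance: the larger of the two directed distances."""
    return max(directed(x, y), directed(y, x))

# Hypothetical minutiae sets: b equals a plus one spurious outlier point.
a = [(0, 0), (4, 0), (0, 3)]
b = [(0, 0), (4, 0), (0, 3), (10, 0)]
hd = undirected(a, b, directed_hd)      # dominated by the outlier
mhd = undirected(a, b, directed_mhd)    # outlier is averaged over the set
```

In this example the single outlier sets the classical distance to 6, while the modified distance is 1.5, which illustrates why the averaged form is less sensitive to spurious minutiae produced by segmentation errors.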
The smaller the value of $HD$, the more similar the two point sets are:

$$HD(X,Y) = \max\left(d(X,Y),\, d(Y,X)\right) \tag{25}$$

$$d(X,Y) = \max_{x_i \in X}\, \min_{y_j \in Y} \left\| x_i - y_j \right\| \tag{26}$$

However, the original Hausdorff distance is sensitive to small perturbations in point locations for shape alignment. To overcome this weakness, the modified Hausdorff distance (MHD) [12] for matching two objects was developed. It has the following desirable properties: 1) its value increases monotonically as the amount of difference between the two sets of points increases, and 2) it is robust to outlier points that might result from segmentation errors. Unlike the original form, the directed distance of the MHD is defined as in Eq. (27), while the undirected distance is still obtained from Eq. (25):

$$d(X,Y) = \frac{1}{N_x} \sum_{x_i \in X}\, \min_{y_j \in Y} \left\| x_i - y_j \right\| \tag{27}$$

Figure 13 shows the false acceptance rate (FAR) and false rejection rate (FRR) curves, from which it can be seen that when the threshold value for the distance measure HD is 0.52, the equal error rate (EER) is approximately 14.51%. An experiment with the MHD was carried out on the same data set, and the algorithm achieves 0.761% EER (as shown in Figure 14).

Figure 13. Verification test results: (a) genuine and imposter distributions and (b) error rate (FAR %, FRR %, EER against HD distance) for minutiae recognition using the original form of the Hausdorff distance (EER = 14.51% where the threshold is observed to be 0.52).

Figure 14. Verification test results: (a) genuine and imposter distributions and (b) error rate (FAR %, FRR %, EER against MHD distance) for minutiae recognition using MHD (EER = 0.761% where the threshold is observed to be 0.43).

4.2. Verification Using Shape of the Vein Patterns

To verify that minutiae points can best represent the shape of the vein patterns for vein recognition, we show in the following some experimental results of applying the proposed robust image matching algorithm to the skeleton representation of the vein pattern. The experiments use the same MHD to measure the similarity. Figure 15 shows the FAR and FRR curves, from which it can be seen that when the threshold value for the distance measure is 0.41, the equal error rate (EER) is approximately 7%. Table 1 contains the results of the evaluation, which show that while the shape of the vein patterns can reach relatively high recognition accuracy, the minutiae further increase the accuracy and require less time.

4.3. Two Types of Minutiae

To evaluate the usefulness of the two types of minutiae, namely bifurcation and ending points, we carried out three sets of personal verification experiments: first with the bifurcation points only, then with the ending points only, and finally with the combination of the two minutiae types. The three sets of experiments use the same MHD to measure the similarity. Table 2 contains the results of the evaluation, which show that while the bifurcation and ending points can each reach relatively high recognition accuracy, the combination of the two minutiae types further increases the accuracy. This is an advantage especially when high feature discriminating power is desired for a large population group.

Figure 15. Error rate curves (FAR %, FRR %, EER against MHD distance, with genuine and imposter distributions) for minutiae recognition using MHD (EER = 7% where the threshold is observed to be 0.41).

Table 1. Minutiae and shape of the vein patterns evaluation using MHD.
| Feature | Equal error rate (%) | Threshold value | Time (s) |
|---|---|---|---|
| Shape of the vein patterns | 7 | 0.41 | 6.5 |
| Combination of both minutiae | 0.761 | 0.43 | 2.2 |

Table 2. Minutiae evaluation using MHD.

| Feature | Equal error rate (%) | Threshold value |
|---|---|---|
| Bifurcation points only | 9.20 | 0.48 |
| Ending points only | 8.89 | 0.46 |
| Combination of both minutiae | 0.761 | 0.43 |

5. CONCLUSIONS

This paper proposes a novel method for finger-vein recognition. First, we extract the vein patterns for recognition. Second, the minutiae features, which include bifurcation points and ending points, are extracted from the vein patterns. These feature points are used as a geometric representation of the shape of the vein patterns. Finally, the modified Hausdorff distance is used to evaluate the discriminating ability over all possible relative positions of the shapes of the vein patterns. Experimental results show that the equal error rate (EER) reaches 0.761% where the threshold value for the distance measure is observed to be 0.43. This result indicates that the minutiae features in the vein patterns can be used efficiently as a feature set in personal verification applications.

Although the current database is relatively small and is not adequate to draw any firm conclusion on the discriminating power of vein patterns for a large population group (in terms of millions of users), the experiments do show the potential of the minutiae of the vein patterns as a biometric feature for personal verification applications in a reasonably sized user group. The results presented here indicate that, as a new identity authentication technology, vein recognition has good long-term potential, needs to be studied further, and can be applied more widely in people's daily lives.

6. ACKNOWLEDGMENTS

This work is part of a science and technology research project of the Chongqing Education Committee (KJ070620). The authors are also most grateful for the constructive advice and comments from the anonymous reviewers.
REFERENCES

[1] Z. B. Zhang, S. L. Ma, and X. Han, (2006) Multiscale feature extraction of finger-vein patterns based on curvelets and local interconnection structure neural network, The 18th International Conference on Pattern Recognition (ICPR'06).
[2] K. Kanagawa, (2006) Finger vein authentication technology and its future junichi hashimoto, Information & Telecommunication Systems Group, Japan IEEE.
[3] A. Jain, R. M. Bolle, and S. Pankanti, (1999) Biometrics: Personal identification in networked society, Kluwer Academic Publishers, Dordrecht.
[4] P. MacGregor and R. Welford, (1991) Veincheck: Imaging for security and personnel identification, Adv. Imaging, 6(7), 52-56.
[5] P. L. Hawkes and D. O. Clayden, (1993) Veincheck research for automatic identification of people, in: Presented at the Hand and Fingerprint Seminar at NPL.
[6] L. Y. Wang, G. Leedham, and D. S. Y. Cho, (2007) Minutiae feature analysis for infrared hand vein pattern biometrics, Pattern Recognition Society, Published by Elsevier Ltd, All rights reserved.
[7] J. A. Gualtieri, J. L. Moigne, and C. V. Packer, (1992) Distance between images, Fourth Symposium on the Frontiers of Massively Parallel Computation, 216-223.
[8] J. You, E. Pissaloux, J. L. Hellec, and P. Bonnin, (1994) A guided image matching approach using Hausdorff distance with interesting points detection, Proceedings of the IEEE International Conference on Image Processing, 1, 968-972.
[9] V. D. Gesù and V. Starovoitov, (1999) Distance-based functions for image comparison, Pattern Recognition Lett, 20(2), 207-214.
[10] A. Ghafoor, R. N. Iqbal, and S. Khan, (2003) Robust image matching algorithm, Proceedings of the Fourth EURASIP Conference Focused on Video/Image Processing and Multimedia Communications, 155-160.
[11] V. Perlibakas, (2004) Distance measures for PCA-based face recognition, Pattern Recognition Lett, 25(6), 711-724.
[12] M. P. Dubuisson and A. K. Jain, (1994) A modified Hausdorff distance for object matching, Proceedings of the 12th IAPR International Conference on Pattern Recognition, 1, 566-568.
[13] J. Paumard, (1997) Robust comparison of binary images, Pattern Recognition Lett, 18(10), 1057-1063.
[14] B. Takács, (1998) Comparing face images using the modified Hausdorff distance, Pattern Recognition, 31(12), 1873-1881.
[15] B. Guo, K.-M. Lam, K.-H. Lin, and W. C. Siu, (2003) Human face recognition based on spatially weighted Hausdorff distance, Pattern Recognition Lett, 24(1-3), 499-507.
[16] K.-H. Lin, K.-M. Lam, and W. C. Siu, (2003) Spatially eigen-weighted Hausdorff distances for human face recognition, Pattern Recognition, 36(8), 1827-1834.
[17] Z. Zhu, M. Tang, and H. Lu, (2004) A new robust circular Gabor based object matching by using weighted Hausdorff distance, Pattern Recognition Lett, 25(4), 515-523.
[18] L. Hong, Y. F. Wan, and A. Jain, (1998) Fingerprint image enhancement: algorithm and performance evaluation, IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(8).
[19] D. Marr, (1982) Vision, San Francisco, Calif.: W. H. Freeman.
[20] J. G. Daugman, (1993) High confidence visual recognition of persons by a test of statistical independence, IEEE Trans. Pattern Analysis and Machine Intelligence, 15(11), 1148-1161.
[21] U. Halici, L. C. Jain, and A. Erol, (1999) An introduction to fingerprint recognition, in: L. C. Jain, U. Halici, I. Hayashi, S. B. Lee, S. Tsutsui (Eds.), Intelligent Biometric Techniques in Fingerprint and Face Recognition, CRC Press, Boca Raton, FL, 3-34.
[22] R. C. Gonzalez, (2005) Digital Image Processing, Publishing House of Electronics Industry, Beijing.
[23] D. P. Huttenlocher, G. A. Klanderman, and W. J. Rucklidge, (1993) Comparing images using the Hausdorff distance, IEEE Trans. Pattern Anal. Mach. Intell, 15(9), 850-863.
[24] E. P. Vivek and N. Sudha, (2007) Robust Hausdorff distance measure for face recognition, Pattern Recognition, Elsevier, 40(2), 431-442.

[25] E. P. Vivek and N. Sudha, (2006) Gray Hausdorff distance measure for comparing face images, IEEE Transactions on Information Forensics and Security, 1(3), 342-349.

[26] M. Sezgin and B. Sankur, (2004) Survey over image thresholding techniques and quantitative performance evaluation, Journal of Electronic Imaging, 11.

[27] S. Lachance, R. Bauer, and A. Warkentin, (2004) Application of region growing method to evaluate the surface condition of grinding wheels, International Journal of Machine Tools and Manufacture, 44(7-8), 823-829.

[28] R. Saurel, F. Petitpas, and R. A. Berry, (2009) Simple and efficient relaxation methods for interfaces separating compressible fluids, cavitating flows and shocks in multiphase mixtures, Journal of Computational Physics, 228(5), 20 March, 1678-1712.

[29] C. C. Kang and W. J. Wang, (2007) A novel edge detection method based on the maximizing objective function, Pattern Recognition, 40(2), 609-618.

[30] Z. B. Zhang, D. Y. Wu, S. L. Ma, and J. Ma, (2005) Multiscale feature extraction of finger-vein patterns based on wavelet and local interconnection structure neural networks and brain, ICNN&B’05, 2(13-15), 1081-1084.

[31] V. Barra, (2006) Robust segmentation and analysis of DNA microarray spots using an adaptative split and merge algorithm, Computer Methods and Programs in Biomedicine, 81(2), 174-180.

[32] J. M. Lu, X. Yuan, and T. Yahagi, (2006) A method of face recognition based on fuzzy clustering and parallel neural networks, Signal Processing, 86(8), 2026-2039. |