Paper Menu >>

Journal Menu >>

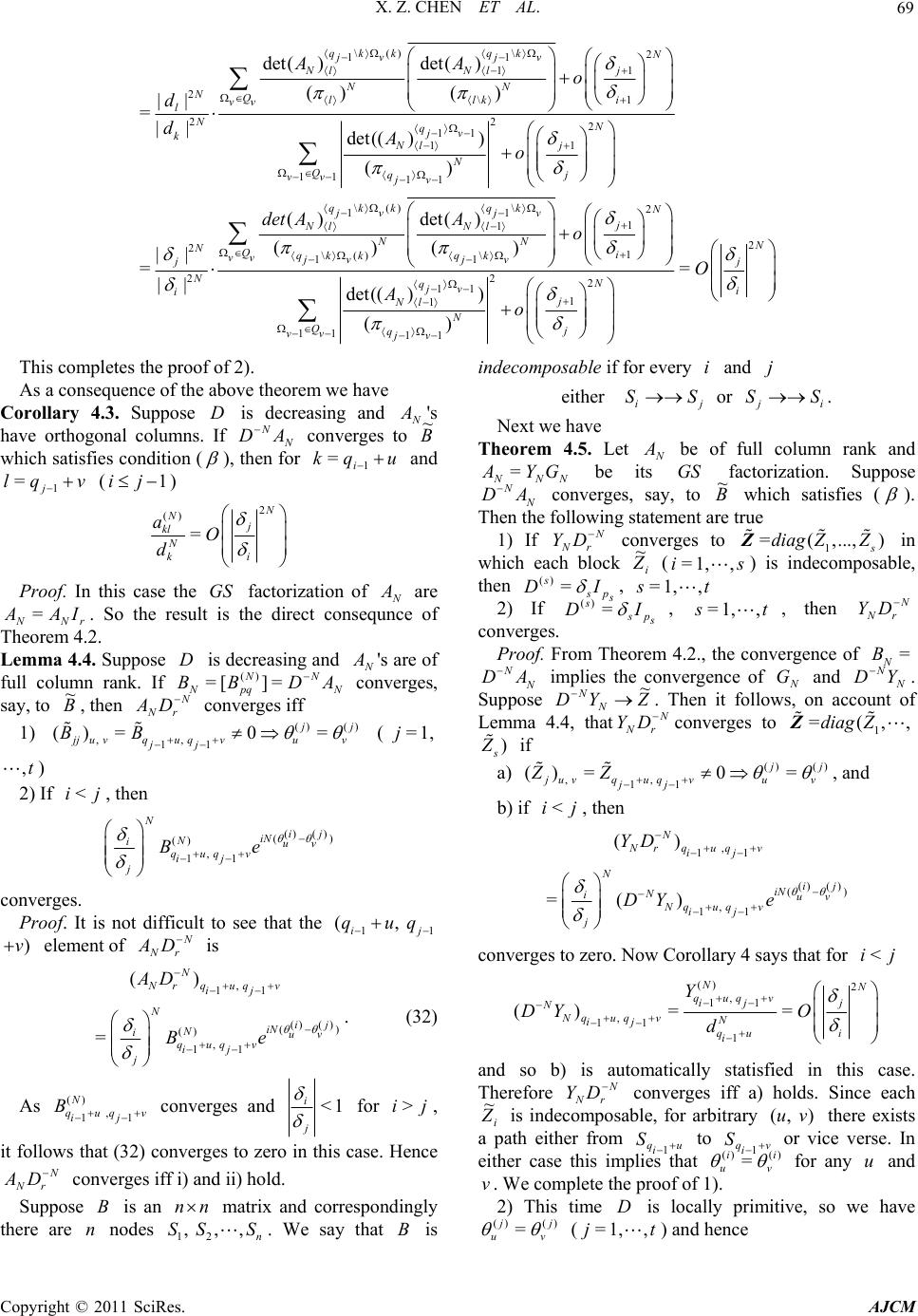

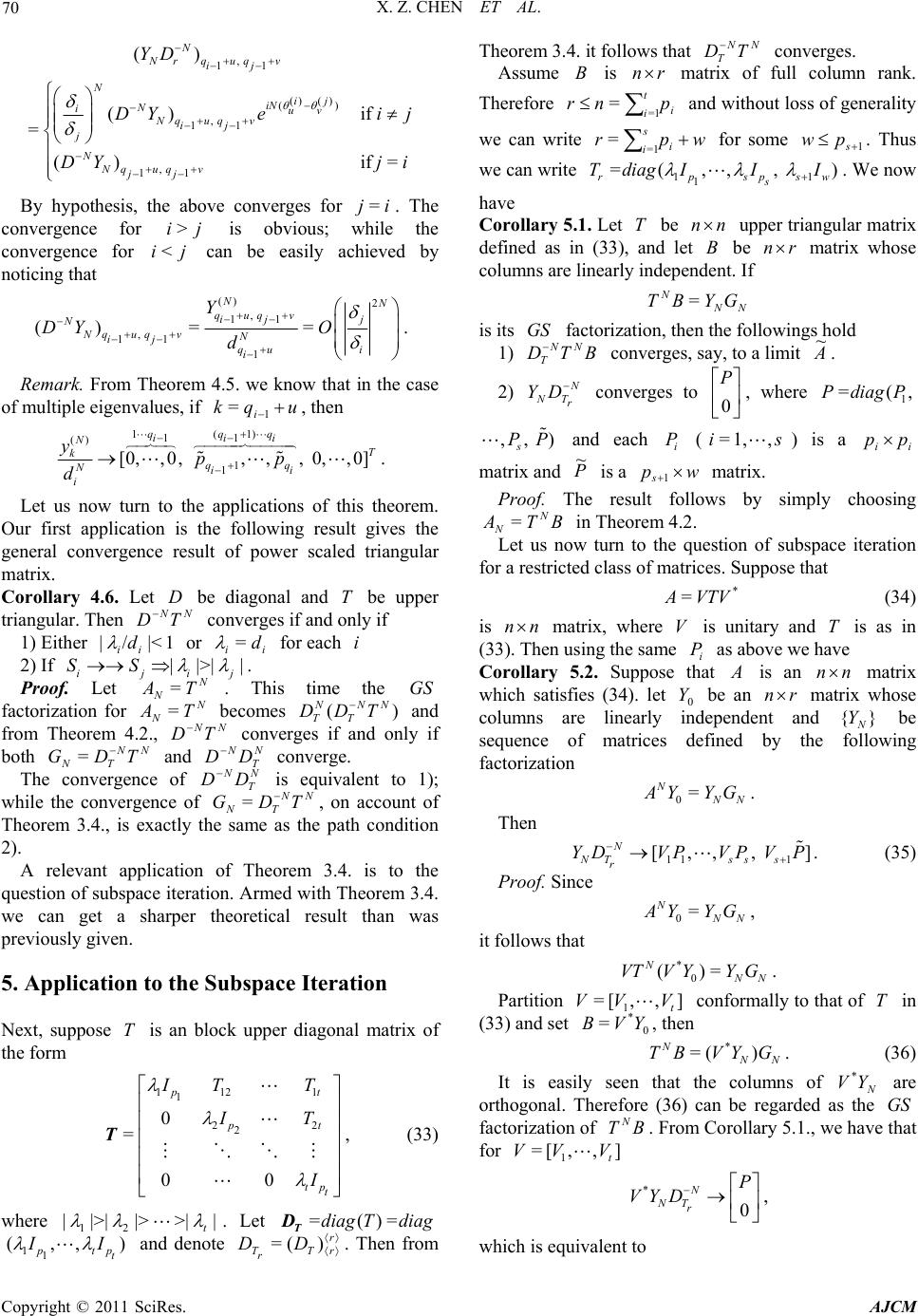

American Journal of Computational Mathematics, 2011, 1, 63-71 doi:10.4236/ajcm.2011.12007 Published Online June 2011 (http://www.scirp.org/journal/ajcm) Copyright © 2011 SciRes. AJCM The Conditions for the Con vergence of Power Scaled Matrices and Applications Xuzhou Chen1, Robert E. Hartwig2 1Department of Computer Science, Fitchburg St a t e U n i v er s i ty , Fitchburg, USA 2Department of Mathematics, North Carolina State University, Raleigh, USA E-mail: xchen@fitchburgstate.edu, hartwig@math.ncsu.edu Received February 28, 2011; revised May 30, 2011; accepted June 7, 2011 Abstract For an invertible diagonal matrix D , the convergence of the power scaled matrix sequence NN D A is investigated. As a special case, necessary and sufficient conditions are given for the convergence of NN D T , where T is triangular. These conditions involve both the spectrum as well as the diagraph of the matrix T. The results are then used to privide a new proof for the convergence of subspace iteration. Keywords: Convergence, Iterative Method, Triangular Matrix, Gram-Schmidt 1. Introduction The aim of iterative methods both in theory as well as in numerical settings, is to produce a sequence of matrices 01 , ,AA, that converges to hopefully, something useful. When this sequence diverges, the natural question is how to produce a new converging sequence from this data. One of these convergence producing methods is to diagonally scale the numbers N A and form the sequence {} N N DA . Examples of this are numerous, such as the Krylov sequence ( x , A x, 2 A x,…), which when divergent can be suitably scaled to yield a dominant eigenvector. The convergence of power scaled iterative methods and more general power scaled Cesaro sums were studied by Chen and Hartwig [4,6]. In this paper, we continue our investigation of this iteration and derive a formula for the powers of an upper triangular matrix, and use this to investigate the convergence of the sequence {} N N n DT . We also investigate the subspace iterations, which has been started by numerous authorss [1,3,10,11,15], and turn our attention to the case of repeated eigenvalues. The main contributions of this paper are: •We present the necessary and sufficient conditions for convergence of power scaled triangular matrices {} N N n DT . We prove that these conditions involve both the spectrum as well as the digraph induced by the matrix T. •We apply the the convergence of power scaled triangular matrices with the explicit expression for the G-S factors of N N DT [3] and present a new proof of the convergence of simultaneous iteration for the case where the eigenvalues of the matrix A satisfy 12 1 ||| || |>||| | rr n and ||=| |= ijij . Because of the explicit expression for the GS factors, and the exact convergence results, our discussion is more precise than that given previously [12,17]. One of the needed steps in our investigation is the derivation a formula for the powers of a triangular matrix T, which in turn will allow us to analyze the convergence of N N T DT . Throughout this note all our matrices will be complex and, as always, we shall use and () to denote the Euclidean norm and spectral radius of (). This paper is arranged as follows. As a preliminary result, a formula for the power of an upper triangular matrix is presented in Section 2. It is shown in Section 3 that the convergence of N N T DT is closely related to the digraph induced by T. Section 4 is the main section in which convergence of general power scaled sequence N N DA is investigated and this, combined with path conditions in Section 3, is then used to discuss the convergence of NN DT . As an application we analyze the convergence results for subspace iterations, in which the eigenvalues are repeated, but satisfy a peripheral constraint.  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 64 2. Preliminary Results We first need a couple of preliminary results. Lemma 2.1. If 1<)(A and 0< <1 i , then =0 Nkk k A (1) converges. Proof. For =0 ()= k k k f zz , we have =0 =0 |()|=|| || kk k kk f zzz . As the geometric summation on the right-hand side has radius of convergence 1, () f z converges for all z such that ||<1z, which in turn tells us that the radius of convergence of () f z is no less than 1. Therefore, from Theorem 6.2.8. of [8], () f A converges. Next consider the triangular matrix 112 1 2 1, 0 = 00 n nn n uu u U, (2) which is used in the following characterization of the powers of a trangular matrix. Lemma 2.2. Let =0 00 T a Uc T where a and c are column vectors and suppose that =0 00 NT NN NN N a Uc N T. (3) then 11 =0 22 2 =0 =0 = NNk k Nk NNk TkNki i ki aUc . (4) in particular, 1) if =0 , then 2 12 =0 = N NTkNk Nk aUc , (5) 2) if =0 , then 2 12 =0 = N NTkNk Nk aUc , (6) 3) if 0 and , then 1 2 1 =0 1(/) = 1(/) , N N N kNk N NT k U ac (7) 4) if 0 and = , then 2 12 =0 =(1) k N NN T Nk U NacNk and (8) 5) if ==0 , then 2 =TN NaU c . (9) Proof. It is easily verified by induction that = N T 1 NN N Ty O , where 11 =0 == k kkkjTj T j k aU a OU OU 1k T (10) and 11 1 =0 == N N N kk k N T cc N y. (11) Now 2 12 1 =0 =0 1 2 12 1 =0 =0 1 122 12 =0=0 =0 11 =0 = = = Nk NkNkjT k Nk j kNk Nk NkNkjT k Nk j kNk NNNk N kk NkjTkk kkj NNk k k aU c OU aUc Uc aUc Uc N y . Hence 122 12 =0=0 =0 122 12 =0=0 =0 2 12 12 =0=0 =0 = = = NNNk NkkNkjT kk Nkkj NNNk N kk TkNkjk kkj Nj NN N kk TjNkjk kjk aUc aUc aUc , completing the proof of (4). The special cases (1) - (5) are easy consequences of (4). Let us now illustrate how the power of T are related to its digraph.  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 65 3. The Digraph of T Suppose 0 T a OU c O T is an (2)(2)nn upper triangular matrix. Correspondingly we select 2n nodes 01 1 , ,,, nn SS SS , and consider the assignment 01 1 0 1112 1 2 1, 1 0 00 0 nn T n nn nn n SS SS Sa Suu Ouc S SO (12) with 12 =[, ,,] T n aaa a and 12 =[, ,,] T n ccc c. We next introduce the digraph induced by T, i.e. =(, )GVE where 01 1 ={, ,,} n VSSS is the vertex set and ={(, )|0} ijij ESSt is the edge set. As usual we say (, ) ij SS E if and only if 0 ij t. A path from j S to k S in G is a sequence of vertices 1 =, j r SS 2,, = rrk l SSS with 1 (, ) rr ii SS E , for =1, ,1il , for some l. If there is a path from j S to k S, we say that j S has access to k S and k S can be reached from j S. We write if (,), ifthereisapathfrom to , if and ij ij ij ij ijijji SS SSE SS SS SSSSSS Let 01 1 =<, >={,,} npp t SSSS be the sandwich set of 0 S and 1n S, i.e., 1 {,,} pp t SS is the set of all the nodes from 1 {, ,} n SS such that 0pi SS 1n S, i.e., i p S can be reached from 0 S and has access to 1n S. Let us now introduce the notation 1 1 1 1 =[,,] , ˆ=, =[,,]. T pp t pp t pp t T pp t aaa UU ccc Then we have the following result. Lemma 3.1. cUaUca TT ˆ =. Proof. If 0 jiji cua , then 0 (, ) i SS, (, ) ij SS, (, j S 1) n SE , thus , ij SS, which implies that =1 =1 == nn Tiijj iijj ij ij aUcaucauc . This completes the proof. □ This following corollaries are the direct consequences of the above lemma. Corollary 3.2. If 01n SS and there is no intermediate node that lies in 1 {, ,} n SS on any path from 0 S to 1n S, then 1) 10 n SS , i.e. 0 , 2) 0= ˆ =cUacUa iTiT Corollary 3.3. cUacUa iTiT ˆ =, for =1, 2,.i We now turn to the main theorem of this section. Theorem 3.4. Let 112 1 1, 0 = 00 n nn n tt t T be nonsingular and 1 = ()= (,...,) n diag Tdiag T D. Then, the following statement are equivalent 1) NN TTD converges. 2) if ij SS , then ||>|| ji , i.e. if there is a path from i S to j S, then ||>|| ji . Proof. We prove the theorem by induction on n. For 2=n, 1 2 =0 T and 1 1/ == 01 N N 2NNN T MDT where 211 212 1112 [1(/)]/()if =/if = N N NN . It is easily seen that the convergence of (2) M implies that of N N 1 . Hence if 21 = , then 0= . Conversely, if 0 , then 1|</| 12 which implies that (2) M converges. Next, assume that the result holds for all triangular matrices of size 1 n or less. Let T be defined as in (12) and set 1 =(, ,..., , )=(, , n diag diag : T D ) which is nonsingular. Consider the vertex set 01 ={ ,,} n VS S and the assignment 01 1 0 () 2 1 1/ / = 01 nn TN N NN N nNN NN n SS SS Sa MOU c SO . (13) 1) 2). Assume that )( 2 N n M converges. Then by induction, both T a OU and Uc O obey the theorem. Suppose ij SS in V. If 1|<| nji we are done since then both endpoints lie in 0 {, ,} n SS or 11 {, ,} n SS . So we only need to consider the case where 0 =SSi and 1 =nj SS, i.e. 01n SS . Subcase (a): There is an intermediate node from  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 66 1 {, ,} n SS, say 01 p n SSS (np 1). Then by the induction hypothesis ||>||>|| p, and we are done. Subcase (b): There is no intermediate node between 0 S and 1n S. In this case 10 n SS , and by Corollary 3.2., 0 and 0= ˆ =cUacUa iTiT for arbitrary i. Since the sandwich set is empty, we see from Lemma 2.2., that [1(/)]/()if =/if = N NNN . (14) Now because we are given that N N converges and 0 , we must have 1|</| . Conversely, assume that ij SS ||>|| ji and assume that the hypothesis holds for matrices of size 1n or less. Since the graph condition also hold for 0 {, , } n SS and 11 {, ,} n SS , it follows by the hypothesis that all the entries in )( 2 N n M converges, with the possible exception of N N /. Consequently, all we have to show is that N N also converges, given the path conditions. Consider 1 2 =0 2 2 =0 1(/) 11(/) = 1 /(1) = N iNi NT i NN i NT i U ac if U NacNi if (15) If 01n SS , then 10 n SS and therefore = 0. Moreover, is empty and the right hand side of (15) is zero, i.e. 0= N N and we are done. So suppose 01n SS and thus ||>|| . In this case 1(/) N converges (possibly to 0 when 0= ). Now if π= then the second term of (15) vanishes by Lemma 2.2. Lastly suppose π , i.e. there are intermediate nodes 1,, p pt SS. From Lemma 2.2., we recall that cUacUa iTiT ˆ = where 11 * ˆ= p p p p tt S OS U. (16) Since for each i, 01 p n i SSS , we know that ||>||>|| i p and thus ) ˆ (|>|U . Hence ˆ (/)<1U which implies that 1 2 =0 ˆˆ i N TT i UU acaIc . To complete the proof we observe that 1 2 =0 ˆi N i N i U also converges because of Lemma 2.1. with / ˆ =UA and iN i )/(= . We at once have, as seen in [3]. Corolla ry 3.5. Let T be an upper triangular matrix and 1 = ()= (,...,) n diag Tdiag T D. If 12 | |>||>>|| n , then NN TTD converges to an upper triangular matrix of diagonal 1. We now turn to the main result in this paper. Our aim is to characterize the convergence of N NAD in terms of the GS factorization of N A. 4. Main Theorem Let us denote the set of increasing sequences of p elements taken from (1, 2,,m) by , 11 ={=( ,,)|1<<} pm pp QIiii im and assume this set , p m Q is ordered lexicographically. Suppose ::=(, 1,...,) s tss t is a subsequence of (1, 2,..., m) and we define 11 :={=(,,)|<<<<} ppp QstUuusuut . Clearly, , =1: pm p QQm . Suppose B is nm matrix of rank r . The determinant of a pp submatrix of A (1minp (, )mn), obtained from A by striking out pm rows and pn columns, is called a minor of order p of A . If the rows and columns retained are given by subscripts (see Householder [9]) 1, =( ,,) p pm IiiQ and 1, =( ,,) p pn J jjQ respectively, then the corresponding pp submatrix and minor are respectively denoted by I J A and )( I J Adet . The minors for which JI = are called the principal minors of A of order p, and the minors with ==(1, 2,,) I Jp are referred to as the leading principal minors of A . Let 1, =( ,,) p pm IiiQ and 1, =( ,,) qqm Jj jQ . For convenience, we denote by mpk QiI 1, ][ the sequences of 1 p elements obtained by striking out the kth element k i; while )( jI denotes the sequences of 1 p elements obtained by adding a new element j after k i, i.e., 1 ()=(,,, ) k I jiij. Note that if jip>, then )( jI is not an element of 1, p m Q because it is no longer an increasing sequence. If mqp , we denote the concaternation 11 (,,, , p iij  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 67 ,) q j of I and J by IJ. It has qp elements. Again, IJ may not be an element of , p qm Q. Since the natural sequence (1, 2,,p) of p elements will be used frequently, we particularly denote this sequence by =(1, 2,,)pp ; while []=(1,pt ,1, 1,,)tt p is simply denoted by tp \. Next recall [2] that the volume )(BVol of a real matrix B, is defined as the product of all the nonzero singular values of B. It is known [2] that 2 ()=|det()| I J Vol BB , (17) where I J B are all r r submatrices of B. In particular, if B has full column rank, then * ()= det()VolBB B. (18) Lastly, suppose 12 =[, ,,] r A aa a is an r n matrix of full column rank and = A YG (19) is its GS factorization so that the columns of 1 =[ ,Yy 2,,] r yy are orthogonal and G is r r upper triangular matrix of diagonal 1. For rk , we define 1 =[ ,,] kk A aa and =() kk VVolA. (20) It follows directly that 2* , =|det()|=det() I kkkk IQ km VAAA . (21) Theorem 4.1. Let A be an r n matrix of rank r and let YGA = be its GS factorization. Then () 2 11 1, =det()det()/ Ik I klll l IQ ln y AAV (22) and * 1( ) 2 det( ) = j j k jk j AA gV . (23) Proof. The result of (22) follows from Theorem 2.1. in [3], while on account of Corollary 2.1. in [3], * =(GY 1* )YYA . Hence we arrive at 22 11 * 22 =1 *\ 2 1 =1 =1 == =(1)det()/ n jj jkj klj lk l jj j njt jt lt lkjj lt VV gya ya VV aa AAV . Because lklt n laa 1= is just the (, tk) element of matrix A A *, we see that *\2 1 =1 =1 =(1)()det( )/ jn jt jt j kltlkjj tl g aaAAV , which is the Laplace expansion along column j of * 1( ) det() j j k AA . Thus * 1( ) 2 det( ) = j j k jk j AA gV , completing the proof. Remark: A different proof of (23) was given in [9, § 1.4]. For a diagonal matrix 1 =( ,...,) n diag ddD, we say that D is decreasing, if 1 |||| n dd. (24) Moreover, D is called locally primitive, if it is decreasing and |=| |= ijij dd dd. (25) It is obvious that we can partition a decreasing matrix D as (1)( ) =(,,) t diag DDD (26) where each () () 1 =(,,) s si ips sdiag ee s D with 1 ||> 2 ||>>|| t . As a special case, if D is locally primitive, then D can be written as 11 =(,,) ptp t diag II D. (27) Now let us define j s j spq 0= = (=1, s t, 0== 00 pq) and 111 :={=(,,)| uiiuu i Qq qq 1<< <} ui q . Next, suppose rn N ijN aA ][= )( is a sequence of r n matrices and let () () ==[][] NN N NNij nrij rr AYG yg (28) be their GS factorization. Suppose B is a r n matrix, we can partition B conformally as D in (26). It is easily verified that the (, )uv element of (, )ij block ij B of B is exactly the 11 (, ) ij quqv element of the whole matrix B. B is said to satisfy condition ( ) if for each uqki 1 = there exists iiuuqqQ : 1 such that 1 det 0 qiu k B . We now have the following theorem. Theorem 4.2. Let N A be a sequence of r n matrices of full column rank with GS factor NNNGYA =. Also suppose D is a diagonal matrix and r D is r r leading submatrix of D. Then 1) N NAD converges to B ~ which satisfies condition ( ) N G converges and N NYD converges to Z which satisfies condition ( ) 2) If in addition D is decreasing, i.e. D satisfies (26), then for uqki 1 = and vql j 1 = ( 1 ji ) 2 () = N Nj klNi k yO d . (29) Proof. 1) The sufficiency is obvious. So let us turn to the necessary part. For 1 =( ,...,) n diag ddD, there exists a permutation Q such that DQQD* = ˆ is decreasing. Meanwhile, by hypothesis and the fact that = NN DA Q  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 68 ** ˆ ˆ ()()= NN N N QDQ QAQDA with * ˆ= N N A QA , it follows that N NAD ˆ ˆ converges. So without loss of generality, we assume that D is decreasing and partition D as (26) and simply consider N NAD. We shall now, without risk of confusion, abbreviate the set 1111 :={=(,,)|<<<<} uiiuu iui Qq qqq to u Q and for ),...,(=1s iiI set s iiI dd 1 = . It at once follows that 1 ||=|| qk iu . (30) We now have from (23) () *2 1() 1() , 2 , 1( ) 11 11 =det( ) / det( )det( ) = (from Cauchy-Binet) det(() ) ()det()det() = ()det() Nk klN Nklk II NkNkl IQ kn I Nk IQ kn II NkNkl Iq QIq Q iuiu Nk Iq QIq Q iuiu gAAV AA A AA A 2 11 1() 1 2 1 1 2 1 11() 1 ) det( )det( ) = det(( )) det( ) det( ) () () = I qq iu iu NkNk l QIqQ uui u qiu Nk QIqQ uui u N q qiu iu Nk l Nk i NN Qi kk uu Q uu AA A A Ao 22 1 1 det( ) () N qiu Nk i Ni k Ao ’ On account of (30), this is equal to 2 1 11() 1 11 22 1 1 1 det( ) det( ) ()() det( ) () N q qiu iu Nk l Nk i NN Qi qq uu iuiu N qiu Nk i N Qi q uu iu A Ao Ao . (31) Since N NAD convergence, so does the submatrices ui q IN NAD 1 )( and their determinant and hence 1 11 1 1 1 det( )=det[()()] () =det() qiuqq N NI iu iu NI q Niu qiu q Niu NI ADA DA converges, say, to ui q IN Adet 1 ) ~ (. We have that consequently (31) converges to 11 1() 2 1 det( )det( ) det( ) qq iu iu kkl Q uu qiu k Q uu AA A , in which the denominator is nonzero as A ~ satisfies condition ( ). Hence N G converges and this implies that 1 =NN N N NGADYD also converges. 2) Lastly, what remains is to show that N rN DY converges if D is decreasing, i.e. D satisfies (26). Now for uqk i 1 =, vql j 1 = (1 ji ), it follows that () 1 () 1, 2 1 () 1 \\ 11 2 1 11 11 det( )det( ) 1 = ()det()det() 1 = ()det() det( ) = Ik I Nl Nl NIQ ln klNN kk l Ik I Nl Nl Iq kQ Iq kQ jv jv NI kNk Iq QIq Q jv jv qj Nl Q vv AA y dd V AA dA A \() \ 11 1 \ 1 2 11 1 11 11 \() \ 11 1 11 det( ) (det(()) ) det( )det( ) () () 1 = kk qk vjv Nl Iq kQ jv q Njv lNl QIqQ vv jv qk kqk jv jv Nl Nl NN QIq ll vv j N k A dA AA d 1 2 11 1 1 11 11 det(( )) ) Qv qjv Nl N QIqQ l vv jv A  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 69 \() \2 11 1 1 21 \ 22 2 11 1 1 11 11 2 det( )det( ) ()( ) || =||det(( )) () || =| qk kqkN jvjvj NlNl NN NQi llk vv lNN qjv kj Nl N Qj q vv jv N j i AA o d dAo \() \2 11 1 1 1 \() \ 11 22 2 11 1 1 11 11 () det() ()() = |det(( )) () qk kqkN jvjv j NlNl NN Qi qk kqk vv jvjvj NN qjv j Nl N Qj q vv jv det AAo O Ao 2N i This completes the proof of 2). As a consequence of the above theorem we have Corollary 4.3. Suppose D is decreasing and N A's have orthogonal columns. If N NAD converges to B ~ which satisfies condition ( ), then for uqk i 1 = and vql j 1 = (1 ji ) 2 () = N Nj klNi k aO d Proof. In this case the GS factorization of N A are rNNIAA =. So the result is the direct consequnce of Theorem 4.2. Lemma 4.4. Suppose D is decreasing and N A's are of full column rank. If N NN pqN ADBB =][=)( converges, say, to B ~ , then N rN DA converges iff 1) () () , , 11 () =0= j j j juvquq vuv jj BB (=1,j ,t) 2) If ji <, then ()( ) () () , 11 Nij iN N iuv quq v ij j Be converges. Proof. It is not difficult to see that the 11 (, ij quq )v element of N rN DA is , 11 ()( ) () () , 11 () = N Nr q uqv ij Nij iN N iuv quq v ij j AD Be . (32) As () , 11 N quqv ij B converges and 1< j i for ji >, it follows that (32) converges to zero in this case. Hence N rN DA converges iff i) and ii) hold. Suppose B is an nn matrix and correspondingly there are n nodes 12 , ,,n SS S. We say that B is indecomposable if for every i and j either ij SS or j i SS . Next we have Theorem 4.5. Let N A be of full column rank and NNN GYA= be its GS factorization. Suppose N N DA converges, say, to B ~ which satisfies ( ). Then the following statement are true 1) If N rN DY converges to 1 =(,...,) s diag ZZ Z in which each block i Z ~ (=1,, is) is indecomposable, then s ps sID = )( , =1, , s t 2) If s ps sID = )( , =1, , s t, then N rN DY converges. Proof. From Theorem 4.2., the convergence of = N B N N DA implies the convergence of N G and N NYD. Suppose ZYD N N ~ . Then it follows, on account of Lemma 4.4, thatN rN DY converges to 1 =(,,diag Z Z ) s Z if a) () () , , 11 () =0= j j j uvq uqvuv jj ZZ , and b) if ji <, then , 11 ()( ) () , 11 () =() N Nr q uqv ij Nij iN N iuv Nq uqv ij j YD DY e converges to zero. Now Corollary 4 says that for ji < () 2 , 11 , 11 1 () == NN quq v ij j NNq uqvN ij i qu i Y DY O d and so b) is automatically statisfied in this case. Therefore N rN DY converges iff a) holds. Since each i Z ~ is indecomposable, for arbitrary (, )uv there exists a path either from u i q S 1 to v i q S 1 or vice verse. In either case this implies that )()( =i v i u for any u and v. We complete the proof of 1). 2) This time D is locally primitive, so we have )()(=j v j u (=1,,jt) and hence  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 70 , 11 ()( ) () , 11 , 11 () ()if = ()if = N Nr q uqv ij Nij iN N iuv Nq uqv ij j NNq uq v jj YD DYei j DY ji By hypothesis, the above converges for ij=. The convergence for ji > is obvious; while the convergence for ji < can be easily achieved by noticing that () 2 , 11 , 11 1 () == NN quq v ij j NNq uqvN ij i qu i Y DY O d . Remark. From Theorem 4.5. we know that in the case of multiple eigenvalues, if uqk i 1 =, then 1(1) 11 () 1 1 [0,,0, ,,, 0,,0] qqq iii NT kqq Nii i ypp d . Let us now turn to the applications of this theorem. Our first application is the following result gives the general convergence result of power scaled triangular matrix. Corollary 4.6. Let D be diagonal and T be upper triangular. Then NN T D converges if and only if 1) Either 1|</| ii d or iid= for each i 2) If ||>| | ijij SS . Proof. Let N NTA =. This time the GS factorization for N NTA = becomes )( NN T N TTDD and from Theorem 4.2., NN T D converges if and only if both NN TN TDG = and N T NDD converge. The convergence of N T NDD is equivalent to 1); while the convergence of NN TN TDG =, on account of Theorem 3.4., is exactly the same as the path condition 2). A relevant application of Theorem 3.4. is to the question of subspace iteration. Armed with Theorem 3.4. we can get a sharper theoretical result than was previously given. 5. Application to the Subspace Iteration Next, suppose T is an block upper diagonal matrix of the form 1121 1 22 2 0 = 00 pt pt tp t IT T IT I T, (33) where |>||>|>|| 21 t . Let =()=diag Tdiag T D 11 (,,) ptp t I I and denote r rT r TDD )(= . Then from Theorem 3.4. it follows that NN TTD converges. Assume B is r n matrix of full column rank. Therefore i t ipnr 1= = and without loss of generality we can write wpri s i 1= = for some 1 s pw . Thus we can write 11 1 =(,,, ) rpspsw s TdiagII I . We now have Corolla ry 5.1 . Let T be nn upper triangular matrix defined as in (33), and let B be r n matrix whose columns are linearly independent. If NN NGYBT= is its GS factorization, then the followings hold 1) BTD NN T converges, say, to a limit A ~ . 2) N r TN DY converges to 0 P, where 1 =(,PdiagP ,, ) s PP and each i P (=1, , is) is a ii pp matrix and P ~ is a wps 1 matrix. Proof. The result follows by simply choosing BTA N N= in Theorem 4.2. Let us now turn to the question of subspace iteration for a restricted class of matrices. Suppose that * =VTVA (34) is nn matrix, where V is unitary and T is as in (33). Then using the same i P as above we have Corollary 5.2. Suppose that A is an nn matrix which satisfies (34). let 0 Y be an r n matrix whose columns are linearly independent and }{N Y be sequence of matrices defined by the following factorization 0= N N N A YYG. Then 11 1 [,,, ] N NTsss r YDVPVP VP . (35) Proof. Since 0= N N N A YYG, it follows that * 0 ()= N N N VTV YYG. Partition 1 =[ ,,] t VV V conformally to that of T in (33) and set 0 * =YVB , then * =( ) N N N TB VYG. (36) It is easily seen that the columns of N YV * are orthogonal. Therefore (36) can be regarded as the GS factorization of B T N. From Corollary 5.1., we have that for 1 =[ ,,] t VV V * 0 N NT r P VYD , which is equivalent to  X. Z. CHEN ET AL. Copyright © 2011 SciRes. AJCM 71 11 1 ==[,,, ] 0 N NTrss s r P YDVVPVPVP VP . 6. References [1] F. L. Bauer, “Das Verfahren der Treppeniteration und verwandte Verfahren zur Lösung Algebraischer Eigen- wertprobleme,” Zeitschrift für Angewandte Mathe- matik und Physik, Vol. 8, No. 3, 1957, pp. 214-235. doi:10.1007/BF01600502 [2] A. Ben-Israel, “A Volume Associated with nm Matrices,” Linear Algebra and Its Applications, Vol. 167, No. 1, pp. 87-111, 1992. doi:10.1016/0024-3795(92)90340-G [3] X. Chen and R. E. Hartwig, “On Simultaneous Iteration for Computing the Schur Vectors of Matrices,” Pro- ceedings of the 5th SIAM Conference on Applied Linear Algebra, Snowbird, June 13-19, 1994, pp. 290-294. [4] X. Chen and R. E. Hartwig, “On the Convergence of Power Scaled Cesaro Sums,” Linear Algebra and Its Applications, Vol. 267, pp. 335-358, 1997. doi:10.1016/S0024-3795(97)80056-0 [5] X. Chen and R. E. Hartwig, “The Semi-iterative Method Applied to the Hyperpower Iteration,” Numerical Linear Algebra with Applications, Vol. 12, No. 9, pp. 895-910, 2005. doi:10.1002/nla.429 [6] X. Chen and R. E. Hartwig, “The Picard Iteration and Its Application,” Linear and Multi-linear Algebra, Vol. 54, No. 5, 2006, pp. 329-341. doi:10.1080/03081080500209703 [7] R. A. Horn and C. R. Johnson, “Matrix Analysis,” Cambridge University Press, Cambridge, 1985. [8] R. A. Horn and C. R. Johnson, “Topics in Matrix Ana- lysis,” Cambridge University Press, Cambridge, 1991. [9] A. S. Householder, “The Theory of Matrices in Nu- merical Analysis,” Dover, New York, 1964. [10] B. N. Parlett and W. G. Poole, Jr., “A Geometric Theory for the QR, LU, and Power Iterations,” SIAM Journal on Numerical Analysis, Vol. 10, No. 2, 1973, pp. 389-412. doi:10.1137/0710035 [11] H. Rutishauser, “Simultaneous Iteration Method for Symmetric Matrices,” Numerische Mathematik, Vol. 16, No. 3, 1970, pp. 205-223. doi:10.1007/BF02219773 [12] Y. Saad, “Numerical Methods for Large Eigenvalue Problems,” Manchester University Press, Manchester, 1992. [13] G. W. Stewart, “Methods of Simultaneous Iteration for Calculating Eigenvectors of Matrices” In: John J. H. Miller, Eds., Topics in Numerical Analysis II, Academic Press, New York, 1975, pp. 185-169. [14] G. R. Wang, Y. Wei and S. Qiao, “Generalized Inverses: Theory and Computations,” Science Press, Beijing/New York, 2004. [15] D. S. Watkins, “Understanding the QR Algorithm,” SIAM Review, Vol. 24, No. 4, 1982, pp. 427-440. doi:10.1137/1024100 [16] D. S. Watkins, “Some Perspectives on the Eigenvalue Problem,” SIAM Review, Vol. 35, No. 3, 1993, pp. 430-470, doi:10.1137/1035090 [17] J. H. Wilkinson, “The Algebraic Eigenvalue Problem,” Oxford University Press (Clarendon), London and New York, 1964. |