American Journal of Computational Mathematics, 2014, 4, 474-481
Published Online December 2014 in SciRes. http://www.scirp.org/journal/ajcm
http://dx.doi.org/10.4236/ajcm.2014.45040

How to cite this paper: Sohaly, M.A. (2014) Mean Square Heun's Method Convergent for Solving Random Differential Initial Value Problems of First Order. American Journal of Computational Mathematics, 4, 474-481. http://dx.doi.org/10.4236/ajcm.2014.45040

Mean Square Heun's Method Convergent for Solving Random Differential Initial Value Problems of First Order

M. A. Sohaly
Department of Mathematics, Faculty of Science, Mansoura University, Mansoura, Egypt
Email: m_stat2000@yahoo.com

Received 23 September 2014; revised 20 October 2014; accepted 12 November 2014

Copyright © 2014 by author and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY). http://creativecommons.org/licenses/by/4.0/

Abstract

This paper deals with the construction of Heun's method for random initial value problems. Sufficient conditions for its mean square convergence are established. The main statistical properties of the approximation processes are computed in several illustrative examples.

Keywords

Stochastic Partial Differential Equations, Mean Square Sense, Second Order Random Variable, Finite Difference Scheme

1. Introduction

A random differential equation (RDE) is an ordinary differential equation (ODE) with random inputs. RDEs can model the unpredictable real-life behavior of continuous systems and are important tools in modeling complex phenomena. They arise in many physics and engineering applications, such as wave propagation and diffusion through heterogeneous random media. Additional examples can be found in materials science, chemistry, biology, and other areas. However, reaching a solution of these equations in a closed form is not always possible or even easy.
Due to the complex nature of RDEs, numerical simulations play an important role in studying this class of differential equations. For this reason, a few numerical and analytical methods have been developed for simulating RDEs. Random solutions are always studied in terms of their statistical measures.

This paper studies the following random differential initial value problem (RIVP):

$$\frac{dX(t)}{dt} = f(t, X(t)), \qquad X(t_0) = X_0. \tag{1}$$

Randomness may exist in the initial value, in the differential operator, or in both. In [1] [2], the authors discussed the general order conditions, and a global convergence proof was given for stochastic Runge-Kutta methods applied to stochastic ordinary differential equations (SODEs) of Stratonovich type. In [3] [4], the authors discussed the random Euler method and the conditions for its mean square convergence. In [5], the authors considered a very simple adaptive algorithm based on controlling only the drift component of a time step; they noted that if the drift is not linearly bounded, explicit fixed time step approximations, such as the Euler-Maruyama scheme, may fail to be ergodic. Platen [6] discussed discrete time strong and weak approximation methods that are suitable for different applications. El-Tawil and Sohaly [12] discussed the mean square convergent Euler and Runge-Kutta methods for random initial value problems. Other numerical methods are discussed in [7]-[12].

In this paper the random Heun's method is used to obtain an approximate solution for Equation (1). This paper is organized as follows. In Section 2, some important preliminaries are discussed. In Section 3, random differential equations are discussed. In Section 4, the convergence of the random Heun's method is discussed. Section 5 presents the solution of a numerical example of a first order random differential equation using the random Heun's method, showing the convergence of the numerical solutions to the exact ones (if possible).
The general conclusions are presented in Section 6.

2. Preliminaries

Definition 1 [13]. Let us consider the properties of a class of real r.v.'s $X$ whose second moments, $E[X^2]$, are finite. In this case, they are called "second order random variables" (2-r.v.'s).

The Convergence in Mean Square [13]

A sequence of r.v.'s $\{X_n\}$ converges in mean square (m.s.) to a random variable $X$ if:

$$\lim_{n\to\infty} \|X_n - X\| = 0, \qquad \|X\| = \left(E[X^2]\right)^{1/2},$$

i.e. $X_n \xrightarrow{\text{m.s.}} X$, or $\operatorname*{l.i.m.}_{n\to\infty} X_n = X$, where l.i.m. is the limit in the mean square sense.

3. Random Initial Value Problem (RIVP)

Random differential equations of the form

$$\dot X(t) = f(X(t), t), \qquad t \in T = [t_0, t_1], \qquad X(t_0) = X_0, \tag{2}$$

where $X_0$ is a random variable and the unknown $X(t)$ as well as the right-hand side $f(X, t)$ are stochastic processes defined on the same probability space $(\Omega, \mathcal{F}, P)$, are powerful tools to model real problems with uncertainty.

Definition 2 [6] [7].

• Let $g: T \to L_2$ be an m.s. bounded function and let $h > 0$. Then the "m.s. modulus of continuity of $g$" is the function

$$w(g, h) = \sup_{|t - t^*| \le h} \|g(t) - g(t^*)\|, \qquad t, t^* \in T.$$

• The function $g$ is said to be m.s. uniformly continuous in $T$ if:

$$\lim_{h \to 0} w(g, h) = 0.$$

Note that the limit depends only on $h$, because $g$ is defined at every $t$, so we can write $w(g, h) = w(h)$.

In problem (2), convergence depends on the right-hand side, i.e. on $f(X(t), t)$, so we apply the previous definition to $f(X(t), t)$. Let $f(X, t)$ be defined on $S \times T$, where $S$ is a bounded set in $L_2$. Then we say that $f$ is "randomly bounded uniformly continuous" in $S$ if

$$\lim_{h \to 0} w(f(x, \cdot), h) = 0, \qquad x \in S$$

(note that $w(f(x, \cdot), h) = w(h)$).

A Random Mean Value Theorem for Stochastic Processes

The aim of this section is to establish a relationship between the increment $X(t) - X(t_0)$ of a 2-s.p. and its m.s. derivative $\dot X(\xi)$ for some $\xi$ lying in the interval $[t_0, t]$, for $t > t_0$. This result will be used in the next section to prove the convergence of the random Heun's method.

Lemma (3.2) [6] [7]. Let $Y(t)$ be a 2-s.p., m.s. continuous on the interval $[t_0, t]$. Then there exists $\xi \in [t_0, t]$ such that:

$$\int_{t_0}^{t} Y(s)\,ds = Y(\xi)(t - t_0), \qquad t_0 < \xi < t. \tag{3}$$

Theorem (3.3) [6] [7]. Let $X(t)$ be an m.s. differentiable 2-s.p. in $]t_0, t[$ and m.s.
continuous in $[t_0, t]$. Then there exists $\xi \in\, ]t_0, t[$ such that:

$$X(t) - X(t_0) = \dot X(\xi)(t - t_0).$$

4. The Convergence of Random Heun's Scheme

In this section we are interested in the mean square convergence, in the fixed station sense, of the random Heun's method defined by:

$$X_{n+1} = X_n + \frac{h}{2}\Big[f(X_n, t_n) + f\big(X_n + h f(X_n, t_n),\, t_{n+1}\big)\Big], \qquad X(t_0) = X_0, \quad n \ge 0, \tag{4}$$

where $X_n$ and $f(X_n, t_n)$ are 2-r.v.'s, $h = t_{n+1} - t_n$, $t_n = t_0 + nh$, and $f: S \times T \to L_2$, $S \subset L_2$, satisfies the following conditions:

C1: $f(X, t)$ is randomly bounded uniformly continuous.

C2: $f(X, t)$ satisfies the m.s. Lipschitz condition:

$$\|f(x, t) - f(y, t)\| \le k(t)\,\|x - y\|, \qquad \text{where } \int_{t_0}^{t_1} k(t)\,dt < \infty. \tag{5}$$

Under hypotheses C1 and C2, we are interested in the m.s. convergence to zero of the error:

$$e_n = X_n - X(t_n), \tag{6}$$

where $X(t)$ is the theoretical solution 2-s.p. of problem (2) and $t = t_n = t_0 + nh$.

Taking into account (2) and Th (3.3): from (2), at $t = t_\xi$ we have $\dot X(t_\xi) = f(X(t_\xi), t_\xi)$. (Note: $t_\xi$ denotes the intermediate point $\xi$.) From Th (3.3) at $\xi = t_\xi$ we then obtain:

$$X(t) - X(t_0) = \dot X(t_\xi)(t - t_0) \;\Rightarrow\; X(t) - X(t_0) = f(X(t_\xi), t_\xi)(t - t_0).$$

We work on the interval $[t_n, t_{n+1}]$. In problem (2) the starting point was $t_0$; here the starting point is $t_n$, since each step of Heun's method depends on the previous solution. Using $X(t_n)$ instead of $X(t_0)$ and $X(t_{n+1})$ instead of $X(t)$, problem (2) takes the final form:

$$X(t_{n+1}) = X(t_n) + h\, f(X(t_\xi), t_\xi) \qquad \text{for some } t_\xi \in (t_n, t_{n+1}). \tag{7}$$

The solution of problem (2) at $t = t_{n+1}$ is $X(t_{n+1})$, and the solution of Heun's method (4) is $X_{n+1}$. We can then define the error:

$$e_{n+1} = X_{n+1} - X(t_{n+1}).$$

By (4) and (7) it follows that:

$$e_{n+1} = X_n + \frac{h}{2}\Big[f(X_n, t_n) + f\big(X_n + h f(X_n, t_n), t_{n+1}\big)\Big] - X(t_n) - \frac{h}{2} f(X(t_\xi), t_\xi) - \frac{h}{2} f(X(t_\xi), t_\xi).$$

Then we obtain:

$$e_{n+1} = \big(X_n - X(t_n)\big) + \frac{h}{2}\Big\{f(X_n, t_n) - f(X(t_\xi), t_\xi)\Big\} + \frac{h}{2}\Big\{f\big(X_n + h f(X_n, t_n), t_{n+1}\big) - f(X(t_\xi), t_\xi)\Big\}.$$

Taking the norm of both sides:

$$\|e_{n+1}\| \le \|X_n - X(t_n)\| + \frac{h}{2}\big\|f(X(t_\xi), t_\xi) - f(X_n, t_n)\big\| + \frac{h}{2}\big\|f\big(X_n + h f(X_n, t_n), t_{n+1}\big) - f(X(t_\xi), t_\xi)\big\|. \tag{8}$$

For the first difference in (8):

$$\begin{aligned}
\big\|f(X(t_\xi), t_\xi) - f(X_n, t_n)\big\| \le{}& \big\|f(X(t_\xi), t_\xi) - f(X(t_\xi), t_n)\big\| + \big\|f(X(t_\xi), t_n) - f(X(t_n), t_n)\big\| \\
&+ \big\|f(X(t_n), t_n) - f(X_n, t_n)\big\|.
\end{aligned} \tag{9}$$

Since the theoretical solution $X(t)$ is m.s. bounded in $T$, and under hypotheses C1 and C2, we have:

• $\big\|f(X(t_\xi), t_\xi) - f(X(t_\xi), t_n)\big\| \le w(h)$.

• $\big\|f(X(t_\xi), t_n) - f(X(t_n), t_n)\big\| \le k(t_n)\, M h$, (10)

where $k(t_n)$ is the Lipschitz constant (from C2). Here Th (3.3), $X(t) - X(t_0) = \dot X(\xi)(t - t_0)$, is applied with the two points $t_n$ and $t_\xi$, which gives

$$\|X(t_\xi) - X(t_n)\| \le M\,|t_\xi - t_n| \le M h, \qquad \text{where } M = \sup_{t \in T} \|\dot X(t)\| \text{ and } |t_\xi - t_n| \le h.$$

• $\big\|f(X(t_n), t_n) - f(X_n, t_n)\big\| \le k(t_n)\,\|X(t_n) - X_n\| = k(t_n)\,\|e_n\|$.

Substituting in (9) we have:

$$\big\|f(X(t_\xi), t_\xi) - f(X_n, t_n)\big\| \le w(h) + k(t_n)\, M h + k(t_n)\,\|e_n\|. \tag{11}$$

For the second difference in (8):

$$\begin{aligned}
\big\|f\big(X_n + h f(X_n, t_n), t_{n+1}\big) - f(X(t_\xi), t_\xi)\big\| \le{}& \big\|f(X(t_\xi), t_\xi) - f(X(t_\xi), t_{n+1})\big\| + \big\|f(X(t_\xi), t_{n+1}) - f(X(t_{n+1}), t_{n+1})\big\| \\
&+ \big\|f(X(t_{n+1}), t_{n+1}) - f\big(X_n + h f(X_n, t_n), t_{n+1}\big)\big\|.
\end{aligned}$$

As before:

• $\big\|f(X(t_\xi), t_\xi) - f(X(t_\xi), t_{n+1})\big\| \le w(h)$.

• $\big\|f(X(t_\xi), t_{n+1}) - f(X(t_{n+1}), t_{n+1})\big\| \le k(t_{n+1})\, M h$,

where $k(t_{n+1})$ is the Lipschitz constant (from C2) and Th (3.3) is applied with the two points $t_{n+1}$ and $t_\xi$, so that $\|X(t_\xi) - X(t_{n+1})\| \le M h$.

• For the last term, by C2 and the triangle inequality:

$$\begin{aligned}
\big\|f(X(t_{n+1}), t_{n+1}) - f\big(X_n + h f(X_n, t_n), t_{n+1}\big)\big\| &\le k(t_{n+1})\,\big\|X(t_{n+1}) - X_n - h f(X_n, t_n)\big\| \\
&\le k(t_{n+1})\Big[\|X(t_{n+1}) - X(t_n)\| + \|X(t_n) - X_n\| + h\,\|f(X_n, t_n)\|\Big] \\
&\le k(t_{n+1})\big(\|e_n\| + 2hM\big),
\end{aligned}$$

where $\|X(t_{n+1}) - X(t_n)\| \le M h$ by Th (3.3) and $\|f(X_n, t_n)\|$ is also taken to be bounded by $M$.

Hence:

$$\big\|f\big(X_n + h f(X_n, t_n), t_{n+1}\big) - f(X(t_\xi), t_\xi)\big\| \le w(h) + 3k(t_{n+1})\, M h + k(t_{n+1})\,\|e_n\|.$$

Substituting this bound and (11) in (8) we have:

$$\begin{aligned}
\|e_{n+1}\| &\le \|e_n\| + \frac{h}{2}\Big[w(h) + k(t_n) M h + k(t_n)\|e_n\|\Big] + \frac{h}{2}\Big[w(h) + 3k(t_{n+1}) M h + k(t_{n+1})\|e_n\|\Big] \\
&= \|e_n\|\Big[1 + \frac{h}{2}\big(k(t_n) + k(t_{n+1})\big)\Big] + \frac{h}{2}\Big[2w(h) + h\,k(t_n) M + 3h\,k(t_{n+1}) M\Big].
\end{aligned}$$

For brevity, write $a_n = 1 + \frac{h}{2}\big(k(t_n) + k(t_{n+1})\big)$ and $b_n = \frac{h}{2}\big[2w(h) + h\,k(t_n) M + 3h\,k(t_{n+1}) M\big]$, so that $\|e_{n+1}\| \le a_n \|e_n\| + b_n$. Applying this bound repeatedly to $\|e_n\|, \|e_{n-1}\|, \ldots, \|e_1\|$:

$$\|e_{n+1}\| \le a_n a_{n-1}\,\|e_{n-1}\| + a_n b_{n-1} + b_n \le \cdots$$

Finally, we have:

$$\|e_{n+1}\| \le \|e_0\| \prod_{i=0}^{n} a_{n-i} + \sum_{j=0}^{n} b_j \prod_{i=j+1}^{n} a_i,$$

where an empty product is equal to 1.

Taking into account that $e_0 = X_0 - X(t_0) = 0$, keeping $t_n = t_0 + nh$ fixed, and taking the limit as $h \to 0$, $n \to \infty$: by C1, $w(h) \to 0$, so each $b_j$ is of order $h\,(w(h) + h)$, while the products of the factors $a_i$ stay bounded provided $k$ is bounded on $T$. The sum of the $n + 1$ terms therefore tends to zero, and we have:

$$\lim_{h \to 0} \|e_{n+1}\| = 0,$$

i.e. $\{e_n\}$ converges in m.s. to zero as $h \to 0$; hence $X_n \to X(t_n)$ in the m.s. sense.

5. Case Study

Example: Solve the problem [...]. The theoretical solution is: [...].

The approximations:

| h (step size) | Exact solution | Heun's method | Error of Heun's method | Error of Euler method [12] | Error of Runge-Kutta method [12] |
|---|---|---|---|---|---|
| 0.25 | 1318.179242 | 1306.250000 | 11.929242 | 68.179242 | 156.820758 |
| 0.125 | 1140.431227 | 1128.515625 | 1.368727 | 15.431227 | 97.068773 |
| 0.025 | 1025.572765 | 1025.562500 | 0.010265 | 0.572765 | 21.927235 |
| 0.0025 | 1002.505635 | 1002.505625 | 0.000010 | 0.005635 | 2.244365 |
| 0.25 | 1306.122449 | 1296.875000 | 9.247449 | 56.122449 | 131.377551 |
| 0.125 | 1137.777778 | 1136.718750 | 1.059028 | 12.777778 | 80.972222 |
| 0.025 | 1025.572765 | 1025.468750 | 0.007936 | 0.476686 | 18.273314 |
| 0.0025 | 1002.505635 | 1002.504688 | 0.000007 | 0.004695 | 1.870305 |
| 0.25 | 1764.823762 | 1687.500000 | 77.323762 | 264.823762 | 485.176238 |
| 0.125 | 1305.122500 | 1296.875000 | 8.247500 | 55.122500 | 319.877500 |
| 0.025 | 1051.933860 | 1051.875000 | 0.058860 | 1.933860 | 73.066140 |
| 0.0025 | 1005.018808 | 1005.018750 | 0.000058 | 0.018808 | 7.481192 |

The results in the table show that, as the step size $h$ decreases, Heun's method gives a better approximation than the Euler and Runge-Kutta methods of [12] for solving random initial value problems.

6. Conclusion

First order random differential initial value problems can be solved numerically using the random Heun's method in the mean square sense. The convergence of the presented numerical technique has been proven in the mean square sense. The results of the paper have been illustrated through an example.

References

[1] Burrage, K. and Burrage, P.M. (1996) High Strong Order Explicit Runge-Kutta Methods for Stochastic Ordinary Differential Equations. Applied Numerical Mathematics, 22, 81-101. http://dx.doi.org/10.1016/S0168-9274(96)00027-X
[2] Burrage, K. and Burrage, P.M. (1998) General Order Conditions for Stochastic Runge-Kutta Methods for Both Commuting and Non-Commuting Stochastic Ordinary Equations. Applied Numerical Mathematics, 28, 161-177. http://dx.doi.org/10.1016/S0168-9274(98)00042-7
[3] Cortes, J.C., Jodar, L. and Villafuerte, L. (2007) Numerical Solution of Random Differential Equations, a Mean Square Approach. Mathematical and Computer Modeling, 45, 757-765. http://dx.doi.org/10.1016/j.mcm.2006.07.017
[4] Cortes, J.C., Jodar, L.
and Villafuerte, L. (2006) A Random Euler Method for Solving Differential Equations with Uncertainties. Progress in Industrial Mathematics at ECMI.
[5] Lamba, H., Mattingly, J.C. and Stuart, A. (2007) An Adaptive Euler-Maruyama Scheme for SDEs, Convergence and Stability. IMA Journal of Numerical Analysis, 27, 479-506. http://dx.doi.org/10.1093/imanum/drl032
[6] Platen, E. (1999) An Introduction to Numerical Methods for Stochastic Differential Equations. Acta Numerica, 8, 197-246. http://dx.doi.org/10.1017/S0962492900002920
[7] Higham, D.J. (2001) An Algorithmic Introduction to Numerical Simulation of SDE. SIAM Review, 43, 525-546. http://dx.doi.org/10.1137/S0036144500378302
[8] Talay, D. and Tubaro, L. (1990) Expansion of the Global Error for Numerical Schemes Solving Stochastic Differential Equation. Stochastic Analysis and Applications, 8, 483-509. http://dx.doi.org/10.1080/07362999008809220
[9] Burrage, P.M. (1999) Numerical Methods for SDE. Ph.D. Thesis, University of Queensland, Brisbane.
[10] Kloeden, P.E., Platen, E. and Schurz, H. (1997) Numerical Solution of SDE through Computer Experiments. 2nd Edition, Springer, Berlin.
[11] El-Tawil, M.A. (2005) The Approximate Solutions of Some Stochastic Differential Equations Using Transformation. Applied Mathematics and Computation, 164, 167-178. http://dx.doi.org/10.1016/j.amc.2004.04.062
[12] El-Tawil, M.A. and Sohaly, M.A. (2011) Mean Square Numerical Methods for Initial Value Random Differential Equations. Open Journal of Discrete Mathematics, 1, 66-84. http://dx.doi.org/10.4236/ojdm.2011.12009
[13] Kloeden, P.E. and Platen, E. (1999) Numerical Solution of Stochastic Differential Equations. Springer, Berlin.
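
The random Heun scheme (4) of Section 4 can be sketched numerically as follows. This is only an illustrative sketch under stated assumptions, not the computation behind the table in Section 5: the test problem $\dot X = -A X$ with $A$ uniform on $[0, 1]$ and $X_0$ normal with mean 1 and standard deviation 0.1 is hypothetical, the names `heun_path` and `ms_error` are invented for this example, and the m.s. norm of the error is estimated by Monte Carlo sampling, which is a substitute for the analytical moment computations of the paper.

```python
import math
import random


def heun_path(f, x0, t0, t_end, n_steps):
    """Apply Heun's scheme (4) to one realization of the random problem."""
    h = (t_end - t0) / n_steps
    x, t = x0, t0
    for _ in range(n_steps):
        slope_start = f(x, t)                      # f(X_n, t_n)
        slope_end = f(x + h * slope_start, t + h)  # f(X_n + h f(X_n, t_n), t_{n+1})
        x += 0.5 * h * (slope_start + slope_end)
        t += h
    return x


def ms_error(n_steps, n_samples=2000, seed=0):
    """Monte Carlo estimate of ||X_n - X(t_n)|| at t = 1 (hypothetical problem)."""
    rng = random.Random(seed)  # fixed seed: same samples for every step size
    total = 0.0
    for _ in range(n_samples):
        a = rng.uniform(0.0, 1.0)   # assumed random coefficient A
        x0 = rng.gauss(1.0, 0.1)    # assumed random initial value X_0
        approx = heun_path(lambda x, t: -a * x, x0, 0.0, 1.0, n_steps)
        exact = x0 * math.exp(-a)   # exact solution of dX/dt = -A X at t = 1
        total += (approx - exact) ** 2
    return math.sqrt(total / n_samples)


if __name__ == "__main__":
    for n in (10, 20, 40):
        print(n, ms_error(n))
```

Under these assumptions the estimated error should shrink as the step size is reduced, in line with the m.s. convergence established in Section 4. The same seed is reused for every step size so that the comparison is made on identical samples.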

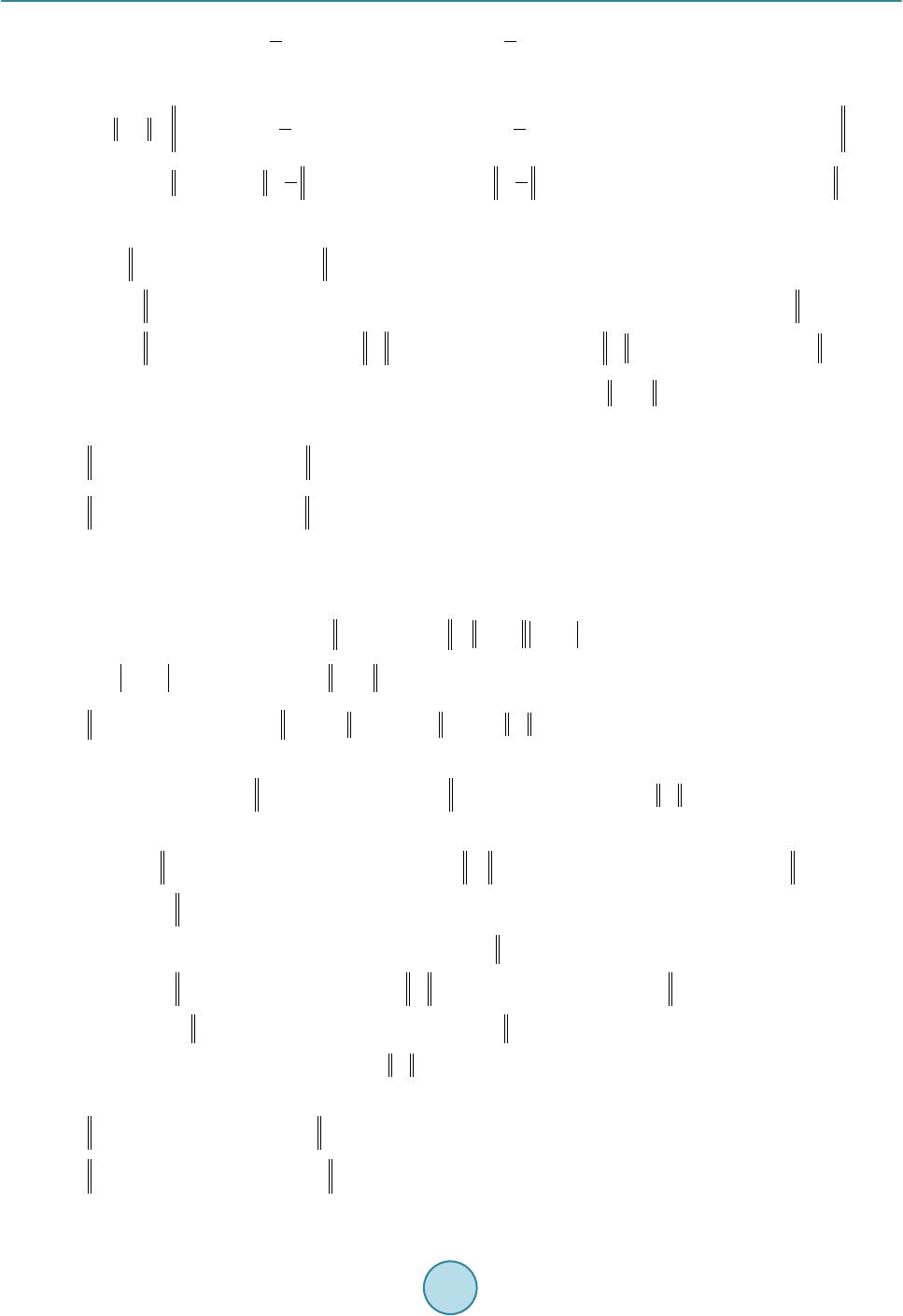

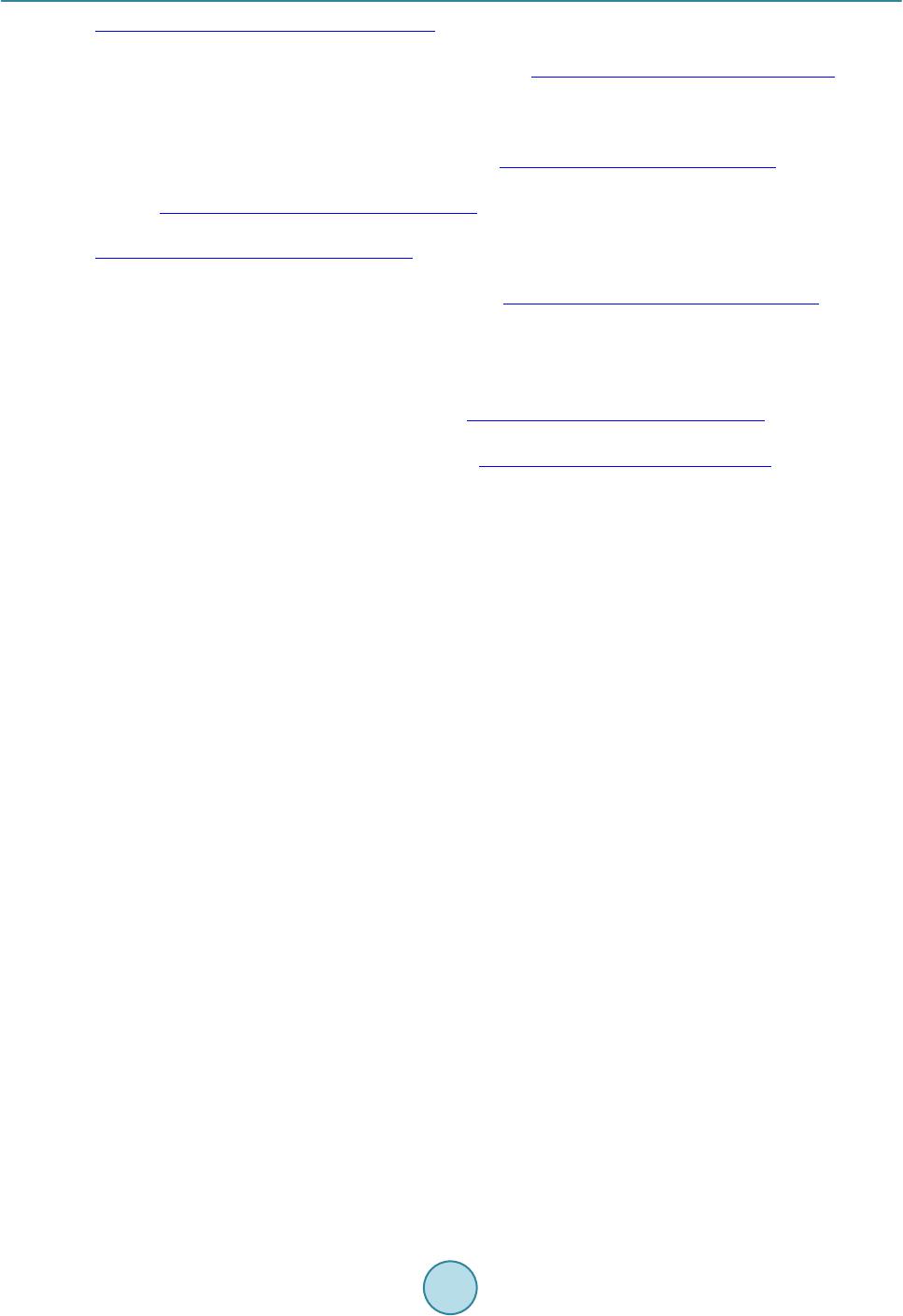

|