Applied Mathematics

Vol.06 No.10(2015), Article ID:59733,14 pages

10.4236/am.2015.610152

An Implicit Smooth Conjugate Projection Gradient Algorithm for Optimization with Nonlinear Complementarity Constraints

Cong Zhang1*, Limin Sun1, Zhibin Zhu2, Minglei Fang3

1Huarui College, Xinyang Normal University, Xinyang, China

2School of Mathematics & Computational Science, Guilin University of Electronic Technology, Guilin, China

3College of Science, Anhui University of Science and Technology, Huainan, China

Email: *zhcopt@126.com

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 26 July 2015; accepted 15 September 2015; published 18 September 2015

ABSTRACT

This paper discusses a special class of mathematical programs with equilibrium constraints. At first, by using a generalized complementarity function, the discussed problem is transformed into a family of general nonlinear optimization problems containing additional variable m. Furthermore, combining the idea of penalty function, an auxiliary problem with inequality constraints is presented. And then, by providing explicit searching direction, we establish a new conjugate projection gradient method for optimization with nonlinear complementarity constraints. Under some suitable conditions, the proposed method is proved to possess global and superlinear convergence rate.

Keywords:

Mathematical Programs with Equilibrium Constraints, Conjugate Projection Gradient, Global Convergence, Superlinear Convergence

1. Introduction

Mathematical programs with equilibrium constraints (MPEC) include the bilevel programming problem as its special case and have extensive applications in practical areas such as traffic control, engineering design, and economic modeling. So many scholars are interested in this kind of problems and make great achievements, (see [1] -[10] ).

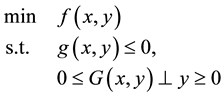

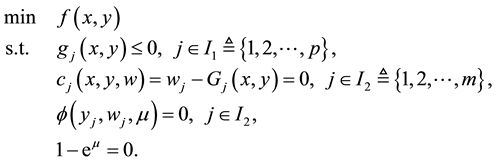

In this paper, we consider an important subclass of MPEC problem, which is called mathematical program with nonlinear complementarity constraints (MPCC):

(1.1)

(1.1)

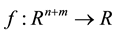

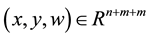

where ,

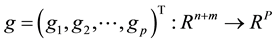

,

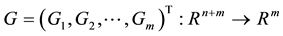

,

,

are all continuously differential functions,

are all continuously differential functions, .

.

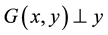

denotes orthogonality of the vectors y and

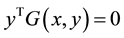

denotes orthogonality of the vectors y and , i.e.,

, i.e., .

.

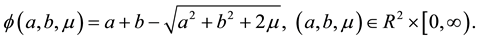

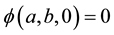

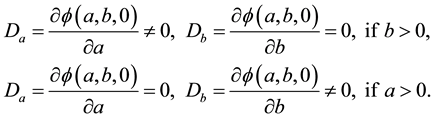

In order to eliminate the complementary constraints, which can not satisfy the standard constraint qualification [11] , we introduce the generalized nonlinear complementary function

Obviously, the following practical results about function

hold:

hold:

• if

and

and , then

, then

(1.2)

(1.2)

•

(1.3)

(1.3)

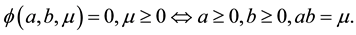

By means of the function , problem (1.1) is transformed equivalently into the following standard nonlinear optimization problem

, problem (1.1) is transformed equivalently into the following standard nonlinear optimization problem

(1.4)

(1.4)

Similar to [12] , we define the following penalty function

where

2. Preliminaries and Algorithm

For the sake of simplicity, we denote

Throughout this paper, the following basic assumptions are assumed.

H 2.1. The feasible set of (1.1) is nonempty, i.e.,

H 2.2. The functions

H 2.3.

The following definition and proposition can be refereed to in [13] .

Definition 2.1. Suppose that

If there exists multipliers

hold, then

Proposition 2.1. Suppose that

where

Proposition 2.2. (1) s is a feasible point of (1.1) if and only if

(2)

Proof. (1) According to the property of function

(2) Suppose that

So, it is not difficult to prove that

Conversely, if

which shows that

Then, it is easy to see, from (1.2) and the

Now, we present the definition of multiplier function associated with

Definition 2.2. A continuous function

Firstly, for a given point

Algorithm A:

Step 1. For the current point

Step 2. If

Step 3.

Lemma 2.1. For any iteration index k, algorithm A terminates in finite iteration.

For the current point

Now we give some notations and the explicit search direction in this paper.

where

According to the above analysis, the algorithm for the solution of the problem (1.1) can be stated as follows.

Algorithm B:

Step 0. Given a starting point

Choose parameters

Step 1. By means of Algorithm A, compute

Step 2. Compute

goto Step 3; otherwise, goto Step 4.

Step 3. Let

(1) If

Set

(2) Let

Step 4. Obtain feasible descent direction

Let

Step 5. Define

and

In the remainder of this section, we give some results to show that Algorithm B is correctly stated.

Lemma 2.2. (1) If

(2) If the sequence

Proof. (1) If

In view of

so we have

(2) Note that the boundedness of sequence

So, the claim holds.

According to Lemma 2.2 and the continuity of functions

Lemma 2.3. Algorithm B is well defined.

3. Global Convergence

In this section, we consider the global convergence of the algorithm B. Firstly, we show that

Lemma 3.1. (1)

(2) If

Proof. (1) If

Note that matrix

By (2.14) and (3.1), we have

so

On the other hand, it is easy to verify that

It follows from

From the positive definiteness of

which implies that

(2) In view of the definition of

Since the vectors

i.e.

Thus, we deduce

In view of the definition of penalty parameter

Combining with (3.2) and (3.5), it holds that

Let

Theorem 3.1. Suppose the nondegeneracy condition holds at

Proof. According to the K − T system of (1.4) and the relationship of index i and j in (2.1), we see that

Then, combining with Proposition 2.1 and Proposition 2.2, we can conclude that

In the sequel, it is assumed that the Algorithm B generates an infinite sequence

H 3.1. (1) The sequence

(2) The accumulation point

From H 3.1 and the fact that there are only finitely many choices for sets

where J is a constant set. Correspondingly, the following results hold:

Lemma 3.2. Suppose

(1) there exists a constant

(2) there exists a constant

Proof. (1) suppose, by contradiction, that there exists an index set

Since there are only finite possible subsets of

which contradicts the condition H 2.3.

(2) Suppose by contradiction, there exists a subsequence

From the finite selectivity of

a contradiction, and the result is proved.

Lemma 3.3. Suppose that

(1)

(2)

Proof. (1) Suppose

Therefore, for

(2) For (2.20), denote

From (3.12), for

For (2.21), when

According to the analysis above, the result is true.

Lemma 3.4. Algorithm B generates infinite sequence

Proof. Suppose that

Now we consider the following two cases:

(1) Suppose there exists an infinite subset

which is obtained by Step 3 and Step 5. In view of

Obvious,

(2) Assume the iteration

which is a contradiction. Thus, the claim holds.

Theorem 3.2. The

Proof. If

Set

Obvious,

i.e.

Thereby,

According to the definition of

In addition, combining with (3.2) (3.16), we obtain

Let

Theorem 3.3. Suppose (2.2) holds at

Proof. According to Theorem 3.2 and (2.1), Proposition 2.1 and Proposition 2.2 imply

4. Superlinear Convergence

Now we discuss the convergence rate of the Algorithm B, and prove that the sequence

H 4.1. The bounded sequence

Lemma 4.1. Under H 2.1-H 4.2, we have that

Proof. For

While, for

So

Passing to the limit

Thereby

Theorem 4.1. The entire sequence

In order to obtain the superlinear convergence rate, we make the following assumption.

H 4.2.

Lemma 4.2. If H 2.1-H 4.2 hold, then we get that

(1) for k large enough,

(2)

Proof. (1) On one hand, by Lemma 3.2, for k large enough, there exists a constant

On the other hand, we assert that

Let

It is a contradiction with the complementary slackness condition, which shows that

(2) According to

So the uniqueness of

Lemma 4.3. Under H 2.1-H 4.2, for k large enough,

Proof. Suppose that

In addition, for k large enough,

Lemma 4.4. (1) For k large enough, there exist constants

(2)

Proof. (1) Since

Obviously, for k large enough,

In addition, from Lemma 4.3, we see

So

(2) Since

we know

From

So, the result is true.

In order to obtain the superlinear convergence rate, we make another assumption.

H 4.3. The sequence of symmetric matrices

where

Lemma 4.5. For k large enough, Algorithm B is not implemented on Step 4, and

holds in Step 3.

Proof. According to

which shows (2.17) hold. Now we prove that, the arc search (2.19) and (2.18) eventually accept unit step, i.e.,

Firstly, for (2.19), when

when

Again, from

we see

Thus, (4.7) yields

In view of

Secondly, we prove that, for k large enough, (2.18) holds for

From (4.5), we have

Also, by (4.8), it holds that

So

Thus, (4.6) yields

Denote

Clearly,

while, from (2) and (10), it holds that

So

which implies the theorem hold.

According to Lemma 4.3, Lemma 4.4 and Lemma 4.5, combining with Theorem 12.3.3 in [15] , the following state holds.

Theorem 4.2. The Algorithm B is superlinearly convergent, i.e.,

5. Conclusion

By means of perturbed technique and generalized complementarity function, we, using implicit smoothing strategy, equivalently transform the original problem into a family of general optimization problems. Based on the idea of penalty function, the discussed problem is transformed an associated problem with only inequality constraints containing parameter. And then, by providing explicit searching direction, a new variable metric gradient projection method for MPCC is established. The smoothing factor

Acknowledgements

The authors are indebted to the anonymous referees for valuable comments and remarks that helped them improve the original version of the paper.

Funding

This work was supported in part by the National Natural Science Foundation (No. 11361018), the Natural Sci- ence Foundation of Guangxi Province (No. 2014GXNSFFA118001), the Key Program for Science and Techno- logy in Henan Education Institution (No. 15B110008) and Huarui College Science Foundation (No. 2014qn35) of China.

Cite this paper

CongZhang,LiminSun,ZhibinZhu,MingleiFang, (2015) An Implicit Smooth Conjugate Projection Gradient Algorithm for Optimization with Nonlinear Complementarity Constraints. Applied Mathematics,06,1712-1726. doi: 10.4236/am.2015.610152

References

- 1. Kocvara, M. and Outrata, J. (1994) On Optimization Systems Govern by Implicit Complementarity Problems. Numerical Functional Analysis and Optimization, 15, 869-887.

http://dx.doi.org/10.1080/01630569408816597 - 2. Fletcher, R., Leyffer, S., Ralph, D. and Scholtes, S. (2006) Local Convergence of SQP Methods for Mathematical Programs with Equilibrium Constraints. SIAM: SIAM Journal on Optimization, 17, 259-286.

http://dx.doi.org/10.1137/S1052623402407382 - 3. Luo, Z.Q., Pang, J.S. and Ralph, D. (1996) Mathmetical Programs with Equilibrium Constraints. Cambridge University Press, Cambridge.

http://dx.doi.org/10.1017/CBO9780511983658 - 4. Jiang, H. (2000) Smooth SQP Methods for Mathematical Programs with Nonlinear Complementarity Constaints. SIAM Journal of Optimization, 10, 779-808.

http://dx.doi.org/10.1137/S1052623497332329 - 5. Kocvara, M. and Outrata, J. (1995) A Nonsmmoth Approach to Optimization Problems with Equilibrium Constraints. In: Ierns, M.C. and Pang, J.D., Eds., Proceedings of the International Conference on Complementatity Problems, SIAM Publications, Baltimore, 148-164.

- 6. Outrata, J. (1990) On the Numberical Solution of a Class of Stachelberg Problems. Zeitschrift for Operations Research, 4, 255-278.

- 7. Zhang, C., Zhu, Z.B., Chen, F.H. and Fang, M.L. (2010) Sequential System of Linear Equations Algorithm for Optimization with Complementary Constraints. Mathematics Modelling and Applied Computing, 1, 71-80.

- 8. Zhang, C., Zhu, Z.B. and Fang, M.L. (2010) A Superlinearly Covergent SSLE Algorithm for Optimization Problems with Linear Complementarity Constraints. Journal of Mathematical Science: Advance and Application, 6, 149-164.

- 9. Huang, Z.H., Lin, G.H. and Xiu, N.H. (2014) Several Developments of Variational Inequalities and Complementarity Problems, Bilevel Programming and MPEC. Operations Research Transactions, 18, 113-133.

- 10. Zhang, C., Sun, L.M., Zhu, Z.B. and Fang, M.L. (2015) Levenberg-Marquardt Method for Mathematical Programs with Linearly Complementarity Constraints. American Journal of Computational Mathematics, 5, 239-242.

http://dx.doi.org/10.4236/ajcm.2015.53020 - 11. Outrata, J., Kocvara, M. and Zowe, J. (1998) Nonsmmoth Approach to Aptimization Problems with Equilibrium Constraints. Kluwer Academic Publishers, The Netherland.

http://dx.doi.org/10.1007/978-1-4757-2825-5 - 12. Gao, Z.Y., He, G.P. and Wu, F. (2004) Sequential Systems of Linear Equations Algorithm for Nonlinear Optimization Problems—General Constrained Problems. Applied Mathematics and Computation, 147, 211-226.

http://dx.doi.org/10.1016/S0096-3003(02)00662-8 - 13. Jian, J.B., Qin, Y. and Liang, Y.M. (2007) A Generalized Strongly Sub-Feasible Algorithm for Mathematical Problems with Nonliear Complementarity Constraints. Numerical Mathematics: A Journal of Chinese Universities, 29, 15-27.

- 14. Zhu, Z.B. and Zhang, K.C. (2004) A New Conjugate Projuction Gradient Method and Superlinear Convergence. Acta Mathematicae Applicatae Sinica, 27, 149-161.

- 15. Yuan, Y.X. and Sun, W.Y. (1997) Optimization Theory and Method. Science Press, Beijing.

NOTES

*Corresponding author.