Advances in Linear Algebra & Matrix Theory

Vol.06 No.02(2016), Article ID:67303,16 pages

10.4236/alamt.2016.62008

Least-Squares Solutions of Generalized Sylvester Equation with Xi Satisfies Different Linear Constraint

Xuelin Zhou, Dandan Song, Qingle Yang, Jiaofen Li*

School of Mathematics and Computing Science, Guangxi Colleges and Universities Key Laboratory of Data Analysis and Computation, Guilin University of Electronic Technology, Guilin, China

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 12 March 2016; accepted 11 June 2016; published 14 June 2016

ABSTRACT

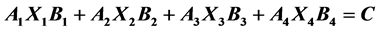

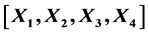

In this paper, an iterative method is constructed to find the least-squares solutions of generalized Sylvester equation , where

, where  is real matrices group, and

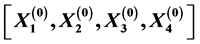

is real matrices group, and  satisfies different linear constraint. By this iterative method, for any initial matrix group

satisfies different linear constraint. By this iterative method, for any initial matrix group  within a special constrained matrix set, a least squares solution group

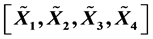

within a special constrained matrix set, a least squares solution group  with

with  satisfying different linear constraint can be obtained within finite iteration steps in the absence of round off errors, and the unique least norm least-squares solution can be obtained by choosing a special kind of initial matrix group. In addition, a minimization property of this iterative method is characterized. Finally, numerical experiments are reported to show the efficiency of the proposed method.

satisfying different linear constraint can be obtained within finite iteration steps in the absence of round off errors, and the unique least norm least-squares solution can be obtained by choosing a special kind of initial matrix group. In addition, a minimization property of this iterative method is characterized. Finally, numerical experiments are reported to show the efficiency of the proposed method.

Keywords:

Least-Squares Problem, Centro-Symmetric Matrix, Bisymmetric Matrix, Iterative Method

1. Introduction

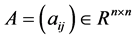

A matrix  is said to be a Centro-symmetric matrix if

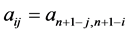

is said to be a Centro-symmetric matrix if  for all

for all . A matrix

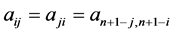

. A matrix  is said to be a Bisymmetric matrix if

is said to be a Bisymmetric matrix if  for all

for all . Let

. Let

and

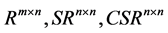

and  denote the set of

denote the set of  real matrices,

real matrices,

Denote

Obviously, K, i.e.

In this paper, we mainly consider the following two problems:

Problem I. Given matrices

Problem II. Denote by

In fact, Problem II is to find the least norm solution of Problem I.
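To make the relation between Problems I and II concrete, the following minimal sketch uses a small vector least-squares problem rather than the constrained matrix equation of this paper. The matrix `A`, the vector `b` and the null-space direction are hypothetical illustration data, and the least-norm minimizer is computed with the NumPy pseudoinverse, which is a swapped-in technique and not the iterative method of Section 2.

```python
import numpy as np

# Hypothetical rank-deficient system: the second column duplicates the first,
# so the least-squares minimizer is not unique.
A = np.array([[1.0, 1.0],
              [2.0, 2.0],
              [3.0, 3.0]])
b = np.array([1.0, 2.0, 2.0])

# The pseudoinverse selects the least-squares solution of smallest norm.
x_min = np.linalg.pinv(A) @ b

# Adding a null-space vector of A leaves the residual unchanged,
# so x_other is another least-squares solution.
null_dir = np.array([1.0, -1.0])
x_other = x_min + 0.5 * null_dir

res_min = np.linalg.norm(A @ x_min - b)
res_other = np.linalg.norm(A @ x_other - b)
print(res_min, res_other)                              # equal residual norms
print(np.linalg.norm(x_min), np.linalg.norm(x_other))  # x_min has the smaller norm
```

Both vectors solve the analogue of Problem I, while only `x_min` solves the analogue of Problem II.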

Many valuable efforts have been made to formulate solutions of various linear matrix equations with or without linear constraints. For example, Baksalary and Kala [1], Chu [2] [3], Peng [4], Liao, Bai and Lei [5] and Xu, Wei and Zheng [6] considered the nonsymmetric solution of the matrix equation

by using the Moore-Penrose generalized inverse and the generalized singular value decomposition of matrices, while Chang and Wang [7] considered symmetric conditions on the solution of the matrix equations

Zietak [8] [9] discussed the

Peng [10] researched the general linear matrix equation

with bisymmetric conditions on the solutions. The vec operator and the Kronecker product are employed in that paper, so the size of the matrix is greatly enlarged and computing the solutions is very expensive. Iterative algorithms for solving linear matrix equations have received much attention in recent years. For example, by extending the well-known Jacobi and Gauss-Seidel iterations for

However, to the best of our knowledge, linear matrix equations such as Equations (1)-(4) in which the unknown matrices satisfy different linear constraints have not been considered yet. Without loss of generality, we study the following case

which has four unknown matrices, each required to satisfy a different linear constraint. We should point out that the matrices

The paper is organized as follows. In Section 2, we first construct an iterative method for solving Problem I and then describe the basic properties of this method; we also solve Problem II by using this iterative method. In Section 3, we show that the method possesses a minimization property. In Section 4, we present numerical experiments to show the efficiency of the proposed method, and we end the paper with some conclusions in Section 5.
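The cost remark about the vec operator and the Kronecker product made earlier in this introduction can be illustrated numerically. The sketch below checks the standard identity $\mathrm{vec}(AXB) = (B^T \otimes A)\,\mathrm{vec}(X)$ for a single term $AXB$; the sizes and the random data are hypothetical and are not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, p, q = 3, 4, 5, 2              # hypothetical sizes
A = rng.standard_normal((m, n))
X = rng.standard_normal((n, p))
B = rng.standard_normal((p, q))

def vec(M):
    """Stack the columns of M into one long vector (column-major order)."""
    return M.reshape(-1, order="F")

# Kronecker form of the linear map X -> A X B.
K = np.kron(B.T, A)                  # shape (m*q, n*p)
lhs = vec(A @ X @ B)
rhs = K @ vec(X)

print(np.allclose(lhs, rhs))
print(A.size + B.size, K.size)       # storage for A and B versus storage for K
```

For square $n \times n$ coefficient matrices the Kronecker matrix is $n^2 \times n^2$, which is the growth in size that motivates iterations working directly with the matrices.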

2. The Iterative Method for Solving Problems I and II

In this section, we first introduce some lemmas that are required for solving Problem I, and then construct an iterative method to obtain the solution of Problem I. We show that, for any initial matrix group

Lemma 1. [16] [17]. A matrix

Lemma 2. Suppose that a matrix

Proof: The result follows easily from Lemma 1. □

Lemma 3. Suppose that

Proof: It is easy to verify by direct computation. □
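The centro-symmetric and bisymmetric structure used throughout the paper is easy to check numerically. The sketch below relies on the standard characterization that $X$ is centro-symmetric exactly when $X = JXJ$, where $J$ is the exchange matrix with ones on the anti-diagonal. The helper names and the two averaging maps are hypothetical illustration code and are not claimed to reproduce the statements of Lemmas 1-3.

```python
import numpy as np

def exchange(n):
    """Exchange matrix J: ones on the anti-diagonal, zeros elsewhere."""
    return np.fliplr(np.eye(n))

def is_centrosymmetric(X, tol=1e-12):
    """True when x_ij = x_(n+1-i, n+1-j), i.e. X = J X J."""
    J = exchange(X.shape[0])
    return np.allclose(X, J @ X @ J, atol=tol)

def is_bisymmetric(X, tol=1e-12):
    """True when X is both symmetric and centro-symmetric."""
    return np.allclose(X, X.T, atol=tol) and is_centrosymmetric(X, tol)

def centro_part(X):
    """Average X with J X J; the result is centro-symmetric."""
    J = exchange(X.shape[0])
    return 0.5 * (X + J @ X @ J)

def bisym_part(X):
    """Symmetrize X, then average with J X J; the result is bisymmetric."""
    return centro_part(0.5 * (X + X.T))

X = np.random.default_rng(0).standard_normal((4, 4))
print(is_centrosymmetric(X), is_centrosymmetric(centro_part(X)))
print(is_bisymmetric(bisym_part(X)))
```

Applying either averaging map twice gives the same matrix as applying it once, so each map leaves matrices that already have the structure unchanged.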

Lemma 4. (Projection Theorem) [18]. Let X be a finite dimensional inner product space, M be a subspace of X, and

where

Lemma 5. Suppose

then the matrix group

Proof: Let

obviously, Z is a linear subspace of

then

i.e. for all

By Lemma 3, it is easy to verify that if the equations of (6) are satisfied simultaneously, the expression above holds, which means

Lemma 6. Suppose that matrix group

Proof: Assume that matrix group

which implies matrix group

Conversely, if matrix group

where matrix group

which means matrix group

Next, we develop iterative algorithm for the least-squares solutions with

where

Algorithm 1. For an arbitrary initial matrix group

Step 1.

Step 2. If

Step 3.

Step 4. Go to step 2.
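As a rough companion to the steps above, the following sketch applies a conjugate-gradient-type least-squares iteration (the classical CGLS recurrence) to the single-unknown problem $\min \|AXB - C\|_F$ over centro-symmetric $X$. It is an assumption-laden analogue and not a transcription of Algorithm 1: the single-term equation, the function names, the stopping tolerance and the test data are all hypothetical.

```python
import numpy as np

def centro_part(Y):
    """Average Y with its 180-degree rotation (equal to J Y J)."""
    return 0.5 * (Y + Y[::-1, ::-1])

def cgls_centro(A, B, C, X0, tol=1e-10, max_iter=500):
    """CGLS-type iteration for min ||A X B - C||_F over centro-symmetric X."""
    X = centro_part(X0)
    R = C - A @ X @ B                   # residual of the matrix equation
    G = centro_part(A.T @ R @ B.T)      # gradient direction kept in the constraint set
    P = G.copy()                        # first search direction
    g0 = np.linalg.norm(G)
    history = [np.linalg.norm(R)]
    for _ in range(max_iter):
        g2 = np.sum(G * G)
        if np.sqrt(g2) <= tol * g0:     # constrained normal equations satisfied
            break
        Q = A @ P @ B
        alpha = g2 / np.sum(Q * Q)      # step length minimizing the residual along P
        X = X + alpha * P
        R = R - alpha * Q
        G = centro_part(A.T @ R @ B.T)
        P = G + (np.sum(G * G) / g2) * P
        history.append(np.linalg.norm(R))
    return X, G, history

rng = np.random.default_rng(1)
A = rng.standard_normal((5, 4))
B = rng.standard_normal((4, 5))
C = rng.standard_normal((5, 5))
X, G, history = cgls_centro(A, B, C, np.zeros((4, 4)))
print(len(history) - 1, np.linalg.norm(G))
```

Because every search direction is centro-symmetric, each iterate stays in the constraint set, and the recorded residual norms in `history` do not increase from one step to the next.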

Remark 1. 1) Obviously, the matrix sequence

2)

3) Algorithm 1 implies that if

Next, we establish the basic properties of the iterative method by induction. For convenience in the later discussion, we first state the following conclusions, which follow from Algorithm 1. For all

Lemma 7. For matrices

1)

2)

3)

Proof: For

Assume that the conclusions

hold for all

By the assumption of Equation (3), we have

Then for j = s,

Then the conclusion

Lemma 7 shows that the matrix sequence

generated by Algorithm 1 is mutually orthogonal in the finite-dimensional matrix space

It is worth noting that the conclusions of Lemma 7 may not be true without the assumptions

If

If

So the discussion above shows that if there exists a positive integer i such that the coefficient

Together with Lemma 7 and the discussion about the coefficient

Theorem 1. For an arbitrary initial matrix group

By choosing a special kind of initial matrix group, we can obtain the unique least-norm solution of Problem I. To this end, we first define a matrix set as follows

where

Theorem 2. If we choose the initial matrix group

Proof: By Algorithm 1 and Theorem 1, if we choose the initial matrix group

By Lemma 6 we know that an arbitrary solution of Problem I can be expressed as

where matrix group

Then

So we have

which implies that matrix group

Remark 2. Since the solution set of Problem I is nonempty, the
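Theorem 2 ties the least-norm solution to the choice of the initial matrix group. A vector analogue of this effect is a known property of CG-type least-squares iterations: the iterates stay in $x_0 + \mathrm{range}(A^T)$, so a start in $\mathrm{range}(A^T)$ (for instance $x_0 = 0$) leads to the least-norm minimizer, while a generic start leads to some other minimizer. The sketch below demonstrates this with plain CGLS on hypothetical random data; it is not Algorithm 1, and the special matrix set of Theorem 2 is not reproduced.

```python
import numpy as np

def cgls(A, b, x0, iters=50):
    """Plain CGLS for min ||A x - b||_2 (vector analogue, not Algorithm 1)."""
    x = np.array(x0, dtype=float)
    r = b - A @ x
    s = A.T @ r
    p = s.copy()
    gamma = s @ s
    gamma0 = gamma
    for _ in range(iters):
        if gamma <= 1e-24 * gamma0:       # gradient negligible: stop
            break
        q = A @ p
        alpha = gamma / (q @ q)
        x = x + alpha * p
        r = r - alpha * q
        s = A.T @ r
        gamma_new = s @ s
        p = s + (gamma_new / gamma) * p
        gamma = gamma_new
    return x

rng = np.random.default_rng(2)
A = rng.standard_normal((3, 6))           # underdetermined: many exact solutions
b = rng.standard_normal(3)
x_ref = np.linalg.pinv(A) @ b             # least-norm reference solution

x_zero = cgls(A, b, np.zeros(6))                     # start at zero
x_range = cgls(A, b, A.T @ rng.standard_normal(3))   # start in range(A^T)
x_generic = cgls(A, b, rng.standard_normal(6))       # generic start

print(np.linalg.norm(x_zero - x_ref), np.linalg.norm(x_range - x_ref))
print(np.linalg.norm(x_ref), np.linalg.norm(x_generic))
```

All three runs solve the system, but only the two starts taken from $\mathrm{range}(A^T)$ reproduce the pseudoinverse solution.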

3. The Minimization Property of Iterative Method

In this section, the minimization property of Algorithm 1 is characterized, which ensures that Algorithm 1 converges smoothly.

Theorem 3. For an arbitrary initial matrix group

where F denotes an affine subspace which has the following form

Proof: For arbitrary matrix group

Denote

by conclusion 2) of Lemma 7, we have

where

Because

if and only if

It follows from the conclusion in Lemma 7 that

By the fact that

This completes the proof. □

Theorem 3 shows that the approximate solution

which shows that the sequence

is monotonically decreasing. The descent property of the residual norm of Equation (5) ensures that Algorithm 1 converges quickly and smoothly.

4. Numerical Examples

In this section, we present numerical examples to illustrate the efficiency of the proposed iteration method. All the tests are performed using Matlab 7.0, which has a machine precision of around $10^{-16}$. Because of round-off errors, the iteration will not terminate within finitely many steps. Hence, we regard the approximate solution group

Example 1. Given matrices

Choose the initial matrices

with

And

If we let the initial matrix

with

And

Example 2. Suppose that the matrices

Algorithm 2. For an arbitrary initial matrix group

Step 1.

Step 2. If

Step 3.

Step 4. Go to step 2.

The main difference between Algorithm 1 and Algorithm 2 is as follows: in Algorithm 1 the selection of the coefficient

the Galerkin condition, but lacks the minimization property. Choosing the initial matrix

Figure 1. Comparison of the residual norms of the two algorithms.

obtain the same least-norm solution group, and we also obtain the convergence curves of the residual norm shown in Figure 1. The results in this figure show clearly that the residual norm of Algorithm 1 is monotonically decreasing, which is in accordance with the theory established in this paper, and that its convergence curve is smoother than that of Algorithm 2.

Acknowledgements

We thank the Editor and the referee for their comments. Research supported by the National Natural Science Foundation of China (11301107, 11261014, 11561015, 51268006).

Cite this paper

Xuelin Zhou, Dandan Song, Qingle Yang, Jiaofen Li (2016) Least-Squares Solutions of Generalized Sylvester Equation with Xi Satisfies Different Linear Constraint. Advances in Linear Algebra & Matrix Theory, 06, 59-74. doi: 10.4236/alamt.2016.62008

References

- 1. Baksalary, J.K. and Kala, R. (1980) The Matrix Equation $AXB+CYD=E$. Linear Algebra and Its Applications, 30, 141-147. http://dx.doi.org/10.1016/0024-3795(80)90189-5

- 2. Chu, K.E. (1987) Singular Value and Generalized Value Decompositions and the Solution of Linear Matrix Equations. Linear Algebra and Its Applications, 87, 83-98. http://dx.doi.org/10.1016/0024-3795(87)90104-2

- 3. Chu, K.E. (1989) Symmetric Solutions of Linear Matrix Equation by Matrix Decompositions. Linear Algebra and Its Applications, 119, 35-50. http://dx.doi.org/10.1016/0024-3795(89)90067-0

- 4. Peng, Z.Y. (2002) The Solutions of Matrix $AXC+BYD=E$ and Its Optimal Approximation. Mathematics: Theory & Applications, 22, 99-103.

- 5. Liao, A.P., Bai, Z.Z. and Lei, Y. (2005) Best Approximation Solution of Matrix Equation $AXC+BYD=E$. SIAM Journal on Matrix Analysis and Applications, 22, 675-688. http://dx.doi.org/10.1137/040615791

- 6. Xu, G., Wei, M. and Zheng, D. (1998) On the Solutions of Matrix Equation $AXB+CYD=F$. Linear Algebra and Its Applications, 279, 93-109. http://dx.doi.org/10.1016/S0024-3795(97)10099-4

- 7. Chang, X.W. and Wang, J.S. (1993) The Symmetric Solution of the Matrix Equations $AX+YA=C$, $AXA^T+BYB^T=C$ and $(A^TXA, B^TXB)=(C,D)$. Linear Algebra and Its Applications, 179, 171-189. http://dx.doi.org/10.1016/0024-3795(93)90328-L

- 8. Zietak, K. (1984) The $l_p$-Solution of the Linear Matrix Equation $AX+YB=C$. Computing, 32, 153-162. http://dx.doi.org/10.1007/BF02253689

- 9. Zietak, K. (1985) The Chebyshev Solution of the Linear Matrix Equation $AX+YB=C$. Numerische Mathematik, 46, 455-478. http://dx.doi.org/10.1007/BF01389497

- 10. Peng, Z.Y. (2004) The Nearest Bisymmetric Solutions of Linear Matrix Equations. Journal of Computational Mathematics, 22, 873-880.

- 11. Ding, F., Liu, P.X. and Ding, J. (2008) Iterative Solutions of the Generalized Sylvester Matrix Equations by Using the Hierarchical Identification Principle. Applied Mathematics and Computation, 197, 41-50. http://dx.doi.org/10.1016/j.amc.2007.07.040

- 12. Peng, Z.Y. and Peng, Y.X. (2006) An Efficient Iterative Method for Solving the Matrix Equation $AXB+CYD=E$. Numerical Linear Algebra with Applications, 13, 473-485. http://dx.doi.org/10.1002/nla.470

- 13. Peng, Z.Y. (2005) An Iterative Method for the Least Squares Symmetric Solution of the Linear Matrix Equation $AXB=C$. Applied Mathematics and Computation, 170, 711-723. http://dx.doi.org/10.1016/j.amc.2004.12.032

- 14. Peng, Z.H., Hu, X.Y. and Zhang, L. (2007) The Bisymmetric Solutions of the Matrix Equation $A_1X_1B_1+A_2X_2B_2+\cdots+A_iX_iB_i=C$ and Its Optimal Approximation. Linear Algebra and Its Applications, 426, 583-595. http://dx.doi.org/10.1016/j.laa.2007.05.034

- 15. Lei, Y. and Liao, A.P. (2007) A Minimal Residual Algorithm for the Inconsistent Matrix Equation $AXB=C$ over Symmetric Matrices. Applied Mathematics and Computation, 188, 499-513. http://dx.doi.org/10.1016/j.amc.2006.10.011

- 16. Zhou, F.Z., Hu, X.Y. and Zhang, L. (2003) The Solvability Conditions for the Inverse Eigenvalue Problems of Centro-Symmetric Matrices. Linear Algebra and Its Applications, 364, 147-160. http://dx.doi.org/10.1016/S0024-3795(02)00550-5

- 17. Xie, D.X., Zhang, L. and Hu, X.Y. (2000) The Solvability Conditions for the Inverse Problem of Bisymmetric Nonnegative Definite Matrices. Journal of Computational Mathematics, 6, 597-608.

- 18. Wang, R.S. (2003) Function Analysis and Optimization Theory. Beijing University of Aeronautics and Astronautics Press, Beijing. (In Chinese)

NOTES

*Corresponding author.