Open Journal of Statistics

Vol.04 No.11(2014), Article ID:52853,11 pages

10.4236/ojs.2014.411087

Some Properties of a Recursive Procedure for High Dimensional Parameter Estimation in Linear Model with Regularization

Hong Son Hoang, Remy Baraille

SHOM/HOM/REC, 42 av Gaspard Coriolis 31057 Toulouse, France

Email: hhoang@shom.fr

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 8 September 2014; revised 6 October 2014; accepted 2 November 2014

ABSTRACT

Theoretical results on the properties of a regularized recursive algorithm for estimating a high dimensional vector of parameters are presented and proved. The recursive character of the procedure is proposed to overcome the difficulties caused by the high dimension of the observation vector in the computation of a statistical regularized estimator. To deal with the high dimension of the vector of unknown parameters, regularization is introduced by specifying an a priori non-negative covariance structure for the vector of estimated parameters. A numerical example with Monte-Carlo simulation for a low-dimensional system, as well as state/parameter estimation in a very high dimensional oceanic model, is presented to demonstrate the efficiency of the proposed approach.

Keywords:

Linear Model, Regularization, Recursive Algorithm, Non-Negative Covariance Structure, Eigenvalue Decomposition

1. Introduction

In [1] a statistical regularized estimator is proposed for an optimal linear estimator of an unknown vector in a linear model with arbitrary non-negative covariance structure

$$z = Hx + v \qquad (1)$$

where $z$ is the p-vector observation, $H$ is the $(p \times n)$ observation matrix, $x$ is the n-vector of unknown parameters to be estimated, $v$ is the p-vector representing the observation error.

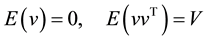

It is assumed

(2)

(3)

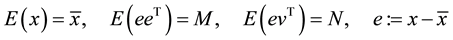

where $E(\cdot)$ is the mathematical expectation operator. Throughout this paper $p$ and $n$ may be any positive integers, and the covariance matrix of the joint vector of the unknown parameters and the observation error may be singular (hence the model (1)-(3) is called a linear model with arbitrary non-negative covariance structure).

No particular assumption is made regarding the probability density functions of the unknown parameters and the observation error.

In [1] the optimal linear estimator for the unknown vector in the model (1)-(3) is defined as

where

As in practice all the matrices

hence the resulting estimate

As shown in [1] , there are situations when the error

In this paper we are interested in obtaining a simple recursive algorithm for computation of

This problem is very important for many practical applications. As an example, consider data assimilation problems in meteorology and oceanography [2]. For a typical data set in oceanography, at each assimilation instant we have the observation vector with

2. Simple Recursive Method for Estimating the Vector of Parameters

2.1. Problem Statement: Noise-Free Observations

First consider the model (1) and assume that

and

Suppose that the system (6) is compatible, i.e. there exists

The problem is to obtain a simple recursive procedure to compute a solution of the system (6) when the dimension of

2.2. Iterative Procedure

To find a solution to Equation (6), let us introduce the following system of recursive equations

Note that the system is compatible if

Theorem 1. Suppose the system (6) is compatible. Then for any finite

In order to prove Theorem 1 we need

Lemma 1. The following equalities hold

Proof. By induction. We have for

since

Let the statement be true for some

Substituting

Lemma 2. The following equalities hold

Proof. By induction. We have for

Let the statement be true for some

However from Lemma 1, as

Proof of Theorem 1.

The theorem follows from Lemma 2 since, under the conditions of the theorem, from Equation (9) for

Corollary 1. Suppose the rows of

Proof. By induction. The fact that for

Suppose that

its subspace

independent elements. We conclude that it is impossible

Suppose now that the corollary is true for

we have

In the same way as proved for

Comment 1. By verifying the rank of

Using the result (8) in Lemma 1, it is easy to see that:

Corollary 2. Suppose

Corollary 3. Suppose

Corollary 3 follows from the fact that when

Comment 2. Equation (7c) for

In the next section we shall show that the fact
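As an illustration of the kind of row-by-row procedure considered in this section, the following Python sketch processes one scalar observation at a time. It is a minimal sketch under stated assumptions, not a transcription of Equations (7a)-(7c): the gain form `K = M h / (h^T M h)`, the names `recursive_solve`, `M0`, `x0` and `tol`, and the default choices `M0 = I`, `x0 = 0` are all assumptions made here. Under these assumptions, and for a compatible system, the final iterate satisfies every equation and coincides with the minimum-norm solution given by the pseudo-inverse.

```python
import numpy as np

def recursive_solve(H, z, M0=None, x0=None, tol=1e-10):
    """Row-by-row solution of H x = z (assumed projection-type update)."""
    p, n = H.shape
    x = np.zeros(n) if x0 is None else np.asarray(x0, dtype=float).copy()
    M = np.eye(n) if M0 is None else np.asarray(M0, dtype=float).copy()
    for i in range(p):
        h = H[i]
        Mh = M @ h
        s = h @ Mh                    # scalar h^T M h, non-negative in exact arithmetic
        if s <= tol:                  # row brings no new direction: skip it
            continue
        K = Mh / s                    # gain vector
        x = x + K * (z[i] - h @ x)    # correct the estimate with the i-th residual
        M = M - np.outer(K, Mh)       # remove the processed direction from M
    return x, M

# Hypothetical compatible system with fewer equations than unknowns.
rng = np.random.default_rng(0)
H = rng.standard_normal((3, 6))
x_true = rng.standard_normal(6)
z = H @ x_true

x_hat, M = recursive_solve(H, z)
residual = np.linalg.norm(H @ x_hat - z)
x_min_norm = np.linalg.pinv(H) @ z    # batch reference solution
print(residual, np.linalg.norm(x_hat - x_min_norm))
```

Only one row of the observation matrix is needed at each step, so no p-by-p matrix is formed or inverted; the storage is dominated by the n-by-n matrix `M`.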

3. Optimal Properties of the Solution of (7)

3.1. Regularized Estimate

Theorem 1 says only that Equations (7a)-(7c) give a solution to (1). We now study whether a solution of Equations (7a)-(7c) is optimal and, if so, in what sense. Return to Equations (1)-(3) and assume that

where

and one can prove also that the mean square error for

If we apply Equation (3.14.1) in [3] ,

For simplicity, let

In [4] a similar question has been studied which concerns the choice of adequate structure for the Error Covariance Matrix (ECM)

We now prove a stronger result, namely that all the estimates

Theorem 2. Consider the algorithm (7). Suppose

Proof. For

Suppose the statement is true for some

Indeed, as

Theorem 2 says that by specifying

Comment 3

1) If

2) Theorem 2 says that there is a possibility to regularize the estimate when the number of observations is less than the number of estimated parameters by choosing
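The regularizing effect of the initial matrix when there are fewer observations than parameters can be illustrated numerically. The sketch below is only an illustration under stated assumptions: the update form inside `recursive_estimate`, the low-rank matrix `M0 = B B^T`, the basis `B` and the test dimensions are hypothetical choices made here, not taken from Equations (7a)-(7c). It compares an identity initialization with a low-rank, non-negative definite initialization whose range contains the true parameter vector.

```python
import numpy as np

def recursive_estimate(H, z, M0, tol=1e-10):
    """Assumed projection-type recursion, started from x = 0 and M = M0."""
    x = np.zeros(H.shape[1])
    M = M0.copy()
    for h, zi in zip(H, z):
        Mh = M @ h
        s = h @ Mh
        if s <= tol:                  # nothing left to correct along this row
            continue
        K = Mh / s
        x = x + K * (zi - h @ x)
        M = M - np.outer(K, Mh)
    return x

rng = np.random.default_rng(1)
n, p, r = 8, 3, 2                     # p < n: fewer observations than parameters
B = rng.standard_normal((n, r))       # hypothetical basis of plausible directions
M0 = B @ B.T                          # rank-r, non-negative definite initial matrix
x_true = B @ rng.standard_normal(r)   # truth lies in the range of M0 (assumption)
H = rng.standard_normal((p, n))
z = H @ x_true                        # noise-free observations

x_reg = recursive_estimate(H, z, M0)            # regularized by M0
x_plain = recursive_estimate(H, z, np.eye(n))   # identity initialization

P = B @ np.linalg.pinv(B)             # orthogonal projector onto range(M0)
out_of_range = np.linalg.norm(x_reg - P @ x_reg)
err_reg = np.linalg.norm(x_reg - x_true)
err_plain = np.linalg.norm(x_plain - x_true)
print(out_of_range, err_reg, err_plain)
```

In this constructed case the estimate started from `M0` never leaves the range of `M0`, and it is much closer to the truth than the estimate started from the identity, because the three observations are enough to fix the two coefficients of the assumed subspace.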

3.2. Minimal Variance Estimate

Suppose

Theorem 3. Suppose

Proof. Introduce for the system (6),

and the class of all estimates

The condition for unbiasedness of

or

from which follows

This means that every estimate in Equation (14) is unbiased.

Consider the minimization problem

We have

Taking the derivative of

from which follows one of the solutions

If now instead of Equation (13) we consider the system

and repeat the same proof, one can show that the unbiased minimum variance estimate for

Using the properties of the pseudo-inverse (Theorem 3.8 [3] ), one can prove that

Thus for a very small

On the other hand, applying Lemma 1 in [5] for the case of uncorrelated sequence

Letting

3.3. Noisy Observations

The algorithm (18a)-(18d) thus yields unbiased minimal variance (UMV) estimates for
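For noisy observations, a recursion of this type can be checked against the corresponding batch formula. The sketch below does not reproduce Equations (18a)-(18d); it assumes the familiar Kalman-type scalar update with gain `M h / (h^T M h + r_i)`, uncorrelated observation errors with known variances `r`, and a zero prior mean. All names and test values are hypothetical.

```python
import numpy as np

def sequential_update(H, z, r, M0):
    """Process scalar noisy observations one at a time (assumed Kalman-type form)."""
    x = np.zeros(H.shape[1])          # zero prior mean (assumption)
    M = M0.copy()
    for h, zi, ri in zip(H, z, r):
        Mh = M @ h
        K = Mh / (h @ Mh + ri)        # gain with observation-noise variance ri
        x = x + K * (zi - h @ x)
        M = M - np.outer(K, Mh)       # updated error covariance
    return x, M

rng = np.random.default_rng(2)
n, p = 5, 4
A = rng.standard_normal((n, n))
M0 = A @ A.T                          # prior covariance of the parameters
H = rng.standard_normal((p, n))
r = np.array([0.1, 0.2, 0.05, 0.3])   # noise variances, uncorrelated errors
x_true = A @ rng.standard_normal(n)
z = H @ x_true + np.sqrt(r) * rng.standard_normal(p)

x_seq, M_seq = sequential_update(H, z, r, M0)

# Batch reference: x = M0 H^T (H M0 H^T + R)^{-1} z with R = diag(r).
S = H @ M0 @ H.T + np.diag(r)
G = np.linalg.solve(S, H @ M0).T      # equals M0 H^T S^{-1} because S is symmetric
x_batch = G @ z
M_batch = M0 - G @ H @ M0
print(np.linalg.norm(x_seq - x_batch), np.linalg.norm(M_seq - M_batch))
```

Under these assumptions the sequential and batch results agree to rounding error, while the sequential form only divides by scalars instead of inverting the p-by-p matrix `S`.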

3.4. Very High Dimension of

In the field of data assimilation in meteorology and oceanography usually the state vector

Let us consider the eigen-decomposition for

In (19) the columns of

3.4.1. Main Theoretical Results

Theorem 4

Consider two algorithms of the type (7) subject to two matrices

where the columns of

Proof

Write the representation of

where

Let

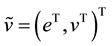

In what follows we introduce the notation:

The samples

There are the following different cases

1)

2)

a) For samples belonging to

b) For samples belonging to

Thus in the mean sense

3) Consider two initializations

a)

i)

ii)

b)

i) of minimal variance for

ii) of minimal variance for

iii) not of minimal variance for

Thus in the mean sense

3.4.2. Simplified Algorithm

Theorem 5

Consider the algorithm (7) subject to

Then this algorithm can be rewritten in the form

It is seen that in the algorithm (24a)-(24d), the estimate

Note that the version (24a)-(24d) is very close to that studied in [7] for ensuring stability of the filter.
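The saving obtained from a low-rank initialization can be illustrated as follows. This is a sketch of one possible reduced-space form and is not claimed to be identical to (24a)-(24d): it assumes that the initial matrix keeps only the `k` leading eigenpairs of a covariance `M`, and that the estimate is written as `Uk @ c` with a `k`-vector of coefficients `c`. The function `recursion`, the dimensions and the test matrices are hypothetical.

```python
import numpy as np

def recursion(H, z, M0, tol=1e-10):
    """Assumed projection-type recursion, started from a zero estimate."""
    x = np.zeros(H.shape[1])
    M = M0.copy()
    for h, zi in zip(H, z):
        Mh = M @ h
        s = h @ Mh
        if s <= tol:
            continue
        K = Mh / s
        x = x + K * (zi - h @ x)
        M = M - np.outer(K, Mh)
    return x

rng = np.random.default_rng(3)
n, p, k = 30, 3, 4
A = rng.standard_normal((n, n))
M = A @ A.T / n                       # hypothetical full a priori covariance
lam, U = np.linalg.eigh(M)            # eigenvalues returned in ascending order
idx = np.argsort(lam)[::-1][:k]       # indices of the k leading eigenpairs
Uk, lam_k = U[:, idx], lam[idx]

H = rng.standard_normal((p, n))
z = H @ rng.standard_normal(n)

# Full-space recursion with the rank-k initial matrix (n x n storage).
x_full = recursion(H, z, (Uk * lam_k) @ Uk.T)

# Reduced-space recursion on the coefficients (k x k storage).
c = recursion(H @ Uk, z, np.diag(lam_k))
x_red = Uk @ c
print(np.linalg.norm(x_full - x_red))
```

Both runs give the same estimate, but the reduced recursion updates a `k`-by-`k` matrix instead of an `n`-by-`n` one, which is what makes this kind of approach feasible when `n` is very large.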

4. Numerical Example

Consider the system (1) subject to the covariance

Here we assume that the 1st and 3rd components of

Numerical computation of eigendecomposition of

The algorithm (24a)-(24d) is applied subject to three covariance matrices

In Figure 1 we show the numerical results obtained from the Monte-Carlo simulation.

There are 100 samples simulating the true

Figure 1. Performance (rms) of the algorithm (24) subject to different projection subspaces.

The curve

As seen from Figure 1, the estimation error is highest for ALG (1). There is practically no difference between ALG (2) and ALG (3), both of which reduce the estimation error considerably (by 50%) compared to ALG (1). The performance of ALG (4) lies between those of ALG (1) and ALG (2). This experiment confirms the theoretical results and demonstrates that, given good a priori information on the estimated parameters, there exists a simple way to improve the quality of the estimate by appropriately introducing the a priori information in the form of the regularization matrix

The results produced by ALG (1) and ALG (4) also show that when the a priori information is insufficiently rich, the algorithm naturally produces estimates of poor quality. In such a situation, simply applying an orthogonal projection can yield a better result. For the present example, the reason is that using the 2nd mode

5. Experiment with Oceanic MICOM Model

5.1. MICOM Model

In this section we show the importance of the regularization factor in the form of the a priori covariance

The numerical model used in this experiment is the MICOM (Miami Isopycnic Coordinate Ocean Model), configured exactly as presented in [8]. We recall only that the model configuration is a domain situated in the North Atlantic from

The assimilation experiment consists of using the SSH to correct the model solution, which is initialized by some arbitrarily chosen state resulting from the control run.

5.2. Different Filters

Different filters are implemented to estimate the oceanic circulation. It is well known that determining the filter gain is one of the most important tasks in the design of a filter. For the considered problem it is impossible to apply the standard Kalman filter [9], since in the present experiment,

According to Corollary 4.1 in [4] , using the hypothesis on separable vertical-horizontal structure for the ECM, we represent

where

Figure 2 shows the estimated vertical coefficients

We remark that all the gain coefficients in the two filters are of identical sign but the elements of

The vertical gain coefficients for the CHF are taken from [8] and are equal to

5.3. Numerical Results

In Figure 3 we show the instantaneous variances of the SSH innovation produced by the three filters EnOI, CHF and PEF. Although all three filters start from the same initial state, the innovation variances of the EnOI and the CHF tend to increase, whereas this error remains stable for the PEF throughout the assimilation period. At the end of the assimilation, the prediction error (PE) of the CHF is more than two times greater than that of the PEF. The EnOI produces poor estimates, with an error about two times greater than that of the CHF.

For the velocity estimates, the same tendency is observed, as seen from Figure 4 for the surface velocity prediction errors. These results show that choosing the ECM as a regularization factor on the basis of the members of En(PEF) gives a much better approximation of the true system state than an ECM based on the samples taken from En(EnOI) or one constructed from physical considerations as in the CHF. The reason is that the members of En(PEF) are, by construction [8], samples of the directions along which the prediction error increases most rapidly. In other words, the correction in the PEF is designed to capture the principal components in the decomposition of the covariance of the prediction error.

Figure 2. Vertical gain coefficients obtained during application of the Sampling Procedure for layer thickness correction during iterations.

Figure 3. Performance comparison of EnOI, CHF and PEF: variance of the SSH innovation.

Figure 4. The prediction error variance of the u velocity component at the surface (cm/s) resulting from the EnOI, CHF and PEF.

6. Conclusion

We have presented some properties of an efficient recursive procedure for computing a statistical regularized estimator for the optimal linear estimator in a linear model with arbitrary non-negative covariance structure. The main objective of this paper is to obtain an algorithm that overcomes the difficulties caused by the high dimensions of the observation vector and of the estimated vector of parameters. As shown, the recursive nature of the proposed algorithm makes it possible to deal with the high dimension of the observation vector. By initializing the associated matrix equation with a low-rank approximation of the covariance, which accounts only for the leading components of the eigenvalue decomposition of the a priori covariance matrix, the proposed algorithm greatly reduces the number of estimated parameters. The efficiency of the proposed recursive procedure has been demonstrated by numerical experiments with systems of small and very high dimension.

References

- Hoang, H.S. and Baraille, R. (2013) A Regularized Estimator for Linear Regression Model with Possibly Singular Covariance. IEEE Transactions on Automatic Control, 58, 236-241. http://dx.doi.org/10.1109/TAC.2012.2203552

- Daley, R. (1991) Atmospheric Data Analysis. Cambridge University Press, New York.

- Albert, A. (1972) Regression and the Moore-Penrose Pseudo-Inverse. Academic Press, New York.

- Hoang, H.S. and Baraille, R. (2014) A Low Cost Filter Design for State and Parameter Estimation in Very High Dimensional Systems. Proceedings of the 19th IFAC Congress, Cape Town, 24-29 August 2014, 3156-3161.

- Hoang, H.S. and Baraille, R. (2011) Approximate Approach to Linear Filtering Problem with Correlated Noise. Engineering and Technology, 5, 11-23.

- Golub, G.H. and van Loan, C.F. (1996) Matrix Computations. 3rd Edition, Johns Hopkins University Press, Baltimore.

- Hoang, H.S., Baraille, R. and Talagrand, O. (2001) On the Design of a Stable Adaptive Filter for High Dimensional Systems. Automatica, 37, 341-359. http://dx.doi.org/10.1016/S0005-1098(00)00175-8

- Hoang, H.S. and Baraille, R. (2011) Prediction Error Sampling Procedure Based on Dominant Schur Decomposition. Application to State Estimation in High Dimensional Oceanic Model. Applied Mathematics and Computation, 218, 3689-3709. http://dx.doi.org/10.1016/j.amc.2011.09.012

- Kalman, R.E. (1960) A New Approach to Linear Filtering and Prediction Problems. Journal of Basic Engineering, 82, 35-45. http://dx.doi.org/10.1115/1.3662552

- Cooper, M. and Haines, K. (1996) Altimetric Assimilation with Water Property Conservation. Journal of Geophysical Research, 101, 1059-1077. http://dx.doi.org/10.1029/95JC02902

- Greenslade, D.J.M. and Young, I.R. (2005) The Impact of Altimeter Sampling Patterns on Estimates of Background Errors in a Global Wave Model. Journal of Atmospheric and Oceanic Technology, 1895-1917.

- Spall, J.C. (2000) Adaptive Stochastic Approximation by the Simultaneous Perturbation Method. IEEE Transactions on Automatic Control, 45, 1839-1853. http://dx.doi.org/10.1109/TAC.2000.880982