Intelligent Control and Automation, 2014, 5, 46-59 Published Online May 2014 in SciRes. http://www.scirp.org/journal/ica http://dx.doi.org/10.4236/ica.2014.52006 How to cite this paper: Gress, E.S.H., Lechuga, G.P. and Gress, N.H. (2014) Sensitivity Analysis of the Replacement Problem. Intelligent Control and Automation, 5, 46-59. http://dx.doi.org/10.4236/ica.2014.52006 Sensitivity Analysis of the Replacement Problem Eva Selene Hernández Gress1, Gilberto Pérez Lechuga1, Neil Hernández Gress2 1Academic Area of Engineering, Autonomous University of Hidalgo, Pachuca, México 2Monterrey Institute of Technology-Mexico State Campus, Pachuca, México Email: evah@uaeh.edu.mx Received 21 January 2014; revised 21 February 2014; accepted 28 February 2014 Copyright © 2014 by authors and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY). http://creativecommons.org/licenses/by/4.0/ Abstract The replacement problem can be modeled as a finite, irreducible, homogeneous Markov Chain. In our proposal, we modeled the problem using a Markov decision process and then, the instance is optimized using linear programming. Our goal is to analyze the sensitivity and robustness of the optimal solution across the perturbation of the optimal basis obtained from the simplex algorithm. The perturbation can be approximated by a given matrix such that . Some algebraic relations between the optimal solution and the perturbed instance are obtained and discussed. Keywords Replacement Policy, Markov Processes, Linear Programming 1. Introduction Machine replacement problem has been studied by a lot of researchers and is also an important topic in opera- tions research, indus trial engineering and management sciences. Items which are under constant usage, need re- placement at an appropriate time as the efficiency of the operating system using such items suffer a lot. In the real-world, the equipment replacement problem involves the selection of two or more machines of one or more types from a set of several possible alternative machines with different capacities and cost of purchase and operation. When the problem involves a single machine, it is common to find two well-defined forms to do so: the quantity-based replacement, and the time-based replacement. In the quantity-based replacement model, a machine is replaced when an accumulated product of size q is produced. In this model, one has to determine the optimal production size q. While in a time-based replacement model, a machine is replaced in every period of  E. S. H. Gress et al. with a profit maximizing. When the problem involves two or more machines it is named the parallel ma- chine replacement problem [1], and the time-based replacement model consists of finding a minimum cost re- placement policy for a finite population of economically interdependent machines. A replacement policy is a specification of “keep” or “replace” actions, one for each period. Two simple ex- amples are the policy of replacing the equipment every time period and the policy of keeping the first machine until the end of a period N. An optimal policy is a policy that achieves the smallest total net cost of ownership over the entire planning horizon and it has the property that whatever th e initial state and initial decision are, the remaining decisions must constitute an optimal policy with regard to the state resulting from the first decision. In practice, the replacement problem can be easily addressed using dynamic programming and Markov decision processes. The dynamic programming uses the following idea: The system is observed over a finite or infinite horizon split up into periods or stages. At each stage the system is observed and a decision or action concerning the sys- tem has to be made. The decision influences (deterministically or stochastically) the state to be observed at the next stage, and depending on the state and the decision made, an immediate reward is gained. The expected total reward from the present stage and the one of the following states is expressed by the functional equa- tion. Optimal decisions depending on stage and state are determined backwards step by step as those maximiz- ing the right hand side of the functional equation ( ) { } ()( ) 1 11 , max,2,3, , jjjjj j d KR utUtu tjT + +− = = += [2], where is the expected total reward in stage , this reward depends on the action (keep or replace) in the last stage of operation, , is the functional in stage , is the total number of stages, and are the actions associated with the equipment Keep and Replace, is the set of actions . The Markov Decision process concept has been stated by Howard [3] combining the dynamic programming technique with the mathematical notion of a Markov Chain. The concept has been used to develop the solution of infinite stage problems such as in Sernik and Marcus [4], Kristensen [5], Sethi et al. [6], and Childress and Durango-Cohen [1]. The policy iteration method was created as an alternative to the stepwise backward contrac- tion methods. The policy iteration was a result of the application of the Markov chain environment and it w as an important contribution to the development of optimization techniques [5]. In the other hand, a Markov decision process is a discrete time stochastic control process. At each time step, the process is in state and the decision maker may choose any action a that is available in state s. The pro- cess responds at the next time step moving into a new state , and giving the decision maker a corresponding reward . The probability that the process chooses as its new state is influenced by the chosen ac- tion. Specifically, it is given by the state transition function . Thus, the next state depends on the current state and the decision maker’s action . But given and , it is conditionally independent of all previous states and actions; in other words, the state transitions of an MDP possess the Markov property. Finally, it is important to note that linear programming was early identified as an optimization technique to be applied to Markov decision process as described by, for instance [5] an d [7]. In this document, we consider a stochastic machine replacement model. The system consists of a single machine; it is assumed that th is machine operates continuously and efficiently over N periods. In each period, the quality of the machine deteriorates due to its use , a nd the r e fore , it can be i n a ny of t he N stages, denoted . We modeled the replacement problem using a Markov decision process and then, the instance is optimized using linear programming. Our goal is to analyze the sensitivity and robustness of the optimal solution across the perturbation of the optimal basis. Spe- cifically, the methodology used is to model the replacement problem through a Markov decision process, op- timize the instance obtained using linear programming, analyzing the sensitivity and robustness of the solution obtained by the perturbation of the optimal basis from the simplex algorithm, and finally, obtain algebraic rela- tions between the initial optimal solution and the perturbed solution. In this work, we assume that for each new machine it state can become worse or may stay unchanged, and that the transition probabilities are known, where are defined as { } next state will be current state is 0,ifPji ji= < also be assumed that the state of the machine is known at the start of each period, and we must choose one of the  E. S. H. Gress et al. following two options: 1) Let the machine operate one more period in the state it currently is, 2) Replace the machine by a new one, where every new machine for replacement is assumed to be identical. The importance of this study is that the result of the objective function of the replacement problem depends on the probabilities of the transition matrices, which is why by perturbing these matrices, it is unknown if the solution and the decisions associated with the replacement problem will change. Few authors in the literature have studied the sensitivity of the replacement problem by perturbing the transition matrices (see f or exa mpl e [8] and [9]). Studying such problems is important not only to solve this particular model, but to assess if the objec- tive function to solve is still conv ex. The original contributions of this work are the perturbation of the optimal basis obtained with the simplex al- gorithm. It was concluded that by perturbing this op timal basis directly affects the transition matrices assoc iated with the replacement problem, and it found a region of feasibility for perturbation, in which, the objective func- tion and the decisions will not change. The rest of the paper is organized as follows. The next section presents the literature review for determining the optimal replacement policy. Also we present the problem formulation to the replacement problem with dis- crete-time Markov decision process and using the equivalent linear programming. Section Properties of the per- turbed optimal basis associated with the replacement problem shows some algebraic relations between the op- timal basis and the perturbed instance. The following section presents numerical results for a specific example. Finally our conclusions and future research directions are given. 2. Literature Review There are several theoretical models for determining the optimal replacement policy. The basic model considers maintenance cost and resale value, which have their standard behavior as per the same cost during earlier period and also partly having an exponential grown pattern as per passage of time. Similarly the scrap value for the item under usage can be considered to have a similar type of recurrent behavior. In relation to stochastic models the available literature on discrete time maintenance models predominantly treats an equipment deterioration process as a Markov chain. Sernik and Marcus [4] obtained the optimal policy and its associated cost for the two-di me n sional Markov replacement problem with partial observations. They demonstrated that in th e infinite horizon, the optimal discoun ted cost function is piecewise linear, and also pr o- vide formulas for computing the cost and the policy. In [6], the authors assume that the deterioration of the ma- chine is not a discrete process but it can be modeled as a continuous time Markov process, therefore, the only way to improve the quality is by replacing the machine by one new. They derive some stability conditions of the system under a simple class of real-time scheduling/replacement policy. Some models are approached to evaluate the inspection intervals for a phased deterioration monitored com- plex components in a system with severe down time costs using a Markov model, see for example [10]. In [11] the problem is approached from the perspective of the reliability engineering developing replacement strategies based on predictive maintenance. Moreover, in [1] the authors formulated a stochastic version of the parallel machine replacement problem. They analyzed the structure of optimal policies under general classes of replacement cost functions. Another important approach that has received the problem is the geometric programming [12]. In its proposal, the author discusses the application of this technique to solving replacement problem with an infinite horizon and under certain circumstances he obtains a closed-form solution to the optimiza ti on problem . A treatment to the problem when there are budget constraints can be found in [13]. In their work, the authors propose a dual heuristic for dealing with large, realistically sized problems through the initial relaxation of budget constraints. Compared with simulation techniques, some authors [14] proposed a technique based on obtaining the first two moments of the discounted cost distribution, and then, they approximate the underlying distri bution function by three theoretical distribu tion s using Monte Carlo simulation. The most important pioneers in applying dynamic programming models replacement problems are: Bellman [15], White [16], Davidson [17], Walker [18] and Bertsekas [19] Recently the Markov decision process has been applied successfully to the animal replacement problem as a productive unit, see for example [20]-[22]. Although the modeling and optimization of the replacement problem using Markov decision processes is a topic widely known [23]. However, there is a significant amount a bout the theory of stochastic pertur bation ma-  E. S. H. Gress et al. trices [24]-[27]. In literature there are hardly results concerning the perturbation and robustness of the optimal solution of a replacement problem modeled via a Markov decision process and optimized using linear program- ming. In this paper we are interested in addressin g th is is sue with a stochastic perspective. 3. Problem Formulation We start by defining a discrete-time Markov decision process with a finite state space with states where, in each stage the analyst should made a decision between possible. Denote by and the state and the decision made in stage respectively, then, the system moves at the next stage in to the next state with a known probability given by ()( ) 1, k zjn k pPznjzn zd d =+== = . When the transition occurs, it is followed by the reward and the payoff is given by at the state after the decision is made. For every policy , the corresponding Markov chain is ergodic, then the steady state probabil- ities of this chain are given by ( ) lim,1, 2,, zn pPZnz iZ ϑ →∞ == = and the problem is to find a policy for which the expected payoff is maximum. In this system, the time interval between two transitions is called a stag e. An optimal policy is def ined as a policy that maximizes (o r minimizes) some pred e- fined objective function. The optimization technique (i.e. the method to obtain an optimal policy) depends on the form of the objective function and it can result in different alternative objective fun ction. The choice of criterion depends on whether t he planning h orizon is fi ni te or infinite (Kristensen, 1996). In our proposal we consider a single machine and regular times intervals whether it should be kept for an ad- ditional period or it should be replaced by a new. By the above, the state space is defined by ()( ) {} 12 Keep ,ReplaceZz z = , and having observed the state, action should be taken concerning the machine about to keep it for at least an additional stage or to replace it at the end of the stage. The economic returns from the system will depend on its evolution and whether the machine is kept or replaced, in this proposal this is represented by a reward depending on state and action specified in advance. If the action replace is taken, we assume that the replacement takes place at the end of the stage at a known cost, the planning horizon is unknown and it is regarded infinite, also, all the stages are of equal length . The optimal criterion used in this document is the maximization of the expected average reward per unit of time given by , where is the limiting state probability under the policy , and the op- timization technique used is the linear programming. Thus, we may maximize the problem (1) using the equiva- lent linear programming given by [28]. ( ) 11 11 1 11 Maximize Subject to 1 00 for ,1,2,, and 1,2,,. Z zk zk zk Z zk zk Z jkzk zjzk k zk R rx x xxp kx zjZ k ξ ξ ξξ ξ = = = = == = = = −=≥ == ∑∑ ∑∑ ∑ ∑∑ (1) where is the steady-state unconditional probability that the system is in state , and the decision is made; similarly is the reward obtained when the system is in state , and the decisio n is made. In this sense, is optimal in state if and only if, the optimal solu tion of (1) satisfy the u nconditional probabilitie s that the system visit the state , when making the decision are strictly positive. Note tha t, the optimal value of the objective function is equal to the average rewards per stage under an optimal policy. The optimal value of is equal to the limiting state probability under an optimal policy. Model (1) contains functional constrains and decision variables. In [9] is show ed that the problem (1) has a degenerate basic feasible solution. In the remainder of this document, we are interested in the optimal basis associated with the solution of the problem (1) when it is solved via the Simplex Method.  E. S. H. Gress et al. 4. Properties of the Perturbed Optimal Basis Associated with the Replacement Problem In the LP model (1), the number of basic solutions is less than or equal to the number of combinations and (subm a t r i x of ) is a feasible basis of the LP model that satisfies . Let the optimal basis associated to problem (2), and the perturbed matrix of defined by where and is a matrix with the same order than . The optimal solution is and any perturbed solution is . From these assumptions we state and prove the next propositions and theorems. Proposition 4.1: Let , ( ) 1 * *1t ffcBBb −− =−− where . Proof 4.1: By the definition of , (3) So, () () 11 ****1 1 ( )().dxxxB bkBHbBB b −−−− −= −=−+=− (4) Similarly, ( ) ( )( )() ( ) 11 *****. t tt f xf xdxcxdxfcdxfcBBb −− =+=+=+ =−− (5) Proposition 4.2: The matrix is defined by: [ ] 11 121 21 222123 12 n nn m mmn hh h hh h h HHHH hh h = = (6) where are the entries of that coul d be pe rturbed. The columns of the optimal basis and the perturbed basis must sum 1. (7) Proof: The proof is trivial. The optimal basis is composed by the transition probability matrix , con- sidering the properties of the M a r k ov chain we ha ve 0 ,0,1,, Z jz zj z pj Z ππ = = ∀= ∑ (8) where , the Equation (8) is defined by , then, for is fullfilled that . This property is valid also for . Theorem 4.3: The e ucl ide a n no rm i s us ed to est abl is h pe rt u rbat io n b ou nds bet wee n the o ptimal basi s and the pertu rbed basis , such that (9) Proof:  E. S. H. Gress et al. ( ) ( ) ( ) ( ) ( ) ( ) ( ) ( ) 1 111 1 111 **** * 2 22 22 2 xxBbBbbBB bBB BB − −−− − −−− −=−=−≤ ⋅−=− (10) because . Proposition 4.4: (11) Proof: From the LP model (2), , (1 2) , (13) premultiplying the Equation (12) times , ( ) 1 *** *** , soBxB BbBxb − = = (14) similarly, premultiplying the Equation (13) , ( ) 1,BxB Bbso Bxb − = = (15) equalizing (14) and (15) (16) isolating results the Equation (11) . Theorem 4.5: A feasible solution satis fies tha t where . Proof: Let and , then, for . ( ) 11 12111 121 22221 123 1 0 1 0 0 0 0 m m m mm mnm DD DD DD DD xB HbDb DDDD D − ≥ ≥ =+⋅= ⋅=⋅= ≥ (17) 5. Numerical Example Consider the following transition probabilities matrices in [5], which represented a Markovian decision process with , and : 0.60.30.11 31 31 3 0.20.60.2,1 31 313 0.10.30.61 31313 KR = = (18 ) In order to maximize the objective fun ction th e cos t coefficients are shown in Table 1. The corresponding LP problem is: ()( )( )( )()( ) ( )( )( )( )()() ( )( ) 11 1221223132 11 1221223132 1112212231 32 11 1221223132 11 Maximize 10,0009,00012,00011,000014,00013,0000 Subject to 1 2323151311013 0 3 101 325233101 30 1 1013 Rxx xxxx xxxxxx xxxx xx xx x xxx xx = ++++ + +++++= + −−−−= −−+ +−−= −− ( )( )( )( ) 1221 223132 15132523 0 0,, . ij xxx x x ij −−+ −= ≥∀ (1 9) The optimal inverse basis of the LP problem associated to this solution is:  E. S. H. Gress et al. Table 1. Cost coefficients. D = 1 (Keep) D = 1 (Replace) 10,000 9,000 12,000 11,000 14,000 13,000 ( ) 1 * 3160982116 0111 71601181 16 3801 411 8 B − −− = − − (20) The optimal solution and the basic variables of the inverse basis are (presented in order): ( ) ( ) 1222131 , ,,0.1875,0,0.4375,0.375 B Xx axx= = . The optimal objective function is 12,187.50. The basis that will be perturbed is formed by the columns * 101 1 231151 10 1 3025310 13 01525 B −− = −− −− (21) Note that satisfies the Proposition 4.2 that corresponds with the Equation (7), this property must be conserv e d for . Suppose that we are inte rested to perturb This decision variable has associated the transition probability . Simplifying the restriction of the state 1 in the LP model (19), the value for this variable is . Continuing with the process, the restrictions of the states 2 and 3 are respectively: ( )( ) 12 121212 1312 11 2,2 33 xpx xpx−=−=− (22) Because the restrictions of the LP model (1) the probability is affected by a minus sign. In , the variable is associated with the vector , and the positions that could be perturbed are , considering the Equation (7). Note that the first element of the vector does not have any per- turbation, because it corresponds to the first restriction of the LP model (1). Suppose also, that the column vector of the matrix that corresponds to the variable will be perturbed in the second position, from to . The perturbed vector is 21 1 1, ,, 33232 ∈∈ +∈−− −− (23) So, the matrix is: 11 1213 14 21 2223 24 31 3233 34 41 4243 44 0 000 000 2000 2000 hh hh hhhh Hhh hh hhhh ∈ = = −∈ −∈ (24) therefore the perturbed matrix is : ( ) () () () () * 101 1 231151 10 1 320253 10 132 01525 B +∈−− = − −∈− − −∈− (25 )  E. S. H. Gress et al. Every value of ( ) ( ) 11121 31 41 ,,,0,,2,2 tt H hhhh ==∈ −∈−∈ is associated with the decision (replace) and the state (the variable associated with this column vector is ), because of this, any perturbation in will affect the matrix in the first column The matrix is now ( )( )()() () 1313213 2 13 1313 13 1313 R −∈+∈+∈ = (26) The matrix has no changes. Considering the Equation (17) of the Theorem 4.5, is obtained ( ) () [ ] [ ] [ ] [ ] 1 1 0 1 11 231151 100 1 320253100 13 2015250 10 108 151320 00 7 / 30720. 0 8 151320 1/ 53100 8 151320 x BHb − =+⋅ +∈− − = ⋅ − −∈− − −∈− ≥ +∈ = +∈ = ≥ +∈ +∈ ≥ +∈ (28) Solving the inequality asso ciated with the first element , an interval is ob- tained. The second element fulfills with the equality. The third element have an inequality the solution is . In the inequality, the solution interval is . The intersection of the intervals is , considering that the probabilities are between 0 and 1, the extent to perturb in this particular case are to conserve the feasibility of the perturb solution . Consi- dering this perturbation interval we calculate the numerical comparative of the Proposition 4.1 1 (Table 2 and Ta- ble 3), Proposition 4.1 2 (Table 4 and Table 5), Theorem 4.3 (Table 6 and Ta ble 7), and Proposition 4.4 (Table 8 and Ta bl e 9). 6. Conclusions and Future Work In this document, we considered a stochastic machine replacement problem with a single machine that operates continuously and efficiently over periods. We were interested in the matrix perturbation procedure from a probabilistic point of view. A perturbation in a Markov chain can be referred as a slight change in the entries of the corresponding tran sition stochastic matrix. We perturbed the optimal basis , but this kind of perturbation also changes the transition stochastic matrices.  E. S. H. Gress et al. Table 2. Numerical comparative of Equation (4), Proposition 4.1 (1), Ascending perturbation in . 0.01 (0.1852, 0, 0.4387, 0.3760) (0.0023, 0, −0.0012, −0.0010) 0.02 (0.1830, 0, 0.4399, 0.3770) (0.0045, 0, −0.0024, −0.0021) 0.03 (0.1808, 0, 0.4410, 0.3780) (0.0066, 0, −0.0036, −0.0031) 0.04 (0.1787, 0, 0.4421, 0.3790) (0.0087, 0, −0.0047, −0.0040) 0.05 (0.1763, 0, 0.4432, 0.3799) (0.0108, 0, −0.0058, −0.0050) 0.06 (0.1747, 0, 0.4443, 0.3809) (0.0128, 0, −0.0069, −0.0059) 0.07 (0.1727, 0, 0.4454, 0.3818) (0.0147, 0, −0.0079, −0.0068) 0.08 (0.1708, 0, 0.4464, 0.3826) (0.0167, 0, −0.0090, −0.0077) 0.09 (0.1689, 0, 0.4474, 0.3835) (0.0185, 0, −0.0100, −0.0086) 0.10 (0.1671, 0, 0.4484, 0.3844) (0.0204, 0, −0.0110, −0.0094) 0.20 (0.1508, 0, 0.4573, 0.3920) (0.0367, 0, −0.0198, −0.0170) 0.30 (0.1373, 0, 0.4645, 0.3982) (0.0502, 0, −0.0270, −0.0232) 0.40 (0.1261, 0, 0.4706, 0.4034) (0.0614, 0, −0.0331, −0.0284) 0.50 (0.1165, 0, 0.4706, 0.4034) (0.0710, 0, −0.0382, −0.0328) 0.60 (0.1083, 0, 0.4706, 0.4034) (0.0792, 0, −0.0426, −0.0366) 0.70 (0.1012, 0, 0.4873, 0.4148) (0.0863, 0, −0.0465, −0.0398) 0.80 (0.0949, 0, 0.4840, 0.4177) (0.0926, 0, −0.0498, −0.0427) 0.90 (0.0849, 0, 0.4903, 0.4203) (0.0981, 0, −0.0528, −0.0453) 1 (0.0844, 0, 0.4930, 0.4225) (0.1030, 0, −0.0555, −0.0475) aThis value is obtained directly from the LP model. bThis value is obtained doing the matrix operations. Table 3. Numerical comparative of Equation (4), Proposition 4.1 (1), Descending perturbation in . −0.01 (0.1898, 0, 0.4362, 0.3739) (−0.0023, 0, 0.0012, 0.0011) −0.02 (0.1921, 0, 0.4349, 0.3728) (−0.0047, 0, 0.0025, 0.0022) −0.03 (0.1946, 0, 0.4336, 0.3717) (−0.0071, 0, 0.0038, 0.0033) −0.04 (0.1971, 0, 0.4323, 0.3705) (−0.0096, 0, 0.0052, 0.0040) −0.05 (0.1996, 0, 0.4309, 0.3693) (−0.0122, 0, 0.0066, 0.0056) −0.06 (0.2022, 0, 0.4295, 0.3681) (−0.0148, 0, 0.0080, 0.0059) −0.07 (0.2049, 0, 0.4280, 0.3669) (−0.0175, 0, 0.0094, 0.0081) −0.08 (0.2077, 0, 0.4265, 0.3656) (−0.0203, 0, 0.0109, 0.0093) −0.09 (0.2106, 0, 0.4250, 0.3643) (−0.0231, 0, 0.0124, 0.0107) −0.10 (0.2135, 0, 0.4234, 0.3629) (−0.0260, 0, 0.0140, 0.0120) −0.20 (0.2979, 0, 0.4049, 0.3471) (−0.0604, 0, 0.0325, 0.0279) −0.30 (0.2955, 0, 0.3793, 0.3251) (−0.1081, 0, 0.0582, 0.0499) −0.40 (0.3658, 0, 0.3414, 0.2926) (−0.1784, 0, 0.0960, 0.0823) −0.50 (0.4800, 0, 0.2800, 0.2400) (−0.2925, 0, 0.1575, 0.1350) −0.60 (0.6976, 0, 0.1627, 0.1395) (−0.5102, 0, 0.2747, 0.2355) aThis value is obtained directly from the LP model. bThis value is obtained doing the matrix operations.  E. S. H. Gress et al. Table 4. Numerical comparative of Equation (4), Proposition 4.1 (2), Ascending perturbation in . ( ) ( ) 1 1 **tb fcBB b − − −− 0.01 (0.1852, 0, 0.4387, 0.3760) 12196.4 0.02 (0.1830, 0, 0.4399, 0.3770) 12,205.0 0.03 (0.1808, 0, 0.4410, 0.3780) 12,213.4 0.04 (0.1787, 0, 0.4421, 0.3790) 12,221.7 0.05 (0.1763, 0, 0.4432, 0.3799) 12,299.7 0.06 (0.1747, 0, 0.4443, 0.3809) 12,237.6 0.07 (0.1727, 0, 0.4454, 0.3818) 12,245.3 0.08 (0.1708, 0, 0.4464, 0.3826) 12,252.8 0.09 (0.1689, 0, 0.4474, 0.3835) 12,260.2 0.10 (0.1671, 0, 0.4484, 0.3844) 12,267.4 0.20 (0.1508, 0, 0.4573, 0.3920) 12231.7 0.30 (0.1373, 0, 0.4645, 0.3982) 12,384.4 0.40 (0.1261, 0, 0.4706, 0.4034) 12,429.0 0.50 (0.1165, 0, 0.4706, 0.4034) 12,466.0 0.60 (0.1083, 0, 0.4706, 0.4034) 12,498.0 0.70 (0.1012, 0, 0.4873, 0.4148) 12,526.0 0.80 (0.0949, 0, 0.4840, 0.4177) 12,551.0 0.90 (0.0849, 0, 0.4903, 0.4203) 12,572.0 1 (0.0844, 0, 0.4930, 0.4225) 12,592.0 aThis value is obtained directly from the LP model. bThis value is obtained doing the matrix operations. Table 5. Numerical comparative of Equation (4), Proposition 4.1 (2), Descending perturbation in . ( ) ( ) 1 1 **tb fcBB b − − −− −0.01 (0.1898, 0, 0.4362, 0.3739) 12,178.4 −0.02 (0.1921, 0, 0.4349, 0.3728) 12,169.1 −0.03 (0.1946, 0, 0.4336, 0.3717) 12,159.6 −0.04 (0.1971, 0, 0.4323, 0.3705) 12,149.8 −0.05 (0.1996, 0, 0.4309, 0.3693) 12,139.8 −0.06 (0.2022, 0, 0.4295, 0.3681) 12,139.8 −0.07 (0.2049, 0, 0.4280, 0.3669) 12,118.5 −0.08 (0.2077, 0, 0.4265, 0.3656) 12,108.0 −0.09 (0.2106, 0, 0.4250, 0.3643) 12,096.9 −0.10 (0.2135, 0, 0.4234, 0.3629) 12,085.4 −0.20 (0.2979, 0, 0.4049, 0.3471) 11,950.4 −0.30 (0.2955, 0, 0.3793, 0.3251) 11,763.5 −0.40 (0.3658, 0, 0.3414, 0.2926) 11,487.8 −0.50 (0.4800, 0, 0.2800, 0.2400) 11.040.0 −0.60 (0.6976, 0, 0.1627, 0.1395) 10,180.0 aThis value is obtained directly from the LP model. bThis value is obtained doing the matrix operations.  E. S. H. Gress et al. Table 6. Numerical comparative of Equation (9), Theorem 4.3, Ascending perturbation in . 0.01 0.0028 0.0257 0.02 0.0055 0.0507 0.03 0.0081 0.0752 0.04 0.0107 0.0991 0.05 0.0132 0.1224 0.06 0.0157 0.1453 0.07 0.0181 0.1676 0.08 0.0204 0.1894 0.09 0.0227 0.2107 0.10 0.0250 0.2316 0.20 0.0450 0.4178 0.30 0.0615 0.5707 0.40 0.0753 0.6986 0.50 0.0870 0.8071 0.60 0.0971 0.9004 0.70 0.1058 0.9814 0.80 0.1135 1.0524 0.90 0.1202 1.1151 1 0.1263 1.1709 Table 7. Numerical comparative of Equation (9), Theorem 4.3, Descending perturbation in . −0.01 0.0028 0.0263 −0.02 0.0057 0.0533 −0.03 0.0081 0.0752 −0.04 0.0118 0.1092 −0.05 0.0149 0.1383 −0.06 0.0181 0.1682 −0.07 0.0214 0.1988 −0.08 0.0248 0.2303 −0.09 0.0283 0.2626 −0.10 0.0319 0.2959 −0.20 0.0741 0.6871 −0.30 0.1325 1.2286 −0.40 0.2187 2.0277 −0.50 0.3586 3.3254 −0.60 0.6254 5.8002  E. S. H. Gress et al. Table 8. Numerical comparative of Equation (11), Proposition 4.4, Ascending perturbation in . 0.01 (0.1852, 0, 0.4387, 0.3760) (0.1852, 0, 0.4387, 0.3760) 0.02 (0.1830, 0, 0.4399, 0.3770) (0.1830, 0, 0.4399, 0.3770) 0.03 (0.1808, 0, 0.4410, 0.3780) (0.1808, 0, 0.4410, 0.3780) 0.04 (0.1787, 0, 0.4421, 0.3790) (0.1787, 0, 0.4421, 0.3790) 0.05 (0.1763, 0, 0.4432, 0.3799) (0.1763, 0, 0.4432, 0.3799) 0.06 (0.1747, 0, 0.4443, 0.3809) (0.1747, 0, 0.4443, 0.3809) 0.07 (0.1727, 0, 0.4454, 0.3818) (0.1727, 0, 0.4454, 0.3818) 0.08 (0.1708, 0, 0.4464, 0.3826) (0.1708, 0, 0.4464, 0.3826) 0.09 (0.1689, 0, 0.4474, 0.3835) (0.1689, 0, 0.4474, 0.3835) 0.10 (0.1671, 0, 0.4484, 0.3844) (0.1671, 0, 0.4484, 0.3844) 0.20 (0.1508, 0, 0.4573, 0.3920) (0.1508, 0, 0.4573, 0.3920) 0.30 (0.1373, 0, 0.4645, 0.3982) (0.1373, 0, 0.4645, 0.3982) 0.40 (0.1261, 0, 0.4706, 0.4034) (0.1261, 0, 0.4706, 0.4034) 0.50 (0.1165, 0, 0.4706, 0.4034) (0.1165, 0, 0.4706, 0.4034) 0.60 (0.1083, 0, 0.4706, 0.4034) (0.1083, 0, 0.4706, 0.4034) 0.70 (0.1012, 0, 0.4873, 0.4148) (0.1012, 0, 0.4840, 0.4148) 0.80 (0.0949, 0, 0.4840, 0.4177) (0.0949, 0, 0.4840, 0.4177) 0.90 (0.0849, 0, 0.4903, 0.4203) (0.0849, 0, 0.4903, 0.4203) 1 (0.0844, 0, 0.4930, 0.4225) (0.0844, 0, 0.4930, 0.4225) aThis value is obtained directly from the LP model. bThis value is obtained doing the matrix operations. Table 9. Numerical comparative of Equation (11), Proposition 4.4, Descending perturbation in . −0.01 (0.1898, 0, 0.4362, 0.3739) (0.1898, 0, 0.4362, 0.3739) −0.02 (0.1921, 0, 0.4349, 0.3728) (0.1921, 0, 0.4349, 0.3728) −0.03 (0.1946, 0, 0.4336, 0.3717) (0.1946, 0, 0.4336, 0.3717) −0.04 (0.1971, 0, 0.4323, 0.3705) (0.1971, 0, 0.4323, 0.3705) −0.05 (0.1996, 0, 0.4309, 0.3693) (0.1996, 0, 0.4309, 0.3693) −0.06 (0.2022, 0, 0.4295, 0.3681) (0.2022, 0, 0.4295, 0.3681) −0.07 (0.2049, 0, 0.4280, 0.3669) (0.2049, 0, 0.4280, 0.3669) −0.08 (0.2077, 0, 0.4265, 0.3656) (0.2077, 0, 0.4265, 0.3656) −0.09 (0.2106, 0, 0.4250, 0.3643) (0.2106, 0, 0.4250, 0.3643) −0.10 (0.2135, 0, 0.4234, 0.3629) (0.2135, 0, 0.4234, 0.3629) −0.20 (0.2979, 0, 0.4049, 0.3471) (0.2979, 0, 0.4049, 0.3471) −0.30 (0.2955, 0, 0.3793, 0.3251) (0.2955, 0, 0.3793, 0.3251) −0.40 (0.3658, 0, 0.3414, 0.2926) (0.3658, 0, 0.3414, 0.2926) −0.50 (0.4800, 0, 0.2800, 0.2400) (0.4800, 0, 0.2800, 0.2400) −0.60 (0.6976, 0, 0.1627, 0.1395) (0.6976, 0, 0.1627, 0.1395) aThis value is obtained directly from the LP model. bThis value is obtained doing the matrix operations.  E. S. H. Gress et al. A region of feasibility is found , if the op timal basis is perturbed considering this region of feasibility, the optimal solution and the objective function change but the decisions of the replacement problem do not change. Some theorems and propositions are obtained to analyze the effects of the perturbation of the optim- al basis , a numerical example is included to support them. The algebraic relations obtained, also were proved numerically when the perturbation of the optimal basis is done in several elements of the matrix at once. Future work could consider other perturbations over the optimal basis (in this document the perturbation used is ) and perturb the entries of the matrix as random variables. References [1] Childress, S. and Durango-Cohen. (2005) On Paralle l Machi ne Replace ment Prob lems with General Replacement Cost Functions and Stochastic Deterioration. Naval Research Logistics, 52, 409-419. http://dx.doi.org/10.1002/nav.20088 [2] Cooper, L. and Cooper, M. (1981) Introduction to Dynamic Programming. Pergamon Press, London. [3] Howard, R. (1960) Dynamic Programming and Markov Processes. The MIT Press, Cambridge. [4] Sernik, E. and Marcus, S. (1991) Optimal Cost and Policy for a Markovian Replacement Problem. Journal of Optimi- zation Theory and Applications, 71, 105-126. http://dx.doi.org/10.1007/BF00940042 [5] Kristensen, A. (1996) Dynamic Programming and Markov Processes. Dina Notat No. 49. http://www.prodstyr.ihh.kvl.dk/pdf/notat49.pdf [6] Sethi, S., Sorger, G. and Zhou, X. (2000) Stability of Real-Time Lot Scheduling and Machine Replacement Policies with Quality Levels. IEEE Transactions on Automatic Control, 45, 2193-2196. http://dx.doi.org/10.1109/9.887687 [7] Hadley G. (1964) Nonlinear and Dynamic Programming. Addison-Wesley, Massachussetts. [8] Fu, M., Hu, J. and Shi, L. (1993) An Application of Perturbation Analysis to a Replacement Problem in Maintenance Theory. Proceedings of the 1993 Winter Simulation Conference, 12-15 December 1993, 329-337. [9] Pérez, G., Álvarez, M. and Garnica, J. (2006) Stochastic Linear Programming to Optimize some Stochastic Systems. WSEAS Transactions on Systems, 9, 2263-2267. [10] Sherwin, J. a nd Al-Najjar, B. (1999) Practical Models for Condition-Based Monitoring Inspection Intervals. J ournal of Quality on Maintenance Engineering, 5, 203-221. http://dx.doi.org/10.1108/13552519910282665 [11] Lewis, E. (1987) Introduction to Reliability Theory. Wiley, Singapore. [12] Cheng, T. (1992) Optimal Replacement of Ageing Equipment Using Geometric Programming. International Journal of Production Research, 3, 2151-2158. http://dx.doi.org/10.1080/00207549208948142 [13] Karabakal, N., Bean, C. and Lohman, J. (2000) Solving Large Replacement Problems with Budget Constraints. Engi- neering Economy, 5, 290-308. http://dx.doi.org/10.1080/00137910008967554 [14] Dohi, T., Ashioka, A., Kaio, N. and Osaki, S. (2004) A Simulation Study on the Discounted Cost Distribution under Age Replacement Policy. Journal of Management and Engineering Integration, 3, 134-139. [15] Bellman, R. (1955) Equipment Replacement Policy. Journal of the Society for Industrial and Applied Mathematics, 3, 133-136. http://dx.doi.org/10.1137/0103011 [16] White, R. (1969) Dynamic Programming. Holden Hay, San Francisco. [17] Davidson, D. (1970) An Overhaul Policy for Deteriorating Equipment. In: Jardine, A.K.S., Ed., Operational Research in Maintenance, Manchester University Press, Manchester, 72-99. [18] Walker, J. (1992) Graphical Analysis for Machine Replacement: A Case Study. International Journal of Operations and Production Management, 14, 54-63. http://dx.doi.org/10.1108/01443579410067252 [19] Bertsekas, D. (2000) Dynamic Programming and Optimal Control. Athena Scientific, Belmont. [20] Plá, L., Pomar, C. and Pomar, J.A (2004) Markov Sow Model Representing the Productive Lifespan of Herd Sows. Agricultural Systems, 76, 253-272. http://dx.doi.org/10.1016/S0308-521X(02)00102-6 [21] Nielsen, L. and Kristensen, A. (2006) Finding the K Best Policies in a Finite-Horizon Markov Decision Process. Euro- pean Journal of Operational Research, 175, 1164-1179. http://dx.doi.org/10.1016/j.ejor.2005.06.011 [22] Nielsen, L., Jørgensen, E., Kristensen, A. and Østergaard, S. (2009) Optimal Replacement Policies for Dairy Cows Ba- sed on Daily Yield Measurements. Journal of Dairy Science, 93, 75-92. http://dx.doi.org/10.3168/jds.2009-2209 [23] Hillier, F. and Lieberman, J. (2002) Introduction to Operations Research. Mc Graw Hill, New York. [24] Meyer, C. (1994) Sensitivity of the Stationary Distribution of a Markov Chain. SIAM Journal on Matrix Analysis and Applications, 15, 715-728. http://dx.doi.org/10.1137/S0895479892228900 [25] Abbad, M. and Filar, J. (1995) Algorithms for Singularly Perturbed Markov Control Problems: A Survey. Control and  E. S. H. Gress et al. Dynamic Systems, 73, 257-288. [26] Feinberg, E. (2000) Constrained Discounted MarkovDecision Processes and Hamiltonian Cycles. Mathematics of Op- erations Research, 25, 130-140. http://dx.doi.org/10.1137/S0895479892228900 [27] Schrijner, P. and van Doorn, E. (2001) The Deviation Matrix of a Continuous-Time Markov Chain. Probability in the Engineering and Informational Sciences, 16, 351-366. [28] Ross, S. (1992) Applied Probability Models with Optimization Applications. Holden Day, San Francisco.

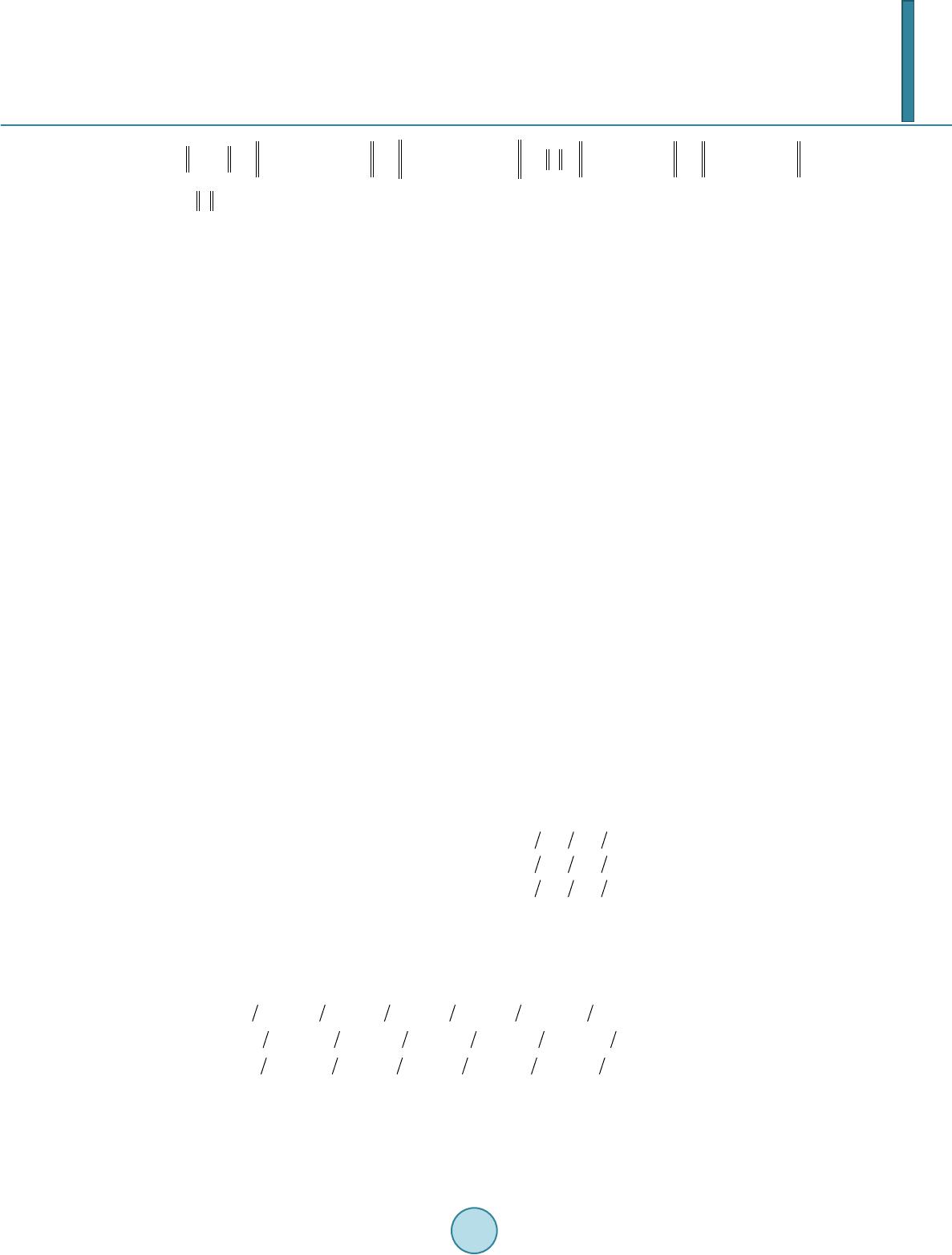

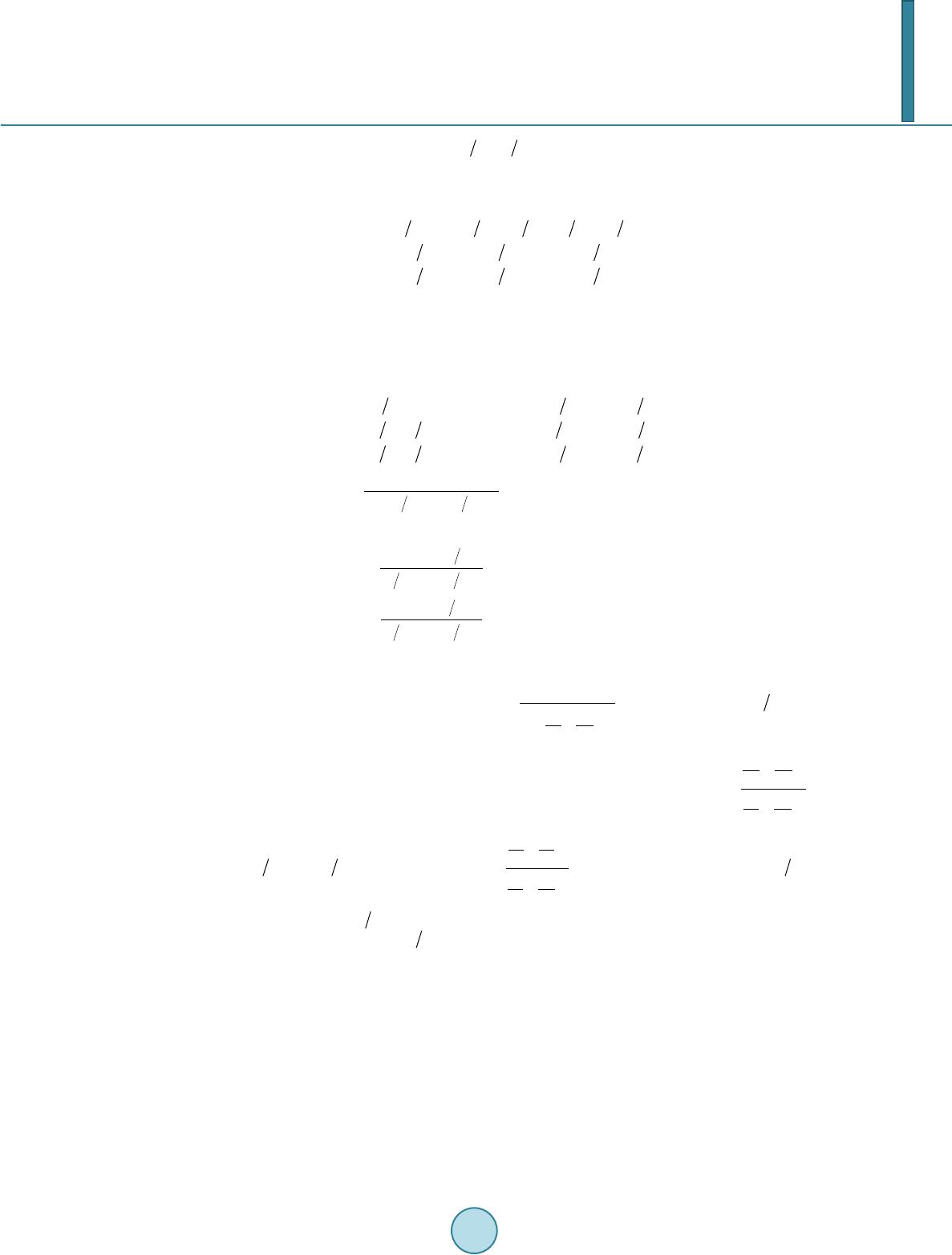

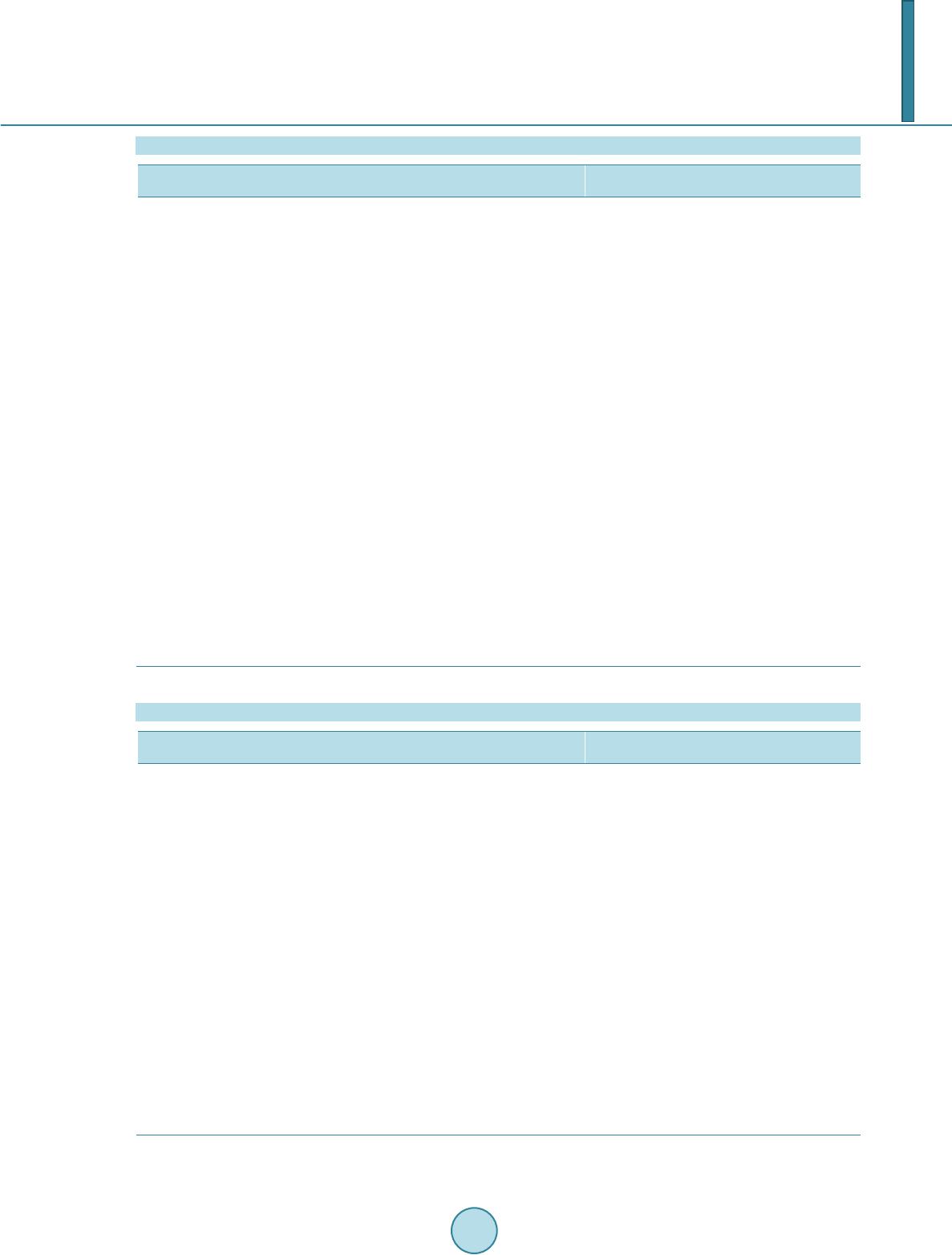

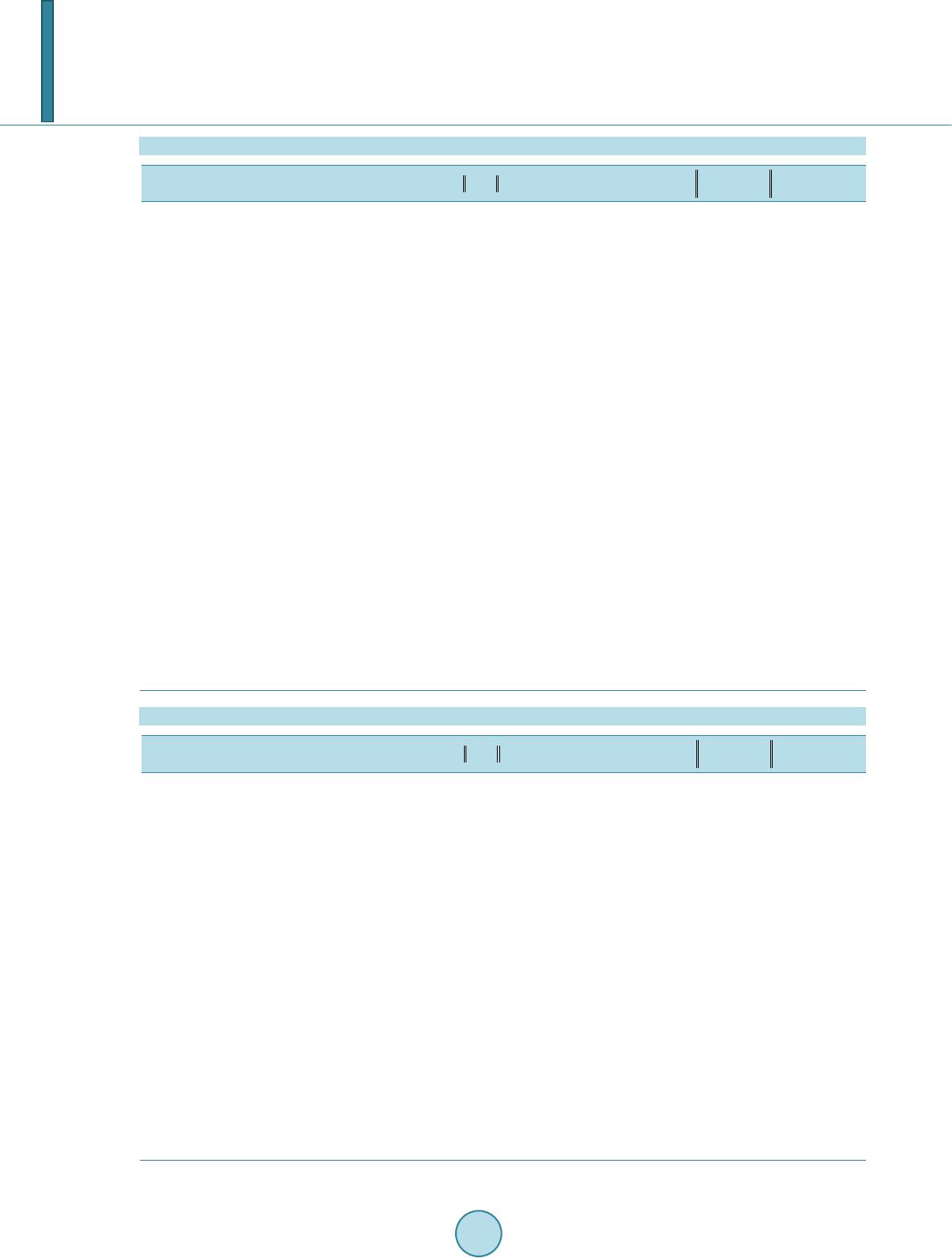

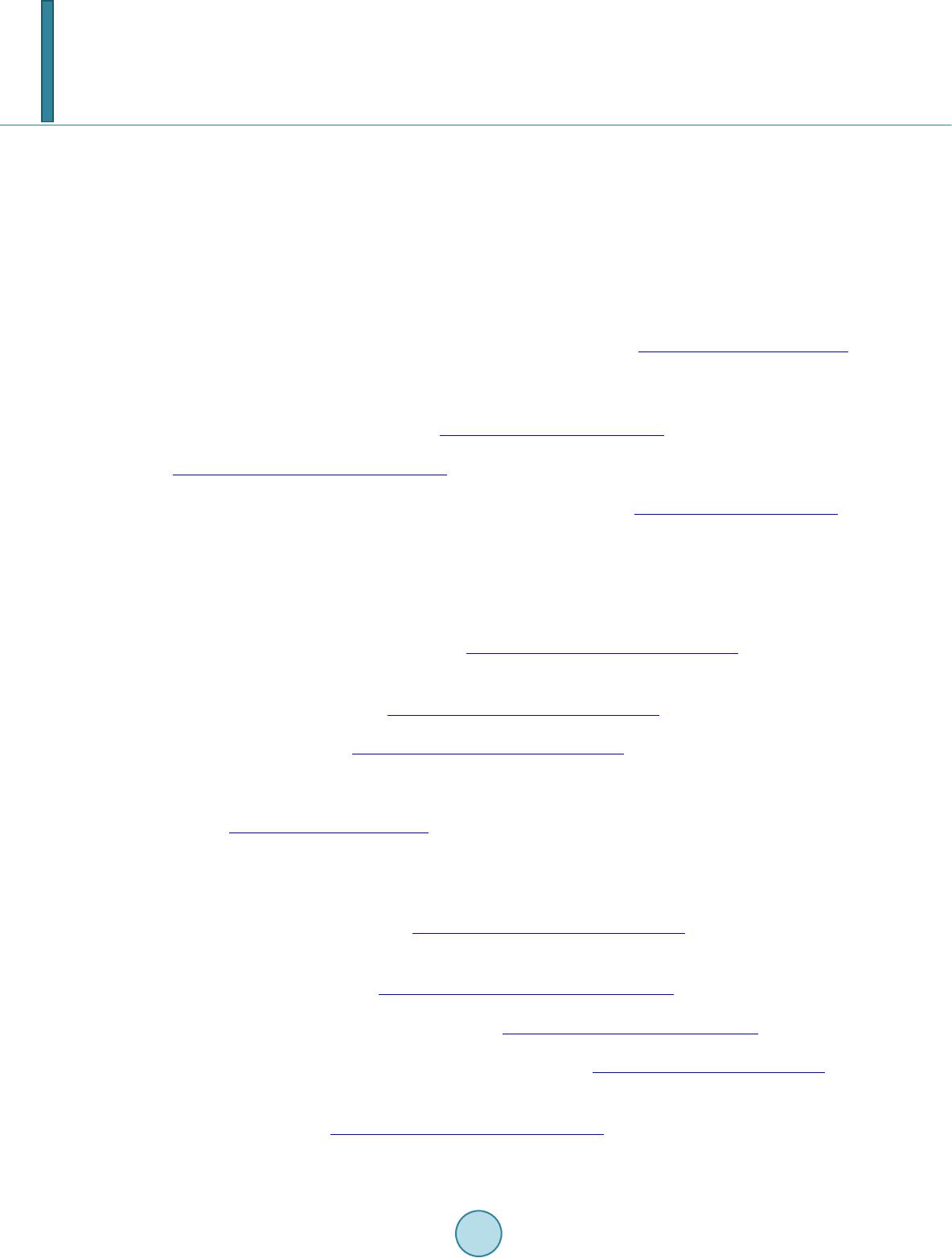

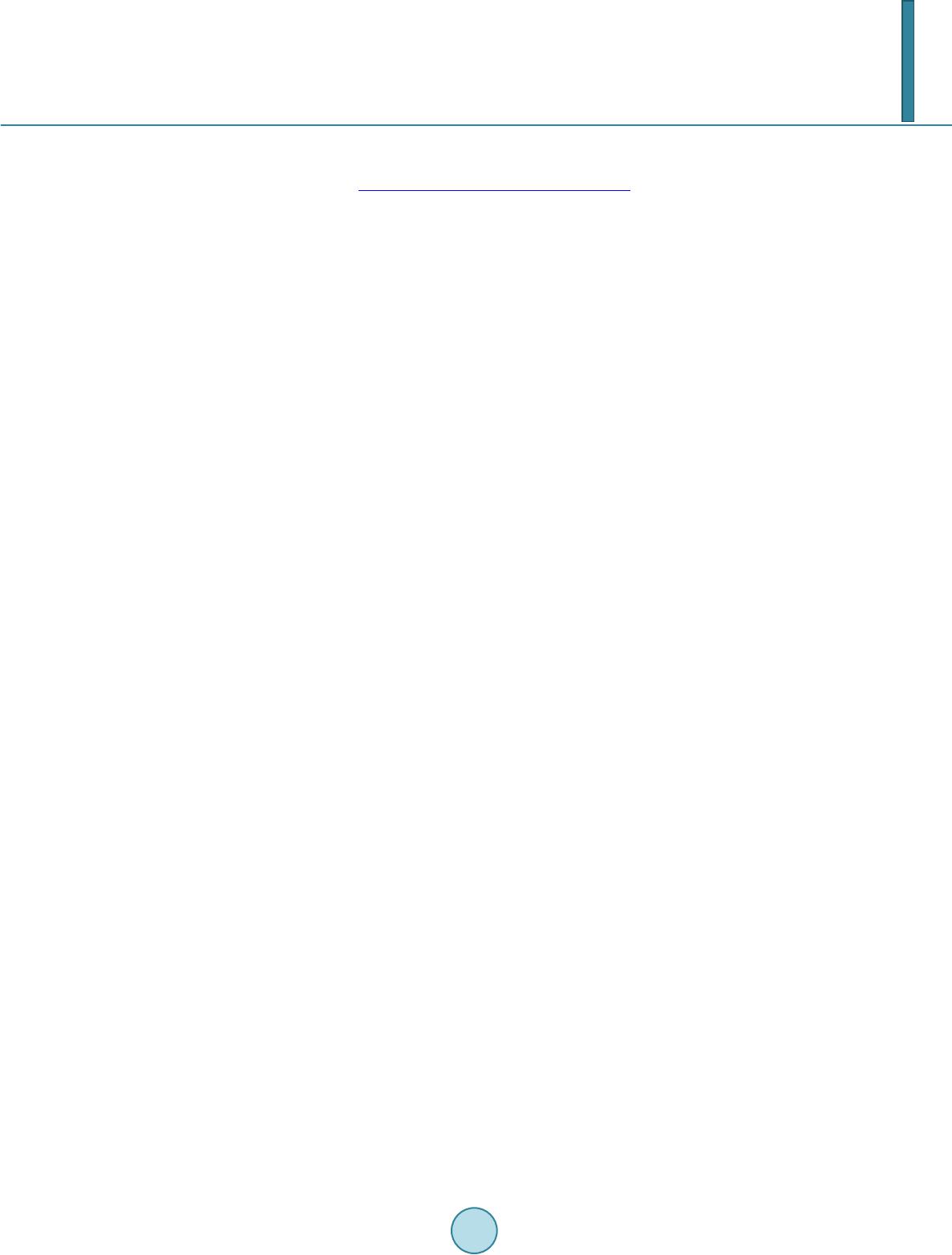

|