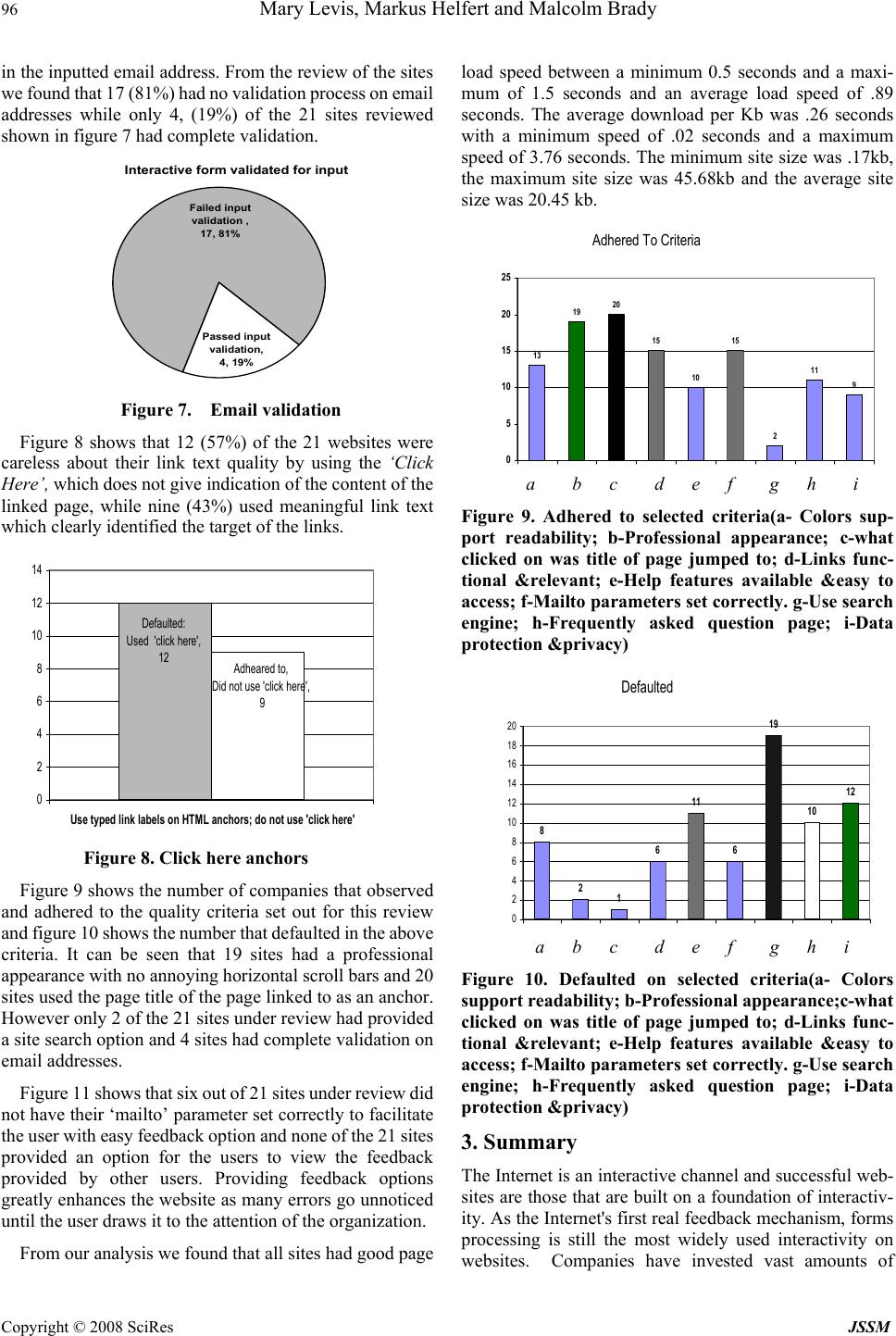

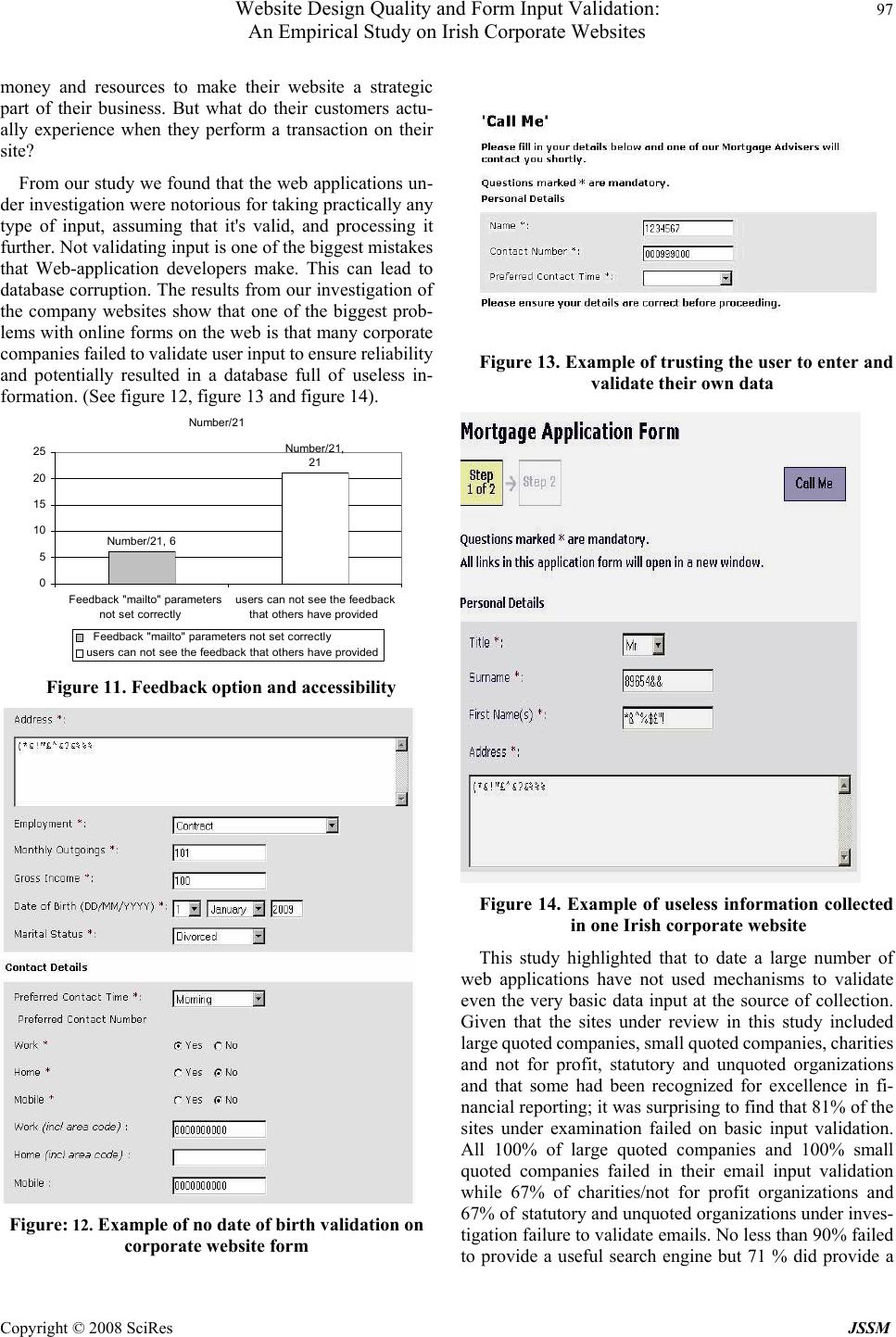

J. Serv. Sci. & Management. 2008, 1: 91-100
Published Online June 2008 in SciRes (www.SRPublishing.org/journal/jssm)
Copyright © 2008 SciRes JSSM

Website Design Quality and Form Input Validation: An Empirical Study on Irish Corporate Websites

Mary Levis¹, Markus Helfert¹ and Malcolm Brady¹
¹ Dublin City University

ABSTRACT

The information maintained about products, services and customers is a most valuable organisational asset. Therefore, it is important for successful electronic business to have high quality websites. A website must, however, do more than just look attractive: it must be usable and present useful information. Usability essentially means that the website is intuitive and allows visitors to find what they are looking for quickly and without effort. This means careful consideration of the structure of information and navigational design. According to the Open Web Applications Security Project, unvalidated input is one of the top ten critical web-application security vulnerabilities. We empirically tested 21 Irish corporate websites. The findings suggested that one of the biggest problems is that many failed to use mechanisms to validate even the basic user data input at the source of collection, which could potentially result in a database full of useless information.

Keywords: Website Design Quality, Form Input Validation, Information Quality, Data Quality

1. Introduction

The World Wide Web (WWW) is the largest available distributed dynamic repository of information, and has undergone massive and rapid growth since its inception. There are over 2,060,000 users in Ireland alone. Over the last seven years (2000 - 2007), Internet usage has grown by 162.8% in Ireland, by 144.2% in the United Kingdom, by 221.5% in Europe and by 244.7% worldwide [18].
Based on these facts, the Internet has assumed a central role in many aspects of our lives and therefore creates a greater need for businesses to design better websites in order to stay competitive and increase revenue. Interactivity is essential to engage visitors and lead them to the desired action, and customers are more likely to return to a website that has useful interactivity.

The website's homepage should be a marketing tool designed as a 'billboard' for the organization. The design is critical in capturing the viewer's attention and interest [25] and should represent the company in a meaningful and positive light. Therefore, there are many web design concerns for commercial organizations when designing their website. The most basic are as follows: content that should be included, selecting relevant and essential information, designing a secure, usable, user friendly web interface that is relatively easy to navigate, and ensuring the site is easy to find using any of the major search engines. In the drive to make the website look appealing from a visual perspective other factors are often ignored, such as validation and security, which leads to poor user experience and data quality problems.

Data in the real world is constantly changing; therefore feedback is necessary in order to ensure that quality is maintained. Data is deemed of high quality if it ‘correctly represents the real-world construct to which it refers so that products or decisions can be made’ [30]. One can probably find as many definitions for quality on the web as there are papers on quality. There are, however, a number of theoretical frameworks for understanding data quality. Redman [33] and Orr [27] have presented cybernetic models of information quality.
The cybernetic view considers organizations as made up of closely interacting feedback systems that link quality of information to how it is used, in a feedback cycle where the actions of each system are continuously modified by the actions, changes and outputs of the others [2,29,36]. Figure 1 shows an information system in the real world context.

Figure 1. Information system in the real world context [29]

Wang and Strong proposed a data quality framework that includes the categories of intrinsic data quality, accessibility data quality, contextual data quality and representational data quality outlined in table 1.

Table 1. IQ dimensions [17]

| DQ Category | DQ Dimensions |
|---|---|
| Intrinsic DQ | Accuracy, Objectivity, Believability, Reputation |
| Accessibility DQ | Accessibility, Access Security |
| Contextual DQ | Relevancy, Value Added, Timeliness, Completeness, Amount of Data |
| Representational DQ | Interpretability, Ease of Understanding, Concise Representation, Consistent Representation |

The quality of websites may be linked to such criteria as timeliness, ease of navigation, ease of access and presentation of information. From the customer's perspective usability is the most important quality of a Web application [8]. However, even if all procedures are adhered to, errors can still arise that reduce the quality standard of the online experience. For example, a file may be moved or an image deleted, which results in broken links. The root cause that leads to web application problems is a poor approach to web design. To remedy this, several techniques exist to evaluate the quality of websites, for example link checkers, accessibility checkers and code validation. To help improve the quality of a website, aspects such as structure and page layout need to be consistent and coherent.
A good website must include safeguards against failure and provide simple, user friendly data entry and validation processes.

From the literature reviewed, a universal definition of information quality is difficult to achieve [3, 21, 26, 29, 38, 42]. According to [25], 'Technically, information that meets all the requirements is quality information'. Some accepted definitions of quality from the quality gurus are shown in table 2.

Table 2. Quality definitions from the quality gurus

| Author | Quality Definitions |
|---|---|
| Deming | Meeting the customer's needs |
| Juran | Fitness for use |
| Crosby | Conformance to requirements |
| Ishikawa | Continuous improvement |
| Feigenbaum | Customer satisfaction |

One definition of quality is ‘the totality of characteristics of an entity that bear on its ability to satisfy stated and implied needs’ [13, 14]. Two requirements for website evaluation emerge from this definition: 1) general valuation of all the site’s characteristics and 2) how well the site meets specific needs.

1.1. Related Work

Pernici and Scannapieco [28] discuss a set of data quality dimensions such as expiration, completeness, source reliability, and accuracy to evaluate data quality in web information systems to support correct interpretation of web page content. Cusimano Com Corp [4] declared that effective web sites must be clear, informative, concise, and graphically appealing. Tilton [40] recommends that Web designers should present clear information that has a consistent navigation structure. Hlynka and Welsh [12] put forward the argument that the web page is a source of communication and should be analysed within communication theory.

Kelly and Vidgen [16] are concerned with the combination of a quality assessment method, E-Qual, and a lightweight quality assurance method, QA Focus, and state that website developers need to use standards and best practices to ensure that websites are functional, accessible and interoperable.
There are a number of ways to evaluate the quality of websites, such as competitive analysis, inspection, and online questionnaires. WebQual, developed by Barnes and Vidgen [1], is one approach to the assessment of website quality. WebQual has 3 main dimensions: usability, information quality, and service interaction quality. According to [1], WebQual is a ‘structured and disciplined process that provides a means to identify and carry the voice of the customer through each stage of product and or service development and implementation’. Usability is concerned with the quality associated with the site design; Information Quality is concerned with the quality of content of the site; Service Interaction quality is concerned with quality provided to the users as they enter into the site. Within these dimensions, WebQual consists of a set of 23 questions regarding the website being assessed and each question contains a rating from 1-7; 1 = strongly disagree, 7 = strongly agree.

Detailed information about evaluating websites can be found at [24, 34, 35]. Eppler & Muenzenmayer [7] identify 2 manifestations, 4 quality categories, and 16 quality dimensions. Kahn et al. [17] mapped IQ dimensions to the PSP/IQ model with 2 quality types, 4 quality classifications, and 16 quality dimensions. Zhu & Gauch [44] outline 6 quality metrics for information retrieval on the web.

1.2. What Is a Quality Website?

Online interactivity is a valuable way of improving the quality of business websites and web designers should be aware of how design affects the quality of the website and the image of the organization. Good websites have a rich and intuitive link structure.
A link going to Customer Service should be named 'Customer Service', so that the surfer looking for Customer Service information will know this link goes to the page they want. Therefore, 'click here' should never be used as a link.

Information managers and developers must determine how much information users need [25]. Some users will need much background on a specific topic whilst others may only need a summary or overview. A good web designer will think clearly about how each piece of data links up with the rest of the content on the website and will organize the links accordingly. Without a clear navigation system, viewers can become disoriented. Hyperlinks are distinguished from normal text within a page by their colour. When the page pointed to by a hyperlink has been 'visited', browsers will inform the users by changing the link's colour [41].

The most vulnerable part of any web application is its forms, and the most common activity of web applications is to validate the users’ data. According to the Open Web Applications Security Project [27], unvalidated input is in the top ten critical web application security vulnerabilities. Input validation is an important part of creating a robust technological system and securing web applications. Because of the fundamental client server nature of the web application, input validation should be done both on the client and the server.

Client side validation is used to provide input data validation at the data collection point before the form is submitted, and to check that the required fields are filled and conform to certain characteristics such as built in length restriction, numeric limits, email address validity, character data format etc. Incorrect data validation can lead to data corruption.

Table 3. Example validation checks

| Validation check | Description |
|---|---|
| Character set | Ensure data only contain characters you expect |
| Data format | Ensure structure of data is consistent with what is expected |
| Range check | Data lies within specific range of values |
| Presence check | No missing / empty fields |
| Consistency check | If title is 'Mr' then gender is 'Male' |

Input validation should be performed on all incoming data, ensuring the information system stores clean, correct and useful data. Examples of invalid data are: text entered into a numeric field, numeric data entered into a text field, or a percentage entered into a currency field. Table 3 provides an example set of checks that could be performed to ensure the incoming data is valid before data is processed or used.

Having contact information available and visible on the website is a marketing plus that potential customers use in order to judge a company’s trustworthiness, as it signifies respect for the customer and implies promise of good service. Feedback mechanisms built into the website are a useful way to get meaningful feedback on the website and service quality from the people who matter most: your customers. After all, one definition of quality is ‘meeting or exceeding the customer’s expectations’.

One of the most important factors for a website being successful is speed. If the website is unresponsive, with long response times, the visitors will not come again. Speed or responsiveness is integral to good website design and organizational success. Web pages should be designed with speed in mind [31]. It is estimated that if a page doesn't load within 5-8 seconds you will lose 1/3 of your visitors [35]. However, many designers believe that with the recent development of broadband, visual aesthetics is now more important as download speed is not such a major concern. Nevertheless, not all users have broadband and this should be taken into consideration.
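Returning to the checks listed in Table 3, the following is a minimal sketch of how they might be applied to one submitted record. It is an illustration only, not the method used by any site in the study: the field names (`name`, `age`, `date_of_birth`, `title`, `gender`), the allowed character set, the DD/MM/YYYY format and the 0 to 120 age range are all assumptions chosen for the example.

```python
import re

# Hypothetical form fields; every value is assumed to arrive as a string.
REQUIRED_FIELDS = ("name", "age", "date_of_birth", "title", "gender")


def validate_record(record):
    """Return a list of error messages; an empty list means the record passed."""
    errors = []

    # Presence check: no missing or empty fields.
    for field in REQUIRED_FIELDS:
        if not (record.get(field) or "").strip():
            errors.append(f"{field}: missing")
    if errors:
        return errors  # the remaining checks need every field to be present

    # Character set check: only letters, spaces, apostrophes and hyphens (assumed set).
    if not re.fullmatch(r"[A-Za-z' -]+", record["name"]):
        errors.append("name: unexpected characters")

    # Data format check: structure matches DD/MM/YYYY (assumed format).
    if not re.fullmatch(r"[0-9]{2}/[0-9]{2}/[0-9]{4}", record["date_of_birth"]):
        errors.append("date_of_birth: expected DD/MM/YYYY")

    # Range check: a whole number within an assumed plausible range.
    age = record["age"]
    if not re.fullmatch(r"[0-9]+", age) or not 0 <= int(age) <= 120:
        errors.append("age: expected a whole number from 0 to 120")

    # Consistency check, using the example from Table 3.
    if record["title"] == "Mr" and record["gender"] != "Male":
        errors.append("title/gender: inconsistent")

    return errors
```

A record that passes returns an empty list, so a caller can store the data only when `validate_record(record) == []` and otherwise show the messages to the user.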
For the purpose of this study we conducted an empirical study using a data set of twenty one finalists in a recent website quality technology award. The aim of this study was to examine these websites for technical quality issues from the user’s perspective.

Our analysis focused on helping website owners understand the importance of certain website characteristics, quality of information and functionalities. During the analysis we tested functionalities in the website like forms, the navigation process, the relevance of all click throughs and the page download speed.

The rest of the paper is organized as follows: Section 2 shows our methodology, Section 3 gives a brief summary and Section 4 some conclusions.

2. Research

2.1. Methodology

We conducted an empirical study on a recent accountancy website quality technology award competition using the full data set of twenty one finalists that included (3) Charity/Not for Profit organizations, (7) Large Quoted Companies, (2) Small Quoted Companies and (9) Statutory and Unquoted Companies. The identity of the websites has been concealed due to confidentiality regulations.

The aim of this study was to examine these websites for technical quality issues. This required validating the sites against a series of checkpoints that included: checking that legal and regulatory guidelines were adhered to (e.g. data protection and privacy), that pages conformed to Web-Accessibility standards (e.g. missing 'alt tags'), missing page titles, browser compatibility, user feedback mechanisms, and that applications were functioning correctly (e.g. online forms are validated for input etc.).
It also included evaluation of the main characteristics and structure of the sites, for example clear ordering of information, broken links, and ease of navigation. The principle used was based on the same criteria used to evaluate the participants in the 2006 award [10, 32, 39, 43]. The criteria subset used for this study is outlined in table 4.

Table 4. Set of criteria used in our study

| Validation Criteria |
|---|
| Contrast colours support readability & understanding |
| Professional appearance |
| Do not use 'click here' |
| What you clicked on is title of page jumped to |
| Links back to home page are functional & relevant |
| Help features available and easy to access |
| Visited links change colour |
| Site map |
| Interactive form validated for input |
| Mailto parameters set correctly? |
| Web address simply a case of adding .com or .ie to |
| Useful search engine provided |
| Site search provided |
| FAQ |
| Data Protection & Privacy |

2.2. Findings and Analysis

Table 5 shows the number of companies who defaulted and the number of companies who adhered to selected criteria. Twelve websites did not include a link to their data protection and privacy policy. A help and Frequently Asked Questions (FAQ) page is a general requirement for good website design. As far as navigation goes, this page should tell the user how to find products or information and how to get to the sitemap; yet 10 companies did not have a FAQ link and 11 did not have help features available and easy to access. Seven out of the 21 sites evaluated did not have the mailto parameters set correctly.

Fifteen sites had fully functional and relevant links to other pages and back to the homepage. Thirteen sites promoted contrast colours supporting readability and understanding, and 19 had a professional feel and appearance and did not have horizontal scroll bars. Twenty of the total twenty one sites adhered to the criterion of having the title of the page jumped to also as the label of the link connecting to it.
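The percentages quoted in the findings are simple proportions of the 21 sites reviewed. As a hedged illustration of that arithmetic, the sketch below recomputes a few of them from defaulted/adhered counts reported in Table 5; the dictionary layout and the helper name `percent` are choices made for this example, and rounding to the nearest whole percent is an assumption about how the figures were prepared.

```python
TOTAL_SITES = 21

# (defaulted, adhered) counts for a subset of criteria, copied from Table 5.
TABLE_5_COUNTS = {
    "Professional appearance": (2, 19),
    "Visited links change colour": (16, 5),
    "Site map available": (6, 15),
    "Form validation for input": (17, 4),
    "Useful search engine provided": (19, 2),
    "Data Protection and Privacy": (12, 9),
}


def percent(count, total=TOTAL_SITES):
    """Share of sites as a whole-number percentage."""
    return round(100 * count / total)


for criterion, (defaulted, adhered) in TABLE_5_COUNTS.items():
    # Every site either defaulted or adhered, so the two counts must sum to 21.
    assert defaulted + adhered == TOTAL_SITES
    print(f"{criterion}: defaulted {percent(defaulted)}%, adhered {percent(adhered)}%")
```

Checking that each pair of counts sums to 21 is a cheap way to catch transcription slips when figures are copied between a table and a chart.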
The percentage of sites that adhered to the criteria and the percentage of sites that defaulted on the criteria are shown in figure 2.

Table 5. Criteria for website evaluation

| Validation Criteria | Defaulted | Adhered |
|---|---|---|
| Contrast colours support readability and understanding | 8 | 13 |
| Professional appearance | 2 | 19 |
| No use of 'click here' links | 12 | 9 |
| What is clicked on is title of page jumped to | 1 | 20 |
| Links to home page functional and relevant | 6 | 15 |
| Help features available & easy to access | 11 | 10 |
| Visited links change colour | 16 | 5 |
| Site map available | 6 | 15 |
| Form validation for input | 17 | 4 |
| Mailto parameters set correctly | 7 | 14 |
| Web address is a case of adding .com or .ie to company name | 4 | 17 |
| Useful search engine provided | 19 | 2 |
| Site search provided | 7 | 14 |
| Frequently Asked Questions | 10 | 11 |
| Data Protection and Privacy | 12 | 9 |

Figure 2. Percentage of sites that defaulted and percentage of sites that adhered to selected criteria (a-Colours support readability; b-Professional appearance; c-What clicked on was title of page jumped to; d-Links functional & relevant; e-Help features available & easy to access; f-Mailto parameters set correctly; g-Use search engine; h-Frequently asked question page; i-Data protection & privacy)

Figure 3 depicts the results from the Friendly URLs (Uniform Resource Locators). Seventeen (81%) of the 21 sites tested had well structured semantic URLs, made up of the actual name of the specific company, where we could guess the URL by simply adding .com, .org or .ie to the company name. For example a company named ‘Jitnu’ had a URL http://www.jitnu.ie or http://www.jitnu.com or http://www.jitnu.org as the web address, which conveys meaning and structure.
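The friendly URL criterion can be sketched as a small check. This is an illustration under stated assumptions rather than the test procedure used in the study: the function names are hypothetical, the company name is assumed to be a single word, and treating any query string or any file extension in the path as "unfriendly" generalises the paper's counter-examples (`?id=478` and `.php`).

```python
import posixpath
from urllib.parse import urlsplit

# Suffixes named in the paper for guessing a company's web address.
ALLOWED_SUFFIXES = (".com", ".org", ".ie")


def is_guessable_host(url, company):
    """True if the host is the company name plus .com, .org or .ie (optional www.)."""
    host = (urlsplit(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[len("www."):]
    return host in {company.lower() + suffix for suffix in ALLOWED_SUFFIXES}


def is_friendly_url(url, company):
    """True if the host is guessable and the URL has no query string or file extension."""
    parts = urlsplit(url)
    has_query = bool(parts.query)
    has_extension = posixpath.splitext(parts.path)[1] != ""
    return is_guessable_host(url, company) and not has_query and not has_extension
```

With the paper's example company, `is_friendly_url("http://www.jitnu.ie/services", "Jitnu")` is accepted, while `http://www.jitnu.ie/?id=478` is rejected because of its query string.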
Only 19% of the companies examined defaulted on these criteria, having a URL such as http://www.jitnu.ie/?id=478 instead of http://www.jitnu.ie/services, or having a file extension like .php as part of their URL.

Figure 3. Results of the friendly URL's criteria (web address adding .com, .org or .ie: adhered to, 17, 81%; defaulted on, 4, 19%)

Figure 4 shows that 16 (76%) of the 21 sites examined used the same link colour for visited and unvisited pages and did not support a convention that users expect. Failing on this navigational aid could well increase navigational confusion and introduce usability problems for the user. Good practice is to let viewers see their navigation path history (i.e. pages they have already visited) by displaying links to ‘visited pages’ in a different colour.

Figure 4. Visited pages changed link colour (did not change colour, 16, 76%; changed colour, 5, 24%)

Sitemaps are particularly beneficial when users cannot access all areas of a website through the browsing interface. Failure to provide this access option may lose potential viewers. A large website should contain a site map and search option. From analysis of our findings in figure 5, we show that six websites (29%) did not provide a site map.

Visitors appreciate search capability on sites that deal with several different products or services. In figure 6 we show that although adding a search function on a website helps visitors to quickly find information they need, seven (33%) of the 21 sites reviewed failed to provide a comprehensive site search or search interface.

Figure 5. Site map (defaulted, 6; adhered to, 15)

While creating a good navigation system will be sufficient help for many people, it won't meet the needs of everyone.
It appears that these companies fail to realise the importance of providing a search capability, which not only makes sites more interactive but also gives visitors more control over their browsing experience.

Figure 6. Search facility (is a useful search engine provided: no search facility provided, 19; search facility provided, 2)

Figure 7 shows the results of checking user-entered email addresses for valid input. An email address should contain one and only one (@) and also contain at least one (.). There should be no spaces or extra (@). There must be at least one (.) after the (@) for an email address to be valid. Some websites had implemented some form of email address validation but did so incorrectly. For example, they correctly rejected jitnu.eircom.net and jitnu@eircom@net as invalid email addresses; however, they incorrectly accepted 'jitnu.eircom@net' as a valid email address, thus allowing an invalid email address to pass to the system as valid. While they correctly checked for the presence of the (@) and the (.), they did not check the order in which the (@) and the (.) appeared in the inputted email address. From the review of the sites we found that 17 (81%) had no validation process on email addresses while only 4 (19%) of the 21 sites reviewed, shown in figure 7, had complete validation.

Figure 7. Email validation (interactive form validated for input: passed input validation, 4, 19%; failed input validation, 17, 81%)

Figure 8 shows that 12 (57%) of the 21 websites were careless about their link text quality by using ‘Click Here’, which does not give an indication of the content of the linked page, while nine (43%) used meaningful link text which clearly identified the target of the links.
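The email rules described for Figure 7 can be sketched as follows. The first function is an assumed reconstruction of the kind of incomplete check the paper reports (presence of a single (@) and of a (.) anywhere); the second adds the ordering rule. Both function names are hypothetical, and the rules are only the minimal ones stated in the paper, not a full address grammar.

```python
def naive_email_check(address):
    """Incomplete check: exactly one '@' and a '.' somewhere in the address."""
    return address.count("@") == 1 and "." in address


def follows_paper_rules(address):
    """Check the paper's rules: no spaces, exactly one '@', and a '.' after the '@'."""
    if any(ch.isspace() for ch in address):
        return False
    if address.count("@") != 1:
        return False
    _local_part, domain = address.split("@")
    # The ordering rule: the '.' must appear in the part after the '@'.
    return "." in domain


# The three examples from the paper plus one well-formed address.
for candidate in ("jitnu.eircom.net", "jitnu@eircom@net", "jitnu.eircom@net", "jitnu@eircom.net"):
    print(candidate, naive_email_check(candidate), follows_paper_rules(candidate))
```

The two functions disagree only on `jitnu.eircom@net`, which is the case the paper highlights. Passing these rules shows that an address is plausibly formed, not that the mailbox exists.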
Figure 8. Click here anchors (use typed link labels on HTML anchors; do not use 'click here': defaulted, used 'click here', 12; adhered to, did not use 'click here', 9)

Figure 9 shows the number of companies that observed and adhered to the quality criteria set out for this review and figure 10 shows the number that defaulted on the above criteria. It can be seen that 19 sites had a professional appearance with no annoying horizontal scroll bars and 20 sites used the page title of the page linked to as an anchor. However, only 2 of the 21 sites under review had provided a site search option and 4 sites had complete validation on email addresses.

Figure 11 shows that six out of 21 sites under review did not have their ‘mailto’ parameter set correctly to facilitate the user with an easy feedback option, and none of the 21 sites provided an option for the users to view the feedback provided by other users. Providing feedback options greatly enhances the website, as many errors go unnoticed until the user draws them to the attention of the organization.

From our analysis we found that all sites had good page load speed, between a minimum of 0.5 seconds and a maximum of 1.5 seconds, with an average load speed of .89 seconds. The average download per Kb was .26 seconds with a minimum speed of .02 seconds and a maximum speed of 3.76 seconds. The minimum site size was .17 kb, the maximum site size was 45.68 kb and the average site size was 20.45 kb.

Figure 9. Adhered to selected criteria (a-Colours support readability; b-Professional appearance; c-What clicked on was title of page jumped to; d-Links functional & relevant; e-Help features available & easy to access; f-Mailto parameters set correctly; g-Use search engine; h-Frequently asked question page; i-Data protection & privacy). Counts for a to i: 13, 19, 20, 15, 10, 15, 2, 11, 9.

Figure 10. Defaulted on selected criteria (a-Colours support readability; b-Professional appearance; c-What clicked on was title of page jumped to; d-Links functional & relevant; e-Help features available & easy to access; f-Mailto parameters set correctly; g-Use search engine; h-Frequently asked question page; i-Data protection & privacy). Counts for a to i: 8, 2, 1, 6, 11, 6, 19, 10, 12.

3. Summary

The Internet is an interactive channel and successful websites are those that are built on a foundation of interactivity. As the Internet's first real feedback mechanism, forms processing is still the most widely used interactivity on websites. Companies have invested vast amounts of money and resources to make their website a strategic part of their business. But what do their customers actually experience when they perform a transaction on their site?

From our study we found that the web applications under investigation were notorious for taking practically any type of input, assuming that it's valid, and processing it further. Not validating input is one of the biggest mistakes that Web-application developers make. This can lead to database corruption. The results from our investigation of the company websites show that one of the biggest problems with online forms on the web is that many corporate companies failed to validate user input to ensure reliability, which potentially resulted in a database full of useless information (see figure 12, figure 13 and figure 14).

Figure 11. Feedback option and accessibility (number out of 21: feedback 'mailto' parameters not set correctly, 6; users cannot see the feedback that others have provided, 21)

Figure 12. Example of no date of birth validation on corporate website form

Figure 13. Example of trusting the user to enter and validate their own data

Figure 14. Example of useless information collected in one Irish corporate website

This study highlighted that to date a large number of web applications have not used mechanisms to validate even the very basic data input at the source of collection. Given that the sites under review in this study included large quoted companies, small quoted companies, charities and not for profit, statutory and unquoted organizations, and that some had been recognized for excellence in financial reporting, it was surprising to find that 81% of the sites under examination failed on basic input validation. All 100% of large quoted companies and 100% of small quoted companies failed in their email input validation, while 67% of charities/not for profit organizations and 67% of statutory and unquoted organizations under investigation failed to validate emails. No less than 90% failed to provide a useful search engine but 71% did provide a site map.

Providing a site search function makes the site searchable. The sitemap should include every page on the site, categorized for easier navigation. These are the links that users look for when they cannot find what they are actually looking for on the site. However, 67% provided a site search facility and 81% had friendly URLs that were easy to remember, and most sites had a good design layout that was consistent throughout. The consistency aspect of quality was closely adhered to by all sites, making it easier for the user to navigate.

4. Conclusions

Today's Internet user expects to experience personalized interaction with websites. If the company fails to deliver they run the risk of losing a potential customer forever.
An important aspect of creating interactive web forms to collect information from users is to be able to check that the information entered is valid; therefore, information submitted through these forms should be extensively validated. Validation could be performed using client script, where errors are detected when the form is submitted to the server; if any errors are found, the submission of the form to the server is cancelled and all errors are displayed to the user. This allows the user to correct their input before re-submitting the form to the server. The importance of input validation, which ensures that the application is robust against all forms of input data obtained from the user, should not be underestimated.

Although the majority of web vulnerabilities are easy to understand and avoid, many web developers are unfortunately not very security-aware. A company database needs to be of reliable quality in order to be usable. A simple check of whether a website conforms to the very basic standards could have been done using the W3C HTML validation service, which is free to use.

Web developers need to become aware of, and trained in, Information Quality Management principles, and especially the information quality dimensions outlined in Table 1. The only proven reliable way to deal with bad data is to prevent it from entering the system. Input can be compared against a specific value, checked to ensure that an input field was filled, and checked to ensure that the value falls within a certain range. Allowing bad data into the system makes the entire system unreliable and indeed unusable.

Making purchases online is all about confidence; a customer must feel assured that you are a reputable company, and the best way to project that image is through a well designed website. A consumer visiting a website that looks a little dodgy will not feel confident enough to submit their credit card information.
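The submit-time behaviour described above (run every check, cancel the submission if anything fails, and show all errors at once) can be sketched as below. The paper describes this as client script; the sketch uses Python only to show the control flow, and the same gate would also be repeated on the server. The field names, rule table and `store` list are hypothetical.

```python
def required(value):
    """Presence rule: return an error message, or None if the value is acceptable."""
    return None if value.strip() else "this field is required"


def in_range(low, high):
    """Build a range rule for whole numbers between low and high inclusive."""
    def check(value):
        try:
            number = int(value)
        except ValueError:
            return "must be a whole number"
        return None if low <= number <= high else f"must be between {low} and {high}"
    return check


# Hypothetical rule table: each field maps to the checks applied in order.
RULES = {"name": [required], "quantity": [required, in_range(1, 10)]}


def handle_submit(form, store, rules=RULES):
    """Return (accepted, errors); the form is stored only when no check fails."""
    errors = {}
    for field, checks in rules.items():
        value = form.get(field, "")
        for check in checks:
            message = check(value)
            if message:
                errors[field] = message  # keep the first message for this field
                break                    # but carry on with the other fields
    if errors:
        return False, errors             # submission cancelled, all errors reported
    store.append(dict(form))
    return True, {}
```

Reporting every failing field in one pass lets the user correct all input before re-submitting, rather than discovering one problem per attempt.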
Slow response times and difficult navigation are the most common complaints of Internet users. After waiting past a certain ‘attention threshold’ users look for a faster site. Of course, exactly where that threshold is d epends on many factors. How compelling is the experience? Is there effective feedback? Etc. Our analysis identified these and many other shortcomings that should have been realised and dealt with during the website test phases. Many problems could be eliminated by checking for letters (al- phabet entries only); checking for numbers (numeric en- tries only); checking for a valid range of values ; checking for a valid date input; and checking for valid email ad- dresses. However, it is important to keep in mind that a user could enter a valid e-mail address that does not actu- ally exist. It is therefore, imperative that some sort of ac- tivation process needs to be done in order to confirm a valid and correct email add ress. 5. Acknowledgements This research is funded by Dublin City University Busi- ness School. REFERENCES [1] Barnes S. J., and Vidgen R.T., An Integrative Ap- proach to the Assessment of E-Commerce Quality, Journal of Electronic Commerce Research, Vol. 3, No. 3, (2002) pp.114-27. [2] Beckford J., 2nd edition, Quality, Rutledge Taylor and Frances Group, London and New York (2005). [3] Bugajski J., Grossman R. L., Tang Zhao., An event based framework for improving information quality that integrates baseline models, casual models and formal models, IQIS 2005 ACM 1-59593-160-0/5/06. (2005). [4] Cusimano Com Corp., Planning and Designing an Effective Web Site, http://www.cusimano.com/webdesign/articles/effecti v.html . [5] Clarke R., Internet Privacy Concerns Confirm the Case for Intervention, Communications of the ACM, Vol. 42, No. 2, (1999) pp.60-68. [6] Cranor L.F., Internet Privacy, Communications of the ACM, Vol. 42, No. 2, (19 9 9) , pp.28-31. 
[7] Eppler, M & Muenzenmayer, P, Measuring information quality in the web context: A survey of state of the art instruments and an application methodology. Proceedings of 7th international conference on information quality, (2002), pp.187-196.
[8] Fraternali, P., Tools and Approaches for Developing Data-Intensive Web Applications: A Survey, ACM Computing Surveys, Vol.31, No.3, (1999).
[9] Internet World Statistics http://www.internetworldstats.com.
[10] FFHS Web Award http://www.ffhs.org.uk/awards/web/criteria.php.
[11] Gefen D., E-Commerce: The Role of Familiarity and Trust, Omega, Vol. 28, No. 6, (2000), pp.725-737.
[12] Hlynka D., and Welsh J., What Makes an Effective Home Page? A Critical Analysis, http://www.umanitoba.ca/faculties/education/cmns/aect.html.
[13] ISO 8402, Quality Management Assurance - Vocabulary, Int’l Org. for Standardization, (1994) http://www.techstreet.com/iso_resource_center/iso_9000_meet.tmpl.
[14] ISO 9241-11, International Organization for Standardization, Geneva, (1998). http://www.idemployee.id.tue.nl/g.w.m.rauterberg/lecturenotes/ISO9241part11.pdf.
[15] Jarvenpaa S.L., N. Tractinsky, and M. Vitale, Consumer Trust in an Internet Store, Information Technology and Management, Vol. 1, No. 1, (2000), pp.45-71.
[16] Kelly B., and Vidgen R., A Quality Framework for Web Site Quality: User Satisfaction and Quality Assurance, WWW 2005, May 10-14, 2005, Chiba, Japan, ACM 1-59593-051-5/05/0005, (2005).
[17] Kahn B.K., Strong D.M. & Wang R.Y., Information Quality benchmarks: Product and service performance, Communications of the ACM, Vol. 45, No 4, (2002), pp.184-192.
[18] Kumar G, Ballou T, Ballou D.P., Guest editors, Examining data Quality, Communications of the ACM, Vol. 41, No 2, (1998), pp.54-57.
[19] Useit.com: http://www.useit.com/.
[20] Manchala D.W., E-Commerce Trust Metrics and Models, IEEE Internet Computing, Vol. 4, No. 2, (2000), pp.36-44.
[21] Mandel T., Quality Technical Information: Paving the Way for UsableW3C Web Content Accessibility Guidelines 1.0, http://www.w3.org/tr/wai-webcontent/.
[22] McKnight, D.H., L.L. Cummings, and N.L. Chervany, Initial Trust Formation in New Organizational Relationships, Academy of Management Review, Vol. 23, (1998), pp.473-490.
[23] McKnight D.H., and N.L. Chervany, An Integrative Approach to the Assessment of E-Commerce Quality, Journal of Electronic Commerce Research, Vol. 3, No. 3, (2002), pp.114-127.
[24] Web standards checklist. http://www.maxdesign.com.au/presentation/checklist.htm.
[25] Olson J.E., Data Quality: The Accuracy dimension, Morgan Kaufmann, ISBN 1558608915, (2003).
[26] Open Web Application Security Project, http://umn.dl.sourceforge.net/sourceforge/owasp/OWASPTopTen2004.pdf.
[27] Orr K., Data Quality and Systems, Communications of the ACM, Vol. 41, No 2, (1998), pp.66-71.
[28] Pernici B., and Scannapieco M., Data Quality in Web Information Systems, (2002) 397-413.
[29] Pike R.J., Barnes R., TQM in Action: A practical approach to continuous performance improvement, Springer, ISBN 0412715309 (1996).
[30] Mandel T., Print and Web Interface Design, ACM Journal of Computer Documentation, Vol. 26, No. 3. ISSN: 1527-6805, (2002).
[31] Poll R., and te Boekhorst, P., Measuring quality performance measurement in libraries, 2nd revised., Saur, Munchen, IFLA publications Durban, 127, (2007) http://www.ifla.org/iv/ifla73/index.htm.
[32] Quality Criteria for Website Excellence World Best Website Awards http://www.worldbestwebsites.com/criteria.htm.
[33] Redman T.C., Improve Data Quality for Competitive Advantage, Sloan Management Review, Vol. 36, No 2, (1995), pp.99-107.
[34] Schulz U., Web usability, http://www.bui.fh-hamburg.de/pers/ursula.schulz/webusability/webusability.html.
[35] Selfseo http://www.selfseo.com/website_speed_test.php.
[36] Strong D. M., Lee Y. W., Wang R.Y., Data Quality in Context, Communications of the ACM, Vol. 40, No 5, (1997), pp.103-109.
[37] Stylianou A.C., Kumar R.L., An integrative framework for IS Quality management, Communications of the ACM, Vol. 43, No 9, (2000), pp.99-104.
[38] Tauscher L., Greenberg S., How people revisit web pages: Empirical findings and implication for the design of history systems, International Journal of Human-Computer Studies, Vol. 47, (1997), pp.97-137.
[39] McClain T., http://www.e-bzzz.com/web_design.htm.
[40] Tilton J. E., Composing Good HTML, http://www.cs.cmu.edu/~tilt/cgh/.
[41] Wang R.Y., and Strong D.M., Beyond accuracy: what data quality means to data consumers, Journal of Management Information Systems, Vol. 12, No 4, (1996), pp.5-34.
[42] Wang R.Y., A product perspective on Total Data Quality Management, Communications of the ACM, Vol.41, No.2, (1998), pp.58-65.
[43] Web standards http://www.webstandardsawards.com/criteria/#Criteria.
[44] Zhu X., and Gauch S., Incorporating quality metrics in centralized distributed information retrieval on the World Wide Web. Proceedings of the 23rd annual international ACM SIGIR conference on Research and development in information retrieval, Athens, Greece. (2000), pp.288-295.

AUTHORS’ BIOGRAPHIES
Mary Levis joined Dublin City University Business School as a full-time Ph.D Research Scholar in the field of Information Management in November 2006. Mary’s work is co-supervised by Dr. Malcolm Brady, Dublin City University Business School, and Dr. Markus Helfert, Dublin City University (School of Computing). Her studies are funded for three years by a scholarship from Dublin City University Business School.
She holds a B.Sc degree in Computer Applications (Info Sys) from Dublin City University (School of Computing). Mary has presented her work at many International conferences. Mary is a member of the Irish Computer Society (ICS), British Computer Society (BCS), the International Association of Information Data Quality (IAIDQ), Engineers Ireland (EI) and United Kingdom Academy for Information Systems (UKAIS) Institute for the Management of Information Systems. Mary has been invited by the International Conference on Enterprise Information Systems (ICEIS) to be session chair of an ICEIS-2008 session(s), in Barcelona, Spain. Email: Mary.levis2@mail.dcu.ie

Dr. Malcolm Brady has lectured in management at Dublin City University Business School since 1996. Prior to joining DCU he worked for many years as an IT consultant in the financial services and utility industries and before that as a civil engineer in the design and construction of gas, water, and sewerage systems. He teaches courses in strategic management, project management, and business process innovation. His research interests are in game theory, duopoly, advertising, differentiation and competitive advantage. He graduated from University College Dublin with a Bachelors degree in Civil Engineering and a Masters degree in Management Science; he holds a Diplôme d'Ingénieur from Ecole National Supérieur du Pétrole et des Moteurs, a French Grande Ecole; he obtained an MBA from Dublin City University. He recently completed a PhD from the Department of Economics at Lancaster University. Email: malcolm.brady@dcu.ie

Dr. Markus Helfert is a lecturer in Information Systems at Dublin City University, Ireland and programme chair of the European M.Sc. in Business Informatics at Dublin City University. His research interests include Information Quality, Data Warehousing, Information System Architectures, Supply Chain Management and Business Informatics education.
His current research in information quality builds on his PhD research in data quality management in data warehouse systems. He holds a Doctor in business administration from the University of St. Gallen (Switzerland), a Master-Degree in business informatics from the University Mannheim (Germany) and a Bachelor of Science from Napier University, Edinburgh (UK-Scotland). He has authored academic articles and book contributions and has presented his work at international conferences. Email: markus.helfert@dcu.ie