Journal of Information Security

Vol. 07, No. 02 (2016), Article ID: 65142, 21 pages

10.4236/jis.2016.72005

Is Public Co-Ordination of Investment in Information Security Desirable?

Christos Ioannidis¹, David Pym², Julian Williams³

¹Department of Economics, University of Bath, Bath, UK

²Department of Computer Science, University College London, London, UK

³Durham University Business School, Durham, UK

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 21 March 2016; accepted 27 March 2016; published 30 March 2016

ABSTRACT

This paper presents, in an integrated manner, a sequence of results addressing the consequences of the presence of an information steward in an ecosystem under attack, and establishes the appropriate defensive investment responses, thus providing a cohesive understanding of the nature of the information steward in a variety of attack contexts. We determine the level of investment in information security and the attacking intensity when agents react in a non-coordinated manner, and compare them to the case in which the system’s response is coordinated under the guidance of a steward. We show that only in the best-designed institutional set-up does the presence of a well-informed steward increase the system’s resilience to attacks. When both the information available to the steward and its policy instruments are curtailed, coordinated policy responses yield no additional benefits to individual agents, and in some cases they actually compare unfavourably with atomistic responses. The system’s sustainability does improve in the presence of a steward, which deters attackers and reduces the number and intensity of attacks. In most cases, the resulting investment expenditure undertaken by the agents in the ecosystem exceeds its Pareto-efficient magnitude.

Keywords:

Information Security, Information Stewardship, Investment, Public Co-Ordination

1. Information Stewardship

Information produces value for an organization or individual when it improves the solutions to decision-making problems whose outcomes have consequences for their welfare. The information system refers to the entire collection of data sources and related service capabilities, both internal and external to the organization, that decision makers are required to use. The system is user-centred because it serves the objectives of the organization by providing the information needed to achieve its mission.

The security of such systems is of paramount importance, and economic agents are willing to allocate scarce resources to protecting the system when it is threatened. Information systems based almost exclusively on digital technologies are subject to, and degraded by, cyberattacks, which are initiated and executed remotely. As the targets of such attacks have no clear means of identifying the initiators and stopping their activities, their main concern is to preserve the system’s functionality in all its dimensions by allocating resources, and thus incurring costs, to maintain it at the level of operational capacity required by the organization. More specifically, information security is conventionally defined as protecting the system’s confidentiality, integrity and availability (CIA).

The aim of this paper is to examine whether decisions about expenditure/investment in information security should be socially coordinated via a steward, or whether such decisions are better left to individual organizations. The subsequent discussion and models follow [1]-[4] closely; the proofs elided here may be found there. This paper’s contribution lies in integrating a dispersed body of work addressing the co-ordination of investment in information security. Whilst the problems of sustainability and resilience have been addressed separately, combining them in a single theoretical framework provides a clear appreciation of the policy issues emerging from public co-ordination. Related organizational issues have been examined in [5]. There is no generally accepted scientific definition of the concept of stewardship. In general terms, stewardship is an ethic guiding the allocation and management of some of the participants’ resources in an ecosystem (household, common-interest community, commercial firm, etc.) in order to sustain and protect the ecosystem in the presence of anticipated and unanticipated shocks, rather than to maximize the welfare of individual agents. The steward will be part of the ecosystem itself and can emerge from either internal or external forces.

In environmental economics, stewardship is an established tool for environmental and natural-resource management (see [6]) and for climate-change risk mitigation [7]. In the context of information security, we define the steward as the institution that maintains the sustainability and resilience of the ecosystem’s operational capacity (that is, its levels of confidentiality, integrity, and availability), which may be threatened by attacks. By resilience (cf. [8]), we mean the system’s internal capacity to restore itself to an acceptable operating state following a disturbance to its status; by sustainability, we mean the tendency of the system to maintain itself within acceptable bounds of operating state despite possibly hidden dynamics that may tend to guide the system outwith these bounds.

Stewards might emerge as a consequence of the behaviour of agents interacting in a system of exchange which is, in turn, conditioned by their preferences, the established legal framework, and existing social conventions. Such conventions, known as norms, are either descriptive (describing what actions the agents in the system take) or prescriptive (influencing what behaviour ought to be). The legal framework expresses the system’s values and determines the consequences (punishment) for actions deviating from those values. [9] argued that agents derive benefits from the supply of the public good (in this context, the sustainability and resilience of the ecosystem) and, more importantly, that they have an intrinsic motivation to undertake costly effort towards the production of the public good.

Part of the role of the steward is to alter what constitutes normal behaviour. Agents in a decentralized ecosystem may hold beliefs, based on incomplete information, about the contributions of others, and are thus in danger of holding erroneous perceptions of the true societal norm. By dispelling such misperceptions, the steward can attain substantial benefits for the system as participants modify their behaviour under its guidance. For example, when agents are excessively optimistic about the conduct of others, the result is a fall in compliance. In this context, to protect an ecosystem that is subject to shocks and in secular decline, the steward will exercise the option available to him: prescriptive intervention. Such interventions range from widely publicized public campaigns to enforceable standards; they boost the social pressure on individual agents to comply and make failure to meet these standards punishable. The underlying assumption here is that the steward is better informed than individuals about the currently prevailing community standards. In a more general setting, the steward knows the underlying distribution of preferences, risk aversion, and discount rates in society, information which is difficult and costly for a single agent to collect and process. The importance of the legal framework in delivering binding agreements for the production of the public good has been studied by [10] [11], among others. Such studies show that compliance is raised when its level has been chosen through a vote by the participants of the community/ecosystem. To maximize the effectiveness of its actions, the law-maker/principal/steward, in setting the regulatory framework and other obligations/incentives, must take into account the impact of its actions on the formation of the norms that will then be expected to prevail.
To illustrate the point in the context of investment in cybersecurity, consider a steward signalling to its community the need for very high levels of IT defence expenditure. Such a request actually signals that the current situation is very dangerous, and this might deter well-meaning agents, as they perceive themselves to be spending too much compared with their community. It is important that the steward provides a framework in which the behaviour of each individual is observed by others, to ensure compliance with the chosen standard.

The exercise of stewardship is costly both to the ecosystem participants and to the agents assuming this role, as it requires investment of resources in infrastructure, the creation of a legal framework, and the monitoring required to deliver its mission. The motives for agents to engage in such activities are diverse, ranging from individual welfare maximization to a form of altruistic behaviour. Simple profit maximization can be seen as the motive of a retailer who voluntarily assumes the role of steward when multiple sellers use its platform to connect with consumers. In this case, the retailer acting as the steward ensures that contracts negotiated between sellers and consumers on its platform are honoured, so that the ecosystem maintains its credibility as a space for secure commercial transactions.

Profit maximization is by no means the only motive for engaging in stewardship. Recent studies in psychology and economics provide strong empirical evidence for the existence of pro-social behaviour; for recent surveys, see [9] [12] [13]. That is, agents frequently engage in costly activities whose benefits accrue to others. In a highly decentralized ecosystem, some agents will possess the intrinsic motivation to behave pro-socially. Such agents will typically have two motivations: first, they will care about the overall provision of the public good to which their individual actions contribute; second, they will care about the consumption of this good by others.

We begin by developing separate models that elucidate in some detail the concepts of resilience and sustainability in terms of investment in information security, with and without the information steward. Both models, presented in Sections 2 and 3 (following [1]-[4]), are based on strategic interactions between the agents of the ecosystem, who wish to protect their information resources, and attackers, who derive benefits from degrading the system’s operational capacity. Both attackers and defenders incur costs whilst engaging in their respective activities, and the extent of their involvement in defending and attacking is determined by the existing technologies, time preferences, and the value of the information assets.

The two figures below depict these concepts in terms of the time evolution of the system’s operational capacity in the presence, first, of secular deterioration and, second, of unanticipated performance-reducing shocks. The dynamics of resilience are depicted in Figure 1. In this graph, we depict the system’s predictable time path within the acceptable tolerances in performance, denoted by its nominal operational capacity. At some point along this path, the system experiences an unanticipated shock of moderate magnitude that degrades its capacity, placing it outside the acceptable range and guiding the system towards lower capacity levels. In the absence of the steward, such a shock may prove permanently detrimental to the state of the system, with the system’s path depicted by the broken line. The actions of the steward render the system resilient, as they are able to reverse the divergent path and restore the system to its “trend” capacity (solid line) up to the planning horizon, T. Alternatively, in the presence of a substantial shock, such as the larger one depicted in Figure 1, the best the steward can achieve is to halt the system’s rapid deterioration and stabilize its operational capacity at a steady, albeit lower, level.
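The qualitative behaviour described for Figure 1 can be mimicked by a small discrete-time simulation. This is a purely illustrative sketch, not the model of [1]-[4]: the trend level, the drift after an unchecked shock, the reversion speed `kappa`, and the shock size `max_recoverable` beyond which the steward can only halt the decline are all hypothetical parameters chosen for the example.

```python
def capacity_path(shock, steward, periods=40, t_shock=10, trend=1.0,
                  drift=0.01, kappa=0.3, max_recoverable=0.3):
    """Toy operational-capacity path after a single shock (hypothetical dynamics)."""
    capacity = trend
    path = []
    for t in range(periods):
        if t == t_shock:
            capacity -= shock                         # unanticipated shock
        elif t > t_shock:
            if not steward:
                capacity -= drift                     # divergent path (broken line)
            elif shock <= max_recoverable:
                capacity += kappa * (trend - capacity)  # steward restores the trend
            # otherwise: a substantial shock; the steward only halts the decline
        path.append(capacity)
    return path


LOWER_BOUND = 0.8  # hypothetical edge of the acceptable operating range

moderate_with = capacity_path(0.25, steward=True)
moderate_without = capacity_path(0.25, steward=False)
large_with = capacity_path(0.5, steward=True)
print(round(moderate_with[-1], 4), round(moderate_without[-1], 4), round(large_with[-1], 4))
```

With a moderate shock of 0.25 the steward returns capacity to the trend, whereas without the steward capacity keeps drifting below the acceptable bound; a shock of 0.5 exceeds `max_recoverable`, so the steward merely stabilizes capacity at the lower level.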

The dynamics of sustainability are depicted in Figure 2. In this graph, we characterize the system’s equilibrium course over time. We envisage that the system degrades steadily and predictably along this path. Without the steward, its internal dynamic structure will leave the system unable to perform within the acceptable bounds before the planning horizon, T. The steward’s contribution to the system’s sustainability is to slow the rate of degradation so that the system remains within these bounds beyond T. Again, the steward adopts the relevant policies and installs the required institutional framework at the outset. Therefore, the steward permanently changes the long-term dynamic structure of the system from the beginning, permitting the system to enjoy a considerable extension of its useful life compared with the state in which the steward is absent.
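Figure 2 can be read through a deliberately simple, hypothetical linear-degradation rule: capacity falls at a constant rate until it leaves the acceptable band, and the steward’s intervention at the outset lowers that rate. The capacities, rates, and ten-year horizon below are illustrative assumptions, not values from the paper.

```python
import math


def time_to_failure(capacity0, lower_bound, degradation_rate):
    """Years until linear degradation takes capacity below the acceptable bound."""
    if degradation_rate <= 0:
        return math.inf  # no degradation: the system never leaves the band
    return (capacity0 - lower_bound) / degradation_rate


HORIZON_T = 10.0  # planning horizon in years (hypothetical)
without_steward = time_to_failure(1.0, 0.8, degradation_rate=0.04)
with_steward = time_to_failure(1.0, 0.8, degradation_rate=0.015)  # steward acts at t = 0

print(f"fails after {without_steward:.1f} y without, {with_steward:.1f} y with steward")
print("sustainable to T:", with_steward > HORIZON_T)
```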

By adopting such actions/policies, the steward extends the life of the system and minimizes the impact of shocks, enhancing the predictability and robustness of the system’s performance. The benefits of such an institutional arrangement accrue to all the participants of the ecosystem, reducing the incentive for existing members to abandon it and encouraging new agents to join. Figure 3 nests the previous notions of stewardship into a multi-period representation of system sustainability.

Figure 1. Resilience.

Figure 2. Sustainability.

There are prominent examples of the exercise of information stewardship in EU, UK, and US legislation. For example, consider the contribution of the Freedom of Information Acts in the UK and US to policy decision-making. In their absence, the information provided by the public would be limited by the public’s perception of misuse, thereby restricting the information available to policy-makers. As another example, the US’s response to EU privacy legislation in constituting “Safe Harbor” (http://export.gov/safeharbor/, accessed 4 March 2013) encouraged and maintained trade between the two economies. Both cases are examples of sustainability, as the steward intervenes to maintain the market.

The failure of stewardship to maintain resilience is demonstrated by the failure, in June 2012, of the Royal Bank of Scotland’s payment processing systems, which support an ecosystem of subsidiary banks. A software upgrade corrupted the system and, in the absence of sufficient system management resources, the ecosystem’s payment processing systems ceased to function for a considerable period of time; for several banks, acceptable service levels were restored only after considerable delay.

The remainder of this paper is organized as follows: Section 2 provides a summary of mathematical results on the notion of sustainability, the proofs for which may be found in [2]; Section 3 provides a summary of a mathematical model of resilience in a simple security setting, the proofs for which may be found in [4]; finally, Section 4 provides a brief summary of the implications for stewardship in this setting.

Figure 3. Nesting one-period sustainability into a multi-period framework.

2. A Model of Sustainability

We begin with a condensed outline of the model presented in [1]-[4].

We assume that the steward is able to pass on the cost of his decisions to the agents in the ecosystem whilst improving the agents’ security. In this set-up, individual agents are assumed to face a known probability of successful attack and to possess known mitigation technologies. In both the sustainability and the resilience settings, to demonstrate the role of a steward in enhancing the ecosystem’s sustainability and resilience, we set up a model of strategic interactions between targets (typically firms, but possibly individuals) and attackers (typically individuals, but possibly organized groups). Attackers are assumed to be profit-maximizing and risk-neutral.¹ We introduce the concept of a steward who co-ordinates the defensive expenditure of the targets. We show how the steward, as in Figure 2, seeks to sustain the life of the ecosystem to at least the planning horizon by setting mandatory levels of defensive expenditure for each target. These are set using time preferences for the valuation of expected future losses from successful attacks over the period of his planning horizon.

We begin by evaluating the role of the steward in contributing to the system’s sustainability. We assume that the steward is a Stackelberg policy-maker, who imposes a policy as a first move irrespective of the reactions of the participants in the ecosystem. In addition, we assume that increasing defensive expenditure by an ecosystem participant reduces the likelihood of successful attacks on that participant and that for any given participant, the greater the number of attackers, the higher the likelihood of a successful attack.

Building on the seminal contribution to benefit-cost analysis for information security investments presented by Gordon and Loeb (GL) [14], we present a framework for managing security investments in information ecosystems [5] in which we identify the role of the steward in regulating the allocation of resources by the ecosystem’s participants.

Targets of security attacks are GL expected-loss-minimizers, and attackers act as rational agents. They are assumed to have utility functions with well-defined preferences. All attack and investment decisions are taken discounting under a risk-neutral measure. That is, the combination of time preferences (captured by discount factors), probability measures, and measured losses, L, admits any standard representation (e.g., constant relative or constant absolute) of risk-aversion. The possible difference between the discount factors adopted by the targets and by the steward will be important in evaluating the steward’s contribution to the system’s sustainability.

Consider an ecosystem with $N_T$ targets and a fixed number of attackers. We define the ratio of attackers to targets, that is, the number of attackers per target.

Decisions on security investment are taken at time zero and are assumed to be made with full commitment. The usefulness of time in this context is not to add temporal dynamics to the security investment problem, but to illustrate the impact of different discount rates between participants in the game.

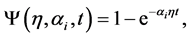

Consider the instantaneous probability that a single attack will be successful in the absence of any defensive expenditure. We assume that attacks have independent success probabilities, and that the probability that an attacker will eventually be successful takes the functional form

where

The expected loss at t, in the absence of security investment, is given by

The present value of losses, in the absence of security investment, given a discount rate

Let

That is, integrating losses multiplied by their probabilities and discounted at rate
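The equations for this step did not survive extraction, so what follows is only a sketch under explicitly assumed functional forms, not the specification of [1]-[4]. It assumes that each of n independent attackers succeeds against an undefended target at a constant instantaneous rate λ (`lam`), so that successes form a Poisson process with rate n·λ; that each success costs L; and that losses are discounted continuously at rate r up to a horizon T. The present value then has the closed form L·n·λ·(1 - e^(-rT))/r, which the code checks against direct numerical integration. Independence also implies that the probability of at least one success by time t, 1 - e^(-n·λ·t), tends to one: eventually an attacker succeeds.

```python
import math


def pv_expected_loss(loss, n_attackers, lam, r, horizon):
    """Closed form of the integral of loss * h * exp(-r t) over [0, horizon], h = n * lam."""
    h = n_attackers * lam
    return loss * h * (1.0 - math.exp(-r * horizon)) / r


def pv_expected_loss_numeric(loss, n_attackers, lam, r, horizon, steps=100_000):
    """Same integral by the midpoint rule, as a check on the closed form."""
    h = n_attackers * lam
    dt = horizon / steps
    return sum(loss * h * math.exp(-r * (k + 0.5) * dt) * dt for k in range(steps))


def prob_compromised_by(t, n_attackers, lam):
    """P(at least one success by time t) for independent Poisson attackers."""
    return 1.0 - math.exp(-n_attackers * lam * t)


closed = pv_expected_loss(1.0, n_attackers=2, lam=0.5, r=0.2, horizon=5.0)
numeric = pv_expected_loss_numeric(1.0, n_attackers=2, lam=0.5, r=0.2, horizon=5.0)
print(round(closed, 6), round(numeric, 6))
print(prob_compromised_by(1.0, 2, 0.5), prob_compromised_by(20.0, 2, 0.5))
```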

2.1. Target Security Investment

As targets are aware of the threats to their information assets, each target i has a control instrument, its defensive expenditure, denoted $x_i$.

We further assume that defensive expenditure reduces the probability of a successful attack by a continuous rate,

Therefore, in the presence of defensive expenditure at time t, the instantaneous expected loss from attacks is now

expected losses constant and independent of defensive expenditure

The term

As targets are risk neutral (relative to their discount rate), the net present value (adding the t0 expenditure of

In the presence of an exogenous

Differentiating the net present value of losses with respect to $x_i$ and setting the derivative equal to zero yields

Therefore, for a given

Thus improvements in protective technology (increasing

For any given monetary loss,
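The extracted text no longer shows the target’s first-order condition, so the following sketch uses assumed functional forms rather than those of [1] [2]. It supposes, hypothetically, that n attackers per target each succeed at rate λ (`lam`), that defensive expenditure x scales this rate by e^(-γx) (one reading of “reduces the probability of a successful attack by a continuous rate”), and that each success costs L, discounted at rate r over [0, T]. The target then minimizes x + L·n·λ·e^(-γx)·A(r, T), with A(r, T) = (1 - e^(-rT))/r, and setting the derivative to zero gives x* = ln(γ·L·n·λ·A)/γ, clamped at zero when even the first unit of spending does not pay. This mirrors the Gordon-Loeb logic of [14]; it is not the paper’s exact expression.

```python
import math


def annuity_factor(r, horizon):
    """PV of a unit flow over [0, horizon] at continuous discount rate r."""
    return (1.0 - math.exp(-r * horizon)) / r


def target_cost(x, loss, n, lam, gamma, r, horizon):
    """Defensive spend plus PV of expected losses (hypothetical toy model)."""
    return x + loss * n * lam * math.exp(-gamma * x) * annuity_factor(r, horizon)


def best_response(loss, n, lam, gamma, r, horizon):
    """Solve 1 = gamma * loss * n * lam * A * exp(-gamma * x), clamped at x = 0.
    Assumes all parameters are strictly positive."""
    k = gamma * loss * n * lam * annuity_factor(r, horizon)
    return max(0.0, math.log(k) / gamma)


params = dict(n=2.0, lam=0.5, gamma=2.0, r=0.2, horizon=5.0)
x_star = best_response(1.0, **params)
print(round(x_star, 4))                 # optimal spend, in the same money units as L
print(best_response(0.01, **params))    # small losses: corner solution 0.0
```

Raising the loss L raises the optimal spend, and for small enough losses the corner solution x* = 0 applies.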

Attackers are non-cooperative and risk neutral, and make rational choices to participate in attacks. The reward for an individual attacker successfully attacking agent i is denoted

can only identify average defensive expenditure

and its present value by

The marginal attacker enters the market until the present value of expected rewards,

Dividing both sides of this equation by R, setting
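The free-entry condition can be illustrated under assumptions of our own, since the paper’s expressions are not recoverable here. Attackers are assumed to observe only average defensive expenditure x̄ and to earn an expected reward flow R·λ·e^(-γx̄), discounted at their own rate r_A over [0, T]. To obtain a finite number of entrants we further assume, purely for illustration, that entry costs rise with crowding, so that the marginal attacker’s cost is c·n^φ for some φ > 0 (`c` and `phi` are hypothetical parameters). Entry stops where the marginal attacker just breaks even, which gives n = (R·λ·e^(-γx̄)·A(r_A, T)/c)^(1/φ).

```python
import math


def annuity_factor(r, horizon):
    """PV of a unit flow over [0, horizon] at continuous discount rate r."""
    return (1.0 - math.exp(-r * horizon)) / r


def pv_reward(x_bar, reward, lam, gamma, r_a, horizon):
    """PV of one attacker's expected rewards against average defence x_bar."""
    return reward * lam * math.exp(-gamma * x_bar) * annuity_factor(r_a, horizon)


def attackers_per_target(x_bar, reward, lam, gamma, r_a, horizon, c, phi):
    """Free entry: the marginal attacker's cost c * n**phi equals the PV of rewards."""
    return (pv_reward(x_bar, reward, lam, gamma, r_a, horizon) / c) ** (1.0 / phi)


att = dict(reward=0.5, lam=0.5, gamma=2.0, r_a=0.3, horizon=5.0, c=0.1, phi=2.0)
for x_bar in (0.0, 0.5, 1.0):
    print(x_bar, round(attackers_per_target(x_bar, **att), 3))
```

Higher average defence lowers the reward to entry and therefore the number of attackers per target.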

The following proposition, proved in [1] [2], establishes the level of optimal expenditure in information security by each target and the attack intensity (attacks per target):

Proposition 1. For
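A non-cooperative equilibrium of the kind described in Proposition 1 can be computed for a hypothetical toy version of the game by iterating best responses to a fixed point. In this toy (our assumptions, not the paper’s forms), each of n attackers per target succeeds at rate λ scaled by e^(-γx); a target spending x minimizes x + L·n·λ·e^(-γx)·A(r_T, T), with A(r, T) = (1 - e^(-rT))/r, giving the best response x = ln(γ·L·n·λ·A(r_T, T))/γ; and attackers enter until the marginal attacker’s rising cost c·n^φ equals the discounted reward R·λ·e^(-γx)·A(r_A, T). Substituting one rule into the other shows that the iteration has slope -1/φ, so it converges for φ > 1, and that the fixed point is x_N = [φ·ln(γ·L·λ·A(r_T, T)) + ln K]/(γ(1 + φ)) with K = R·λ·A(r_A, T)/c, which the code uses as a check.

```python
import math
from dataclasses import dataclass


@dataclass
class Toy:
    """Hypothetical parameters (money in units of $1M, time in years)."""
    loss: float = 1.0     # L, loss per successful attack
    reward: float = 0.5   # R, attacker's reward per success
    lam: float = 0.5      # undefended success rate per attacker
    gamma: float = 2.0    # effectiveness of defensive spend
    c: float = 0.1        # attacker entry-cost scale
    phi: float = 2.0      # attacker entry-cost curvature
    r_t: float = 0.2      # targets' discount rate
    r_a: float = 0.3      # attackers' discount rate
    horizon: float = 5.0  # planning horizon T


def annuity(r, horizon):
    return (1.0 - math.exp(-r * horizon)) / r


def attackers(x_bar, p):
    """Free-entry number of attackers per target given average defence x_bar."""
    k = p.reward * p.lam * annuity(p.r_a, p.horizon) / p.c
    return (k * math.exp(-p.gamma * x_bar)) ** (1.0 / p.phi)


def best_response(n, p):
    """Target's cost-minimizing spend given n attackers per target (clamped at 0)."""
    k = p.gamma * p.loss * n * p.lam * annuity(p.r_t, p.horizon)
    return max(0.0, math.log(k) / p.gamma)


def nash(p, tol=1e-12, max_iter=10_000):
    """Iterate target best responses against attacker entry until they agree."""
    x = 0.0
    for _ in range(max_iter):
        x_new = best_response(attackers(x, p), p)
        if abs(x_new - x) < tol:
            return x_new, attackers(x_new, p)
        x = x_new
    raise RuntimeError("fixed-point iteration did not converge")


p = Toy()
x_n, n_n = nash(p)
k_att = p.reward * p.lam * annuity(p.r_a, p.horizon) / p.c
a = math.log(p.gamma * p.loss * p.lam * annuity(p.r_t, p.horizon))
x_closed = (p.phi * a + math.log(k_att)) / (p.gamma * (1.0 + p.phi))
print(round(x_n, 4), round(n_n, 4), round(x_closed, 4))
```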

When the target responses are coordinated by a fully informed information steward whose sole objective is to improve the ecosystem’s sustainability and who can impose his choice of defensive expenditure,

The steward minimizes his objective function, where the

where

In this formulation, the rational steward anticipates the impact of its choice on the market for attacks as it sets

As mentioned above, the possible differences between the discount rates of the steward and the individual agents are important factors in determining the optimal level of investment in information security. In the first instance, we evaluate the case where

With

The policy-maker takes the attacker intensity fully into account in its optimization problem. The algebraic expression is more complex, but the derivative with respect to $x_P$ is analytic. The loss per target

This is the discounted present value using the policy-maker’s discount rate and in the first instance

Proposition 2. For a steward setting mandatory defensive expenditure $x_P$ for $N_T$ ex-ante identical targets with discount rate

Although this equation is not analytically solvable for
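When the steward’s condition can only be solved numerically, a one-dimensional minimizer is enough, because the steward chooses a single mandated spend x_P. The sketch below uses a hypothetical toy game (our assumptions, not the paper’s forms) in which the steward’s problem happens to have a closed form, so the numerical answer can be checked. Attackers enter until the marginal attacker’s cost c·n^φ equals the discounted reward R·λ·e^(-γx)·A(r_A, T), giving n(x) = (K·e^(-γx))^(1/φ) with K = R·λ·A(r_A, T)/c and A(r, T) = (1 - e^(-rT))/r. The steward, as a Stackelberg leader, anticipates this response and minimizes the per-target cost x + L·λ·n(x)·e^(-γx)·A(r_P, T), which is convex here; minimizing per-target cost is equivalent to minimizing the total over N_T identical targets. Golden-section search is our choice of solver, not a method reported in [1] [2].

```python
import math


def golden_section_min(f, lo, hi, tol=1e-10):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # 1 / golden ratio
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) < f(d):      # minimum lies in [a, d]
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:                # minimum lies in [c, b]
            a, c = c, d
            d = a + inv_phi * (b - a)
    return (a + b) / 2.0


def annuity(r, horizon):
    return (1.0 - math.exp(-r * horizon)) / r


# Hypothetical toy parameters (money in $1M, time in years)
LOSS, REWARD, LAM, GAMMA, COST, PHI = 1.0, 0.5, 0.5, 2.0, 0.1, 2.0
R_T, R_A, T = 0.2, 0.3, 5.0
K = REWARD * LAM * annuity(R_A, T) / COST  # attacker free-entry constant


def steward_cost(x, r_p):
    """Per-target cost of a mandated spend x, anticipating attacker entry n(x)."""
    n = (K * math.exp(-GAMMA * x)) ** (1.0 / PHI)
    return x + LOSS * LAM * n * math.exp(-GAMMA * x) * annuity(r_p, T)


x_p = golden_section_min(lambda x: steward_cost(x, R_T), 0.0, 10.0)

# Closed forms available in this toy only: steward optimum and Nash spend
beta = GAMMA * (1.0 + 1.0 / PHI)
x_p_closed = (PHI * math.log(beta * LOSS * LAM * annuity(R_T, T)) + math.log(K)) / (GAMMA * (1.0 + PHI))
x_nash = (PHI * math.log(GAMMA * LOSS * LAM * annuity(R_T, T)) + math.log(K)) / (GAMMA * (1.0 + PHI))
print(round(x_p, 4), round(x_p_closed, 4), round(x_nash, 4))
```

Because the steward internalizes the deterrent effect of spending on attacker entry, the mandated spend exceeds the toy’s non-cooperative spend `x_nash` even at equal discount rates.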

We now set up the following simulations: the discount rate of the attackers

and the discounted expected total loss

for comparison purposes. The results are depicted in Figure 4.

We now consider the case where

The steward’s time preferences indicate longer-horizon planning than those of the individual participants, by setting

Using the previous case parameter values as a starting point we solve for

The results are as expected: for all configurations of

The ex-ante identical individual firms now no longer view the imposed

Figure 4. Comparison of the impact of a steward on defensive expenditure, risk, and expected losses assuming that the ecosystem consists of UK SMEs of the type surveyed in Case 2. For tractability, we assume identical targets. The level of defensive expenditure for each target is denoted by x (the left plot), the expected loss factor

all values of

Unambiguously, if the steward has a lower discount rate than the individual target firms, then the value of the costly action deemed necessary to negate the externality will be higher than that required by the targets to attain the Pareto optimal allocation subject to their time preferences.
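The direction of this effect can be checked in a hypothetical toy version of the steward’s problem (our assumptions, not the paper’s forms). If attacker entry responds to a mandated spend x as n(x) = (K·e^(-γx))^(1/φ), and the steward minimizes x + L·λ·n(x)·e^(-γx)·A(r_P, T) with A(r, T) = (1 - e^(-rT))/r, then the optimum is x_P = [φ·ln(γ(1 + 1/φ)·L·λ·A(r_P, T)) + ln K]/(γ(1 + φ)). Since A(r_P, T) falls as r_P rises, a lower steward discount rate mandates a higher spend. The constant `k_att` and the rates in the loop are illustrative values.

```python
import math


def annuity(r, horizon):
    return (1.0 - math.exp(-r * horizon)) / r


def steward_spend(r_p, loss=1.0, lam=0.5, gamma=2.0, phi=2.0, k_att=6.47, horizon=5.0):
    """Closed-form optimum of the hypothetical steward problem at discount rate r_p."""
    beta = gamma * (1.0 + 1.0 / phi)
    a_p = annuity(r_p, horizon)
    return (phi * math.log(beta * loss * lam * a_p) + math.log(k_att)) / (gamma * (1.0 + phi))


rates = [0.20, 0.10, 0.05, 0.02]          # firm-level rate first, then lower steward rates
spends = [steward_spend(r) for r in rates]
for r, x in zip(rates, spends):
    print(f"r_P = {r:.2f}: mandated spend {x:.4f}")
```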

Figure 5. We compare the allocation of optimal investment

A more intuitive way of thinking about discount rates is to derive the time horizon over which the majority of an asset’s value amortizes towards zero. The firm-specific discount rates are set to amortize the firm’s current investment assets over a time period consistent with the lifespan of previous information security assets. This provides a baseline for the steward’s time horizon in terms of managing externalities. Should the steward wish the externalities to be managed over a longer, more sustainable time horizon, then the adopted discount rate will be set lower than the representative rate determined by the individual firms. Larger-scale ecosystems, such as the internet, are usually assumed to require longer-term planning. Hence, stewards in this context might amortize expected losses from risks to the system over much longer periods. Therefore, the incremental investments in information security that are imposed on individual participants in the ecosystem are deemed to be unnecessarily burdensome given their own time preferences.
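One way to make the amortization horizon concrete, assuming continuous discounting at rate r: a constant flow of value has delivered a share q of its total present value by the time t at which 1 - e^(-rt) = q, so t = -ln(1 - q)/r. The 90% share and the rates below are illustrative choices.

```python
import math


def amortization_horizon(r, share=0.9):
    """Years until a perpetual flow discounted at continuous rate r has delivered
    `share` of its total present value: solves 1 - exp(-r t) = share."""
    if not 0.0 < share < 1.0:
        raise ValueError("share must lie strictly between 0 and 1")
    return -math.log(1.0 - share) / r


for r in (0.20, 0.05, 0.02):
    print(f"r = {r:.2f}: 90% of value amortized after {amortization_horizon(r):.1f} years")
```

Because the horizon scales as 1/r, moving from a 20% firm-level rate to a 2% rate stretches it by a factor of ten.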

If

3. A Model of Resilience

A key feature of our account of resilience is that we illustrate how thresholds for the effectiveness of stewards emerge from the underlying model of the response of information ecosystems to the hostility of the environment.²

Again, we consider a set of $N_T$ ex-ante identical targets choosing to allocate defensive resources that mitigate the harm from attacks. However, in this case, the targets need to solve, simultaneously, a multi-dimensional resource-allocation problem. Let the subscripts h and l represent two potential areas of allocation of assets, where h and l denote the areas of high and low security in which information assets are held, and let

Our model depends crucially on two key (vector) parameters. Employing the same notation, albeit in two dimensions, h and l, we consider the elasticity of attacking intensity, denoted by the vector

In this case, we need to consider just two elasticities,

Let

where

Our assumption is that increased investment

of a successful attack,

A functional form for

Under this formulation, there is an upper bound on

Let the number of attackers for each asset area be

We assume that attackers do not coordinate attacks (nor are they commissioned by a single attacker), and that rewards are claimed on a first-winner-takes-all basis. Attackers are assumed to be drawn from a pool and to make one-off entry decisions until marginal cost and marginal benefit are equal, and hence

For the targets of such attacks, let

The optimal allocation bundle

tion of

and

either

we impose the inequality constraint that
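The two-area allocation problem can be illustrated with a deliberately separable, hypothetical version of the target’s objective. Each area a ∈ {h, l} is assumed to have its own loss L_a, attack rate and effectiveness γ_a, with losses discounted at 20% over five years, and spending in one area is assumed not to affect the other. The only inequality imposed in this sketch is non-negativity of each allocation, which we assume for illustration because the paper’s own constraint is not recoverable here; it produces a corner solution in which the low-value area receives nothing.

```python
import math


def annuity(r, horizon):
    return (1.0 - math.exp(-r * horizon)) / r


def area_spend(loss, rate, gamma, a):
    """Minimize x + loss * rate * exp(-gamma * x) * a subject to x >= 0.
    The unconstrained optimum ln(k)/gamma, k = gamma * loss * rate * a, is
    clamped at zero when k <= 1 (spending nothing is then optimal)."""
    k = gamma * loss * rate * a
    return max(0.0, math.log(k) / gamma)


a = annuity(0.2, 5.0)
areas = {
    "h": dict(loss=5.0, rate=0.4, gamma=1.5),  # hypothetical: valuable assets
    "l": dict(loss=0.1, rate=0.8, gamma=3.0),  # hypothetical: low-value assets
}
bundle = {name: area_spend(a=a, **spec) for name, spec in areas.items()}
print({name: round(x, 4) for name, x in bundle.items()})
```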

In the case of resilience, the time horizon, T, is of greater significance than in the previous case and may be determined endogenously. Let

Therefore, the approximation of the time horizon

What is important to the steward is the overall mass of attacks against systems containing assets in the type l and type h “storage/operations” areas; this will be influenced by the aggregate behaviour of targets and attackers, rather than by the microstructure of individual attack-defence interactions. The more attractive the ecosystem is to attackers, the greater the mass of attacks against its individual components.

Proposition 3. (Existence of Nash equilibria without the steward)

1) (Equilibrium Target Investment) Under the preceding assumptions, when

2) (Equilibrium Attacker Intensity) Following from Part 1, above, the Nash equilibrium attacker intensities, denoted

where

We demonstrate that, in this modelling approach, we do not have to impose an arbitrary constraint on

3.1. Introducing the Steward

The aim of the steward here is to ensure resilience, as defined above: the information system may not, given the choices of investment in information security allocated to the individual components, be resilient to debilitating shocks.

The first stewardship action that we evaluate replicates our previous work (on sustainability [17]) by postulating a Stackelberg policy framework in which the policy-maker stewarding the system sets rules relative to a target level of sustainability. When the steward is fully informed, our model reverts to the mechanism design problem discussed in [17], in which the steward is able to set a mandatory investment bundle (denoted by the lower bar) on the individual targets

The Nash equilibrium allocation for the

A classical efficiency model, with the steward acting as a public policy-maker and imposing

Indeed, the analysis of sustainability [1] [2] illustrates that, from the subjective viewpoint described by targets’ heterogeneous discount rates, the chosen values of

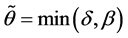

Let the steward’s discount rate be

where

in terms of

Proposition 4. (The fully informed steward)

1) (Target investment with steward) When

2) (Attacking intensity) Following from Part 1, above, the attacker intensity

where

Proposition 5. (The steward’s improvement) If

A useful by-product of the comparison between Propositions 1 and 2 is that we can define an upper bound on

We now progress to the case of a partially informed steward with a minority action: let the steward observe and enforce only

and the steward now solves the following minority optimization, with the steward’s given information set:

where

From the steward’s point of view, this is now

Note that the steward now takes the value of losses, L, as given. This is because the steward can no longer identify

Proposition 6. (Attackers and the steward)

1) (Asset Class h investment guided by the steward) If

Following from the steward’s choice, the attacker intensity, given the steward’s actions

where

2) (Asset Class l) We now consider the targets’ and attackers’ new equilibrium: if

and the attacker intensity

Note that we use the

3.2. Measuring Resilience

Measuring the impact of technological shocks to

where

For a single period, resilience will be measured by a response function to shocks to the parameters

where each case has a set of functional forms for

We are interested in establishing the thresholds, illustrated in Figure 6, that describe levels of system operating capacity, as measured by loss, for differing degrees of the steward’s effectiveness. We attempt to establish whether or not, through co-ordinated investment, the system is restored to the target zone.

In our model, these thresholds reveal themselves as discontinuities, relative to shock size, in the solutions to Equation (21), below. Such discontinuities can be seen in our simulations as the asymptotes in Figure 7 and Figure 8, for fully and partially informed stewards respectively.

Figure 6. Resilience with an incompletely informed steward.

Figure 7. The steward’s total non-discounted loss function,

Let

We can measure the effectiveness of the steward by comparing

To examine the impact of shocks and measure resilience we compare the response functions
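The idea that thresholds show up as discontinuities in the loss response to shock size can be illustrated numerically. The two response functions below are hypothetical stand-ins with vertical asymptotes (they are not Equation (21)); the scan reports the first shock size at which the loss becomes non-finite or exceeds a thousand times its no-shock value, and the ratio of steward to no-steward losses gives one simple measure of the steward’s effectiveness for shocks below both thresholds.

```python
import math


def response_steward(s, base=1.0, s_crit=0.6):
    """Hypothetical loss with a steward after a shock of size s (asymptote at s_crit)."""
    return base / (1.0 - s / s_crit) if s < s_crit else math.inf


def response_nash(s, base=1.2, s_crit=0.4):
    """Hypothetical loss without a steward: higher level and an earlier asymptote."""
    return base / (1.0 - s / s_crit) if s < s_crit else math.inf


def find_threshold(response, s_max=1.0, step=1e-3, jump=1e3):
    """First grid shock size at which the loss is non-finite or exceeds
    `jump` times its no-shock value: a numerical proxy for the asymptote."""
    base = response(0.0)
    for k in range(round(s_max / step) + 1):
        s = k * step
        value = response(s)
        if not math.isfinite(value) or value > jump * base:
            return s
    return None  # no threshold found on [0, s_max]


print("steward threshold:", find_threshold(response_steward))
print("no-steward threshold:", find_threshold(response_nash))
print("loss ratio at s = 0.2:", response_steward(0.2) / response_nash(0.2))
```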

3.3. An Example Simulation

This simulation is designed to provide an overview of the intuition of our model and is not intended to provide specific quantification for our proposed application. However, we have tried to stay close to real data where possible.

Let us assume that targets have a discount rate of 20% per annum (

We assume that the societal discount rate used by the steward is much lower and ranges from
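The effect of the difference in discount rates can be made concrete with a small present-value calculation. The sketch below assumes discrete annual compounding, a constant loss of one (normalized) unit per year over a ten-year horizon, and an illustrative steward rate of 5%; the compounding convention, the horizon, the loss stream, and the 5% figure are assumptions for illustration, not the paper's calibration. Under these assumptions, the targets' 20% rate corresponds to a per-period discount factor of 1/1.2 ≈ 0.833.

```python
def discount_factor(rate: float) -> float:
    """Per-period discount factor under discrete annual compounding (assumed convention)."""
    return 1.0 / (1.0 + rate)


def present_value(loss_per_year: float, rate: float, years: int) -> float:
    """Present value of a constant loss incurred at the end of each year 1..years."""
    beta = discount_factor(rate)
    return sum(loss_per_year * beta**t for t in range(1, years + 1))


TARGET_RATE = 0.20   # targets' discount rate, as stated in the text
STEWARD_RATE = 0.05  # hypothetical illustrative steward rate, not the paper's value
HORIZON = 10         # hypothetical horizon in years

target_pv = present_value(1.0, TARGET_RATE, HORIZON)
steward_pv = present_value(1.0, STEWARD_RATE, HORIZON)
print(f"discount factor at 20%: {discount_factor(TARGET_RATE):.3f}")
print(f"PV of losses, targets: {target_pv:.2f}; steward: {steward_pv:.2f}")
```

Under these assumptions the lower rate raises the present value of the same loss stream by more than 80% (about 7.72 versus 4.19 units), which is why a steward with a lower discount rate, that is, a higher discount factor, places more weight on future losses than the targets do.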

Figure 8. Partially informed steward with minority action total non-discounted loss function

For our starting numerical example, we assume that

We arbitrarily fix the instantaneous total loss at $1M, as an example, and divide all losses by

We set the attackers’ discount rate to be

For the partially informed steward with minority action, the total non-discounted loss In this case, the pattern is similar to the Nash equilibrium for small shocks. The targets, however, face costly regulation in the h asset class and under-invest in the l asset class. Unfortunately, in this case there is a discontinuity at

4. Conclusions

An information security ecosystem consists of a finite set of interacting agents supported by a specific infrastructure, which may have logical, physical, and economic components, supporting people, process, and technology.

The security posture of an ecosystem is a function of the postures of the participating agents and of specified properties of the infrastructure that supports the ecosystem. The ecosystem exists and operates in an environment in which threats to the system’s confidentiality, integrity and availability are initiated by a variety of sources. Such threats are taken to give rise to shocks that degrade the ecosystem’s operational posture, that is, its sustainability (the tendency of a firm or of the ecosystem to remain within acceptable operating bounds) and its resilience (the tendency of an agent or of the ecosystem to restore, in a timely manner, its operating capacity to within the acceptable operating range after a shock has placed it outwith that range).

In this paper, we have addressed the question of whether it is socially desirable to introduce an institution with the authority to mandate resources for investment in information security in order to maintain (or preserve) the system’s sustainability and resilience, rather than leaving the protection of these characteristics to the individual agents. This institution, the information steward, is therefore entrusted and empowered with the authority to maintain the sustainability and resilience of the ecosystem’s security posture. The steward’s preferences are understood to derive in some form from the collective preferences of the participating agents, reflecting their desire to maintain the system’s operational stability.

The threats to the system’s stability emanate from rational agents, the attackers, who, in deploying their resources, are fully informed of their potential gains given their technologies and, more importantly, given the system’s average protective posture. Agents operating in the system also invest in information security measures, taking into account the costs of such investment and the possible losses following a successful attack. The defending agents and the attackers are thus engaged in strategic interaction, and it is in this context that the relevant resources are deployed.

The introduction of the information steward, with the authority to mandate investment in information security by all agents in the system and with full information on both the attacking and the defending technologies, alters the decision landscape. In terms of maintaining and preserving the system’s sustainability in the presence of secular deterioration, we show that the steward acts as a deterrent to attackers: they now know that the system’s average defensive expenditure is higher, which markedly reduces the probability of a successful attack and hence their expected gains. In this case, the intensity of attacks declines and the system’s secular decline is interrupted as its capacity shifts to a higher level. The steward is shown to be effective in this respect. However, whether such intervention is strictly welfare-improving for all individual agents in the system is debatable, given the possibly wide range of discount factors used by the agents in computing their “equilibrium” investment in information security. It is not uncommon to postulate that such a collective body will, in deciding the optimal investment in information security, use higher discount factors than the individual agents, since its brief is the system’s sustainability over a longer horizon, which may not coincide with the horizon of the agents, who aim to achieve short-run performance measures.
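The deterrence channel can be illustrated with a deliberately simple toy model that is not the paper's own. We assume that an attack succeeds with a probability given by the Gordon-Loeb class I breach function S(z, v) = v/(αz + 1)^β [14], evaluated at the system's average defensive expenditure z, and that an attacker choosing intensity a earns a·S(z)·G − (c/2)a². The quadratic cost and the values of v, α, β, G and c are hypothetical. The first-order condition gives the optimal intensity a* = S(z)G/c, which falls as the steward raises average defensive expenditure.

```python
def breach_probability(z: float, v: float = 0.5, alpha: float = 1.0, beta: float = 1.0) -> float:
    """Gordon-Loeb class I breach function S(z, v) = v / (alpha*z + 1)**beta.

    Used only as a stand-in for the paper's success-probability function;
    v, alpha and beta are hypothetical values.
    """
    return v / (alpha * z + 1.0) ** beta


def attacker_intensity(avg_defence: float, gain: float = 10.0, cost: float = 2.0) -> float:
    """Intensity maximizing a*S(z)*gain - 0.5*cost*a**2 (hypothetical payoff).

    Setting the derivative S(z)*gain - cost*a to zero gives a* = S(z)*gain/cost.
    """
    return breach_probability(avg_defence) * gain / cost


def attacker_payoff(avg_defence: float, gain: float = 10.0, cost: float = 2.0) -> float:
    """Attacker's payoff at the optimal intensity, equal to (S(z)*gain)**2 / (2*cost)."""
    a = attacker_intensity(avg_defence, gain, cost)
    return a * breach_probability(avg_defence) * gain - 0.5 * cost * a**2


# Hypothetical average defensive expenditure without and with a steward mandate.
for label, z in [("uncoordinated", 1.0), ("steward mandate", 3.0)]:
    print(label, attacker_intensity(z), attacker_payoff(z))
```

In this toy setting, tripling average defensive expenditure halves the optimal attack intensity and cuts the attacker's payoff to a quarter, which mirrors, qualitatively only, the deterrence effect attributed to the steward.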

In terms of improving the system’s ability to recover quickly after a negative temporary shock to its operational capacity (that is, its resilience), the effectiveness of the information steward depends on the appropriate design of its mandate. There are now two sets of strategic interactions: the usual one between the attackers and the defenders and, in addition, one between the agents and the steward, since agents now have incentives to avoid additional information security investment (that which may be demanded by the steward) by deliberately mis-classifying the status of information assets that are under threat of attack. In such an incomplete design, when the steward’s ability to be fully informed is impaired and it lacks the complete set of instruments, the public co-ordination of investment in information security is actually welfare-reducing for the system as a whole compared to the un-coordinated outcome.

In conclusion, public co-ordination of investment in information security, aimed at maintaining the system’s capacity in terms of sustainability and resilience, can be effective in deterring attacks by strengthening the system’s defensive posture. However, a great deal of attention is required in designing such a co-ordinating institution, which will enjoy rather wide powers to decree the allocation of resources to information security. We have addressed the desirability of public co-ordination of investment in information security by bringing together a body of work on the common thread of the information steward. Integrating these diverse results coherently provides a lucid understanding of the institutional requirements for the design and function of such a public institution and, depending upon the nature of the threat facing the information ecosystem, helps clarify the appropriate policy responses.

Cite this paper

Christos Ioannidis, David Pym, Julian Williams (2016) Is Public Co-Ordination of Investment in Information Security Desirable? Journal of Information Security, 07, 60-80. doi: 10.4236/jis.2016.72005

References

- 1. Ioannidis, C., Pym, D. and Williams, J. (2013) Sustainability in Information Stewardship: Time Preferences, Externalities, and Social Co-Ordination. In: Friedman, A., Ed., Proceedings of the 12th Annual Workshop on the Economics of Information Security (WEIS 2013), Georgetown University, Washington DC, 11-12 June 2013. http://weis2013.econinfosec.org/papers/IoannidisPymWilliamsWEIS2013.pdf

- 2. Ioannidis, C., Pym, D. and Williams, J. (2014) Sustainability in Information Stewardship: Time Preferences, Externalities, and Social Co-Ordination. University College London, Department of Computer Science, Research Note RN/14/15. http://www.cs.ucl.ac.uk/fileadmin/UCL-CS/research/Research_Notes/rn-14-15_01.pdf

- 3. Ioannidis, C., Pym, D., Williams, J. and Gheyas, I. (2013) Resilience in Information Stewardship. In: Grossklags, J., Ed., Proceedings of the 13th Annual Workshop on the Economics of Information Security (WEIS 2014), Pennsylvania State University, 23-24 June 2014. http://weis2014.econinfosec.org/papers/Ioannidis-WEIS2014.pdf

- 4. Ioannidis, C., Pym, D., Williams, J. and Gheyas, I. (2014) Resilience in Information Stewardship. University College London, Department of Computer Science, Research Note RN/14/16. http://www.cs.ucl.ac.uk/fileadmin/UCL-CS/research/Research_Notes/rn-14-16_01.pdf

- 5. Nardi, B. and O’Day, V. (1999) Information Ecologies. MIT Press.

- 6. Chapin III, F.S., Kofinas, G.P. and Folke, C. (2009) Principles of Ecosystem Stewardship: Resilience-Based Natural Resource Management in a Changing World. Springer-Verlag.

- 7. Stern, N. (2006) Stern Review on the Economics of Climate Change: Executive Summary Long. HM Treasury, Stationery Office.

- 8. Hall, C., Anderson, R., Clayton, R., Ouzounis, E. and Trimintzios, P. (2013) Resilience of the Internet Interconnection Ecosystem. In: Schneier, B., Ed., Economics of Information Security and Privacy III, Springer, 119-148. http://dx.doi.org/10.1007/978-1-4614-1981-5_6

- 9. Benabou, R. and Tirole, J. (2012) Laws and Norms. Working Paper IZA DP No. 6290.

- 10. Funk, P. (2007) Is There an Expressive Function of Law? An Empirical Analysis of Voting Laws with Symbolic Fines. American Law and Economics Review, 9, 135-139. http://dx.doi.org/10.1093/aler/ahm002

- 11. Tyran, J. and Feld, L. (2006) Achieving Compliance When Legal Sanctions Are Non-Deterrent. Scandinavian Journal of Economics, 108, 135-156. http://dx.doi.org/10.1111/j.1467-9442.2006.00444.x

- 12. Andreoni, J. (1989) Giving with Impure Altruism: Applications to Charity and Ricardian Equivalence. Journal of Political Economy, 97, 1447-1458. http://dx.doi.org/10.1086/261662

- 13. Deci, E. (1985) Intrinsic Motivation in Human Behavior. Plenum. http://dx.doi.org/10.1007/978-1-4899-2271-7

- 14. Gordon, L. and Loeb, M. (2002) The Economics of Information Security Investment. ACM Transactions on Information and System Security, 5, 438-457. http://dx.doi.org/10.1145/581271.581274

- 15. Caplin, A. and Leahy, J. (2004) The Social Discount Rate. Journal of Political Economy, 112, 1257-1268. http://dx.doi.org/10.1086/424740

- 16. Ioannidis, C., Pym, D. and Williams, J. (2012) Fixed Costs, Investment Rigidities, and Risk Aversion in Information Security: A Utility-Theoretic Approach. In: Schneier, B., Ed., Economics of Information Security and Privacy III, Springer, Proceedings of the 2011 Workshop on the Economics of Information Security.

- 17. Ioannidis, C., Pym, D.J. and Williams, J.M. (2013) Sustainability in Information Stewardship: Time Preferences, Externalities, and Social Co-Ordination. The Twelfth Workshop on the Economics of Information Security (WEIS 2013). http://weis2013.econinfosec.org/papers/IoannidisPymWilliamsWEIS2013.pdf

- 18. Fudenberg, D. and Tirole, J. (1991) Game Theory. MIT Press.

- 19. Baldwin, J., Gellatly, G., Tanguay, M. and Patry, A. (2005) Estimating Depreciation Rates for the Productivity Accounts. Technical Report, OECD Micro-Economics Analysis Division Publication.

- 20. North American Electric Reliability Corporation (2013) Second Draft 2014 Business Plan and Budget. NERC Publications, Technical Report.

- 21. Federal Energy Regulatory Commission (2009) Smart Grid Policy. FERC Policy Statement, Technical Report.

NOTES

1It is straightforward to vary the model to account for payoffs that are utility-maximizing, but not necessarily monetary; for example, as in terrorism.

2Note that by the hostility of the environment we mean a representation of the capacity of attackers rather than simply the success or failure of an individual attack.