Advances in Linear Algebra & Matrix Theory

Vol.05 No.04(2015), Article ID:61736,11 pages

10.4236/alamt.2015.54016

A Generalization of Cramer’s Rule

Hugo Leiva

Department of Mathematics, Louisiana State University, Baton Rouge, LA, USA

Copyright © 2015 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 27 April 2014; accepted 4 December 2015; published 7 December 2015

ABSTRACT

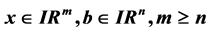

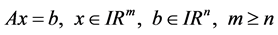

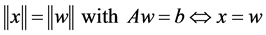

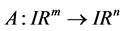

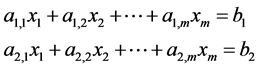

In this paper, we find two formulas for the solutions of the following linear equation

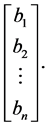

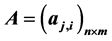

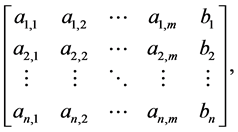

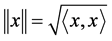

, where

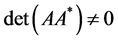

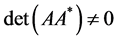

, where  is a

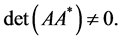

is a  real matrix. This system has been well studied since the 1970s. It is known and simple proven that there is a solution for all

real matrix. This system has been well studied since the 1970s. It is known and simple proven that there is a solution for all  if, and only if, the rows of A are linearly independent, and the minimum norm solution is given by the Moore-Penrose inverse formula, which is often denoted by

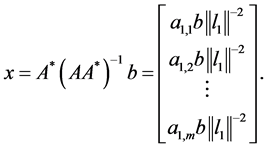

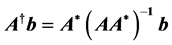

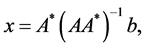

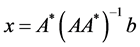

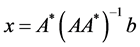

if, and only if, the rows of A are linearly independent, and the minimum norm solution is given by the Moore-Penrose inverse formula, which is often denoted by ; in this case, this solution is given by

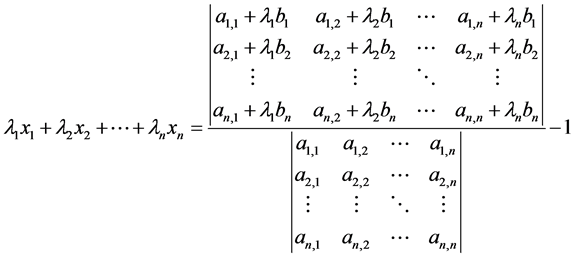

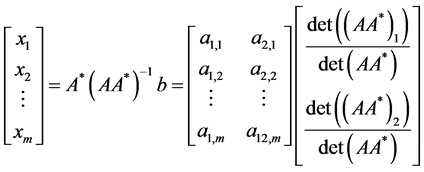

; in this case, this solution is given by . Using this formula, Cramer’s Rule and Burgstahler’s Theorem (Theorem 2), we prove the following representation for this solution

. Using this formula, Cramer’s Rule and Burgstahler’s Theorem (Theorem 2), we prove the following representation for this solution

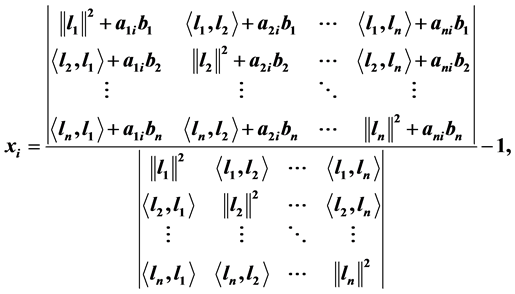

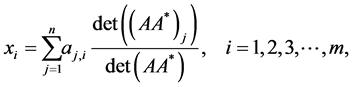

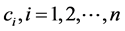

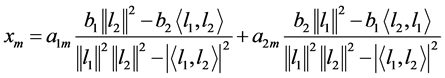

, where

, where  are the row vectors of the matrix A. To the best of our knowledge and looking in to many Linear Algebra books, there is not formula for this solution depending on determinants. Of course, this formula coincides with the one given by Cramer’s Rule when

are the row vectors of the matrix A. To the best of our knowledge and looking in to many Linear Algebra books, there is not formula for this solution depending on determinants. Of course, this formula coincides with the one given by Cramer’s Rule when .

.

Keywords:

Linear Equation, Cramer’s Rule, Generalized Formula

1. Introduction

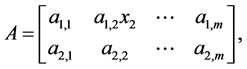

In this paper, we find a formula depending on determinants for the solutions of the following linear equation

$$Ax = b \tag{1}$$

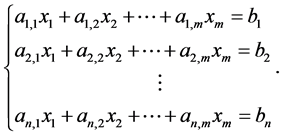

or

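$$\begin{cases} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1m}x_m = b_1 \\ \qquad\vdots \\ a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nm}x_m = b_n \end{cases} \tag{2}$$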

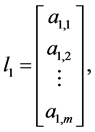

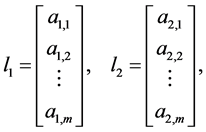

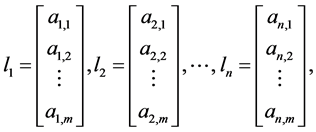

Now, if we define the column vectors

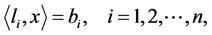

then the system (2) also can be written as follows:

(3)


where

to a form simple enough that the system of equations can be solved by inspection. But, to my knowledge, there is in general no formula for the solutions of (1) in terms of determinants if

When

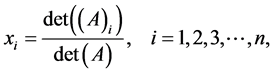

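Theorem 1.1. (Cramer’s Rule, 1704-1752) If A is an $n \times n$ matrix with $\det(A) \neq 0$, then the solution of the system (1) is given by the formula

$$x_i = \frac{\det(A_i)}{\det(A)}, \qquad i = 1, 2, \dots, n,$$

where $A_i$ is the matrix obtained from A by replacing its i-th column with the column vector b.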
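As a small illustration of the classical rule named in Theorem 1.1, the following Python sketch solves a square system by replacing one column at a time with the right-hand side. The function names `det` and `cramer` and the sample data are ours, chosen only for illustration; exact rational arithmetic is used so that the result can be checked by hand.

```python
from fractions import Fraction


def det(M):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    n = len(M)
    if n == 1:
        return M[0][0]
    total = 0
    for j in range(n):
        # Minor obtained by deleting row 0 and column j
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * M[0][j] * det(minor)
    return total


def cramer(A, b):
    """Solve the square system A x = b via x_i = det(A_i) / det(A)."""
    d = det(A)
    if d == 0:
        raise ValueError("det(A) = 0: Cramer's rule does not apply")
    x = []
    for i in range(len(A)):
        # A_i is A with its i-th column replaced by b
        A_i = [row[:i] + [b[k]] + row[i + 1:] for k, row in enumerate(A)]
        x.append(Fraction(det(A_i), d))
    return x


# Hypothetical sample system: 2*x1 + x2 = 3, x1 + 3*x2 = 5
A = [[2, 1], [1, 3]]
b = [3, 5]
x = cramer(A, b)
```

Cofactor expansion is exponential in the matrix size, so this is meant for checking small cases by hand rather than for numerical work.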

A simple and interesting generalization of Cramer’s Rule was given by Prof. Dr. Sylvan Burgstahler [1] of the University of Minnesota, Duluth, where he taught for 20 years. This result is given by the following theorem:

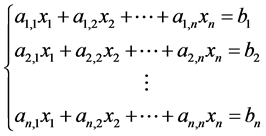

Theorem 1.2. (Burgstahler 1983) If the system of equations

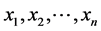

has a (unique) solution

Using the Moore-Penrose inverse formula and Cramer’s Rule, one can prove the following theorem. For the reader’s convenience, however, we include a direct proof of it here.

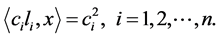

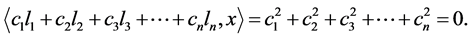

Theorem 1.3. For all

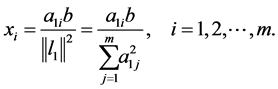

Moreover, one solution for this equation is given by the following formula:

where

Also, this solution coincides with the Cramer formula when

where

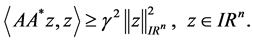

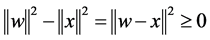

In addition, this solution has minimum norm, i.e.,

and
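The combination of the Moore-Penrose formula with Cramer’s Rule mentioned before Theorem 1.3 can be sketched numerically as follows. The sketch assumes that the rows of A are linearly independent, applies Cramer’s Rule to the square system $(AA^{*})\xi = b$, and then forms $x = A^{*}\xi$, a linear combination of the rows of A. This is our own illustration of $x = A^{*}(AA^{*})^{-1}b$, not necessarily the exact form of formula (9); the names `min_norm_solution` and `det` and the sample data are hypothetical.

```python
from fractions import Fraction


def det(M):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if len(M) == 1:
        return M[0][0]
    return sum(
        (-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
        for j in range(len(M))
    )


def min_norm_solution(A, b):
    """Minimum-norm solution of A x = b for a real n x m matrix A with independent rows."""
    n, m = len(A), len(A[0])
    # Gram matrix G = A A^T (n x n); it is invertible iff the rows of A are independent
    G = [[sum(A[i][k] * A[j][k] for k in range(m)) for j in range(n)] for i in range(n)]
    d = det(G)
    if d == 0:
        raise ValueError("rows of A are linearly dependent")
    # Cramer's rule on G xi = b: replace column i of G by b
    xi = [
        Fraction(det([row[:i] + [b[k]] + row[i + 1:] for k, row in enumerate(G)]), d)
        for i in range(n)
    ]
    # x = A^T xi, i.e. the combination sum_i xi_i * (i-th row of A)
    return [sum(xi[i] * A[i][k] for i in range(n)) for k in range(m)]


# Hypothetical sample system: x1 + x2 = 1, x2 + x3 = 1
A = [[1, 1, 0], [0, 1, 1]]
b = [1, 1]
x = min_norm_solution(A, b)
```

For this sample system every solution has the form $(t, 1 - t, t)$, and the squared norm $2t^2 + (1 - t)^2$ is smallest at $t = 1/3$, which agrees with the vector computed above.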

The main results of this work are the following Theorems.

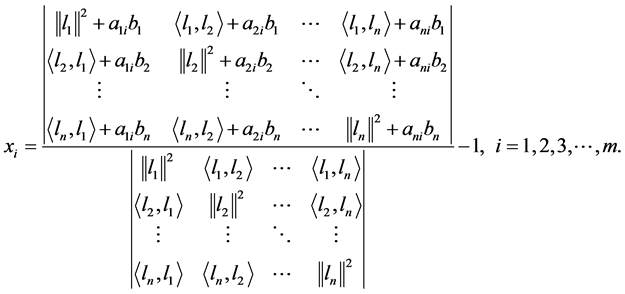

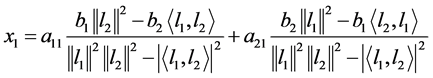

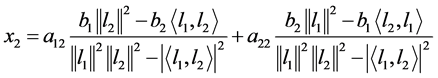

Theorem 1.4. The solutions of (1)-(3) given by (9) can be written as follows:

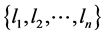

Theorem 1.5. The system (1) is solvable for each

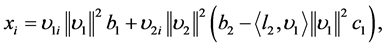

Moreover, a solution for the system (1) is given by the following formula:

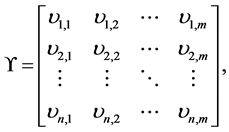

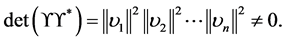

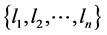

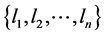

where the set of vectors

and

2. Proof of the Main Theorems

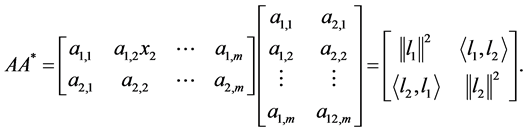

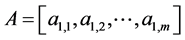

In this section we shall prove Theorems 1.3, 1.4, 1.5 and more. To this end, we shall denote by

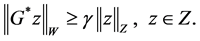

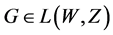

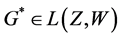

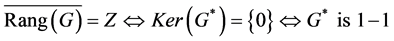

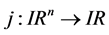

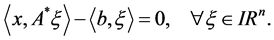

Lemma 2.1. Let W and Z be Hilbert spaces,

(i)

(ii)

We include here a direct proof of Theorem 1.3 for the reader’s convenience.

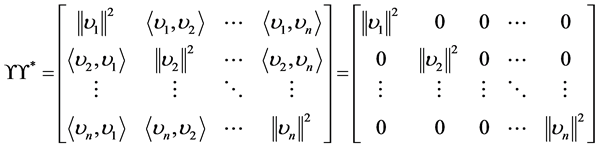

Proof of Theorem 1.3. The matrix A may also be viewed as a linear operator

Then, system (1) is solvable for all

Therefore,

This implies that

Suppose now that

Now, since

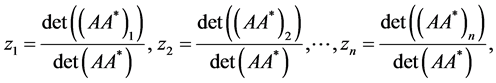

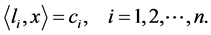

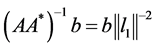

then from Theorem 1.1 (Cramer’s Rule) we obtain that:

where

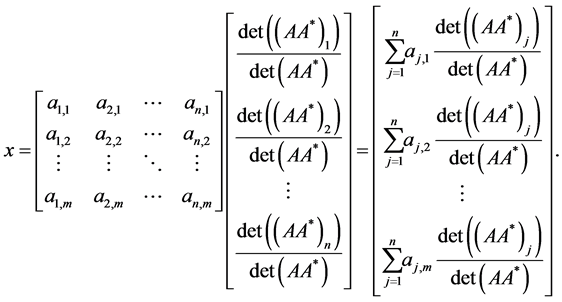

Then, the solution

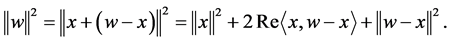

Now, we shall see that this solution has minimum norm. In fact, consider w in

On the other hand,

Hence,

Therefore,

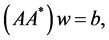

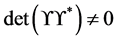

Proof of Theorem 1.5. Suppose the system is solvable for all

Then, there exists

In other words,

Hence,

So,

Therefore,

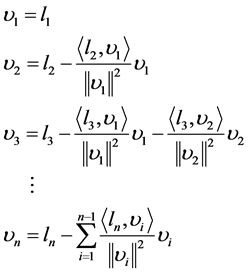

Now, suppose that the set

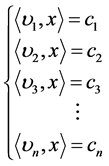

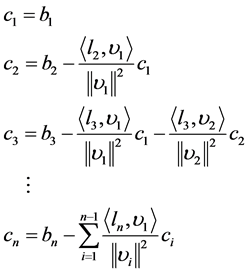

Then, system (1) will be equivalent to the following system:

where

If we denote the vectors

and the

then, applying Theorem 1.3 we obtain that system (17) has solution for all

So,

From here and using the formula (9) we complete the proof of this Theorem.

Examples and Particular Cases

In this section we shall consider some particular cases and examples to illustrate the results of this work.
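One particular case that can be checked by hand is a single equation $a_1x_1 + \cdots + a_mx_m = b$ with $a \neq 0$. Here $AA^{*}$ reduces to the scalar $\|a\|^2$, so the minimum norm solution is $x = b\,a/\|a\|^2$. The sketch below is our own illustration of this case and does not reproduce the data of the examples in this section; the function name and the numbers are hypothetical.

```python
from fractions import Fraction


def min_norm_single_equation(a, b):
    """Minimum-norm solution of a_1 x_1 + ... + a_m x_m = b, assuming a != 0."""
    norm_sq = sum(ai * ai for ai in a)  # A A^T is the 1 x 1 matrix [|a|^2]
    if norm_sq == 0:
        raise ValueError("all coefficients are zero")
    # x = A^T (A A^T)^{-1} b = b * a / |a|^2
    return [Fraction(ai * b, norm_sq) for ai in a]


# Hypothetical sample equation: x1 + 2*x2 + 2*x3 = 9
a = [1, 2, 2]
b = 9
x = min_norm_single_equation(a, b)
```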

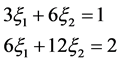

Example 2.1. Consider the following particular case of system (1)

In this case

Then,

Therefore, a solution of the system (19) is given by:

Example 2.2. Consider the following particular case of system (1)

In this case

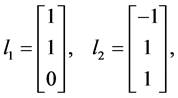

Then, if we define the column vectors

then

Hence, from the formula (10) we obtain that:

Therefore, a solution of the system (21) is given by:

Now, we shall apply the foregoing formula or (12) to find the solution of the following system

If we define the column vectors

then

Example 2.3. Consider the following general case of system (1)

Then, if

and the solution of the system (1) is very simple and given by:

Now, we shall apply the formula (28) or (12) to find the solution of the following system:

If we define the column vectors

Then,

3. Variational Method to Obtain Solutions

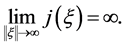

Theorems 1.3, 1.4 and 1.5 give a formula for one solution of the system (1) which has minimum norm. But this is not the only way to construct solutions of this equation. Next, we shall present a variational method to obtain solutions of (1) as a minimum of the quadratic functional

Proposition 3.1. For a given

It is easy to see that (31) is in fact an optimality condition for the critical points of the quadratic functional j defined above.

Lemma 3.1. Suppose the quadratic functional j has a minimizer

is a solution of (1).

Proof. First, we observe that j has the following form:

Then, if

So,

Remark 3.1. Under the conditions of Theorem 1.3, the solutions given by the formulas (32) and (9) coincide.

Theorem 3.1. The system (1) is solvable if, and only if, the quadratic functional j defined by (30) has a minimum for all

Proof. Suppose (8) is solvable. Then, the matrix A viewed as an operator from

Then,

Therefore,

Consequently, j is coercive and the existence of a minimum is ensured.

The converse implication follows as in Proposition 3.1.
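The variational idea of this section can be checked on a small system whose rows are linearly dependent. The sketch below rests on an assumption that we state explicitly: it takes the quadratic functional to be $j(\xi) = \tfrac{1}{2}\|A^{*}\xi\|^2 - \langle b, \xi\rangle$, whose critical points satisfy $AA^{*}\xi = b$, and it recovers a solution as $x = A^{*}\xi$. The precise definition in (30) may differ, and the matrix, right-hand side and function names are hypothetical.

```python
from fractions import Fraction

A = [[1, 1], [2, 2]]  # rows are linearly dependent, so A A^T is singular
b = [1, 2]            # chosen in the range of A, so A x = b is consistent


def gram(A):
    """Gram matrix A A^T."""
    return [[sum(p * q for p, q in zip(r, s)) for s in A] for r in A]


def grad_j(xi):
    """Gradient A A^T xi - b of the ASSUMED functional j(xi) = 1/2 |A^T xi|^2 - <b, xi>."""
    G = gram(A)
    return [sum(G[i][k] * xi[k] for k in range(len(xi))) - b[i] for i in range(len(b))]


def solution_from(xi):
    """Candidate solution x = A^T xi built from a critical point xi."""
    return [sum(A[i][k] * xi[i] for i in range(len(A))) for k in range(len(A[0]))]


# Here A A^T = [[2, 4], [4, 8]], so the critical points form the line
# 2*xi_1 + 4*xi_2 = 1; we sample it at three parameter values t.
critical_points = [[Fraction(1, 2) - 2 * t, Fraction(t)] for t in (0, 1, -3)]
solutions = [solution_from(xi) for xi in critical_points]
```

In this sample every critical point yields the same vector $x = (1/2, 1/2)$, although the critical point itself is not unique.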

Now, we shall consider an example where Theorems 1.3, 1.4 and 1.5 cannot be applied, but Proposition 3.1 can.

Example 3.1. Consider the system with linearly dependent rows

In this case

Then

Therefore, the critical points of the quadratic functional j given by (30) satisfy the equation:

i.e.,

So, there are infinitely many critical points given by

Hence, a solution of the system is given by

Cite this paper

Hugo Leiva, (2015) A Generalization of Cramer’s Rule. Advances in Linear Algebra & Matrix Theory,05,156-166. doi: 10.4236/alamt.2015.54016

References

- 1. Burgstahler, S. (1983) A Generalization of Cramer’s Rule. The Two-Year College Mathematics Journal, 14, 203-205. http://dx.doi.org/10.2307/3027088

- 2. Iturriaga, E. and Leiva, H. (2007) A Necessary and Sufficient Conditions for the Controllability of Linear System in Hilbert Spaces and Applications. IMA Journal of Mathematical Control and Information, 25, 269-280. http://dx.doi.org/10.1093/imamci/dnm017

- 3. Curtain, R.F. and Pritchard, A.J. (1978) Infinite Dimensional Linear Systems. Lecture Notes in Control and Information Sciences, Vol. 8, Springer Verlag, Berlin.