Open Journal of Statistics

Vol. 06 No. 02 (2016), Article ID: 65859, 15 pages

10.4236/ojs.2016.62021

Marginal Conceptual Predictive Statistic for Mixed Model Selection

Cheng Wenren¹, Junfeng Shang²*, Juming Pan²

¹Process Modeling Analytics Department, Bristol-Myers Squibb, New York, NY, USA

²Bowling Green State University, Bowling Green, OH, USA

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 9 March 2016; accepted 23 April 2016; published 26 April 2016

ABSTRACT

We focus on the development of model selection criteria in linear mixed models. In particular, we propose model selection criteria for linear mixed models following Mallows’ Conceptual Predictive Statistic (Cp) [1] [2]. When the observations are correlated, the usual Gauss discrepancy of the univariate case is not appropriate for measuring the distance between the true model and a candidate model. Instead, we define a marginal Gauss discrepancy that takes the correlation in mixed models into account. The proposed criterion, marginal Cp (MCp), is an asymptotically unbiased estimator of the expected marginal Gauss discrepancy. An improvement of MCp, called IMCp, is then derived and shown to be a more accurate estimator of the expected marginal Gauss discrepancy than MCp. The performance of the proposed criteria is investigated in a simulation study. The results show that in small samples the proposed criteria outperform the Akaike Information Criterion (AIC) [3] [4] and the Bayesian Information Criterion (BIC) [5] in selecting the correct model, while in large samples their performance is competitive. Further, the proposed criteria perform substantially better for highly correlated response data than for weakly correlated data.

Keywords:

Mixed Model Selection, Marginal Cp, Improved Marginal Cp, Marginal Gauss Discrepancy, Linear Mixed Model

1. Introduction

With the development of data science over the past decades, researchers have become increasingly aware of the complexity of real-world data. Univariate linear regression models with independent and identically distributed (i.i.d.) Gaussian errors cannot adequately fit some types of data, especially data whose observations are correlated. For instance, longitudinal data usually consist of observations recorded repeatedly from the same individual over time. It is reasonable to assume that observations from the same individual are correlated, and linear mixed models are therefore appropriate for modeling such data.

Since linear mixed models are used extensively, mixed model selection plays an important role in the statistical literature. Its aim is to choose the most appropriate model from a pool of candidate models in the mixed model setting, and a variety of model selection criteria have been developed to carry out this selection.

In linear mixed models, a number of criteria have been developed for model selection. The most widely used are information criteria such as AIC [3] [4] and BIC [5]. Sugiura [6] proposed a marginal AIC (mAIC) whose penalty term includes the number of random effects parameters. Shang and Cavanaugh [7] used the bootstrap to estimate the penalty term of mAIC, yielding two bootstrap variants of AIC. For longitudinal data, which are modeled by a special case of linear mixed models, Azari, Li and Tsai [8] proposed a corrected Akaike Information Criterion (AICc); their justification of AICc mainly addressed the challenge posed by the correlation matrix under certain conditions on the mixed model. Vaida and Blanchard [9] redefined the Akaike information based on the best linear unbiased predictor (BLUP) [10] - [12] of the random effects and proposed a conditional AIC (cAIC). Dimova et al. [13] derived a series of small-sample variants of AIC for linear mixed models.

Another information criterion, BIC, can be regarded as a Bayesian alternative to AIC. In linear mixed models, the marginal BIC (mBIC) [14] is obtained from the marginal AIC by replacing the constant 2 in the penalty term with log N, where N is the sample size. Jones [15] proposed a measure of the effective sample size to replace the sample size in the BIC penalty term, leading to a new criterion, BICJ.

We note that BIC-type criteria are derived using Bayesian arguments. In contrast, AIC-type criteria are justified from a frequentist perspective based on an information (Kullback-Leibler) discrepancy. However, little research in linear mixed models has developed criteria from other discrepancies, such as the Gauss discrepancy underlying Mallows’ Cp [1] [2]. Because each criterion is derived differently, each has its own advantages, and no single criterion captures all the benefits for model selection. To further develop selection criteria in the mixed modeling setting, we aim to justify Cp-type criteria based on the Gauss discrepancy.

Mallows’ Cp [1] [2] in linear regression models is designed to estimate the Gauss discrepancy between the true model and a candidate model; it serves as an asymptotically unbiased estimator of the expected Gauss discrepancy. The Gauss discrepancy is an L2 norm measuring the distance between the true model and a candidate model in linear models, and among competing fitted models the candidate with the smallest value of Cp is chosen. Fujikoshi and Satoh [16] studied modified AIC and Cp in multivariate linear regression. Davies et al. [17] established the estimation optimality of corrected AIC and modified Cp in linear regression models. Cavanaugh et al. [18] proposed an alternate version of Cp based on a symmetrized discrepancy measure. However, because the covariance matrix of linear mixed models complicates the justification of selection criteria, a Cp statistic for linear mixed models has not yet been developed.
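For reference, the classical Mallows’ Cp for ordinary least squares with independent errors is Cp = SSRes_p/σ̂² − N + 2p, where σ̂² is the residual mean square of the largest model in the pool. The minimal NumPy sketch below computes this statistic for a set of nested candidate models and picks the one with the smallest value. The function name `mallows_cp`, the simulated data, and the candidate pool are illustrative assumptions, and the sketch ignores the within-case correlation that motivates the marginal version developed in this paper.

```python
import numpy as np

def mallows_cp(y, X_full, candidate_cols):
    """Classical Mallows' Cp = SSRes_p / sigma2_full - N + 2p for OLS fits.

    sigma2_full is the residual mean square of the full model X_full;
    candidate_cols is a list of column-index lists, one per candidate model.
    """
    N, k = X_full.shape
    beta_full, *_ = np.linalg.lstsq(X_full, y, rcond=None)
    resid_full = y - X_full @ beta_full
    sigma2_full = resid_full @ resid_full / (N - k)
    cps = []
    for cols in candidate_cols:
        Xp = X_full[:, cols]
        beta_p, *_ = np.linalg.lstsq(Xp, y, rcond=None)
        resid_p = y - Xp @ beta_p
        p = len(cols)
        cps.append(resid_p @ resid_p / sigma2_full - N + 2 * p)
    return np.array(cps)

# Hypothetical data: intercept plus three covariates, only the first covariate matters
rng = np.random.default_rng(0)
N = 50
X = np.column_stack([np.ones(N), rng.normal(size=(N, 3))])
y = X[:, :2] @ np.array([1.0, 2.0]) + rng.normal(size=N)
candidates = [[0], [0, 1], [0, 1, 2], [0, 1, 2, 3]]
cp = mallows_cp(y, X, candidates)
best = candidates[int(np.argmin(cp))]   # candidate with the smallest Cp
```

By construction the full model's Cp equals its number of columns (here 4), since its SSRes divided by its own residual mean square is exactly N − k.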

This paper extends the justification of Cp from linear models to linear mixed models. We first define a marginal Gauss discrepancy that reflects the correlation, to measure the distance between the true model and a candidate model. We assume that, under certain conditions, the estimator of the correlation matrix for the candidate model is consistent for the true correlation matrix. The resulting marginal Cp, abbreviated as MCp, serves as an asymptotically unbiased estimator of the expected marginal Gauss discrepancy between the true model and a candidate model. We also propose an improvement of MCp, abbreviated as IMCp, and show that it is an asymptotically unbiased estimator of the expected marginal Gauss discrepancy with smaller bias than MCp. We examine the performance of the proposed criteria in a simulation study with various correlation structures and sample sizes.

The paper is organized as follows: Section 2 presents the notation and defines the marginal Gauss discrepancy in the setting of linear mixed models. In Section 3, we provide the derivations of the model selection criteria MCp and IMCp. Section 4 presents a simulation study to demonstrate the effectiveness of the proposed criteria. Section 5 concludes.

2. Marginal Gauss Discrepancy

In this section, we introduce the true model (also called the generating model) and the candidate model in the setting of linear mixed models, and then define the marginal Gauss discrepancy.

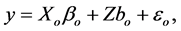

Suppose that the generating model for the data is given by

$$y = X_o\beta_o + Zb_o + \varepsilon_o, \qquad (2.1)$$

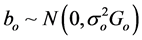

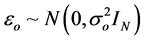

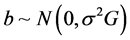

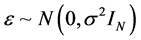

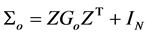

where y denotes an N × 1 response vector, Xo is an N × po design matrix of full column rank, and βo is a po × 1 unknown vector of fixed effects. Z is an N × mr known matrix of full column rank and bo is an mr × 1 unknown vector of random effects, where m is the number of cases (the sample size) and r is the dimension of the random effects for each case. Here, $b_o \sim N_{mr}(0, \sigma_o^2 G_o)$ and $\varepsilon_o \sim N_N(0, \sigma_o^2 I_N)$, bo and εo are mutually independent, Go is a positive definite matrix, and $\sigma_o^2$ is a scalar.

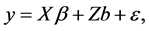

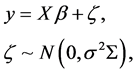

We fit the data with a candidate model of the form

$$y = X\beta + Zb + \varepsilon, \qquad (2.2)$$

where X is an N × p design matrix of full column rank, β is a p × 1 unknown vector of fixed effects, $b \sim N_{mr}(0, \sigma^2 G)$ and $\varepsilon \sim N_N(0, \sigma^2 I_N)$, and b and ε are mutually independent. The design matrix Z of the random effects and the random effects b are the same as those in the generating model. The matrix G is a positive definite matrix containing q unknown parameters.

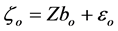

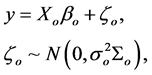

Since the random part of the model (i.e., Zb) is not subject to selection, it is convenient to use the marginal form of linear mixed models [19]. Let ζo = Zbo + εo; then the generating model (2.1) can be written as

$$y = X_o\beta_o + \zeta_o, \qquad \zeta_o \sim N_N\left(0, \sigma_o^2 \Sigma_o\right), \qquad (2.3)$$

where the scaled variance is $\Sigma_o = Z G_o Z^\top + I_N$.

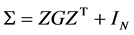

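For the candidate model (2.2), let ζ = Zb + ε; we then have

$$y = X\beta + \zeta, \qquad \zeta \sim N_N\left(0, \sigma^2 \Sigma\right), \qquad (2.4)$$

where the scaled variance is $\Sigma = Z G Z^\top + I_N$. Since G is positive definite, $ZGZ^\top$ is positive semidefinite, and therefore Σ is a nonsingular positive definite matrix.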
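The positive definiteness of Σ can be checked numerically. The sketch below assumes the scaled-variance form Σ = ZGZᵀ + I_N, a block-diagonal Z with a random intercept and slope for each case, and an arbitrary positive definite G; all sizes and names are hypothetical. Because ZGZᵀ is positive semidefinite, every eigenvalue of Σ is at least 1, so the Cholesky factorization succeeds.

```python
import numpy as np

def scaled_variance(Z, G):
    """Scaled marginal variance Sigma = Z G Z^T + I_N (assumed form)."""
    return Z @ G @ Z.T + np.eye(Z.shape[0])

# Hypothetical sizes: m cases, n_i observations per case, r random effects per case
m, n_i, r = 5, 4, 2
t = np.arange(n_i, dtype=float)
Z_i = np.column_stack([np.ones(n_i), t])   # random intercept and slope for one case
Z = np.kron(np.eye(m), Z_i)                # N x (m r) block-diagonal design

rng = np.random.default_rng(1)
A = rng.normal(size=(r, r))
G_i = A @ A.T + 0.1 * np.eye(r)            # positive definite r x r block
G = np.kron(np.eye(m), G_i)                # (m r) x (m r), block-diagonal

Sigma = scaled_variance(Z, G)
L = np.linalg.cholesky(Sigma)              # raises LinAlgError if Sigma is not PD
min_eig = np.linalg.eigvalsh(Sigma).min()  # at least 1, since Z G Z^T is PSD
```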

In models (2.3) and (2.4), the terms ζo and ζ combine the random effects and the errors of the respective models. Since both are assumed to have mean zero, the scaled variances Σo and Σ contain all the information on the random effects and errors, including the correlation structures.

We measure the distance between the true model and a candidate model by defining the marginal Gauss discrepancy based on the marginal forms of models (2.3) and (2.4). The true model is assumed to be included in the pool of candidate models. Let θo and θ denote the vectors of parameters  and


where Eo denotes the expectation with respect to the true model. Note that the marginal Gauss discrepancy weights the L2 norm by the inverse scaled variance Σ−1. Therefore, the correlation between observations is taken into account when the marginal Gauss discrepancy is used to measure the distance between the true model and a candidate model.
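Equation (2.5) itself is not reproduced above, so the following only illustrates the kind of weighting involved. Assuming a Mallows-type squared distance between a true mean vector μo = Xoβo and a fitted mean μ = Xβ, weighted by Σ⁻¹, the quantity (μo − μ)ᵀΣ⁻¹(μo − μ) can be computed stably through a Cholesky factor Σ = LLᵀ rather than an explicit inverse. The function name and the exchangeable Σ in the example are hypothetical.

```python
import numpy as np

def weighted_sq_distance(mu_true, mu_fit, Sigma):
    """Return (mu_true - mu_fit)^T Sigma^{-1} (mu_true - mu_fit) via Cholesky."""
    d = np.asarray(mu_true, dtype=float) - np.asarray(mu_fit, dtype=float)
    L = np.linalg.cholesky(Sigma)   # Sigma = L L^T
    w = np.linalg.solve(L, d)       # w = L^{-1} d, hence w^T w = d^T Sigma^{-1} d
    return float(w @ w)

# Hypothetical true and fitted mean vectors for one case with three observations
mu_true = np.array([1.0, 2.0, 3.0])
mu_fit = np.array([1.5, 1.5, 3.5])
d_ind = weighted_sq_distance(mu_true, mu_fit, np.eye(3))   # independent errors
Sigma_exch = np.eye(3) + 2.0 * np.ones((3, 3))             # exchangeable, f = 2
d_exch = weighted_sq_distance(mu_true, mu_fit, Sigma_exch)
```

With Σ = I the result is the ordinary squared Euclidean distance (0.75 here); under the exchangeable Σ the same discrepancy vector receives a different weight (about 0.679), which is how correlation enters the distance.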

Now let

which can therefore be expressed as

We define a transformed marginal Gauss discrepancy between the true generating model and the fitted candidate model as the following linear function of the marginal Gauss discrepancy (2.5):

Taking the expectation of the transformed marginal Gauss discrepancy (2.6), we obtain the expected transformed marginal Gauss discrepancy as

To obtain a model selection criterion based on the expected transformed marginal Gauss discrepancy in Equation (2.7), we seek an unbiased or asymptotically unbiased estimator of this quantity. To simplify the derivation, we first rewrite the discrepancy in Equation (2.7).

From expression (2.7), the expectation part in the numerator can be written as

where

Theorem 1. For every

1)

2)

The proof is given in the Appendix.

Corollary 1. Following Theorem 1, we have:

1)

2)

3)

Corollary 1 follows directly from Theorem 1.

By Corollary 1, expression (2.8) can be written as

Note that the scaled variance Σ is a function of the vector γ of q unknown variance components, i.e.,

First, since

Second, using the approximation

Using expressions (2.9), (2.10), and (2.11),

Following Mallows’ interpretation,

where Vp and Bp are the “variance” and “bias” contributions, respectively, given by

and

Increasing the number p of fixed-effects parameters decreases the bias Bp of the fitted model but increases the variance Vp. The marginal Gauss discrepancy therefore reflects a bias-variance trade-off. Since a smaller value of the discrepancy indicates a smaller distance between the true model and a candidate model, the discrepancy measures how close a fitted model is to the true model.
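In Mallows’ original setting the variance contribution equals p, the trace of the least squares hat matrix, which is why adding fixed effects inflates it. Assuming the marginal version is built on the generalized least squares (GLS) hat matrix H = X(XᵀΣ⁻¹X)⁻¹XᵀΣ⁻¹ (an assumption, since the expression for Vp is not shown here), the sketch below checks numerically that tr(H) = p for a positive definite Σ, so a variance term of this form would still grow linearly in p. The design, the exchangeable Σ with f = 2, and the function name are hypothetical.

```python
import numpy as np

def gls_hat_trace(X, Sigma):
    """Trace of the GLS hat matrix H = X (X^T Sigma^{-1} X)^{-1} X^T Sigma^{-1}."""
    Si_X = np.linalg.solve(Sigma, X)               # Sigma^{-1} X  (N x p)
    H = X @ np.linalg.solve(X.T @ Si_X, Si_X.T)    # N x N hat matrix
    return float(np.trace(H))

# Hypothetical example: 6 cases with 4 observations each, exchangeable Sigma with f = 2
rng = np.random.default_rng(3)
m, n_i, f = 6, 4, 2.0
N = m * n_i
Z = np.kron(np.eye(m), np.ones((n_i, 1)))          # random intercept per case
Sigma = f * Z @ Z.T + np.eye(N)
X_all = np.column_stack([np.ones(N), rng.normal(size=(N, 4))])
traces = [gls_hat_trace(X_all[:, :p], Sigma) for p in range(1, 6)]
```

The identity follows from the cyclic property of the trace: tr(X(XᵀΣ⁻¹X)⁻¹XᵀΣ⁻¹) = tr((XᵀΣ⁻¹X)⁻¹XᵀΣ⁻¹X) = tr(I_p) = p.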

3. Derivations of Marginal Cp and Improved Marginal Cp

3.1. Marginal Cp

In this section, model selection criteria based on

We start with the expectation of the sum of squared errors SSRes from a candidate model. In linear mixed models, the sum of squared errors SSRes can be written as

By Theorem 1 and Corollary 1, the expectation of the “scaled sum of squared error”

and then we have

Similar to the derivation of Equation (2.11), the numerator of the first term of Equation (3.1) can be expressed as

Then, by Equations (3.1) and (3.2), it is straightforward to construct a function

Note that the function T is not a statistic since the parameter

where

For the estimator of

which is an asymptotically unbiased estimator for

MCp is then obtained as

Note that MCp is biased for

3.2. Improved Marginal Cp

To improve the performance of the MCp statistic in linear mixed models, we propose an improved marginal Cp, called IMCp, which is intended to be a more accurate (less biased) estimator of the expected transformed marginal Gauss discrepancy than MCp. IMCp is defined as

where SSRes and

To evaluate the expectation of IMCp, we first need to calculate the ratio of the sum of squared errors

By using the approximation

respectively, and

To continue the proof, we will use the following theorem and corollary.

Theorem 2. If

The proof of Theorem 2 is presented in the Appendix.

Corollary 2. Following Theorem 2, we obtain the following results:

1)

2)

The proof of Corollary 2 is included in the Appendix.

By Theorem 1 and Corollary 2, we have

such that the quadratic forms

expectation of

For the term

For the term

Note that

where

Now, its inverse

Using the results of (3.8) and (3.9), we have the expectation of

We recall that the criterion IMCp in (3.5) is defined as

By the result of (3.10) and the approximation

Hence, IMCp is an asymptotically unbiased estimator of the expected overall transformed Gauss discrepancy

The justification of MCp and IMCp assumes that the true model is contained in the pool of candidate models, so the derivation covers correctly specified and overfitted candidate models. The criteria can still be computed for underspecified models, but their values then tend to be large and do not behave well.
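The exact expressions for SSRes, MCp, and IMCp are not reproduced above, so the sketch below only illustrates a common first step in evaluating a pool of nested candidate models: fit each candidate by GLS with a known Σ and compute the Σ⁻¹-weighted residual sum of squares (y − Xβ̂)ᵀΣ⁻¹(y − Xβ̂). Treating Σ as known, the random-intercept design, the value f = 1.5, σ² = 1, and all names are assumptions for illustration; in practice Σ would be estimated (for example by maximum likelihood), and the criteria would combine such residual sums of squares with their own correction terms.

```python
import numpy as np

def gls_ssres(y, X, Sigma):
    """Sigma^{-1}-weighted residual sum of squares after a GLS fit with known Sigma."""
    L = np.linalg.cholesky(Sigma)
    y_w = np.linalg.solve(L, y)          # whitened response L^{-1} y
    X_w = np.linalg.solve(L, X)          # whitened design L^{-1} X
    beta, *_ = np.linalg.lstsq(X_w, y_w, rcond=None)   # OLS on whitened data = GLS
    r_w = y_w - X_w @ beta
    return float(r_w @ r_w)              # equals (y - X beta)^T Sigma^{-1} (y - X beta)

# Hypothetical random-intercept design: m cases, n_i observations each, ratio f, sigma^2 = 1
rng = np.random.default_rng(2)
m, n_i, f = 10, 5, 1.5
N = m * n_i
Z = np.kron(np.eye(m), np.ones((n_i, 1)))
Sigma = f * Z @ Z.T + np.eye(N)
X = np.column_stack([np.ones(N), rng.normal(size=(N, 3))])
zeta = np.linalg.cholesky(Sigma) @ rng.normal(size=N)   # zeta ~ N(0, Sigma)
y = X[:, :2] @ np.array([1.0, 2.0]) + zeta
candidates = [[0], [0, 1], [0, 1, 2], [0, 1, 2, 3]]     # nested candidate pool
ssres = [gls_ssres(y, X[:, cols], Sigma) for cols in candidates]
```

Because the candidates are nested and share the same Σ, the weighted residual sum of squares can only decrease as fixed effects are added, which is why a Cp-type criterion needs a term that grows with p to avoid always selecting the largest model.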

4. Simulation Study

In this simulation study, we investigate the ability of MCp in (3.4) and IMCp in (3.5) to determine the correct set of fixed effects for the simulated data in different models.

4.1. Presentation of Simulations

Consider a setting in which data are generated by the model of the following form

where the random effects

The correlation between the observations from the same case is an increasing function of f, so a higher f implies a higher correlation between the observations in the same case.
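The claim that the within-case correlation increases with f can be checked under an assumed random-intercept structure in which f is the ratio of the random-effect variance to the error variance (as described in Section 5), so that the scaled variance of one case is fJ + I, with J the matrix of ones. Each diagonal entry is then 1 + f and each off-diagonal entry is f, giving a common (exchangeable) correlation of f/(1 + f). The helper name and the chosen values of f are hypothetical.

```python
import numpy as np

def within_case_correlation(f, n_i):
    """Correlation matrix of one case when its scaled variance is f*J + I (assumed structure)."""
    Sigma_i = f * np.ones((n_i, n_i)) + np.eye(n_i)
    sd = np.sqrt(np.diag(Sigma_i))
    return Sigma_i / np.outer(sd, sd)

for f in [0.1, 1.0, 4.0]:
    rho = within_case_correlation(f, n_i=3)[0, 1]
    print(f"f = {f}: correlation = {rho:.4f}, f/(1+f) = {f / (1 + f):.4f}")
```

The printed correlations match f/(1 + f) and increase with f, consistent with the statement that a larger f means more strongly correlated observations within a case.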

For convenience, the generating model can also be expressed by

where

Since the random part of the model (i.e., Zb) is not subject to selection, we express the model in its marginal form. Let

which can also be expressed by the general form as

where

In this simulation study, we generate the design matrix X with

1) Model 1:

2) Model 2:

3) Model 3:

These three models correspond to three choices of the fixed-effects vector β:

Furthermore, we consider the case where the correlated errors have varying degrees of exchangeable structure. The variance component of error term

4.2. Results

4.2.1. Model 1:

Table 1 presents the performance of the two versions of marginal Cp (MCp and IMCp), mAIC and mBIC, under model 1 with the true fixed effects parameter

4.2.2. Model 2:

We evaluate the proposed criteria for model 2 in the same manner as for model 1. Table 2 presents the performance of MCp and IMCp, mAIC and mBIC under model 2, where the true fixed effects parameter is

4.2.3. Model 3:

As for the first two models, we evaluate the criteria by their rates of correctly selecting the true model. The results are presented in Table 3. Model 3 is identical to model 2 except that we add one more significant fixed-effect variable X2 with the coefficient

The simulation results for model 3 are similar to those for models 1 and 2. In terms of the rates of choosing the correct model, all criteria improve dramatically on model 3 relative to models 1 and 2, indicating that MCp and IMCp implement model selection effectively when the fixed effects are significant. For the moderately large sample size (m = 20), MCp and IMCp perform comparably to mAIC and mBIC in selecting the correct model.

Table 1. Correct selection rate in model 1.

Table 2. Correct selection rate in model 2.

Table 3. Correct selection rate in model 3.

5. Concluding Remarks

The simulation results illustrate that the proposed criteria MCp and IMCp outperform mAIC and mBIC in small samples when the observations are highly correlated. The results also show that MCp and IMCp perform better as the ratio f between the variance of the random effects and that of the errors increases. Since a larger f implies a higher correlation between the observations, we conclude that the proposed criteria perform better as the correlation between observations increases. Since a model with f close to 0 resembles a linear regression model with independent errors, the proposed criteria offer no particular advantage in that case.

The simulation results show that the proposed criteria MCp and IMCp significantly outperform mAIC and mBIC when the sample size is small. As the sample size increases, the performance of the proposed criteria becomes comparable to that of mAIC and mBIC. Therefore, MCp and IMCp are highly recommended in small samples in the setting of linear mixed models.

Additional results (not shown in this paper) indicate that both proposed criteria perform best when maximum likelihood estimation (MLE) is used, compared with restricted maximum likelihood (REML) or least squares estimation. The behavior of MCp and IMCp under REML estimation requires further study.

In the simulation study, the comparison among models 1, 2 and 3 shows that when the true model includes more significant fixed-effect covariates, the proposed criteria perform better in selecting the correct model. This suggests that models with more significant variables (larger βs) are easier for the proposed criteria to identify than models whose variables are only weakly significant.

Comparing MCp and IMCp, we find that when the sample size is small, IMCp obtains a higher correct selection rate than MCp, which demonstrates that IMCp improves on MCp in selecting the most appropriate model. When the sample size becomes larger, however, the performance of MCp and IMCp is nearly identical.

A model selection criterion is consistent if, as the sample size increases, it selects the true model with probability tending to 1. Note that MCp, IMCp, and mAIC are not consistent, whereas mBIC is consistent, as expected, since its penalty term

Cite this paper

Cheng Wenren, Junfeng Shang and Juming Pan (2016) Marginal Conceptual Predictive Statistic for Mixed Model Selection. Open Journal of Statistics, 06, 239-253. doi: 10.4236/ojs.2016.62021

References

- 1. Mallows, C.L. (1973) Some Comments on Cp. Technometrics, 15, 661-675.

- 2. Mallows, C.L. (1995) More Comments on Cp. Technometrics, 37, 362-372.

- 3. Akaike, H. (1973) Information Theory and an Extension of the Maximum Likelihood Principle. In: Petrov, B.N. and Csaki, F., Eds., International Symposium on Information Theory, 267-281.

- 4. Akaike, H. (1974) A New Look at the Statistical Model Identification. IEEE Transactions on Automatic Control, 19, 716-723. http://dx.doi.org/10.1109/TAC.1974.1100705

- 5. Schwarz, G. (1978) Estimating the Dimension of a Model. Annals of Statistics, 6, 461-464. http://dx.doi.org/10.1214/aos/1176344136

- 6. Sugiura, N. (1978) Further Analysis of the Data by Akaike’s Information Criterion and the Finite Corrections. Communications in Statistics—Theory and Methods A, 7, 13-26. http://dx.doi.org/10.1080/03610927808827599

- 7. Shang, J. and Cavanaugh, J.E. (2008) Bootstrap Variants of the Akaike Information Criterion for Mixed Model Selection. Computational Statistics & Data Analysis, 52, 2004-2021. http://dx.doi.org/10.1016/j.csda.2007.06.019

- 8. Azari, R., Li, L. and Tsai, C. (2006) Longitudinal Data Model Selection. Applied Time Series Analysis, Academic Press, New York, 1-23. http://dx.doi.org/10.1016/j.csda.2005.05.009

- 9. Vaida, F. and Blanchard, S. (2005) Conditional Akaike Information for Mixed-Effects Models. Biometrika, 92, 351-370. http://dx.doi.org/10.1093/biomet/92.2.351

- 10. Henderson, C.R. (1950) Estimation of Genetic Parameters. Annals of Mathematical Statistics, 21, 309-310.

- 11. Harville, D.A. (1990) BLUP (Best Linear Unbiased Prediction) and beyond. In: Gianola, D. and Hammond, K., Eds., Advances in Statistical Methods for Genetic Improvement of Livestock, Springer, New York, 239-276. http://dx.doi.org/10.1007/978-3-642-74487-7_12

- 12. Robinson, G.K. (1991) That BLUP Is a Good Thing: The Estimation of Random Effects. Statistical Science, 6, 15-32. http://dx.doi.org/10.1214/ss/1177011926

- 13. Dimova, R.B., Mariantihi, M. and Talal, A.H. (2011) Information Methods for Model Selection in Linear Mixed Effects Models with Application to HCV Data. Computational Statistics & Data Analysis, 55, 2677-2697. http://dx.doi.org/10.1016/j.csda.2010.10.031

- 14. Müller, S., Scealy, J.L. and Welsh, A.H. (2013) Model Selection in Linear Mixed Models. Statistical Science, 28, 135-167. http://dx.doi.org/10.1214/12-STS410

- 15. Jones, R.H. (2011) Bayesian Information Criterion for Longitudinal and Clustered Data. Statistics in Medicine, 30, 3050-3056. http://dx.doi.org/10.1002/sim.4323

- 16. Fujikoshi, Y. and Satoh, K. (1997) Modified AIC and Cp in Multivariate Linear Regression. Biometrika, 84, 707-716. http://dx.doi.org/10.1093/biomet/84.3.707

- 17. Davies, S.L., Neath, A.A. and Cavanaugh, J.E. (2006) Estimation Optimality of Corrected AIC and Modified Cp in Linear Regression. International Statistical Review, 74, 161-168. http://dx.doi.org/10.1111/j.1751-5823.2006.tb00167.x

- 18. Cavanaugh, J., Neath, A.A. and Davies, S.L. (2010) An Alternate Version of the Conceptual Predictive Statistic Based on a Symmetrized Discrepancy Measure. Journal of Statistical Planning and Inference, 140, 3389-3398. http://dx.doi.org/10.1016/j.jspi.2010.05.002

- 19. Jiang, J. (2007) Linear and Generalized Linear Mixed Models and Their Applications. Springer, New York.

- 20. Jiang, J. and Rao, J.S. (2003) Consistent Procedures for Mixed Linear Model Selection. Sankhya, 65, 23-42.

Appendix

Proof of Theorem 1. 1) To prove that

Thus, we prove that

2) By the properties of trace, we have

Therefore, we have

Thus, Theorem 1 is proved. □

Proof of Theorem 2. Let

Since

By

So we have

Proof of Corollary 2. 1) Since

such that

Let

Now, let

and

Since

The first part of Corollary 2 is therefore proved.

2) Following the proof of the first part of Corollary 2, since

Therefore, the proof for the second part of Corollary 2 is completed. □

NOTES

*Corresponding author.