Open Journal of Statistics

Vol.05 No.07(2015), Article ID:61996,15 pages

10.4236/ojs.2015.57068

Statistical Classification Using the Maximum Function

T. Pham-Gia¹, Nguyen D. Nhat², Nguyen V. Phong³

¹Université de Moncton, Moncton, Canada

²University of Economics and Law, Hochiminh City, Vietnam

³University of Finance and Marketing, Hochiminh City, Vietnam

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

Received 8 October 2015; accepted 14 December 2015; published 17 December 2015

ABSTRACT

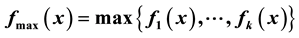

The maximum of k numerical functions defined on $\mathbb{R}^p$, $p \ge 1$, by $g_{max}(x) = \max\{f_1(x), \dots, f_k(x)\}$, $\forall x \in \mathbb{R}^p$, is used here in Statistical Classification. It has previously been used in Statistical Discrimination [1] and in Clustering [2]. We first present some theoretical results on this function, and then its application in classification using a computer program we have developed. This approach leads to clear decisions, even in cases where the extension of Fisher's linear discriminant function to several classes fails to be effective.


Keywords:

Maximum, Discriminant Function, Pattern Classification, Normal Distribution, Bayes Error, L1-Norm, Linear, Quadratic, Space Curves

1. Introduction

In our two previous articles [1] and [2], it was shown that the maximum function can be used to introduce new approaches in Discriminant Analysis and in Clustering. The present article, which completes the series on the uses of that function, applies the same concept to develop a new approach to classification that can be shown to be versatile and quite efficient.

Classification is a topic encountered in several disciplines of applied science, such as Pattern Recognition (Duda, Hart and Stork [3] ), Applied Statistics (Johnson and Wichern [4] ), Image Processing (Gonzalez, Woods and Eddins [5] ). Although the terminologies can differ, the approaches are basically the same. In , we are in the presence of training data sets to build discriminant functions that will enable us to do some classification of a future data set into one of the C classes considered. Several approaches are proposed in the literature. The Bayesian Decision Theory approach starts with the determination of normal (or non-normal) distributions

, we are in the presence of training data sets to build discriminant functions that will enable us to do some classification of a future data set into one of the C classes considered. Several approaches are proposed in the literature. The Bayesian Decision Theory approach starts with the determination of normal (or non-normal) distributions

governing these data sets, and also prior probabilities

governing these data sets, and also prior probabilities

(with sum

(with sum ) assigned to these distributions. More general considerations include the cost

) assigned to these distributions. More general considerations include the cost

of misclassifications, but since in applications we rarely know the values of these costs they are frequently ignored. We will call this approach the common Bayesian one. Here, the comparison of the related posterior probabilities of these classes, also called “class conditional distribution functions”, is equivalent to compare the values of

of misclassifications, but since in applications we rarely know the values of these costs they are frequently ignored. We will call this approach the common Bayesian one. Here, the comparison of the related posterior probabilities of these classes, also called “class conditional distribution functions”, is equivalent to compare the values of , and a new data point

, and a new data point

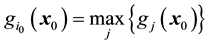

will be classified into

will be classified into

the distribution

with highest value of

with highest value of , i.e.

, i.e. .

.

On the other hand, Fisher's solution to the classification problem is based on a different approach and remains an interesting and important method. Although the case of two classes is quite clear for the application of Fisher's linear discriminant function, the argument, and especially the computations, become much harder when there are more than two classes.

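At present, the multinormal model occupies, and rightly so, a position of choice in discriminant analysis, and various approaches using this model have led to the same results. We have, in $\mathbb{R}^p$,

$$f_i(x) = \frac{1}{(2\pi)^{p/2} |\Sigma_i|^{1/2}} \exp\left\{-\frac{1}{2}(x - \mu_i)^T \Sigma_i^{-1} (x - \mu_i)\right\}, \quad i = 1, \dots, C.$$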

1) For discrimination and classification into one of the two classes, we have the two equations:

and their ratio

2) In general, using the logarithm of

Expanding the quadratic form, we obtain:

where

This function

An equivalent approach considers the ratio of two of these functions

and leads to the decision of classifying a new observation as in class
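The comparison based on the logarithm of the weighted multinormal density can be sketched as follows. This is a minimal sketch, not the authors' software: it assumes known means `mu`, covariance matrices `sigma` and prior probabilities `q` (our notation), and it drops the constant $-\frac{p}{2}\ln 2\pi$, which is common to all classes and does not affect the comparison. The helper names `log_score` and `classify` are hypothetical.

```python
import numpy as np

def log_score(x, mu, sigma, q):
    """ln(q f(x)) for a multinormal f, up to the common constant -p/2 ln(2 pi)."""
    diff = x - mu
    # Solve sigma @ z = diff rather than forming the inverse explicitly.
    maha = diff @ np.linalg.solve(sigma, diff)
    _, logdet = np.linalg.slogdet(sigma)
    return -0.5 * maha - 0.5 * logdet + np.log(q)

def classify(x, mus, sigmas, qs):
    """Index of the class with the largest q_i f_i(x)."""
    scores = [log_score(x, m, s, q) for m, s, q in zip(mus, sigmas, qs)]
    return int(np.argmax(scores))

# Illustrative parameters for two bivariate normal classes.
mus = [np.array([0.0, 0.0]), np.array([3.0, 3.0])]
sigmas = [np.eye(2), np.array([[2.0, 0.5], [0.5, 1.0]])]
qs = [0.5, 0.5]
print(classify(np.array([0.2, -0.1]), mus, sigmas, qs))  # 0
print(classify(np.array([3.1, 2.8]), mus, sigmas, qs))   # 1
```

Working on the log scale avoids underflow of the exponential far from the means, and `slogdet` gives $\ln|\Sigma_i|$ directly.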

The presentation of our article is as follows: in Section 2, we recall the classical discriminant function in the two-class case when training samples are used. Section 3 formalizes the notion of classification and recalls several important results presented in our two previous publications, which are useful to the present one. Section 4 presents the intersections of two normal surfaces and their projections on Oxy. Section 5 deals with classification into one of C classes, with

2. Classification Rules Using Training Samples

Working with samples, since the values of

1)

The decision rule is then:

For a new vector

and to class

We can see that the discriminant function

where

2) Different covariance matrices:

For a new vector

and to
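With training samples, the unknown means and covariance matrices are replaced by their sample estimates. The sketch below assumes NumPy arrays `X1`, `X2` of shape $(n_i, p)$ and equal misclassification costs, and follows the form usually given in textbooks such as Johnson and Wichern [4]: in case 1) the pooled covariance matrix gives a linear rule, in case 2) each class keeps its own sample covariance matrix and the rule is quadratic. The function names are hypothetical and the simulated data are for illustration only.

```python
import numpy as np

def pooled_cov(X1, X2):
    """Pooled sample covariance S_p = ((n1-1)S1 + (n2-1)S2) / (n1+n2-2)."""
    n1, n2 = len(X1), len(X2)
    S1 = np.cov(X1, rowvar=False)
    S2 = np.cov(X2, rowvar=False)
    return ((n1 - 1) * S1 + (n2 - 1) * S2) / (n1 + n2 - 2)

def linear_rule(x, X1, X2, q1=0.5, q2=0.5):
    """Equal covariances: class 1 if the linear score is >= ln(q2/q1), else class 2."""
    m1, m2 = X1.mean(axis=0), X2.mean(axis=0)
    a = np.linalg.solve(pooled_cov(X1, X2), m1 - m2)   # S_p^{-1}(m1 - m2)
    score = a @ x - 0.5 * a @ (m1 + m2)
    return 1 if score >= np.log(q2 / q1) else 2

def quadratic_rule(x, X1, X2, q1=0.5, q2=0.5):
    """Unequal covariances: compare ln(q_i f_i(x)) with plug-in estimates."""
    def log_qf(X, q):
        m, S = X.mean(axis=0), np.cov(X, rowvar=False)
        d = x - m
        return -0.5 * d @ np.linalg.solve(S, d) - 0.5 * np.linalg.slogdet(S)[1] + np.log(q)
    return 1 if log_qf(X1, q1) >= log_qf(X2, q2) else 2

rng = np.random.default_rng(0)
X1 = rng.normal([0.0, 0.0], 1.0, size=(50, 2))
X2 = rng.normal([4.0, 4.0], 1.0, size=(50, 2))
print(linear_rule(np.array([0.5, 0.0]), X1, X2), quadratic_rule(np.array([4.0, 3.5]), X1, X2))
```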

3. Classification Functions

3.1. Decision Surfaces and Decision Regions

Let a population consist of C disjoint classes. We now present our approach and prove that, for the two-class case, it coincides with the method of the previous section.

Definition 1. A decision surface

Definition 2. Let

A max-classification function

For a value
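Computationally, the max-classification function amounts to evaluating every weighted density $q_i f_i$ at a point, keeping the largest value $g_{max}(x)$ and the index that attains it. A minimal plain-Python sketch, assuming univariate normal densities supplied as callables; the parameters are illustrative, and resolving ties in favour of the lowest index is a convention we adopt only for this example.

```python
import math

def normal_pdf(x, mu, sigma):
    """Univariate normal density N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def g_max(x, densities, priors):
    """Return (g_max(x), class index), where g_max(x) = max_i q_i f_i(x)."""
    values = [q * f(x) for f, q in zip(densities, priors)]
    best = max(range(len(values)), key=values.__getitem__)  # first index wins ties
    return values[best], best

params = [(0.0, 1.0), (3.0, 1.0), (6.0, 2.0)]          # illustrative (mu, sigma)
densities = [lambda x, m=m, s=s: normal_pdf(x, m, s) for m, s in params]
priors = [0.3, 0.4, 0.3]
for x in (-1.0, 2.5, 9.0):
    value, label = g_max(x, densities, priors)
    print(f"x = {x:5.1f}  g_max = {value:.4f}  class {label + 1}")
```

With these parameters the three points fall in classes 1, 2 and 3 respectively.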

3.2. Properties of gmax(·)

There are several other properties associated with the max function, and we refer the reader to our two articles [1] and [2], where one finds:

1) Clustering of densities using the width of successive clusters.

A

and is slightly different than the case

2) Considering now

From [6] and [7] , we have the double inequality

with Bayes error given by

since
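The Bayes error of the max-classification rule can be written $P_e = 1 - \int g_{max}(x)\,dx$. A minimal one-dimensional sketch, assuming normal class densities and approximating the integral by a midpoint rule on a finite interval (the interval and grid size are arbitrary choices). For two normals with a common $\sigma$ and equal priors, the exact value $\Phi(-|\mu_1 - \mu_2|/(2\sigma))$ provides a check.

```python
import math

def normal_pdf(x, mu, sigma):
    """Univariate normal density N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def bayes_error(mus, sigmas, priors, lo=-20.0, hi=20.0, n=100_000):
    """P_e = 1 - integral of g_max, using the midpoint rule on [lo, hi]."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * h
        total += max(q * normal_pdf(x, m, s) for m, s, q in zip(mus, sigmas, priors))
    return 1.0 - total * h

pe = bayes_error([0.0, 2.0], [1.0, 1.0], [0.5, 0.5])
exact = 0.5 * (1.0 + math.erf(-1.0 / math.sqrt(2.0)))  # Phi(-1)
print(round(pe, 6), round(exact, 6))
```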

4. Discrimination between 2 Classes

For simplicity and for graphing purposes, we consider only the bivariate case

4.1. Determining the Function gmax

Our approach is to determine the function

achieved by finding the regions of definition of

1) For the two-class case, we show that this approach is equivalent to the common Bayesian approach recalled earlier in Section 2. First, from Equation (6), equation

When the dimension p exceeds 2, these projections are hyperquadrics, which are harder to visualize and to represent graphically.

2) For the C classes case,

determine definition regions of

These regions are given below. Once determined, each region is clearly marked as assigned to class i or to class j, and the family of all these regions gives the classification regions for all observations. Naturally, definition regions for
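Because the method is machine-oriented, the definition regions of $g_{max}$ in the bivariate case can also be approximated numerically by labelling each point of a grid with the class that attains the maximum. A minimal NumPy sketch, assuming normal class densities; the three classes, the grid bounds and the resolution are illustrative only, and the result approximates the regions on the grid rather than giving the exact conic boundaries.

```python
import numpy as np

def mvn_pdf(points, mu, sigma):
    """Multinormal density evaluated at each row of `points`."""
    p = len(mu)
    diff = points - mu
    sol = np.linalg.solve(sigma, diff.T).T            # rows of Sigma^{-1}(x - mu)
    maha = np.sum(diff * sol, axis=1)
    norm = np.sqrt((2.0 * np.pi) ** p * np.linalg.det(sigma))
    return np.exp(-0.5 * maha) / norm

def label_grid(mus, sigmas, priors, xlim, ylim, n=200):
    """Grid axes, index of the class attaining g_max at each node, and g_max itself."""
    xs = np.linspace(*xlim, n)
    ys = np.linspace(*ylim, n)
    X, Y = np.meshgrid(xs, ys)                         # X[i, j] = xs[j], Y[i, j] = ys[i]
    pts = np.column_stack([X.ravel(), Y.ravel()])
    weighted = np.stack([q * mvn_pdf(pts, m, s) for m, s, q in zip(mus, sigmas, priors)])
    labels = weighted.argmax(axis=0).reshape(X.shape)
    gmax = weighted.max(axis=0).reshape(X.shape)
    return xs, ys, labels, gmax

mus = [np.array([0.0, 0.0]), np.array([4.0, 0.0]), np.array([2.0, 4.0])]
sigmas = [np.eye(2), np.diag([2.0, 0.5]), np.array([[1.0, 0.3], [0.3, 1.0]])]
priors = [1 / 3, 1 / 3, 1 / 3]
xs, ys, labels, gmax = label_grid(mus, sigmas, priors, (-4, 8), (-4, 8))
print(np.bincount(labels.ravel(), minlength=3))       # grid nodes assigned to each class
```

Plotting `labels` (for instance as a filled contour) shows the regions of definition, and plotting `gmax` as a surface gives the corresponding 3D graph.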

4.2. Intersections of Normal Surfaces

In the non-normal case, the intersection space curves and their projections can be quite complex (see Example 6). Below are some examples for the normal case.

Two normal surfaces, representing

In

Equating the two expressions, we obtain the equations of the projections (onto the horizontal plane) of the intersection curves in

1) A straight line (when the covariance matrices are equal), or a pair of straight lines, either parallel or intersecting.

2) A parabola: This happens when

Example 1.

Let

Figure 1 shows the graph of

Figure 1.

3) An ellipse: When

Example 2.

Let

Figure 2 shows the graph of

The equation of this ellipse is

4) A hyperbola: This happens when

Example 3. Let

Figure 3 shows the graph of

Figure 2.

Figure 3.

hyperbola.

The equation of this hyperbola is
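Taking logarithms of the two weighted normal densities and equating them gives a second-degree equation in $x$ whose quadratic part has matrix $A = \Sigma_1^{-1} - \Sigma_2^{-1}$. By the standard classification of conics, the type of the projected curve in cases 1) to 4) therefore follows from $A$ and the sign of $\det A$. A minimal sketch, assuming $2 \times 2$ covariance matrices; it reports the generic type only, since degenerate cases (for example a pair of lines instead of a hyperbola) also depend on the linear and constant terms. The tolerance `tol` is an arbitrary choice.

```python
import numpy as np

def boundary_type(sigma1, sigma2, tol=1e-12):
    """Generic conic type of the projection of q1 f1 = q2 f2 for bivariate normals."""
    A = np.linalg.inv(sigma1) - np.linalg.inv(sigma2)  # matrix of the quadratic part
    if np.allclose(A, 0.0, atol=tol):
        return "straight line"            # equal covariance matrices: linear boundary
    d = np.linalg.det(A)
    if abs(d) <= tol:
        return "parabola"                 # may degenerate into parallel lines
    return "ellipse" if d > 0 else "hyperbola"  # hyperbola may degenerate into two lines

print(boundary_type(np.eye(2), np.eye(2)))              # straight line
print(boundary_type(np.eye(2), np.diag([1.0, 4.0])))    # parabola
print(boundary_type(np.eye(2), np.diag([4.0, 9.0])))    # ellipse
print(boundary_type(np.eye(2), np.diag([4.0, 0.25])))   # hyperbola
```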

5. Classification into One of C Classes (C ≥ 3)

The

When these matrices are unequal, the surfaces can intersect in a complicated pattern, as shown in Figure 4(b).

5.1. Our Approach

For normal surfaces of different means and covariance matrices, in the common Bayesian approach we can use (6) or (7), or equivalently, classify a new value

1) One against all, using the

2) Two at a time, using

As pointed out by Fukunaga ([8], p. 171), these methods can lead to regions that are not clearly assignable to any group.

In our approach, we use the second method and compile all results so that
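When every two-at-a-time comparison is made between values of the same functions $q_i f_i(x)$, the pairwise decisions are transitive, so compiling them always designates a single class, namely the one attaining $g_{max}(x)$. A small plain-Python check of this point, assuming the weighted density values at one point are already available (the values below are arbitrary); ties are ignored in this sketch, and `pairwise_winner` is a hypothetical name.

```python
from itertools import combinations

def pairwise_winner(values):
    """Compile two-at-a-time decisions: return the class winning all its contests."""
    wins = [0] * len(values)
    for i, j in combinations(range(len(values)), 2):
        wins[i if values[i] > values[j] else j] += 1   # decide class i versus class j
    for k, w in enumerate(wins):
        if w == len(values) - 1:                       # beat every other class
            return k
    return None                                        # only possible with ties

values = [0.012, 0.087, 0.031, 0.054]   # hypothetical q_i f_i(x) at one point x
print(pairwise_winner(values), max(range(len(values)), key=values.__getitem__))  # 1 1
```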

5.2. Fisher’s Approach

It was the first method proposed in the statistical literature [9], initially for discrimination and then for classification, and it is still very useful. The main idea is to find, and use, a lower-dimensional space onto which the data are projected, the projections exhibiting better discrimination and being easier to handle.

1) Case of 2 classes. It can be summarized as follows:

with

is maximum, where

In this case, Fisher's method reduces to the common Bayesian method if the populations are assumed to be normal; implicitly, it already assumes equal covariance matrices. However, Fisher's method also allows variables to enter individually, so that their relative influence can be measured, as in analysis of variance and regression.

2) Fisher's multilinear method (the extension of the above approach due to C. R. Rao): C classes, of dimension p and

Projection into space of

Figure 4. (a).

The projection from a p-dim space to a

that the ratio

We can see that Fisher’s multilinear method can be quite complicated.
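In the usual textbook formulation of Fisher's criterion (see Duda, Hart and Stork [3]), the projection directions are the leading solutions of the generalized eigenproblem $S_B w = \lambda S_W w$, where $S_W = \sum_i \sum_{x \in \text{class } i} (x - m_i)(x - m_i)^T$ is the within-class scatter and $S_B = \sum_i n_i (m_i - m)(m_i - m)^T$ the between-class scatter. At most $C - 1$ eigenvalues are non-zero, and for $C = 2$ the single direction is proportional to $S_W^{-1}(m_1 - m_2)$. A minimal NumPy sketch, assuming a data matrix `X` with integer labels `y`; the function name is hypothetical, the data are simulated, and the generalized problem is reduced to a symmetric one through a Cholesky factor of $S_W$. Even in this compact form, several matrix operations are needed.

```python
import numpy as np

def fisher_directions(X, y):
    """Eigenvalues (descending), the C - 1 leading directions, and S_W."""
    classes = np.unique(y)
    m = X.mean(axis=0)                                   # overall mean
    p = X.shape[1]
    S_W = np.zeros((p, p))
    S_B = np.zeros((p, p))
    for c in classes:
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        S_W += (Xc - mc).T @ (Xc - mc)                   # within-class scatter
        S_B += len(Xc) * np.outer(mc - m, mc - m)        # between-class scatter
    L = np.linalg.cholesky(S_W)                          # S_W = L L^T
    L_inv = np.linalg.inv(L)
    eigvals, V = np.linalg.eigh(L_inv @ S_B @ L_inv.T)   # symmetric form, ascending
    order = np.argsort(eigvals)[::-1]
    W = L_inv.T @ V[:, order]                            # back-transform w = L^{-T} v
    return eigvals[order], W[:, : len(classes) - 1], S_W

rng = np.random.default_rng(2)
# Three classes in p = 3: at most C - 1 = 2 informative directions.
means = [[0.0, 0.0, 0.0], [3.0, 0.0, 0.0], [0.0, 3.0, 0.0]]
X = np.vstack([rng.normal(mu, 1.0, size=(60, 3)) for mu in means])
y = np.repeat([0, 1, 2], 60)
eigvals, W, _ = fisher_directions(X, y)
print(np.round(eigvals, 4))      # the third eigenvalue is zero up to rounding
Z = X @ W                        # data projected onto the two Fisher directions

# Two classes: the single direction is parallel to S_W^{-1}(m1 - m2).
X2, y2 = X[y < 2], y[y < 2]
_, w, S_W2 = fisher_directions(X2, y2)
ref = np.linalg.solve(S_W2, X2[y2 == 0].mean(axis=0) - X2[y2 == 1].mean(axis=0))
cos = abs(w[:, 0] @ ref) / (np.linalg.norm(w[:, 0]) * np.linalg.norm(ref))
print(round(cos, 6))             # 1.0 (parallel directions)
```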

5.3. Advantages of Our Approach

Our computer-based approach offers the following advantages:

1) It uses concepts at the base: Max of

2) The determination of the maximum function is essentially machine-oriented and can often save the analyst from complex matrix or analytic manipulations. This point is of particular interest when the analysis involves high-dimensional vectors. To classify a new observation

3) Complex cases arise when there is a large number of classes or of variables (large p). However, as long as the normal surfaces can be determined, the software Hammax can be used. When p is much larger than the sample sizes, the most significant dimensions must first be identified, and only these are used when applying the software.

4) It offers a visual tool very useful to the analyst when

5) Regions not clearly assignable to any group are removed with the use of

6) For the non-normal case,

7) It permits the computation of the Bayes error, which can be used as a criterion for ranking different classification approaches. Naturally, the error computed by our software from data is an estimate of the theoretical, but unknown, Bayes error obtained from the population distributions.

6. Output of Software Hammax in the Case of 4 Classes

The integrated computer software developed by our group handles most of the computations, simulations and graphic features presented in this article. It extends and generalizes some existing routines, for example the MATLAB function bayesgauss ([5], p. 493), which is based on the same decision principles.

Below are some of its outputs, first in the case of classification into a four-class population.

Example 4. Numerical and graphical results determining

To obtain Figure 6, we use all the intersection curves given in Figure 7 below.

In this example, all the boundaries in the horizontal plane are hyperbolas. Their intersections serve to determine the regions of definition of

Classification: a new observation, for example (25, 35), is classified in

Note: In the above graph, for computational purposes, we only consider

7. Risk and the Minimum Function

1) When risk, as the penalty for misclassification, is considered in decision making, we aim at the minimum risk rather than the maximum. In the literature, to simplify the process, we usually take the average risk, also called the Bayes risk, or the minimum of the maximum values of the different risks, according to the minimax principle.

Figure 5. 3D Graph of

Figure 6. Regions in Oxy of definition of

Figure 7. Projections of intersection curves of

Figure 8. Points used to determine definition regions of

We suppose here that risk

and various competing risks

A minimum-classification function

Definition 4. A min-classification function

For a value

2) A relation between the max and min functions can be established by using the inclusion-exclusion principle:

Integrating this relation, we obtain a relation between
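Two points above can be checked numerically. First, the min-classification function mirrors the max-classification one: assuming, as in the max case, that each function is weighted by its prior, a point is assigned to the class with the lowest weighted risk there. Second, the inclusion-exclusion relation is the max-min identity $\max_i a_i = \sum_{\emptyset \ne S \subseteq \{1,\dots,k\}} (-1)^{|S|+1} \min_{i \in S} a_i$, applied pointwise to $a_i = q_i f_i(x)$; for $k = 2$ it reads $\max(a, b) = a + b - \min(a, b)$, and integrating gives $\int g_{max} = 1 - \int \min(q_1 f_1, q_2 f_2)$. A minimal plain-Python sketch; the risk profiles and numerical values are hypothetical, chosen only for illustration.

```python
import math
from itertools import combinations

def g_min(x, risks, priors):
    """Return (g_min(x), class index), where g_min(x) = min_i q_i r_i(x)."""
    values = [q * r(x) for r, q in zip(risks, priors)]
    best = min(range(len(values)), key=values.__getitem__)  # lowest-risk class
    return values[best], best

def max_by_inclusion_exclusion(a):
    """max(a) as an alternating sum of minima over all non-empty subsets of a."""
    total = 0.0
    for size in range(1, len(a) + 1):
        sign = 1.0 if size % 2 == 1 else -1.0
        total += sign * sum(min(sub) for sub in combinations(a, size))
    return total

# Hypothetical risk profiles that increase with the distance from a centre c.
risks = [lambda x, c=c: 1.0 - math.exp(-0.5 * (x - c) ** 2) for c in (0.0, 2.0, 5.0)]
priors = [1 / 3, 1 / 3, 1 / 3]
print([g_min(x, risks, priors)[1] for x in (-1.0, 1.8, 6.0)])  # [0, 1, 2]

values = [0.12, 0.47, 0.05, 0.31]   # arbitrary values of q_i f_i(x) at one point
print(max_by_inclusion_exclusion(values), max(values))
```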

class having the lowest risk at that point. The function

Example 5. The four normal distributions are the same as in Example 4, but they now represent the densities of the risks associated with the problem. Using the same prior probabilities, the function

For

Remarks: a) For two populations, this integral is also the overlap coefficient and can be used for inference on the similarity of, or the difference between, the two populations.

b) The boundaries between regions defining
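For two densities, the overlap coefficient is $\delta = \int \min\{f_1(x), f_2(x)\}\,dx$; with equal priors, $\int \min(q_1 f_1, q_2 f_2)\,dx = \delta / 2$, which is the Bayes error of the two-class problem. A minimal one-dimensional sketch, assuming two normal densities with a common standard deviation, for which the closed form $\delta = 2\Phi(-|\mu_1 - \mu_2|/(2\sigma))$ serves as a check; the integration interval and grid are arbitrary choices.

```python
import math

def normal_pdf(x, mu, sigma):
    """Univariate normal density N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def overlap(mu1, mu2, sigma, lo=-20.0, hi=20.0, n=100_000):
    """delta = integral of min(f1, f2), using the midpoint rule on [lo, hi]."""
    h = (hi - lo) / n
    s = sum(min(normal_pdf(lo + (k + 0.5) * h, mu1, sigma),
                normal_pdf(lo + (k + 0.5) * h, mu2, sigma)) for k in range(n))
    return s * h

def phi(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

print(overlap(0.0, 1.0, 1.0), 2.0 * phi(-0.5))
```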

8. Applications and Other Considerations

8.1. The Software Hammax

This software has been developed by our research group and is part of a larger package dealing with discrimination, classification and cluster analysis, as well as with other applications related to the multinormal distribution. It is being developed further to become interactive and more user-friendly, and it has its own copyright. It will also have more connections with applications in the social sciences.

Figure 9. (a). 3D-graph of

8.2. The Non-Parametric Density Estimation Approach

A more general approach would directly use data available in each group to estimate the density of its distribution. The

they are estimated by

where

computed by simulation, gives the same value as for the parametric normal case.
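A common choice for such a density estimate is a kernel estimator. The specific estimator used above is not recoverable from the text, so the Gaussian product kernel and the fixed bandwidth `h` below are our assumptions, as are the function names and the simulated samples. The minimal NumPy sketch replaces each $f_i$ by its estimate $\hat f_i$ and then classifies with $\max_i q_i \hat f_i(x)$, exactly as in the parametric case.

```python
import numpy as np

def kde(sample, h):
    """Gaussian-kernel density estimate with a common bandwidth h in every coordinate."""
    n, p = sample.shape
    const = n * (np.sqrt(2.0 * np.pi) * h) ** p
    def f_hat(x):
        z = (x - sample) / h                      # shape (n, p)
        return np.exp(-0.5 * np.sum(z * z, axis=1)).sum() / const
    return f_hat

def classify_kde(x, samples, priors, h=0.5):
    """Assign x to the class with the largest q_i * f_hat_i(x)."""
    estimates = [kde(s, h) for s in samples]
    return int(np.argmax([q * f(x) for f, q in zip(estimates, priors)]))

rng = np.random.default_rng(3)
samples = [rng.normal([0.0, 0.0], 1.0, size=(200, 2)),
           rng.normal([3.0, 3.0], 1.0, size=(200, 2))]
print(classify_kde(np.array([0.0, 0.5]), samples, [0.5, 0.5]),
      classify_kde(np.array([3.2, 2.9]), samples, [0.5, 0.5]))
```

The choice of `h` controls the smoothness of $\hat f_i$; in practice it would be selected from the data rather than fixed.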

8.3. Non-Normal Model

As stated earlier, an approach based on the maximum function is also valid for non-normal populations. We construct such an example here.

Let us consider the case where the population density

where

with

Similarly, we have:

where

Example 6. For

We can see that the last two functions intersect each other along a curve in

where

This curve will serve in the classification of a new observation in either of the two groups.

Figure 10. Two bivariate beta densities, their intersection and its projection.

Any data point above the curve, e.g. (0.2, 0.6), is classified in Class 1; otherwise, e.g. (0.2, 0.2), it is classified in Class 2. Numerical integration gives

9. Conclusion

The maximum function, as presented above, gives another tool for Statistical Classification and Analysis, incorporating discriminant analysis and the computation of the Bayes error. In the two-dimensional case, it also provides very informative graphs of space curves and surfaces. Furthermore, in higher-dimensional spaces it can be very convenient, since it is machine-oriented and frees the analyst from complex analytic computations related to the discriminant function. The minimum function is also of interest, has many applications of its own, and will be presented in a separate article.

Cite this paper

T. Pham-Gia, Nguyen D. Nhat and Nguyen V. Phong (2015) Statistical Classification Using the Maximum Function. Open Journal of Statistics, 05, 665-679. doi: 10.4236/ojs.2015.57068

References

- 1. Pham-Gia, T., Turkkan, N. and Vovan, T. (2008) Statistical Discrimination Analysis Using the Maximum Function. Communications in Statistics: Simulation and Computation, 37, 320-336. http://dx.doi.org/10.1080/03610910701790475

- 2. Vovan, T. and Pham-Gia, T. (2010) Clustering Probability Densities. Journal of Applied Statistics, 37, 1891-1910.

- 3. Duda, R.O., Hart, P.E. and Stork, D.G. (2001) Pattern Classification. John Wiley and Sons, New York.

- 4. Johnson, R.A. and Wichern, D.W. (1998) Applied Multivariate Statistical Analysis. 4th Edition, Prentice-Hall, New York. http://dx.doi.org/10.2307/2533879

- 5. Gonzalez, R.C., Woods, R.E. and Eddins, S.L. (2004) Digital Image Processing Using MATLAB. Prentice-Hall, New York.

- 6. Glick, N. (1972) Sample-Based Classification Procedures Derived from Density Estimators. Journal of the American Statistical Association, 67, 116-122. http://dx.doi.org/10.1080/01621459.1972.10481213

- 7. Glick, N. (1973) Separation and Probability of Correct Classification among Two or More Distributions. Annals of the Institute of Statistical Mathematics, 25, 373-382. http://dx.doi.org/10.1007/BF02479383

- 8. Fukunaga, K. (1990) Introduction to Statistical Pattern Recognition. 2nd Edition, Academic Press, New York.

- 9. Fisher, R.A. (1938) The Statistical Utilization of Multiple Measurements. Annals of Eugenics, 8, 376-386.

- 10. Flury, B. and Riedwyl, H. (1988) Multivariate Statistics. Chapman and Hall, New York. http://dx.doi.org/10.1007/978-94-009-1217-5

- 11. Martinez, W.L. and Martinez, A.R. (2002) Computational Statistics Handbook with MATLAB. Chapman & Hall/CRC, Boca Raton.