M. BLANCO ET AL.

Copyright © 2013 SciRes. ENG

Figure 6. Serial segmentation results: the rectangle marks

the defined ROI within which a restricted thresholding is

performed to delineate the glottis (outlined).

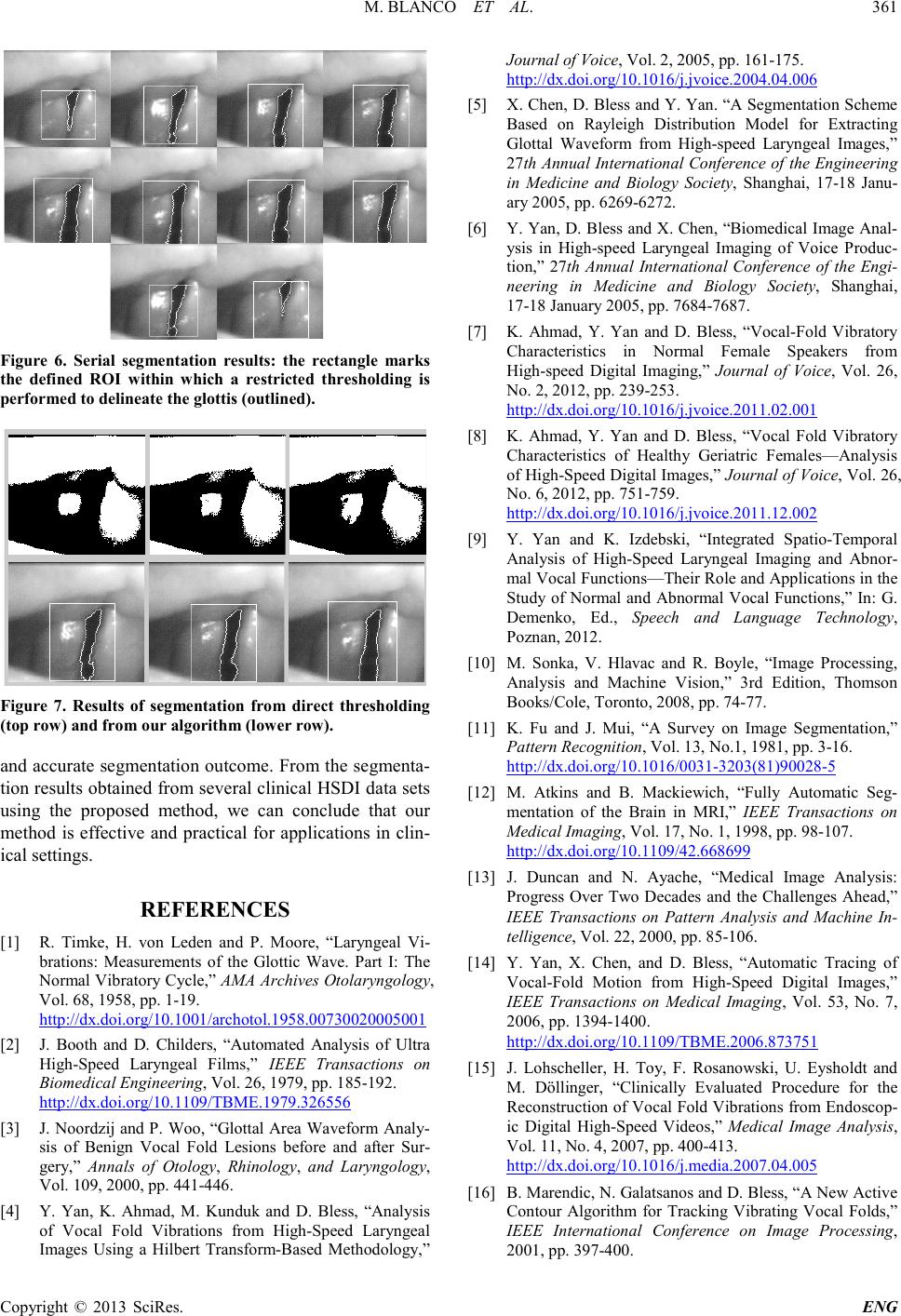

Figure 7. Results of segmentation from direct thresholding

(top r ow) and f r om our algorithm (lower row).

and accurate segmentation outcome. From the segmenta-

tion results obta ined from several c linical HSDI data sets

using the proposed method, we can conclude that our

method is effective and practical for applications in clin-

ical settings.

REFERENCES

[1] R. Timke, H. von Leden and P. Moore, “Laryngeal Vi-

brations: Measurements of the Glottic Wave. Part I: The

Normal Vibratory Cycle,” AMA Archives Otolaryngology,

Vol. 68, 1958, pp. 1-19.

http://dx.doi.org/10.1001/archotol.1958.00730020005001

[2] J. Booth and D. Childers, “Automated Analysis of Ultra

High-Speed Laryngeal Films,” IEEE Transactions on

Biomedical Engineering, Vol. 26, 1979, pp. 18 5-192.

http://dx.doi.org/10.1109/TBME.1979.326556

[3] J. Noordzij and P. Woo, “Glottal Area Waveform Analy-

sis of Benign Vocal Fold Lesions before and after Sur-

gery,” Annals of Otology, Rhinology, and Laryngology,

Vol. 109, 2000, pp. 441-446.

[4] Y. Yan, K. Ahmad, M. Kunduk and D. Bless, “Analysis

of Vocal Fold Vibrations from High-Speed Laryngeal

Images Using a Hilbert Transform-Based Methodology,”

Journal of Voice, Vol. 2, 2005, pp. 161-175.

http://dx.doi.org/10.1016/j.jvoice.2004.04.006

[5] X . Chen, D. Bles s and Y. Yan. “A Segmen tation Scheme

Based on Rayleigh Distribution Model for Extracting

Glottal Waveform from High-speed Laryngeal Images,”

27th Annual International Conference of the Engineering

in Medicine and Biology Society, Shanghai, 17-18 Janu-

ary 2005, pp. 6269-6272.

[6] Y. Yan, D. Bless and X. Chen, “Biomedical Image Anal-

ysis in High-speed Laryngeal Imaging of Voice Produc-

tion,” 27th Annual International Conference of the Engi-

neering in Medicine and Biology Society, Shanghai,

17-18 January 2005, pp. 7684-7687.

[7] K. Ahmad, Y. Yan and D. Bless, “Vocal-Fold Vibratory

Characteristics in Normal Female Speakers from

High-speed Digital Imaging,” Journal of Voice, Vol. 26,

No. 2, 2012, pp. 239-253.

http://dx.doi.org/10.1016/j.jvoice.2011.02.001

[8] K. Ahmad, Y. Yan and D. Bless, “Vocal Fold Vibratory

Characteristics of Healthy Geriatric Females—Analysis

of High-Speed Digital Images,” Journal of Voice, Vol. 26,

No. 6, 2012, pp. 751-759.

http://dx.doi.org/10.1016/j.jvoice.2011.12.002

[9] Y. Yan and K. Izdebski, “Integrated Spatio-Temporal

Analysis of High-Speed Laryngeal Imaging and Abnor-

mal Vo cal F un ction s—Their Role and Applications in the

Study of Normal and Abnormal Vocal Functions,” In: G.

Demenko, Ed., Speech and Language Technology,

Poznan, 2012.

[10] M. Sonka, V. Hlavac and R. Boyle, “Image Processing,

Analysis and Machine Vision,” 3rd Edition, Thomson

Books /C ole, Toro nt o, 20 08, pp. 74-77.

[11] K. Fu and J. Mui, “A Survey on Image Segmentation,”

Pattern Recognition, Vol. 13, No.1, 1981, pp. 3-16.

http://dx.doi.org/10.1016/0031-3203(81)90028-5

[12] M. Atkins and B. Mackiewich, “Fully Automatic Seg-

mentation of the Brain in MRI,” IEEE Transactions on

Medical Imaging, Vol. 17, No. 1, 1998, pp . 98-107.

http://dx.doi.org/10.1109/42.668699

[13] J. Duncan and N. Ayache, “Medical Image Analysis:

Progress Over Two Decades and the Challenges Ahead,”

IEEE Transactions on Pattern Analysis and Machine In-

telligence, Vol . 2 2, 20 00, pp. 85-106.

[14] Y. Yan, X. Chen, and D. Bless, “Automatic Tracing of

Vocal-Fold Motion from High-Speed Digital Images,”

IEEE Transactions on Medical Imaging, Vol. 53, No. 7,

2006, pp . 1394-1400.

http://dx.doi.org/10.1109/TBME.2006.873751

[15] J. Lohscheller, H. Toy, F. Rosanowski, U. Eysholdt and

M. Döllinger, “Clinically Evaluated Procedure for the

Reconstruction of Vocal Fold Vibrations from Endoscop-

ic Digital High-Speed Videos,” Medical Image Analysis,

Vol. 11, No. 4, 2007, pp. 400-413.

http://dx.doi.org/10.1016/j.media.2007.04.005

[16] B. Marendic, N. Galats ano s and D. Bless, “A N e w Acti v e

Contour Algorithm for Tracking Vibrating Vocal Folds,”

IEEE International Conference on Image Processing,

2001, pp . 397-400.