N. T. HAI ET AL.

Copyright © 2013 SciRes. ENG

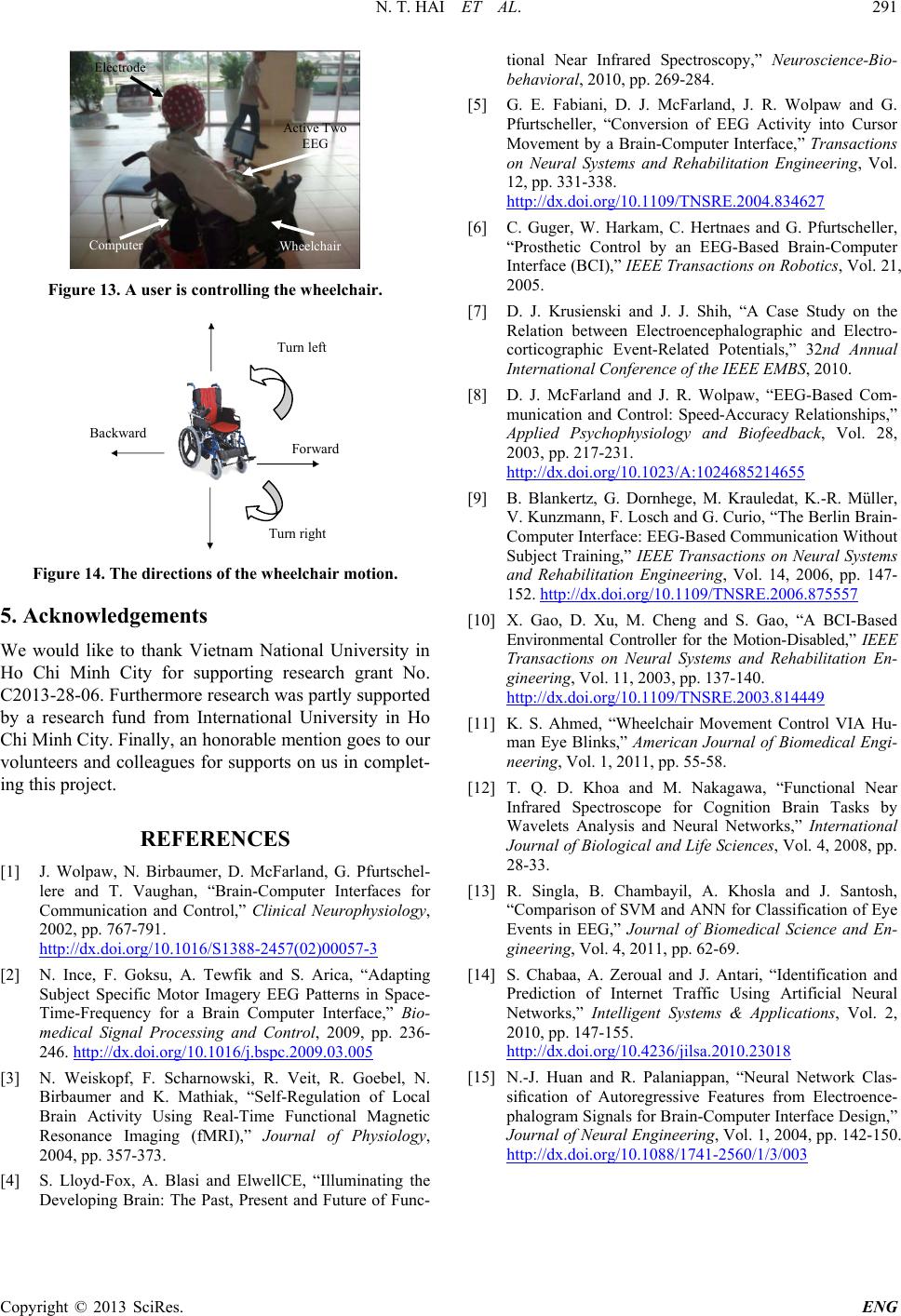

Figure 13. A user is controlling the wheelchair.

Figure 14. The directions of the wheelchair motion.

5. Acknowledgements

We would like to thank Vietnam National University in Ho Chi Minh City for supporting this research under grant No. C2013-28-06. The research was also partly supported by a research fund from International University in Ho Chi Minh City. Finally, we thank our volunteers and colleagues for their support in completing this project.
