L. LIAO

Copyright © 2013 SciRes. ENG

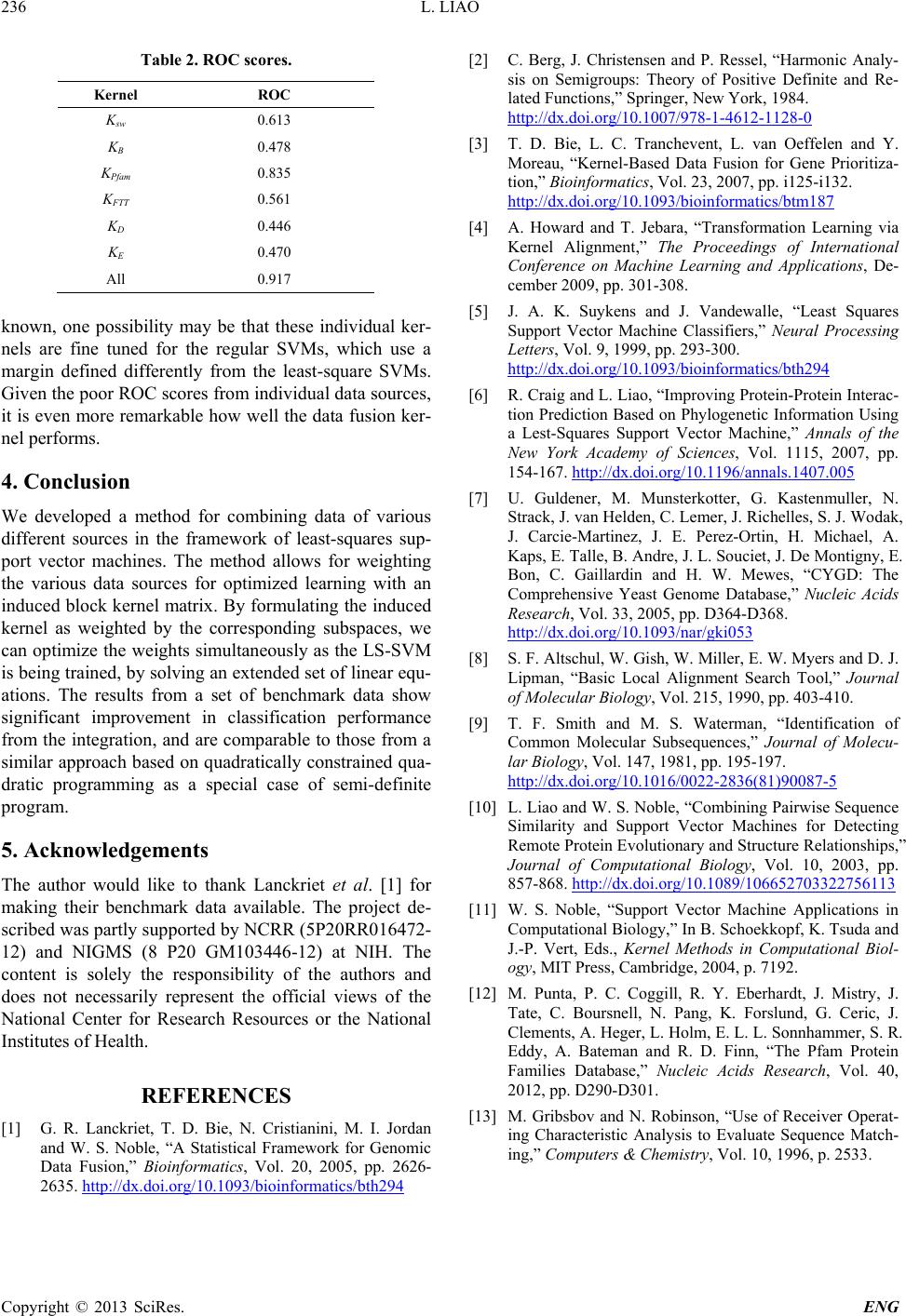

Table 2. ROC scores.

Kernel ROC

Ksw 0.613

KB 0.478

KPfam 0.835

KFTT 0.561

KD 0.446

KE 0.470

All 0.917

known, one possibility may be that these individual ker-

nels are fine tuned for the regular SVMs, which use a

margin defined differently from the least-square SVMs.

Given the poor ROC scores from individual data sources,

it is even more remarkable how well the data fusion ker-

nel performs.

4. Conclusion

We developed a method for combining data of various

different sources in the framework of least-squares sup-

port vector machines. The method allows for weighting

the various data sources for optimized learning with an

induced block kernel matrix. By formulating the induced

kernel as weighted by the corresponding subspaces, we

can optimize the weights simultaneously as the LS-SVM

is being trained, by solving an extended set of linear equ-

ations. The results from a set of benchmark data show

significant improvement in classification performance

from the integration, and are comparable to those from a

similar appr oach based on quadratica lly constrained qua -

dratic programming as a special case of semi-definite

program.

5. Acknowledgements

The author would like to thank Lanckriet et al. [1] for

making their benchmark data available. The project de-

scribed was partly supported b y NCRR (5P2 0RR0164 72-

12) and NIGMS (8 P20 GM103446-12) at NIH. The

content is solely the responsibility of the authors and

does not necessarily represent the official views of the

National Center for Research Resources or the National

Institutes of Health.

REFERENCES

[1] G. R. Lanckriet, T. D. Bie, N. Cristianini, M. I. Jordan

and W. S. Noble, “A Statistical Framework for Genomic

Data Fusion,” Bioinformatics, Vol. 20, 2005, pp. 2626-

2635. http://dx.doi.org/10.1093/bioinformatics/bth294

[2] C. Berg, J. Christensen and P. Ressel, “Harmonic Analy-

sis on Semigroups: Theory of Positive Definite and Re-

lated Functions,” Springer, New York, 1984.

http://dx.doi.org/10.1007/978-1-4612-1128-0

[3] T. D. Bie, L. C. Tranchevent, L. van Oeffelen and Y.

Moreau, “Kernel-Based Data Fusion for Gene Prioritiza-

tion,” Bioinformatics, Vol. 23, 2007, pp. i125-i132.

http://dx.doi.org/10.1093/bioinformatics/btm187

[4] A. Howard and T. Jebara, “Transformation Learning via

Kernel Alignment,” The Proceedings of International

Conference on Machine Learning and Applications, De-

cember 2009, pp. 301-308.

[5] J. A. K. Suykens and J. Vandewalle, “Least Squares

Support Vector Machine Classifiers,” Neural Processing

Letters, Vol. 9, 1999, pp. 293-300.

http://dx.doi.org/10.1093/bioinformatics/bth294

[6] R. Craig and L. Liao, “Improving Protein-Protein Interac-

tion Prediction Based on Phylogenetic Information Using

a Lest-Squares Support Vector Machine,” Annals of the

New York Academy of Sciences, Vol. 1115, 2007, pp.

154-167. http://dx.doi.org/10.1196/annals.1407.005

[7] U. Guldener, M. Munsterkotter, G. Kastenmuller, N.

Strack, J. van Helden, C. Lemer, J. Richelles, S. J. Wodak,

J. Carcie-Martinez, J. E. Perez-Ortin, H. Michael, A.

Kaps, E. Talle, B. Andre, J. L. Souciet, J. De Montigny, E.

Bon, C. Gaillardin and H. W. Mewes, “CYGD: The

Comprehensive Yeast Genome Database,” Nucleic Acids

Research, Vol. 33, 2005, pp. D364-D368.

http://dx.doi.org/10.1093/nar/gki053

[8] S. F. Altsc hul, W. Gish, W. Miller, E. W. My ers and D. J .

Lipman, “Basic Local Alignment Search Tool,” Journal

of Molecular Biology, Vol. 215, 1990, pp. 403-410.

[9] T. F. Smith and M. S. Waterman, “Identification of

Common Molecular Subsequences,” Journal of Molecu-

lar Biology, Vol. 147, 1981, pp. 195-197.

http://dx.doi.org/10.1016/0022-2836(81)90087-5

[10] L. Liao and W. S. Noble, “Combining Pairwise Sequence

Similarity and Support Vector Machines for Detecting

Remote Protein Evolutionary and Structure Relationships,”

Journal of Computational Biology, Vol. 10, 2003, pp.

857-868. http://dx.doi.org/10.1089/106652703322756113

[11] W. S. Noble, “Support Vector Machine Applications in

Computational Biology,” In B. Schoekkopf, K. Tsuda and

J.-P. Vert, Eds., Kernel Methods in Computational Biol-

ogy, MIT Press, Cambridge, 2004, p. 7192.

[12] M. Punta, P. C. Coggill, R. Y. Eberhardt, J. Mistry, J.

Tate, C. Boursnell, N. Pang, K. Forslund, G. Ceric, J.

Clements, A. Heger, L. Hol m, E . L. L. Sonnhammer, S. R.

Eddy, A. Bateman and R. D. Finn, “The Pfam Protein

Families Database,” Nucleic Acids Research, Vol. 40,

2012, pp. D290-D301.

[13] M. Gribsbov and N. Robinson, “Use of Receiver Operat-

ing Characteristic Analysis to Evaluate Sequence Match-

ing,” Computers & Chemistry, Vol. 10, 1996, p. 2533.