Development of a Client-Server System for 3D Scene Change Detection


mations including rotation, translation, and scaling.

Our system uses Lin’s algorithm as the image processing algorithm on the server side; the details are introduced in the next section.

3. System Overview

3.1. Change Detection Algorithm

We use Lin’s method to detect changes on the server side. In their algorithm, temporal changes are detected by using the 3D scene geometry at time A (reference) and an image of the same scene at time B (query). To quickly detect changes and visualize them for operators during regular observation, the method uses 3D-2D matching between a 3D reference scene and 2D query images, followed by 2D-2D feature comparison between 2D training and query images, which is superior to either 3D-3D or 2D-2D matching alone.

In the case of 3D-3D matching [3,9,10], two 3D scenes are registered for change detection. This can provide a detailed comparison between the reference and query 3D scenes. However, the approach is not suitable here because 1) online, real-time 3D reconstruction is still a challenging problem, and 2) a 3D laser range finder is not easy to handle at the sites of our task because of its weight and its need for electric power. In the case of 2D-2D matching [11-13], images (or videos) taken by a fixed camera are compared to detect changes or, more commonly, moving objects. This approach is also not appropriate for our detection purpose: a number of fixed cameras are needed to cover the whole scene of the observed place, so it is impractical, particularly if the scene is wide and large. If the scene is instead observed with a hand-held camera that takes a number of 2D images, this problem may not arise. However, reference and query 2D images are very difficult to register unless each pair of images has a large overlapping area.

In contrast, Lin’s approach combines 3D-2D and 2D-2D matching. The 3D scene geometry is assumed to be given only at time A, as a reference 3D scene; hence no 3D range finder is necessary. At time B (query), a hand-held camera is used to take images of the same scene, and the reference 3D geometry is used in 3D-2D matching for camera pose estimation. This enables us to perform robust 3D-2D matching.
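
To make the role of 3D-2D matching more concrete, the following minimal sketch shows how, once a camera pose is available, points of the reference 3D scene can be projected into the query image with a pinhole camera model and compared with matched 2D features. It is not Lin’s implementation: the function names, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the sample values are assumptions chosen purely for illustration.

```python
import math


def project_point(point_3d, rotation, translation, fx, fy, cx, cy):
    """Project a 3D scene point into the image with a pinhole camera model.

    `rotation` is a 3x3 matrix given as a list of rows and `translation`
    a 3-vector; together they map scene coordinates to camera coordinates.
    """
    # Camera coordinates: X_c = R * X + t
    xc, yc, zc = (
        sum(rotation[i][j] * point_3d[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0:
        return None  # the point lies behind the camera and is not visible
    # Perspective division, then the intrinsic mapping to pixel coordinates
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def reprojection_error(points_3d, points_2d, rotation, translation, fx, fy, cx, cy):
    """Mean pixel distance between projected 3D points and matched 2D features."""
    errors = []
    for p3, p2 in zip(points_3d, points_2d):
        projected = project_point(p3, rotation, translation, fx, fy, cx, cy)
        if projected is not None:
            errors.append(math.dist(projected, p2))
    return sum(errors) / len(errors) if errors else float("inf")


# Hypothetical example: identity pose, camera looking along the +Z axis.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
scene = [(0.0, 0.0, 2.0), (1.0, 0.5, 4.0)]        # reference 3D points
features = [(320.0, 240.0), (445.0, 302.5)]       # matched 2D query features
print(reprojection_error(scene, features, R, t, 500.0, 500.0, 320.0, 240.0))
```

A small reprojection error suggests that the assumed pose is consistent with the 3D-2D correspondences. Estimating the pose itself is a separate step that this sketch does not cover.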

3.2. Model Overview

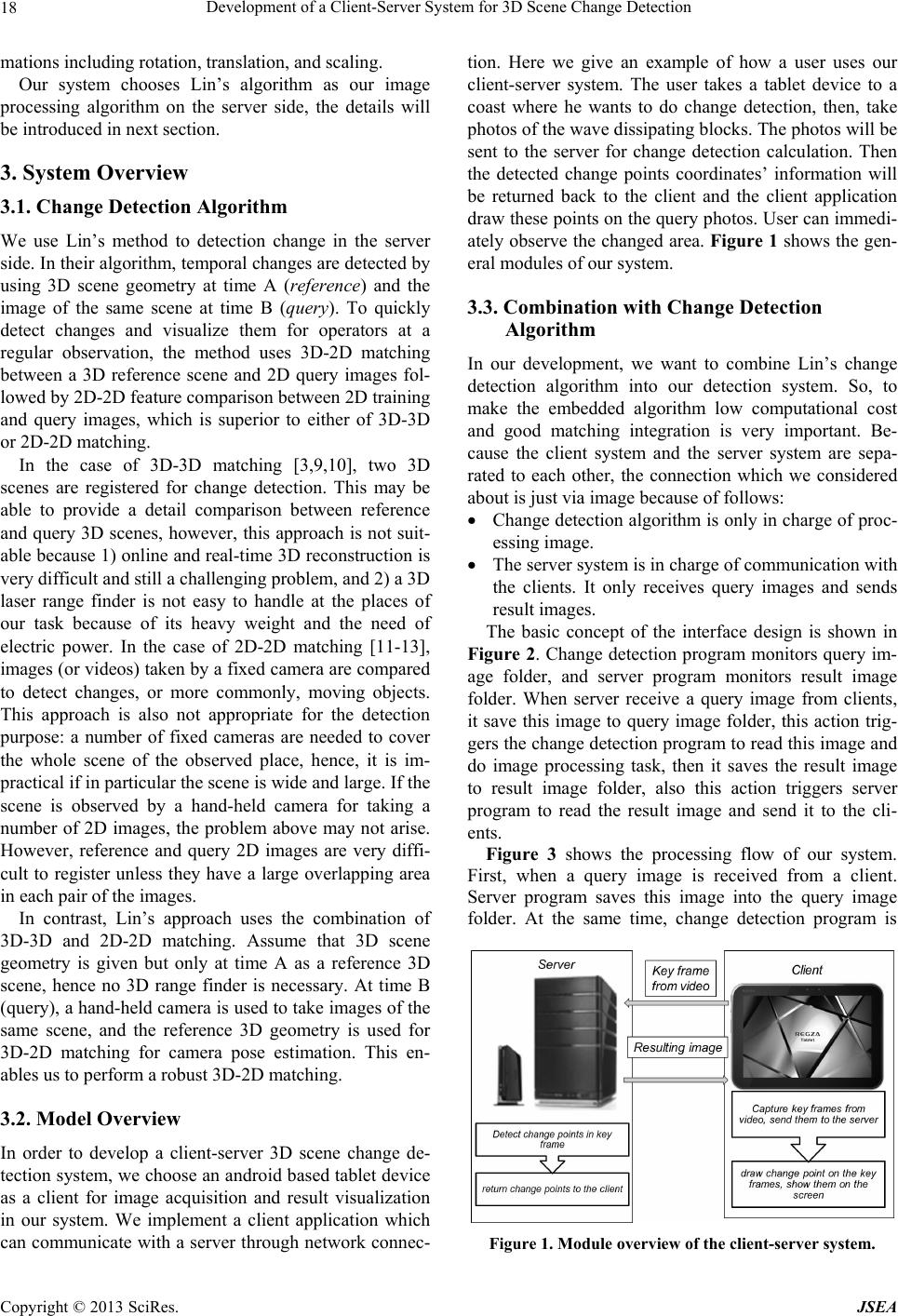

In order to develop a client-server 3D scene change detection system, we choose an Android-based tablet device as the client for image acquisition and result visualization. We implement a client application that communicates with a server through a network connection. Here we give an example of how a user uses our client-server system. The user takes a tablet device to a coast where change detection is to be performed and takes photos of the wave-dissipating blocks. The photos are sent to the server for change detection. The coordinates of the detected change points are then returned to the client, and the client application draws these points on the query photos, so the user can immediately observe the changed area. Figure 1 shows the general modules of our system.
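
The exchange described above can be sketched as a simple request and reply over a socket. The wire format used here (a 4-byte big-endian length prefix followed by the payload, with change points returned as JSON) is an assumption made for this illustration; the paper does not specify the protocol, and all function names are hypothetical.

```python
import json
import socket
import struct


def send_message(sock, payload: bytes) -> None:
    """Send one message: a 4-byte big-endian length, then the payload."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def recv_exact(sock, size: int) -> bytes:
    """Read exactly `size` bytes, or raise if the peer closes early."""
    chunks = []
    remaining = size
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed before the message was complete")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_message(sock) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return recv_exact(sock, length)


def encode_change_points(points) -> bytes:
    """Serialize (x, y) pixel coordinates of detected changes as JSON."""
    return json.dumps({"points": [[x, y] for x, y in points]}).encode("utf-8")


def decode_change_points(payload: bytes):
    """Inverse of encode_change_points; returns a list of (x, y) tuples."""
    return [tuple(p) for p in json.loads(payload.decode("utf-8"))["points"]]


# Loopback demonstration: a connected socket pair stands in for the network.
client, server = socket.socketpair()
send_message(client, b"\xff\xd8 placeholder photo bytes")   # client uploads a query photo
photo = recv_message(server)                                # server receives it
# Placeholder result; a real server would run change detection on `photo`.
send_message(server, encode_change_points([(120, 45), (300, 210)]))
print(decode_change_points(recv_message(client)))           # client would draw these points
client.close()
server.close()
```

The length prefix lets the receiver know where one photo or result ends, since a stream socket does not preserve message boundaries.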

3.3. Combination with Change Detection Algorithm

In our development, we want to integrate Lin’s change detection algorithm into our detection system. It is therefore very important that the embedded algorithm has a low computational cost and integrates well with the rest of the system. Because the client system and the server system are separated from each other, the only connection we consider between them is via images, for the following reasons:

The change detection algorithm is only in charge of processing images.

The server system is in charge of communication with the clients. It only receives query images and sends result images.

The basic concept of the interface design is shown in Figure 2. The change detection program monitors the query image folder, and the server program monitors the result image folder. When the server receives a query image from a client, it saves the image to the query image folder. This action triggers the change detection program to read the image and perform the image processing task, after which it saves the result image to the result image folder. This in turn triggers the server program to read the result image and send it to the client.
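
The folder-based hand-off between the two programs can be sketched with simple polling. In this minimal sketch the folder names, the `detect_changes` placeholder and the polling approach itself are assumptions; the paper does not state how the folders are monitored, and the placeholder merely copies the image instead of running Lin’s algorithm.

```python
import shutil
import tempfile
from pathlib import Path


def new_files(folder: Path, seen: set) -> list:
    """Return files in `folder` that were not reported before, and remember them."""
    fresh = sorted(p for p in folder.iterdir() if p.is_file() and p.name not in seen)
    seen.update(p.name for p in fresh)
    return fresh


def detect_changes(query_image: Path, result_dir: Path) -> Path:
    """Placeholder for the change detection step: copies the image unchanged."""
    result = result_dir / f"result_{query_image.name}"
    shutil.copyfile(query_image, result)
    return result


def run_detector_once(query_dir: Path, result_dir: Path, seen_queries: set) -> None:
    """One polling pass of the (hypothetical) change detection program."""
    for image in new_files(query_dir, seen_queries):
        detect_changes(image, result_dir)


def run_server_once(result_dir: Path, seen_results: set, send) -> None:
    """One polling pass of the (hypothetical) server program."""
    for result in new_files(result_dir, seen_results):
        send(result.read_bytes())


# Demonstration in a temporary directory.
with tempfile.TemporaryDirectory() as root:
    query_dir, result_dir = Path(root, "query"), Path(root, "result")
    query_dir.mkdir()
    result_dir.mkdir()
    seen_queries, seen_results, sent = set(), set(), []

    (query_dir / "photo1.jpg").write_bytes(b"placeholder image bytes")  # server saves a query
    run_detector_once(query_dir, result_dir, seen_queries)              # detector reacts
    run_server_once(result_dir, seen_results, sent.append)              # server reacts
    print(len(sent), "result(s) ready to send to the client")
```

With this design the two programs share only the folders, so either side can be replaced independently. A practical version would also need to avoid reading a file that is still being written, for example by writing under a temporary name and renaming it when complete.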

Figure 3 shows the processing flow of our system. First, when a query image is received from a client, the server program saves this image into the query image folder. At the same time, the change detection program is

Figure 1. Module overview of the client-server system.

Copyright © 2013 SciRes. JSEA