Privacy Preserving Two-Party Hierarchical Clustering Over Vertically Partitioned Dataset

28

Homomorphic Encryption: Encryption method mainly

resolves the problems that people jointly conduct mining

tasks based on the private inputs they provide. The en-

cryption method can ensure that the transformed data is

exact and secure.

Homomorphic encryption schemes allow certain

computations on encrypted values. In particular, an en-

cryption scheme is additively homomorphic if there is

some operation ⊗ on encryptions such that for all clear-

text values a and b, E(a) ⊗ E(b) = E(a + b).

Let (G, E, D, M) be a homomorphic encryption

scheme, where G is an algorithm for generating keys, E

and D are the encryption and decryption functions, and

M is the message space. It has the following properties:

• (G, E, D) is semantically secure

• E(a1) × E(a2) = E(a1 + a2), for a1, a2 ∈ M.

• E(a1)α = E(α · a1), for a1 ∈ M and α ∈N.[4,7,12]

Secure Scalar Product Protocol: In this scheme the

scalar product or dot product of two vectors is computed

securely. It means that the actual values of the vectors are

not revealed only the result is revealed.

Let a vector X = (x1,...,xn), held by Alice, and Y =

(y1,..., yn), held by Bob. They need to securely compute

the scalar product X ·Y =∑i=1-n (xi*yi). So, at the end of

the protocol Alice knows only X.Y not Y and so for Bob.

[3,7,11]

Secure Add and Compare: It is based on the homo-

morphic encryption. It builds a circuit that has two inputs

from each party, sums the first input of both parties and

the second input of both parties, and returns the result of

comparing the two sums. It is a semantically secure ap-

proach.

Let two parties, P1 and P2, such that P1 has numbers a1

and b1, and P2 has numbers a2 and b2, then this scheme

securely find if a1 + a2 < b1 + b2 without revealing the

following two pieces of information to the other party: (1)

the numbers possessed by each party; and (2) the differ-

ence between a1 + a2 and b1 + b2. [7]

3. Privacy Preserving Clustering Algorithm

3.1. Privacy Preserving Clustering Algorithm

Assume that a data set D consists of n instances with m

attributes and it is vertically partitioned between Alice

and Bob. So, Alice contains her own database with first

m1 attributes and Bob contains his own database with last

m2 attributes, m1+m2=m.

Alice and Bob want to find the final k-clusters over the

total partitioned data but trying to protect their own pri-

vacy. So, both parties learn final k clusters and nothing

else.

In this paper a novel approach of hierarchical cluster-

ing is given for vertically partitioned data set which con-

siders the privacy factor also. The assumptions made

during the process are:

1. Both parties contain the same number of data re-

cords.

2. Alice contains some attributes for all records, Bob

contains the other attributes.

3.2. Clustering on Vertically Partitioned Data Set

This algorithm runs in a divide, conquer and merge

strategy. So in the first step each party will compute k

number of clusters on their own data set. In the next

stage both parties will compute the distance between

each record and each of the k cluster centers. So, n × k

matrix will be computed by each party. Up to this stage

there will be no privacy issue as all the computations are

done by the parties on their private databases. Then in the

third stage both parties send their distance matrices to the

other party. Along with the matrix the k cluster centers

are also sent to the other party in the randomized form.

Next each party computes the all possible combinations

of cluster centers from the total 2k clusters. So, finally k2

cluster centers will be formed. Now each party will have

information about these k2 clusters and they will compute

the distance between each point and the k2 cluster centers.

So, minimum closest cluster will be chosen for n data

points and finally they will be merged into k-clusters.

This merging is done by choosing a best pair of clusters

Ci and Cj and then clusters are replaced by (Ci U Cj). A

best pair of clusters is one with least error. The error be-

tween the clusters can be computed by using the follow-

ing formula.

Let C1 and C2 be two clusters being considered for a

merge and C.weight denote the number of objects in

cluster C and dist2(C1, C2) is the distance between the

clusters C1 and C2 [6], then

Error (C1 U C2) = C1.weight × C2.weight × dist2(C1, C2)

(1)

3.3. Secure Hierarchical Clustering on Vertically

Partitioned Data Set

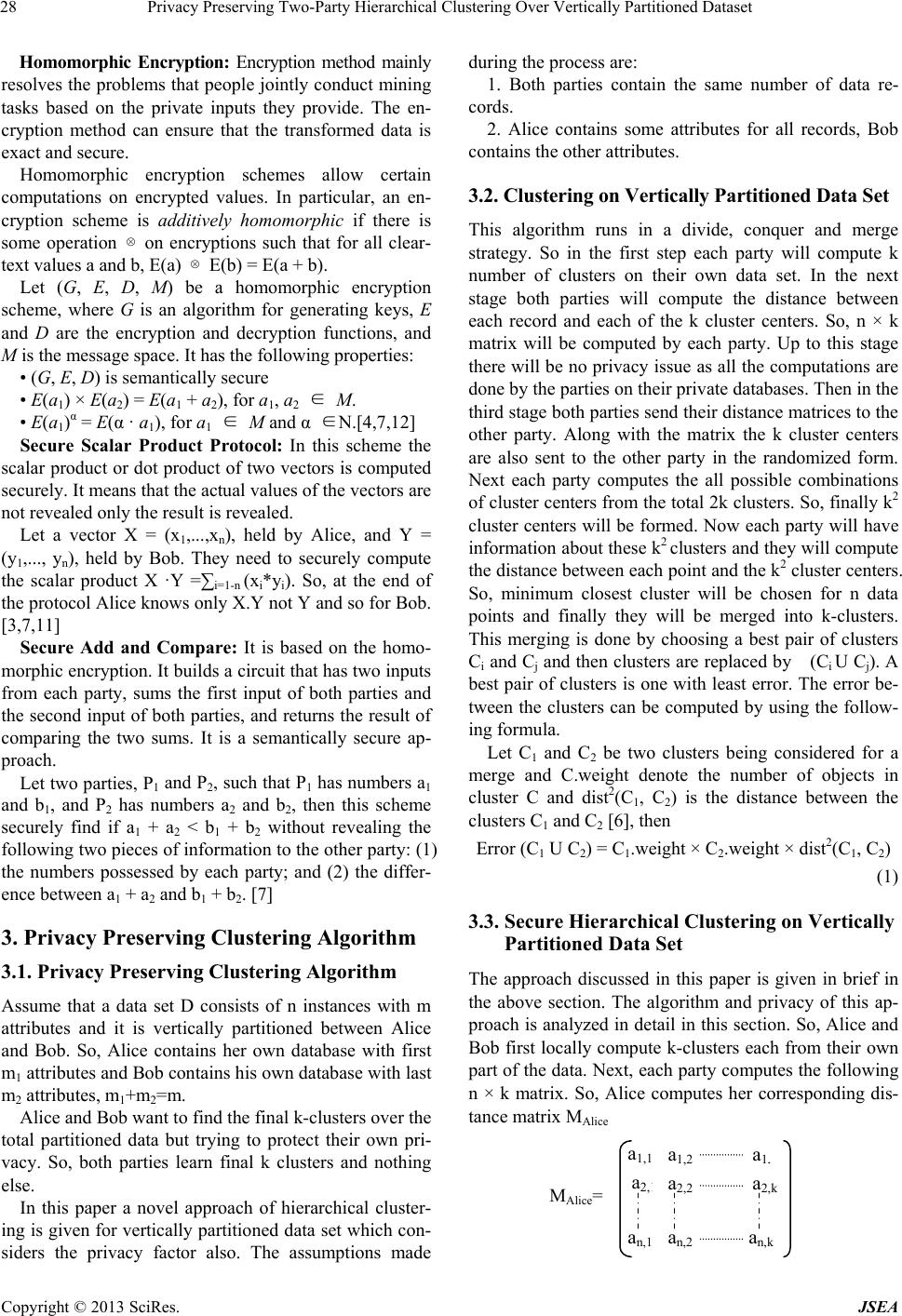

The approach discussed in this paper is given in brief in

the above section. The algorithm and privacy of this ap-

proach is analyzed in detail in this section. So, Alice and

Bob first locally compute k-clusters each from their own

part of the data. Next, each party computes the following

n × k matrix. So, Alice computes her corresponding dis-

tance matrix MAlice

MAlice=

a2,1 a2

2

a1

1 a1

2 a1,

an,1 an,2 an,

a2

Copyright © 2013 SciRes. JSEA