Vol.3, No.1, 85-90 (2011) Natural Science http://dx.doi.org/10.4236/ns.2011.31012 Copyright © 2011 SciRes. OPEN ACCESS A hybrid conjugate gradient method for optimization problems* Xiangrong Li, Xupei Zhao Department of Mathematics and Information Science, Guangxi University, Nanning, Guangxi, China; xrli68@163.com Received 14 October 2010; revised 18 November 2010; accepted 22 November 2010. ABSTRACT A hybrid method of the Polak-Ribière-Polyak (PRP) method and the Wei-Yao-Liu (WYL) method is proposed for unconstrained optimization pro- blems, which possesses the following proper- ties: i) This method inherits an important prop- erty of the well known PRP method: the ten- dency to turn towards the steepest descent di- rection if a small step is generated away from the solution, preventing a sequence of tiny steps from happening; ii) The scalar 0 k β holds automatically; iii) The global convergence with some line search rule is established for nonconvex functions. Numerical results show that the method is effective for the test prob- lems. Keywords: Line Search; Unconstrained Optimization; Conjugate Gradient Method; Global Convergence 1. INTRODUCTION We are interested to consider the unconstrained opti- mization problem min , n x x (1.1) where :n f is continuously differentiable. It is well known that there are many methods for solving optimization problems (see [24,26,28-32,34] etc.), where the conjugate gradient(CG) method is a powerful line search method because of its simplicity and its very low memory requirement, especially for the large scale opti- mization problems [22,23,27], which can avoid, like steepest descent method, the computation and storage of some matrices associated with the Hessian of objective functions. The following iterative formula is often used by the nonlinear CG method 1,0,1,2, kkkk xx dk (1.2) for (1.1), where k is the current iterate point, 0 k is a steplength, and k d is the search direction designed by 1, if k1, , if k= 0 kkk k k gd dg (1.3) where k is a scalar which determines the differ- ent conjugate gradient methods [4,5,8,9,12,13,15,16,18, 20,21,25,33] etc., and k is the gradient of () x at the point k . The well-known formula for k from the computation point of view is the following PRP method 11 2, T kk k PRP k k gg g g (1.4) where k and 1k are the gradients k xand 1k fx of ()fx at the point k and 1k , respec- tively, and denotes the Euclidian norm of vectors. Throughout this paper, we also denote k x by k f. Polak and Ribèire [18] proved that this method with the exact line search is globally convergent when the objec- tive function is convex. Powell [19] gave a counter ex- ample to show that there exist nonconvex functions on which the PRP method does not converge globally even the exact line search is used. He suggested that k should not be less than zero. Considering this suggestion, Gilbert and Nocedal [10] proved that the modified PRP method max 0, RP kk is globally convergent with the weak Wolfe-Powell (WWP ) line search tech- nique and the assumption of sufficient descent condition. However, the global convergence of the PRP method is still open under the WWP line search rule. Recently, Wei, Yao, and Liu(WYL) [21] propose a new conjugate gradient formula 1 11 2 k T kk k k WYL k k g gg g g (1.5) It is not difficult to deduce that *This work is supported by China NSF grands 10761001 and Guangxi SF grands 0991028.  X. R. Li et al. / Natural Science 3 (2011) 85-90 Copyright © 2011 SciRes. OPEN ACCESS 86 2 11 11 11 22 0 k Tk kkk kk k kk WYL k kk gg gg g gg gg gg is true. The numerical results show that this method is competitive to the PRP method for the test problems of [17]. Under the sufficient descent condition, this method is globally convergent with the WWP line search. These observations make us know that the sufficient descent condition 2,0 T kk k gdcg c is a constant holds for all 0k (1.6) is very important to ensure the global convergence [1,2,10,14], and the scalar 0 k also plays a very important role [10,19]. This motivates us to propose a hybrid method combining the PRP method and the WYL method. The hybrid method will possess some better properties of the PRP method and the WYL method: (i) the tendency to turn towards the steepest descent direction if a small step is generated away from the solution, preventing a sequence of tiny steps from happening; (ii) The scalar 0 k holds automatically. The global convergence with the WWP line search of the presented method is established for nonconvex ob- jective function. Numerical results show that this given method is competitive to the PRP method and the WYL method. This paper is organized as follows. In the next section, the algorithm is stated. The global convergence is proved in Section 3, and the numerical results are re- ported in Section 4. The last section gives one conclu- sion. 2. ALGORITHM Now we describe the given algorithm as follows. Here we call it Algorithm 1. Algorithm 1 (The hybrid algorithm of the PRP method and the WYL method) Step 0: Choose an initial point 0,0,1. n x Set 00 0 , :0.dg fxk Step 1: If , k g then stop; Otherwise go to the next step. Step 2: Compute step size k by some line search rules. Step 3: Let 1. kkkk xd If 1, k g then stop. Step 4: Calculate the search direction 11 , PW kkkk dg d (2.1) where max , . P WPRPWYL kkk Step 5: Set :1kk , and go to Step 2. Remark i) If 1, kk x we have 1kk g and 1kk g which imply that 0, PRP k and 0, WYL k which means that 0 PW k if a small step is gener- ated for all 0k. Thus the given method inherits the better property of the PRP method: the directions will turn out to be the steepest descent directions if the tiny steps from happening. ii) By the definition of the new formula , W k we have 21 11 2 max , 0 P WPRPWYLWYL kkkk k kk k k k g gg g g 3. THE GLOBAL CONVERGENCE The following assumptions are often needed to prove the convergence of the nonlinear conjugate gradient methods (see [5,9,10,20,21] etc.). Assumption 3.1 i) The function x has a lower bound on the level set 0 n fx fx , where 0 is a given point and is bounded. ii) In an open convex set 0 that contains , J is differentiable and its gradient g is Lipschitz continuous, namely, there exists a constants 0L such that 0 , , .gxgyLx yxy (3.1) 3.1. The global Convergence with the Weak Wolfe-Powell Line Search The weak Wolfe-Powell (WWP) search rule is to find a step length k such that T kkk kkkk xdfgd (3.2) and , TT kkkk k xddg (3.3) where 0,12, ,1. This line search tech- nique is often used to study the convergence of conju- gate gradient algorithms [6,27,34]. At present, the global convergence of the PRP method with the WWP line search is still open. Lemma 3.1 Suppose that Assumption 3.1 holds. Let the sequence k and k d be generated by Algo- rithm 1, 0, T kk gd and the stepsize k be determined  X. R. Li et al. / Natural Science 3 (2011) 85-90 Copyright © 2011 SciRes. OPEN ACCESS 8787 by the WWP line search (3.2) and (3.3) Then the zoutendijk condition [34] 2 2 0 T kk kk gd d (3.4) holds. Proof. By (3.3) and Assumption 3.1 ii), we have 2 1 1, T T kkkkkk k gdgg dLd this means that 2 1, T kkkk gd Ld which to- gether with 0, T kk gd and (3.2) implies that 2 1 2 1 , T kk kk k gd f Ld summing up this inequality from 0k to , and us- ing Assumption 3.1 i), we can obtain this lemma. This completes the proof. We will prove the global convergence of Algorithm 1 by contradiction. Then we assume that there exists a positive constant 0 such that , 0. k gk (3.5) Using (3.5) deduces a contradiction to obtain our con- clusion. Similar to Lemma 3.3.1 in [6], based on Assumption 3.1, Lemma 3.1, the fact 0, PW k and (3.5), we can get the following lemma. Lemma 3.2 Let Assumption 3.1 hold and the se- quences k and k d be generated by Algorithm 1. The sufficient descent condition (1.6) holds, and the stepsize k is determined by (3.2) and (3 .3). Suppose that the inequalities (3.5) is true. Then we have 0 k d and 2 1 0 , kk k uu where k k k d ud . Proof. These two inequalities (1.6) and (3.5) imply that 0 k d is true, for otherwise 0, k g then kkk udd is reasonable. Denote 1 1 11 , k PW k kkk kk d g rdd By (2.1), for 0k, we have 11 , kk kk ur u this combining with 11 kk uu shows that 11 1kkkkkkk ru uuu (3.6) The inequality 0 PW k implies that0 k is true, then it follows that from (3.6) and triangular inequality 1 1 11 1 11 2. kk kk kk kkkkkk k uu uu uuuu r (3.7) By (1.6) and (3.4), we get 4 22 1 11 2 01 1 k kk kk k grg d Which together with (3.5), we obtain 2 1 0 k k r By the above inequality and (3.7), we get this lemma. The proof is complete. The following property (*) was introduced by Gilbert and Nocedal [10], which pertains to the k under the sufficient descent condition. The WYL formula also has this property. Now we show that this property (*) per- tains to our method. Property (*). Suppose that 12 0. k rg r (3.8) We say that the method has Property (*), if for all k, there exists constants 1b and 0 such that kb and 1 2 kk sb Lemma 3.3 Let Assumption 3.1 hold and the se- quences k and k d be generated by Algorithm 1. Then the new formula W k possesses property (*). Proof. The result of this lemma is proved by the fol- lowing two cases. Case i: we consider RP k By (3.1), we have 1 11 22 .. T kk kk k PRP k kk Lg s gg g gg (3.9) From Assumption 3.1 i), then there exists a constant 10M such that 1. k M (3.10) Let 2 21 1 max 2,1bLrrM and 2 12 2bL , it follows that PRP kb and  X. R. Li et al. / Natural Science 3 (2011) 85-90 Copyright © 2011 SciRes. OPEN ACCESS 88 122 22 2 11 .22 1. 2 kk PRP kk k Lg sLL sb g Then the PRP formula RP k has this property (*). Case ii: let us consider WYL k . Denote 1kk Yg 1, kkk gg by (3.1), we get 1 1 1 1 11 11 2, k kkk k k kkk k k kk kk kkkk k g Yg g g g gg g g gg gg gggg Ls (3.11) By (1.5), (3.11), (3.10) and (3.8) we have 12 1 222 1 .2 , T kk k WYLkk k kk YLs gY gg (3.12) let 2 21 1 max2, 2bLM and 2 12 22 ,bL it follows that (3.12) and the definition of b and that 1b 22 24 11 22 1 , and 2 WYL WYL kk k LL bs b Thus, the formula WYL k also has the property (*). Using the definition of the max , WWYL PRP kkk , we conclude that the formula W k possesses the property (*). The proof is complete. By Lemma 3.3, similar to Lemma 3.3.2 in [6], it is not difficult to prove the following result. Here we only state it as follows, but omit the proof. Lemma 3.4 (Lemma 3.3.2 in [6]) Let the sequences k and k d be generated by Algorithm 1 and the conditions in Lemma 3.3 hold. If 0 PW k and has property (*), then there exists a constant 0 such that, for any N and any index 0 k there is an in- dex 0 kk satisfying ,, 2 k k where ,:1,, ki kiNkik s N de- notes the set of positive integers, and ,k k denotes the numbers of elements in ,k k . Finally, by Lemma 3.2 and Lemma 3.4, we present the global convergence theorem of Algorithm 1 with the WWP line search. Similar to Theorem 3.3.3 in [6], it is not difficult to prove the result, here we also give the process of the proof. Theorem 3.1 Let the sequence , kk d be gener- ated by Algorithm 1 with the weak Wolfe-Powell line search and the conditions in Lemma 3.3 hold. Then lim inf0 kk g . Proof. We will get this theorem by contradiction. Suppose that (3.5) is true, then the conditions in Lemma 3.2 and 3.3 hold. By Assumption 3.1 i), then there exists a constant 00 such that 0,xx (3.13) We also denote , iii udd then for all integers ,lk lk, we have 11 111 1 . l lki i ik ll iki k ik ik xx su suu u Taking the norm in both sides of the above equality, and using (3.13) we get 10 111 2 ll iiik ik ik ssuu Let 0 8 be the smallest integer where does not less than 0 8 . By Lemma 3.2, there exists an index 0 k such that 2 1 1 4 ii ik uu (3.14) On the other hand, by Lemma 3.3, there exists 0 kk satisfying ,2 k k (3.15) For all ,1ikk , by Cauchy-Schwarz inequal- ity and (3.14), we obtain 1 11 1 1 112 2 21 1 12 2 11 . 42 i ik jj jk i jj jk uu uu ikuu By the above inequality, (3.15) and (3.13), we have 1 01, 1 2, 224 k ik ik sk Thus 0 8, this contradicts with the definition of . Therefore, the conclusion of this theorem is right. This completes the proof. 4. NUMERICAL RESULTS In this section, we report some numerical experiments.  X. R. Li et al. / Natural Science 3 (2011) 85-90 Copyright © 2011 SciRes. OPEN ACCESS 8989 The unconstrained optimization problems with the given initial points can be found at: www.ici.ro/camo/neculai/SCALCG/testuo.pdf, which were collected by Neculai Andrei. Since this new method is the hybrid method of the PRP method and the WYL method, we test Algorithm 1 with the WWP line search and compare its performance with those of the WYL [21] and the PRP [18] methods. The stop criteri- ons are given below: we stop the program if the inequal- ity k gx is satisfied or the inequality 1 kk gxf x is satisfied, where 1.0 5.D All the codes were written in Fortran and run on PC with 2.60 GHz CPU processor and 256 MB memory and Windows XP op- eration system. In the experiments, the parameters were chosen as 1.0 2, 1.0 1DD . The dimension of the test problems is from 500 to 5000. The detailed numerical results are listed on the web site http://210.36.18.9:8018/publication.asp?id=35392. In Figure 1, “WYL”, “PRP”, and “MPRP-WYL” stand for the WYL method, the PRP method, and the new method, respectively. Figure 1 shows the performance of these methods relative to the iterative number of the function and gra- dient(NFN), which were evaluated using the profiles of Dolan and Moré [7]. It is easy to see that the MPRP-WYL is predominant among these three methods and the new method can solve about 99% of the test problems successfully. The PRP method is better than the WY L method for 11.2t and the WYL method is better than the PRP method for 1.26t. Moreover, the PRP method solves about 98% of the test problems and the WYL method solve about 99% of the test prob- lems successfully, respectively. In a word, the given Figure 1. Performance profiles of conjugate gradient me- thods in Table 1 (NFN). method is competitive to the other two methods and the hybrid formula is notable. 5. CONCLUSION This paper gives a hybrid conjugate gradient method for solving unconstrained optimization. The global con- vergence for nonconvex functions with the WWP line search is established. The numerical results show that the given method is competitive to the other standard conjugate gradient methods for the test problems. For further research, we should study the convergence of the new algorithm under other line search rules. Moreover, more numerical experiments and testing en- vironments (such that [3]) for large practical problems should be done in the future. REFERENCES [1] Ahmed, T. and Storey, D. (1990) Efficient hybrid conju- gate gradient techniques. Journal of Optimization Theory and Applications, 64, 379-394. doi:10.1007/BF00939455 [2] Al-Baali, A. (1985) Descent property and global conver- gence of the Flecher-Reeves method with inexact line search. IMA Journal of Numerical Analysis, 5, 121-124. doi:10.1093/imanum/5.1.121 [3] Bongartz, K.E., Conn, A.R., Gould, N.I.M. and Toint, P.L. (1995) CUTE: Constrained and unconstrained test- ing environments. ACM Transactions on Mathematical Software, 21, 123-160. doi:10.1145/200979.201043 [4] Dai, Y. (2002) A nonmonotone conjugate gradient algo- rithm for unconstrained optimization. Journal of Systems Science and Complexity, 15, 139-145. [5] Dai, Y. and Yuan, Y. (2000) A nonlinear conjugate gra- dient with a strong global convergence properties. SIAM Journal on Optimization, 10, 177-182. doi:10.1137/S1052623497318992 [6] Dai, Y. and Yuan, Y. (1998) Nonlinear conjugate gradi- ent methods. Shanghai Scientific and Technical Publish- ers, Shanghai. [7] Dolan, E.D. and Moré, J.J. (2002) Benchmarking opti- mization software with performance profiles. Mathe- matical Programming, 91, 201-213. doi:10.1007/s101070100263 [8] Fletcher, R. (1997) Practical Method of Optimization, Vol I: Unconstrained Optimization. 2nd Edition, Wiley, New York. [9] Fletcher, R. and Reeves, C. (1964) Function minimiza- tion bu conjugate gradients. Computer Journal, 7, 149-154. doi:10.1093/comjnl/7.2.149 [10] Gibert, J.C. and Nocedal, J. (1992) Global convergence properties of conugate gradient methods for optimization. SIAM Journal on Optimization, 2, 21-42. doi:10.1137/0802003 [11] Grippo, L. and Lucidi, S. (1997) A globally convergent version of the Polak-Ribière gradient method. Mathe-  X. R. Li et al. / Natural Science 3 (2011) 85-90 Copyright © 2011 SciRes. OPEN ACCESS 90 matical Programming, 78, 375-391. doi:10.1007/BF02614362 [12] Hager, W.W. and Zhang, H.C. (2005) A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM Journal on Optimization, 16, 170-192. doi:10.1137/030601880 [13] Hestenes, M.R. and Stiefel, E. (1952) Method of conju- gate gradient for solving linear equations. Journal of Re- search National Bureau of Standards, 49, 409-436. [14] Hu, Y.F. and Storey, C. (1991) Global convergence re- sult for conjugate method. Journal of Optimization The- ory and Applications, 71, 399-405. doi:10.1007/BF00939927 [15] Li, G., Tang, C. and Wei, Z. (2007) New conjugacy con- dition and related new conjugate gradient methods for unconstrained optimization. Journal of Computational and Applied Mathematics, 2, 523-539. doi:10.1016/j.cam.2006.03.005 [16] Liu, Y. and Storey, C. (1992) Effcient generalized con- jugate gradient algorithms, part 1: theory. Journal of Op- timization Theory and Applications, 69, 17-41. [17] Moré, J.J., Garbow, B.S. and Hillstrome, K.E. (1981) Testing unconstrained optimization software. ACM Transactions on Mathematical Software, 7, 17-41. doi:10.1145/355934.355936 [18] Polak, E. and Ribiere, G. (1969) Note sur la xonvergence de directions conjugees. Rev. Francaise informat Re- cherche Operatinelle, 3e Annee, 16, 35-43. [19] Powell, M.J.D. (1984) Nonconvex minimization calcula- tions and the conjugate gradient method. Lecture Notes in Mathematics, 1066, 122-141. [20] Wei, Z., Li, G. and Qi, L. (2006) New nonlinear conju- gate gradient formulas for large-scale unconstrained op- timization problems. Applied Mathematics and Compu- tation, 179, 407-430. doi:10.1016/j.amc.2005.11.150 [21] Wei, Z., Yao, S. and Liu, L. (2006) The convergence properties of some new conjugate gradient methods. Ap- plied Mathematics and Computation, 183, 1341-1350. doi:10.1016/j.amc.2006.05.150 [22] Yu, G.H. (2007) Nonlinear self-scaling conjugate gradi- ent methods for large-scale optimization problems. The- sis of Doctor’s Degree, Sun Yat-Sen University. [23] Yuan, G.L. (2009) Modified nonlinear conjugate gradient methods with sufficient descent property for large-scale optimization problems. Optimization Letters, 3, 11-21. doi:10.1007/s11590-008-0086-5 [24] Yuan, G.L. and Lu, X.W. (2008) A new line search method with trust region for unconstrained optimization. Communications on Applied Nonlinear Analysis, 15, 35-49. [25] Yuan, G.L. and Lu, X.W. (2009) A modified PRP con- jugate gradient method. Annals of Operations Research, 166, 73-90. doi:10.1007/s10479-008-0420-4 [26] Yuan, G.L., Lu, X.W. and Wei, Z.X. (2007) New two-point stepsize gradient methods for solving uncon- strained optimization problems. Natural Science Journal of Xiangtan University, 29, 13-15. [27] Yuan, Y. and Sun, W. (1999) Theory and Methods of Optimization. Science Press of China, Beijing. [28] Yuan, G.L. and Wei, Z.X. (2009) New line search meth- ods for unconstrained optimization. Journal of the Ko- rean Statistical Society, 38, 29-39. doi:10.1016/j.jkss.2008.05.004 [29] Yuan, G.L. and Wei, Z.X. (2008) The superlinear con- vergence analysis of a nonmonotone BFGS algorithm on convex objective functions. Acta Mathematica Sinica, 24, 35-42. doi:10.1007/s10114-007-1012-y [30] Yuan, G.L. and Wei, Z.X. (2010) Convergence analysis of a modified BFGS method on convex minimizations. Computational Optimization and Applications, 47, 237-255. doi:10.1007/s10589-008-9219-0 [31] Yuan, G.L. and Wei, Z.X. (2009) A Rank-One fitting method for unconstrained optimization problems. Mathe- matica Applicata, 22, 118-122. [32] Zhang, H.C. and Hager, W.W. (2004) A nonmonotone line search technique and its application to unconstrained optimization. SIAM Journal on Optimization, 14, 1043-1056. doi:10.1137/S1052623403428208 [33] Zhang, L., Zhou, W. and Li, D. (2006) A descent modi- fied Polak-Ribiére-Polyak conjugate method and its global convergence. IMA Journal on Numerical Analysis, 26, 629-649. doi:10.1093/imanum/drl016 [34] Zoutendijk, G. (1970) Nonlinear programming computa- tional methods. In: Abadie, J. Ed., Integer and Nonlinear Programming, NorthHolllad, Amsterdam, 37-86.

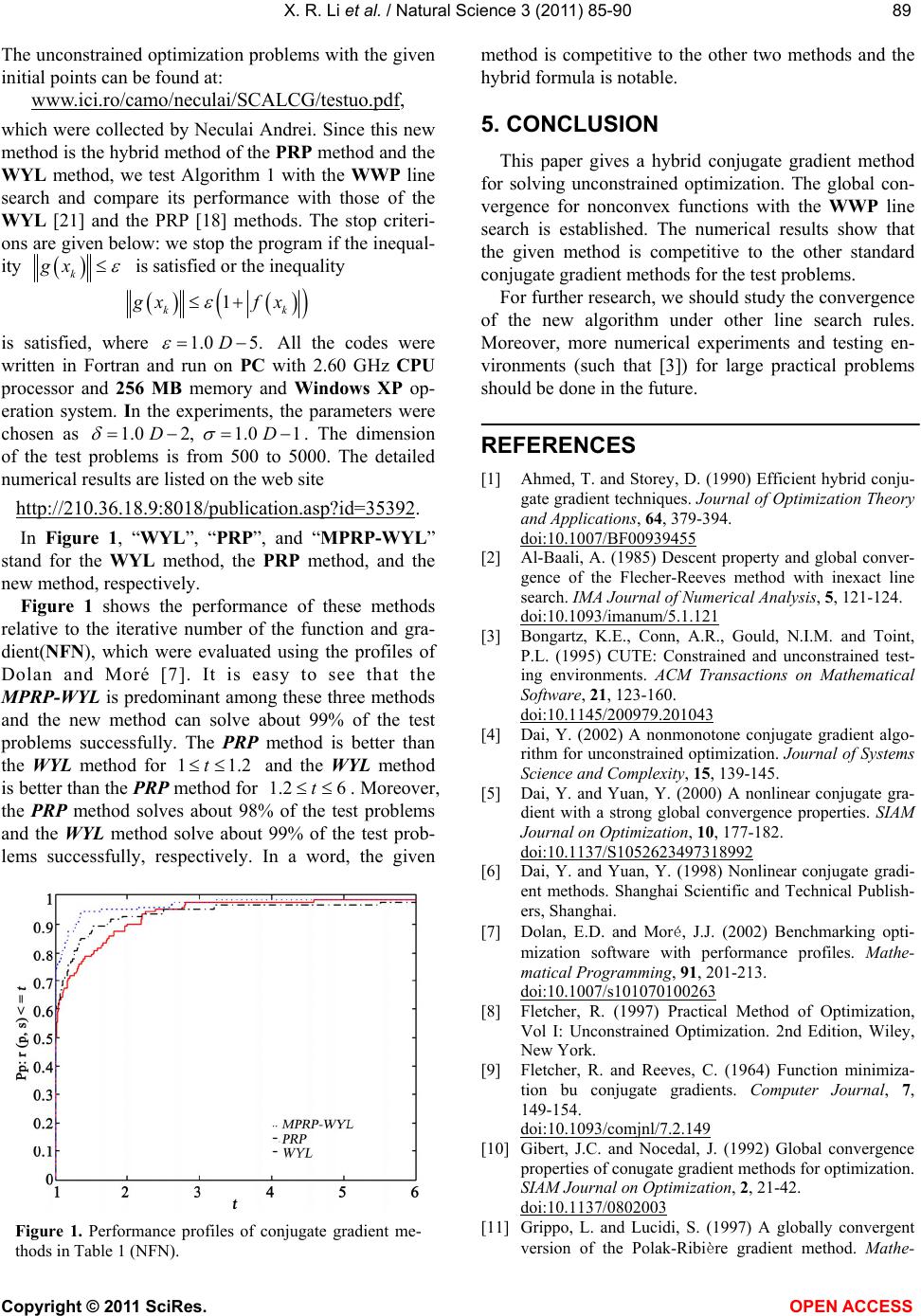

|