
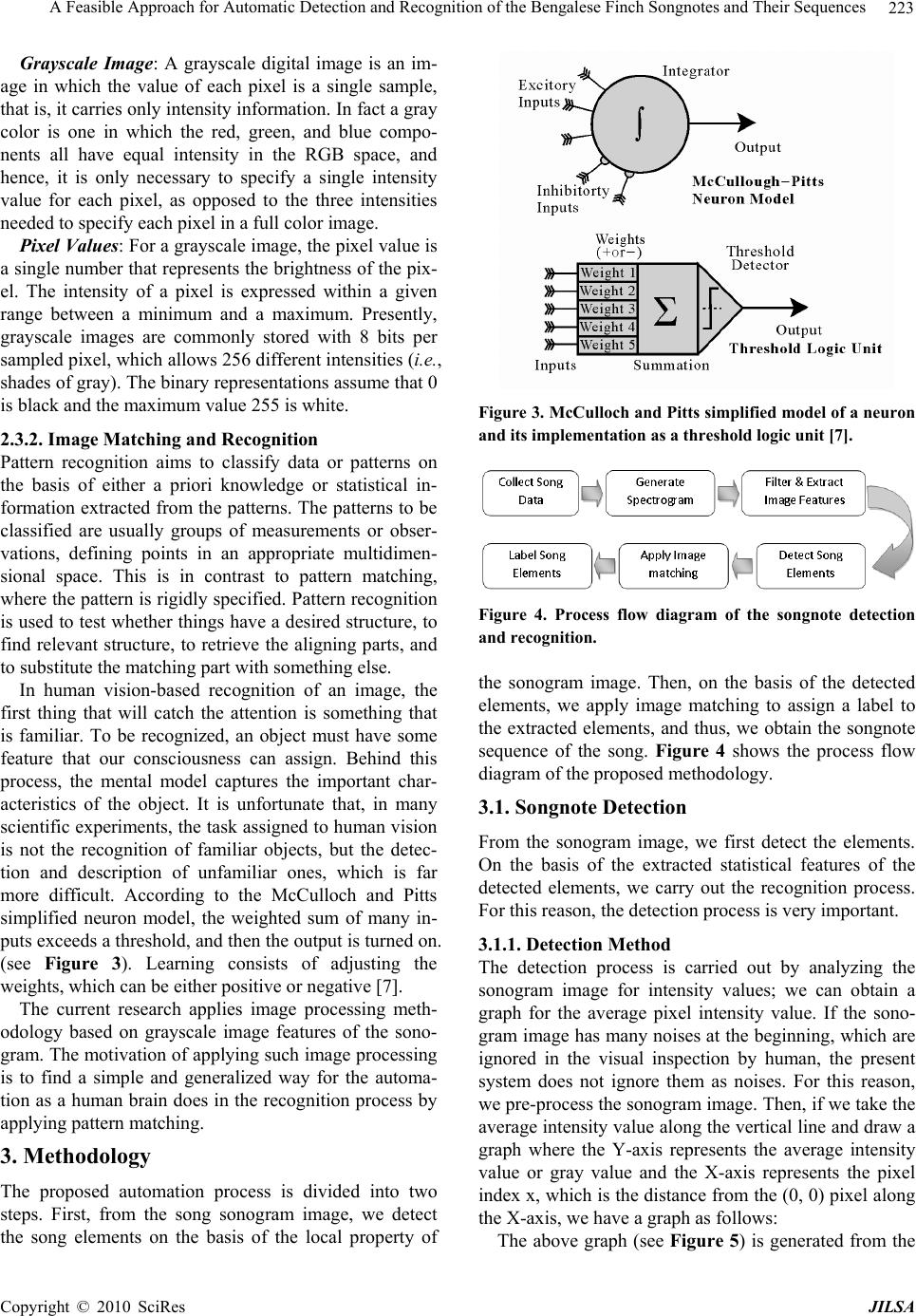

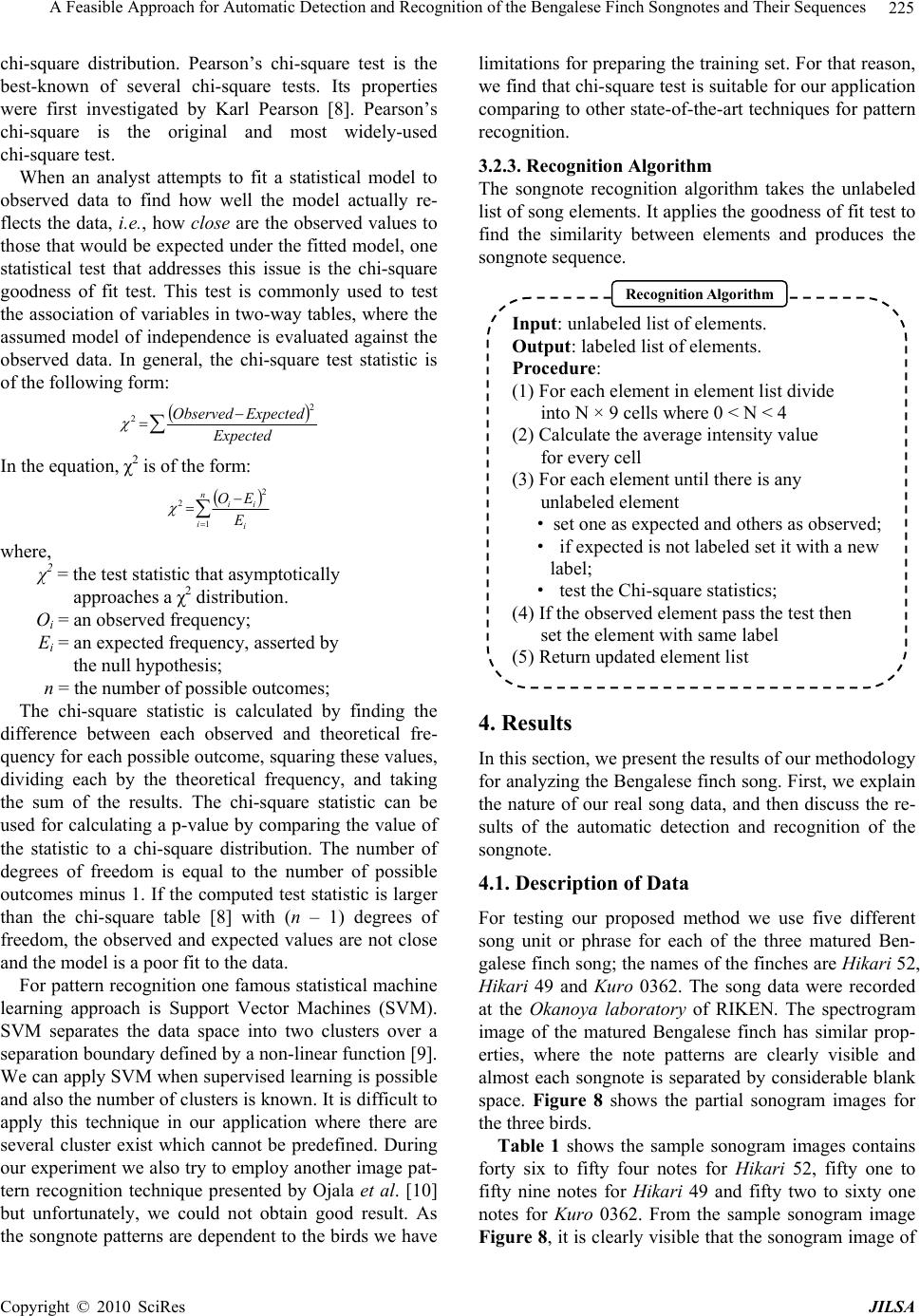

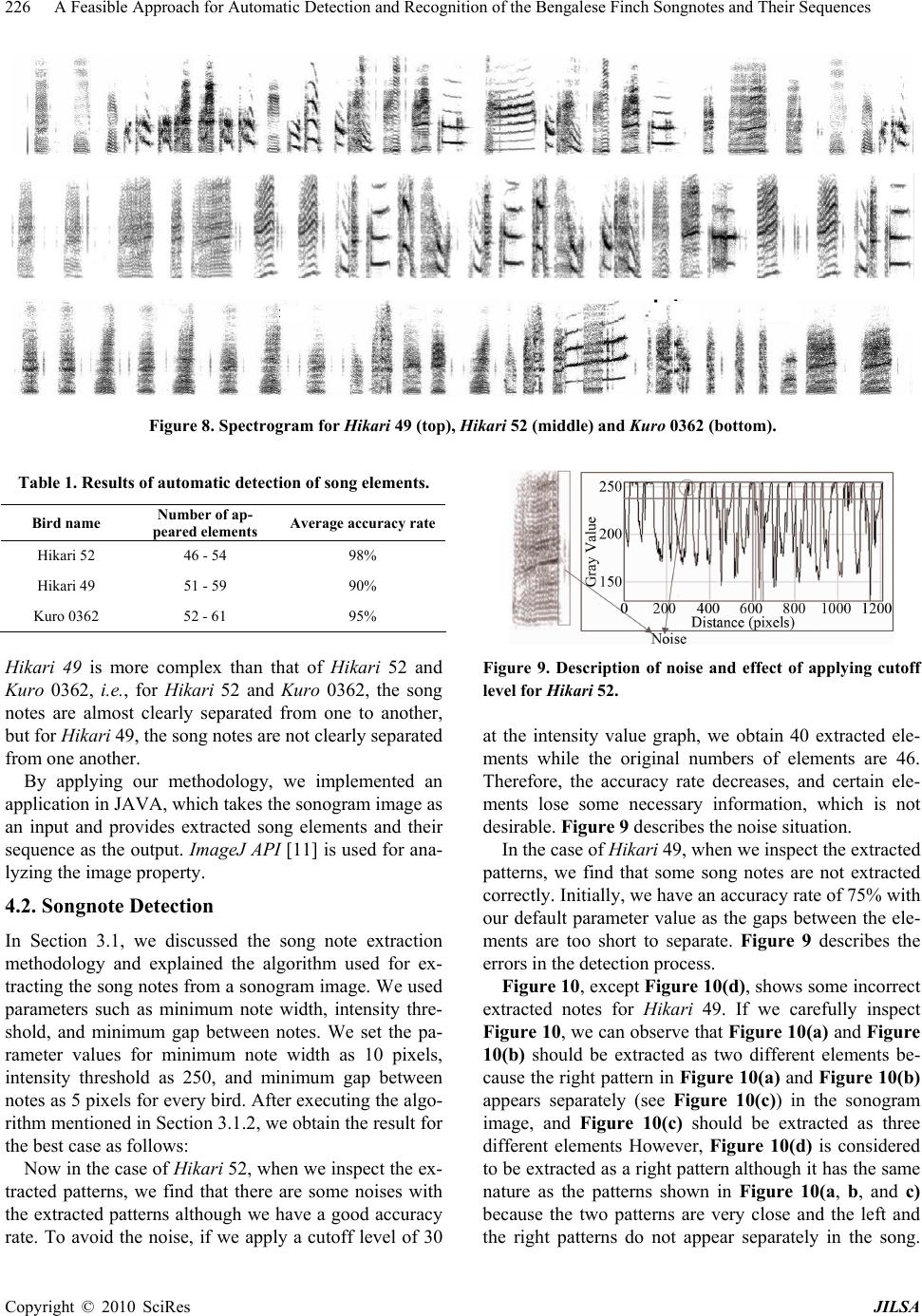

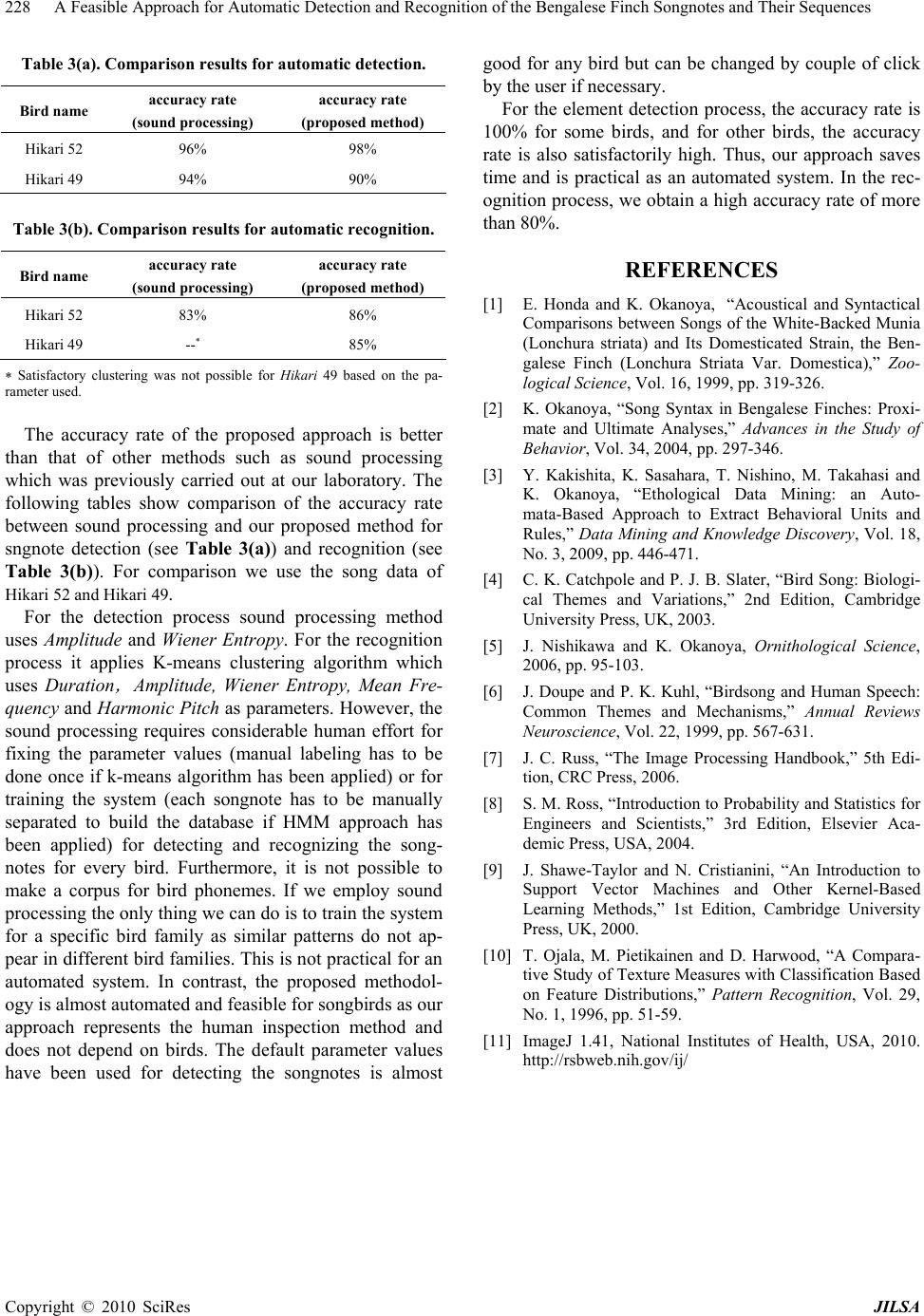

Journal of Intelligent Learning Systems and Applications, 2010, 2, 221-228 doi:10.4236/jilsa.2010.24025 Published Online November 2010 (http://www.scirp.org/journal/jilsa) Copyright © 2010 SciRes JILSA

A Feasible Approach for Automatic Detection and Recognition of the Bengalese Finch Songnotes and Their Sequences

Khan Md. Mahfuzus Salam1,*, Tetsuro Nishino1, Kazutoshi Sasahara2, Miki Takahasi2, Kazuo Okanoya2

1Department of Information and Communication Engineering, the University of Electro-Communications, Tokyo, Japan; 2Laboratory for Biolinguistics, RIKEN Brain Science Institute (BSI), Saitama, Japan.

*Corresponding author. E-mail: mahfuz@ice.uec.ac.jp

Received March 23rd, 2010; revised September 14th, 2010; accepted September 18th, 2010.

ABSTRACT

The Bengalese finch song has been widely studied for its unique features and its similarity to human language. For computational analysis, the songs must be represented as songnote sequences. An automated approach for this purpose is highly desirable, because manual annotation is cumbersome, highly heuristic, and easily lacks objectivity. In this paper, we propose a new approach for the automatic detection and recognition of songnote sequences via image processing. The proposed method is modeled on the process by which humans visually identify patterns in a sonogram image. The songnotes of the Bengalese finch are bird-specific: a similar pattern does not occur in two different birds. Under this constraint, our experiments on real song data from different Bengalese finches show high accuracy rates for the automatic detection and recognition of songnotes. These results indicate that the proposed approach is feasible and can be generalized to any Bengalese finch song.

Keywords: Birdsong Analysis, Bengalese Finch Song, Songnote Detection and Recognition, Pattern Recognition

1. Introduction

Birdsong has been actively studied through the analysis of songnote sequences in order to understand the language model of birds. The song of the Bengalese finch (Lonchura striata var. domestica), a bird that is popular in Japan, is widely used for this purpose. The Bengalese finch song has a more complex structure than the songs of other songbirds such as the zebra finch (Taeniopygia guttata) [1]; thus, Bengalese finch songs have been studied as a model of human language. According to recent studies, the courtship songs of Bengalese finches have unique features and share similarities with human language [2].

In birdsong research, acoustic song analysis is needed to find the song elements and their sequence, which are then analyzed to understand the song syntax [3] and the song learning process. The current research focuses on the automatic detection and recognition of songnotes and their sequences. Previous studies that employed sound processing had drawbacks as an automated approach. This paper introduces a new, generalized approach that employs image processing to overcome these drawbacks.

2. Preliminaries

This section briefly introduces the theoretical foundations of this work: birdsong and its representations, image basics, and the human recognition process, since our approach is based on the recognition that humans carry out manually.

2.1. Birdsong Representation

In birdsong analysis, the song data is recorded in an appropriate environment: a special cage that is equipped with an automated recording system and that keeps out noise. From the recorded sound data, we obtain the sonogram image of the song. For further computational analysis, this sonogram image is used as the standard representation of the song [4]. The rest of this section briefly explains some general terms used in birdsong research.
Songnote: An independent pattern that appears in a sonogram and is assigned a symbol is called a songnote. It is also referred to as a song element or a behavioral element. By this definition, text data consisting of such symbols (a, b, c, and so on) is called a songnote sequence. Songnotes are analogous to phonemes in human language.

Chunk: A fixed sequence of songnotes is called a chunk. In Figure 1, for example, the chunks are ab, cde, and fg. Chunks are analogous to words in human language.

Song unit: A song unit consists of chunks. Song units are analogous to sentences in human language.

Sonogram: A sonogram is an image that shows how the spectral density of a signal varies with time. It is also known as a spectrogram, voiceprint, or voicegram. Sonograms are used to identify phonetic sounds, to analyze animal calls, and in fields such as speech processing, music, sonar/radar, and seismology.

There are many variations in the format of the sonogram. Sometimes the vertical and horizontal axes are switched; sometimes the amplitude is represented as the height of a 3D surface instead of as color or intensity. The frequency and amplitude axes can be either linear or logarithmic, depending on what the graph is used for. For instance, audio is usually represented with a logarithmic amplitude axis, while the frequency axis is linear to emphasize harmonic relationships or logarithmic to emphasize musical, tonal relationships. The most common format is a graph with two geometric dimensions: the horizontal axis represents time and the vertical axis represents frequency, while a third dimension, the amplitude of a particular frequency at a particular time, is represented by the intensity or color of each point in the image. Birdsong research uses this common format.
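A minimal sketch of the common format just described, assuming a power spectrogram is already available as a list of rows (one row per frequency bin, lowest frequency first, one column per time frame). It maps power to 8-bit gray values on a logarithmic (decibel) amplitude axis and puts the highest frequency in the top row. The function name, the -60 dB display floor, and the bright-is-loud polarity are our own assumptions; the paper does not state how its sonograms were rendered.

```python
import math


def to_grayscale(power, floor_db=-60.0):
    """Map a power spectrogram to an 8-bit grayscale sonogram.

    power: list of rows, one per frequency bin (lowest frequency first),
    each holding one non-negative power value per time frame.
    floor_db must be negative; quieter cells are clipped to black.
    Returns pixel rows with the highest frequency in the top row.
    """
    peak = max(max(row) for row in power)
    if peak <= 0:
        # Silent input: every pixel is black.
        return [[0] * len(row) for row in reversed(power)]
    image = []
    for row in power:
        pixels = []
        for p in row:
            # Logarithmic amplitude axis: decibels relative to the loudest cell.
            db = 10.0 * math.log10(p / peak) if p > 0 else floor_db
            db = max(db, floor_db)                # clip below the display floor
            level = (db - floor_db) / -floor_db   # 0.0 at the floor, 1.0 at the peak
            pixels.append(round(255 * level))     # 0 = black, 255 = white
        image.append(pixels)
    # Frequency increases upwards, as in the usual sonogram layout.
    return image[::-1]
```

For example, `to_grayscale([[1.0, 1e-6], [0.01, 0.0]])` returns `[[170, 0], [255, 0]]`: the loudest cell becomes white, a cell 20 dB down becomes mid-gray, and anything at or below the floor becomes black.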
Figure 1 shows a sample grayscale sonogram image of a Bengalese finch courtship song.

Figure 1. Grayscale sonogram image of a Bengalese finch song.

2.2. Bengalese Finch Song

Recent studies on Bengalese finches show that the songs of male Bengalese finches are neither monotonous nor random; they consist of chunks, each of which is a fixed sequence of a few songnotes. The song of each individual can be represented by a finite automaton, which is called the song syntax (see Figure 2) [2]. The songs of Bengalese finches have double articulation (a sentence consists of words, and each word consists of phonemes), which is also one of the important faculties of human language. The song syntax is controlled by the song control nuclei in the brain, and the hierarchy of the song control nuclei directly corresponds to the song hierarchy [5]. Because of the structural and functional similarities of vocal learning between songbirds and humans, songbirds have been actively studied as a model of human language [6]. In particular, the song syntax of Bengalese finches sheds light on the biological foundations of syntax.

Figure 2. Courtship song syntax represented by an automaton.

2.3. Detection and Recognition

Human vision is one of the most important and perceptive mechanisms. It provides the information required both for relatively simple tasks (e.g., object recognition) and for very complex ones. In birdsong research, songnote recognition is carried out by humans who visually inspect the patterns represented in a sonogram image [4].

2.3.1. Image Feature Extraction

Digital image processing denotes analysis carried out on the pixel properties of an image, irrespective of the image type. A digital image has a finite set of digital values called picture elements, or pixels. The image contains a fixed number of rows and columns of pixels.
Pixels are the smallest individual elements in an image, holding quantized values that represent the brightness of a given color at a specific point. Typically, the pixels are stored in computer memory as a raster image or raster map, a two-dimensional array of small integers. These values are often transmitted or stored in compressed form. Each pixel of a raster image is typically associated with a specific position in some 2D region and has a value of one or more quantities related to that position. Digital images can be classified by the number and nature of such samples into categories such as binary, grayscale, color, and false-color. In our research, we use a grayscale sonogram image.

Grayscale image: A grayscale digital image is an image in which the value of each pixel is a single sample; that is, it carries only intensity information. A gray color is one in which the red, green, and blue components all have equal intensity in RGB space; hence, only a single intensity value needs to be specified for each pixel, as opposed to the three intensities needed for each pixel of a full-color image.

Pixel values: For a grayscale image, the pixel value is a single number that represents the brightness of the pixel. The intensity of a pixel is expressed within a given range between a minimum and a maximum. Grayscale images are commonly stored with 8 bits per sampled pixel, which allows 256 different intensities (i.e., shades of gray). In this representation, 0 is black and the maximum value, 255, is white.

2.3.2. Image Matching and Recognition

Pattern recognition aims to classify data or patterns on the basis of either a priori knowledge or statistical information extracted from the patterns.
The patterns to be classified are usually groups of measurements or observations that define points in an appropriate multidimensional space. This is in contrast to pattern matching, where the pattern is rigidly specified. Pattern matching is used to test whether things have a desired structure, to find relevant structure, to retrieve the aligning parts, and to substitute the matching part with something else.

When humans recognize an image visually, the first thing that catches their attention is something familiar. To be recognized, an object must have some feature that our consciousness can assign to it; behind this process, a mental model captures the important characteristics of the object. Unfortunately, in many scientific experiments the task assigned to human vision is not the recognition of familiar objects but the detection and description of unfamiliar ones, which is far more difficult. In the simplified neuron model of McCulloch and Pitts, the output is turned on when the weighted sum of many inputs exceeds a threshold (see Figure 3). Learning consists of adjusting the weights, which can be either positive or negative [7].

Figure 3. McCulloch and Pitts simplified model of a neuron and its implementation as a threshold logic unit [7].

The current research applies an image processing methodology based on the grayscale image features of the sonogram. The motivation is to find a simple, generalized way to automate recognition in the way the human brain does it, namely by pattern matching.

3. Methodology

The proposed automation process is divided into two steps. First, we detect the song elements in the song's sonogram image on the basis of a local property of the image.

Figure 4. Process flow diagram of the songnote detection and recognition.
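The first step rests on the column-average intensity profile and the run-finding procedure detailed in Sections 3.1.1 and 3.1.2. A minimal sketch follows, assuming the pre-processed grayscale image is a list of equally long pixel rows and that, as step (2) of the detection algorithm states, a column belongs to a song element when its average exceeds the intensity threshold (so elements must be brighter than the background; a dark-on-white sonogram would have to be inverted first). The function names are hypothetical, and the paper's pre-processing and cutoff level are not modeled.

```python
def column_profile(image):
    """Average gray value of every pixel column (index x) of the image."""
    height = len(image)
    return [sum(row[x] for row in image) / height for x in range(len(image[0]))]


def detect_elements(profile, threshold, min_width, min_gap):
    """Return (start, end) column pairs, end inclusive, of the song elements.

    A run of above-threshold columns ends only after at least min_gap (>= 1)
    consecutive below-threshold columns; shorter dips are bridged. Runs
    narrower than min_width columns are discarded as noise.
    """
    elements = []
    start = None   # column where the current element began, or None
    quiet = 0      # consecutive below-threshold columns inside the element
    for x, value in enumerate(profile):
        if value > threshold:
            if start is None:
                start = x
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet >= min_gap:
                end = x - quiet   # last above-threshold column
                if end - start + 1 >= min_width:
                    elements.append((start, end))
                start, quiet = None, 0
    if start is not None:   # element still open at the right edge
        end = len(profile) - 1 - quiet
        if end - start + 1 >= min_width:
            elements.append((start, end))
    return elements
```

For instance, with `image = [[0, 0, 200, 200, 200, 0, 0, 0, 200, 200, 200, 0]] * 2`, the call `detect_elements(column_profile(image), threshold=100, min_width=3, min_gap=2)` returns `[(2, 4), (8, 10)]`. The paper's defaults (10-pixel minimum width, 5-pixel minimum gap, threshold 250) would be passed as `min_width`, `min_gap`, and `threshold`, provided the intensity polarity matches.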
In the second step, we apply image matching to the detected elements to assign a label to each of them, and thus obtain the songnote sequence of the song. Figure 4 shows the process flow diagram of the proposed methodology.

3.1. Songnote Detection

From the sonogram image, we first detect the elements. The recognition process is then carried out on the statistical features extracted from the detected elements. For this reason, the detection process is very important.

3.1.1. Detection Method

The detection process analyzes the intensity values of the sonogram image, from which we obtain a graph of the average pixel intensity. If the sonogram image contains noise at the beginning, a human ignores it during visual inspection, but the present system would not. For this reason, we pre-process the sonogram image. We then take the average intensity value along each vertical line (pixel column) and plot a graph in which the Y-axis represents the average intensity (gray) value and the X-axis represents the pixel index x, i.e., the distance from pixel (0, 0) along the X-axis.

This graph (Figure 5) is generated from the sample sonogram image shown in Figure 6. The graph clearly shows gaps between the elements. By defining parameters (see Figure 6) such as the minimum element width, the minimum gap between elements, and the intensity threshold, we can execute our algorithm to find the song elements. Any region that does not satisfy these three parameters is treated as noise. Note that these parameters can vary from bird to bird. The detected song elements and their features, such as width, are used in the recognition process.

Figure 5. Average intensity value graph derived from the sonogram image.

Figure 6. Sample sonogram image and the parameters.

3.1.2. Detection Algorithm

The song element detection algorithm takes the array of average intensity values as input. On the basis of the defined parameter values, it produces an unlabeled list of song elements.

Input: array of intensity values.
Output: a list of elements.
Procedure:
(1) Initialize the parameters.
(2) If the intensity value exceeds the threshold and the next value is not a gap:
· set the start-element flag to true;
· set the start index to the current index.
(3) If the start-element flag is true and the next minimum gap is detected:
· set the start-element flag to false;
· set the end index to the current index;
· add the element to the element list.
(4) Repeat steps (2) and (3) until the end of the intensity array.
(5) Return the element list.

Detection Algorithm

3.2. Songnote Recognition

To extract the songnote sequence from the sonogram image, we extract local statistical features and then carry out statistical pattern matching for recognition.

3.2.1. Recognition Method

As discussed in the previous section, similar patterns are assigned the same label in the recognition process. Our recognition method is based on the local properties of the sonogram image. Executing the note detection algorithm yields the element list, an unlabeled list that gives the start pixel and the end pixel of every element.

In the Bengalese finch song, note patterns differ from bird to bird. Therefore, we decided not to use any prior knowledge; instead, we use statistical information extracted from the patterns (see Figure 7). First, we divide every note into N regions, and every region is divided into nine (3×3) cells.

Figure 7. Explanation of the procedure for N = 3: each element yields the set of values $R^k g_c, R^k g_0, \ldots, R^k g_7$ for the regions $k = 1, 2, 3$.
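The division into N regions of 3×3 cells can be sketched as follows. The paper does not specify how the regions are laid out or where the clockwise numbering starts, so this sketch assumes equal-width slices along the time axis, an equal 3×3 grid over the full image height, the center cell $g_c$ first, and the eight surrounding cells $g_0, \ldots, g_7$ in clockwise order starting at the top-left cell. The function names are hypothetical.

```python
# Grid positions (row, column) of g_0 ... g_7, clockwise from the top-left
# cell; this starting cell is an assumption, not taken from the paper.
CLOCKWISE = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def _mean(image, y0, y1, x0, x1):
    """Average gray value of rows y0..y1-1 and columns x0..x1-1."""
    values = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    return sum(values) / len(values)


def cell_features(image, start, end, n_regions=3):
    """Return 9 * n_regions average gray values for the element spanning
    columns start..end (inclusive) of a grayscale image (list of pixel rows).
    For each region the values are ordered g_c, g_0, ..., g_7."""
    height = len(image)
    width = end - start + 1
    if height < 3 or width < 3 * n_regions:
        raise ValueError("element too small for a 3x3 grid in every region")
    ys = [height * k // 3 for k in range(4)]   # row boundaries of the grid
    features = []
    for r in range(n_regions):
        # Equal-width slice of the element along the time (x) axis.
        x0 = start + r * width // n_regions
        x1 = start + (r + 1) * width // n_regions
        xs = [x0 + (x1 - x0) * k // 3 for k in range(4)]   # column boundaries
        for row, col in [(1, 1)] + CLOCKWISE:              # g_c, then g_0..g_7
            features.append(_mean(image, ys[row], ys[row + 1], xs[col], xs[col + 1]))
    return features
```

With N = 3 the function returns 27 values per element, the set size used in the chi-square comparison.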
We denote the center cell as $g_c$ and the other cells, in clockwise order, as $g_n$, where n = 0, 1, …, 7. Thus, we obtain a set of values for every single element. We then apply a statistical test, the chi-square test, to measure the similarity between elements. Note that the value of N should not be greater than 3: each region contributes nine values, so N = 3 gives 27 values while N = 4 would give 36, and when the set size exceeds thirty, the chi-square distribution tends toward a normal distribution.

3.2.2. Chi-square Goodness-of-Fit Test

The chi-square ($\chi^2$) test is a statistical hypothesis test whose results are evaluated by reference to the chi-square distribution. Pearson's chi-square test, whose properties were first investigated by Karl Pearson [8], is the best known of several chi-square tests; it is the original and most widely used one.

When an analyst fits a statistical model to observed data and wants to know how well the model actually reflects the data, i.e., how close the observed values are to those expected under the fitted model, one statistical test that addresses this issue is the chi-square goodness-of-fit test. Pearson's chi-square test is also commonly used to test the association of variables in two-way tables, where the assumed model of independence is evaluated against the observed data. In general, the chi-square test statistic has the form

$$\chi^2 = \sum \frac{(\text{Observed} - \text{Expected})^2}{\text{Expected}}$$

In terms of the individual outcomes,

$$\chi^2 = \sum_{i=1}^{n} \frac{(O_i - E_i)^2}{E_i}$$

where $\chi^2$ is the test statistic, which asymptotically approaches a $\chi^2$ distribution;
$O_i$ is an observed frequency;
$E_i$ is an expected frequency, asserted by the null hypothesis;
$n$ is the number of possible outcomes.

The chi-square statistic is calculated by taking the difference between the observed and the expected (theoretical) frequency for each possible outcome, squaring it, dividing it by the expected frequency, and summing the results. The statistic can be converted into a p-value by comparing it with a chi-square distribution whose number of degrees of freedom equals the number of possible outcomes minus 1. If the computed test statistic is larger than the critical value in the chi-square table [8] for (n - 1) degrees of freedom, the observed and expected values are not close, and the model is a poor fit to the data.

A well-known statistical machine learning approach to pattern recognition is the Support Vector Machine (SVM). An SVM separates the data space into two classes along a separation boundary, which can be defined by a non-linear function [9]. SVMs can be applied when supervised learning is possible and the number of clusters is known. This technique is difficult to apply in our application, where there are several clusters that cannot be predefined. In our experiments, we also tried another image pattern recognition technique, presented by Ojala et al. [10], but could not obtain good results. Because the songnote patterns depend on the bird, our ability to prepare a training set is limited. For this reason, we find the chi-square test more suitable for our application than other state-of-the-art pattern recognition techniques.

3.2.3. Recognition Algorithm

The songnote recognition algorithm takes the unlabeled list of song elements, applies the goodness-of-fit test to find the similarity between elements, and produces the songnote sequence.

Input: unlabeled list of elements.
Output: labeled list of elements.
Procedure:
(1) Divide each element in the element list into N × 9 cells, where 0 < N < 4.
(2) Calculate the average intensity value of every cell.
(3) While any element remains unlabeled, for each element:
· set it as the expected element and the others as observed elements;
· if the expected element is not labeled, assign it a new label;
· compute the chi-square statistic for each observed element.
(4) If an observed element passes the test, assign it the same label as the expected element.
(5) Return the updated element list.

Recognition Algorithm

4. Results

In this section, we present the results of applying our methodology to the Bengalese finch song. We first describe the nature of our real song data and then discuss the results of the automatic detection and recognition of songnotes.

4.1. Description of Data

To test the proposed method, we used five different song units (phrases) from each of three mature Bengalese finches, named Hikari 52, Hikari 49, and Kuro 0362. The song data were recorded at the Okanoya laboratory of RIKEN. The spectrogram images of mature Bengalese finches have similar properties: the note patterns are clearly visible, and almost every songnote is separated from the next by considerable blank space. Figure 8 shows partial sonogram images for the three birds. As Table 1 shows, the sample sonogram images contain 46 to 54 notes for Hikari 52, 51 to 59 notes for Hikari 49, and 52 to 61 notes for Kuro 0362. Figure 8 clearly shows that the sonogram image of Hikari 49 is more complex than those of Hikari 52 and Kuro 0362: for Hikari 52 and Kuro 0362, the song notes are almost clearly separated from one another, but for Hikari 49 they are not.

Figure 8. Spectrogram for Hikari 49 (top), Hikari 52 (middle), and Kuro 0362 (bottom).

Applying our methodology, we implemented an application in Java that takes the sonogram image as input and outputs the extracted song elements and their sequence. The ImageJ API [11] is used to analyze the image properties.

4.2. Songnote Detection

In Section 3.1, we discussed the songnote extraction methodology and explained the algorithm used to extract the songnotes from a sonogram image. The algorithm uses parameters such as the minimum note width, the intensity threshold, and the minimum gap between notes. For every bird, we set the minimum note width to 10 pixels, the intensity threshold to 250, and the minimum gap between notes to 5 pixels. Executing the algorithm described in Section 3.1.2 gives the best-case results shown in Table 1.

Table 1. Results of automatic detection of song elements.

| Bird name | Number of elements | Average accuracy rate |
|---|---|---|
| Hikari 52 | 46-54 | 98% |
| Hikari 49 | 51-59 | 90% |
| Kuro 0362 | 52-61 | 95% |

In the case of Hikari 49, inspecting the extracted patterns shows that some songnotes are not extracted correctly. Initially, the accuracy rate is 75% with our default parameter values, because the gaps between the elements are too short to separate them. Figure 10 describes the errors in the detection process: all of its panels except Figure 10(d) show incorrectly extracted notes for Hikari 49. On careful inspection, Figure 10(a) and Figure 10(b) should each be extracted as two different elements, because the right pattern in Figure 10(a) and Figure 10(b) appears separately in the sonogram image (see Figure 10(c)), and Figure 10(c) should be extracted as three different elements. However, Figure 10(d) is considered a correctly extracted pattern, although it has the same nature as the patterns in Figure 10(a), (b), and (c), because its two patterns are very close and its left and right patterns never appear separately in the song. We therefore adjust the default minimum gap between notes to two pixels and use a cutoff level of nine. With these settings, the best-case accuracy rate is 90%.

Figure 10. Description of the error in the detection for Hikari 49, panels (a) to (d).

In the case of Hikari 52, inspecting the extracted patterns shows that some noise is included with the extracted patterns, although the accuracy rate is good. If we apply a cutoff level of 30 to the intensity value graph to avoid the noise, we obtain 40 extracted elements, whereas the original number of elements is 46. The accuracy rate therefore decreases, and some elements lose necessary information, which is not desirable. Figure 9 describes the noise situation.

Figure 9. Description of noise and effect of applying cutoff level for Hikari 52.

Table 2. Results of the songnote recognition.

| Bird name | Accuracy rate |
|---|---|
| Hikari 52 | 86% |
| Hikari 49 | 85% |
| Kuro 0362 | 78% |

4.3. Songnote Recognition

In Section 3.2, we discussed the songnote recognition methodology and explained the algorithm. The first step is to divide every extracted element into N regions and then calculate the average intensity value of every cell in each region. Thus, for N = 3, every element yields a set of 27 values. We then apply the chi-square test, taking the note width into account: the proposed method compares two elements only if the note width is greater than three-fourths and smaller than five-fourths of the width of the observed element. Executing the algorithm described in Section 3.2.3 gives the songnote sequences.
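The recognition procedure of Section 3.2.3, including the width rule just described, can be sketched as follows. It takes one feature vector (for example, the 27 cell averages) and one width per detected element. The paper does not give its significance level; this sketch assumes a 5% level, i.e., the critical value 38.885 of the chi-square distribution with 26 degrees of freedom (27 cells minus 1). Reading the width rule as a ratio strictly between 3/4 and 5/4 and the function names are also our assumptions.

```python
import math

# Upper 5% point of the chi-square distribution with 26 degrees of freedom
# (27 cell values minus 1); the 5% level is an assumption.
CRITICAL_VALUE = 38.885


def chi_square(observed, expected):
    """Pearson statistic: sum of (O_i - E_i)^2 / E_i over all cells."""
    total = 0.0
    for o, e in zip(observed, expected):
        if e == 0:
            if o != 0:
                return math.inf   # intensity where none is expected: no fit
            continue              # both zero: the cell contributes nothing
        total += (o - e) ** 2 / e
    return total


def label_elements(features, widths, critical=CRITICAL_VALUE):
    """Assign a letter label to every element, in order of appearance.

    features[i] is the cell-average vector of element i, widths[i] its width.
    Assumes at most 26 distinct notes (labels A..Z)."""
    labels = [None] * len(features)
    next_label = 0
    for i, expected in enumerate(features):
        if labels[i] is not None:
            continue
        labels[i] = chr(ord("A") + next_label)   # new label for a new pattern
        next_label += 1
        for j in range(i + 1, len(features)):
            if labels[j] is not None:
                continue
            # Width rule: compare only elements of similar width.
            if not 0.75 * widths[j] < widths[i] < 1.25 * widths[j]:
                continue
            # The observed element passes if the fit is not rejected.
            if chi_square(features[j], expected) <= critical:
                labels[j] = labels[i]
    return labels
```

For example, with `a = [100.0] * 27`, `b = [101.0] * 27`, and `c = [200.0] * 27`, the call `label_elements([a, b, c, a], [20, 22, 20, 40])` returns `['A', 'A', 'B', 'C']`: `b` fits `a`, `c` does not, and the last element is never compared with `a` because its width is twice as large.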
Table 2 summarizes the recognition results. Note that for Hikari 49, the result is based on the patterns extracted in the previous step; if the incorrectly extracted patterns are taken into account, the accuracy rate drops to around 70%.

For further discussion, the songnote sequence of one song unit produced by our system and the sequence produced by human annotation for Hikari 52 are shown below, where the bold letters mark the positions at which the two outcomes differ.

System (Hikari 52): AAB**AC**DDEFGHEFGHI**B**JK**L**DEFAAB**AC**DDEFGHEFGHI**B**JK**L**DEF

Correct (Hikari 52): AAB**LB**DDEFGHEFGHI**C**JK**D**DEFAAB**LB**DDEFGHEFGHI**C**JK**D**DEF

Inspecting the incorrect decisions made by the system for Hikari 52, we find that note B is labeled as C and note L is labeled as D. This is because the incorrectly labeled notes contain considerable noise (white parts), which affects the matching process. In the case of note L being incorrectly labeled as A for Hikari 52, careful observation of each note shows that the intensity density is the same for both notes (see Figure 11).

Figure 11 clearly shows that the distribution of intensity density is the same for both notes. This causes the recognition error and is a limitation of the proposed image matching algorithm. Note 1 and Note 4 are both recognized as A, but human annotation, based on inspecting the image and listening to the song, labeled Note 4 as L. We noticed similar recognition errors in the case of Hikari 49 and Kuro 0362.

5. Conclusions and Discussion

The present study proposes a new approach to the automatic recognition of song elements and their sequences that applies image processing instead of sound processing, and the approach yields good results. There is good potential to improve the accuracy rates of both the extraction and the recognition methods to some extent.
From the obtained results, we find that the element extraction process is very important and has a significant effect on the recognition process. The major advantages of the proposed approach are its simplicity and feasibility. The approach is designed as a generalized process that does not depend on the bird, the same as the process humans follow.

Figure 11. Note 1 (A, top left), note 4 (L, top right), and distribution of intensity density value (bottom) for Hikari 52.

The accuracy rate of the proposed approach is generally better than that of the sound processing method previously used in our laboratory, although sound processing achieves a higher detection rate for Hikari 49. Tables 3(a) and 3(b) compare the accuracy rates of sound processing and our proposed method for songnote detection and recognition, respectively. For the comparison, we use the song data of Hikari 52 and Hikari 49. For detection, the sound processing method uses amplitude and Wiener entropy. For recognition, it applies the k-means clustering algorithm with duration, amplitude, Wiener entropy, mean frequency, and harmonic pitch as parameters.

Table 3(a). Comparison results for automatic detection.

| Bird name | Accuracy rate (sound processing) | Accuracy rate (proposed method) |
|---|---|---|
| Hikari 52 | 96% | 98% |
| Hikari 49 | 94% | 90% |

Table 3(b). Comparison results for automatic recognition.

| Bird name | Accuracy rate (sound processing) | Accuracy rate (proposed method) |
|---|---|---|
| Hikari 52 | 83% | 86% |
| Hikari 49 | --* | 85% |

*Satisfactory clustering was not possible for Hikari 49 with the parameters used.
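Wiener entropy, one of the sound-processing parameters mentioned above, measures how noise-like a sound is. A minimal sketch for one power-spectrum frame follows, assuming the definition commonly used in birdsong analysis: the logarithm of the ratio between the geometric and the arithmetic mean of the spectrum, which is 0 for a perfectly flat (white-noise-like) spectrum and increasingly negative for tonal sounds. The natural logarithm and the function name are our choices; the exact settings of the sound-processing method compared here are not known.

```python
import math


def wiener_entropy(power_spectrum):
    """log(geometric mean / arithmetic mean) of one frame's power spectrum.

    All bins must be positive; the result is 0 for a flat spectrum and
    negative otherwise (more negative for more tonal spectra)."""
    n = len(power_spectrum)
    log_geometric_mean = sum(math.log(p) for p in power_spectrum) / n
    arithmetic_mean = sum(power_spectrum) / n
    return log_geometric_mean - math.log(arithmetic_mean)
```

A flat spectrum such as `[1.0, 1.0, 1.0, 1.0]` gives 0.0, while a spectrum whose power is concentrated in one bin, such as `[100.0, 0.01, 0.01, 0.01]`, gives a strongly negative value.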
However, sound processing requires considerable human effort, either to fix the parameter values (manual labeling has to be done once if the k-means algorithm is applied) or to train the system (each songnote has to be manually separated to build the database if a hidden Markov model (HMM) approach is applied), in order to detect and recognize the songnotes of every bird. Furthermore, it is not possible to build a corpus of bird phonemes. With sound processing, the only option is to train the system for a specific bird family, because similar patterns do not appear in different bird families. This is not practical for an automated system. In contrast, the proposed methodology is almost fully automated and feasible for songbirds, because our approach represents the human inspection method and does not depend on the bird. The default parameter values used for detecting the songnotes work well for almost any bird, but the user can change them with a couple of clicks if necessary.

For the element detection process, the accuracy rate is 100% for some birds and satisfactorily high for the others. Thus, our approach saves time and is practical as an automated system. In the recognition process, we obtain accuracy rates of 78% to 86% (Table 2).

REFERENCES

[1] E. Honda and K. Okanoya, "Acoustical and Syntactical Comparisons between Songs of the White-Backed Munia (Lonchura striata) and Its Domesticated Strain, the Bengalese Finch (Lonchura striata var. domestica)," Zoological Science, Vol. 16, 1999, pp. 319-326.

[2] K. Okanoya, "Song Syntax in Bengalese Finches: Proximate and Ultimate Analyses," Advances in the Study of Behavior, Vol. 34, 2004, pp. 297-346.

[3] Y. Kakishita, K. Sasahara, T. Nishino, M. Takahasi and K. Okanoya, "Ethological Data Mining: An Automata-Based Approach to Extract Behavioral Units and Rules," Data Mining and Knowledge Discovery, Vol. 18, No. 3, 2009, pp. 446-471.

[4] C. K. Catchpole and P. J. B. Slater, "Bird Song: Biological Themes and Variations," 2nd Edition, Cambridge University Press, UK, 2003.

[5] J. Nishikawa and K. Okanoya, Ornithological Science, 2006, pp. 95-103.

[6] A. J. Doupe and P. K. Kuhl, "Birdsong and Human Speech: Common Themes and Mechanisms," Annual Review of Neuroscience, Vol. 22, 1999, pp. 567-631.

[7] J. C. Russ, "The Image Processing Handbook," 5th Edition, CRC Press, 2006.

[8] S. M. Ross, "Introduction to Probability and Statistics for Engineers and Scientists," 3rd Edition, Elsevier Academic Press, USA, 2004.

[9] J. Shawe-Taylor and N. Cristianini, "An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods," 1st Edition, Cambridge University Press, UK, 2000.

[10] T. Ojala, M. Pietikainen and D. Harwood, "A Comparative Study of Texture Measures with Classification Based on Feature Distributions," Pattern Recognition, Vol. 29, No. 1, 1996, pp. 51-59.

[11] ImageJ 1.41, National Institutes of Health, USA, 2010. http://rsbweb.nih.gov/ij/