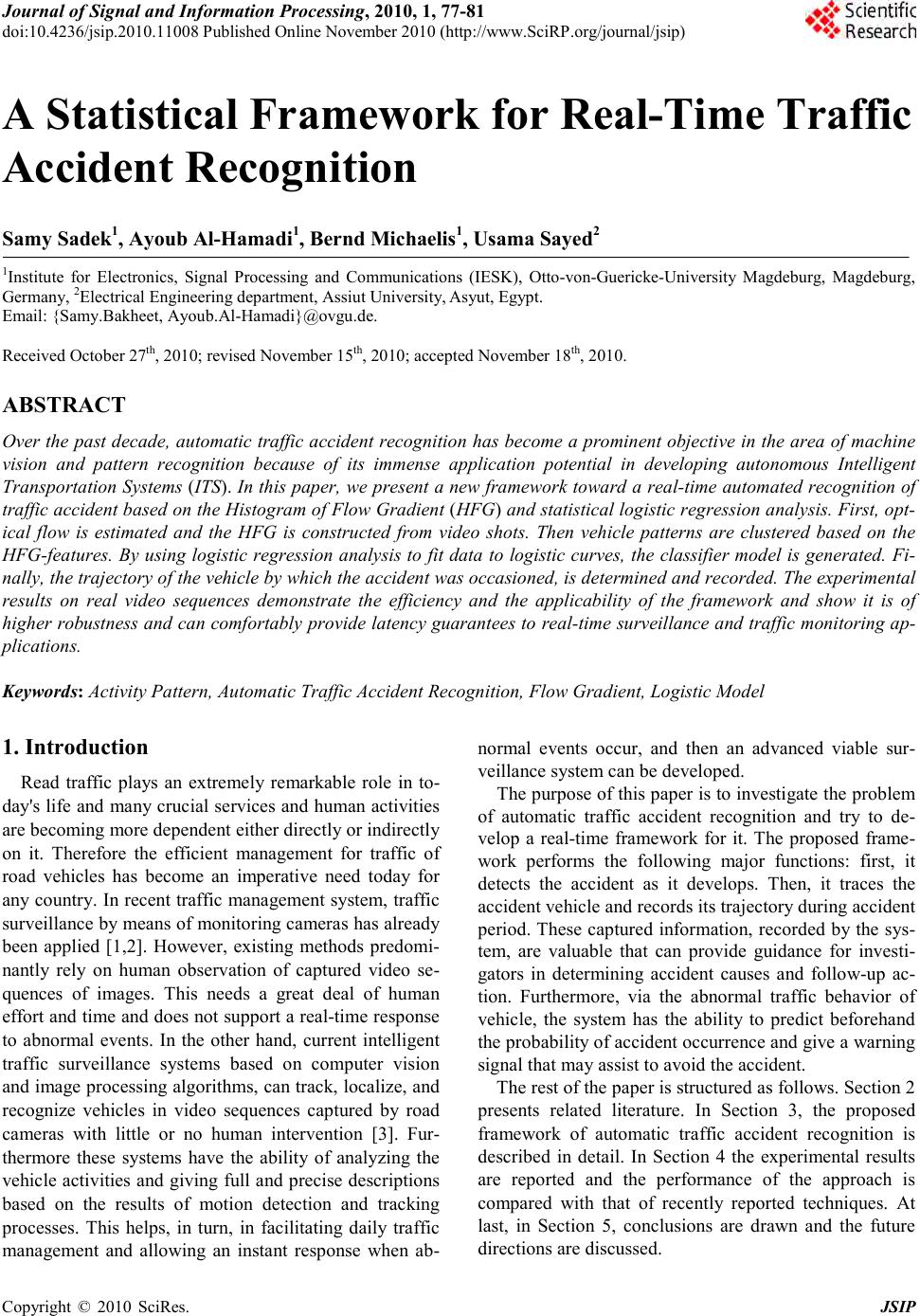

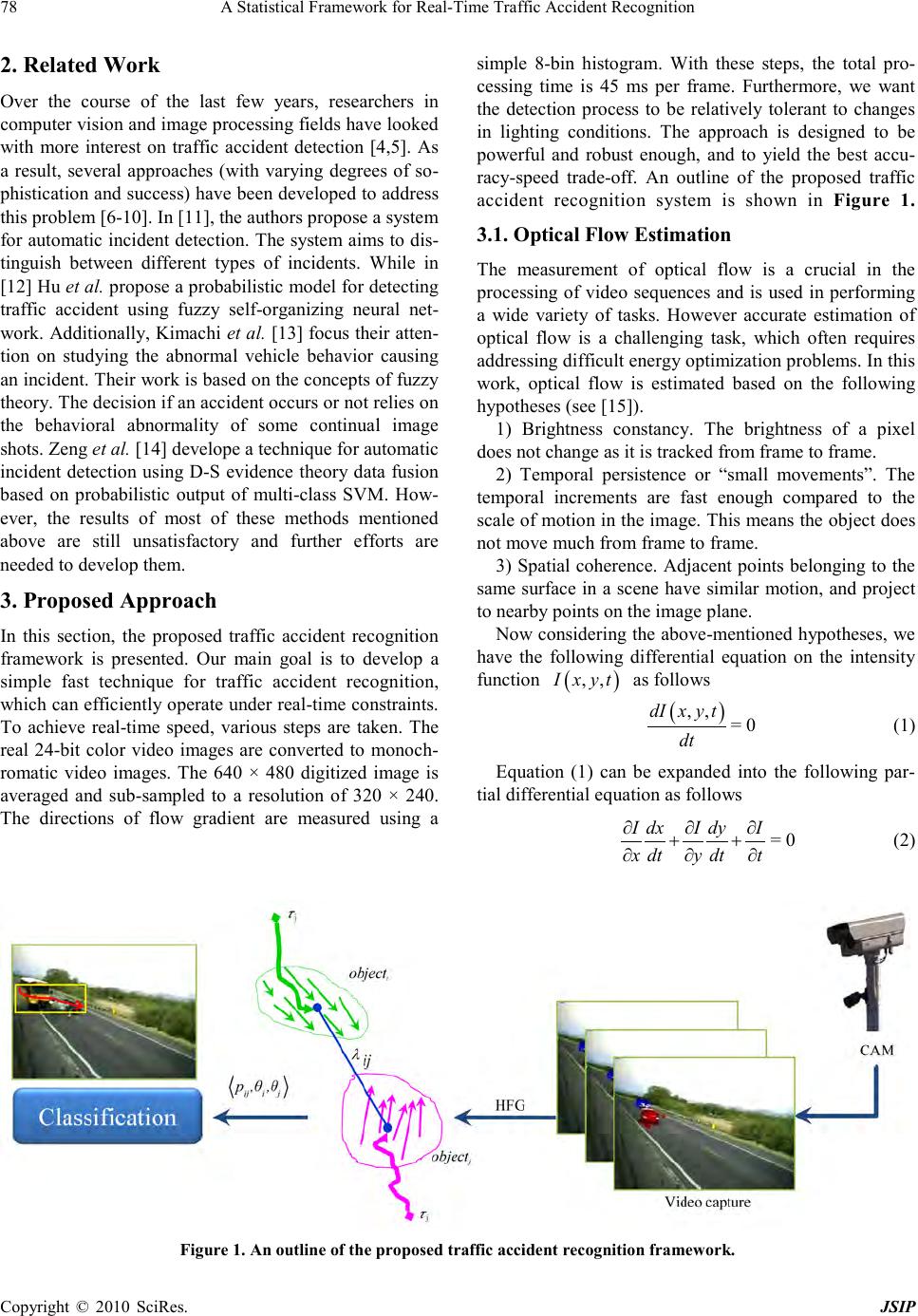

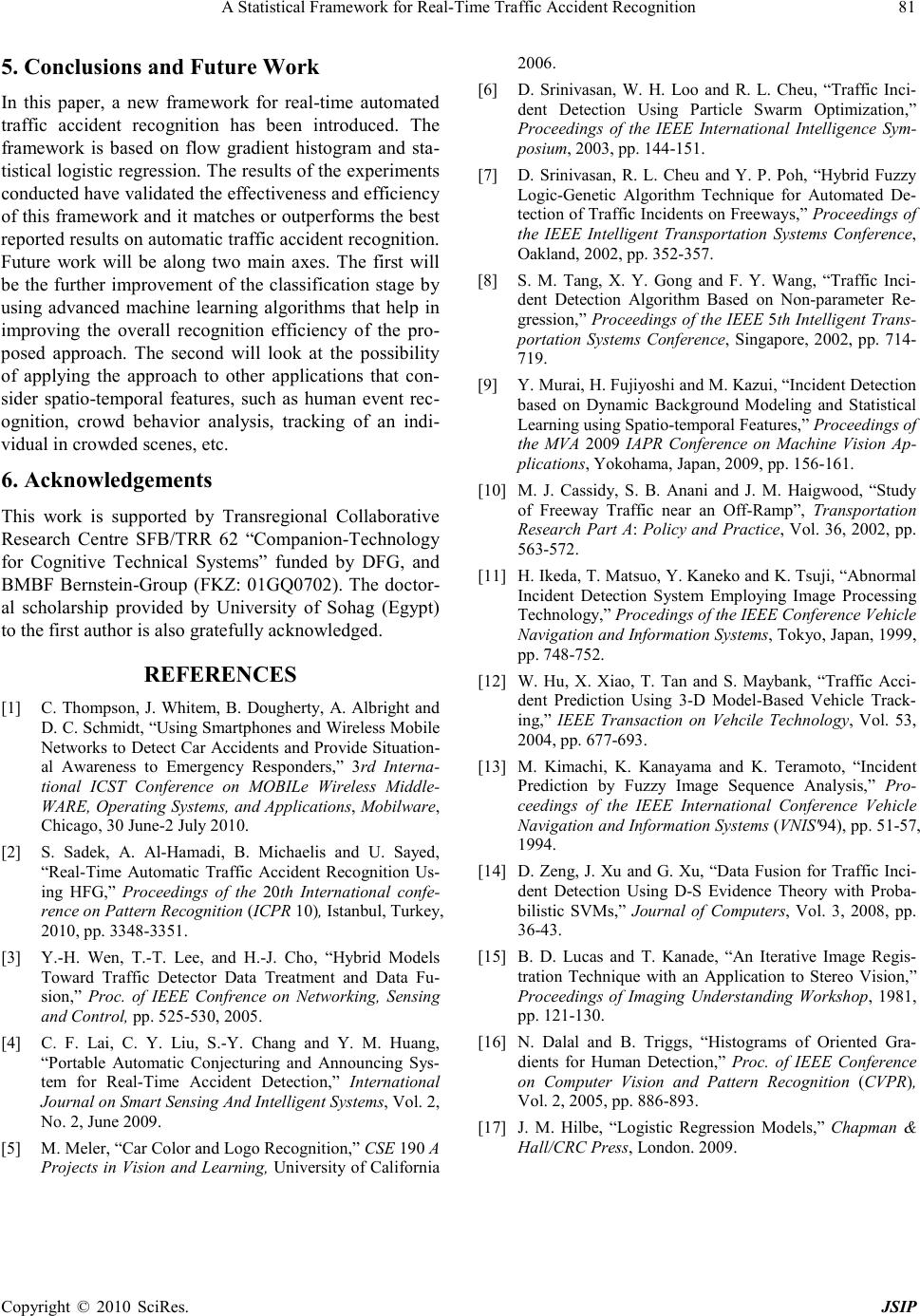

Journal of Signal and Information Processing, 2010, 1, 77-81
doi:10.4236/jsip.2010.11008 Published Online November 2010 (http://www.SciRP.org/journal/jsip)
Copyright © 2010 SciRes. JSIP

A Statistical Framework for Real-Time Traffic Accident Recognition

Samy Sadek¹, Ayoub Al-Hamadi¹, Bernd Michaelis¹, Usama Sayed²

¹Institute for Electronics, Signal Processing and Communications (IESK), Otto-von-Guericke-University Magdeburg, Magdeburg, Germany; ²Electrical Engineering Department, Assiut University, Asyut, Egypt.
Email: {Samy.Bakheet, Ayoub.Al-Hamadi}@ovgu.de

Received October 27th, 2010; revised November 15th, 2010; accepted November 18th, 2010.

ABSTRACT

Over the past decade, automatic traffic accident recognition has become a prominent objective in the area of machine vision and pattern recognition because of its immense application potential in developing autonomous Intelligent Transportation Systems (ITS). In this paper, we present a new framework toward real-time automated recognition of traffic accidents based on the Histogram of Flow Gradient (HFG) and statistical logistic regression analysis. First, optical flow is estimated and the HFG is constructed from video shots. Then vehicle patterns are clustered based on the HFG features. The classifier model is generated by using logistic regression analysis to fit the data to logistic curves. Finally, the trajectory of the vehicle that caused the accident is determined and recorded. The experimental results on real video sequences demonstrate the efficiency and applicability of the framework, and show that it is robust and can comfortably provide latency guarantees to real-time surveillance and traffic monitoring applications.

Keywords: Activity Pattern, Automatic Traffic Accident Recognition, Flow Gradient, Logistic Model

1. Introduction

Road traffic plays an important role in today's life, and many crucial services and human activities are becoming more dependent on it, either directly or indirectly. The efficient management of road vehicle traffic has therefore become an imperative need for any country. In recent traffic management systems, traffic surveillance by means of monitoring cameras has already been applied [1,2]. However, existing methods predominantly rely on human observation of the captured video sequences. This requires a great deal of human effort and time and does not support a real-time response to abnormal events. On the other hand, current intelligent traffic surveillance systems based on computer vision and image processing algorithms can track, localize, and recognize vehicles in video sequences captured by road cameras with little or no human intervention [3]. Furthermore, these systems are able to analyze vehicle activities and give full and precise descriptions based on the results of the motion detection and tracking processes. This, in turn, facilitates daily traffic management and allows an instant response when abnormal events occur, so that an advanced, viable surveillance system can be developed.

The purpose of this paper is to investigate the problem of automatic traffic accident recognition and to develop a real-time framework for it. The proposed framework performs the following major functions: first, it detects the accident as it develops. Then, it traces the accident vehicle and records its trajectory during the accident period. This captured information, recorded by the system, is valuable and can provide guidance for investigators in determining accident causes and follow-up action.
Furthermore, from the abnormal traffic behavior of a vehicle, the system is able to predict the probability of an accident occurring and give a warning signal that may help to avoid the accident.

The rest of the paper is structured as follows. Section 2 presents related literature. In Section 3, the proposed framework of automatic traffic accident recognition is described in detail. In Section 4, the experimental results are reported and the performance of the approach is compared with that of recently reported techniques. Finally, in Section 5, conclusions are drawn and future directions are discussed.

2. Related Work

Over the last few years, researchers in the computer vision and image processing fields have taken a growing interest in traffic accident detection [4,5]. As a result, several approaches (with varying degrees of sophistication and success) have been developed to address this problem [6-10]. In [11], the authors propose a system for automatic incident detection that aims to distinguish between different types of incidents. In [12], Hu et al. propose a probabilistic model for detecting traffic accidents using a fuzzy self-organizing neural network. Additionally, Kimachi et al. [13] focus on studying the abnormal vehicle behavior that causes an incident. Their work is based on the concepts of fuzzy theory, and the decision whether an accident occurs or not relies on the behavioral abnormality of some consecutive image shots. Zeng et al. [14] developed a technique for automatic incident detection using D-S evidence theory data fusion based on the probabilistic output of a multi-class SVM. However, the results of most of the methods mentioned above are still unsatisfactory, and further efforts are needed to develop them.

3. Proposed Approach

In this section, the proposed traffic accident recognition framework is presented. Our main goal is to develop a simple, fast technique for traffic accident recognition that can operate efficiently under real-time constraints. To achieve real-time speed, various steps are taken. The real 24-bit color video images are converted to monochromatic video images. The 640 × 480 digitized image is averaged and sub-sampled to a resolution of 320 × 240. The directions of the flow gradient are measured using a simple 8-bin histogram. With these steps, the total processing time is 45 ms per frame. Furthermore, we want the detection process to be relatively tolerant to changes in lighting conditions. The approach is designed to be robust and to yield a favorable accuracy-speed trade-off. An outline of the proposed traffic accident recognition system is shown in Figure 1.

3.1. Optical Flow Estimation

The measurement of optical flow is a crucial step in the processing of video sequences and is used in performing a wide variety of tasks. However, accurate estimation of optical flow is a challenging task, which often requires addressing difficult energy optimization problems. In this work, optical flow is estimated based on the following hypotheses (see [15]).

1) Brightness constancy. The brightness of a pixel does not change as it is tracked from frame to frame.
2) Temporal persistence or “small movements”. The temporal increments are fast enough compared to the scale of motion in the image. This means the object does not move much from frame to frame.
3) Spatial coherence. Adjacent points belonging to the same surface in a scene have similar motion, and project to nearby points on the image plane.
Now, considering the above-mentioned hypotheses, the intensity function $I(x, y, t)$ satisfies the following differential equation

$$\frac{dI(x, y, t)}{dt} = 0 \tag{1}$$

Equation (1) can be expanded into the following partial differential equation

$$\frac{\partial I}{\partial x}\frac{dx}{dt} + \frac{\partial I}{\partial y}\frac{dy}{dt} + \frac{\partial I}{\partial t} = 0 \tag{2}$$

Replacing the differential terms with $I_x$, $I_y$, $I_t$, $u$, $v$ gives

$$I_x u + I_y v + I_t = 0 \tag{3}$$

Equation (3) can be written as a matrix equation as follows

$$(\nabla I)^T \cdot f = -I_t \tag{4}$$

where $\nabla I = [I_x, I_y]^T$ and $f = [u, v]^T$.

Equation (4) is a single equation in two unknowns, $u$ and $v$, and cannot be solved as such. To find the optical flow, another set of equations is needed, which can be obtained from an additional constraint. The solution as given by [15] is a non-iterative method which assumes a locally constant flow. As an improvement over the original Lucas-Kanade method, the algorithm is carried out iteratively in a coarse-to-fine manner, in such a way that the spatial derivatives are first computed at a coarse scale, and then iterative updates are successively computed at finer scales.

Figure 1. An outline of the proposed traffic accident recognition framework.

3.2. Feature Extraction

HOG (Histogram of Oriented Gradient), first proposed by Dalal and Triggs in 2005 [16], is a feature descriptor originally used for pedestrian detection in static imagery. This technique counts occurrences of gradient orientation in localized portions of an image. In this work, the original HOG algorithm is utilized, but with some adaptation to make it appropriate for dealing with the flow field. Such a modified version of HOG is called HFG (Histogram of Flow Gradient). The HFG algorithm is very similar to that of HOG, but differs in that HFG runs locally on the optical flow field in motion scenes (see Figure 2(a)). Furthermore, the implementation of HFG is computationally faster than that of its HOG counterpart.
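As a rough illustration of how such a histogram could be built, the following Python sketch bins flow vectors by orientation into 8 bins covering $(-\pi, \pi)$, using the flow magnitude and angle of Equations (5) and (6). It is a minimal sketch under stated assumptions, not the authors' implementation: the function name `flow_histogram`, the magnitude-weighted voting (borrowed from HOG), the `min_magnitude` cut-off and the normalization to unit sum are all hypothetical choices that the paper does not specify. The two-argument arctangent is used so that the angle covers the full circle.

```python
import math


def flow_histogram(flow_vectors, n_bins=8, min_magnitude=1e-6):
    """Orientation histogram of optical-flow vectors (illustrative only).

    flow_vectors: iterable of (u, v) flow components.
    Returns a list of n_bins values that sum to 1, or all zeros when no
    vector is longer than min_magnitude.
    """
    hist = [0.0] * n_bins
    bin_width = 2.0 * math.pi / n_bins
    for u, v in flow_vectors:
        m = math.hypot(u, v)              # flow magnitude, Eq. (5)
        if m < min_magnitude:             # assumption: skip near-static pixels
            continue
        phi = math.atan2(v, u)            # quadrant-aware angle in [-pi, pi]
        idx = int((phi + math.pi) / bin_width) % n_bins
        hist[idx] += m                    # assumption: magnitude-weighted vote
    total = sum(hist)
    if total == 0.0:
        return hist
    return [h / total for h in hist]


if __name__ == "__main__":
    # Two vectors pointing roughly right, one pointing roughly left.
    print(flow_histogram([(1.0, 0.1), (2.0, 0.2), (-1.0, -0.1)]))
```

Replacing the magnitude-weighted vote with a plain count (`hist[idx] += 1`) gives an occurrence histogram instead; which variant is closer to the paper's HFG is not stated in the text.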
The magnitude and the angle of the optical flow required to construct the HFG are determined by:

$$m(u, v) = \sqrt{u^2 + v^2} \tag{5}$$

$$\varphi(u, v) = \arctan\frac{v}{u} \tag{6}$$

where $m$ and $\varphi$ are the magnitude and the angle of the flow velocity, respectively. For $\varphi$ to cover the full range used below, the quadrant of $(u, v)$ has to be taken into account when evaluating the arctangent. The orientation of flow is represented by an 8-bin histogram of gradient orientations in the range $(-\pi, \pi)$, as depicted in Figure 2(b).

Figure 2. Simple illustration of the orientation histogram of flow: (a) flow gradients, (b) 8-bin HFG.

3.3. Classification

In this section, the automatic classification stage of the proposed system is described, together with some adaptations that make the system robust against gradual and sudden changes in the scene. Using the features extracted in the previous step, the location of the center of gravity of each pattern is given by

$$\varsigma_i = \frac{1}{h_i} \sum_j \upsilon_j^{(i)} \tag{7}$$

where $\upsilon_j^{(i)} \in R^2$ and $h_i$ are the flow vectors belonging to pattern $i$ and the histogram of that pattern, respectively. Then the Euclidean distance metrics (EDMs) between each pair of patterns are calculated:

$$\lambda_{ij} = \lVert \varsigma_i - \varsigma_j \rVert \quad \forall\, i \neq j \tag{8}$$

The distances are then normalized to obtain a quantitative parameter on which classification is based, since the normalized distance is a characteristic quantity not easily influenced by sudden changes. Given the normalized distance $\hat{\lambda}_{ij}$ between each two patterns $i$ and $j$, it is possible to define the probability $p_{ij}$ that determines the relationship between different patterns using a sigmoidal mapping as follows

$$p_{ij} = p\left(\varsigma_i = \varsigma_j \mid \hat{\lambda}_{ij} = \delta\right) = \frac{2}{1 + e^{\alpha\delta}} \tag{9}$$

where $\delta$ is the observed distance value. The parameter $\alpha$ is determined using the well-known logistic regression technique. Statistically speaking, logistic regression (sometimes termed the logistic model or logit model) is used for predicting the probability of occurrence of an event by fitting data to a logistic curve. It is a generalized linear model used for binomial regression.
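To make the role of $\alpha$ concrete, the following Python sketch evaluates a sigmoidal mapping of the form $p = 2 / (1 + e^{\alpha\delta})$ and chooses $\alpha$ from labelled distance samples. It is a sketch under stated assumptions, not the authors' procedure: the form of the mapping is read from Equation (9), the normalized distances are assumed to lie in $[0, 1]$, the names `pair_probability`, `log_likelihood` and `fit_alpha` are hypothetical, and a coarse grid search over the Bernoulli log-likelihood stands in for a full logistic regression fit.

```python
import math


def pair_probability(delta, alpha):
    """Map a normalised distance delta >= 0 to a probability in (0, 1]."""
    return 2.0 / (1.0 + math.exp(alpha * delta))


def log_likelihood(alpha, samples, eps=1e-12):
    """Bernoulli log-likelihood of (delta, same_pattern) samples."""
    total = 0.0
    for delta, same in samples:
        # Clamp to avoid log(0) when delta is exactly 0 or very large.
        p = min(max(pair_probability(delta, alpha), eps), 1.0 - eps)
        total += math.log(p) if same else math.log(1.0 - p)
    return total


def fit_alpha(samples, alphas=None):
    """Pick alpha by grid search (a stand-in for logistic regression)."""
    if alphas is None:
        alphas = [0.1 * k for k in range(1, 201)]  # assumed range 0.1 .. 20
    return max(alphas, key=lambda a: log_likelihood(a, samples))


if __name__ == "__main__":
    # Hypothetical training pairs: small distances belong to the same pattern.
    data = [(0.05, True), (0.10, True), (0.60, False), (0.90, False)]
    alpha = fit_alpha(data)
    print(alpha, pair_probability(0.05, alpha), pair_probability(0.90, alpha))
```

With this form, a distance of zero maps to probability 1 and the probability decays toward 0 as the distance grows, at a rate set by $\alpha$.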
The logistic model, like many forms of regression analysis, makes use of several predictor variables that may be either numerical or categorical (for more details see [17]). The orientation mean is considered and combined with the probabilities obtained from the above mapping. We enforce some restrictions on the classification process by adjusting the pairwise probabilities. For example, the pattern pair probability is set to zero if the pattern density is too small (in the evaluations, a ratio of 0.2 was used as the limit). The pairwise probabilities obtained are finally compared with a threshold to determine the state of each vehicle and then to decide whether an accident has occurred, is likely to occur, or not.

4. Experimental Results

In this section, the experiments undertaken to evaluate the proposed framework are described, and the results that confirm the feasibility of the proposed system are shown. All the algorithms of the proposed system have been implemented using Visual Studio 2008 and OpenCV, and the software has been executed on a Pentium 4 (2.83 GHz) computer running Windows Vista. Due to the difficulty (and danger) of capturing or simulating traffic accidents in real scenes, it was only possible to carry out the experiments on a relatively limited number of real traffic accident scenes. The proposed framework has been tested on a set of 45 video streams depicting a total of over 250 real scenes of traffic accidents or abnormal vehicle events captured by traffic surveillance cameras. All these data were collected from Internet sites and supplied free of charge. The data comprise a wide variety of road types such as straight roads, curves, ramps, crossings, and bridges, and also involve many vehicle events, including turning left, turning right, entering, and leaving.
In order to quantitatively evaluate the performance of the proposed framework in recognizing traffic accidents, we have used a receiver operating characteristic (ROC) curve that defines the relationship between the detection rate (DR) and the false-alarm rate (FAR), which are given by

$$\mathrm{DR} = \frac{\#\ \text{correctly recognized accidents}}{\text{Total}\ \#\ \text{of accidents}} \times 100\%$$

$$\mathrm{FAR} = \frac{\#\ \text{falsely recognized accidents}}{\text{Total}\ \#\ \text{of non-accidents}} \times 100\%$$

The symbol # used above means “the number of”. Figure 3 shows the interpolated ROC curve of the proposed recognition system. According to Figure 3, the performance of the proposed system is very promising. The recognition rate of the system reaches up to 99.6% with a false alarm rate of 5.2%. Moreover, this performance meets or exceeds that of recently reported methods [9,14] in terms of recognition rate and false alarm rate. The example shown in Figure 4 illustrates how the proposed system could successfully recognize the occurrence of a highway traffic accident and then track and record the trajectory of the vehicle that caused the accident. Finally, the proposed accident recognizer can run comfortably at 25 fps on average (using a 2.8 GHz Intel dual core machine with 4 GB RAM running Microsoft Windows Vista). This timing performance enables our method to provide delay guarantees to real-time surveillance and traffic monitoring applications.

Figure 3. ROC curve for the proposed automatic traffic accident recognition framework.

Figure 4. An example of highway traffic accident recognition. The first row depicts three camera shots of the accident vehicle: (a) before the accident, (b) beginning of the accident, and (c) end of the accident. The second row shows the clustering results.

5. Conclusions and Future Work

In this paper, a new framework for real-time automated traffic accident recognition has been introduced.
The framework is based on the flow gradient histogram and statistical logistic regression. The results of the experiments conducted have validated the effectiveness and efficiency of this framework, and it matches or outperforms the best reported results on automatic traffic accident recognition. Future work will be along two main axes. The first will be the further improvement of the classification stage by using advanced machine learning algorithms that help in improving the overall recognition efficiency of the proposed approach. The second will look at the possibility of applying the approach to other applications that consider spatio-temporal features, such as human event recognition, crowd behavior analysis, and tracking of an individual in crowded scenes.

6. Acknowledgements

This work is supported by the Transregional Collaborative Research Centre SFB/TRR 62 “Companion-Technology for Cognitive Technical Systems” funded by DFG, and by the BMBF Bernstein-Group (FKZ: 01GQ0702). The doctoral scholarship provided by the University of Sohag (Egypt) to the first author is also gratefully acknowledged.

REFERENCES

[1] C. Thompson, J. White, B. Dougherty, A. Albright and D. C. Schmidt, “Using Smartphones and Wireless Mobile Networks to Detect Car Accidents and Provide Situational Awareness to Emergency Responders,” 3rd International ICST Conference on MOBILe Wireless MiddleWARE, Operating Systems, and Applications, Mobilware, Chicago, 30 June-2 July 2010.
[2] S. Sadek, A. Al-Hamadi, B. Michaelis and U. Sayed, “Real-Time Automatic Traffic Accident Recognition Using HFG,” Proceedings of the 20th International Conference on Pattern Recognition (ICPR 10), Istanbul, Turkey, 2010, pp. 3348-3351.
[3] Y.-H. Wen, T.-T. Lee and H.-J. Cho, “Hybrid Models Toward Traffic Detector Data Treatment and Data Fusion,” Proceedings of the IEEE Conference on Networking, Sensing and Control, 2005, pp. 525-530.
[4] C. F. Lai, C. Y. Liu, S.-Y. Chang and Y. M. Huang, “Portable Automatic Conjecturing and Announcing System for Real-Time Accident Detection,” International Journal on Smart Sensing and Intelligent Systems, Vol. 2, No. 2, June 2009.
[5] M. Meler, “Car Color and Logo Recognition,” CSE 190A Projects in Vision and Learning, University of California, 2006.
[6] D. Srinivasan, W. H. Loo and R. L. Cheu, “Traffic Incident Detection Using Particle Swarm Optimization,” Proceedings of the IEEE International Intelligence Symposium, 2003, pp. 144-151.
[7] D. Srinivasan, R. L. Cheu and Y. P. Poh, “Hybrid Fuzzy Logic-Genetic Algorithm Technique for Automated Detection of Traffic Incidents on Freeways,” Proceedings of the IEEE Intelligent Transportation Systems Conference, Oakland, 2002, pp. 352-357.
[8] S. M. Tang, X. Y. Gong and F. Y. Wang, “Traffic Incident Detection Algorithm Based on Non-parameter Regression,” Proceedings of the IEEE 5th Intelligent Transportation Systems Conference, Singapore, 2002, pp. 714-719.
[9] Y. Murai, H. Fujiyoshi and M. Kazui, “Incident Detection based on Dynamic Background Modeling and Statistical Learning using Spatio-temporal Features,” Proceedings of the MVA 2009 IAPR Conference on Machine Vision Applications, Yokohama, Japan, 2009, pp. 156-161.
[10] M. J. Cassidy, S. B. Anani and J. M. Haigwood, “Study of Freeway Traffic near an Off-Ramp,” Transportation Research Part A: Policy and Practice, Vol. 36, 2002, pp. 563-572.
[11] H. Ikeda, T. Matsuo, Y. Kaneko and K. Tsuji, “Abnormal Incident Detection System Employing Image Processing Technology,” Proceedings of the IEEE Conference Vehicle Navigation and Information Systems, Tokyo, Japan, 1999, pp. 748-752.
[12] W. Hu, X. Xiao, T. Tan and S. Maybank, “Traffic Accident Prediction Using 3-D Model-Based Vehicle Tracking,” IEEE Transactions on Vehicular Technology, Vol. 53, 2004, pp. 677-693.
[13] M. Kimachi, K. Kanayama and K. Teramoto, “Incident Prediction by Fuzzy Image Sequence Analysis,” Proceedings of the IEEE International Conference Vehicle Navigation and Information Systems (VNIS'94), 1994, pp. 51-57.
[14] D. Zeng, J. Xu and G. Xu, “Data Fusion for Traffic Incident Detection Using D-S Evidence Theory with Probabilistic SVMs,” Journal of Computers, Vol. 3, 2008, pp. 36-43.
[15] B. D. Lucas and T. Kanade, “An Iterative Image Registration Technique with an Application to Stereo Vision,” Proceedings of Imaging Understanding Workshop, 1981, pp. 121-130.
[16] N. Dalal and B. Triggs, “Histograms of Oriented Gradients for Human Detection,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Vol. 2, 2005, pp. 886-893.
[17] J. M. Hilbe, “Logistic Regression Models,” Chapman & Hall/CRC Press, London, 2009.