Circuits and Systems

Vol.5 No.8(2014), Article ID:48615,7 pages

DOI:10.4236/cs.2014.58022

Real-Time Lane Detection for Driver Assistance System

Takialddin Al Smadi

Department of Communications and Electronics Engineering, College of Engineering, Jerash University, Jerash, Jordan

Email: dsmadi@rambler.ru

Copyright © 2014 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 25 May 2014; revised 30 June 2014; accepted 10 July 2014

ABSTRACT

Traffic problems are becoming more serious as the number of vehicles grows. Most road accidents are caused by driver carelessness. To reduce the number of traffic accidents and improve the safety and efficiency of traffic, researchers and companies around the world have for many years conducted studies on intelligent transport systems (ITS). An intelligent vehicle (IV) system is a component of ITS designed to help drivers perceive dangerous situations in advance, so that accidents can be avoided, by sensing and understanding the environment around the vehicle. This paper proposes an architecture for a driver assistance system based on image processing technology. To predict possible lane departure, a camera mounted on the windshield of the car captures the layout of the road, and the system determines the position of the vehicle relative to the lane lines. The resulting sequence of images is analyzed and processed by the proposed system, which automatically detects the lane lines. The results show that the proposed system works well in a variety of settings; in addition, the system is computationally inexpensive and responds in almost real time.

Keywords: Traffic, Lane Detection, Image Processing, Intelligent Vehicle, Intelligent Transport Systems

1. Introduction

Developing a driver assistance system is very important in the context of road conditions. A driver may find it difficult to control the vehicle because of sudden potholes, bumps, or sudden turns where road signs are not prominent or are often missing. Given a motion camera and an onboard computer integrated with the vehicle, a simple driver guidance system can be developed that analyzes the video frame by frame and generates alarm signals accordingly, making driving considerably easier.

An intelligent vehicle system is a component of the ITS that is designed to help drivers perceive dangerous situations in advance, so that accidents can be avoided, by sensing and understanding the environment around the vehicle [1] [2] .

To date there have been numerous studies of lane recognition. Some authors start from a bird's-eye image of a planar road obtained by inverse perspective mapping, which removes the perspective effect, and then retrieve the lane markers based on constraints such as the lane marker width.

Traffic accidents have become one of the most serious problems, and most of them happen because of driver negligence. Rash and negligent driving puts other drivers and passengers on the road in danger. Many accidents could be avoided if such dangerous driving conditions were detected early and other drivers were warned. On most roads, cameras and speed sensors are used to monitor and identify drivers who exceed the permissible speed limit on roads and motorways. This approach is simplistic and has limitations: if drivers slow down near the speed detectors, they are not detected, even though they exceeded the allowed speed elsewhere. Traditional methods [3] also have disadvantages. For example, an algorithm that performs well on well-structured roads may work poorly on flat roads, while an algorithm suited to roads in rural areas may not be suitable for other road types. More to the point, edge-based or intensity-based methods fail on flat roads for lack of obvious edges or markings with vivid intensity. On the other hand, color-based or texture-based methods do not hold for such roads, because the color and texture of a single lane differ little from those of the next lane.

The aim of this work is to build on these promising research results and further explore this problem. A reliable and efficient on-board lane detection system for an intelligent vehicle based on monocular vision should usually address the following aspects:

1) The road types to be handled, including straight, curved, painted, and unpainted roads.

2) Shadows and other artifacts produced by trees, buildings, bridges, or other vehicles.

3) A computational complexity reasonable enough for an embedded processor.

In recent years, much research in the intelligent transportation system (ITS) community [4] has been devoted to the topic of lane departure warning (LDW) [5] -[7] .

Many of the highway deaths each year are attributed to lane departure of the vehicle. Many automobile manufacturers are developing advanced driver assistance systems, many of which include subsystems that help prevent unintended lane departure. A consistent approach among these systems is to warn the driver when an unintended lane departure is predicted.

To predict possible lane departure, the vision system detects the lane markings on the road and determines the orientation and position of the detected lane line.

2. Methodology

The following methodology was adopted to develop the system.

2.1. Lane Detection

The system sends various warnings when the vehicle departs from the lane under certain conditions:

- Departing from the lane or getting too close to the lane marker triggers an audio beep.

- The LDWS sounds an alert when the vehicle is within 0.5 ft of the lane marker.

- If the vehicle stays on the lane marking, the beep repeats every 2 seconds.
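The warning rules above can be illustrated with a minimal sketch. The class name `LaneWarning`, the `update` method, and the assumption that the distance to the nearest lane marker is already available in feet are all hypothetical; only the 0.5 ft alert distance and the 2-second repeat interval come from the text.

```python
from dataclasses import dataclass
from typing import Optional

ALERT_DISTANCE_FT = 0.5   # alert distance stated in the text
REPEAT_INTERVAL_S = 2.0   # beep repeat interval stated in the text


@dataclass
class LaneWarning:
    """Decides when to beep, given the distance to the nearest lane marker."""
    last_beep_time: Optional[float] = None

    def update(self, distance_ft: float, now_s: float) -> bool:
        """Return True if a beep should sound at time now_s (in seconds)."""
        if distance_ft > ALERT_DISTANCE_FT:
            # Safely inside the lane: reset so the next approach beeps at once.
            self.last_beep_time = None
            return False
        if (self.last_beep_time is None
                or now_s - self.last_beep_time >= REPEAT_INTERVAL_S):
            self.last_beep_time = now_s
            return True
        return False
```

Calling `update` once per processed frame is enough; the repeat interval is measured in time rather than in frames, so the behaviour does not depend on the frame rate.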

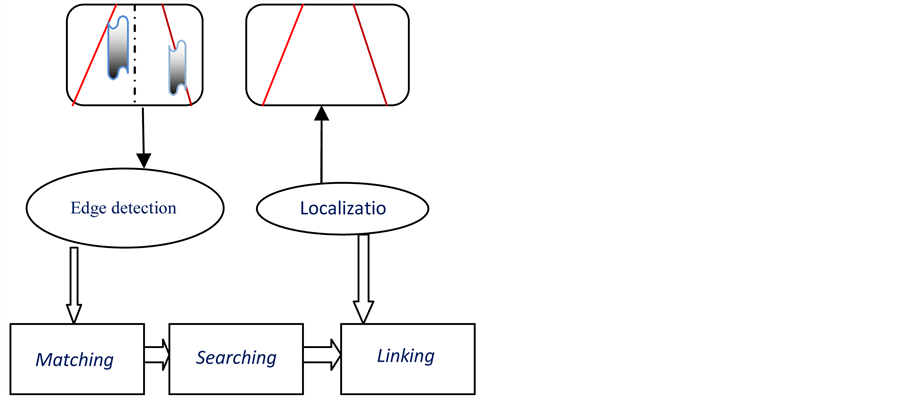

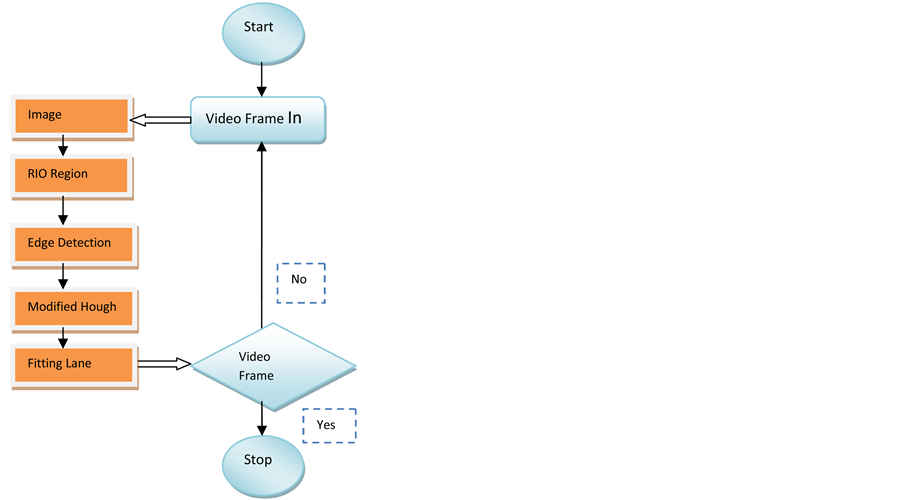

The actual lane lines on roads are often affected by degradation factors such as shade, water, and pavement cracks, which make it difficult to achieve high performance and reliability in the detection process, so the algorithm needs to be optimized. Lane detection is a vital operation in most of these applications; the scheme is depicted in Figure 1.

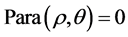

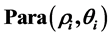

In most cases, lanes appear as well-defined, straight-line features on the image especially in highways, or as curves that can be approximated by smaller straight lines. The linear HT (Hough transform), a popular line detection algorithm, is widely used for lane detection [8] . The HT [9] is a parametric representation of points in the edge map. It consists of two steps, “voting” and “peak detection” [10] .

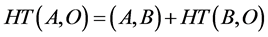

Figure 1. Block diagram of lane detection.

In the process of voting, every edge pixel $(x, y)$ is transformed to a sinusoidal curve by applying the following:

$$\rho = x\cos\theta + y\sin\theta \tag{1}$$

where $\rho$ is the length of the perpendicular from the origin to a line passing through $(x, y)$, and $\theta$ is the angle made by the perpendicular with the $x$-axis, as shown in Figure 2. The resulting values are accumulated using a 2-D array, with the peaks in the array indicating straight lines in the image. The process of peak detection involves analysis of the accumulator array to detect straight lines [11] [12] .
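As an illustration of the voting and peak detection steps, the following is a minimal sketch of the conventional Hough transform of Equation (1), not the modified method proposed in this paper. The function names, the 1-degree angular resolution, and the 1-pixel resolution in the perpendicular distance are assumptions made for the example.

```python
import numpy as np


def hough_vote(edge_map, n_theta=180):
    """Accumulate rho = x*cos(theta) + y*sin(theta) for every edge pixel."""
    height, width = edge_map.shape
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    rho_max = int(np.ceil(np.hypot(height, width)))   # largest possible |rho|
    accumulator = np.zeros((2 * rho_max + 1, n_theta), dtype=np.int32)
    cols = np.arange(n_theta)
    ys, xs = np.nonzero(edge_map)
    for x, y in zip(xs, ys):
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        accumulator[rhos + rho_max, cols] += 1         # offset so negative rho fits
    return accumulator, thetas, rho_max


def strongest_line(accumulator, thetas, rho_max):
    """Peak detection: return (rho, theta) of the cell with the most votes."""
    rho_idx, theta_idx = np.unravel_index(np.argmax(accumulator),
                                          accumulator.shape)
    return int(rho_idx) - rho_max, float(thetas[theta_idx])
```

Each edge pixel costs one cosine, one sine, and two multiplications per angle, which is the per-pixel cost that motivates faster variants.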

The high computational time incurred by conventional Hough voting, attributed to the trigonometric operations and multiplications in (1) applied to every pixel in the edge map, makes it unsuitable for direct use in lane detection, which demands real-time processing. Hierarchical pyramidal approaches have been proposed in [13] -[15] to speed up the HT computation process through parallelism. The hierarchical approaches in [16] filter the candidates to be promoted to the higher levels of the hierarchy by thresholding the accumulation spaces. For each candidate that qualifies, they perform a complete HT computation again using (1). Hence, although the hierarchical approaches speed up the HT by parallelizing the process, additional costs are incurred for recomputing the HT at every level.

These increased computational costs are not desirable in embedded applications like lane detection in vehicles, where computational resources are limited. In this paper, a modified approach is proposed to accelerate the HT process in a computationally efficient manner, thereby making it suitable for real-time lane detection. The proposed method is applied to straight lane detection and is shown to give good results, with significant savings in computation cost.

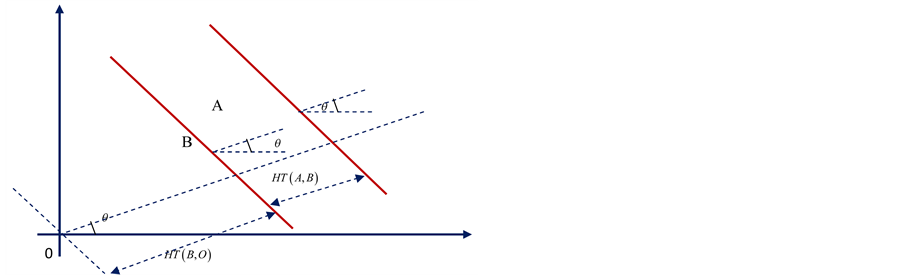

2.2. Hough Transforms

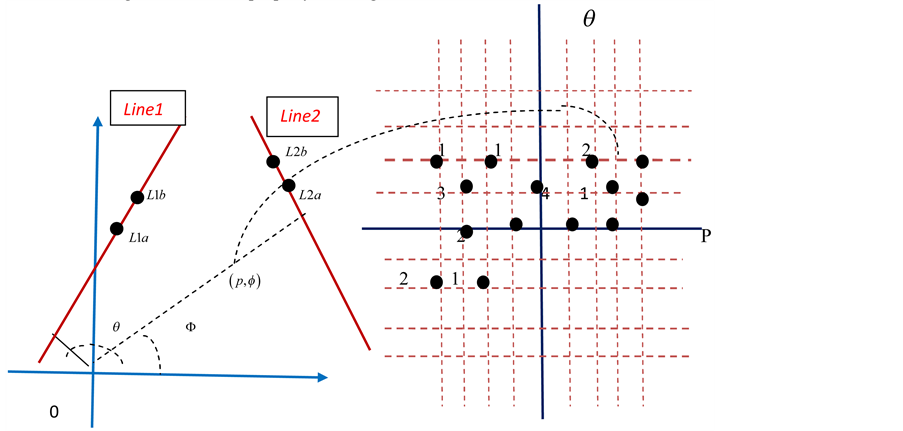

According to the additive property of the HT (Hough transform), as shown in Figure 4, the HT of point A with respect to the image origin O is equal to the sum of the HT of A with respect to any intermediate point B and the HT of B with respect to O, i.e.,

$$\rho_{A,O}(\theta) = \rho_{A,B}(\theta) + \rho_{B,O}(\theta) \tag{2}$$

where $\rho_{P,Q}(\theta)$ represents the HT of point $P$ with respect to point $Q$.

For the definitions of the other parameters, see Table 1.

The HT of a point A with respect to a global origin O can be broken into two parts: the HT of that point A with respect to a local origin B, and the HT of the local origin B with respect to the global origin O.
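The additive property follows directly from the linearity of Equation (1) in the pixel coordinates, and it can be checked numerically. The sketch below is illustrative only; the helper name `hough_rho` and the sample coordinates are hypothetical.

```python
import math


def hough_rho(point, origin, theta):
    """Perpendicular distance term of Equation (1), measured from `origin`."""
    dx = point[0] - origin[0]
    dy = point[1] - origin[1]
    return dx * math.cos(theta) + dy * math.sin(theta)


O = (0.0, 0.0)      # global (image) origin
B = (100.0, 40.0)   # local origin, e.g. the corner of an image block
A = (120.0, 45.0)   # edge pixel inside that block

for deg in range(0, 180, 15):
    theta = math.radians(deg)
    direct = hough_rho(A, O, theta)
    split = hough_rho(A, B, theta) + hough_rho(B, O, theta)
    assert math.isclose(direct, split, abs_tol=1e-9)
```

One reading of why this helps is that the term for B can be computed once per block and reused for every pixel in it, while the term for A relative to B involves only small offsets.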

The count is increased from 1 to 2.

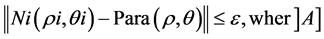

2.3. Fitting Lane

After the process of edge detection, the lane line identification process is as follows.

Figure 2. The relation of lane line and Hough transform.

Figure 3. The flow chart of the lane detection.

There are parameter arrays storing two types of parameters, whose size equals the number of pixels in each ROI region, as shown in Figure 4.

Step 1: Clear all parameter arrays; the stored parameters are real numbers.

Step 2: Read in all pixels from the left and right simultaneously. Randomly select two points in Line 1 and Line 2, respectively.

Figure 4. The additive property of the Hough transform.

Step 3: Select two points, L1a and L1b, randomly from Line 1, as shown in Figure 5. Solve the two line parameters from the two points L1a and L1b. The resulting number pair is a combination of the above solution. The same procedure is repeated for Line 2.

Step 4: Search the parameter space for the number pair from Step 3. The search uses the norm of A, the whole parameter space, and a small default value.

If a number pair exists in the parameter space such that the norm between it and the new pair is within the small default value, the value of its count is incremented by 1.

If the norm between the two pairs is more than the small default value, insert the new pair and initialize its count to 1.

Step 5: After all points in Line 1 and Line 2 have been traversed through Step 3 and Step 4, all the elements of the parameter space are set up.

If the accumulated value is more than the threshold after the setup of the parameter space, the road lane exists, and the corresponding number pair is kept for the next step to draw the corresponding lane.

If the value is less than the threshold, the road lane does not exist.

Step 6: According to the number pair obtained from Step 5, a straight line is drawn on the frame. The parameter space is used for lane prediction.
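Steps 3 to 5 can be sketched roughly as follows. This is only one possible reading of the procedure: the choice of the $(\rho, \theta)$ parameterization of Equation (1), the Euclidean norm over pixels and degrees, the tolerance `delta`, and all function names are assumptions, since the original symbols are not recoverable from the text.

```python
import math
import random


def line_params(p1, p2):
    """Solve (rho, theta in degrees) of the line through two distinct points."""
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    theta = math.atan2(-dx, dy) % math.pi      # angle of the normal, in [0, pi)
    rho = p1[0] * math.cos(theta) + p1[1] * math.sin(theta)
    return rho, math.degrees(theta)


def accumulate(points, n_samples, delta=2.0, rng=None):
    """Build the parameter space as a list of [rho, theta_deg, count] entries."""
    rng = rng or random.Random(0)
    space = []
    for _ in range(n_samples):
        p1, p2 = rng.sample(points, 2)         # Step 3: two random points
        rho, theta = line_params(p1, p2)
        for entry in space:                    # Step 4: search the parameter space
            if math.hypot(entry[0] - rho, entry[1] - theta) < delta:
                entry[2] += 1                  # close pair found: increment count
                break
        else:
            space.append([rho, theta, 1])      # no close pair: insert with count 1
    return space


def detect_lane(space, threshold):
    """Step 5: return the best (rho, theta_deg) if its count exceeds the threshold."""
    if not space:
        return None
    best = max(space, key=lambda entry: entry[2])
    return (best[0], best[1]) if best[2] > threshold else None
```

The sketch ignores the wrap-around of the angle near 0 and 180 degrees, which a practical implementation would need to handle for near-vertical lines.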

2.4. Lane Departure Prediction

Generally speaking, the deviation in lane position obtained from two or more initial detection passes is small, so the position of the lanes is predictable. After the lane recognition process, the prediction of lane departure starts.

To reduce computation, the departure prediction is made only in even frames.

If the monitored value of the left lane line is more than a departure threshold for 10 consecutive even frames, a departure starts. If the monitored value of the right lane line is less than a departure threshold for 10 consecutive even frames, a departure also starts.
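The departure rule can be expressed as a pair of run-length counters. In the sketch below the lane-line quantity being compared is treated as a generic scalar, because the text does not make clear which parameter is monitored; the class name, argument names, and threshold values are hypothetical. Only the use of even frames and the run of 10 frames come from the text.

```python
class DeparturePredictor:
    """Flags a departure after `required` consecutive even frames beyond a threshold."""

    def __init__(self, left_threshold, right_threshold, required=10):
        self.left_threshold = left_threshold
        self.right_threshold = right_threshold
        self.required = required
        self.left_run = 0    # consecutive even frames with left value above threshold
        self.right_run = 0   # consecutive even frames with right value below threshold

    def _departing(self):
        return self.left_run >= self.required or self.right_run >= self.required

    def update(self, frame_index, left_value, right_value):
        """Return True while a departure is predicted; odd frames are skipped."""
        if frame_index % 2 != 0:
            return self._departing()
        self.left_run = self.left_run + 1 if left_value > self.left_threshold else 0
        self.right_run = self.right_run + 1 if right_value < self.right_threshold else 0
        return self._departing()
```

Resetting a counter as soon as one even frame falls back within the threshold means that a single noisy detection does not trigger a warning.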

3. Experimental Results

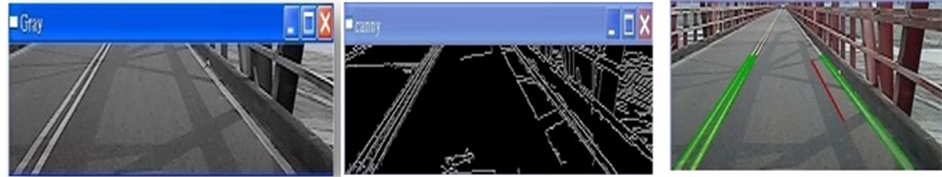

The experiment was run in the OpenCV environment.

First, the application reads in the color video frame and transforms each frame into a gray image, as shown in Figure 6(a). The edge image is extracted only from the pixels in the ROI region, which is about 1/3 of a full frame. Restricting processing to the ROI region reduces the computational complexity.

Edges are found from the local difference of neighboring pixels, which is an image gradient processing method. It locates edges accurately and applies to image segmentation with obvious edges. Figure 6(b) depicts the edge line image in the ROI region.
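The gray conversion and local-difference edge extraction can be sketched with NumPy alone. The sketch makes several assumptions that are not stated in the text: the frame is an RGB array, the ROI is the bottom third of the frame, the gray conversion uses the common ITU-R BT.601 weights, and the threshold value is arbitrary.

```python
import numpy as np


def to_gray(frame_rgb):
    """Convert an H x W x 3 color frame to gray levels (BT.601 weights)."""
    weights = np.array([0.299, 0.587, 0.114])
    return frame_rgb.astype(np.float64) @ weights


def roi_edges(gray, threshold=30.0):
    """Edge map of the bottom third of the frame from neighboring-pixel differences."""
    height = gray.shape[0]
    roi = gray[2 * height // 3:, :]                          # assumed ROI: bottom third
    dx = np.abs(np.diff(roi, axis=1, prepend=roi[:, :1]))    # horizontal difference
    dy = np.abs(np.diff(roi, axis=0, prepend=roi[:1, :]))    # vertical difference
    return (dx + dy) > threshold
```

Because only a third of the rows are processed, the number of pixel differences, and of edge pixels passed on to the Hough stage, drops accordingly.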

The modified Hough transform algorithm is applied to set up the parameter space, and the lane prediction is aided by the parameter space. The lane lines are then drawn onto the original frame, as shown in Figure 6(c).

Figure 5. The transform procedure from image space to parameter space.

(a) (b) (c)

Figure 6. (a) After the process of gray image in ROI region; (b) After the process of edge detection in ROI region; (c) Prediction of road lane line.

4. Conclusions

The proposed lane detection algorithm, based on a modified Hough transform combined with a prediction algorithm, detects the lane quickly and stably. The experiment shows that this approach can easily find the appropriate parameter space for the lane. The experimental results presented in this article demonstrate real-time detection and prediction of lanes on a personal computer. The algorithm also performs well at night. The experiment covered 2700 frames, and the accuracy rate reaches 93.8%. The speed of the proposed algorithm is about 0.19 sec/frame, as shown in Table 2: Filtering parameter. At the same accuracy, the speed has been improved greatly. The proposed algorithm has great significance for real-time applications.

References

- Miao, X.D., Li, S.M. and Shen, H. (2012) On-Board Lane Detection System for Intelligent Vehicle Based On Monocular Vision. International Journal on Smart Sensing and Intelligent Systems, 5. http://www.s2is.org/Issues/v5/n4/papers/paper13.pdf

- Houser, A., Pierowicz, J. and Fuglewicz, D. (2005) Concept of Operations and Voluntary Operational Requirements for Lane Departure Warning Systems (LDWS) On-Board Commercial Motor Vehicles. Federal Motor Carrier Safety Administration, Washington DC, Tech. Rep. http://dl.acm.org/citation.cfm?id=1656549

- Alessandretti, G., Broggi, A. and Cerri, P. (2007) Vehicle and Guard Rail Detection Using Radar and Vision Data Fusion. IEEE Transactions on Intelligent Transportation Systems, 8, 95-105. http://www.ce.unipr.it/people/bertozzi/pap/cr/cerridimmelolaprossimavolta.pdf

- Lalimi, M.A., Ghofrani, S. and McLernon, D. (2013) A Vehicle License Plate Detection Method Using Region and Edge Based Methods. Computers & Electrical Engineering, 39, 834-845. http://dx.doi.org/10.1016/j.compeleceng.2012.09.015

- Satzoda, R.K., Suchitra, S. and Srikanthan, T. (2008) Parallelizing Hough Transform Computation. IEEE Signal Processing Letters, 15, 297-300. http://dx.doi.org/10.1109/LSP.2008.917804

- Hardzeyeu, V. and Klefenz, F. (2008) On Using the Hough Transform for Driving Assistance Applications. 4th International Conference on Intelligent Computer Communication and Processing, Shanghai, 28-30 August 2008, 91-98.

- Duda, R.O. and Hart, P.E. (1972) Use of the Hough Transformation to Detect Lines and Curves in Pictures. Communications of the ACM, 15, 11-15.

- Olmos, K., Pierre, S. and Boudreault, Y. (2003) Traffic Simulation in Urban Cellular Networks of Manhattan Type. Computers and Electrical Engineering, 29, 443-461.

- Gonzalez, R.C. and Woods, R.E. (2002) Digital Image Processing. 2nd Edition, New York: Prentice Hall.

- Suchitra, S., Satzoda, R.K. and Srikanthan, T. (2009) Exploiting Inherent Parallelisms for Accelerating Linear Hough Transform Computation. IEEE Transactions on Image Processing, 18, 2255-2264.

- Yoo, H., Yang, U. and Sohn, K. (2013) Gradient-Enhancing Conversion for Illumination-Robust Lane Detection. IEEE Transactions on Intelligent Transportation Systems, 14, 1083-1094.

- Farrell, J.A., Givargis, T.D. and Barth, M.J. (2000) Realtime Differential Carrier Phase GPS-Aided INS. IEEE Transactions on Control Systems Technology, 8, 709-720. http://dx.doi.org/10.1109/87.852915

- Lopez, A., Canero, C., Serrat, J., Saludes, J., Lumbreras, F. and Graf, T. (2005) Detection of Lane Markings Based on Ridgeness and RANSAC. IEEE Conference on Intelligent Transportation Systems, 733-738.

- Wu, B.F., Chen, C.J., Hsu, Y.P. and Chung, M.W. (2006) A DSP-Based Lane Departure Warning System. Mathematical Methods and Computational Techniques in Electrical Engineering, Proc. 8th WSEAS Int. Conf., Bucharest, 240-245.

- Al Smadi, T. (2011) Computing Simulation for Traffic Control over Two Intersections. Journal of Advanced Computer Science and Technology Research, 1, 10-24. http://www.sign-ific-ance.co.uk/dsr/index.php/JACSTR/article/view/38

- Chen, L., Li, Q., Li, M., Zhang, L. and Mao, Q. (2012) Design of a Multi-Sensor Cooperation Travel Environment Perception System for Autonomous Vehicle. Sensors, 12, 12386-12404. http://dx.doi.org/10.3390/s120912386