Journal of Behavioral and Brain Science

Vol.06 No.05(2016), Article ID:66702,8 pages

10.4236/jbbs.2016.65022

Advances in Theory of Neural Network and Its Application

Bahman Mashood¹, Greg Millbank²

¹1250 La Playa Street, 304, San Francisco, USA

²Praxis Technical Group Inc., Nanaimo, Canada

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 12 March 2016; accepted 21 May 2016; published 24 May 2016

ABSTRACT

In this article we introduce a large class of optimization problems that can be approximated by neural networks. Furthermore, for a large category of optimization problems the action of the corresponding neural network reduces to linear or quadratic programming, so the global optimum can be obtained immediately.

Keywords:

Neural Network, Optimization, Hopfield Neural Network, Linear Programming, Cohen and Grossberg Neural Network

1. Introduction

Many problems in industry involve the optimization of a complicated function of several variables. Furthermore, there is usually a set of constraints to be satisfied. The complexity of the function and of the given constraints makes it almost impossible to use deterministic methods to solve the given optimization problem. Most often we have to approximate the solutions. The approximating methods are usually very diverse and particular to each case. Recent advances in the theory of neural networks provide us with a completely new approach. This approach is more comprehensive and can be applied to a wide range of problems at the same time. In the preliminary section we introduce the neural network methods that are based on the works of J. J. Hopfield, Cohen and Grossberg. One can see these results in (section-4) [1] and (section-14) [2]. We use a generalized version of the above methods to find the optimum points for some given problems. The results in this article are based on our common work with Greg Millbank of Praxis group. Many of our products use neural networks of some sort. Our experience shows that by choosing appropriate initial data and weights we are able to approximate the stability points very quickly and efficiently. In section-2 and section-3, we introduce the extension of the Cohen and Grossberg theorem to a larger class of dynamic systems. For a good reference on linear programming, see [3], written by S. Gass. The appearance of a new generation of supercomputers will give neural networks a much more vital role in industry, machine intelligence and robotics.

2. On the Structure and Application of Neural Networks

Neural networks are based on associative memory. We give a content to neural network and we get an address or identification back. Most of the classic neural networks have input nodes and output nodes. In other words every neural networks is associated with two integers m and n. Where the inputs are vectors in  and outputs are vectors in

and outputs are vectors in . neural networks can also consist of deterministic process like linear programming. They can consist of complicated combination of other neural networks. There are two kind of neural networks. Neural networks with learning abilities and neural networks without learning abilities. The simplest neural networks with learning abilities are perceptrons. A given perceptron with input vectors in

. neural networks can also consist of deterministic process like linear programming. They can consist of complicated combination of other neural networks. There are two kind of neural networks. Neural networks with learning abilities and neural networks without learning abilities. The simplest neural networks with learning abilities are perceptrons. A given perceptron with input vectors in  and output vectors in

and output vectors in , is associated with treshhold vector

, is associated with treshhold vector  and

and  matrix

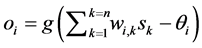

matrix . The matrix W is called matrix of synaptical values. It plays an important role as we will see. The relation between output vector

. The matrix W is called matrix of synaptical values. It plays an important role as we will see. The relation between output vector

and input vector vector

and input vector vector  is given by

is given by , with g a

, with g a

logistic function usually given as  with

with  This neural network is trained using enough number of corresponding patterns until synaptical values stabilized. Then the perceptron is able to identify the unknown patterns in term of the patterns that have been used to train the neural network. For more details about this subject see for example (Section-5) [1] . The neural network called back propagation is an extended version of simple perceptron. It has similar structure as simple perceptron. But it has one or more layers of neurons called hidden layers. It has very powerful ability to recognize unknown patterns and has more learning capacities. The only problem with this neural network is that the synaptical values do not always converge. There are more advanced versions of back propagation neural network called recurrent neural network and temporal neural network. They have more diverse architect and can perform time series, games, forecasting and travelling salesman problem. For more information on this topic see (section-6) [1] . Neural networks without learning mechanism are often used for optimizations. The results of D.Hopfield, Cohen and Grossberg, see (section-14) [2] and (section-4) [1] , on special category of dynamical systems provide us with neural networks that can solve optimization problems. The input and out put to this neural networks are vectors in

This neural network is trained using enough number of corresponding patterns until synaptical values stabilized. Then the perceptron is able to identify the unknown patterns in term of the patterns that have been used to train the neural network. For more details about this subject see for example (Section-5) [1] . The neural network called back propagation is an extended version of simple perceptron. It has similar structure as simple perceptron. But it has one or more layers of neurons called hidden layers. It has very powerful ability to recognize unknown patterns and has more learning capacities. The only problem with this neural network is that the synaptical values do not always converge. There are more advanced versions of back propagation neural network called recurrent neural network and temporal neural network. They have more diverse architect and can perform time series, games, forecasting and travelling salesman problem. For more information on this topic see (section-6) [1] . Neural networks without learning mechanism are often used for optimizations. The results of D.Hopfield, Cohen and Grossberg, see (section-14) [2] and (section-4) [1] , on special category of dynamical systems provide us with neural networks that can solve optimization problems. The input and out put to this neural networks are vectors in  for some integer m. The input vector will be chosen randomly. The action of neural network on some vector

for some integer m. The input vector will be chosen randomly. The action of neural network on some vector  consist of inductive applications of some function

consist of inductive applications of some function  which provide us with infinite sequence

which provide us with infinite sequence
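The two mechanisms described in this section, the perceptron relation between input and output and the inductive application of a function to an initial vector, can be illustrated with a minimal Python sketch. It assumes the common convention in which the threshold is subtracted from the weighted input and the logistic function is $g(u) = 1/(1 + e^{-\beta u})$; the names `logistic`, `perceptron_output`, `iterate_map` and the parameter `beta` are hypothetical and chosen only for this illustration.

```python
import math


def logistic(u, beta=1.0):
    """Logistic squashing function g(u) = 1 / (1 + exp(-beta * u)) (assumed form)."""
    return 1.0 / (1.0 + math.exp(-beta * u))


def perceptron_output(W, theta, x, beta=1.0):
    """Return y with y_i = g(sum_j W[i][j] * x[j] - theta[i]).

    W is an m x n list of rows, theta has length m, x has length n.
    The sign convention (threshold subtracted) is an assumption.
    """
    return [
        logistic(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) - th, beta)
        for row, th in zip(W, theta)
    ]


def iterate_map(f, x0, steps):
    """Return the finite prefix x0, f(x0), f(f(x0)), ... of the iteration."""
    trajectory = [x0]
    for _ in range(steps):
        trajectory.append(f(trajectory[-1]))
    return trajectory


# A perceptron with n = 2 inputs and m = 2 outputs.
W = [[1.0, -1.0], [0.5, 0.5]]
theta = [0.0, 0.5]
y = perceptron_output(W, theta, [1.0, 1.0])

# Inductive application of a simple contraction f(x) = x / 2.
traj = iterate_map(lambda x: [0.5 * v for v in x], [1.0, -2.0], 3)
```

In practice only a finite prefix of the infinite sequence is computed, and the iteration is stopped once successive vectors are close enough to each other.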

3. On the Nature of Dynamic Systems Induced from Energy Functions

In order to solve optimization problems using neural network machinery, we first construct a corresponding energy function E such that the optimum of E coincides with the optimum point of the optimization problem. Next, the energy function E, which is usually positive, will induce the dynamic system

Suppose we are given a dynamic system L as in the following,

Also the above equation can be expressed as

Definition 2.1 We say that the above system L satisfies the commuting condition if for each two indices

This is very similar to the properties of commuting squares in the V. Jones index theory [5].

The advantage of a commuting system, as we will show later, is that each trajectory

In particular, note that if the dynamic system is induced from an energy function E, then the induced neural network

Lemma 2.2 Suppose the dynamic system L has a commuting property. Then there exists a function

Furthermore for every trajectory

Proof. Let us pick an integer

have

And the equality holds, i.e.

But the problem is that the induced

Lemma 2.3 Following the notation as in the above suppose L is a commuting system and that

Proof. Following the definition of a Lyapunov function and using Lemma 2.2, the fact that

There are some cases in which we can choose

Lemma 2.4 Keeping the same notations as in the above, suppose that there exists a number

Proof. Let us define

Lemma 2.5 Let L be a commuting dynamic system. Let

Proof. Set

Suppose L is a commuting dynamic system. Let

Lemma 2.6 Suppose L is a commuting dynamic system and

Proof. Let

complete the proof we have to show that with regard to the trajectory

that

Suppose L is a commuting dynamic system and

Definition 2.6 Keeping the same notation as in the above, for a commuting dynamic system L, we call

canonical if for each critical point

Before proceeding to the next theorem let us set the following notations.

Let

point at which the trajectory

Lemma 2.7 Following the above notations suppose without loss of generality,

Proof. Otherwise for each

Theorem 2.8 Keeping the same notation as in the above, suppose we have a commuting system L, with the induced energy function

Proof. Suppose there is no nontrivial trajectory passing through

Thus, applying the mean value theorem implies

To complete the proof of the Theorem 2.8, we need the following lemma.

Lemma 2.9 Keeping the same notations as in the above then there exists a

Proof. Otherwise there exists a sequence of numbers

indices

This using the fact that

To complete the proof of Theorem 2.8, note that for every point

each of length equal to

points corresponding to the above partitions. Furthermore consider the following countable set of points.

Then the set

Lemma 2.10 Keeping the same notations as in the above, suppose for a given commuting system L the induced energy function

Proof. If

than

Next we can assume that there exists a line

point

Let us set

Now if for each

get that

As a result of the Lemma 2.10 we get that if

At this point we have to mention that nontrivial trajectories give us a much better chance of hitting the global optimum once we perform a random search to locate it.
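The idea of a random search over trajectories can be illustrated with a small Python sketch. It is only a toy under stated assumptions: the one-dimensional energy $E(x) = (x^2 - 1)^2 + 0.3x$, the explicit Euler discretization of $dx/dt = -E'(x)$, and the names `energy`, `follow_trajectory` and `random_search` are all hypothetical choices made for this illustration and do not come from the lemmas above. The energy has a local minimum near $x = 1$ and a lower, global minimum near $x = -1$, so a single trajectory may stop at the wrong critical point while several random starting points are likely to reach the global one.

```python
import random


def energy(x):
    """Toy double-well energy; the well near x = -1 is the deeper one."""
    return (x * x - 1.0) ** 2 + 0.3 * x


def energy_gradient(x):
    """Derivative E'(x) of the toy energy."""
    return 4.0 * x * (x * x - 1.0) + 0.3


def follow_trajectory(x0, step=0.01, n_steps=2000):
    """Euler discretization of dx/dt = -E'(x), returning all visited points."""
    x = x0
    path = [x]
    for _ in range(n_steps):
        x -= step * energy_gradient(x)
        path.append(x)
    return path


def random_search(n_starts, seed=0):
    """Follow trajectories from random starts and keep the lowest-energy end point."""
    rng = random.Random(seed)
    best_x = None
    for _ in range(n_starts):
        x_end = follow_trajectory(rng.uniform(-2.0, 2.0))[-1]
        if best_x is None or energy(x_end) < energy(best_x):
            best_x = x_end
    return best_x


left = follow_trajectory(-1.5)[-1]   # ends in the deeper well
right = follow_trajectory(1.5)[-1]   # ends in the shallower well
best = random_search(20)
```

With a small enough step the energy does not increase along each discretized trajectory, which mirrors the role of the energy as a Lyapunov function.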

For some dynamic systems L expressed in the usual form

where

following as,

Let us denote

It is clear that if

This implies that

that for any trajectory

As we mentioned before, a more general version of the Hopfield dynamic system, called the Cohen and Grossberg dynamic system, is given as follows.

where the set of coefficients

Like system (2), system (3) is not a commuting system. But if we multiply both sides of the i-th equation in system (3) by

4. Reduction of Certain Optimization Problems to Linear or Quadratic Programming

In solving optimization problems using neural networks, we first form an energy function

Given the energy function

As we showed L is a commuting system.
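A numerical check can make this statement concrete. The sketch below assumes that the commuting condition amounts to symmetry of the partial derivatives of the right-hand side, $\partial f_i / \partial x_j = \partial f_j / \partial x_i$, for a system $dx_i/dt = f_i(x)$; this reading is an assumption of the sketch. Under it, any system of the form $f = -\nabla E$ passes the check because the Hessian of E is symmetric, while a rotation field does not. The quadratic energy and the helper names `neg_gradient`, `jacobian` and `max_asymmetry` are hypothetical.

```python
def neg_gradient(A, b):
    """Return f(x) = -(A x - b), the flow induced by E(x) = 0.5 x^T A x - b^T x.

    A is assumed symmetric, so that the gradient of E is A x - b.
    """
    def f(x):
        return [-(sum(a * v for a, v in zip(row, x)) - b_i)
                for row, b_i in zip(A, b)]
    return f


def jacobian(f, x, h=1e-5):
    """Central finite-difference Jacobian J[i][j] = d f_i / d x_j at x."""
    n = len(x)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        x_plus, x_minus = list(x), list(x)
        x_plus[j] += h
        x_minus[j] -= h
        f_plus, f_minus = f(x_plus), f(x_minus)
        for i in range(n):
            J[i][j] = (f_plus[i] - f_minus[i]) / (2.0 * h)
    return J


def max_asymmetry(J):
    """Largest |J[i][j] - J[j][i]|; zero means the assumed condition holds."""
    n = len(J)
    return max(abs(J[i][j] - J[j][i]) for i in range(n) for j in range(n))


grad_flow = neg_gradient([[2.0, 1.0], [1.0, 3.0]], [1.0, 0.0])
rotation = lambda x: [-x[1], x[0]]  # not induced by any energy function

point = [0.3, -0.7]
asym_gradient = max_asymmetry(jacobian(grad_flow, point))
asym_rotation = max_asymmetry(jacobian(rotation, point))
```

The gradient flow gives an asymmetry that vanishes up to rounding, whereas the rotation field gives an asymmetry of about 2.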

As an example, consider the travelling salesman problem. As stated in section 4.2, page 77 of [1], the energy function E is expressed as follows,

where we represent the points to be visited by the travelling salesman as

Thus the dynamic system L corresponding to E can be written as follows,

As we proved the point at which E reaches its optimum is a limiting point

Next as we had,

But

Thus as we showed the system

Now consider the following set of variables

Hence we will have the following set of equations,

Replacing

But

equations together with the optimality of the expression

quadratic linear programming that will give us the optimum value much faster than the usual neural network. In fact, this method can be applied to many types of optimization problems, and it guarantees fast convergence to the desired critical point.
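To make the travelling salesman energy concrete, here is a minimal Python sketch of a Hopfield-Tank style energy of the kind discussed in [1]. It assumes binary variables $n_{ia}$ equal to 1 when city i is visited at stop a, a length term $\frac{1}{2}\sum_{i,j,a} d_{ij}\, n_{ia}(n_{j,a+1} + n_{j,a-1})$, and a penalty $\frac{\gamma}{2}\big[\sum_a (1 - \sum_i n_{ia})^2 + \sum_i (1 - \sum_a n_{ia})^2\big]$; the exact form and notation used by the authors may differ, and the names `tsp_energy`, `tour_to_matrix` and `gamma` are hypothetical.

```python
import math


def tsp_energy(d, n, gamma=1.0):
    """Assumed Hopfield-Tank style energy for a city-by-stop 0/1 matrix n.

    d[i][j] is the distance between cities i and j (with d[i][i] == 0).
    Stops are taken cyclically, so stop N is the same as stop 0.
    """
    N = len(d)
    length_term = 0.0
    for i in range(N):
        for j in range(N):
            for a in range(N):
                neighbours = n[j][(a + 1) % N] + n[j][(a - 1) % N]
                length_term += d[i][j] * n[i][a] * neighbours
    length_term *= 0.5
    # Each stop must hold exactly one city, each city exactly one stop.
    stop_penalty = sum((1 - sum(n[i][a] for i in range(N))) ** 2 for a in range(N))
    city_penalty = sum((1 - sum(n[i][a] for a in range(N))) ** 2 for i in range(N))
    return length_term + 0.5 * gamma * (stop_penalty + city_penalty)


def tour_to_matrix(tour):
    """Encode a tour (list of city indices in visiting order) as the matrix n."""
    N = len(tour)
    n = [[0] * N for _ in range(N)]
    for stop, city in enumerate(tour):
        n[city][stop] = 1
    return n


# Four cities on the corners of the unit square.
cities = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
d = [[math.dist(p, q) for q in cities] for p in cities]

perimeter_energy = tsp_energy(d, tour_to_matrix([0, 1, 2, 3]))
crossing_energy = tsp_energy(d, tour_to_matrix([0, 2, 1, 3]))
empty_energy = tsp_energy(d, [[0] * 4 for _ in range(4)])
```

For a valid tour both penalty terms vanish and the energy equals the tour length, so minimizing the energy over valid assignments is the same as finding the shortest tour.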

Let us consider the Four Color Theorem. A scenario similar to the Four Color Theorem is to consider two perpendicular axes X and Y and sets of points

line

Case-1. If

Case-2. If

Next let us take the set of following variables,

Furthermore, let us set the following energy function to be optimized,

Now the above system is equivalent to finding the optimum solution for coloring.
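As a rough illustration of how a coloring problem can be turned into an energy to be minimized, the following Python sketch uses a generic graph-coloring encoding. It is not the construction of this section, whose variables and cases are specific to the points on the axes X and Y. It assumes binary variables $v_{rc}$ equal to 1 when region r receives color c, and an energy that counts adjacent regions sharing a color plus a penalty for regions that do not carry exactly one color. The names `coloring_energy` and `colors_to_matrix` are hypothetical.

```python
def coloring_energy(edges, v):
    """Generic coloring energy for a 0/1 matrix v[region][color].

    The first term counts adjacent pairs sharing a color; the second
    penalizes regions that do not have exactly one color. The energy is
    zero exactly when v encodes a proper coloring.
    """
    n_colors = len(v[0])
    conflict = sum(v[r][c] * v[s][c] for r, s in edges for c in range(n_colors))
    one_color = sum((1 - sum(row)) ** 2 for row in v)
    return conflict + one_color


def colors_to_matrix(colors, n_colors=4):
    """Encode a list of color indices (one per region) as the matrix v."""
    return [[1 if c == chosen else 0 for c in range(n_colors)] for chosen in colors]


# A wheel: region 0 touches regions 1..4, which form a cycle.
edges = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 2), (2, 3), (3, 4), (4, 1)]

proper_energy = coloring_energy(edges, colors_to_matrix([0, 1, 2, 1, 2]))
improper_energy = coloring_energy(edges, colors_to_matrix([0, 1, 1, 1, 2]))
```

A proper coloring gives energy zero, while each pair of adjacent regions with the same color adds one unit, so the global minimum of the energy corresponds to a valid coloring whenever one exists.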

So as before assuming,

This implies,

Next let us define

On the other hand it is easy to show that

Next let us define,

Finally, if the following inequalities hold,

where the indices satisfy the conditions of Case-1 and Case-2, then as t tends to infinity we have,

At this point, using the above arguments, it is enough to find Q and P satisfying the above equalities and optimizing the following expression,

Therefore, the above equations together with the optimization expression form a linear programming problem whose optimal solution can be reached in very little time.

5. Conclusion

In this article we introduced methods of approximating the solution to optimization problems using neural network machinery. In particular, we proved that for a certain large category of optimization problems the application of neural network methods guarantees that the problems will be reduced to linear or quadratic programming. This is an important conclusion because the solution of the optimization problems in these categories can be reached immediately.

Cite this paper

Bahman Mashood, Greg Millbank (2016) Advances in Theory of Neural Network and Its Application. Journal of Behavioral and Brain Science, 06, 219-226. doi: 10.4236/jbbs.2016.65022

References

- 1. Hertz, J., Krogh, A. and Palmer, R. (1991) Introduction to the Theory of Neural Computation. Addison Wesley Company, Boston.

- 2. Haykin, S. (1999) Neural Networks: A Comprehensive Foundation. 2nd Edition, Prentice Hall, Inc., Upper Saddle River.

- 3. Gass, S. (1958) Linear Programming. McGraw Hill, New York.

- 4. Yoshiyasu, T. (1992) Neural and Parallel Processing. The Kluwer International Series in Engineering and Computer Science: SEeS0164.

- 5. Jones, V. and Sunder, V.S. (1997) Introduction to Subfactors. Cambridge University Press, Cambridge.