Journal of Signal and Information Processing Vol.06 No.01(2015),

Article ID:54216,10 pages

10.4236/jsip.2015.61003

A Time Dependent Model for Image Denoising

Santosh Kumar, Mohammad Kalimuddin Ahmad

Department of Mathematics Aligarh Muslim University, Aligarh, India

Email: skykumar87@gmail.com, ahmad_kalimuddin@yahoo.co.in

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 27 January 2015; accepted 16 February 2015; published 25 February 2015

ABSTRACT

In this paper, we propose a new time dependent model for solving total variation (TV) minimi- zation problem in image denoising. The main idea is to apply a priori smoothness on the solution image. This is a constrained optimization type of numerical algorithm for removing noise from images. The constraints are imposed using Lagrange’s multipliers and the solution is obtained using the gradient projection method. 1D and 2D numerical experimental results by explicit numerical schemes are discussed.

Keywords:

Total Variation, Image Denoising, Signal Denoising

1. Introduction

In many image processing problems, a denoising step is required to remove noise or spurious details from corrupted images. The presence of noise in images is unavoidable. It may be introduced at the stage of image formation like image recording, image transmission, etc. These random distortions make it difficult to perform any required image analysis. For example, the feature oriented enhancement introduced in [1] is very effective in restoring blurry images, but it can be “frozen” by an oscillatory noise component. Even a small amount of noise is harmful when high accuracy is required, especially in case of medical images.

In practice, to estimate a true signal in noise, the most frequently used methods are based on the least squares criteria. This procedure is L2-norm dependent. L2-norm based regularization is known to remove high frequency components in denoised images and make them appear smooth.

Most of the classical image deblurring or denoising techniques, due to linear and global approach, are contaminated by Gibb’s phenomenon resulting into smearing near edges. In order to preserve edges Rudin et al. [2] [3] introduced total variation (TV) norm models based on variational approach. TV norms are essentially L1 norms derivatives, hence L1 estimation procedures are more appropriate for the subject of image restoration. For more details we refer to [1] [4] -[7] .

In this paper we present a new time dependent model constructed by evolving the Euler-Lagrange equations of the optimization problem. We propose to apply priori smoothness on the solution image and then denoise it by minimizing the total variation norm of the estimated solution. We have tested our algorithm on various types of signals and images and found our model (11) better than previously known model (10). To quantify results, the experimental values in terms of PSNR are given in Tables 1-3.

2. Image Denoising Models

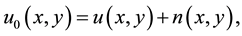

Formation of a noisy image is typically modeled as

(1)

(1)

where

denote the desired clean image,

denote the desired clean image,

denote the pixel

values of a noisy image for

denote the pixel

values of a noisy image for ,

,

is a bounded

open subset of

is a bounded

open subset of

and

and

is additive white noise assumed to be close to Gaussian. The values

is additive white noise assumed to be close to Gaussian. The values

of n at the pixels

of n at the pixels

are independent random variables, each with a Gaussian distribution of zero mean

and variance

are independent random variables, each with a Gaussian distribution of zero mean

and variance .

.

We wish to reconstruct u from . Most conventional variational methods involve a least squares

. Most conventional variational methods involve a least squares

fit because this leads to linear equations. The first attempt along these lines

was made by Phillips [8] and later refined by Twomey et al. [9] [10] in one-dimensional

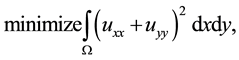

case. In two dimensional continuous framework their constrained minimization problem

is,

fit because this leads to linear equations. The first attempt along these lines

was made by Phillips [8] and later refined by Twomey et al. [9] [10] in one-dimensional

case. In two dimensional continuous framework their constrained minimization problem

is,

(2)

(2)

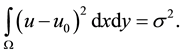

subject to constraints involving the mean

(3)

(3)

and standard deviation

(4)

(4)

The resulting linear system is now easy to solve using modern numerical techniques.

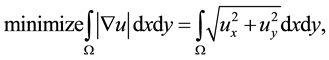

The total variation based image denoising model, which is based on the constrained minimization problem appeared in [2] , is as follows:

(5)

(5)

subject to constraints

and

The first constraint corresponds to the assumption that the noise has zero mean,

and the second constraint uses a priori information that the standard deviation

of the noise

The Euler-Lagrange equation is given by,

in

Since (8) is not well defined at points where

where

The solution procedure uses a parabolic equation with time as an evolution parameter, or equivalently, the gradient descent method. This means that we solve

for

Applying a priori smoothness on the solution image, our new time dependent model becomes,

for

Witkin [12] noticed that the convolution of the signal with Gaussians at each scale

was equivalent to solving the heat equation with the signal as initial datum. The

term

The first constraint (8) is dropped because it is automatically enforced by the

evolution procedure, i.e., the mean of

To compute

This gives us a dynamic value

We still write

The modified initial data are chosen so that the constraints are satisfied initially,

i.e.,

The explicit partial derivatives of model (10) and model (11) can be expressed as:

We define the derivative terms as,

We let,

and

Then (14) reads as follows:

with boundary conditions

The explicit method is stable and convergent for

3. Time Dependent Model for 1D

The 2D model described before is more regular than the corresponding 1D model because the 1D original optimization problem is barely convex. For the sake of understanding the numerical behavior of our schemes, we also discuss the 1D model. The Euler-Lagrange equation in the 1D case reads as follows:

This equation can be written either as

using the small regularizing parameter

using the

Our model in 1D will be

where

We can also state our model in terms of the

In this paper, we approximate

These evolution models are initialized with the noisy signal

We have estimated

CFL

The following is the explicit numerical scheme of model (22).

Let

We let,

Then (22) reads as follows:

4. Numerical Experiments for 1D

We, as an example, have taken 1D signals

In our test, we will use the signal to noise ratio (SNR) of the signal u to measure the level of noise, defined as

where

The standard deviation of noisy signals (given in Figure

1(c) and Figure 1(d)) are approximately

We use

Figure 1. (a) (b) Original signals; (c) (d) Corresponding noisy signals; (e) (f) Corresponding denoised signals by model (22).

We have performed many other experiments on 1D signals obtaining similar results.

5. Numerical Experiments for 2D

In our tests, we use peak signal to noise ratio (PSNR) as a criteria for the quality of restoration. This quality is usually expressed in terms of the logarithmic decibel scale:

where

When Gaussian white noise with mean zero and variance

We have used three gray scale images, Goldhill

The values of PSNR obtained using model (11) given in Tables 1-3 are larger than that of using model (10) at the same iteration number. Thus based on PSNR values and also on human perception, we conclude that the model (11) gives better denoised images than that of model (10).

6. Concluding Remarks

We have presented a new time dependent model (11) to solve the nonlinear total variation problem for image denoising. The main idea is to apply a priori smoothness on the solution image. Nonlinear explicit schemes are

Figure 2. Original test images used for different experiments. (a) Goldhill: 256 × 256; (b) Rice: 256 × 256; (c) Boat: 512 × 512.

Figure 3. (Top row) Noisy Goldhill images with different levels of Gaussian noise (a)-(c), s2 = 0.06, 0.08, 0.10, respectively; (Second row) (d)-(f) corresponding denoised images by model (10); (Third row) (g)-(i) by model (11).

Figure 4. (Top row) Noisy Rice images with different levels of Gaussian noise (a)-(c); s2 = 0.06, 0.08, 0.10, respectively; (Second row) (d)-(f) corresponding denoised images by model (10); (Third row) (g)-(i) by model (11).

Table 1. Results obtained by using models (10) and (11) applied to the images in Figure 3 with three different levels of Gaussian noise (s2 = 0.06, 0.08 and 0.10).

Figure 5. (Top row) Noisy Boat images with different levels of Gaussian noise (a)-(c); s2 = 0.06, 0.08, 0.10, respectively; (Second row) (d)-(f) corresponding denoised images by model (10); (Third row) (g)-(i) by model (11).

Table 2. Results obtained by using models (10) and (11) applied to the images in Figure 4 with three different levels of Gaussian noise (s2 = 0.06, 0.08 and 0.10).

Table 3. Results obtained by using models (10) and (11) applied to the images in Figure (5) with three different levels of Gaussian noise (s2 = 0.06, 0.08 and 0.10).

used to discretize models (10) and (11). The model (11) gives larger PSNR values than that of model (10), at the same iteration numbers. Besides, a new time dependent model (22) to solve the signal denoising in 1D has also been given.

References

- Osher, S. and Rudin, L.I. (1990) Feature Oriented Image Enhancement Using Shock Filters. SIAM Journal on Numerical Analysis, 27, 919-940. http://dx.doi.org/10.1137/0727053

- Rudin, L., Osher, S. and Fatemi, E. (1992) Nonlinear Total Variation Based Noise Removal Algorithm. Physica D, 60, 259-268. http://dx.doi.org/10.1016/0167-2789(92)90242-F

- Rudin, L. and Osher, S. (1994) Total Variation Based Image Restoration with Free Local Constraints. IEEE International Conference on Image Processing, 1, 31-35.

- Chambolle, A. and Lions, P.L. (1997) Image Recovery via Total Variation Minimization and Related Problems. Numerische Mathematik, 76, 167-188. http://dx.doi.org/10.1007/s002110050258

- Hunt, B.R. (1973) The Application of Constrained Least Squares Estimation to Image Restoration by Digital Computer. IEEE Transactions on Computers, 22, 805-812. http://dx.doi.org/10.1109/TC.1973.5009169

- Strang, G. (1964) Accurate Partial Difference Methods II. Non Linear Problems. Numerische Mathematik, 6, 37-46. http://dx.doi.org/10.1007/BF01386051

- Strang, G. (1968) On the Construction and Comparison of Difference Schemes. SIAM Journal on Numerical Analysis, 5, 506-517. http://dx.doi.org/10.1137/0705041

- Phillips, D.L. (1962) A Technique for the Numerical Solution of Certain Integral Equations of the First Kind. Journal of the Association for Computing Machinery (ACM), 9, 84-97. http://dx.doi.org/10.1145/321105.321114

- Twomey, S. (1963) On the Numerical Solution of Fredholm Integral Equations of the First Kind by the Inversion of the Linear System Produced by Quadrature. Journal of the Association for Computing Machinery (ACM), 10, 97-101. http://dx.doi.org/10.1145/321150.321157

- Twomey, S. (1965) The Application of Numerical Filtering to the Solution of Integral Equations Encountered in Indirect Sensing Measurements. Journal of The Franklin Institute, 279, 95-109. http://dx.doi.org/10.1016/0016-0032(65)90209-7

- Chang, Q. and Chern, I.-L. (2003) Acceleration Methods for Total Variation-Based Image Denoising. SIAM Journal on Scientific Computing, 25, 982-994. http://dx.doi.org/10.1137/S106482750241534X

- Witkin, A.P. (1983) Scale-Space Filtering. International Joint Conference on Artificial Intelligence, 2, 1019-1021.

- Marquina, A. (2006) Inverse Scale Space Methods for Blind Deconvolution. UCLA CAM Report, 06-36.

- Alvarez, L., Lions, P.L. and Morel, J.M. (1992) Image Selective Smoothing and Edge Detection by Nonlinear Diffusion II. SIAM Journal on Numerical Analysis, 29, 845-866. http://dx.doi.org/10.1137/0729052

- Catte, F., Lions, P.L., Morel, J.M. and Coll, T. (1992) Image Selective Smoothing and Edge Detection by Nonlinear Diffusion. SIAM Journal on Numerical Analysis, 29, 182-193. http://dx.doi.org/10.1137/0729012

- Rosen, J.G. (1961) The Gradient Projection Method for Nonlinear Programming. Part II. Nonlinear Constraints. Journal of the Society for Industrial and Applied Mathematics, 9, 514-532. http://dx.doi.org/10.1137/0109044

- Lapidus, L. and Pinder, G.F. (1983) Numerical Solution of Partial Differential Equations in Science and Engineering. SIAM Review, 25, 581-582. http://dx.doi.org/10.1137/1025136

- Marquina, A. and Osher, S. (2000) Explicit Algorithms for a New Time Dependent Model Based on Level Set Motion for Nonlinear Deblurring and Noise Removal. SIAM Journal on Scientific Computing, 22, 387-405. http://dx.doi.org/10.1137/S1064827599351751

- Chan, T.F., Golub, G.H. and Mulet, P. (1999) A Nonlinear Primal-Dual Method for Total Variation-Based Image Restoration. SIAM Journal on Scientific Computing, 20, 1964-1977. http://dx.doi.org/10.1137/S1064827596299767