International Journal of Modern Nonlinear Theory and Application

Vol.06 No.02(2017), Article ID:76860,4 pages

10.4236/ijmnta.2017.62006

The Computational Theory of Intelligence: Feedback

Daniel Kovach

Quantitative Research, Institute of Trading and Finance, Montreal, Canada

Copyright © 2017 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: April 26, 2016; Accepted: June 11, 2017; Published: June 14, 2017

ABSTRACT

In this paper we discuss the applications of feedback to intelligent agents. We show that it adds a momentum component to the learning algorithm. Using Lyapunov stability theory, we derive the condition necessary for the entropy minimization principle of computational intelligence to be preserved in the presence of feedback.

Keywords:

Neural Networks, Feedback, Intelligence, Computation, Artificial Intelligence, Lyapunov Stability

1. Introduction

In this paper, we continue the efforts of the Computational Theory of Intelligence (CTI) first introduced in [1]. Here, we investigate the influence of feedback on intelligence processes. Our intent is to show that feedback provides a momentum component to the learning process.

That feedback is effective in various situations, especially those involving time-series data, is not novel. Notable studies include [2] [3] [4], among others. Our intent is to provide a generalized theoretical approach to the addition of feedback to the intelligence process as understood through the framework of CTI.

We begin with necessary terminology and notation. Recall from [1] that given the two sets  and

and , the intelligence mapping

, the intelligence mapping , at a particular time,

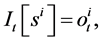

, at a particular time,  is represented by

is represented by

(1)

(1)

where  and

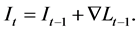

and  This mapping may be updated via the gradient of a learning function

This mapping may be updated via the gradient of a learning function

(2)

(2)

For the remainder of this paper, we will investigate the effects of introducing feedback into the formulation discussed above.

2. Feedback

For the purposes of this paper and its application to the Computational Theory of Intelligence, we define feedback as a process by which the result of a previous iteration of the intelligence process is combined with the input into a subsequent application. Typically, the epochs differ by one iteration, and we will proceed with this in mind.

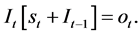

Let us adjust Equation (1) slightly under the above assumptions so that it includes the output of the previous epoch:

(3)

Note that, because the addition operation is applied between respective elements of the two sets, we are obliged to ensure that this operation is meaningfully defined between their elements. Also, for notational brevity, we have removed the superscripts. We will proceed in this manner when the context is clear.
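The feedback step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the formulation of Equation (3) itself: the function name `run_with_feedback`, the scalar inputs, the zero initial output, and the toy mapping `halve` are all hypothetical choices made so that the addition between inputs and outputs is well defined.

```python
from typing import Callable, List


def run_with_feedback(mapping: Callable[[float], float],
                      inputs: List[float],
                      initial_output: float = 0.0) -> List[float]:
    """Apply `mapping` to each input after adding the previous output to it."""
    outputs = []
    previous = initial_output  # assumption: no feedback exists before the first epoch
    for s in inputs:
        # The previous epoch's output is combined with the new input by addition.
        current = mapping(s + previous)
        outputs.append(current)
        previous = current
    return outputs


def halve(x: float) -> float:
    """Hypothetical mapping used only for illustration."""
    return 0.5 * x


outputs = run_with_feedback(halve, [1.0, 1.0, 1.0])
print(outputs)
```

Each output depends on the whole input history through the chain of previous outputs, which is what the recursive enumeration of epochs makes explicit.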

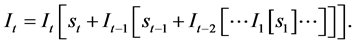

Explicit enumeration of each step in the recursive process gives us the following:

(4)


At this point we cannot move forward until we speculate as to the nature of the inputs. At the beginning of this section, our intent was simply to incorporate knowledge from previous iterations into the intelligence process. It therefore stands to reason that each input is related to the next in some way, as if by some function. In particular, if

This insight will prove extremely valuable in determining in what types of applications feedback will be most efficacious.

Implementing Equation (5), Equation (4) becomes

2.1. Momentum

If we carry the ideas of this section further, we can apply our discussion of feedback to derive momentum terms for the learning function. Expanding the definition of the learning function to take into account the developments from Equation (6), we have

Applying the gradient operator as per Equation (2), we have

We may expand the above expression through diligent application of the chain rule:

Distributing terms and rearranging,

The above may be thought of as a summation of momentum terms, from the most recent iteration all the way back to the initial epoch.
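For comparison, classical gradient-descent momentum (the standard technique, not the derivation in this paper) has the same structure: its recursive update unrolls into a sum over all past gradients, back to the initial epoch. The sketch below checks that equivalence numerically; the function names, the constant coefficient `mu`, and the sample gradients are assumptions chosen for illustration.

```python
from typing import List


def momentum_recursive(gradients: List[float], mu: float) -> float:
    """Classical momentum accumulator: v_t = mu * v_{t-1} + g_t, with v_0 = 0."""
    v = 0.0
    for g in gradients:
        v = mu * v + g
    return v


def momentum_unrolled(gradients: List[float], mu: float) -> float:
    """The same quantity written as an explicit sum over every past gradient."""
    t = len(gradients)
    # The gradient from k steps back is weighted by mu ** k.
    return sum(mu ** (t - 1 - i) * g for i, g in enumerate(gradients))


grads = [1.0, 2.0, 4.0]  # hypothetical gradient history, oldest first
mu = 0.5                 # hypothetical momentum coefficient

print(momentum_recursive(grads, mu), momentum_unrolled(grads, mu))
```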

Equation (10) may be written more compactly as

The momentum terms in Equations (10) and (11) are worth mentioning. As time increases, the number of factors in the product within each momentum term increases. Because each factor is assumed to be less than or equal to unity, this automatically ensures that more recent momentum terms dominate those more distant in time. Weighting more recent iterations more heavily is thus a natural consequence of our formulation.
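The decay argument can be checked with a small numerical sketch. It assumes, as the text does, that every factor is at most unity, and additionally that the factors are non-negative; the function name `momentum_weights` and the sample factors are hypothetical.

```python
from typing import List


def momentum_weights(factors: List[float]) -> List[float]:
    """Weight of the term k steps back: the product of the k most recent factors."""
    weights = [1.0]  # the current term carries no factors
    for f in factors:  # factors ordered from most recent to oldest
        weights.append(weights[-1] * f)
    return weights


factors = [0.5, 1.0, 0.5, 0.25]  # hypothetical factors, each in [0, 1]
weights = momentum_weights(factors)
print(weights)
```

Because every additional factor can only shrink or preserve the product, the weights never increase with distance into the past. A factor exactly equal to unity produces a tie rather than strict dominance.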

2.2. Computational Self-Awareness

Finally, it might be insightful to quantify the ratio of the two sources, new information and that which came from feedback, at some particular epoch

For reasons we will discuss later, we will refer to the quantity

3. Stability

One of the core tenets of CTI [1] is that

In a manner similar to our derivation in Section 2.1, we can show that this works out to be

by our definition of

4. Conclusions

In this paper, we provided a generalized theoretical framework concerning the effect of feedback in the intelligence process. From our derivation, we were able to conclude that feedback adds a “momentum” component to the learning process, which is of particular interest for time-series data.

We also discussed computational self-awareness, purely in the context of the ratio of feedback to input. The concept of self-awareness is highly contentious and philosophical, and we wish to keep this paper technical. For our purposes, it is simply the extent to which the agent applies feedback in the intelligence process relative to input data.

Cite this paper

Kovach, D. (2017) The Computational Theory of Intelligence: Feedback. International Journal of Modern Nonlinear Theory and Application, 6, 70-73. http://dx.doi.org/10.4236/ijmnta.2017.62006

References

- 1. Kovach, D. (2014) The Computational Theory of Intelligence: Information Entropy. International Journal of Modern Nonlinear Theory and Application, 3, 182-190. https://doi.org/10.4236/ijmnta.2014.34020

- 2. Kaastra, I. and Boyd, M. (1996) Designing a Neural Network for Forecasting Financial and Economic Time Series. Neurocomputing, 10, 215-236. https://doi.org/10.1016/0925-2312(95)00039-9

- 3. Lee, K.Y., Sode-Yome, A. and Park, J.H. (1998) Adaptive Hopfield Neural Networks for Economic Load Dispatch. IEEE Transactions on Power Systems, 13, 519-526.

- 4. Istook, E. and Martinez, T. (2002) Improved Backpropagation Learning in Neural Networks with Windowed Momentum. International Journal of Neural Systems, 12, 303-318. https://doi.org/10.1142/S0129065702001114

- 5. Bird, R.J. (2003) Chaos and Life: Complexity and Order in Evolution and Thought. Columbia University Press, New York. https://doi.org/10.7312/bird12662

- 6. Latora, V., Baranger, M., Rapisarda, A. and Tsallis, C. (2000) The Rate of Entropy Increase at the Edge of Chaos. Physics Letters A, 273, 97-103. https://doi.org/10.1016/S0375-9601(00)00484-9

- 7. Cencini, M., Cecconi, F. and Vulpiani, A. (2010) Chaos: From Simple Models to Complex Systems. World Scientific, Hackensack, NJ.