Advances in Linear Algebra & Matrix Theory

Vol.04 No.03(2014), Article ID:50062,13 pages

10.4236/alamt.2014.43015

Low-Rank Positive Approximants of Symmetric Matrices

Achiya Dax

Hydrological Service, Jerusalem, Israel

Email: dax20@water.gov.il

Copyright © 2014 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 25 July 2014; revised 25 August 2014; accepted 23 September 2014

ABSTRACT

Given a symmetric matrix X, we consider the problem of finding a low-rank positive approximant of X. That is, a symmetric positive semidefinite matrix, S, whose rank is smaller than a given positive integer, l, which is nearest to X in a certain matrix norm. The problem is first solved with regard to four common norms: The Frobenius norm, the Schatten p-norm, the trace norm, and the spectral norm. Then the solution is extended to any unitarily invariant matrix norm. The proof is based on a subtle combination of Ky Fan dominance theorem, a modified pinching principle, and Mirsky minimum-norm theorem.

Keywords:

Low-Rank Positive Approximants, Unitarily Invariant Matrix Norms

1. Introduction

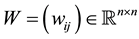

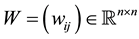

Let  be a given real symmetric

be a given real symmetric  matrix. In this paper we consider the problem of finding a low-rank symmetric positive semidefinite matrix which is nearest to

matrix. In this paper we consider the problem of finding a low-rank symmetric positive semidefinite matrix which is nearest to  with regard to a certain matrix norm. Let

with regard to a certain matrix norm. Let  be a given unitarily invariant matrix norm on

be a given unitarily invariant matrix norm on . (The basic features of such norms are explained in the next section.) Let

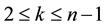

. (The basic features of such norms are explained in the next section.) Let  be a given positive integer such that

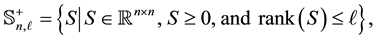

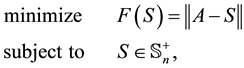

be a given positive integer such that , and define

, and define

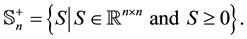

where the notation  means that

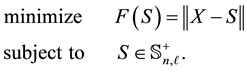

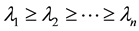

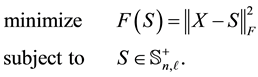

means that  is symmetric and positive semidefinite. Then the problem to solve has the form

is symmetric and positive semidefinite. Then the problem to solve has the form

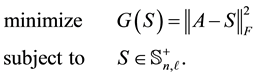

(1.1)

(1.1)

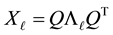

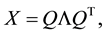

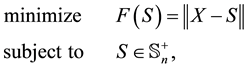

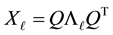

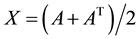

The need for solving such problems arises in certain matrix completion methods that consider Euclidean distance matrices, see [1] or [2] . Since  is assumed to be a symmetric matrix, it has a spectral decom- position

is assumed to be a symmetric matrix, it has a spectral decom- position

(1.2)

(1.2)

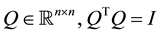

where , is an orthonormal matrix

, is an orthonormal matrix

is a diagonal matrix, and

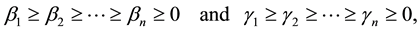

are the eigenvalues of

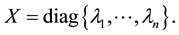

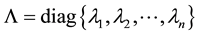

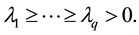

The rest of the paper assumes, therefore, that

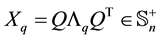

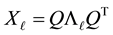

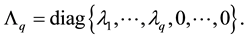

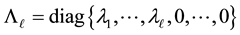

where

Let

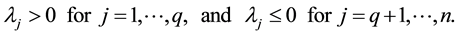

Let the diagonal matrix

(If

solves (1.3) in any unitarily invariant norm.

If

belongs to

solves (1.1) for any unitarily invariant norm.

Let

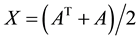

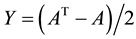

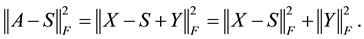

The relation between (1.7) and (1.3) is seen when using the Frobenius matrix norm. Let

Recall also that any matrix

Therefore, when using the Frobenius norm, a solution of (1.3) provides a solution of (1.7). This observation is due to Higham [3] . A matrix that solves (1.7) or (1.3) is called “positive approximant”. Similarly, the term “low-rank positive approximant” refers to a matrix that solves (1.1).

The current interest in positive approximants was initiated in Halmos’ paper [4] , which considers the solution of (1.7) in the spectral norm. Rogers and Ward [5] considered the solution of (1.7) in the Schatten-p norm, Ando [6] considered this problem in the trace norm, and Higham [3] considered the Frobenius norm. Halmos [4] has considered the positive approximant problem in a more general context of linear operators on a Hilbert space. Other positive approximants problems (in the operators context) are considered in [7] - [11] . The problems (1.1), (1.3) and (1.7) fall into the category of “matrix nearness problems”. Further examples of matrix (or operator) nearness problems are discussed in [12] - [18] . A review of this topic is given in Higham [19] .

The plan of the paper is as follows. In the next section we introduce notations and tools which are needed for the coming discussions. In Section 3 we show that

2. Notations and Tools

In this section we introduce notations and facts which are needed for coming discussions. Here

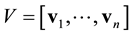

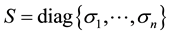

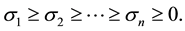

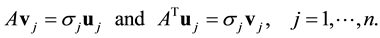

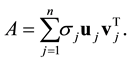

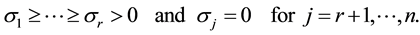

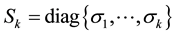

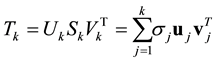

be an SVD of

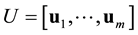

The columns of

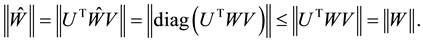

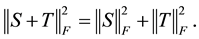

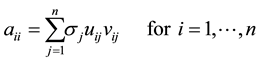

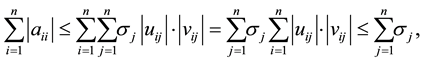

A further consequence of (2.1) is the equality

Moreover, let

So (2.4) can be rewritten as

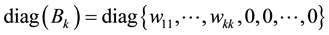

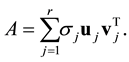

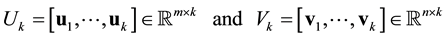

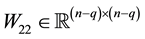

Let the matrices

be constructed from the first

is called a rank-k truncated SVD of

Let

and

where the last inequality follows from the Cauchy-Schwarz inequality and the fact that the columns of

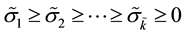

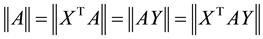

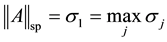

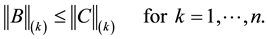

Another useful property regards the concepts of majorization and unitarily invariant norms. Recall that a matrix norm

are satisfied for any matrix

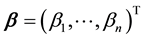

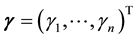

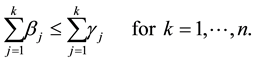

respectively. Let

In this case we say that

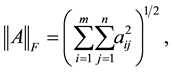

holds for any unitarily invariant norm. For detailed proof of this fact see, for example, [8] , [20] - [23] . The most popular example of an unitarily invariant norm is, perhaps, the Frobenius matrix norm

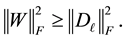

which satisfies

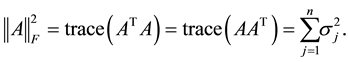

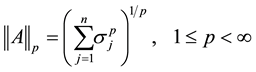

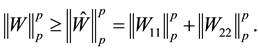

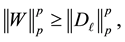

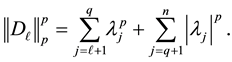

Other examples are the Schatten p-norms,

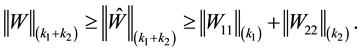

and Ky Fan k-norms,

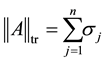

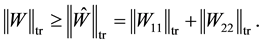

The trace norm,

is obtained for

corresponds to

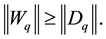

Theorem 1 (Ky Fan dominance theorem) The Inequality (2.13) holds for any unitarily invariant norm if and only if

Another useful tool is the following “rectangular” version of Cauchy interlacing theorem. For a proof of this result see ( [24] , p. 229) or ( [25] , p. 1250).

Theorem 2 (A rectangular Cauchy interlace theorem) Let the

denote the singular values of

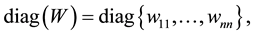

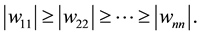

To ease the coming discussions we return to square matrices. In the next assertions

Theorem 3 Let the

holds for any unitarily invariant norm.

Theorem 4 Let the

be obtained from the diagonal entries of

in any unitarily invariant norm.

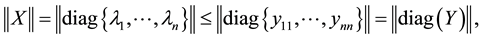

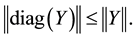

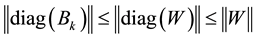

Proof. There is no loss of generality in assuming that the diagonal entries of

Let the matrix

which proves (2.23).

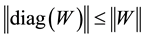

Corollary 5 The diagonal matrix

satisfies

in any unitarily invariant norm.

Lemma 6 Let

Then

in any unitarily invariant norm.

Proof. Using the spectral decomposition of

The matrix

and

while (2.23) gives

Theorem 7 (The pinching principle) Let the matrix

where

denote the “pinched” version of

holds in any unitarily invariant norm.

Proof. Using the SVD of

the singular values of

are orthonormal matrices, and

is a diagonal matrix. Moreover, comparing

Hence a further use of (2.23) gives

Equality (2.28) relates the singular values of

Lemma 8 (Pinching in Schatten p-norms)

Lemma 9 (Pinching in the trace norm)

Lemma 10 (Pinching in the spectral norm)

Lemma 11 (Pinching in Ky Fan k-norms) Let

Then

Proof. The sum

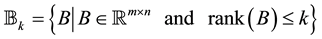

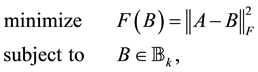

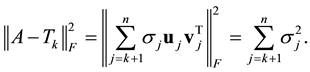

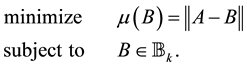

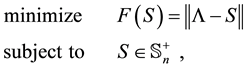

The next tools consider the problem of approximating one matrix by another matrix of lower rank. Let

denote the related set of low-rank matrices. Then here we seek a matrix

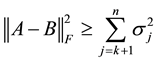

Theorem 12 (Eckart-Young) The inequality

holds for any matrix

giving the optimal value of

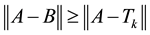

Theorem 13 (Mirsky) Let

holds for any matrix

3. Positive Approximants of Symmetric Matrices

In this section we consider the solution of problem (1.3). Since

whose solution provides a solution of (1.3).

Theorem 14 Let the matrix

Proof. Let the diagonal matrix

That is

Let

Then the proof is concluded by showing that

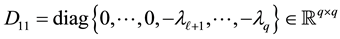

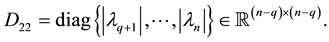

Let the diagonal matrix

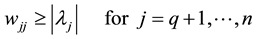

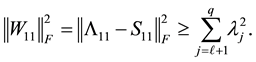

be obtained from the last

On the other hand, since

and

Now combining (3.6) and (3.8) gives (3.4)

Theorem 14 is not new, e.g. ( [8] , p. 277) and [9] . However, the current proof is simple and short. In the next sections we extend these arguments to derive low-rank approximants.

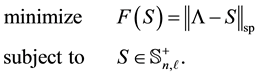

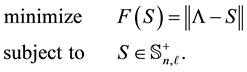

4. Low-Rank Positive Approximants in the Frobenius Norm

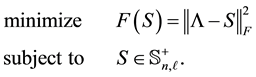

In this section we consider the solution of problem (1.1) in the Frobenius norm. As before, the spectral decom- position (1.2) can be used to “diagonalize” the problem and the actual problem to solve has the form

Theorem 15 Let the matrix

Proof. Let the diagonal matrix

That is,

and

Let

Then the proof is concluded by showing that

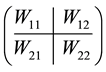

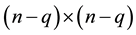

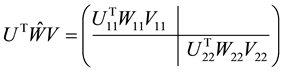

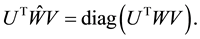

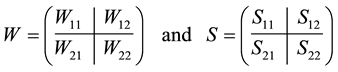

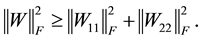

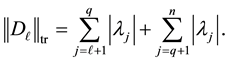

This aim is achieved by considering a partition of

where

Also, as before, since

and

It is left, therefore, to show that

Observe that the matrices

where

and

Moreover, since

Hence from the Eckart-Young theorem we obtain that

Corollary 16 Let

solves the problem

Corollary 17 Let

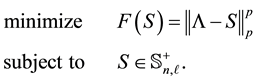

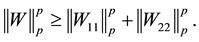

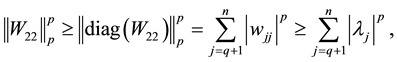

5. Low-Rank Positive Approximants in the Schatten p-Norm

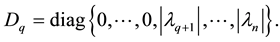

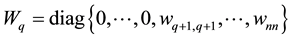

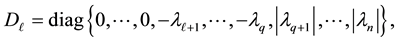

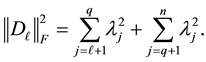

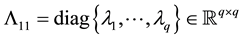

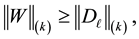

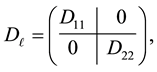

Let the diagonal matrix

Theorem 18 Let the matrix

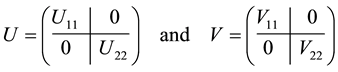

Proof. Let the matrices

where

Let

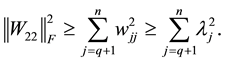

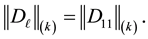

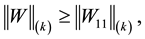

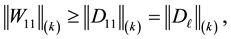

Now Theorem 4 and (4.8) imply

while applying Mirsky theorem on (4.11)-(4.14) gives

Finally substituting (5.5) and (5.6) into (5.4) gives (5.2).

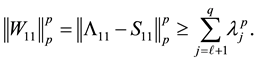

6. Low-Rank Positive Approximants in the Trace Norm

Using the former notations, here we consider the problem

Theorem 19 The matrix

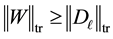

Proof. It is needed to show that

where

The use of Lemma 9 yields

Here Theorem 4 and (4.8) imply the inequalities

and Mirsky theorem gives

which completes the proof.

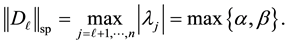

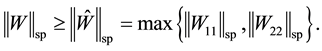

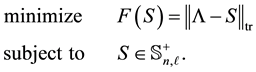

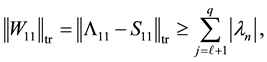

7. Low-Rank Positive Approximants in the Spectral Norm

In this case we consider the problem

Theorem 20 The matrix

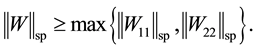

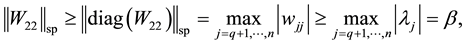

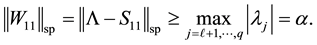

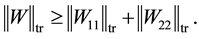

Proof. Following the former notations and arguments, here it is needed to show that

Define

Then, clearly,

Using Lemma 10 we see that

Now Theorem 4 and (4.8) imply

while Mirsky theorem gives

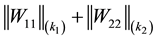

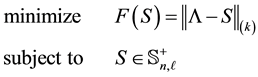

8. Unitarily Invariant Norms

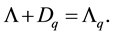

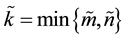

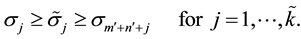

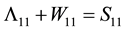

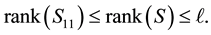

Let the diagonal matrices

The derivation of this result is based on the following assertion, which considers Ky Fan

Theorem 21 The matrix

for

Proof. We have already proved that

Let

where

and

Then there are three different cases to consider.

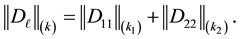

The first case occurs when

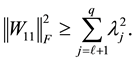

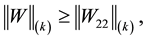

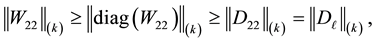

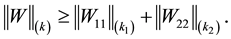

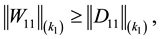

Here Theorem 3 implies the inequalities

while from (4.11)-(4.14) and Mirsky theorem we obtain

which proves (8.3).

The second case occurs when

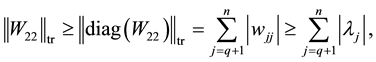

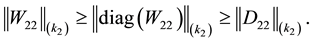

Here Theorem 3 implies

while Theorem 4 and the inequalities (4.8) give

which proves (8.3).

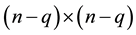

The third case occurs when neither (8.7) nor (8.10) hold. In this case there exist two positive integers,

and

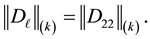

Now Lemma 11 shows that

A further use of (4.11)-(4.14) and Mirsky theorem give

and from Theorem 4 and (4.8) we obtain

Hence by substituting (8.16) and (8.17) into (8.15) we get (8.3).

The fact that (8.3) holds for

holds for any unitarily invariant norm. This observation is a direct consequence of Ky Fan dominance theorem. The last inequality proves our final results.

Theorem 22 The matrix

Theorem 23 Using the notations of Section 1, the matrix

solves (1.1) in any unitarily invariant norm.

9. Concluding Remarks

In view of Theorem 14 and Mirsky theorem, the observation that

Once Theorem 22 is proved, it is possible to use this result to derive Theorems 15-18. Yet the direct proofs that we give clearly illustrate why these theorems work. In fact, the proof of Theorem 15 paves the way for the other proofs. Moreover, as Corollary 17 shows, when using the Frobenius norm we get stronger results: In this case we are able to compute a low-rank positive approximant of any matrix

References

- Dax, A. (2014) Imputing Missing Entries of a Data Matrix: A Review. Techical Report, Hydrological Service of Israel.

- Trosset, M.W. (2000) Distance Matrix Completion by Numerical Optimization. Computational Optimization and Applications, 17, 11-22. http://dx.doi.org/10.1023/A:1008722907820

- Higham, N.J. (1988) Computing a Nearest Symmetric Positive Semidefinite Matrix. Linear Algebra and Its Applications, 103, 103-118. http://dx.doi.org/10.1016/0024-3795(88)90223-6

- Halmos, P.R. (1972) Positive Approximants of Operators. Indiana University Mathematics Journal, 21, 951-960. http://dx.doi.org/10.1512/iumj.1972.21.21076

- Rogers, D.D. and Ward, J.D. (1981) Cp-Minimal Positive Approximants. Acta Scientiarum Mathematicarum, 43, 109-115.

- Ando, T. (1985) Approximation in Trace Norm by Positive Semidefinite Matrices. Linear Algebra and Its Applications, 71, 15-21. http://dx.doi.org/10.1016/0024-3795(85)90230-7

- Ando, T., Sekiguchi, T. and Suzuki, T. (1973) Approximation by Positive Operators. Mathematische Zeitschrift, 131, 273-282. http://dx.doi.org/10.1007/BF01174903

- Bhatia, R. (1997) Matrix Analysis. Springer, New York. http://dx.doi.org/10.1007/978-1-4612-0653-8

- Bhatia, R. and Kittaneh, F. (1992) Approximation by Positive Operators. Linear Algebra and Its Applications, 161, 1-9. http://dx.doi.org/10.1016/0024-3795(92)90001-Q

- Bouldin, R. (1973) Positive Approximants. Transactions of the American Mathematical Society, 177, 391-403. http://dx.doi.org/10.1090/S0002-9947-1973-0317082-6

- Sekiguchi, T. (1976) Positive Approximants of Normal Operators. Hokkaido Mathematical Journal, 5, 270-279. http://dx.doi.org/10.14492/hokmj/1381758677

- Bouldin, R. (1980) Best Approximation of a Normal Operator in the Schatten p-Norm. Proceedings of the American Mathematical Society, 80, 277-282.

- Bouldin, R. (1987) Best Approximation of a Normal Operator in the Trace Norm. Acta Scientiarum Mathematicarum, 51, 467-474.

- Bouldin, R., (1988) Best Approximation of a Nonnormal Operator in the Trace Norm. Journal of Mathematical Analysis and Applications, 132, 102-113. http://dx.doi.org/10.1016/0022-247X(88)90046-7

- Laszkiewicz, B. and Zietak, K. (2008) Approximation by Matrices with Restricted Spectra. Linear Algebra and Its Applications, 428, 1031-1040. http://dx.doi.org/10.1016/j.laa.2007.09.009

- Phillips, J. (1977) Nearest Normal Approximation for Certain Normal Operators. Proceedings of the American Mathematical Society, 67, 236-240. http://dx.doi.org/10.1090/S0002-9939-1977-0458212-4

- Ruhe, A. (1987) Closest Normal Matrix Finally Found! BIT Numerical Mathematics, 27, 585-598. http://dx.doi.org/10.1007/BF01937278

- Zietak, K. (1997) Strict Spectral Approximation of a Matrix and Some Related Problems. Applicationes Mathematicae, 24, 267-280.

- Higham, N.J. (1989) Matrix Nearness Problems and Applications. In: Gover, M.J.C. and Barnett, S., Eds., Applications of Matrix Theory, Oxford University Press, Oxford, 1-27.

- Fan, K. (1951) Maximum Properties and Inequalities for the Eigenvalues of Completely Continuous Operators. Proceedings of the National Academy of Sciences of the United States of America, 37, 760-766. http://dx.doi.org/10.1073/pnas.37.11.760

- Fan, K. and Hoffman, A.J. (1955) Some Metric Inequalities in the Space of Matrices. Proceedings of the American Mathematical Society, 6, 111-116. http://dx.doi.org/10.1090/S0002-9939-1955-0067841-7

- Horn, R.A. and Johnson, C.R. (1991) Topics in Matrix Analysis. Cambridge University Press, Cambridge. http://dx.doi.org/10.1017/CBO9780511840371

- Marshall, A.W., Olkin, I. and Arnold, B.C. (2011) Inequalities: Theory of Majorization and Its Applications. Springer Series in Statistics, 2nd Edition, Springer, New York.

- Zhang, F. (1999) Matrix Theory: Basic Results and Techniques. Springer-Verlag, New York. http://dx.doi.org/10.1007/978-1-4757-5797-2

- Dax, A. (2010) On Extremum Properties of Orthogonal Quotient Matrices. Linear Algebra and Its Applications, 432, 1234-1257. http://dx.doi.org/10.1016/j.laa.2009.10.034

- Eckart, C. and Young, G. (1936) The Approximation of One Matrix by Another of Lower Rank. Psychometrika, 1, 211-218. http://dx.doi.org/10.1007/BF02288367

- Mirsky, L. (1960) Symmetric Gauge Functions and Unitarily Invariant Norms. Quarterly Journal of Mathematics, 11, 50-59. http://dx.doi.org/10.1093/qmath/11.1.50