International Journal of Intelligence Science

Vol.05 No.01(2015), Article ID:52460,23 pages

10.4236/ijis.2015.51003

Hyperscale Puts the Sapiens into Homo

Ron Cottam1, Willy Ranson2, Roger Vounckx1

1The Living Systems Project, Department of Electronics and Informatics, Vrije Universiteit Brussel (VUB), Brussels, Belgium

2IMEC vzw, Leuven, Belgium

Email: life@etro.vub.ac.be

Academic Editor: Zhongzhi Shi, Institute of Computing Technology, CAS, China

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 16 October 2014; revised 21 November 2014; accepted 6 December 2014

ABSTRACT

The human mind’s evolution owes much to its companion phenomena of intelligence, sapience, wisdom, awareness and consciousness. In this paper we take the concepts of intelligence and sapience as the starting point of a route towards elucidation of the conscious mind. There is much disagreement and confusion associated with the word intelligence. A lot of this results from its use in diverse contexts, where it is called upon to represent different ideas and to justify different arguments. Addition of the word sapience to the mix merely complicates matters, unless we can relate both of these words to different concepts in a way which acceptably crosses contextual boundaries. We have established a connection between information processing and processor “architecture” which provides just such a linguistic separation, and which is applicable in either a computational or conceptual form to any context. This paper reports the argumentation leading up to a distinction between intelligence and sapience, and relates this distinction to human “cognitive” activities. Information is always contextual. Information processing in a system always takes place between “architectural” scales: intelligence is the “tool” which permits an “overview” of the relevance of individual items of information. System unity presumes a degree of coherence across all the scales of a system: sapience is the “tool” which permits an evaluation of the relevance of both individual items and individual scales of information to a common purpose. This hyperscalar coherence is created through mutual inter-scalar observation, whose recursive nature generates the independence of high-level consciousness, making humans human. We conclude that intelligence and sapience are distinct and necessary properties of all information processing systems, and that the degree of their availability controls a system’s or a human’s cognitive capacity, if not its application. 
This establishes intelligence and sapience as prime ancestors of the conscious mind. However, to our knowledge, there is no current mathematical approach which can satisfactorily deal with the native irrationalities of information integration across multiple scales, and therefore of formally modeling the mind.

Keywords:

Hyperscale, Hierarchy, Intelligence, Sapience, Consciousness

1. Introduction

There is much disagreement and confusion associated with the word intelligence. A lot of this results from its use in diverse contexts, where it is called upon to represent different ideas and to justify different arguments. James Albus [1] has defined intelligence as

“… the ability to act appropriately in an uncertain environment; appropriate action is that which maximizes the probability of success; success is the achievement or maintenance of behavioral goals; behavioral goals are desired states of the environment that a behavior is designed to achieve or maintain”.

Although many will find this definition insufficient, we will provisionally accept it, in that it is the most concise and complete description we are aware of which is amenable to use within a computational paradigm. We can extract from it the simplified message that intelligence promotes survival-related information-reductive decision-making in complex contexts (see also [2] ). So far so good, but if intelligence is a tool for survival, what is sapience: merely more of the same thing? If such were the case, it would be difficult to justify the addition of yet another technical term to the cognitive domain, which is already saturated with contextually-ambiguous terminology. Addition of the word “sapience” to the linguistic mix merely complicates matters, unless we can relate both of these words to different concepts in a way which acceptably crosses contextual boundaries.

The authors have earlier published [3] the identification of mind with the continuously evolving anticipatory capability of a complex networked information-processing system. In the work leading up to the present paper we have established a connection between information processing and processor “architecture”, which provides linguistic separation between intelligence and sapience, and which is applicable in either a computational or a conceptual form to any context. The paper reports the argumentation leading up to this distinction between intelligence and sapience, relates the distinction to human “cognitive” activities, and establishes their relevance to the evolutionary appearance of the human conscious mind. The story is more than a little recursive, as will later become clear. Maybe, therefore, the best approach will be to adopt the communication principles laid down by Jean-Luc Doumont1 and begin by stating our final conclusion.

We live within hyperscale: intelligence is how we get there; sapience is how we remain there.

On its own, this statement will convey little, but our task now in this introduction is clear: it is to explain what this short conclusion means before delving into the technical arguments which lead up to it. There are two main areas of human concern which we must first address: those of scale and presence.

1.1. Scale

Arguably, human technical evolution has progressed from initially “dealing with things at our own scale”, whether of size, hardness, time… towards progressively both larger and smaller scales―considerations of size, for example, have expanded outwards from the meter to Ångstroms, to light years, and even beyond. We now unthinkingly relate to numerous different scales of parameters or phenomena, but the critical distinction is not simply one of size, but how we access different scalar magnitudes. Our own eyes, for example, enable us to access reasonably and directly sizes down to about one micrometer, but not smaller. All observation or measurement devices suffer from similar limitations, which often relate the “size” of a device’s operational parameters (e.g. wavelength) to the “size” of the entity or effect which is to be observed. Waves on the sea, for example, only penetrate a harbor’s entrance in a diffracted manner for a restricted range of distance (or time) between their crests―their wavelength.

Unfortunately, therefore, we cannot access all scales directly, but are forced to rely on technically developed instruments to assist us, for example on electron microscopes, or on electronic signal integrators. Our belief in the “correctness” of the information these devices provide depends on our belief in the “correctness” of the modeling chains outwards from our own scale which we use to build them, to understand their operation and to derive meaningful information from them. These modeling chains similarly invoke sequences of scale―a particular scientific model may only be accurate within a certain parametric range, for example. It is worth pointing out here that there is no easily available single model which “works” well right across the meter, Ångstrom, and galactic range of scales2. Consequently, whether we are basing our considerations on “real” parametric sizes or “abstract” (model) parametric sizes, the same restriction holds―we can’t escape the implications of perceptional scale in any simplistic mechanical manner.

1.2. Presence

A current “hot topic” of research is that of presence: how should we formulate technical multimedia systems to enhance the impression that we are “somewhere else”? Interestingly, this question has already exercised the directors of theatre and film for many decades in terms of the “suspension of disbelief”3 they require of their audiences; we will return to the meaning of “suspension of disbelief” later. Surprisingly, technical presence research, with its emphasis on “being there”, appears to avoid a central aspect of philosophical presence research which could greatly inform it―namely “being here”! Psychology has long been interested in yet another aspect of presence, which is germane to the argument we will present―namely the capability of a human to effectively place him or herself at the location of a tool’s operation. Metzinger [5] has presented the hypothesis that we are unable to distinguish between the objects of our attention and the internal representations of them which we “observe”:

“That is why we ‘look through’ those representational structures, as if we were in direct and immediate contact with their content, with what they represent for us.” [5]

When we use a screwdriver, we are at the screw; when we drive a car, we become the car4. This transfer of presence is singular in character―we can only be present in one location at a time. In this, it is closely related to the concept of consciousness, which abides by a similar constraint.

The most astounding characteristic of this transfer of presence is the way in which we can effortlessly skip between different scales of an overall picture. Nowhere is this more evident than when riding a motorcycle at speed5, where safety demands a mental “backing off” from direct visual contact with the surroundings to a holistic “place” where any scalar aspect of the scene’s totality can be equivalently and speedily accessed whenever needed. It is tempting to associate this “place” with “the zone” referred to by professional athletes as the “state” within which they perform their best, and to identify the different “locations” to which we transfer our presence with the multiple differentiated conscious states of Tononi and Edelman’s [6] Dynamic Core Hypothesis.

1.3. Hyperscale and the Present Argument

A central part of our argumentation in this paper will involve the combination of these two ideas of scale and presence, where there is an immediately noticeable dissimilarity of pluralism. Many of our day-to-day actions, if not all, are simultaneously associated with multiple scales of representation of a single context. How on earth do we manage to relate to a multiplicity of mutually-exclusive scales of our environment through the singular focus of consciousness? Presumably there must be some kind of interface between the two which implements coding/decoding―either of which may be integral or dis-integral in character. In any unified system the unification of multiple scales by their interaction is itself a recognizable system property: this is hyperscale.

The quality and realization of system unification is by far the most complex and difficult aspect of our environment to understand; so much so that its implications are virtually absent from conventional science, which until the “birth” of chaos in the nineteen-sixties habitually restricted itself to situations where information content changed little across scales, as in the case of inorganic crystals, for example [7] . Consistent with a view of nature which accepts that evolution cannibalizes old capabilities for new purposes6 [9] , the cognitive resolution of this problem has been to adopt the architecture of the stimulus in formulating a response: we relate to hyperscalar systems from within our own assiduously-constructed hyperscalar mental environment!

In this paper we will describe not only how hyperscale can fulfill the function of integral/dis-integral coding/decoding between the multiple scales and the singular forms of mental consciousness of an organism, but also how it is a natural and necessary part of any unified multiscalar system. We believe that the “spotlight of consciousness”7 in humans is momentarily focused at a single “location” within a spatio-temporal hyperscalar “phase space” which we construct from the entire history of our individual and social existences, including the “facts” of our believed “reality”, numerous apparently consistent but insufficiently investigated “logical” suppositions, and as yet untested or normally-abandoned hypothetical models which serve to fill in otherwise inconvenient or glaringly obvious omissions in its landscape8. Again, in reference to evolution’s cannibalism, this resembles the way our visual system “fills in” missing or occluded parts of an object or a visual scene with the most likely shapes, colors or objects.

Our major task here is to make sense of the relationship between these three technical terms: intelligence, sapience and hyperscale. Information is always contextual. The term “data” presupposes an externally predefined context within which it has a meaning. Those of us whose blood pressure has been measured will probably recognize the way the results were quoted as something like “sixteen, eight” (if they were lucky)―whatever that might mean! Information, however, cannot be dissociated from its context in a similar way. In this it is semiotic in nature, rather than simply semantic. Most people would almost certainly have no idea in what units the numbers of a blood-pressure measurement make sense as data, but are probably well aware that “twenty, eighteen” is worth worrying about; the context within which the numbers have a meaning is far wider and more diffuse than “in mm of mercury” (e.g. “… the doctor told my auntie she had ‘twenty, eighteen’, and she had to go into hospital…”).

Information processing in a system always takes place between different “architectural” scales of a processing entity: simplistically, we can view intelligence as the “tool” which permits an “overview” of the relevance of individual items of information and the means by which all available information is taken account of in generating or updating a new, higher-level, simplified representation of the information system. As such it functions as a complex mix of context-translator, interpreter and comparator. The reason why “normal” rationality loses track of this is that the translations, interpretations and comparisons it makes cannot be simply reduced to a combination of one-to-one, one-to-many and many-to-one relationships. Following Robert Rosen (see, for example, [10] [11]), complexity can be categorized as follows:

“… If there is no way to completely model all aspects of any given system, it is considered ‘non-computable’ and is, therefore, ‘complex’ in this unique sense”.

Intelligence not only resembles this categorization, but also depends on it in the way it operates. The authors are not aware of any single analytic or synthetic form capable of completely mirroring the properties and operation of intelligence, nor does it seem likely that there could be. Similarly referring to Rosen’s description of complexity, this time through the words of Mikulecky [12] :

“Complexity is the property of a real world system that is manifest in the inability of any formalism being adequate to capture all its properties. It requires that we find distinctly different ways of interacting with systems. Distinctly different in the sense that when we make successful models, the formal systems needed to describe each distinct aspect are not derivable from each other”.

As we indicated above, the translations, interpretations and comparisons intelligence makes cannot be simply reduced to a combination of one-to-one, one-to-many and many-to-one relationships. This, then, is the most inconvenient characteristic of intelligence: we cannot make conventionally simple models of it. We have commented earlier [13] that

“the primary quality of any recognizable entity is its unification. It is easy to bypass this aspect and concentrate on more observable characteristics, but an entity’s unification cannot be ignored if we are to come to any understanding of its nature and operation. A viable characterization of ‘unification’ is provided by comparison between intra-entity correlative organization and entity-environment inter-correlative organization―between cohesion and adhesion [14] . Although the difference between these two for a crystal is substantial, that for a living organism is far higher”.

System unity presumes a degree of coherence across all the scales of a system, where all of these are derived from others through intelligence. Sapience is the “tool” which permits evaluation of the relevance to a common purpose not only of individual items of information―as does intelligence―but also of the multiple system scales themselves as individual informational “entities”. In this its usefulness, value or meaning is reminiscent of the overarching Aristotelian concept of final cause, rather than his other more local concepts of material, formal and efficient cause. We will return to Aristotle’s [15] causes later―not only to final cause, but most especially to Rosen’s [16] consideration of the importance of internalization of efficient cause in organisms.

When quantum mechanics forced itself onto the scientific stage at the beginning of the twentieth century it brought with it a major illumination of the way nominally independent entities relate to each other through measurement. No longer was it sufficient to presume that we could stand outside an experiment and remain extraneous to its results. Matsuno [17] and Salthe [18] have pointed out that measurement in its most general form may be likened to a mutual observation between experimental “subject” and “object”―both contribute to the experiment’s conclusion, and neither remains untouched. This describes perfectly the dynamic relationship between different scales in a unified system: their mutual observation promotes an unending mutual adaptation.

Rosen [16] has proposed in great mathematical detail a formal self-referencing cycle of constructive causes which could be capable of maintaining the temporal viability of living systems. However, although his work includes a single but important reference to the mathematical possibility of “a hierarchy of (different) informational levels” [19] , to the best of the authors’ knowledge he never explicitly referred to the importance of scale in organisms, let alone the generation of hyperscale. Arguably, the most significant aspect of hyperscale is that it permits an organism to simultaneously operate, or appear to operate, as both a mono-scalar and a multiscalar entity [13] . The individual scales of a natural system are partially isolated from each other (through enclosure) but also partially in communication (through process-closure). The balance between these two through mutual observation takes the form of an autonomy negotiation [13] . Hyperscalar coherence is created through this mutual inter-scalar observation, whose recursive nature ultimately generates the independence of high-level consciousness, making humans human. Consciousness acts both as the servant of intelligence and sapience and their master in promoting an entity’s coherence, cohesion and survival. We conclude that intelligence and sapience are distinct and necessary properties of all information processing systems, and that the degree of their availability controls a system’s or a human’s cognitive capacity, if not directly its application. This establishes intelligence and sapience as prime ancestors of the conscious mind.

To our knowledge there is as yet no mathematical approach which can satisfactorily deal with the native irrationalities of information integration across multiple scales, although one promising suggestion has been published [20] . The principal difficulty lies, however, not in the mechanics of developing a self-consistent mathematics, but in escaping from the ‘blindness to viewpoint’ which is a natural consequence of our stated final conclusion that

we live within hyperscale.

The central hypothesis is that we mentally integrate all observational scales into a hyperscalar “phase space” within which we are free to roam without taking any account of the “reality” of the “location” from which we make our observations. Considerations of internalism, externalism and even the “existence” of our viewpoint itself are ephemeral within hyperscale: we are the lords of our own creation, of our own “scale-free selves”, and we can fly anywhere, view anything. Powerful though this may be, it dangerously conceals any distinction between “what really is”9 and “what we make use of”―as we pointed out earlier, we can expect the landscape of our spatio-temporal hyperscalar “phase space” to include not only “facts”, but also suppositions and conveniences.

2. Intelligence Sees Scale

Our first concrete task will be to address a long-standing problem related to sensory integration which most obviously raises its head in the way we habitually think about combining a number of elements into a whole. We are used to presuming that we can be simultaneously and accurately aware of both an entity and its constituent elements. Unfortunately, this presumption creates an apparently esoteric but intellectually-catastrophic problem, especially in the cognitive domain, which we must address before going any further. Whilst being a necessary part of our argument, this also provides an excellent example of both the logical power and the logical risk of relying on hyperscalar-located transferable presence in constructing a world view of presumed accuracy.

Let us first propose a simplistic provisional difference between natural and artificial contexts. As their name suggests, we will define natural contexts as those which come to pass without human intervention, and following the usual meaning of artificial we will define artificial contexts as those resulting from human intervention. Continuing our simplistic progress, we note that the stability of natural systems depends on naturally occurring constraints, while that of artificial systems depends primarily on imposed constraints, either directly or indirectly exercised by human intervention. Simplistic though this proposition may be, it leads us to an important conclusion about the possibility of simultaneous and accurate awareness of both an entity and its constituents. Figure 1 illustrates different forms of this relationship. Figure 1(a) shows what we will refer to as a collection of elements or observations, each of which is labeled as a kind of a. It is rather like “a bag” containing a black hole: we can put things in, but never see any relationship between those things; only the local exists, and there is no consequent global representation. Figure 1(b) shows a classical mathematical set10, where we can manipulate both elements and their global representation within one and the same rational environment. Figure 1(c) shows the usual implication of “self-organization”, where “the whole” b is not equivalent to “the sum of the parts” a1, a2, a3... i.e. the global representation cannot be directly obtained from knowledge of the elements from which it is generated: there is no complete direct local-to-global correlation.

Figure 2 illustrates the collection a1, a2, a3 ... we have referred to, presented as if we could “see everything”, which is a dangerous operation, because if we are not careful the collection will mutate into a set, whether we want it to or not! This collection of a1, a2, a3 ... can be represented from “a single viewpoint” in a number of different ways b1, b2, b3... There is an implied ‘quot homines, tot sententiae’, in that in general the collection can appear to have as many different implications or meanings as we attribute points-of-view b1, b2, b3...

We can define (i.e. conveniently refer to) the collection a1, a2, a3... as A, but we cannot derive A from a1, a2, a3... as it is outside their individual contexts (we would have to be able to see into “the bag”, and know all of their current inter-relationships). A is the single-point-of-view collection of a1, a2, a3... (N.B. there is only one A―so far as we are aware), and from a single collection A we get a one-to-many relationship with a multiplicity of b1, b2, b3... This is radically different from the more familiar construction of a set A of a1, a2, a3... (Figure 1(b)), where we can not only derive A from a1, a2, a3, ... , but we usually presuppose that the two are equivalent.
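As a loose computational analogy (ours, and not part of the formal argument), the contrast between a set and a single-viewpoint collection can be sketched in Python. The element values and the three viewpoint functions below are hypothetical, chosen only to show that a set-like aggregate is directly derivable from its elements, whereas one and the same collection can yield several global representations b1, b2, b3... depending on the point of view adopted.

```python
from statistics import mean

# Hypothetical elements a1, a2, a3 (illustrative values only).
elements = [2.0, 3.0, 7.0]

def set_representation(items):
    """Set-like case (Figure 1(b)): b is simply the sum of the a's."""
    return sum(items)

# Hypothetical points of view; each maps the same collection to a different b.
viewpoints = {
    "b1": max,                                     # attends to the largest element
    "b2": mean,                                    # attends to the typical element
    "b3": lambda items: max(items) - min(items),   # attends to the spread
}

def collection_representations(items):
    """Collection case (Figure 2): one collection, many global representations."""
    return {name: view(items) for name, view in viewpoints.items()}

set_b = set_representation(elements)
collection_bs = collection_representations(elements)
print(set_b)
print(collection_bs)
```

The sketch necessarily “sees everything”, so in the terms used here it already treats the collection as a set; it illustrates only the one-to-many relationship between A and the b’s, not the inaccessibility of A itself.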

Figure 1. (a) A collection of elements a, where there is no global representation; (b) a mathematical set of elements, where b equals the sum of the a’s; (c) “self-organization”, where b is not easily related to the sum of the a’s.

Figure 2. The collection of elements a, presupposing that we could “see everything”, and showing a number of different global representations b as seen from different points of view.

Whereas the collection of Figure 2 exemplifies an isolated natural context, the (mathematical) set of Figure 1(b) is an example of an artificial context, where stability is maintained by intentionally/externally imposed constraints (in this case the axioms which delineate the domain of applicability of the context’s logic system). These two―the collection and the set―provide limiting extremes of the more familiar context shown in Figure 3, where there is a greater degree of predictability in the derivation of a “more globally applicable” global form b from a set of elements or observations a1, a2, a3.... Any specific b is given by all of a1, a2, a3... mutually aligning themselves within some kind of stability―an infinite process if carried out to perfection. Here again, however, it is easy to fool ourselves. Any collection is a purely externalist description―A is externalist from the point of view of an externalist formal model of the viewpoint of a chosen b. This raises all sorts of problems!
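The notion of elements mutually aligning themselves toward a stable global form can be illustrated, very roughly, by an iterative averaging scheme. This is a toy sketch under our own assumptions: the update rule, the `coupling` parameter and the starting values are hypothetical and are not a model proposed in this paper. Each element repeatedly moves part of the way toward the current mean, so that the spread shrinks geometrically and, in exact arithmetic, would only vanish after infinitely many steps.

```python
def align(elements, coupling=0.5, steps=10):
    """Move every element a fraction `coupling` toward the current mean, `steps` times."""
    values = list(elements)
    for _ in range(steps):
        centre = sum(values) / len(values)
        values = [v + coupling * (centre - v) for v in values]
    return values

def spread(values):
    """Distance between the largest and smallest element: a crude measure of misalignment."""
    return max(values) - min(values)

# Hypothetical starting elements a1, a2, a3.
start = [1.0, 4.0, 10.0]
after_5 = align(start, steps=5)
after_20 = align(start, steps=20)
print(spread(start), spread(after_5), spread(after_20))
```

In this toy case the emergent b is the common value the elements approach; the point is only that the alignment is asymptotic, with a residual misalignment remaining at every finite stage.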

To try and accurately describe the character of a specific b, either you have to do this from its own single point-of-view, in which case you can’t directly relate to A but b equals some kind of recursive integral of a1, a2, a3..., or you have to adopt another different (single) viewpoint. In this latter case you are then using an external model of b (i.e. you have to formalize―simplify―to say anything at all!). So either you only “see” a single b―a rather Newtonian conclusion, depending on intentionally imposed constraints―and b equals A or is derivable from A or you presume it is derivable from A (i.e. there is a causal relationship), or you “see” multiple b’s and you have no idea where they come from or how they are derived!

All of this implies that a collection is “defined” without “observer intelligence”; definition of a mathematical set presumes that there is “observer intelligence”―when in fact there is no intelligence involved; recognition of real scale requires “observer intelligence”. And it all seemed so simple to start with!

A small linguistic example should help at this point. If we think of each a as an individual’s pronounced word, then A is the hypothetical (and probably inaccessible) complete established set of meanings attributed to that pronounced word by the complete community of pronouncing individuals. Any specific b is then the community-negotiated agreement as to the sense of a within a specific meaningful context, where the entire collection of understood meanings is B (N.B. the sound we would make in English from the syllable “ma” can mean at least either “mother” or “horse” in Chinese by dint of its pronounced intonation―a somewhat risky confusion, which English-speakers would not usually be aware of). Note that a completely uniform language would exhibit11 b = a; a somewhat egotistical individual would presume b = a; a successful “living” language would exhibit a ≈ b by cultural agreement: only a completely controlled language (e.g. the “Newspeak” portrayed in George Orwell’s book “1984”) would make A equal to a controller-decided b―clearly an intentionally-constrained context!

The central conclusion we can draw from this account is that the incredible successfulness of our reliance on hyperscalar-based transferable presence effectively blinds us to whether we can reasonably presuppose equivalent access to different scales of a system or not. We would argue that in general we do not have equivalent access to different perceptional scales, and that the “scales” we apparently access are our internal models of them, which may be very different from “reality”. Scanning electron microscopes are now capable of providing photographs of single atoms, but whether the spherical images they produce prove that atoms are indeed spherical is somewhat debatable!12

Figure 3. A more “normal” situation intermediate between the collection of Figure 1(a) and the set of Figure 1(b), where there is a reasonably predictable emergent global representation b.

So, the idea we would wish the reader to hold on to at this point is that of the fallibility of our observation and understanding of scale. It may come as no surprise that our next action will be to apparently contradict this notion! Apparently yes, but in fact no! We merely wish to use this reminder as a device to emphasize that intelligence is not the logically self-consistent mechanism our transferable-presence intelligence tells us it is. We may not “correctly” view scale, but intelligence does! Logical rules are a crutch to rely on in the absence of intelligence. Intelligence is capable of far more than that.

We now appear to be suggesting something thoroughly idiotic: that although our human intelligence―of which we are so proud―is capable of transparently manipulating inter-scalar information conversions, we “ourselves” with our “all-powerful” conscious minds are incapable of maintaining a similar transparency without relying on the artificially constructed logical completeness of simple inter-scalar models. However, not only is the suggestion valid, but it could be no other way. We have already planted the seeds of this conundrum in previous sections of the paper.

Intelligence operates locally between system scales, and as such it is just as directly inaccessible from a “global” point of view as are the individual scales themselves: the wonderful integration of multiply-scaled phenomena through hyperscale precludes our direct and accurate access to local phenomena. Intelligence is an isolated component of our thought processes; a tool which operates within the confines of an ephemeral cage we construct momentarily to provoke its function. This idea that although we may be aware of the astounding capacity of intelligence we are unable to “reproduce” it within our conscious actions recalls Metzinger’s [5] “naïve realism” hypothesis concerning the emergence of a “first-person perspective” in consciousness, that

“the representational vehicles employed by the system are transparent in the sense that they do not contain the information that they are models on the level of their content… ‘Phenomenal transparency’ means that we are systems which are not able to recognize their own representational instruments as representational instruments… A simple functional hypothesis might say that the respective data structures are activated in such a fast and reliable way that the system itself is not able to recognize them as such any more (e.g. because of a lower temporal resolution of metarepresentational processes making earlier processing stages unavailable for introspective attention)… For biological systems like ourselves―who always had to minimize computational load and find simple but viable solutions―naïve realism was a functionally adequate ‘background assumption’ to achieve reproductive success”.

In the strict sense we earlier gave to the word artificial, intelligence operates within an artificially constrained environment, or at least that is how it must locally appear to be, as the constraints are applied from scales other than local ones. In a natural context, however, there is a local-to-and-from-global consistency across the system-wide gamut of constraints, which corresponds to the unification of hyperscale. Local information processing is then mediated through natural intelligence, characterized as we describe above. In an artificial system, although there may be an attempt at consistency of constraints, this will never be complete13, and local information processing will be mediated through artificial intelligence (which linguistic usage corresponds exactly to that of Artificial Intelligence―AI). This situation corresponds to Figure 1(b).

Figure 2 illustrates the typical form of an inter-scalar information processing scenario. The multiple outcomes b1, b2, b3… correspond to different processing constraints, either on different occasions or at different points in time within a single occasion14. It is easy to see from this why it is tempting to be scathing about the commonly addressed “phenomenon” of “self-organization”. In anything other than a radically simple system different external system constraints result in different outcomes―organization is essentially externally driven and not internally: it would be more accurate to invoke as a description “environmental-organization”, rather than “self-organization”! It is important to note that “external” here only means “external to an entire system” if we are talking about system-wide processing. It may also mean only “external to a sub-system”―in which case the drive can still be internal to the system as a whole. This is an important point in systems exhibiting cyclic process-closure, for example in the organisms analyzed by Robert Rosen [16], where the internalization of efficient cause depends on this duplicity of appearance.

Figure 4. The manifestation of intelligence at a series of different levels in a multiscalar “system” where there is no global consistency between the various locally-applicable rationalities.

If we rely on our “typical form of an inter-scalar information processing scenario” (see Figure 3) to represent a manifestation of intelligence, then in a multiscalar system it appears in a number of locally isolated processes, as illustrated by (pre-a → a), (a → b) and (b → c) in Figure 4. This diagram, however, presupposes that there is no single cross-scalar rationality (e.g. as in a digital computer―which is therefore scale-less) and no hyperscalar correlation which couples together all the individual scales. The local processing constraints, therefore, are in all cases just that―purely local and individual―and the “system” is in fact not a system at all, but a completely fragmented assembly of multiply-scaled sub-components. In the absence of a global unifying “mechanism”, the individual scales correspond to the concept of a collection we proposed earlier. Global coherence would demand the presence of

1) a single globally consistent cross-scalar rationality (in which case it is an artificially constrained system, and is therefore in reality scale-less), or

2) a set of inter-scalar rationalities which conforms to some consistent global pattern (i.e. it is again a scale-less artificial system), or

3) a hyperscalar correlation (i.e. it is a natural system exhibiting real perceptional scale).

We conclude that in a naturally cohering multiscalar system there are two distinct information processing “tools”. One of these operates between pairs of scalar levels to establish local scalar coherences; the other operates across the entire assembly of scalar levels to establish global scalar coherence. We associate the former tool with intelligence and the latter with sapience. Neither tool is independent, and in a natural system each of them relies on the other to provide its indispensable constraints. There is, however, a clear difference between the two relative to the emergence of a system and its stabilization. Intelligence is primarily a system-building tool; it can promote the creation of new scales and local stabilization. Sapience, however, is more concerned with the viability of an already-built scalar assembly: it has no function in a minimally-scaled entity, but comes into its own with increasing system size as the central generator of system-wide coherence and stability:

intelligence is how we get there; sapience is how we remain there.

In distinguishing between intelligence and sapience we have located the two precisely within different contexts, which provides a precise separation in meaning. Although within this paper we can establish an exact context for intelligence, outside the pages of this journal we completely lose control, and in this as in every other categorization the result becomes less than entirely clear15.

Across the complete spectrum of possibilities, a categorical separation of intelligence and sapience from an external viewpoint is implausible, most especially in that at a higher level they are both complementary components of wisdom. However, the primary distinction remains: intelligence sees scale; sapience sees all scales.

3. Sapience Sees All Scales

What happens when a multi-cellular network starts to expand? Rather than answering the question immediately we will back-track a little, to ask “how is it that a collection of cells constitutes ‘a network’ in the first place?” A simple description would be to suggest that inter-cellular cohesion must be greater than adhesion between the cells and parts of their environment. One possibility would be that a primitive force is responsible for the cohesion: gravity holds our planet Earth together, for example. A more advanced possibility is that it may be in the interest of a given cell to associate with others; often small fish remain in large shoals, apparently to increase their individual chance of survival in the presence of a predator. This “self-interest” proposal is of a cohesion based on communication16. Unfortunately, however, the basic nature of our surroundings is that of a restriction on communication, which makes it possible to differentiate between here and there; between this and that. A consequence is that communication is never instantaneous between different spatial locations; which leaves a spatially-large network vulnerable to stimuli which drive its various parts out of synchronization. Our answer to the initial question, therefore, must be that when a network of cells expands it will risk fragmenting if it cannot find a way to overcome the energetic needs of its progressively massively-scaled inter-cellular communication.

It is a basic tenet of information theory that communication requires energy. Ultimately, if a network expands beyond a certain point, the energy required to maintain communication-based cohesion will rise beyond the collection’s available resources. The only interesting strategy―interesting from our own point of view, that is―is for the network to generate a new global representation of itself, which both communicates internally in a simplified less energetic manner and permits economical external relations to be exercised. Intra-network communication energy can be saved by formalizing the nature of inter-cellular communication―by slaving [21] - [23] all the cells to one communication-model. Extra-network energy can be saved by only communicating information which is externally relevant17. Biological cells operate in precisely this manner. By first enclosing themselves in an “impenetrable” lipid membrane they are free to open up only those specific communication channels they wish to, and can portray themselves to their surroundings in whatever manner is “convenient” or “successful”.
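The scaling argument above can be made concrete with a rough count of communication channels. The sketch below assumes, purely for illustration, that communication energy is proportional to the number of channels a network has to maintain; this proportionality and the function names `pairwise_links` and `slaved_links` are our assumptions, not part of the cited sources.

```python
def pairwise_links(n: int) -> int:
    """Channels needed if every cell communicates directly with every other cell."""
    return n * (n - 1) // 2


def slaved_links(n: int) -> int:
    """Channels needed if every cell couples only to one shared communication-model."""
    return n


# Compare how the two schemes grow as the network expands.
for n in (10, 100, 1000):
    print(n, pairwise_links(n), slaved_links(n))
```

Under this assumption the all-to-all cost grows roughly with the square of the population, while the slaved cost grows only linearly, which is one way of reading why a simplified global representation becomes attractive as a network expands.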

This expansion scheme is a classical emergent scenario, where a new simplified architectural level emerges from an underlying population, and the new level exerts “downward causation”, or slaving, on the population individuals. It is important to notice that this creates a temporally infinite feedback loop:

1) (upward) emergence causes (downward) slaving,

2) which modifies (upward) emergence,

3) thus realigning (downward) slaving,

4) modifying (upward) emergence, and so on.

We will return to this vitally important aspect of infinity in the next section of the paper.
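The loop listed above can be illustrated with a deliberately simple numerical toy. In this sketch the emergent level is taken to be the mean of the cell states, and slaving pulls each cell toward that mean with its own coupling strength; both choices, and the names `emergence`, `slaving` and `run_loop`, are hypothetical simplifications rather than a model proposed in the paper. The loop is also cut off after a finite number of rounds, whereas the text describes it as temporally infinite.

```python
def emergence(cells):
    """Upward step: summarise the population as one simplified global value."""
    return sum(cells) / len(cells)


def slaving(cells, level, couplings):
    """Downward step: pull each cell toward the global value by its own coupling."""
    return [c + k * (level - c) for c, k in zip(cells, couplings)]


def run_loop(cells, couplings, steps=100):
    """Alternate emergence and slaving, recording the global level at each round."""
    history = []
    for _ in range(steps):
        level = emergence(cells)                  # emergence from the current population
        history.append(level)
        cells = slaving(cells, level, couplings)  # slaving modifies the population
    return cells, history


final_cells, levels = run_loop([0.0, 1.0, 4.0], couplings=[0.1, 0.5, 0.9])
print(levels[:3])                            # the emergent level shifts from round to round
print(max(final_cells) - min(final_cells))   # spread between cells after many rounds
```

Because the cells respond unequally, each round of slaving changes the population, which in turn changes the next emergent level; in this toy the process settles toward a common value.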

What has happened once can reoccur: a network which has managed to generate a new survival-promoting architectural level can do the same again and again… Arguably, and surprisingly, it will be easier to generate further new levels than it was to create the first one [24] . If we follow through with the logic of this argument, we can relatively easily end up with a naturally constrained system which is multiscalar and still unified. Let us stop for a moment at this critical point: what does it mean to say that the system is both multiscalar and unified? The entire architecture must be quasi-stable; each of the many scalar representations must be quasi-stable; each of the many inter-scalar interfaces must be quasi-stable. But notice, as in the skeletal representation of Figure 5, that this is only possible if there is a degree of correlation across the entire architecture, across all representations, across all interfaces. Not, however, complete correlation, which would imply that the scales are not real, but a partial correlation which is local to both individual representations and individual interfaces.

Figure 5. An archetypal multiscalar natural system, displaying quasi-stability across the entire gamut of its features, evidencing a degree of correlation across the entire architecture, across all representations, across all interfaces.

The relationship between any scale and its direct neighbors is fundamentally local in nature: the meaning or function of a biological cell when seen from a biological organ is very different from the meaning or function of an organ when seen from the organism. Inter-scalar relationships are very much a “you scratch my back and I’ll scratch yours” kind of thing―but the different scales’ “backs” are poles apart! Adjacent scales are at liberty to negotiate away some uninteresting aspect of their autonomy in return for the receipt from their neighbors of a more valuable autonomy. Collier [14] has proposed as a plausible example of this autonomy negotiation that the brain has in the past ceded its biological support function to the body in return for greater freedom of information processing.

The global “integration” of this multiplicity of local negotiations serves to optimize as many aspects of a system’s operation as possible. A major result is the ejection into the scalar-interface regions of the majority of the intractable complexity which would otherwise reside at the scalar levels [13] , converting them into approximate Newtonian potential wells18 and raising the efficiency of the system’s computational response to external stimuli.

So, we now have a picture of a unified large system as a set of different quasi-stable scalar levels which are all quasi-correlated through hyperscale19. Centuries of philosophical effort, decades of neurological investigation and years of accurate imaging of the brain’s operation culminate in the supposition that we construct models in our “mind’s eye” which mirror the objects of our thoughts. The authors believe that we relate to hyperscalar systems from within our own assiduously-constructed hyperscalar mental environment! More than this, even―we believe that this is our “mind’s eye”!

We live within hyperscale.

There is a great similarity between this suggestion and Metzinger’s [5] proposition of naïve realism through phenomenal transparency:

“The phenomenal self is a virtual agent perceiving virtual agents in a virtual world. This agent doesn’t know that it possesses a visual cortex, and it does not know what electromagnetic radiation is: It just sees ‘with its own eyes’―by, as it were, effortlessly directing its visual attention. This virtual agent does not know that it possesses a motor system which, for instance, needs an internal emulator for fast, goal-driven reaching movements. It just acts ‘with its own hands’. It doesn’t know what a sensorimotor loop is―it just effortlessly enjoys what researchers in the field of virtual reality call ‘full immersion’, which for them is still a distant goal.” [5]

Figure 6 illustrates how we imagine this environment to be built and used. Inter-scalar constructional integration (through intelligence) adds all of the individual scales into the “virtual”20 scale-space of hyperscale. Within this space there is freedom of movement of the “spotlight of consciousness”, as we are now only dealing with models of a particular system scale, and not that scale itself in all its characteristics, and the “conscious observer” can access everything and everywhere in a transparent manner―with or without knowledge that “it is all only models”.

Figure 6. The construction of a hyperscalar environment from its constituent collection of “mutually inaccessible” individual scales, and its use in transparently accessing them.

Sapience permits the “observer” to visit all scales, all points of view, and to “see” clearly whether they are in correlation with each other, or what changes need to be made. As such, sapience is the main “synergetic21 tool” permitting stabilization of the overall architecture of a system or, in our representation, of the “mind” itself.

We are now in a position from which we can provide an example of how this scheme works. All of the information, propositions, models and figures we have discussed in this paper appear within the hyperscalar scale-space of you, the attentive reader. This text itself is a hyperscalar device! Figure 6 presents a hyperscalar viewpoint; so, most particularly, does Figure 1(a), where we have transcended the impenetrable nature of our imagined “bag containing a black hole” in drawing the figure! The authors suggest that at this point the reader should “think about his or her complete environment” (a hyperscalar term, if ever there was one!), and notice that there is apparently no barrier to imagining, nay visiting all scales of all contexts [26].

The greatest difference between naïve realism through phenomenal transparency and hyperscalar scale-space is that while Metzinger’s [5] proposition is derived from philosophical considerations in a top-down manner, our own argument is constructed bottom-up from system architecture.

We must now turn our attention to the “conscious observer” who we somewhat inexcusably slipped into the discussion in the manner of the author in Fowles’ [27] novel The French Lieutenant’s Woman.

4. Consciousness Makes It All Happen

Consciousness is a tricky phenomenon to get hold of. The reader will already have noticed that we, the authors, do not treat consciousness as being the sovereign of cognition, but as both the servant and master of intelligence and sapience. Consciousness only gains credibility in the context of mind; and vice versa! The emergence of consciousness depends primarily on the presence of real scale in a system―for this reason it is most likely that artificial systems could never exhibit consciousness. To see how we can justify attributing the emergence of consciousness to scale we must first follow through the arguments presented by Robert Rosen [16] for the success of Newtonian physics and the independent sustainability of living organisms.

The key to stability lies in the convergence or truncation of temporally infinite feedback loops such as that we described in the previous section, where up-scaling causes down-scaling, which causes up-scaling, which causes down-scaling, and so on.

A nice example of a convergent loop can be found in electronics in the guise of an operational amplifier (an “op-amp”). Figure 7(a) shows the symbolic representation of an op-amp with a “complementary” pair of inputs (labeled “+ve” and “−ve” in the figure) whose output Vout is fed back after a short delay τ to the negative input, −ve. Positive input voltage Vin applied to the +ve input will drive the output positively; positive input to the −ve one drives the output negatively. However, the op-amp itself pushes its output up to a large multiple of the difference between the two input voltages at +ve and −ve. For practical op-amps this amplification would probably be by a factor of more than one million. It is not difficult to see that having once been started up by a voltage at the +ve input, the op-amp output will cycle negative, positive, negative, positive… without stopping, as shown in Figure 7(b). However, that is not what electronics textbooks tell us―and on the contrary, if we measure the output, within certain constraints it will be equal to the input. The model we have presented left out “imperfections” in the op-amp which cause the cycling signal to lose energy, and the output converges towards a final state. In general terms this would correspond to the emergence of a new system character through the dissipation of energy. More often than not, however, dissipation leads to the eradication of structure, and to the phenomenon usually referred to as “heat death”22. In the case of our op-amp, internal dissipation does indeed lead to the decay of signals which are above the op-amp’s characteristic cutoff frequency, leaving only a quasi-static (low-frequency) output. Truncated infinite looping is infinitely more interesting than convergence through dissipation, as it does not preclude rapid changes in cycling information.

Figure 7. (a) An operational amplifier without internal energy dissipation; (b) the resulting sequence of output voltages Vout.
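The two behaviors of the op-amp loop described above can be sketched in discrete time. This is a toy illustration, not a circuit simulation: the supply-rail limit `rail`, the per-step response factor `alpha` and the function names are all assumptions introduced here, and the feedback delay τ is represented by one iteration step.

```python
def clip(v, rail):
    """Limit a voltage to the (assumed) supply rails."""
    return max(-rail, min(rail, v))


def ideal_loop(v_in, gain=1e6, rail=10.0, steps=6):
    """Delayed feedback with no dissipation: each output follows from the previous one."""
    v_out, trace = 0.0, []
    for _ in range(steps):
        v_out = clip(gain * (v_in - v_out), rail)
        trace.append(v_out)
    return trace


def lossy_loop(v_in, gain=1e6, rail=10.0, alpha=5e-7, steps=60):
    """Same loop, but the output only moves a small fraction `alpha` per step."""
    v_out, trace = 0.0, []
    for _ in range(steps):
        drive = gain * (v_in - v_out)  # amplified difference between the two inputs
        v_out = clip(v_out + alpha * (drive - v_out), rail)
        trace.append(v_out)
    return trace


print(ideal_loop(1.0))      # swings between the rails without settling
print(lossy_loop(1.0)[-1])  # settles very close to the input voltage
```

In the lossy variant the output settles at gain/(gain + 1) times the input, which for a gain of one million is indistinguishable in practice from the input itself; this corresponds to the textbook statement that the output equals the input within certain constraints.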

Rosen’s argument in relation to Newton’s Laws [16] is (very sketchily) as follows. We split the Universe into self and ambience, then ambience into system and environment, and begin with the identification of a “particle” as a formal structureless object which encodes ‘something’ in the “real” world. The first relevant question is where is it? The answers form a “chronicle”―a list of positions, but no way to derive any one of them from any other one. A second feasible question is of the derivative of the particle’s position: What is its velocity? The answers again form a chronicle: a list now of velocities; but of prime importance is that there is no way to derive the entries in one chronicle from those in the other! This questioning can be continued indefinitely, through what is the derivative of its velocity, what is the derivative of its acceleration, and so on, to infinity.

We now have, unhelpfully, an infinite collection23 of chronicles containing apparently unrelated entries! Newton’s First Law then specifies that in an empty environment the particle cannot accelerate: this truncates the infinite collection of chronicles, leaving just those of position and velocity. The key to performing a similar truncation for a non-empty environment lies in Taylor’s Theorem. The infinite set of chronicles expresses everything there is to know about the particle (they determine its state), and Taylor’s Theorem tells us that present state entails subsequent state: the state is recursive.
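As a reminder of why the chronicles jointly determine the subsequent state, the standard Taylor expansion of the position can be written out. This is the textbook form, given here under the assumption that the position is an analytic function of time; the notation is ours rather than Rosen’s.

```latex
% Taylor expansion of the position about time t:
% every chronicle (position, velocity, acceleration, ...) contributes one term.
x(t + \Delta t) = \sum_{n=0}^{\infty} \frac{\Delta t^{\,n}}{n!}\,
                  \frac{\mathrm{d}^{n} x}{\mathrm{d}t^{n}}(t)
                = x(t) + v(t)\,\Delta t + \tfrac{1}{2}\,a(t)\,\Delta t^{2} + \dots

% Empty environment (First Law): the acceleration and all higher derivatives vanish,
% so only the chronicles of position and velocity survive.
x(t + \Delta t) = x(t) + v(t)\,\Delta t, \qquad v(t + \Delta t) = v(t)
```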

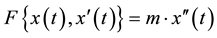

Newton’s Second Law collapses the state down to two variables by first representing the environment entirely by its effect as a force on the particle, then by reflecting this force in a single chronicle of acceleration, and finally by expressing the force as an explicit function of only position and velocity:

$$F(x, v) = m\,a = m\,\frac{\mathrm{d}^{2} x}{\mathrm{d}t^{2}} \qquad (1)$$

where $F$ is the environmental force, $x$ the particle position, $v$ its velocity, $a$ its acceleration, $m$ its mass and $t$ the time; or more familiarly

$$F = ma \qquad (2)$$

Rosen has used the curtailment of infinity in Newtonian physics as an introduction to his own formulation in Life Itself [16]. His general argument is that both Newtonian and living systems require an apparently infinite sequence of conditions to be valid, but also that both are stabilized by cutting off that infinite sequence. More accurately, his argument in respect of living organisms does not refer to simple truncation (by just cutting off a sequence) but to truncation of the form of the sequence by replacing the cut off member by one which was already encountered, earlier in the sequence. The result resembles the form of the op-amp circuit shown in Figure 7: the “output” is connected back to the “input”, creating a loop. As for the op-amp circuit, once the circuit has been started up, it will continue on its own―assuming, of course, as we initially did, that there is no dissipation. The original input is now no longer necessary, and it can be removed, leaving a self-sufficient system to oscillate into eternity. There are two important points here:

1) this self-sufficiency was Rosen’s main concern―to explain how life can be self-perpetuating once started, and

2) in fact, infinity is not removed from the picture: the infinity of one kind of sequence is merely replaced by the infinity of another. Connecting the output of an op-amp back to its input creates a temporal infinity through feedback, as we pointed out above24.

Rosen [16] defines an organism in relation to Aristotle’s [15] efficient cause25:

“a material system is an organism if, and only if, it is closed to efficient cause”.

Life, when described this way, is similar to fire from the point of view of a primitive people who cannot light fires on their own. Once started it can continue on its own, transfer from one substrate to another, split up, sire new offspring, populate large areas, and all this with just the input of some environmental material. But if it dies out, then that is the end of it. By “closed”, Rosen means that the answer to “Who or what constructed this part of the organism?”―i.e. its efficient cause―can always be found inside the organism itself.

Rosen’s argument leading to the self-sufficiency of organisms [16] is (again sketchily) as follows. The initial step is to draw in relational form the metabolic conversion of a collection of “environmental chemicals” A to a collection of “enzymes” B (Figure 8(a)) by a biological component f26.

The hollow-headed arrow indicates a flow from an “input” to an “output”; the solid-headed arrow denotes the induction of this flow by a component (or “processor”)27. If efficient cause is to be internalized, we must now ask “what makes f?” We can resolve this problem by adding in a new processor Φ, which “makes” f (Rosen calls this function repair) from its internally available supply of ingredients B (Figure 8(b)).

Figure 8. Rosen’s constructional sequence to internalize efficient cause in organisms: (a) the first step―establishing the relational form of a metabolic process; (b) adding a repair function based on internally available metabolic products; (c) adding a replication function as a separate component; (d) deriving replication from metabolism and repair, which merges replication into the system, rather than imposing it as an external cause.

This relational diagram represents any one of Rosen’s category of “(M, R)-systems”28. Unfortunately, of course, we must now ask “what makes Φ?” Easy! We throw in another processor β which “makes” Φ (Rosen calls this function replication) from its internally available supply of components f (Figure 8(c)). Guess what comes next… “What makes β?” However, and it is a very big “however”, it is now possible to resolve the problem in quite a different way.

Rosen points out that under certain, not unduly restrictive, formal constraints on the mapping from A to B, this replication component β can be derived from the already-present mappings of metabolism (induced by f) and repair (induced by Φ). The replication “component” β can then be replaced by the addition of one new solid-headed arrow of induction to the diagram (Figure 8(d)) between Φ and f, which represents the new assumption that an element b of B is in fact a processor (a not too unrealistic proposition if b is an enzyme). Efficient cause is now completely internalized: Rosen states that

“as far as entailment is concerned, the environment is out of the picture completely, except for the initial input A.” [16]
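The notion of closure to efficient cause can be checked mechanically on a small “who makes whom” table. The sketch below is only a bookkeeping illustration of the stages in Figure 8, not Rosen’s category-theoretic construction: the dictionary encoding, the helper name `is_closed_to_efficient_cause`, and the labels `"f"`, `"Phi"`, `"beta"` and `"b"` are assumptions made here for readability.

```python
from typing import Dict, Optional, Set


def is_closed_to_efficient_cause(makers: Dict[str, Optional[str]], system: Set[str]) -> bool:
    """True if every processor in `system` is made by something that is also in `system`."""
    return all(makers.get(part) in system for part in system)


# Stage (b): Phi makes f, but nothing inside the system makes Phi.
stage_b = {"f": "Phi", "Phi": None}

# Stage (c): beta makes Phi, but now beta itself needs an outside maker.
stage_c = {"f": "Phi", "Phi": "beta", "beta": None}

# Stage (d): an element b of B, itself produced by f, acts as the maker of Phi.
stage_d = {"f": "Phi", "Phi": "b", "b": "f"}

print(is_closed_to_efficient_cause(stage_b, {"f", "Phi"}))          # False
print(is_closed_to_efficient_cause(stage_c, {"f", "Phi", "beta"}))  # False
print(is_closed_to_efficient_cause(stage_d, {"f", "Phi", "b"}))     # True
```

The material input A does not appear in the table because it is a supply of ingredients rather than a processor, in line with the quoted remark that the environment enters only through the initial input A.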

The “not unduly restrictive” constraint this imposes on the mapping A to B is that it must be invertible (i.e. mapping B back to A must also be valid). This formally requires that the metabolic mapping A to B be one-to-one, and not many-to-one29.

Let us look at what has happened here. If we take A to be a package of hand-written code, and f to be a compiler (“metabolism”), then the output B can be a functional program30. Given suitable inputs Φ to B, we can arrange that the program (re)generates the compiler f (“repair”), but now we must justify the appearance of Φ (Figure 9(a)). If we continue the generative sequence, then we can spawn Φ, and therefore everything up to this point (except the initial “environmental supply” of written code A) from yet another program which is external to f, B and Φ.

Figure 9. (a) Representing Rosen’s metabolism and repair as a program and its compiler; (b) redrawing the system as an infinite series of nested environments; (c) truncating the infinite nesting series by referring back to an earlier stage.

To finalize this project, we are now well on the way to constructing an infinite set of nested computational environments, each of which creates everything which is inside itself, but each of which must be created from outside (Figure 9(b))31. This is the ineffective scenario which Rosen avoids by referring his new replicating processor β back to the already-included processors f, B and Φ. In doing so he truncates the otherwise necessarily infinite series of “efficient cause” processors. Figure 9(c) illustrates this process within our nested-environment formulation, where the constituents of β are assembled from levels which are inside β, thus truncating the infinite environmental nesting. The reader should be aware that Rosen’s “relational argument” and our own “nesting of computational environments” are mathematically equivalent, in that prior to truncation they share the same relational sequence in Rosen’s graph-theoretic formulation [16]:

where

The computational nesting is nominally multiscalar, but it is in fact a scale-less artificial system32, as can be concluded from examination of the graph-theoretic formulation, where it is possible to travel along the entire relational sequence by recourse to a single formal rationality (i.e. that within which Rosen constructed his arguments). This confirms our earlier conjecture that Rosen’s approach takes no account of scale. Even if that were not the case, if we now attempt to derive by truncation an enclosing environment (or scale) from only a single enclosed environment (or “smaller” scale), then the result is again an artificial system, whose operations indeed apparently “converge”―but not to anything of any particular interest! Much more interesting is that by truncating the infinite series of computational environments we move from global system control to some kind of local control, corresponding to Rosen’s [16] requirement for the internalization of efficient cause in living systems.

Our interest in Rosen’s approach to internalizing efficient cause is probably now becoming clear. Can we use it to see how the conscious mind appears “as if from nothing” in an organism, and in doing so can we relate the mind to intelligence and sapience?

Let us assemble the elements we need for this argument.

1) The quasi-stability of very large natural systems demands that they are multiscalar.

2) Inter-scalar transit in a natural system is counter-rational, in that we cannot rely on the rationality of any specific scale when crossing between scales33.

3) Individual scales are partially enclosed, and communicate with their neighbors through autonomy negotiation.

4) Intelligence is a tool which permits inter-scalar transit, in that it facilitates derivation of the “sub-global” representations of scale from collections of individual elements.

5) Sapience is a “higher-level” tool, in that it facilitates the derivation of “global” hyperscalar representations from collections of individual scales.

6) Intelligence and sapience depend for their success on mutual observation between the subjects of their “integrations”.

7) From within hyperscale we can “transparently” observe all scales, all elements which go to make up that hyperscalar environment―even those scales or elements which are fundamentally perceptionally invisible to us, for example the inside of a black hole!

Figure 6 illustrates the construction and use of a hyperscalar environment. This could never be a static construction, or its “owner”34 would no longer evolve, and would certainly fall victim to environmental change. The perpetual updating of all its inter-scalar and hyperscalar-scalar relationships, however, requires perpetual re-negotiation of all of its subsumed partial autonomies―there is a permanent mutual observation taking place. Now, is it possible that all of this takes place at a single scale? No, clearly not. So we again have a nested collection of environments similar to our re-formulation of Rosen’s proposition. To cut a (very) long story short35, we believe that the internalization of material replication in Rosen’s organisms is mirrored by the internalization of observational replication in natural multiscalar systems. How could this take place?

Rosen depends on the formalized partial isolation of the mappings induced by f, Φ and b to permit the equivalence between the “replicator” β and a combination of the “metabolizer” f and the “repairer” Φ. The development of consciousness from mutual observation in a multiscalar natural system would depend on the partial isolation of individual scales from each other, and most particularly on the negotiated isolation of hyperscale from the collection of individual scales. In Metzinger’s [5] words:

“We are systems that are not able to recognize their subsymbolic self-model as a model. For this reason we are permanently operating under the conditions of a ‘naïve-realistic misunderstanding’: we experience ourselves as being in direct and immediate epistemic contact with ourselves. What we have in the past simply called ‘self’ is not a non-physical individual, but only the content of an ongoing, dynamical process―the process of transparent self-modeling”.

And where does this dynamical “process of transparent self-modeling” take place?

Within the confines of an ongoing partially transparent hyperscale-to-and-from-multi-individual-scalar negotiation.

And how does observation from within hyperscale become stabilized to observation within hyperscale―how does the efficient cause of observation become internalized?

By the natural convergence of inter-scalar and hyperscale-to-and-from-multi-individual-scalar autonomy negotiations, which leads to coherent quasi-stabilized unity of the system and effectively to truncation of the infinite mutuality of observation of observation of observation of observation of observation…

We believe that observation becomes progressively restricted to within the hyperscalar “phase-space”, where it can more easily access “everything it needs” through phenomenal transparency. It should by now be evident that we are not limiting ourselves to a proposition that “consciousness is the ultimate emergence of networked intelligent processing”, but that “consciousness” is more or less a property of all information processing: Tononi [29] [30] maintains that consciousness is equivalent to the integration of information. This does not preclude the generation of a degree of consciousness within the confines of a real neural network which is impossible at lower information-processing densities [24] ―only that this kind of “high-level” consciousness is only ever generated within a hyperscalar environment. Nor does this suggest that we should be able to be aware of different levels of our own mind’s consciousness: all of these would be subsumed into the transparency of hyperscale, where the only “different levels” we observe are ones that we internally “permit to exist”: ego naturally resolves the problem, by insisting that there is “me and only me”! Even so, intelligence requires an observational capacity at its own level; sapience does too; even the interactions of apparently “Newtonian” particles (controversially) do: “unconscious” cognitive processing is maybe not so unconscious after all!

5. The Computational Implementation of Sapience

It will be evident from the preceding discussion that computational implementation of sapience is not only an exceedingly complex affair, but that it also depends on prior computational implementation of intelligence. Herein lies the problem. Is it currently feasible to artificially implement intelligence? Clearly not. The constraints which are imposed on computational machines to validate their performance eliminate any possibility of non-preprogrammed operations. Computers are blind servants of their designers and users. More specifically, the primary function of the system clock in a digital computer, for example, is to eliminate any unforeseen36 global-to-local influences: computer gates are completely isolated from each other, except for the inter-gate pathways laid down during the diffusion, poly-Si and metallization stages of their chips’ manufacture. This aspect of a computer cannot be overstated. Much is made of the emergence of global properties in digital-computational simulations of multi-agent systems. There is no such emergence. The output of a digital computer is solely the result of its design, programming and data input: nothing is created that could not be done, albeit much more slowly, by personally following the process manually. The expression artificial intelligence is an oxymoron: intelligence is real, or it is not at all!
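The claim that a digital simulation's output is fixed entirely by its design, programming and data input can be illustrated with a minimal sketch. The toy model below is our own assumption for illustration and does not come from any cited work: the function name `run_simulation`, the ring of binary "agents", the majority-of-three update rule and the seed value are all hypothetical. Two runs with the same program and the same input reproduce every intermediate state exactly, so any apparently global pattern is already implied by the rules and the seed.

```python
import random


def run_simulation(seed, n_agents=20, steps=50):
    """Toy multi-agent model (hypothetical, for illustration only).

    Agents sit on a ring and hold a binary opinion. At each step one
    agent, chosen by a seeded pseudo-random generator, adopts the
    majority opinion among itself and its two neighbours.
    """
    rng = random.Random(seed)  # all "randomness" is a function of the seed
    state = [rng.randint(0, 1) for _ in range(n_agents)]
    history = [tuple(state)]
    for _ in range(steps):
        i = rng.randrange(n_agents)
        left = state[(i - 1) % n_agents]
        right = state[(i + 1) % n_agents]
        # Majority of three binary values: at least two of them equal 1.
        state[i] = 1 if left + state[i] + right >= 2 else 0
        history.append(tuple(state))
    return history


# Same design, same program, same data input: identical trajectories.
first = run_simulation(seed=42)
second = run_simulation(seed=42)
print(first == second)
```

Each update is simple enough to follow by hand, which is the sense in which the simulation creates nothing that could not, much more slowly, be worked out manually.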

So, is all lost? Is a search for computational sapience worthless? No, not at all. Computers are in their infancy. The first, unreliably accurate analog ones were replaced by their digital counterparts, where the somewhat forgiving nature of analog manipulations was replaced by the theoretically (and approximately practically) absolute precision of binary arithmetic, in return for the ever-present risk of catastrophic “system crash”. Alleviation of this risk entailed the categorical elimination of anything which lies outside their designers’ specifications37. Inside a modern digital processor, extensive if not complete design testing has virtually eliminated catastrophic failure, but the risk is still unavoidably present in the combination of processor design with insufficiently tested application software. Current Air Traffic Control systems are demonstrably close to the limit of error-free design complication, but they are still nowhere near the complexity of even the simplest biological information processors.

So where do we go next? How do we solve the dual computational problems of overbearing operational formality and design error? And, more relevant to this paper, is it sufficient or even of value to decide within a digitally-computational scenario which techniques and mechanisms are required to build computational intelligent or sapient systems? Is it enough, for example, to assume that James Albus’ description of intelligence is sufficient for our general purpose, and to confidently say, therefore, that all that intelligent systems need to be able to do is to reason about actions, to generate plans, to compute the consequences of plans and compare them, to execute plans, etc.? Given the mismatch between current digital computation techniques and the nature of intelligence we have described in this paper, this would be very shortsighted. If we are to rely on the at least dual specification of intelligence and sapience, we must first attempt to decide which of them does what, even if we are not yet able to say how. Our exercise in terms of reasoning, actions, plans, decisions, and executions should then be used to inform ourselves where we should go next in what will be a very long search for implementation.

One thing is clear. All of the examples of intelligence we meet in nature are at the very least multi-scalar. We will not be able to implement intelligence without attention to scale, to inter-scalar complexity, to scalar isolation and to its attendant partial autonomies [7] [20] . None of these properties have any meaning in a purely digital context. Nature relies on digital-to-analog-to-digital codings in all its dealings. If we are to implement intelligence, we must do likewise. Sapience is an even more esoteric “property”, in its reliance on all of the scales of a processing entity. Sapience does not reside “here” or “there”: it has, for an individual entity, something of the character of “language” across a quasi-unified society, with all its attendant multiplicities of definition, of meaning, of implication. An important facet of both inter-scalar-(intelligent) and multi-scalar-(sapient) correlations is that any presumed orthogonality between systemic properties collapses [20] . Consequently, even the philosophically basic modes of analogic, inductive, deductive, abductive and subductive reasoning merge into a generalized contextually variable birational process [31] . Where could we “implement” these extreme systemic characteristics in a scheme which relies on absolute localization of its processing elements, as does a digital computer? Nowhere! We must first deal with the relatively simple intelligence of inter-scalar correlation, cooperation and conflict before we can even contemplate “integrating” a multiplicity of inter-scalar “systems” into a unified sapience.

6. Wisdom Is Everything

Homo sapiens (Latin) means “wise” or “clever” human; the species is further classified into the subspecies Homo sapiens neanderthalensis (Neanderthals) and Homo sapiens sapiens (Cro-Magnons and present-day humans).

Again following Jean-Luc Doumont’s criteria, we should first state the major conclusion which we will draw from this section of the paper. This will be that

wisdom subsumes intelligence, sapience and consciousness.

So what does wisdom consist of?

“To Socrates, Plato, and Aristotle, philosophy is about wisdom. The word ‘philosophy’, philosophía, to ‘love’ (phileîn) ‘wisdom’ (sophía), supposedly coined by Pythagoras, reflects this. Just what wisdom would involve, however, became a matter of dispute”38.

One of our major contentions in this paper is that if we equate the term sapience directly to wisdom we risk missing out a critical aspect of cognitive processing. Problematically, however, a substantive “architecture” of wisdom has never been articulated, and we find ourselves lacking a name for the specific attribute of wisdom which in “architectural” terms provides an intermediate faculty between the apparent simplicity and comprehensibility of intelligence and the “godlike” state of wisdom:

“The truth is this: no one of the gods loves wisdom {philosopheîn} or desires to become wise {sophós}, for he is wise already. Nor does anyone else who is wise love wisdom. Neither do the ignorant love wisdom or desire to become wise, for this is the harshest thing about ignorance, that those who are neither good {agathós} nor beautiful {kalós} nor sensible {phrónimos} think that they are good enough, and do not desire that which they do not think they are lacking.” [32]

We propose using the word sapience uniquely to describe this faculty of intermediacy between intelligence and wisdom. In doing so we do not presuppose that “this is the end of the matter”―but hope that by locating intelligence, sapience and wisdom realistically with respect to each other we may stimulate further, more detailed analysis of the extreme complexity of cognitive processing.

It is tempting to think in terms of a comparative scale. Wisdom stands far above the other individual elements of our conclusion, but we cannot establish an immutable order for intelligence, sapience and consciousness. Wisdom collapses in the absence of any one of these, and there is no state or performance of “partial wisdom” corresponding to the presence of only one or two of these three dramatis personae. Wisdom emerges from intelligence, sapience and consciousness, and this in the context of mind.

It is relatively easy to distinguish between intelligence, sapience and wisdom if we check to which domains of a hyperscalar system each is relevant. Intelligence is local with respect to scale (and operates primarily in a “bottom-up” manner); sapience is local with respect to hyperscale (and operates primarily in a “top-down” manner); wisdom is global with respect to both scale and hyperscale, and it is consequently “outside rationality” (and takes account of not only “bottom-up” and “top-down” considerations, but also scalar and hyperscalar aspects of all the relationships between a system and its environment39).

It is essential to notice that in a multiscalar system Aristotle’s [15] final cause is not unique: interscalar communicational difficulties preclude complete transfer of knowledge from one scale to another, and consequently in a naturally-correlated hyperscalar system there are at least as many versions of final cause as there are recognizable representations of the system, and there will be a sense of hierarchy in the degree to which a specific “local final cause” may be relevant from the “point of view” of a more global one.

A fine example of the relationship between intelligence, sapience and wisdom can be found in the hypothetical reactions of a soldier, threatened along with his40 comrades by an enemy machine gun. Intelligence may tell him amongst other things that if he wants to see what is happening he should put his head up; his sapient instinct to survive may moderate his choice of action and use his intelligence to keep his head down; his wisdom may well initially support his sapient conclusion, but his societal mind may cause him to rush forwards to disable the gun and thus save his comrades―even though he will be aware that in doing so he may lose his own life. Cognitive processing may well remain purely internal up to and including the level of sapience, but to arrive at a state of wisdom, society must necessarily enter into consideration.

Although wisdom is often equated to goodness41, the authors do not accept that this is necessarily the case. It seems more likely that the wisdom we have been describing in this paper is that pertaining to an individual (human) organism, even though that individual may well act in the knowledge and consideration of the society in which he or she is embedded, as in our “hypothetical example”. It also seems likely that a yet “higher form of wisdom” could be established, which resides in the abstraction of “society”, and which establishes its own code of auto-defined good and evil through globally-correlated peer pressure. Such a phenomenon would be a socially-hyperscalar construction of individual-hyperscalar elements. Could this equate to ethics?

7. Intelligence, Sapience and Semiotics

The argumentation we have presented is not in itself strange―it is an empirical construction, not an intellectual one. Apparent strangeness appears purely as a result of our insistence on accurately locating the viewpoints we adopt in our observations.

There is no obvious reason why nature should obey the presupposition that rationality be homogeneous across different scales; “reason” leads us to exactly the opposite conclusion. Information is always contextual. Information processing in a system always takes place between “architectural” scales, rather than at a single scale. Intelligence is the “tool” which permits an “overview” of the relevance of individual items of information to their assembly for a scale-specific purpose. The overall scalar and hyperscalar characters of informational architectures are most striking, however, in the way they mirror Peirce’s [33] semiosic categories [34] . We can do no better than to quote the following three passages from Edwina Taborsky [35] .

“There are three basic Peircean modalities of codal organization. They are a Firstness of possibility, a Secondness of individuality and a Thirdness of normative habits of the community… Firstness is an internal codification that measures matter without references to gradients of space and time, and ‘involves no analysis, comparison or any process whatsoever, nor consists in whole or in part of any act by which one stretch of consciousness is distinguished from another’ [33] . Matter encoded within firstness… is unable to activate recording or descriptive systems, which are secondary referential codes that provide the stability of memory”.

Which excellently describes the collection at a single scale whose distinctiveness we have proposed earlier (see Figure 1(a), for example).

“Secondness as a measurement collapses the expansive symmetrical capacities of firstness, by providing spatial gradients and temporal parameters that act as proximate referential values to inhibit and constrain the energy encoded in firstness. Secondness refers to ‘such facts as another, relation, compulsion, effect, dependence, independence, negation, occurrence, reality, result’ [33] . Matter encoded within secondness is oriented and intimately linked to this local context, and we can assign a definite quantitative and qualitative description to its identity”.

Which corresponds to both the emergent scenario illustrated in Figure 1(c) and Figure 3 and its resultant downward elemental slaving and, as we have made clear, requires intelligence for its accomplishment.

“Thirdness is a process of distributive codification, operative both externally and internally, that transforms the multiplicity of diverse sensory-motor data into universal diagrammes. ‘… there is some essentially and irreducibly other element in the universe than pure dynamism or pure chance (and this is) the principle of the growth of principles, a tendency to generalization’ [33] , or more simply, the tendency to ‘take habits’. Thirdness sets up a general model that works to glue, to bind, to relate, to establish relationships and connected interactions. It extracts descriptions from the diverse instantiations of experiences and ‘translates’ them into a syncretic diagramme such that subsequent local instantiations can emerge as versions or representations of these general morphologies. Thirdness is a ‘matter of law, and law is a matter of thought and meaning’ [33] ”.