J. Service Science & Management, 2010, 3, 352-362
doi:10.4236/jssm.2010.33041 Published Online September 2010 (http://www.SciRP.org/journal/jssm)
Copyright © 2010 SciRes. JSSM

Evaluating Enterprise Risk in a Complex Environment

Ivan De Noni1, Luigi Orsi2, Luciano Pilotti1
1University of Milan, DEAS, Milan, Italy; 2University of Padua, Padua, Italy.
Email: ivan.denoni@unimi.it
Received June 11th, 2010; revised July 19th, 2010; accepted August 21st, 2010.

ABSTRACT

This paper examines the relationship between operational risk management and the knowledge learning process, with an emphasis on the importance of statistical and mathematical approaches for the organizational capability to forecast, mitigate and control uncertain and vulnerable situations. Knowledge accumulation reduces the unpredictability of critical situations and improves the organization’s capability to face uncertain and potentially harmful events. We maintain that mathematical and statistical knowledge is a key organizational factor in the risk measurement and management process. Statistical creativity helps to speed up the organization’s innovation process, improves its exploratory capacity to forecast critical events, and increases its problem-solving capacity, adaptability and learning. We show some important features of the statistical approach. First, it makes clear the strategic importance of risk culture at every level of the organization; quantitative analysis supports the emergence of latent troubles and makes the vulnerability of the organization evident. Second, innovative tools make it possible to improve risk management and the organizational capability to measure total risk exposure and to define a more adequate forecasting and corrective strategy. Finally, it is not easy to distinguish between measurable risk and unmeasurable uncertainty; the distinction depends on the quantity and quality of available knowledge. Hardly predictable extreme events can trigger crises and expose vulnerable situations.
Every innovative approach that increases knowledge accumulation and improves the forecasting process should be considered.

Keywords: Complexity, Extreme Events, Operational Losses, Quantitative Management

1. Introduction

In the literature we find papers explaining the application of mathematical techniques to operational risk evaluation, and others concerning the principles and features of risk management. In this paper we would like to merge these too often separated concepts. Thus, in the first part, we describe some aspects of operational risk, mainly with respect to the relationship between uncertainty and the corporate learning process; in the second, we discuss the implications of general mathematical approaches for operational risk and knowledge management, and then we present an innovative mathematical method and examine its advantages, disadvantages and possible extensions.

In the modern economic context, knowledge management is an increasingly important resource for the success of firms. However, acquiring information is only the first step towards long-term sustainable development. In a dynamic system, in fact, knowledge may yield only a short-term advantage, because its non-excludability makes its transfer and imitation by competitors easy [1]. To acquire competitive advantages, firms have to develop and continually improve suitable capabilities and organize routines to control, manage and use knowledge profitably [2].

Thus, knowledge management progressively becomes the key to securing an organization’s sustainability [3], even if a successful learning process also requires good adaptation capability. Only a flexible firm is able to adapt its cognitive patterns to environment and market changes [4] quickly and properly enough to develop an effective generative learning process for new knowledge creation [5].
Knowledge management is a dynamic capability [6]; a strategy that is successful in the short term may become less efficient in the long term if the firm is unable to increase its knowledge continually, improving its competences and renewing its competitive advantage.

We can therefore conclude that efficient knowledge management and adaptation capability are both required for organizational sustainability. When both conditions are satisfied, an organization can implement a generative learning process that sustains new knowledge creation and innovation.

In this perspective, statistical analysis and mathematical models are among the tools that can help firms to improve knowledge accumulation and decision-making processes. Increasingly detailed and reliable forecasting models make it possible to better predict environmental changes and manage the related risk, reducing uncertainty and increasing the organization’s problem-solving capability.

2. Organization, Risk and Knowledge

Each enterprise can be thought of as a socio-economic organization, headed by one or more persons with a propensity to risk [7,8]. Risk is therefore an integral part of the firm.

The increasing complexity of modern society makes risk a particularly critical factor, because company management is often unable to face it [9-11]. When we talk about risk we refer in particular to operational risk1.

Given the increasingly critical role of risk in company governance, it is easy to understand why overlooking risks, or thinking about them simplistically, may lead to inadequate exposure or to unconscious acceptance of risk by the organization. In contrast, when risk is embedded in the corporate culture, it develops into a production factor and its suitable management becomes an essential part of the value creation chain [12].
Only when the concept of risk is integrated in the corporate culture can the basis for prudent and responsible corporate governance be identified [13].

However, we need to distinguish between the governance and the management of business risk. They are two interconnected but different moments of the decision-making process. Governance sets the organizational boundaries, defining the logic of value creation and the maximum tolerable risk, while management tends to decompose the overall business risk into a variety of risks, following the stages of assessment, treatment and reassessment of all relevant risks [14].

In other words, risk governance concerns the sharing of risk culture throughout the whole organization and the reduction of risk overlooking. Risk management concerns every process or technique that makes it possible to mitigate or remove risk for the organization. In the first instance, potentially efficient management depends on efficient governance.

Thus, a careful administration of risks leads to some preliminary considerations:
• Corporate governance is based on a complex and dynamic mix of risks inextricably linked to the enterprise system;
• Usually a business risk cannot be fully cancelled;
• Each action to reduce risk exposure involves an organizational cost and brings out other hazards;
• The sustainability of any risk depends on the amount of enterprise knowledge and competences.

The last point focuses on the relationship between risk and knowledge. Risk reflects the limitations or bias of human knowledge, indicating the possible events to which a firm is exposed through the combination of its choices, external conditions and the flow of time. Only if knowledge were complete and perfect would the firm operate in conditions of certainty [16]. Risk and knowledge are therefore mutually dependent: risk marks the limit of knowledge, and knowledge allows the perception of risk [17].
Over time, the learning process of an organization leads to a better understanding of reality and a greater awareness of risk, and thus makes it possible to reduce uncertainty and to develop greater risk management and forecasting capabilities.

In the literature we found three different approaches that try to define the relationship between knowledge and risk: the scientific current, the social current and the critical current.

The first is based on logical-mathematical approaches and believes in the primacy of knowledge over risk [18,19]. The second holds that the limits of knowledge and social interaction processes make risk a feature of contemporary society, where the contribution of knowledge merely confirms the organization’s inability to eliminate risk [20]. The critical current bases its thesis on the dynamics of knowledge and risk, where risk cannot be eliminated but can be reduced or contained [12]; so the creative learning process of a business organization needs to track the business risk, trying to transform or mitigate it through company skills and competences, which organizational knowledge renews over time.

Spreading risk culture to every level of the firm means developing an attitude towards adaptation and risk knowledge, looking for useful concepts and approaches to address the critical issues of risk assessment and management. Neglecting risks leads the entrepreneur to a state of myopia that makes it impossible to predict or otherwise mitigate critical situations, increasing the vulnerability of the whole organization, which is constantly exposed to uncertainty and possible crisis.

Information collection and the learning process, by increasing knowledge about the nature and behavior of a particular event, can improve the ability to manage risks and can play a key role in raising resources, tools or new knowledge which, without allowing a precise prediction, will at least be able to reduce the impact of risk.
1According to the Basel Committee [15], operational risk is defined as the risk of loss resulting from inadequate or failed internal processes, people and systems or from external events.

However, the knowledge related to risk management, if misinterpreted, could lead to controversial situations. The larger the perceived uncertainty, the stronger people’s inclination not to act; in other words, a manager facing an unknown situation usually tends to be more cautious, ready to step back or change his strategy as soon as he perceives a discrepancy with respect to his expectations. Conversely, when the decision maker believes he knows the event distribution, his strategic behavior is determined by a cognitive model built on his previous experience, which makes him less sensitive to environmental changes and to every signal that could help to prevent or handle unexpected situations (extreme events) and crises.

3. Crisis Management and Vulnerability of the Organization

We need a more stable and comprehensive concept of crisis in organizations facing complexity and uncertainty, as well as a way to reduce risk through better prevention [21].

Crisis management and vulnerability of the organization are two very important concepts that have received considerable attention in the management literature as the basis for defining processes of contingency planning, such as operational crisis and crisis of legitimation, for disaster recovery (bankruptcy, market breakthrough, change in leadership, fraud, etc.). Rarely has that literature focused on the processes by which the crisis has been generated, its long-term phases and embedded sources [22].
We need to develop a better perspective to explore the generation and nature of crisis events in organizations where, according to Smith [22], “management should not be seen as operating in isolation from the generation of those crisis that they subsequently have to manage, but rather as an integral component of the generation of such events”. We will also see that the customer or the user becomes part of the strategic process to prevent and respond to crisis, as a “partner” of management and shareholders, sharing collective knowledge and information about value creation.

The main factors that create the pre-conditions for crisis and vulnerability of organizations are: their interaction with the market and the often huge differentiation of the latter; the growing importance of consumer expectations and perceptions, which are closely connected with the image and reputation of the company and of the management; technological innovations and the long (or short) waves of change that push companies to change rapidly not only their products or markets, but also dealers, managers and organization; and the nature of leadership, its stability and evolution, as in the case of a discontinuity in family control or a major change of shareholders [23].

For those reasons, and many others that serve to create a complex portfolio of potential crisis scenarios, it is necessary to outline the nature of the crisis management process and to know how crises evolve.

In the literature we can identify a focus on the development of contingency plans to cope with a range of crisis scenarios in terms of response teams, strategies for continuity of service provision, and procedures for protecting organizational assets and limiting damage. These activities are very important in stopping the dangerous consequences of a crisis, but they do not help to avoid it, as in the case of harm to an organization’s reputation, which is often irreversible.
Effective crisis management should include a diffuse and systematic attempt to prevent crises from occurring [22,24,25].

According to many authors, the notion of crisis starts from a circularity and interaction between different process stages that are not always linearly connected: crisis of management, operational crisis, crisis of legitimation, process of organizational learning. In that view, a good preventative measure is creating resilience [21,25,26,27,28]. This can be achieved within the organization by:
• trying to eradicate error traps as a path to explorative learning;
• developing a culture that encourages near-miss reporting;
• dealing with the aftermath of crisis;
• learning lessons from the event;
• defining an accountability to connect stakeholders for crisis potential [26,27].

According to Smith [22], we need a shift in the way of representing organizations’ dynamic change and processes of management (before, during and after crisis) as nonlinear connections in space and time. This would allow us to explore pathways of vulnerability and erosion of defences (Figure 1):

1) Crisis of management: the problems are well known; managers believe that their organizations are safe, secure and well run because their short-term perspective leads them to compare the cost of prevention with the perceived costs of limitation and recovery while underestimating the latter relative to the former. A long-term view is rare among managers, who are often “hostages” of operational phases, and controls are bypassed.

2) Operational crisis: many characteristics of the crisis are often visible here, but in many cases they are contingent and temporarily defined. We often face situations of deep incubation or latent conditions of crisis factors, as if the organization were living in a permanent present, without a past and without a future.

3) Crisis of legitimation: it starts internally but has an immediate external effect, attracting media coverage, governmental intervention or public inquiry, as in the case of the recent bank crisis in Europe or of large companies in the USA. It could start from a failure in a specific product or service that causes a loss of confidence among customers (and/or shareholders). At the same time, it will lead to erosion of demand and probably to financial instability in the long run, with an impact on the stability of shareholders and of the wider stakeholders too. Organizational learning will probably prove very fragile.

4) Processes of organizational learning: a crisis of legitimation leads to a crisis of procedures and practices and then to a lack of confidence among middle management.

5) Crisis of interchange between tacit and codified knowledge: knowledge diffusion can become a factor of crisis when codified knowledge is overestimated with respect to tacit knowledge, the latter being considered as residual error to be removed by a hierarchical control mechanism. Interdependences between tacit and codified knowledge (or between voluntary and involuntary behavior) are the link with the past and the future of the company. They represent both the main source of strategic competences in connection with its identity, and the architecture of organizational learning able to sustain several generations of entrepreneurs and managers as well as customers. This is key to describing one of the pathways of vulnerability.

Figure 1. The nature of the crisis management process and the potential for emergence [22].

A critical situation can be due to many internal and external factors. A badly managed critical situation can turn into an organizational crisis. Every risk, extreme event and uncertain situation that cannot be forecasted, mitigated and controlled is a potential factor of vulnerability and crisis for the organization.
It is important to exploit every innovation and tool that can make the management process easier, more reliable and more responsible.

4. Certainty, Uncertainty and Risk in Decision Making

In the economic context there are measurable and unmeasurable uncertainty. While nothing can be said about an unmeasurably uncertain event, risk may be thought of as a measurable uncertainty. A catastrophic event (rare event) is an intermediate situation: it is not a fully unmeasurable uncertainty, but accurate forecasting may be hard.

An extreme event is located in the tails of the distribution; it is characterized by a low probability of occurrence and a high negative impact. No information on past behavior allows us to understand its dynamic evolution exactly, and consequently risk managers are unable to forecast it.

However, mathematical models and statistical tools are not as useless as many authors believe, provided that they increase knowledge accumulation. The better your knowledge about a phenomenon, the better you can face it.

According to Epstein [29], you cannot say a priori whether a risk is measurable or unmeasurable; it depends on the knowledge and information you have about it.

For example, we are not yet able to predict an earthquake, but we have learned to distinguish potentially seismic zones according to their geological composition, and to build using materials and techniques that ensure high seismic resistance. This was possible on the basis of statistical analyses, which made it possible to verify where earthquakes occur with higher probability and to assess the capacity of some materials to respond efficiently to stress. In other words, even if you cannot predict a catastrophic event, you can study its features and improve the organization’s capability to face it adequately and to act quickly and efficiently to reduce its impact when the event occurs.

Faced with these situations, a manager can choose among different strategies.
He may decide on responsible management of every risk, or account only for the more ordinary risks. Many decision makers tend to overlook highly improbable events, even though they are very dangerous; most of them usually think that the coverage costs related to the prediction of extreme events are, over time, higher than the costs needed to face their occurrence. A probability estimate for an outcome based on judgment and experience may turn out to be successful, but it depends on the capability and competence of the entrepreneur or management and, in uncertain cases, on randomness. Mathematics and statistics make it possible to obtain a more objective and adequate probability estimate. The use of statistical techniques for the objective management of risks affects the organizational culture and enhances predictive capacity at every level of the firm.

However, neglecting to study the distribution of extreme events slows the knowledge learning process and reduces the organization’s absorptive and adaptation capability.

Moreover, overlooking behavior goes together with a high risk propensity of management and thus reflects negatively on the corporate culture, reducing accountability and risk awareness and exposing the company to a higher probability of harmful events in the long run.

Knight [30] claimed “there is no difference for conduct between a measurable risk and unmeasurable uncertainty”. Risk management does not necessarily mean considering every potential risk; it is a matter of awareness. Prudent management means that you can choose to apply corrective measures and mitigation actions, or rather decide consciously to neglect some specific risks.

What is important is that a manager makes decisions within a rational and responsible approach based on the estimation of probability of occurrence, predictability and impact.
A prudent and responsible decision maker should therefore consider the reference context each time he evaluates and selects the strategies to follow, differentiating his behavior among situations of certainty, risk and uncertainty. We talk about decision making under certainty when you can be sure, without doubt, whether a case is true; under risk, when you cannot be sure whether a case is true but this can be estimated with a certain probability; under uncertainty, when you cannot assess whether a case is true and cannot determine the probability that it is, because you have no information on which to base a reasonable estimate [31].

It is essential to distinguish between conditions of certainty, uncertainty and risk, because the distinction affects the possibility of estimating the probability of occurrence of an event and of determining the most appropriate and effective decision-making strategy in a specific situation. Under conditions of certainty, you will choose the action whose outcome provides the greatest utility; in a risky situation, you will choose the greatest expected utility; in an uncertain case, no decision can be considered completely reliable or reasonable and effects should be considered random [32]. In a situation of uncertainty, the manager does not know whether historical data are suitable or what the future probability of occurrence is, and there are infinitely many factors that could influence the evolution of events and change the preliminary decision-making conditions.

Normally, in order to know the risks to which we are exposed, we need to choose the form of the probability distribution, so as to calculate the risks and find the probability that a past event will occur again in the future. But if a probability distribution is needed to understand future behavior, it is also true that knowledge of past events is necessary to determine the probability distribution. In other words, we are in a vicious circle [35].
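The decision rule under risk mentioned above (choose the action with the greatest expected utility) can be illustrated with a minimal sketch. The actions, probabilities, payoffs and the logarithmic utility below are hypothetical values chosen for illustration only; they do not come from the paper. A choice under certainty is the special case in which every action has a single outcome with probability 1.

```python
import math

def expected_utility(lottery, utility):
    """Expected utility of a lottery given as (probability, payoff) pairs."""
    total = sum(p for p, _ in lottery)
    if not math.isclose(total, 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * utility(payoff) for p, payoff in lottery)

def choose_action(actions, utility):
    """Return the name of the action with the greatest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name], utility))

# Hypothetical example: final wealth under two strategies.
# "insure" pays a premium and ends with a certain wealth of 97;
# "retain" keeps 100 but suffers a severe loss with probability 1%.
actions = {
    "insure": [(1.0, 97.0)],
    "retain": [(0.99, 100.0), (0.01, 1.0)],
}

risk_averse_choice = choose_action(actions, math.log)       # concave utility
risk_neutral_choice = choose_action(actions, lambda w: w)   # expected wealth only
```

With these assumed numbers, a risk-neutral manager retains the risk (expected wealth 99.01 against 97), while a risk-averse one insures. The rule cannot be applied under uncertainty, where the probabilities in `actions` are simply not available.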
One of the main problems is that risk management science has been addressed, over the last century, from an econometric and mathematical point of view, looking for models able to forecast the loss distribution. What is not clear is that the risks of using a wrong probability distribution are not obviously predictable and can be even more dangerous than those detected in the tails of the chosen distribution [36].

The main problem of risk management lies in the fact that the general properties of the generators (distribution shape)2 usually describe a situation of uncertainty rather than one of risk. The worst mistake a risk manager can make is to confuse uncertainty with risk, failing to define the class and the parameters of the generator.3

A generator has specific parameters that determine certain values of the distribution and allow analysts to calculate the probability that a certain event occurs. Usually the generator is not known and there is no independent way to determine its parameters, other than trying to deduce them from the past behavior of the generator. To estimate the parameters from historical data it is still necessary to assume the generator class (Normal, Poisson, Binomial or other). The larger the amount of data available, the more accurate these estimates are.

Logically, an inappropriate choice of the type of generator immediately affects the reliability of the results (in terms of an imprecise probability of occurrence of risky events). The risk manager can run into several situations:
• the type of generator is easily identifiable and there is an adequate supply of past data;
• the generator can be considered reliable, but the lack of historical data makes it impossible to determine the moments correctly;
• neither the parameters nor the general class of the generator can be determined.

2The term “generator” refers to the type of distribution that best estimates a well-defined set of data.
3See [30,33]. The distinction between risk and uncertainty is roughly that risk refers to situations where the perceived likelihoods of events of interest can be represented by probabilities measured by statistical tools using historical data, whereas uncertainty refers to situations where the information available to the decision-maker is too imprecise to be summarized by a probability measure [30,33,34].

The last point is usually the dominant situation, in which the relationship between expected risk and actual risk is still undetermined or accidental. This is a state of uncertainty, in which one cannot be sure what will happen or make any estimate of the probability of hypothetical cases.

Therefore, risk managers should not confuse uncertainty with risk, failing to define both the generator class and its parameters. It is one thing to make decisions under risk, where the distribution of a given phenomenon and the probability of a harmful event are known; it is another to do so under conditions of uncertainty, where the distribution can only be conjectured and the probability of events is not accurately defined.

A further perspective is provided by recalling the role of differentiability in decision theory under risk, where utility functions are defined on cumulative distribution functions. Much as calculus is a powerful tool, Machina [37] has shown that differential methods are useful in decision theory under risk. Epstein [29] adds to Machina’s demonstration that differential techniques are also useful for the analysis of decision-making under uncertainty.

Operational losses are usually forecasted using parametric and actuarial approaches such as LDA (Loss Distribution Approach) or the more cautious EVT (Extreme Value Theory) [38].
The main problems of these approaches concern the choice to reduce any event distribution to a consolidated generator (normal, lognormal, GPD or other) and the need for consistent time series to obtain appropriate risk values.

The key attraction of EVT is that it offers a set of ready-made approaches to a challenging problem of quantitative operational risk analysis and tries to model appropriately risks that are both extreme and rare. Applying classical EVT to operational loss data, however, raises some difficult issues. The obstacles are not really due to the technical justification of EVT, but rather to the nature of the data. EVT is a natural set of statistical techniques for estimating high quantiles of a loss distribution, which works with sufficient accuracy only when the data satisfy specific conditions [39].

The innovation introduced by the fractal model is the flexibility to adapt the estimated event distribution to the real one without choosing the best generator in advance. There is no need to assume the shape of the generator, because IFS makes it possible to reproduce the structure of the real distribution on different scales, exploiting the self-similarity4 of fractals.

The fractal construction is based on an innovative algorithm that is iterated a theoretically infinite number of times so that, at each iteration, the approximated distribution better estimates the real one. The IFS (Iterated Function Systems) technique finds the most appropriate generator without applying a known model [40]. Moreover, the properties of fractals make it possible to estimate the event distribution reliably even when historical data are scarce.

The advantage of this approach is therefore to give risk managers a tool that avoids the mistake of neglecting risks, without having to fix a suitable standard generator.

A better estimate of the risk level of an event enhances the efficiency of its management, monitoring and control, and reduces the exposure of the organization.

5. Sample and Methodology

Our analysis is based on a two-year collection of operational loss data from an Italian banking group5. The dataset contains operational losses broken down by eight business lines and seven event types in accordance with the Basel II rules. The business lines are: Corporate Finance, Trading & Sales, Retail Banking, Commercial Banking, Payment & Settlement, Agency Services, Asset Management and Retail Brokerage. The event types are: Internal Fraud, External Fraud, Workplace Safety, Business Practice, Damage to Physical Assets, Systems Failures, Execution & Process Management.

For each operational loss, the available data record the company code, type of business line, risk drivers, event type, amount of loss, date of occurrence and frequency of occurrence.

To obtain a significant analysis, we had to use a time horizon of one month instead of one year for our estimations (so that we have 24 observations of the aggregate monthly loss), and to focus only on the business line (disregarding the event type); we analyze two different business lines: the traditionally HFLI6 retail banking [15] and the traditionally LFHI7 retail brokerage [41].

In Table 1 we show the descriptive statistics of retail banking and retail brokerage. In the retail banking business line we have 940 loss observations (high frequency business line); the minimum loss is 438 euros, the maximum loss is 1066685 euros and the average loss is 13745 euros. In the retail brokerage business line we have 110 loss observations (low frequency business line), with a minimum loss of about 510 euros, a maximum loss of 700000 euros and an average loss of 28918 euros (higher than in the retail banking business line).
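The two data preparation steps described above (aggregating single losses into monthly totals, and summarizing single losses with the statistics reported in Table 1) can be sketched as follows. This is a minimal illustration: the function names, the record layout and the loss events are hypothetical and are not the banking group's data, and the quartile convention used here may differ from the one behind Table 1.

```python
from collections import defaultdict
from datetime import date
import statistics

def monthly_aggregate(events):
    """Sum loss amounts per (year, month); events are (date, amount) pairs.
    Months without any recorded loss do not appear in the result."""
    totals = defaultdict(float)
    for when, amount in events:
        totals[(when.year, when.month)] += amount
    return dict(sorted(totals.items()))

def describe(values):
    """Min, quartiles, median, mean and max of a list of loss amounts."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "min": min(values),
        "q1": q1,
        "median": median,
        "mean": statistics.fmean(values),
        "q3": q3,
        "max": max(values),
    }

# Hypothetical loss events (date of occurrence, amount in euros).
events = [
    (date(2008, 1, 5), 500.0),
    (date(2008, 1, 20), 3000.0),
    (date(2008, 2, 3), 1200.0),
    (date(2008, 2, 17), 9000.0),
    (date(2008, 3, 9), 14000.0),
]

monthly = monthly_aggregate(events)                       # aggregate monthly losses
summary = describe([amount for _, amount in events])      # single-loss statistics
```

With two years of data, `monthly` would hold at most 24 entries, which is the sample on which the aggregate loss distribution is estimated.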
We have used three types of approaches for our analysis: the traditional Loss Distribution Approach (LDA), the refined Extreme Value Theory (EVT) and the innovative fractal-based approach, Iterated Function Systems (IFS).

Table 1. Descriptive statistics of retail banking (left) and retail brokerage (right).

            Retail Banking (940 obs.)    Retail Brokerage (110 obs.)
Min.                      438                        509.6
1st Qu.                  1302                       1071.4
Median                   3000                       2124.3
Mean                    13745                      28918.3
3rd Qu.                  8763                      13375.0
Max.                  1066685                     700000.0

4The basis of self-similarity is a particular geometric transformation called dilation, which makes it possible to enlarge or reduce a figure while leaving its form unchanged.
5For reasons of confidentiality we cannot use the group name.
6High Frequency Low Impact.
7Low Frequency High Impact.

First of all, we consider the actuarial-based Loss Distribution Approach. The goal of the LDA methodology is to identify the loss severity and frequency distributions and then to calculate the aggregate loss distribution through a convolution between severity and frequency.

LDA is built upon two different distributions, estimated for every cell ij of the Business Line-Event Type matrix: the distribution $F_{X_{ij}}(x)$ of the random variable $X_{ij}$, which represents the loss amount triggered by a single loss event, and which is called the loss severity distribution; and the distribution $P_{ij}(n)$ of the counting random variable $N_{ij}$, whose probability function is $p_{ij}(k) = P(N_{ij} = k)$.
$P_{ij}$ is called the loss frequency distribution and corresponds to:

$$P_{ij}(n) = \sum_{k=0}^{n} p_{ij}(k)$$

These two distributions (which have to be independent of each other) represent the core of the LDA approach, and are used to obtain the operational loss calculated on (mainly) a one-year horizon (in our case a one-month horizon) for the ij cell:

$$L_{ij} = \sum_{n=0}^{N_{ij}} X_{ij,n}$$

The main approach to studying extreme events is the Extreme Value Theory (EVT), a statistical methodology that allows analysts to handle the tail and the body of the distribution separately, so that the influence of normal losses on the estimation of particularly high quantiles can be eliminated. This technique was developed to analyze the behavior of rare events and to prevent natural catastrophes (e.g., floods or losses due to fires). Two classes of distribution in particular prove to be useful for modeling extreme risks: the first is the Generalized Extreme Value (GEV) distribution and the second is the Generalized Pareto Distribution (GPD).

The Iterated Function Systems (IFS) represent a mathematically complex class of innovative, non-parametric fractal methods to create and generate fractal objects, as an approach to shifting from time towards space [40]. Fractals are geometric figures that can be represented at different dimensional levels; they consist of an infinite replica of the same pattern on a smaller and smaller scale, and so they are made up of multiple copies of themselves. This fundamental property of invariance is called self-similarity, one of the two principal properties that describe a fractal. The second property, no less important, is indefiniteness, which is the possibility of subdividing every part virtually to infinity before going on to the next one. Hence, to “draw” a fractal with a computer, the maximum number of iterations must be specified, because a finite time is insufficient to calculate a point of the fractal at infinite iterations.
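The two properties just described (self-similarity, and the need to stop at a maximum number of iterations) can be seen in a minimal sketch of an iterated function system. The Cantor set is used here purely as a textbook example; it is not the fractal used in the paper, and the function name and parameters are hypothetical.

```python
from fractions import Fraction

def cantor_ifs(max_iterations):
    """Apply the two-map IFS w1(x) = x/3, w2(x) = x/3 + 2/3 to the unit
    interval a finite number of times and return the resulting intervals."""
    maps = [
        lambda x: x / 3,
        lambda x: x / 3 + Fraction(2, 3),
    ]
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(max_iterations):
        # Each map produces a copy of the whole current figure at 1/3 scale.
        intervals = [(w(a), w(b)) for w in maps for (a, b) in intervals]
    return sorted(intervals)

# The limit object needs infinitely many iterations; a program must stop
# at a chosen depth and work with the approximation reached so far.
level_2 = cantor_ifs(2)
total_length = sum(b - a for a, b in level_2)
```

After k iterations the figure consists of 2^k intervals of length 3^-k, each a scaled copy of the starting interval, so the approximation gets finer at every step without ever reaching the limit set.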
One possible field of application of IFS is risk management, where fractal methods serve as non-parametric estimation methods and as an alternative to the Loss Distribution Approach (LDA) and the EVT [39]. These innovative methods can both interpret loss data more accurately and estimate and recreate the population from which the data could derive, especially when there are few observations. The advantage of this approach is that processing can be reduced while, at the same time, improving the estimation of the capital requirements needed to cover expected and unexpected operational losses⁸. IFS methods eliminate the need to analyze the frequency distribution⁹, the severity distribution¹⁰ and the related convolution: they directly simulate the cumulative distribution of the aggregate losses and then apply the most efficient risk measure¹¹. Consequently, they become a fundamental element in the analysis of losses, especially with regard to the so-called extreme losses, that is, losses that tend to be ignored because of their very low probability of occurrence, although their occurrence could have catastrophic consequences.

In the next section, the fractal approach is applied to a specific case in order to demonstrate, at a practical level, what kind of information can emerge from this approach and how this information can be used to improve business management.

⁸ The expected loss is defined as the mean of the losses observed in previous periods. The unexpected loss is defined as the difference between the VaR and the expected loss; being more difficult to represent in a model, it is for business management an element of uncertainty that can be minimized only through adequate estimation.
⁹ By distribution of frequency we mean the frequency of occurrence of the events.
¹⁰ By distribution of severity we mean the financial impact generated by the losses.
¹¹ In our case, Value at Risk.

6. Estimation by IFS Approach

The non-parametric estimation we offer as an alternative to the LDA methodology and the Extreme Value Theory is the IFS fractal-based approach, which should be able to interpret loss data in the best possible way and to simulate a population (see Figure 2) from which our observations could come, especially when there are few observations and missing data (as in the case of retail brokerage). The advantage of this approach is that it dispenses with the analysis of the frequency and severity distributions, with the related convolution and with the prior choice of a known distribution: it directly simulates the cumulative distribution function of the aggregate operational losses, to which a risk measure is then applied to calculate the capital requirements that cover operational losses in the business lines we studied.

Iterated Function Systems were originally designed by Barnsley for digital image transfer [42], developed further by Forte and Vrscay [43] for solving inverse problems, used by Iacus and La Torre for the estimation and simulation of probability functions [40] and, finally, adapted to the calculation of capital requirements for operational risk. They have shown greater relative efficiency than other methods (such as LDA and EVT) in terms of their ability to reconstruct a population of losses.

We used the arctangent function to transform loss values into values between 0 and 1, so that the IFS approach, which works on a finite support (in our case precisely between 0 and 1), can estimate the operational cumulative distribution function starting from the empirical distribution function (EDF).
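The transformation and estimation step just described can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the 2/π factor and the `scale` parameter of the arctangent map, the choice of knots at the transformed sample points and all function names are hypothetical. The operator shown is one simple affine IFS whose fixed point interpolates the EDF at the knots; the estimator of Iacus and La Torre [40] may differ in its details.

```python
import bisect
import math
import random


def to_unit(loss, scale=1.0):
    """Map a positive loss to (0, 1) with a scaled arctangent (assumed form)."""
    return (2.0 / math.pi) * math.atan(loss / scale)


def from_unit(u, scale=1.0):
    """Inverse of to_unit: map a value in (0, 1) back to a loss."""
    return scale * math.tan(0.5 * math.pi * u)


def build_ifs(sample, scale=1.0):
    """Return knots on [0, 1] and the EDF mass carried by each interval.

    Assumes strictly positive, finite losses.
    """
    transformed = sorted(to_unit(v, scale) for v in sample)
    inner = sorted({y for y in transformed if 0.0 < y < 1.0})
    knots = [0.0] + inner + [1.0]
    n = len(transformed)
    edf_at_knots = [bisect.bisect_right(transformed, k) / n for k in knots]
    weights = [b - a for a, b in zip(edf_at_knots, edf_at_knots[1:])]
    return knots, weights


def apply_operator(cdf, knots, weights):
    """One IFS step: on each interval, paste a rescaled copy of `cdf`."""
    cumulative = [0.0]
    for w in weights:
        cumulative.append(cumulative[-1] + w)

    def updated(x):
        if x <= 0.0:
            return 0.0
        if x >= 1.0:
            return 1.0
        i = bisect.bisect_right(knots, x) - 1
        lo, hi = knots[i], knots[i + 1]
        return cumulative[i] + weights[i] * cdf((x - lo) / (hi - lo))

    return updated


def simulate(knots, weights, size, burn_in=50, seed=0):
    """Chaos game: iterate randomly chosen affine maps w_i(x) = lo + (hi - lo) x.

    Successive points are dependent; they follow the IFS invariant measure
    only approximately, after the burn-in.
    """
    rng = random.Random(seed)
    indices = range(len(weights))
    x = rng.random()
    draws = []
    for step in range(burn_in + size):
        i = rng.choices(indices, weights=weights)[0]
        x = knots[i] + (knots[i + 1] - knots[i]) * x
        if step >= burn_in:
            draws.append(x)
    return draws


if __name__ == "__main__":
    losses = [438.0, 1302.0, 3000.0, 8763.0, 13745.0]  # illustrative values only
    knots, weights = build_ifs(losses, scale=3000.0)
    simulated = [from_unit(u, 3000.0) for u in simulate(knots, weights, 1000)]
    print("largest simulated loss:", max(simulated))
```

The number of operator iterations and the burn-in length play the role of the maximum number of iterations needed to "draw" any fractal in finite time.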
First, we have to demonstrate that the IFS approach is better than LDA and EVT at reconstructing a population from which our information could come, especially when few data are available.

Figure 2. Example of IFS simulation in retail banking.

To demonstrate the capability of the IFS estimator to reconstruct the original population, we calculate a statistical distance between the IFS simulation and the original distribution, and compare the result with those obtained by the classic method of actuarial science (LDA) and by the more innovative Extreme Value Theory. For our analysis we use observations of operational losses caused by fires. The Danish Fire Losses database has been used in the literature to test both classical techniques such as LDA and newer techniques such as EVT, and it lends itself very well to the study of extreme and complex events, as demonstrated by McNeil et al. [44]; it is therefore a good test for analyzing and evaluating the relative efficiency of the fractal approach versus parametric techniques such as LDA and EVT. The database contains daily observations of losses arising from fires in Denmark, in millions of Danish kroner, from January 1980 to 1990, and is freely downloadable in the QRMlib package of the R project (version 2.10.0). We extract random samples of different sizes from the database and then simulate the real distribution of losses using IFS maps, for small samples (n = 10, 20, 30) and medium samples (n = 50, 100, 250). Finally, we compare the results obtained by the IFS approach, in terms of AMSE¹² distance, with those obtained by the LDA and EVT approaches. Table 2 shows the behavior of the AMSE index¹³ (calculated over 100 simulations for each sample size). The indices show that the IFS approach is more efficient than LDA and EVT for small sample sizes, while for medium and large samples the LDA and IFS approaches show asymptotically similar behavior.
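The comparison procedure above (draw repeated samples of size n, fit an estimator, measure its distance from the full data set, average over the repetitions) can be sketched as below. The text does not give the exact AMSE formula, so the definition used here, a mean squared difference between the estimated and the reference distribution functions on a grid, averaged over repetitions, is an assumption, as are the function names. Any estimator that returns a callable distribution function can be passed as `fit`; the plain EDF is used only as a placeholder.

```python
import bisect
import random


def edf(sample):
    """Empirical distribution function of a sample, returned as a callable."""
    ordered = sorted(sample)
    n = len(ordered)
    return lambda x: bisect.bisect_right(ordered, x) / n


def average_mse(fit, population, n, reps=100, grid_size=50, seed=0):
    """Average, over `reps` random samples of size n, of the mean squared
    difference between the fitted CDF and the population EDF on a grid.

    Assumed reading of the AMSE distance; the paper's formula may differ.
    """
    rng = random.Random(seed)
    target = edf(population)
    ordered = sorted(population)
    # Grid of population quantiles, so that the tail is represented as well.
    grid = [ordered[(len(ordered) - 1) * j // (grid_size - 1)] for j in range(grid_size)]
    total = 0.0
    for _ in range(reps):
        estimate = fit(rng.sample(population, n))
        total += sum((estimate(x) - target(x)) ** 2 for x in grid) / len(grid)
    return total / reps


if __name__ == "__main__":
    # Synthetic heavy-tailed stand-in for a loss database (not the Danish data).
    source = random.Random(1)
    population = [source.paretovariate(1.5) for _ in range(2000)]
    for n in (10, 20, 30, 50, 100, 250):
        print(n, average_mse(edf, population, n))
```

A relative-efficiency index in the spirit of Table 2 would then be built from the AMSE of one estimator relative to that of another, although the exact normalization used in the table is not stated in the text.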
Secondly, we apply this new fractal methodology to estimate the capital requirements that a firm must allocate to cover operational risks. The main problem in operational risk analysis is the lack of information and of data, so we use the capability of fractal objects to reconstruct a population from scarce information, in order to better understand the real risk profile of the firm and to improve the decision-making process inside the company. Table 3 shows the results of the non-parametric estimation by the IFS approach for the retail banking and retail brokerage business lines, compared with the values obtained by LDA VaR and EVT VaR and with the maximum value of the empirical distribution function.

¹² Average mean square error.
¹³ The lower the value of the AMSE index, the better the IFS approach performs versus LDA and EVT.

Table 2. Relative efficiency of IFS estimator over LDA and EVT.

| n | AMSE over LDA | AMSE over EVT |
|---|---|---|
| 10 | 65.13 | 24.05 |
| 20 | 73.52 | 31.26 |
| 30 | 75.15 | 25.95 |
| 50 | 79.33 | 22.27 |
| 100 | 111.12 | 21.05 |
| 250 | 115.67 | 21.11 |

Table 3. Value at Risk by different approaches.

| Business line | LDA VaR | EVT VaR | IFS VaR | Max EDF |
|---|---|---|---|---|
| Retail banking | 1531369 | 4420998 | 3200000 | 2248354 |
| Retail brokerage | 1061966 | 4929569 | 1451000 | 931607 |

In the two business lines in which we were able to apply all three approaches proposed in this paper, the IFS method gave estimates that lie between those of the Extreme Value Theory, which tends to overestimate the capital requirements widely, and those of the Loss Distribution Approach, which tends to underestimate them (for example in the case of retail banking, where the LDA VaR is below the maximum of the empirical distribution function).
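The reading of Table 3 given above can be checked with a few lines of arithmetic. The snippet below only restates the published figures; the dictionary layout and the function name are illustrative choices.

```python
# Values copied from Table 3.
TABLE_3 = {
    "Retail banking": {"LDA": 1531369, "EVT": 4420998, "IFS": 3200000, "MaxEDF": 2248354},
    "Retail brokerage": {"LDA": 1061966, "EVT": 4929569, "IFS": 1451000, "MaxEDF": 931607},
}


def summarize(row):
    """Position of the IFS estimate relative to the other figures in one row."""
    return {
        "ifs_between_lda_and_evt": row["LDA"] < row["IFS"] < row["EVT"],
        "lda_below_max_edf": row["LDA"] < row["MaxEDF"],
        "ifs_over_lda": row["IFS"] / row["LDA"],
        "evt_over_ifs": row["EVT"] / row["IFS"],
    }


if __name__ == "__main__":
    for business_line, row in TABLE_3.items():
        print(business_line, summarize(row))
```

In both business lines the IFS figure lies between the LDA and EVT figures; only in retail banking does the LDA figure fall below the largest value of the empirical distribution function.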
Another advantage of IFS is its ease of use: there is no need to simulate a distribution for the severity and one for the frequency, nor the related convolution; it is enough to measure the aggregate operational loss distribution directly (monthly in our case, given the number of observations available) and to apply a risk measure to this distribution. The strength of this fractal approach is its demonstrated ability to capture the true risk profile of a company even when data are lacking.

Of course not everything is positive: every model has inherent disadvantages and limitations that make it less accurate and sensitive to errors, and we must take them into account. Firstly, like all nonparametric approaches, IFS are very sensitive to the sample data used. Several samples extracted from the same population can lead to widely different estimates and simulations. It is therefore a good idea to use a very large number of simulations in order to obtain consistent estimates.

7. Conclusions

In recent years, there has been increasing concern among researchers, practitioners and regulators over how to evaluate models of operational risk. The actuarial methods (LDA) and the Extreme Value Theory (EVT) constitute a basis of great value for fully understanding the nature and the mathematical-statistical properties of the process underlying operational losses, as regards the severity of losses, the frequency of losses and the relationship between them.

Several authors have commented that interval forecasts can be assessed only with thousands of observations, and that traditional techniques need a large amount of data to be precise and effective. However, currently available data are still limited, and traditional methodologies fail to grasp the correct risk profile of firms and financial institutions, as shown by the empirical analysis undertaken in this article.
With the IFS technique, an appropriate risk value can be estimated even from just a few data. As data availability increases, IFS outcomes tend to be as suitable as those of traditional methods.

So we can say that the study, analysis and improvement of innovative methods such as Iterated Function Systems (IFS) is a valuable support for the measurement and management of operational risk, alongside actuarial parametric techniques. Moreover, their use is not limited to the next few years, pending more complete data series. It should also extend to those areas of operational risk, by definition heterogeneous and complex, that have a limited number of events whose impact can nevertheless be devastating for firms and stakeholders. Precisely for this reason the use and improvement of these innovative tools must continue for the foreseeable future: this area will always need innovative approaches able to predict situations with good approximation starting from scarce data, and to reconstruct a precise and faithful population with which to integrate and assess the results of other approaches.

Like every mathematical method, IFS can help risk management by speeding up the knowledge learning process, but what is really important is careful and prudent management behavior.

Thus we can conclude, firstly, that mathematical approaches are effective only when they are integrated and shared in a responsible corporate culture. Secondly, IFS, as a nonparametric method, is sensitive to the composition of the sample used; for an appropriate estimation of operational loss with IFS, the method should be reapplied several times and an adequate average level then estimated. The more cautiously mathematical techniques are applied, the more probable is an accurate estimation of the hedging value of the total operational risk. Finally, since this is the first time IFS is applied to operational risk, future improvements are probable.
We intend to extend the application of this method to financial and credit risk. However, this goal requires using IFS to estimate not just the probability function but also the density function. The main problem is that this requires applying a Fast Fourier Transform, as shown by Iacus and La Torre [40].

REFERENCES

[1] D. Foray, “Economics of Knowledge,” MIT Press, 2004.
[2] S. Vicari, “Conoscenza e impresa,” Sinergie rivista di studi e ricerche, Vol. 76, 2008, pp. 43-66.
[3] D. J. Teece, “Managing Intellectual Capital,” Oxford University Press, Oxford, 2000.
[4] E. Altman, “Financial Ratios, Discriminant Analysis and the Prediction of Corporate Bankruptcy,” Journal of Finance, Vol. 23, No. 4, 1968, pp. 569-609.
[5] L. Pilotti, “Le Strategie dell’Impresa,” Carocci, Roma, 2005.
[6] R. M. Grant, “Toward a Knowledge-Based Theory of the Firm,” Strategic Management Journal, Vol. 17, No. Special Issue, 1996, pp. 109-122.
[7] J. A. Schumpeter, “Business Cycles,” McGraw-Hill Book Company, New York, 1939.
[8] A. Pagani, “Il nuovo imprenditore,” Franco Angeli, Milano, 1967.
[9] M. Haller, “New Dimension of Risk: Consequences Management,” The Geneva Papers on Risk and Insurance, Vol. 3, 1978, pp. 3-15.
[10] G. Dickinson, “Enterprise Risk Management: Its Origin and Conceptual Foundation,” The Geneva Papers on Risk and Insurance, Vol. 26, No. 3, 2001.
[11] E. Rullani, “Economia del rischio e seconda modernità,” In: S. Maso, Ed., Il rischio e l’anima dell’Occidente, Cafoscarina, Venezia, 2005, pp. 183-202.
[12] G. M. Mantovani, “Rischio e valore dell’impresa. L’approccio contingent claim della finanza aziendale,” Egea, Milano, 1998.
[13] G. M. Golinelli, L. Proietti and G. Vagnani, “L’azione di governo tra competitività e consonanza,” In: G. M. Golinelli, Ed., Verso la scientificazione dell’azione di governo. L’approccio sistemico al governo dell’impresa, Cedam, Padova, 2008.
[14] L. Proietti, “Rischio e conoscenza nel governo dell’impresa,” Sinergie rivista di studi e ricerche, Vol. 76, 2008, pp. 191-215.
[15] BIS, “International Convergence of Capital Measurement and Capital Standards,” Basel Committee on Banking Supervision, 2004.
[16] P. Davidson, “Money and the Real World,” The Economic Journal, Vol. 82, No. 325, 1972, pp. 101-115.
[17] P. Streeten, “The Cheerful Pessimist: Gunnar Myrdal the Dissenter (1898-1987),” World Development, Vol. 26, No. 3, 1998, pp. 539-550.
[18] K. J. Arrow, “Alternative Approaches to the Theory of Choice in Risk-Taking Situations,” Econometrica, Vol. 19, 1951, pp. 404-437.
[19] H. M. Markowitz, “Foundations of Portfolio Theory,” Journal of Finance, Vol. 46, No. 2, 1991, pp. 469-477.
[20] U. Beck, “Risk Society: Towards a New Modernity,” Sage, London, 1992.
[21] D. Elliott and D. Smith, “Football Stadia Disaster in the UK: Learning from Tragedy,” Industrial and Environmental Crisis Quarterly, Vol. 7, No. 3, 1993, pp. 205-229.
[22] D. Smith, “Business (not) as Usual: Crisis Management, Service Recovery and the Vulnerability of Organizations,” Journal of Services Marketing, Vol. 19, No. 5, 2005, pp. 309-320.
[23] P. M. Allen and M. Strathern, “Evolution, Emergence and Learning in Complex Systems,” Emergence, Vol. 5, No. 4, 2003, pp. 8-33.
[24] D. Smith, “Beyond Contingency Planning: Towards a Model of Crisis Management,” Industrial Crisis Quarterly, Vol. 4, No. 4, 1990, pp. 263-275.
[25] D. Smith, “On a Wing and a Prayer? Exploring the Human Components of Technological Failure,” Systems Research and Behavioral Science, Vol. 17, No. 6, 2000, pp. 543-559.
[26] J. T. Reason, “The Contribution of Latent Human Failures to the Breakdown of Complex Systems,” Philosophical Transactions of the Royal Society of London, Series B, Vol. 327, No. 1241, 1990, pp. 475-484.
[27] J. T. Reason, “Managing the Risks of Organizational Accidents,” Ashgate, Aldershot, 1997.
[28] C. Sipika and D. Smith, “From Disaster to Crisis: The Failed Turnaround of Pan American Airlines,” Journal of Contingencies and Crisis Management, Vol. 1, No. 3, 1993, pp. 138-151.
[29] L. G. Epstein, “A Definition of Uncertainty Aversion,” Review of Economic Studies, Vol. 66, No. 3, 1999.
[30] F. Knight, “Risk, Uncertainty and Profit,” Houghton Mifflin, Boston and New York, 1921.
[31] D. Pace, “Economia del rischio. Antologia di scritti su rischio e decisione economica,” Giuffrè, 2004.
[32] J. von Neumann and O. Morgenstern, “Theory of Games and Economic Behavior,” Princeton University Press, 1944.
[33] J. M. Keynes, “The General Theory of Employment, Interest and Money,” Macmillan, New York, 1936.
[34] J. M. Keynes, “A Treatise on Probability,” Macmillan, New York, 1921.
[35] N. N. Taleb and A. Pilpel, “On the Unfortunate Problem of the Nonobservability of the Probability Distribution,” 2004. http://www.fooledbyrandomness.com/knowledge.pdf
[36] R. Lowenstein, “When Genius Failed: The Rise and Fall of Long-Term Capital Management,” Random House, New York, 2000.
[37] M. J. Machina and D. Schmeidler, “A More Robust Definition of Subjective Probability,” Econometrica, Vol. 60, No. 4, 1992, pp. 745-780.
[38] J. Neslehová, P. Embrechts and V. Chavez-Demoulin, “Infinite-Mean Models and the LDA for Operational Risk,” Journal of Operational Risk, Vol. 1, No. 1, 2006, pp. 3-25.
[39] P. Embrechts and G. Samorodnitsky, “Ruin Problem and How Fast Stochastic Processes Mix,” The Annals of Applied Probability, Vol. 13, No. 1, 2003, pp. 1-36.
[40] S. Iacus and D. La Torre, “Approximating Distribution Functions by Iterated Function Systems,” Journal of Applied Mathematics and Decision Sciences, Vol. 2005, No. 1, 2005, pp. 33-46.
[41] M. Moscadelli, “The Modeling of Operational Risk: Experience with the Analysis of the Data Collected by the Basel Committee,” Temi di discussione, No. 517, 2004.
[42] M. Barnsley and L. Hurd, “Fractal Image Compression,” AK Peters, Ltd., Wellesley, MA, 1993.
[43] B. Forte and E. Vrscay, “Inverse Problem Methods for Generalized Fractal Transforms,” In: Y. Fisher, Ed., Fractal Image Encoding and Analysis, Springer-Verlag, Trondheim, 1995.
[44] A. J. McNeil, R. Frey and P. Embrechts, “Quantitative Risk Management: Concepts, Techniques, and Tools,” Princeton University Press, Princeton, 2005.

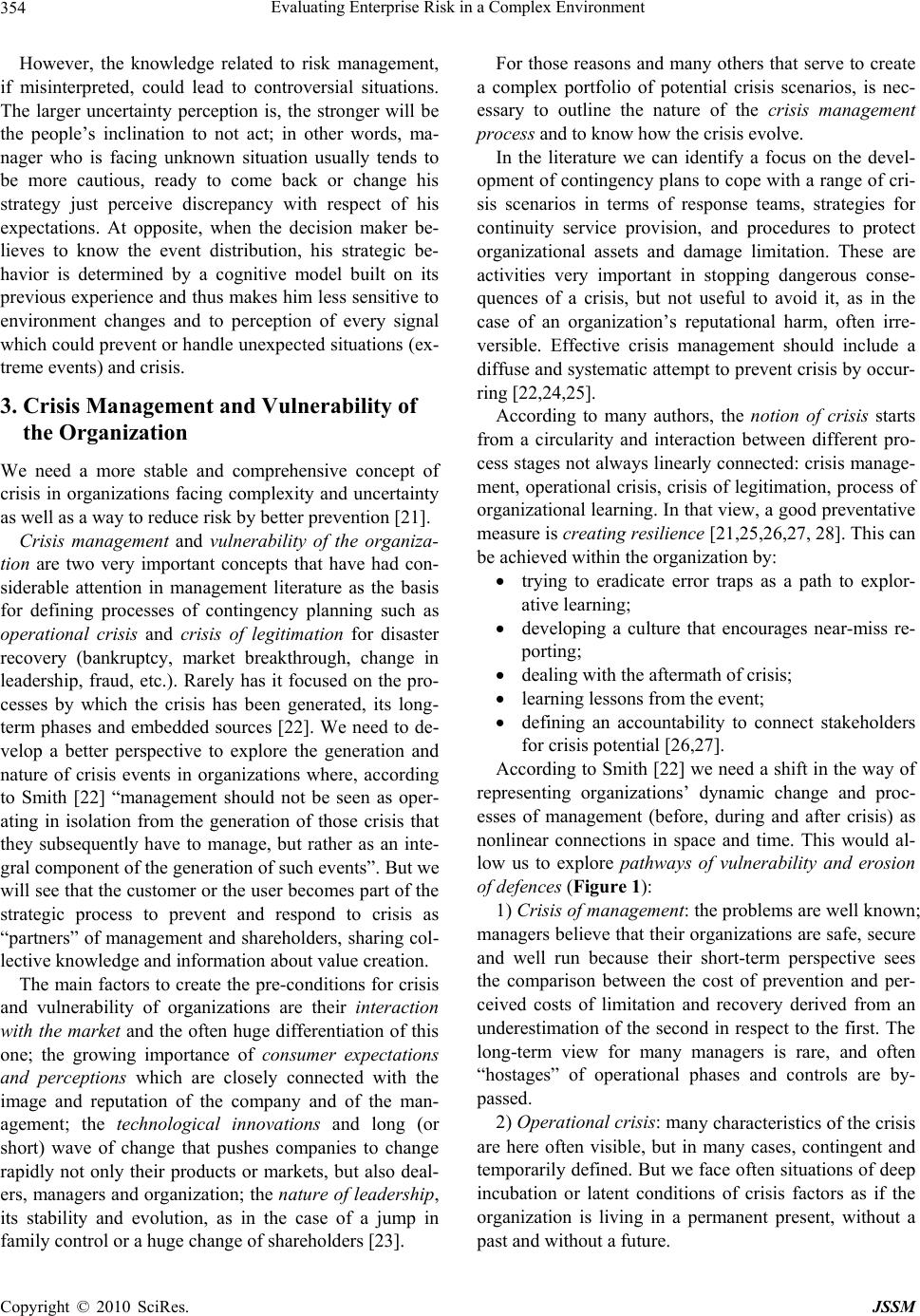
