Paper Menu >>

Journal Menu >>

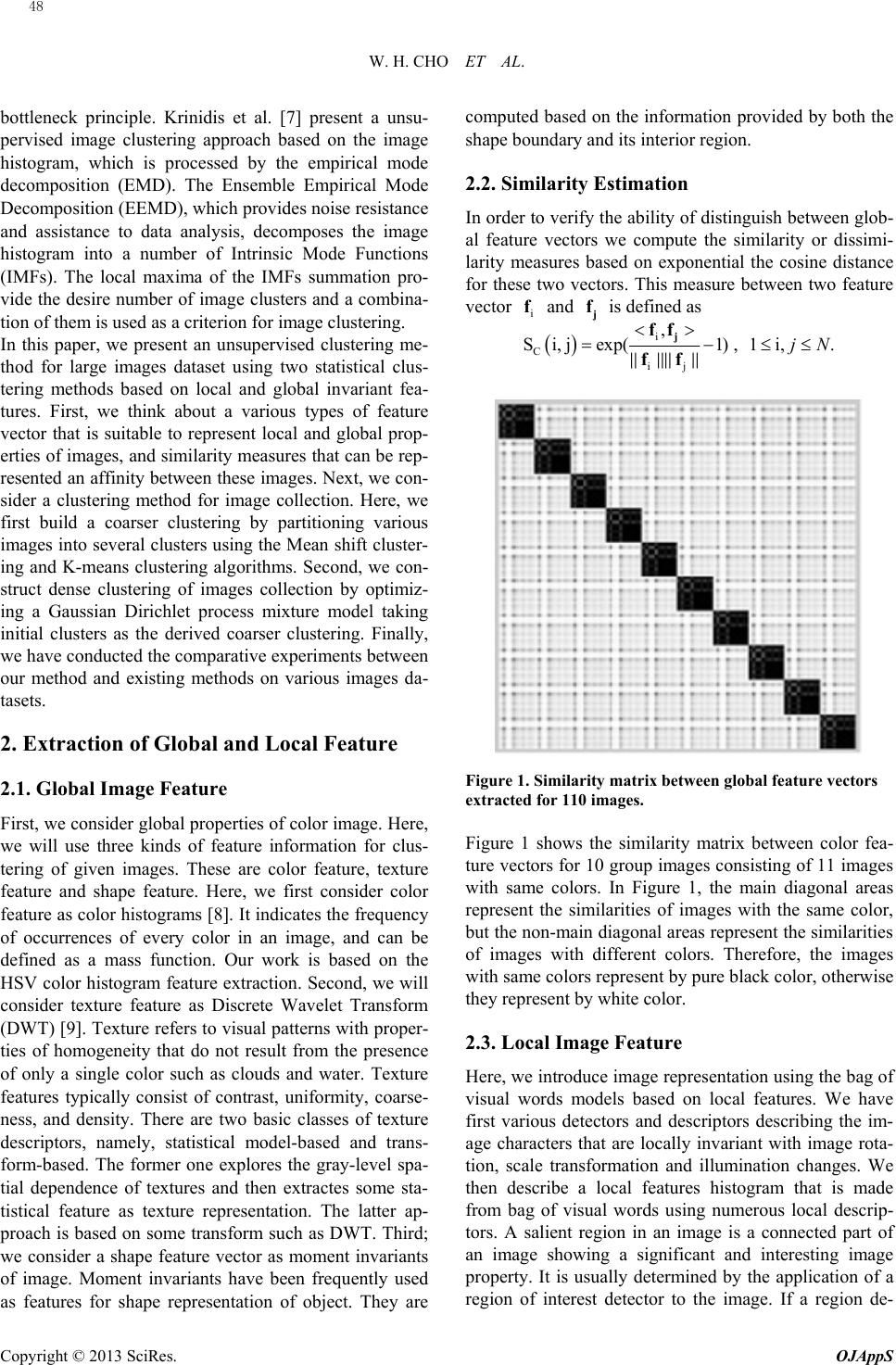

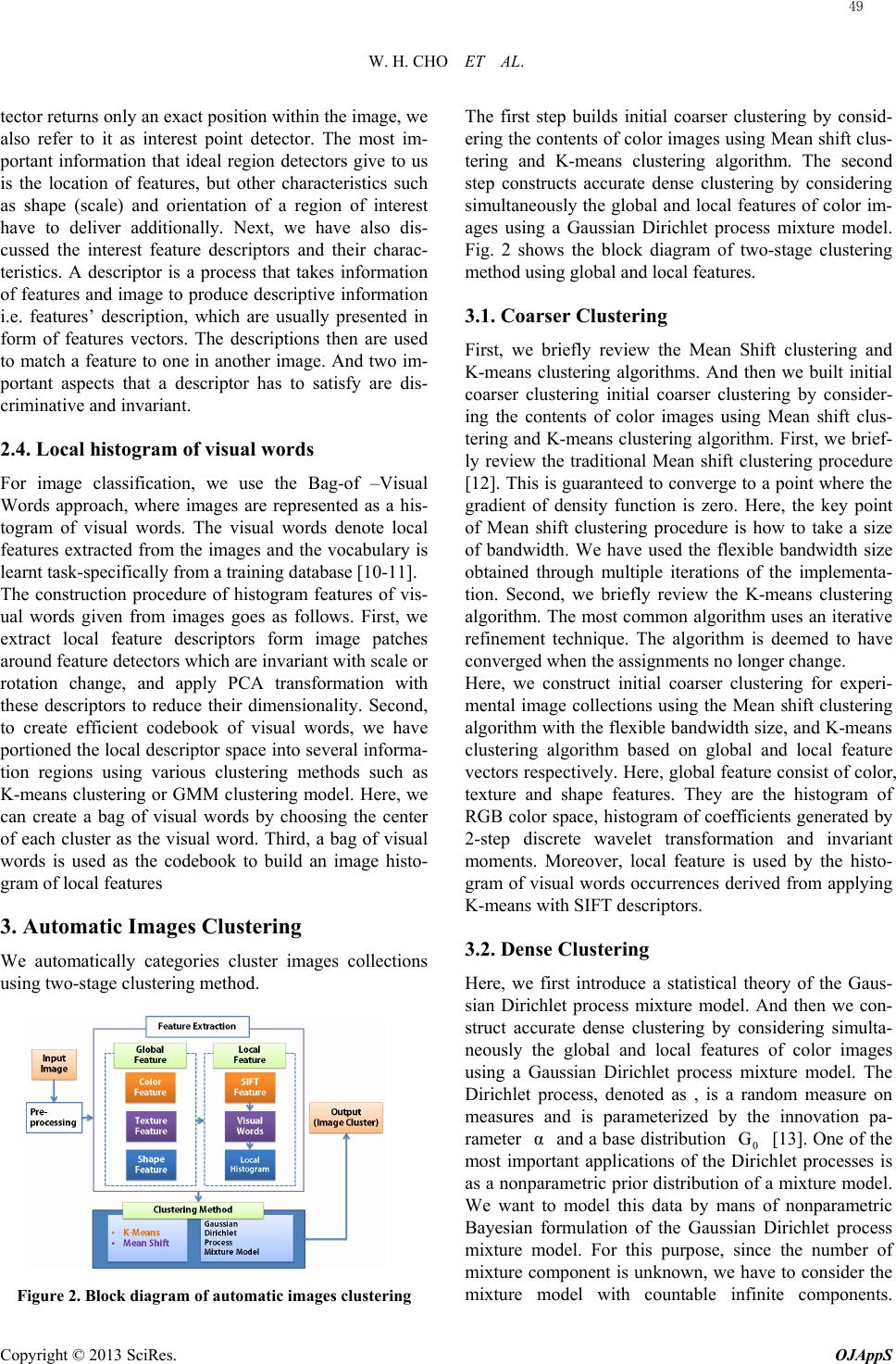

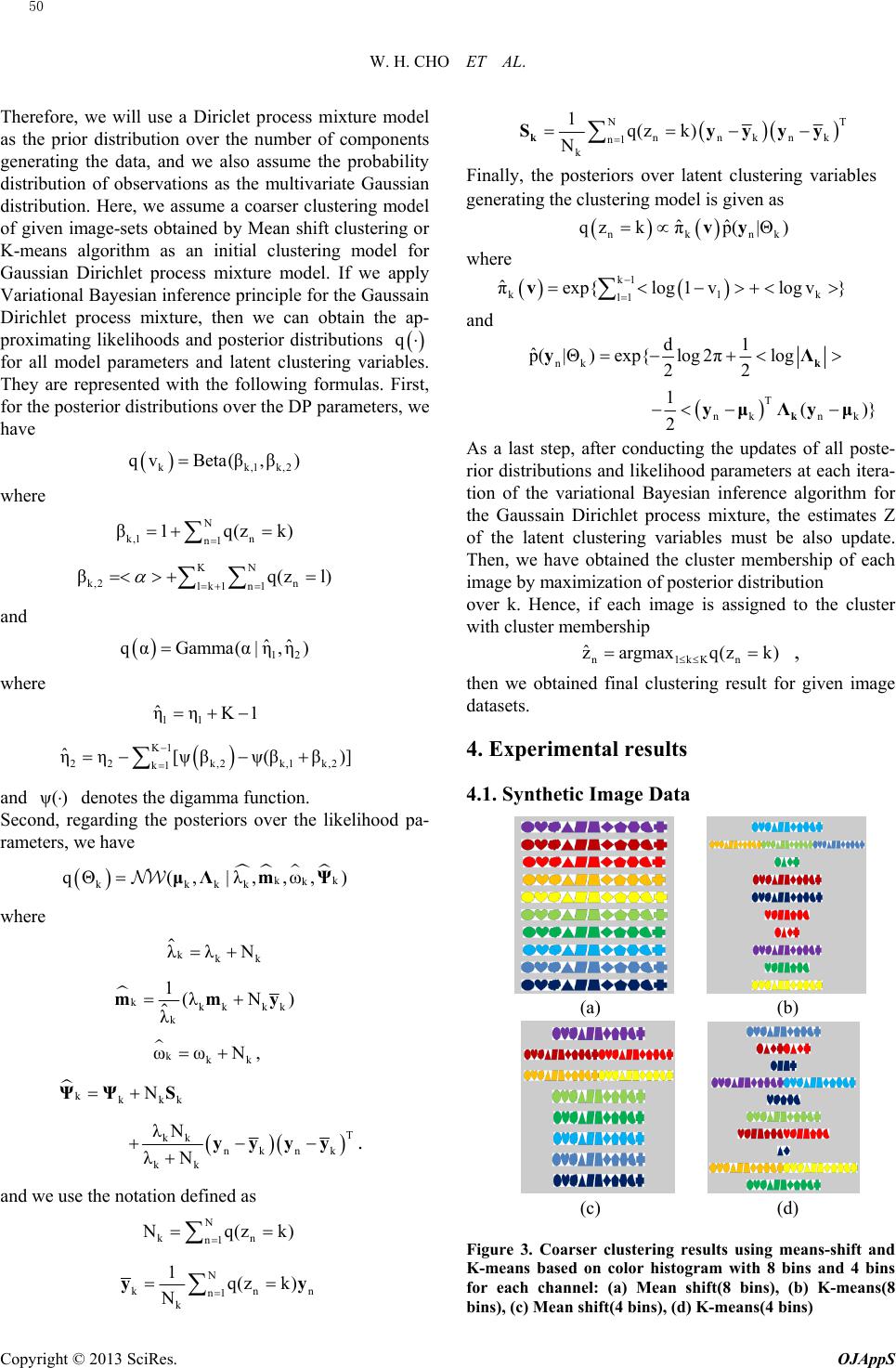

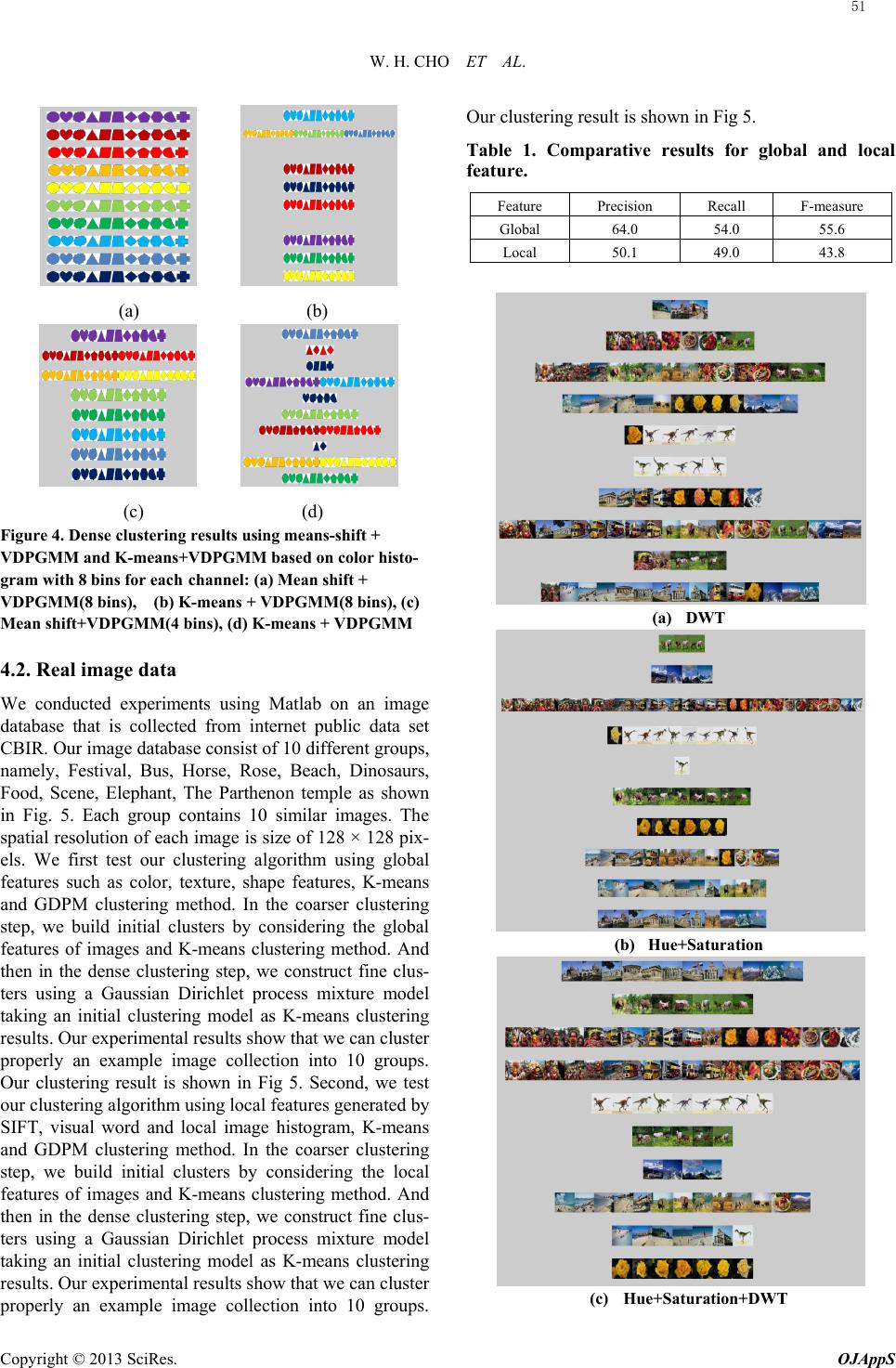

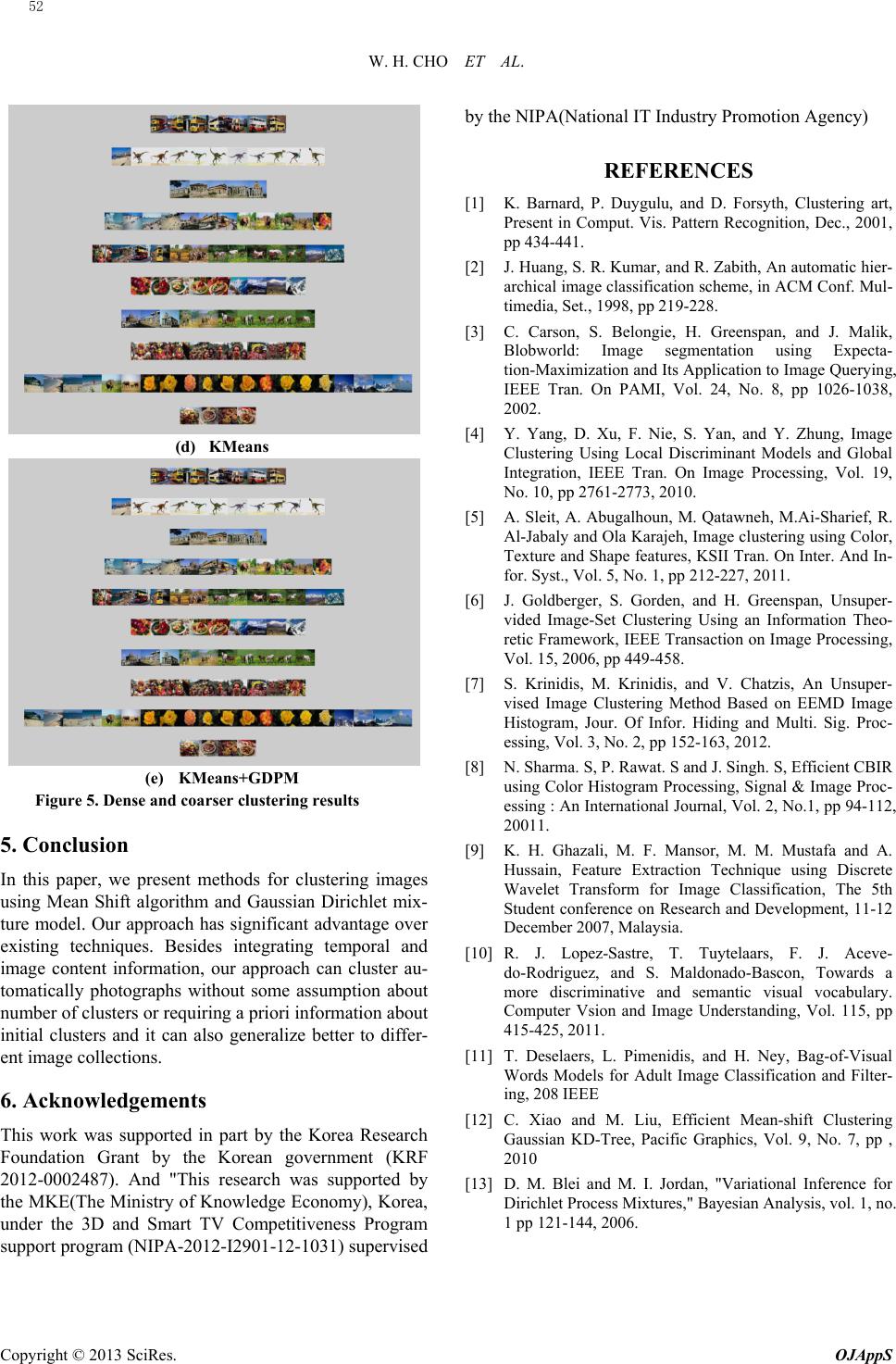

Open Journal of Applied Sciences, 2013, 3, 47-52 Published Online March 2013 (http://www.scirp.org/journal/ojapps) Copyright © 2013 SciRes. OJAppS Automatic Classification for Various Images Collections Using Two Stages Clustering Method Wan Hyun Cho1, In Seop Na2, Jun Yong Choi1 , Tae Hoon Lee1 1Department of Statistics, Chonnam National University, Gwagnju, Korea 2School of School of Electronic & Computer Engineering, Chonnam National University, Gwangju, Korea Email: whcho@jnu.ac.kr, ypencil@hanmail.net, abyss1225@hanmail.net, lth10916@hanmail.net Received 2012 ABSTRACT In this paper, we propose an automatic classification for various images collections using two stage clustering method. Here, we have used global and local image features. First, we review about various types of feature vector that is suit- able to represent local and global properties of images, and similarity measures that can be represented an affinity be- tween these images. Second, we consider a clustering method for image collection. Here, we first build a coarser clus- tering by partitioning various images into several clusters using the flexible Mean shift algorithm and K-mean cluster- ing algorithm. Second, we construct dense clustering of images collection by optimizing a Gaussian Dirichlet process mixture model taking initial clusters as given coarser clustering. Finally, we have conducted the comparative experi- ments between our method and existing methods on various images datasets. Our approach has significant advantage over existing techniques. Besides integrating temporal and image content information, our approach can cluster auto- matically photographs without some assumption about number of clusters or requiring a priori information about initial clusters and it can also generalize better to different image collections. Keywords: Automatic Classification; Images Collections; Clustering; Mean Shift; Gaussian Dirichlet Processing Mixture Model 1. Introduction The general goal in the image clustering is to classify the different image objects or patterns in such a way that samples of the same cluster are more similar to one an- other samples belonging to different clusters. However, clustering task is a difficult problem due to many as- sumptions, different contexts and the variety of input data [1]. In the last few decades, it has been growing in- terests in developing effective and fast methods for clas- sify an input image into different clusters. These methods are mainly divided in two types of clustering algorithms, such as supervised and unsupervised methods. In the supervised image clustering algorithms, the researchers incorporate a priori knowledge, such as the number of image clusters. Huang et al. [2] propose a hierarchical classification tree that is generated via supervised learn- ing, using a training set of images with known class la- bels. The tree is next used to categorize new images en- tered into the database. Carson et al. [3] used a naive Bayes algorithm to learn image categories in a super- vised learning scheme. The images are represented by a set of homogeneous regions in color and texture feature space, based on the “Blob-world” image representation. Yang et al. [4] propose a new clustering algorithm, re- ferred to local discriminate models and global integration (LDMGI), which utilizes both manifold information and discriminate information for data clustering. They theo- retically prove that K-means and DisKmeans are both special cases of LDMGI. They also show that LDMGI is a type of special clustering algorithm. Thus, they provide a new perspective to discover and understand the rela- tionships between K-means (or DisKmeans) and other spectral clustering algorithms. Sleit et al. [5] propose Content Based Image retrieval (CBIR) scheme that ex- tracts color, texture, and shape feature of images. Then, they group similar images together using K-mean clus- tering. They use the color histogram, Gabor filters, and Fourier descriptors for color, texture, and shape features respectively. The main restriction in supervised image clustering is that human intervention is required. On the other hand, unsupervised methods aim at provid- ing the correct number of image clusters without any a priori information. Goldberger et al. [6] combine dis- crete and continuous image models with informa- tion-theoretic based criteria for unsupervised hierarchical image-set clustering. The continuous image modeling is based on mixture of Gaussian densities. The unsuper- vised image-set clustering is conduct by the information  W. H. CHO ET AL. Copyright © 2013 SciRes. OJAppS bottleneck principle. Krinidis et al. [7] present a unsu- pervised image clustering approach based on the image histogram, which is processed by the empirical mode decomposition (EMD). The Ensemble Empirical Mode Decomposition (EEMD), which provides noise resistance and assistance to data analysis, decomposes the image histogram into a number of Intrinsic Mode Functions (IMFs). The local maxima of the IMFs summation pro- vide the desire number of image clusters and a combina- tion of them is used as a criterion for image clustering. In this paper, we present an unsupervised clustering me- thod for large images dataset using two statistical clus- tering methods based on local and global invariant fea- tures. First, we think about a various types of feature vector that is suitable to represent local and global prop- erties of images, and similarity measures that can be rep- resented an affinity between these images. Next, we con- sider a clustering method for image collection. Here, we first build a coarser clustering by partitioning various images into several clusters using the Mean shift cluster- ing and K-means clustering algorithms. Second, we con- struct dense clustering of images collection by optimiz- ing a Gaussian Dirichlet process mixture model taking initial clusters as the derived coarser clustering. Finally, we have conducted the comparative experiments between our method and existing methods on various images da- tasets. 2. Extraction of Global and Local Feature 2.1. Global Image Feature First, we consider global properties of color image. Here, we will use three kinds of feature information for clus- tering of given images. These are color feature, texture feature and shape feature. Here, we first consider color feature as color histograms [8]. It indicates the frequency of occurrences of every color in an image, and can be defined as a mass function. Our work is based on the HSV color histogram feature extraction. Second, we will consider texture feature as Discrete Wavelet Transform (DWT) [9]. Texture refers to visual patterns with proper- ties of homogeneity that do not result from the presence of only a single color such as clouds and water. Texture features typically consist of contrast, uniformity, coarse- ness, and density. There are two basic classes of texture descriptors, namely, statistical model-based and trans- form-based. The former one explores the gray-level spa- tial dependence of textures and then extractes some sta- tistical feature as texture representation. The latter ap- proach is based on some transform such as DWT. Third; we consider a shape feature vector as moment invariants of image. Moment invariants have been frequently used as features for shape representation of object. They are computed based on the information provided by both the shape boundary and its interior region. 2.2. Similarity Estimation In order to verify the ability of distinguish between glob- al feature vectors we compute the similarity or dissimi- larity measures based on exponential the cosine distance for these two vectors. This measure between two feature vector i f and j f is defined as i C ij , Si,j exp(1) j ff ff , 1i, .jN Figure 1. Similarity matrix between global feature vectors extracted for 110 images. Figure 1 shows the similarity matrix between color fea- ture vectors for 10 group images consisting of 11 images with same colors. In Figure 1, the main diagonal areas represent the similarities of images with the same color, but the non-main diagonal areas represent the similarities of images with different colors. Therefore, the images with same colors represent by pure black color, otherwise they represent by white color. 2.3. Local Image Feature Here, we introduce image representation using the bag of visual words models based on local features. We have first various detectors and descriptors describing the im- age characters that are locally invariant with image rota- tion, scale transformation and illumination changes. We then describe a local features histogram that is made from bag of visual words using numerous local descrip- tors. A salient region in an image is a connected part of an image showing a significant and interesting image property. It is usually determined by the application of a region of interest detector to the image. If a region de- 48  W. H. CHO ET AL. Copyright © 2013 SciRes. OJAppS tector returns only an exact position within the image, we also refer to it as interest point detector. The most im- portant information that ideal region detectors give to us is the location of features, but other characteristics such as shape (scale) and orientation of a region of interest have to deliver additionally. Next, we have also dis- cussed the interest feature descriptors and their charac- teristics. A descriptor is a process that takes information of features and image to produce descriptive information i.e. features’ description, which are usually presented in form of features vectors. The descriptions then are used to match a feature to one in another image. And two im- portant aspects that a descriptor has to satisfy are dis- criminative and invariant. 2.4. Local histogram of visual words For image classification, we use the Bag-of –Visual Words approach, where images are represented as a his- togram of visual words. The visual words denote local features extracted from the images and the vocabulary is learnt task-specifically from a training database [10-11]. The construction procedure of histogram features of vis- ual words given from images goes as follows. First, we extract local feature descriptors form image patches around feature detectors which are invariant with scale or rotation change, and apply PCA transformation with these descriptors to reduce their dimensionality. Second, to create efficient codebook of visual words, we have portioned the local descriptor space into several informa- tion regions using various clustering methods such as K-means clustering or GMM clustering model. Here, we can create a bag of visual words by choosing the center of each cluster as the visual word. Third, a bag of visual words is used as the codebook to build an image histo- gram of local features 3. Automatic Images Clustering We automatically categories cluster images collections using two-stage clustering method. Figure 2. Block diagram of automatic images clustering The first step builds initial coarser clustering by consid- ering the contents of color images using Mean shift clus- tering and K-means clustering algorithm. The second step constructs accurate dense clustering by considering simultaneously the global and local features of color im- ages using a Gaussian Dirichlet process mixture model. Fig. 2 shows the block diagram of two-stage clustering method using global and local features. 3.1. Coarser Clustering First, we briefly review the Mean Shift clustering and K-means clustering algorithms. And then we built initial coarser clustering initial coarser clustering by consider- ing the contents of color images using Mean shift clus- tering and K-means clustering algorithm. First, we brief- ly review the traditional Mean shift clustering procedure [12]. This is guaranteed to converge to a point where the gradient of density function is zero. Here, the key point of Mean shift clustering procedure is how to take a size of bandwidth. We have used the flexible bandwidth size obtained through multiple iterations of the implementa- tion. Second, we briefly review the K-means clustering algorithm. The most common algorithm uses an iterative refinement technique. The algorithm is deemed to have converged when the assignments no longer change. Here, we construct initial coarser clustering for experi- mental image collections using the Mean shift clustering algorithm with the flexible bandwidth size, and K-means clustering algorithm based on global and local feature vectors respectively. Here, global feature consist of color, texture and shape features. They are the histogram of RGB color space, histogram of coefficients generated by 2-step discrete wavelet transformation and invariant moments. Moreover, local feature is used by the histo- gram of visual words occurrences derived from applying K-means with SIFT descriptors. 3.2. Dense Clustering Here, we first introduce a statistical theory of the Gaus- sian Dirichlet process mixture model. And then we con- struct accurate dense clustering by considering simulta- neously the global and local features of color images using a Gaussian Dirichlet process mixture model. The Dirichlet process, denoted as , is a random measure on measures and is parameterized by the innovation pa- rameter α and a base distribution 0 G [13]. One of the most important applications of the Dirichlet processes is as a nonparametric prior distribution of a mixture model. We want to model this data by mans of nonparametric Bayesian formulation of the Gaussian Dirichlet process mixture model. For this purpose, since the number of mixture component is unknown, we have to consider the mixture model with countable infinite components. 49  W. H. CHO ET AL. Copyright © 2013 SciRes. OJAppS Therefore, we will use a Diriclet process mixture model as the prior distribution over the number of components generating the data, and we also assume the probability distribution of observations as the multivariate Gaussian distribution. Here, we assume a coarser clustering model of given image-sets obtained by Mean shift clustering or K-means algorithm as an initial clustering model for Gaussian Dirichlet process mixture model. If we apply Variational Bayesian inference principle for the Gaussain Dirichlet process mixture, then we can obtain the ap- proximating likelihoods and posterior distributions q for all model parameters and latent clustering variables. They are represented with the following formulas. First, for the posterior distributions over the DP parameters, we have kk,1k,2 qv Beta(β,β) where N k,1 n n1 β1q(zk) KN k,2 n lk1 n1 βq(z l) and 12 ˆˆ qαGamma( α|η,η) where 11 ˆ ηηK1 K1 22k,2k,1 k,2 k1 ˆ ηη [ψβ ψ(ββ)] and ψ() denotes the digamma function. Second, regarding the posteriors over the likelihood pa- rameters, we have kk k kkkk qΘ(, |λ,,ω,)μΛ mΨ where kkk λλ N kkk kk k 1(λN) λ mmy kkk ωω N , kkkk NΨΨ S T kk nknk kk λN λN yyyy. and we use the notation defined as N kn n1 N q(z k) N knn n1 k 1q(z k) N yy T N nnknk n1 k 1q(z k) N k Syyyy Finally, the posteriors over latent clustering variables generating the clustering model is given as nknk ˆ ˆ qz kπp( |Θ) vy where k1 klk l1 ˆ πexp{log1vlog v} v and nk d1 ˆ p( |Θ)exp{ log2πlog 22 k yΛ T nk nk 1()} 2 k yμΛyμ As a last step, after conducting the updates of all poste- rior distributions and likelihood parameters at each itera- tion of the variational Bayesian inference algorithm for the Gaussain Dirichlet process mixture, the estimates Z of the latent clustering variables must be also update. Then, we have obtained the cluster membership of each image by maximization of posterior distribution over k. Hence, if each image is assigned to the cluster with cluster membership n1kKn ˆ zargmax q(zk) , then we obtained final clustering result for given image datasets. 4. Experimental results 4.1. Synthetic Image Data (a) (b) (c) (d) Figure 3. Coarser clustering results using means-shift and K-means based on color histogram with 8 bins and 4 bins for each channel: (a) Mean shift(8 bins), (b) K-means(8 bins), (c) Mean shift(4 bins), (d) K-means(4 bins) 50  W. H. CHO ET AL. Copyright © 2013 SciRes. OJAppS (a) (b) (c) (d) Figure 4. Dense clustering results using means-shift + VDPGMM and K-means+VDPGMM based on color histo- gram with 8 bins for each channel: (a) Mean shift + VDPGMM(8 bins), (b) K-means + VDPGMM(8 bins), (c) Mean shift+VDPGMM(4 bins), (d) K-means + VDPGMM 4.2. Real image data We conducted experiments using Matlab on an image database that is collected from internet public data set CBIR. Our image database consist of 10 different groups, namely, Festival, Bus, Horse, Rose, Beach, Dinosaurs, Food, Scene, Elephant, The Parthenon temple as shown in Fig. 5. Each group contains 10 similar images. The spatial resolution of each image is size of 128 × 128 pix- els. We first test our clustering algorithm using global features such as color, texture, shape features, K-means and GDPM clustering method. In the coarser clustering step, we build initial clusters by considering the global features of images and K-means clustering method. And then in the dense clustering step, we construct fine clus- ters using a Gaussian Dirichlet process mixture model taking an initial clustering model as K-means clustering results. Our experimental results show that we can cluster properly an example image collection into 10 groups. Our clustering result is shown in Fig 5. Second, we test our clustering algorithm using local features generated by SIFT, visual word and local image histogram, K-means and GDPM clustering method. In the coarser clustering step, we build initial clusters by considering the local features of images and K-means clustering method. And then in the dense clustering step, we construct fine clus- ters using a Gaussian Dirichlet process mixture model taking an initial clustering model as K-means clustering results. Our experimental results show that we can cluster properly an example image collection into 10 groups. Our clustering result is shown in Fig 5. Table 1. Comparative results for global and local feature. Feature Precision Recall F-measure Global 64.0 54.0 55.6 Local 50.1 49.0 43.8 (a) DWT (b) Hue+Saturation (c) Hue+Saturation+DWT 51  W. H. CHO ET AL. Copyright © 2013 SciRes. OJAppS (d) KMeans (e) KMeans+GDPM Figure 5. Dense and coarser clustering results 5. Conclusion In this paper, we present methods for clustering images using Mean Shift algorithm and Gaussian Dirichlet mix- ture model. Our approach has significant advantage over existing techniques. Besides integrating temporal and image content information, our approach can cluster au- tomatically photographs without some assumption about number of clusters or requiring a priori information about initial clusters and it can also generalize better to differ- ent image collections. 6. Acknowledgements This work was supported in part by the Korea Research Foundation Grant by the Korean government (KRF 2012-0002487). And "This research was supported by the MKE(The Ministry of Knowledge Economy), Korea, under the 3D and Smart TV Competitiveness Program support program (NIPA-2012-I2901-12-1031) supervised by the NIPA(National IT Industry Promotion Agency) REFERENCES [1] K. Barnard, P. Duygulu, and D. Forsyth, Clustering art, Present in Comput. Vis. Pattern Recognition, Dec., 2001, pp 434-441. [2] J. Huang, S. R. Kumar, and R. Zabith, An automatic hier- archical image classification scheme, in ACM Conf. Mul- timedia, Set., 1998, pp 219-228. [3] C. Carson, S. Belongie, H. Greenspan, and J. Malik, Blobworld: Image segmentation using Expecta- tion-Maximization and Its Application to Image Querying, IEEE Tran. On PAMI, Vol. 24, No. 8, pp 1026-1038, 2002. [4] Y. Yang, D. Xu, F. Nie, S. Yan, and Y. Zhung, Image Clustering Using Local Discriminant Models and Global Integration, IEEE Tran. On Image Processing, Vol. 19, No. 10, pp 2761-2773, 2010. [5] A. Sleit, A. Abugalhoun, M. Qatawneh, M.Ai-Sharief, R. Al-Jabaly and Ola Karajeh, Image clustering using Color, Texture and Shape features, KSII Tran. On Inter. And In- for. Syst., Vol. 5, No. 1, pp 212-227, 2011. [6] J. Goldberger, S. Gorden, and H. Greenspan, Unsuper- vided Image-Set Clustering Using an Information Theo- retic Framework, IEEE Transaction on Image Processing, Vol. 15, 2006, pp 449-458. [7] S. Krinidis, M. Krinidis, and V. Chatzis, An Unsuper- vised Image Clustering Method Based on EEMD Image Histogram, Jour. Of Infor. Hiding and Multi. Sig. Proc- essing, Vol. 3, No. 2, pp 152-163, 2012. [8] N. Sharma. S, P. Rawat. S and J. Singh. S, Efficient CBIR using Color Histogram Processing, Signal & Image Proc- essing : An International Journal, Vol. 2, No.1, pp 94-112, 20011. [9] K. H. Ghazali, M. F. Mansor, M. M. Mustafa and A. Hussain, Feature Extraction Technique using Discrete Wavelet Transform for Image Classification, The 5th Student conference on Research and Development, 11-12 December 2007, Malaysia. [10] R. J. Lopez-Sastre, T. Tuytelaars, F. J. Aceve- do-Rodriguez, and S. Maldonado-Bascon, Towards a more discriminative and semantic visual vocabulary. Computer Vsion and Image Understanding, Vol. 115, pp 415-425, 2011. [11] T. Deselaers, L. Pimenidis, and H. Ney, Bag-of-Visual Words Models for Adult Image Classification and Filter- ing, 208 IEEE [12] C. Xiao and M. Liu, Efficient Mean-shift Clustering Gaussian KD-Tree, Pacific Graphics, Vol. 9, No. 7, pp , 2010 [13] D. M. Blei and M. I. Jordan, "Variational Inference for Dirichlet Process Mixtures," Bayesian Analysis, vol. 1, no. 1 pp 121-144, 2006. 52 |