Text Classification Using Support Vector Machine with Mix ture of Kernel

Copyright © 2012 SciRes. JSEA

58

82.00%

84.00%

86.00%

88.00%

90.00%

92.00%

94.00%

96.00%

98.00%

100.00%

102.00%

recall

MK-SVM recall

precision

MK-SVM precision

F1

MK-SVM F1

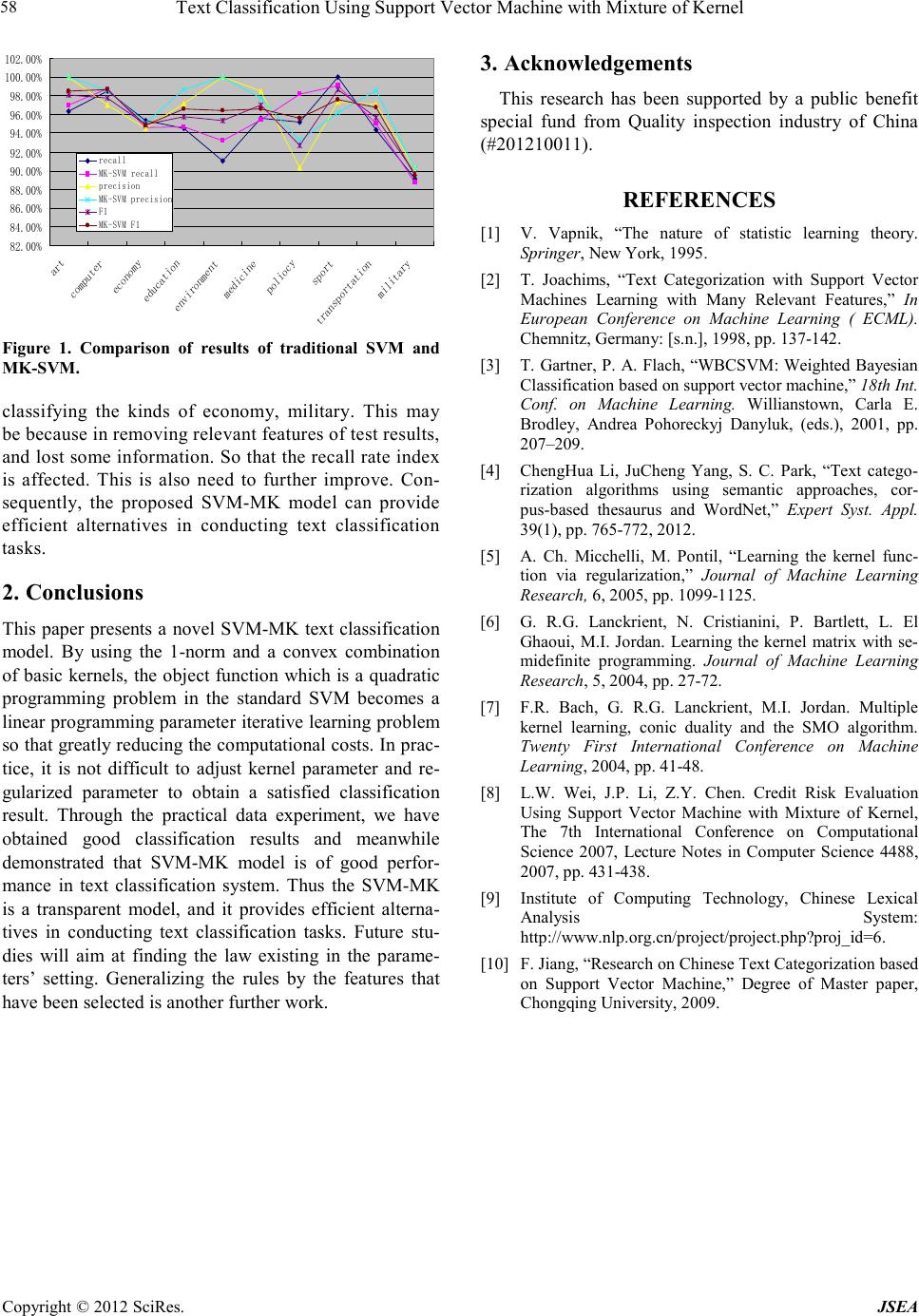

Figure 1. Comparison of results of traditional SVM and

MK-SVM.

classifying the kinds of economy, military. This may

be because i n remo ving rele vant feat ur es of te st r e s ul ts,

and lo st so me infor mation. So that the recall rate index

is affected. This is also need to further improve. Con-

sequently, the proposed SVM-MK model can provide

efficient alternatives in conducting text classification

tasks.

2. Conclusions

This paper presents a novel SVM-MK text classification

model. By using the 1-norm and a convex combination

of basic kernels, the object function which is a quadratic

programming problem in the standard SVM becomes a

linea r pro grammi ng paramete r iterative learning problem

so that greatly reducin g the computational costs. In prac-

tice, it is not difficult to adjust kernel parameter and re-

gularized parameter to obtain a satisfied classification

result. Through the practical data experime n t , we have

obtained good classification results and meanwhile

demonstrated that SVM-MK model is of good perfo r-

mance in text classification syste m. Thus the SVM-MK

is a transpar ent model, and it provides efficient alterna-

tives in conducting text classification tasks. Future stu-

dies will aim at finding the law existing in the parame-

ters’ setting. Generalizing the rules by the features that

have been selected is another f urther wo rk.

3. Acknowledgements

This research has been supported by a public benefit

special fund from Quality inspection industry of China

(#201210011).

REFERENCES

[1] V. Vapnik, “The nature of statistic learning theory.

Springer, New York, 1995.

[2] T. Joachims, “Text Categorization with Support Vector

Machines Learning with Many Relevant Features,” In

European Conference on Machine Learning ( ECML).

Chemnitz, Germany: [s.n.], 1998, pp. 137-142.

[3] T. Gartner, P. A. Flach, “WBCSVM: Weighted Bayesian

Classification based on support vector machine,” 18th Int.

Conf. on Machine Learning. Willianstown, Carla E.

Brodley, Andrea Pohoreckyj Danyluk, (eds.), 2001, pp.

207–209.

[4] ChengHua Li, JuCheng Yang, S. C. P ar k, “Text catego-

rization algorithms using semantic approaches, cor-

pus-based thesaurus and WordNet,” Expert Syst. Appl.

39(1), pp. 765-772, 2012.

[5] A. Ch. Micchelli, M. Pontil, “Learning the kernel func-

tion via regularization,” Journal of Machine Learning

Research, 6, 2005, pp. 1099-1125.

[6] G. R.G. Lanckrient, N. Cristianini, P. Bartlett, L. El

Ghaoui, M.I. Jordan. Learning the kernel matrix with se-

mide finite programming. Journal of Machine Learning

Research, 5, 2004, pp. 27-72.

[7] F.R. Bach, G. R.G. Lanckrient, M.I. Jordan. Multiple

kernel learning, conic duality and the SMO algorithm.

Twent y First International Conference on Machine

Learning, 2004, pp. 41-48.

[8] L.W. Wei, J.P. Li, Z.Y. Chen. Credit Risk Evaluation

Using Support Vector Machine with Mixture of Kernel,

The 7th International Conference on Computational

Science 2007, Lecture Notes in Computer Science 4488,

2007, pp. 431-438.

[9] Institute of Computing Technology, Chinese Lexical

Analysis System:

http://www.nlp.org.cn/project/project.php?proj_id=6.

[10] F. Jiang, “Research on Chinese Text Categor ization based

on Support Vector Machine,” Degree of Master paper,

Chongqing University, 2009.